Networked Software Systems Laboratory


Spring 2011 (5771)

1102111

Network Software and Systems Lab

Electrical Engineering Faculty

Final Report

Submitted By: Omer Kiselov, Ofer Kiselov

Supervised By: Dmitri Perelman

Project in software

Technion

To my brother, who needed this for his interview. We wish him good luck.


INDEX

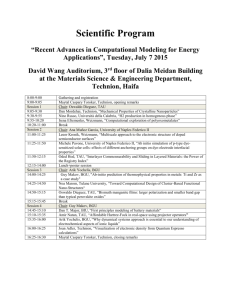

ABSTRACT

1. INTRODUCTION

1.1 Parallel Computing

1.2 Locks and the problems they present

2. BACKGROUND

2.1 Software Transactional Memory Abstraction

2.2 STM Implementation example – Transactional Locking Overview

2.3 Aborts in STM

2.4 Unnecessary Aborts in STM

2.4.1 What Are They?

2.4.2 Why do they happen?

2.4.3 How do we detect them?

2.4.4 Example: Aborts in TL2

3. PROJECT GOAL

4. IMPLEMENTATION

4.1 Overview

4.2 The log file and parser

4.3 The offline analysis

4.4 The online logging

5. EVALUATION

5.1 Hardware

5.2 Deuce Framework

5.4 Benchmarks

5.4.1 AVL test bench

5.4.2 Vacation test bench

5.4.3 SSCA2 test bench

5.5 Results

6. CONCLUSION AND SUMMARY

7. ACKNOWLEDGEMENTS

8. REFERENCES

9. INDEX A – CLASS LIST


Figures List

Figure 1 - Serial Software

Figure 2 - Parallel Programming Schematic

Figure 3 - TL2 Tests vs. different algorithms

Figure 4 - Aborts

Figure 5 - Example Of Unnecessary Aborts

Figure 6 - Precedence Graph

Figure 7 - Structure of the classes representing the log

Figure 8 - A schematic description of the offline analysis part

Figure 9 - Another View of the Offline Design

Figure 10 - A schematic description of the online logging part

Figure 11 - Online Part version 1

Figure 12 - Online Part Final Version

Figure 13 - Deuce Method application

Figure 14 - Deuce Context for TM algorithms

Figure 15 - Comparison between Deuce and similar methods for running TM

Figure 16 - AVL Benchmark results – commit ratio, aborts percentage

Figure 17 - SSCA2 Benchmark results – commit ratio, aborts percentage

Figure 18 - Vacation Benchmark results – commit ratio, aborts percentage

Figure 19 - AVL Benchmark results - Analysis of Aborts by type

Figure 20 - SSCA2 Benchmark results - Analysis of Aborts by type

Figure 21 - Vacation Benchmark results - Analysis of Aborts by type

Figure 22 - AVL Benchmark results - Categorizing by type and amounts

Figure 23 - SSCA2 Benchmark results - Categorizing by type and amounts

Figure 24 - Vacation Benchmark results - Categorizing by type and amounts


Source Code List

Source Code 1 - Log Formation

Source Code 2 - Logger Interface

Source Code 3 - Background Collector Run method

Source Code 4 - Queue Manager C'tor

Source Code 5 - Add Queue Method

Source Code 6 - getNextLine & traverseQueues methods

Source Code 7 - Addition to the TL2 context

Source Code 8 - Change in context C'tor

Source Code 9 - Changes in TL2 Context init()

Source Code 10 - The new exceptions in LockTable

Source Code 11 - Exception throw instance

Source Code 12 - Change in Commit method

Source Code 13 - Changes in the onReadAccess method

Source Code 14 - Changes in beforeReadAccess method

Source Code 15 - Change in Context objectid


Abstract

Aborts in Software Transactional Memory (STM) are a blow to a program's performance. The aborted transaction must perform its work again, its previous effort is wasted, and CPU utilization decreases. Aborts in STM algorithms have many causes, but not all of them are necessary to maintain correctness: sometimes a transaction aborts only because validating its correctness would be too complex.

In this project, our goals were to measure the number of aborts and of unnecessary aborts, and their impact on performance, for a selected STM algorithm. First, we defined a log file structure and built an offline "Abort Analyzer", which reads such a log and determines whether each abort was necessary. Afterwards, we modified an existing STM environment so that it reports every transactional action it performs (e.g. reads, writes, transaction starts, commits and aborts) to a log file in the format the Abort Analyzer expects.


1. Introduction

1.1 Parallel Computing

Over the years, computer performance has risen as a result of technology improvements, and computer architectures have benefited from these improvements by adapting to and embracing them. These improvements also bring problems, such as power dissipation and scaling limits. On the other hand, they make it possible to fit multiple circuits on a smaller die area.

Parallel computing is a form of computer architecture and design in which calculations are carried out simultaneously. The key principle is that large problems can be divided into smaller ones that are solved concurrently. As power consumption (and consequently heat generation) by computers has become a concern in recent years, parallel computing has become the dominant paradigm in computer architecture, mainly in the form of multicore processors.

VLSI technology allows ever larger numbers of components to fit on a chip and clock rates to increase. Parallel computer architecture translates the potential of the technology into greater performance and expanded capability of the computer system. The first key element is parallelism: a larger volume of resources means that more operations can be done at once, in parallel. Parallel computer architecture is about organizing these resources so that they work well together. Computers of all types have harnessed parallelism more and more effectively to gain performance from the raw technology, and the level at which parallelism is exploited continues to rise. The other key element is storage: the data that is operated on at an ever faster rate must be held somewhere in the machine.


Traditionally, computer software has been written for serial computation. To solve a problem, an algorithm is constructed and implemented as a serial stream of instructions. These instructions are executed on a central processing unit on one computer. Only one instruction may execute at a time: after that instruction is finished, the next is executed.

Figure 1 - Serial Software

In the simplest sense, parallel computing is the simultaneous use of multiple compute resources to solve a computational problem:

- It is run using multiple CPUs.

- A problem is broken into discrete parts that can be solved concurrently.

- Each part is further broken down into a series of instructions.

- Instructions from each part execute simultaneously on different CPUs.
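The Java sketch below is a rough illustration of this decomposition. It splits one problem (summing an array) into discrete parts and hands each part to its own thread, then combines the partial results. The class name `ParallelSum` and the chunking scheme are our own illustrative choices, not part of this project's code.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Illustrative decomposition: one problem (summing an array) split into `parts`
// discrete chunks that are solved concurrently and then combined.
class ParallelSum {
    static long sum(long[] data, int parts) {
        ExecutorService pool = Executors.newFixedThreadPool(parts);
        try {
            List<Future<Long>> partials = new ArrayList<>();
            int chunk = (data.length + parts - 1) / parts;     // ceiling division
            for (int p = 0; p < parts; p++) {
                final int from = Math.min(data.length, p * chunk);
                final int to = Math.min(data.length, from + chunk);
                Callable<Long> part = () -> {                   // each part is a serial loop
                    long s = 0;
                    for (int i = from; i < to; i++) s += data[i];
                    return s;
                };
                partials.add(pool.submit(part));                // parts run on different threads
            }
            long total = 0;
            for (Future<Long> f : partials) total += f.get();   // wait for and combine the parts
            return total;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        long[] data = new long[1000];
        for (int i = 0; i < data.length; i++) data[i] = i + 1;
        System.out.println(sum(data, 4)); // 500500
    }
}
```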


Figure 2 - Parallel Programming Schematic

Historically, parallel computing has been considered "the high end of computing". It has been used to model difficult scientific and engineering problems found in the real world. Some examples are: physics (applied, nuclear, particle, condensed matter, high pressure, fusion, photonics), bioscience, biotechnology, genetics, chemistry, molecular sciences, geology, seismology, mechanical engineering (from prosthetics to spacecraft), electrical engineering, circuit design, microelectronics, computer science, mathematics, and graphics.

There are two directions for achieving parallelism in computing. The first is through hardware: mechanisms that take a set of instructions, find their dependencies, and execute the independent instructions in parallel.

Most of these hardware mechanisms are built in VLSI and use advanced caching algorithms. They also involve high-end architecture and micro-architecture changes relative to mainstream designs such as x86. We will not deal with these changes.


Instead, we will deal with software parallelism methods. There are several ways to perform parallel computing above the architecture layer. The most common is concurrent programming using locks.

1.2 Locks and the problems they present

A lock is a synchronization mechanism for enforcing limits on access to a resource in an environment where there are many threads of execution. Locks are one way of enforcing concurrency control policies.

Locks are an effective method for obtaining a certain degree of concurrency, but they have several problems.

The first is the overhead of locking. The locking mechanism itself consumes resources. These include the memory allocated for the locks and the CPU time needed to initialize and destroy them (locks are usually built on an ADT called a semaphore). Above all, they include the time each thread takes to acquire and release the locks.

Naturally, some overhead exists in every concurrency mechanism. With locks, however, the overhead limits how much we can use the mechanism. We are therefore forced to use coarser locks, or fewer locks, which brings its own issues.

Second, there is the problem of contention. If a lock is held by one thread and one or more other threads want to obtain it, they must wait in "line". This problem is directly linked to the granularity of the locks: the finer the locks, the lower the contention. Granularity is the amount of data protected by each lock. A lock can cover an entire matrix of memory data, a single row or column, or a single cell. Granularity is therefore directly linked to the level of contention, and it trades off against overhead.


As a result, we face a choice. We can have a few locks protecting a lot of data, which hurts the concurrency and performance of the machine but has much less overhead. Alternatively, we can have a large number of locks, each protecting less data, which brings the overhead back.
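The granularity trade-off can be made concrete with a small sketch built around a hypothetical array of counters. The same `StripedCounters` class acts as one coarse lock when `stripes` is 1, and as one lock per cell when `stripes` equals the array size. It only illustrates the idea and is not taken from any library.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical example: an array of counters guarded by `stripes` locks.
// stripes == 1 is one coarse lock; stripes == size is one lock per cell.
class StripedCounters {
    private final long[] cells;
    private final ReentrantLock[] locks;

    StripedCounters(int size, int stripes) {
        cells = new long[size];
        locks = new ReentrantLock[stripes];
        for (int i = 0; i < stripes; i++) locks[i] = new ReentrantLock();
    }

    // Cell i is always guarded by the same lock, so its updates never race.
    private ReentrantLock lockFor(int index) { return locks[index % locks.length]; }

    void increment(int index) {
        ReentrantLock l = lockFor(index);
        l.lock();
        try { cells[index]++; } finally { l.unlock(); }
    }

    long get(int index) {
        ReentrantLock l = lockFor(index);
        l.lock();
        try { return cells[index]; } finally { l.unlock(); }
    }

    int lockCount() { return locks.length; }

    public static void main(String[] args) throws InterruptedException {
        StripedCounters fine = new StripedCounters(16, 16);   // finest granularity
        Runnable work = () -> { for (int i = 0; i < 100_000; i++) fine.increment(i % 16); };
        Thread a = new Thread(work), b = new Thread(work);
        a.start(); b.start();
        a.join(); b.join();
        System.out.println(fine.get(0)); // 12500
    }
}
```

With `stripes` set to 1, the two threads would serialize on a single lock: more contention, but only one lock object. With 16 stripes they rarely wait for each other, but 16 lock objects must be allocated and managed.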

Last, there is the problem of deadlocks and livelocks. In both cases, each of two threads holds a lock protecting data that the other thread needs. Each of them waits for the other to release its lock, so no progress is made and the threads are stuck. The difference is that in a livelock the threads are still active: they keep reading and writing memory in an endless loop without making progress.

This introduction was a brief summary of the background to our subject. We will now turn to a newer synchronization approach that offers fine granularity with low overhead and guarantees atomicity without deadlocks.

This approach is called transactional memory.

We will not discuss hardware transactional memory, only software transactional memory. We want to minimize the cost of changes to existing systems. Existing microprocessors could be adapted to transactional memory, but only with great difficulty in back-fitting. Such changes would probably not be compatible with existing or earlier systems.


2. Background

2.1 Software Transactional Memory Abstraction

Software transactional memory (STM) is a scheme for concurrent programming with multiple threads that uses transactions similar to those used in databases.

At its heart, a transaction is simply a way of performing a group of operations so that they appear to happen atomically, all at a single instant. The intermediate states cannot be read or updated by any other transaction. This keeps tables consistent with their constraints at all times: an individual operation may violate a constraint, but the constraint holds both before and after each transaction.

In typical implementations of transactions, you have the option of either committing or aborting a transaction at any time. When you commit a transaction, you confirm that it went as planned, and its changes become permanent in the database. If you abort a transaction, on the other hand, something went wrong. You then want to roll back, or reverse, any partial changes it has already made. Good installers use a similar rollback scheme. If some installation step fails and recovery is not possible, the installer erases everything it has already put on your machine, so that the machine is just as it was before. If it fails to do this, the machine may become unusable or future installation attempts may fail.

If we assume no faults happen, the atomicity of the operations is usually ensured by locking, i.e. by acquiring exclusive ownership of the memory locations accessed by an operation. If a transaction cannot acquire an ownership, it fails and releases the ownerships it has already acquired. To guarantee liveness, one must first eliminate deadlock. For static transactions, this is done by acquiring the needed ownerships in some increasing order.

To keep ensuring liveness when faults do happen, we must make certain that every transaction completes even if the process executing it has been delayed, swapped out, or has crashed. This is achieved by a "helping" methodology, which forces other transactions that try to capture the same location to help the owner of that location complete its own transaction. The key feature of the transactional approach is that to free a location, one need only help its single owner transaction. Moreover, by employing a "reactive" helping policy, one can effectively avoid the overhead of coordination among several transactions attempting to help release a location.
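Acquiring ownerships in increasing order can be sketched in Java as follows. This minimal illustration models ownerships as a hypothetical fixed array of `ReentrantLock`s. It shows only the deadlock-avoidance idea, not helping or fault tolerance.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeSet;
import java.util.concurrent.locks.ReentrantLock;

// Illustration: "ownerships" are modelled as a fixed array of locks (hypothetical setup).
class OrderedAcquire {
    static final ReentrantLock[] LOCKS = new ReentrantLock[8];
    static { for (int i = 0; i < LOCKS.length; i++) LOCKS[i] = new ReentrantLock(); }

    // Acquires the requested locks in increasing index order, whatever order the
    // caller listed them in. Because every thread follows the same global order,
    // no cycle of threads waiting on each other can form.
    static List<ReentrantLock> acquireAll(int... ids) {
        TreeSet<Integer> ordered = new TreeSet<>();
        for (int id : ids) ordered.add(id);          // sorted, duplicates removed
        List<ReentrantLock> held = new ArrayList<>();
        for (int id : ordered) {
            LOCKS[id].lock();
            held.add(LOCKS[id]);
        }
        return held;
    }

    // Releases in reverse acquisition order.
    static void releaseAll(List<ReentrantLock> held) {
        for (int i = held.size() - 1; i >= 0; i--) held.get(i).unlock();
    }

    public static void main(String[] args) throws InterruptedException {
        // Without the sorting step, these two threads (asking for {1,2} and {2,1})
        // could each hold one lock and wait forever for the other.
        Thread a = new Thread(() -> { for (int i = 0; i < 10_000; i++) releaseAll(acquireAll(1, 2)); });
        Thread b = new Thread(() -> { for (int i = 0; i < 10_000; i++) releaseAll(acquireAll(2, 1)); });
        a.start(); b.start();
        a.join(); b.join();
        System.out.println("both threads finished");
    }
}
```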

The benefit of this optimistic approach is increased concurrency. No thread needs to wait for access to a resource, and different threads can safely and simultaneously modify disjoint parts of a data structure that would normally be protected by the same lock. Transactions that fail must be retried, which costs overhead. Even so, in most realistic programs conflicts arise rarely enough that there is a large performance gain over lock-based protocols on large numbers of processors.

However, in practice STM systems also suffer a performance hit relative to fine-grained lock-based systems on small numbers of processors. This is due primarily to the overhead of maintaining the log and the time spent committing transactions. Even in this case, performance is typically no worse than twice as slow.

Hence we see that transactional memory eliminates the need for the programmer to use locks. A software-only implementation requires only the existing CAS (compare-and-swap) instruction.
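Below is a minimal sketch of how a shared counter, such as a global version clock, can be incremented with CAS alone. `AtomicLong.compareAndSet` is the standard Java CAS primitive. The `GlobalVersionClock` wrapper is a hypothetical name used only for this illustration, and the retry loop is written out explicitly even though `AtomicLong` already provides `incrementAndGet`.

```java
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical wrapper showing a CAS-based increment-and-fetch.
class GlobalVersionClock {
    private final AtomicLong clock = new AtomicLong(0);

    long read() { return clock.get(); }

    // Read the current value, try to install value + 1, and retry if another
    // thread changed the clock in between (the CAS then fails).
    long incrementAndFetch() {
        while (true) {
            long current = clock.get();
            long next = current + 1;
            if (clock.compareAndSet(current, next)) return next;
        }
    }

    public static void main(String[] args) throws InterruptedException {
        GlobalVersionClock clock = new GlobalVersionClock();
        Thread[] ts = new Thread[4];
        for (int i = 0; i < ts.length; i++) {
            ts[i] = new Thread(() -> { for (int k = 0; k < 10_000; k++) clock.incrementAndFetch(); });
            ts[i].start();
        }
        for (Thread t : ts) t.join();
        System.out.println(clock.read()); // 40000: no increment is lost
    }
}
```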

There are many ways to implement STM. STM can be implemented as a lock-free algorithm, or it can use locking. There are two types of locking schemes. In encounter-time locking, a memory write first temporarily acquires a lock for the given location, then writes the value directly and records the old value in an undo log. Commit-time locking locks memory locations only during the commit phase.
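To contrast the two locking schemes, the sketch below shows only where a transaction's new values live before it commits. It is single-threaded and omits the locks entirely; comments mark where a locking STM would acquire them. The class names are hypothetical. The commit-time variant keeps a write-set, which is the approach TL2 takes.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.LinkedHashMap;
import java.util.Map;

// Simplified single-threaded sketch: locking is omitted so that only *where*
// new values live before commit is shown.
class WriteSchemes {
    // Encounter-time style: write in place, remember old values in an undo log.
    static final class EncounterTimeTx {
        private final long[] memory;
        private final Deque<long[]> undoLog = new ArrayDeque<>(); // entries: {address, oldValue}

        EncounterTimeTx(long[] memory) { this.memory = memory; }

        void write(int addr, long value) {
            // a locking STM would acquire addr's lock here, at encounter time
            undoLog.push(new long[] {addr, memory[addr]});
            memory[addr] = value;
        }

        void commit() { undoLog.clear(); }       // new values are already in memory

        void abort() {                            // undo newest-first
            while (!undoLog.isEmpty()) {
                long[] e = undoLog.pop();
                memory[(int) e[0]] = e[1];
            }
        }
    }

    // Commit-time style: buffer writes in a write-set, publish them only at commit.
    static final class CommitTimeTx {
        private final long[] memory;
        private final Map<Integer, Long> writeSet = new LinkedHashMap<>();

        CommitTimeTx(long[] memory) { this.memory = memory; }

        void write(int addr, long value) { writeSet.put(addr, value); }

        long read(int addr) {                     // sees its own buffered writes first
            Long buffered = writeSet.get(addr);
            return buffered != null ? buffered : memory[addr];
        }

        void commit() {
            // a locking STM would lock every address in the write-set here, at commit time
            for (Map.Entry<Integer, Long> e : writeSet.entrySet()) memory[e.getKey()] = e.getValue();
            writeSet.clear();
        }

        void abort() { writeSet.clear(); }        // shared memory was never touched
    }

    public static void main(String[] args) {
        long[] mem = {10, 20};
        EncounterTimeTx etl = new EncounterTimeTx(mem);
        etl.write(0, 99);
        etl.abort();
        CommitTimeTx ctl = new CommitTimeTx(mem);
        ctl.write(1, 77);
        ctl.commit();
        System.out.println(mem[0] + " " + mem[1]); // 10 77
    }
}
```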


A commit-time scheme named "Transactional Locking II" (TL2), implemented by Dice, Shalev, and Shavit, uses a global version clock. Every transaction starts by reading the current value of the clock and storing it as the read-version. Then, on every read or write, the version of the particular memory location is compared to the read-version; if it is greater, the transaction is aborted. This guarantees that the code is executed on a consistent snapshot of memory. During commit, all write locations are locked, the global version clock is incremented, and the version numbers of all read locations are re-checked. Finally, the new write values from the log are written back to memory and stamped with the new clock version.


2.2 STM Implementation example – Transactional Locking Overview

To better illustrate the method and advantages of STM, we now present an example of an algorithm that implements transactional memory in software. Its use of a global version clock shapes how it works and increases concurrency. We examine the implementation so that we can use the properties of the algorithm later in the project.

The Transactional Locking 2 (TL2) algorithm combines commit-time locking with a novel validation technique based on a global version clock. TL2 fits any system's memory life cycle, including platforms that use malloc and free. TL2 also avoids periods of unsafe execution: user code is guaranteed to operate on consistent memory states. While providing these properties, TL2 has reasonable performance (better than other STM algorithms, according to its authors' evaluation).

Lock-based STMs tend to outperform non-blocking ones because their simpler algorithms result in lower overheads (as discussed earlier regarding the trade-offs). The authors stated the main limitations of the STMs discussed here as criteria for the commercial use of STM.

The first of these is that memory used transactionally must be recyclable for non-transactional use. Garbage-collected languages satisfy this naturally; in C, by contrast, a garbage collector (or an equivalent mechanism) would otherwise have to be implemented.

The second limitation is that the STM algorithm must not require a special runtime environment, since such environments cripple the performance of many otherwise efficient STM algorithms. The TL2 algorithm runs user code only on consistent memory states, thus eliminating the need for specialized managed runtime environments.


TL2 is a two-phase locking scheme that acquires its locks at commit time. Each implemented transactional system has a shared global version-clock variable. The clock is incremented using a CAS operation and read by each transaction.

There is a special versioned write-lock associated with every transacted memory location. In its simplest form, the versioned write-lock is a single-word spinlock that uses a CAS operation to acquire the lock and a store to release it. Only a single bit is needed to indicate that the lock is taken, so the rest of the lock word holds a version number. This number is advanced by every successful lock-release.
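The versioned write-lock described above can be sketched as a single `AtomicLong`, with bit 0 as the lock bit and the remaining bits holding the version. The class and method names are our own. Real implementations, including TL2's, may lay out the word differently.

```java
import java.util.concurrent.atomic.AtomicLong;

// Sketch of a single-word versioned write-lock: bit 0 = lock bit,
// remaining 63 bits = version number (layout is an assumption of this sketch).
class VersionedLock {
    private final AtomicLong word = new AtomicLong(0);

    static boolean isLocked(long w) { return (w & 1L) != 0; }
    static long version(long w) { return w >>> 1; }

    long sample() { return word.get(); }

    // Acquire with CAS: from "unlocked, version v" to "locked, version v".
    // Fails if the lock is already taken or the word changed in between.
    boolean tryLock() {
        long w = word.get();
        if (isLocked(w)) return false;
        return word.compareAndSet(w, w | 1L);
    }

    // Release with a plain store that installs the new version and clears the
    // lock bit (in TL2 the new version is the write-version wv). Only the holder
    // may call this.
    void unlock(long newVersion) { word.set(newVersion << 1); }

    public static void main(String[] args) {
        VersionedLock lock = new VersionedLock();
        System.out.println(lock.tryLock());                 // true
        System.out.println(lock.tryLock());                 // false: lock bit already set
        lock.unlock(1);
        long w = lock.sample();
        System.out.println(isLocked(w) + " " + version(w)); // false 1
    }
}
```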

To implement a given data structure, a collection of versioned write-locks must be allocated. Various schemes for associating locks with shared data are used:

- Per object (PO), where a lock is assigned to each shared object.

- Per stripe (PS), where a separate large array of locks is allocated and memory is striped (partitioned) using some hash function that maps each transactable location to a stripe.

Other mappings between transactional shared variables and locks are possible. The PO scheme requires either manual or compiler-assisted automatic insertion of lock fields, whereas PS can be used with unmodified data structures.
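Below is a minimal sketch of the per-stripe (PS) mapping. It assumes that a location is identified by an object and a field index, and that the lock words live in a power-of-two table. The hash function is an arbitrary illustrative choice. In such a mapping, unrelated locations may hash to the same stripe and then share a lock.

```java
import java.util.concurrent.atomic.AtomicLongArray;

// Per-stripe (PS) sketch: a fixed table of lock words, with each transactional
// location (object, field index) hashed to one entry. Names are hypothetical.
class StripedLockTable {
    private final AtomicLongArray locks;
    private final int mask;

    StripedLockTable(int log2Size) {
        locks = new AtomicLongArray(1 << log2Size);
        mask = (1 << log2Size) - 1;
    }

    // The data structure itself needs no lock fields: the lock is found by hashing.
    int stripeOf(Object obj, int field) {
        int h = System.identityHashCode(obj) * 31 + field;
        h ^= (h >>> 16);              // mix high bits into the low bits
        return h & mask;              // always in [0, size)
    }

    int size() { return locks.length(); }

    public static void main(String[] args) {
        StripedLockTable table = new StripedLockTable(4);   // 16 stripes
        Object node = new Object();
        for (int field = 0; field < 3; field++)
            System.out.println("field " + field + " -> stripe " + table.stripeOf(node, field));
    }
}
```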

The TL2 algorithm is as follows.

Write Transactions

1. Sample the global version-clock: load the current value of the global version-clock and store it in a thread-local variable called the read-version number (rv). This value is later used to detect recent changes to data fields, by comparing it to the version fields of their versioned write-locks.

2. Run through a speculative execution: execute the transaction code. Load and store instructions are mechanically augmented and replaced so that speculative execution does not change the shared memory's state. Locally maintain a read-set of the addresses loaded and a write-set of the address/value pairs stored. This logging is implemented by augmenting loads with instructions that record the read address, and by replacing stores with code that records the address and the value to be written. The transactional load first checks whether the load address already appears in the write-set; if so, it returns the last value written to that address. This provides the illusion of processor consistency and avoids read-after-write hazards. A load instruction sampling the associated lock is inserted before each original load. The original load is then followed by post-validation code, which checks that the location's versioned write-lock is free and has not changed, and that the lock's version field is smaller than or equal to rv. If the version is greater than rv, the memory location has been modified after the current thread performed step 1, and the transaction is aborted.

3. Lock the write-set: acquire the locks of all locations in the write-set, using bounded spinning to avoid indefinite deadlock. If not all of these locks are successfully acquired, the transaction fails.

4. Increment the global version-clock: once all locks in the write-set have been acquired, perform an increment-and-fetch (using a CAS operation) of the global version-clock, and record the returned value in a local write-version number variable wv.

5. Validate the read-set: for each location in the read-set, validate that the version number associated with its versioned write-lock is smaller than or equal to rv. Also verify that the location has not been locked by another thread. If the validation fails, the transaction is aborted. Re-validating the read-set guarantees that its memory locations have not been modified while steps 3 and 4 were being executed. In the special case where rv + 1 = wv, the read-set need not be validated, because no concurrently executing transaction could have modified it.

6. Commit and release the locks: for each location in the write-set, store the new value from the write-set to the location. Then release the location's lock by setting its version value to the write-version wv and clearing the write-lock bit.
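The six steps above can be sketched in Java as a simplified TL2-style STM over an array of `long` locations. This illustration rests on several assumptions and is not the TL2 or Deuce implementation. Each location has its own versioned lock word (bit 0 locked, remaining bits the version). A failed lock acquisition aborts immediately instead of spinning. `atomically` is a hypothetical retry helper.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.concurrent.atomic.AtomicLong;
import java.util.concurrent.atomic.AtomicLongArray;
import java.util.function.Function;

// Simplified TL2-style sketch: memory is an array of long locations, each with a
// versioned lock word (bit 0 = locked, remaining bits = version).
class Tl2Sketch {
    static final class AbortException extends RuntimeException {
        AbortException(String why) { super(why); }
    }

    final AtomicLong clock = new AtomicLong();                 // global version-clock
    final AtomicLongArray values, locks;

    Tl2Sketch(int size) { values = new AtomicLongArray(size); locks = new AtomicLongArray(size); }

    final class Tx {
        final long rv = clock.get();                           // step 1: sample the clock
        final Set<Integer> readSet = new HashSet<>();
        final Map<Integer, Long> writeSet = new TreeMap<>();   // sorted keys => fixed lock order

        long read(int a) {                                     // step 2: speculative load
            Long own = writeSet.get(a);
            if (own != null) return own;                       // read-after-write: use the write-set
            long pre = locks.get(a), v = values.get(a), post = locks.get(a);
            if ((pre & 1) != 0 || pre != post || (pre >>> 1) > rv)
                throw new AbortException("location " + a + " is locked or newer than rv");
            readSet.add(a);
            return v;
        }

        void write(int a, long v) { writeSet.put(a, v); }      // buffered; memory untouched

        void commit() {
            if (writeSet.isEmpty()) return;                    // loads were already post-validated
            Map<Integer, Long> held = new HashMap<>();         // address -> lock word before locking
            for (int a : writeSet.keySet()) {                  // step 3: lock the write-set
                long w = locks.get(a);
                if ((w & 1) != 0 || !locks.compareAndSet(a, w, w | 1)) throw fail(held, "lock busy");
                held.put(a, w);
            }
            long wv = clock.incrementAndGet();                 // step 4: increment-and-fetch
            if (rv + 1 != wv) {                                // step 5: validate the read-set
                for (int a : readSet) {
                    Long mine = held.get(a);
                    long w = (mine != null) ? mine : locks.get(a);
                    boolean lockedByOther = mine == null && (w & 1) != 0;
                    if (lockedByOther || (w >>> 1) > rv) throw fail(held, "read-set changed");
                }
            }
            for (Map.Entry<Integer, Long> e : writeSet.entrySet()) { // step 6: write back, release
                values.set(e.getKey(), e.getValue());
                locks.set(e.getKey(), wv << 1);                // version := wv, lock bit cleared
            }
        }

        private AbortException fail(Map<Integer, Long> held, String why) {
            held.forEach((a, w) -> locks.set(a, w));           // restore the pre-lock words
            return new AbortException(why);
        }
    }

    // Re-runs the body until it commits (hypothetical helper, not part of TL2 itself).
    <T> T atomically(Function<Tx, T> body) {
        while (true) {
            Tx tx = new Tx();
            try {
                T result = body.apply(tx);
                tx.commit();
                return result;
            } catch (AbortException e) {
                // aborted: discard this attempt and retry with a fresh read-version
            }
        }
    }

    public static void main(String[] args) throws InterruptedException {
        Tl2Sketch stm = new Tl2Sketch(4);
        Runnable worker = () -> {
            for (int i = 0; i < 1000; i++)
                stm.atomically(tx -> { tx.write(0, tx.read(0) + 1); return null; });
        };
        Thread[] ts = new Thread[4];
        for (int i = 0; i < ts.length; i++) { ts[i] = new Thread(worker); ts[i].start(); }
        for (Thread t : ts) t.join();
        System.out.println(stm.values.get(0)); // 4000: no lost updates
    }
}
```

In `main`, four threads each increment location 0 a thousand times. Aborted attempts are simply retried, so no update is lost.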

Read-Only Transactions

1. Sample the global version-clock: load the current value of the global version-clock and store it in a local variable called the read-version (rv).

2. Run through a speculative execution: execute the transaction code. Each load instruction is post-validated by checking that the location's versioned write-lock is free and that the lock's version field is smaller than or equal to rv. If the version is greater than rv, the transaction is aborted; otherwise, it commits.
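A read-only transaction needs even less machinery. Each load is validated on its own, so (as in TL2) no read-set has to be kept and nothing is done at commit. The sketch below assumes a hypothetical layout in which each location has a versioned lock word (bit 0 locked, remaining bits the version). It is illustrative only.

```java
import java.util.concurrent.atomic.AtomicLong;
import java.util.concurrent.atomic.AtomicLongArray;

// Sketch of a TL2-style read-only transaction: no read-set, no commit work.
class ReadOnlyTx {
    static final class AbortException extends RuntimeException {
        AbortException(String why) { super(why); }
    }

    private final AtomicLongArray values, locks;
    private final long rv;                          // step 1: sampled read-version

    ReadOnlyTx(AtomicLong clock, AtomicLongArray values, AtomicLongArray locks) {
        this.values = values;
        this.locks = locks;
        this.rv = clock.get();
    }

    // Step 2: each load is post-validated; a lock word that is locked, changed
    // during the load, or carries a version newer than rv aborts the transaction.
    long read(int a) {
        long pre = locks.get(a);
        long v = values.get(a);
        long post = locks.get(a);
        if ((pre & 1) != 0 || pre != post || (pre >>> 1) > rv)
            throw new AbortException("location " + a + " changed after rv=" + rv);
        return v;
    }

    public static void main(String[] args) {
        AtomicLong clock = new AtomicLong(3);
        AtomicLongArray values = new AtomicLongArray(new long[] {10, 20});
        AtomicLongArray locks = new AtomicLongArray(new long[] {2L << 1, 5L << 1}); // versions 2 and 5
        ReadOnlyTx tx = new ReadOnlyTx(clock, values, locks);
        System.out.println(tx.read(0));             // 10: version 2 <= rv 3
        try { tx.read(1); } catch (AbortException e) { System.out.println("aborted"); } // 5 > 3
    }
}
```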

We will not discuss how the version clock is implemented, nor any further details of the TL2 implementation. The discussion here is purely about the algorithm that TL2 prescribes for transactions. Naturally, TL2 differs from other STM algorithms, but we believe it is representative of the majority of them. What we intend to examine are the aborts that TL2 performs, which we discuss in the next section.


We will now review the results of the empirical performance evaluation of TL2 by Dice, Shalev, and Shavit.

Figure 3 - TL2 Tests vs. different algorithms

The tests were run on a red-black tree with customizable contention. The results show TL and TL2 outperforming all the other algorithms tested. TL2 beats TL under the highest contention, which indicates that it incurs only a small overhead cost. In any case, these results make TL2 a strong representative of STM algorithms as a whole for a preliminary study.

2.3 Aborts in STM

As described for TL2 (and other algorithms as well), a transaction may encounter inconsistencies that lead it to determine that its set of atomic actions cannot be committed. The actions are then canceled and the transaction aborts.

Aborts are, in general, not a "good" thing. They cripple performance and fill the system with wasted work. At times they lower concurrency and, as a result, increase latency.

A transaction's abort may be initiated by the programmer or may be the result of a TM decision. We mostly handle the latter case, where the TM forcefully aborts the transaction. Take, for example, a "regular" abort involving two transactions. The first reads object A and writes to object B, while at the same time another transaction reads the old value of B and writes A. One of the transactions must be aborted to ensure atomicity. If the first ran entirely before the second, the second would have read the new value of B; in the opposite order, the first would have read the new value of A. This abort is therefore a must: there is no way to proceed without aborting one or both transactions.
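Why this abort is unavoidable can be checked mechanically. A concurrent run is acceptable only if some serial order of the transactions would let every read observe the same value it actually observed. The brute-force Java sketch below tries every serial order for the A/B example and finds none that works. The class and field names are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Brute-force check: is there a serial order of the transactions in which every
// read observes the same writer it observed in the concurrent run?
class SerialOrderCheck {
    static final String INIT = "initial value";

    static final class Tx {
        final String name;
        final Map<String, String> readFrom;   // variable -> writer whose value was read
        final Set<String> writes;
        Tx(String name, Map<String, String> readFrom, Set<String> writes) {
            this.name = name; this.readFrom = readFrom; this.writes = writes;
        }
    }

    static boolean existsSerialOrder(List<Tx> txs) {
        return tryOrders(txs, new ArrayList<>(), new boolean[txs.size()]);
    }

    // Enumerates all permutations of txs recursively.
    private static boolean tryOrders(List<Tx> txs, List<Tx> order, boolean[] used) {
        if (order.size() == txs.size()) return consistent(order);
        for (int i = 0; i < txs.size(); i++) {
            if (used[i]) continue;
            used[i] = true;
            order.add(txs.get(i));
            boolean ok = tryOrders(txs, order, used);
            order.remove(order.size() - 1);
            used[i] = false;
            if (ok) return true;
        }
        return false;
    }

    // Replays `order` serially, tracking the last writer of each variable.
    private static boolean consistent(List<Tx> order) {
        Map<String, String> lastWriter = new HashMap<>();
        for (Tx t : order) {
            for (Map.Entry<String, String> r : t.readFrom.entrySet())
                if (!lastWriter.getOrDefault(r.getKey(), INIT).equals(r.getValue())) return false;
            for (String w : t.writes) lastWriter.put(w, t.name);
        }
        return true;
    }

    public static void main(String[] args) {
        Tx t1 = new Tx("T1", Map.of("A", INIT), Set.of("B")); // read old A, wrote B
        Tx t2 = new Tx("T2", Map.of("B", INIT), Set.of("A")); // read old B, wrote A
        System.out.println(existsSerialOrder(List.of(t1, t2))); // false: one must abort
    }
}
```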

Most existing TMs perform unnecessary (spare) aborts: aborts of transactions that could have committed without violating correctness. Spare aborts have the same drawbacks as ordinary aborts: the work done by the aborted transaction is lost, computer resources are wasted, and the overall throughput decreases. Moreover, after the aborted transactions restart, they may conflict again, leading to livelock and degrading performance even further.

The point is that some aborts are a "necessary evil", since we cannot maintain atomicity without them. Unnecessary aborts, on the other hand, are pure waste.

Some unnecessary aborts cannot be avoided, because the algorithms are built in such a way that certain situations always cause aborts. Other aborts can be avoided by adapting the software or the algorithm so that atomicity is maintained when such a situation occurs.

2.4 Unnecessary Aborts in STM

2.4.1 What Are They?

Our first discussion was, in fact, about determining what unnecessary aborts are.

The narrowest and most accurate definition of an unnecessary abort has two parts. It is an abort that we can avoid (by a software or hardware mechanism), and/or one that was not caused by an actual conflict in the object version or any other real conflict. Instead, it was caused by a step in the algorithm that classifies such states as "risky" and therefore aborts whenever they are entered.


In other words, an unnecessary abort is an abort that should not have occurred.

We discussed the TM abstraction in terms of resource utilization: a writing transaction accesses an object to write to it, and a reading transaction accesses an object to read from it. In TL2, the rule of thumb is that a transaction works against a fixed snapshot of the version clock, its read-version rv. Every object it reads must carry a version no newer than rv. Hence, to maintain correctness in TL2, the versions of the objects a transaction reads must remain steady while it runs.

2.4.2 Why do they happen?

There is more than one way to look at unnecessary aborts; at times, they may not seem unnecessary at all.

The point is that most TM implementations abort one

transaction whenever two overlapping transactions access

the same object and at least one access is a write.

While easy to implement, this approach may lead to high

abort rates, especially in situations with long-running

transactions and contended shared objects.

The TM algorithms created several odd situations.

Figure 4 - Aborts

For example, the figure clearly shows an abort that TL2 should have avoided. What happened was that the algorithm saw that two transactions accessed the same object and simply aborted one of them.

Figure 5 - Example Of Unnecessary Aborts

This occurs in most TM algorithms, in many forms: the run could continue without violating correctness, yet the transaction aborts.

Naturally, the question arises: why would TM algorithms allow such a state?

The conditions discussed are difficult to check for correctness. To check them, the algorithm would need to inspect the program's run speculatively. There is therefore a trade-off between the "permissiveness" of the algorithm, in terms of the conditions it allows, and the latency that the correctness check adds.

For many TM algorithms, the computational cost of such a correctness check has been shown to be high.

The main point is that, because of this phenomenon, most algorithms have low permissiveness, which affects their commit rate and performance (a claim we examine statistically in this project).


2.4.3 How do we detect them?

In the article "On Avoiding Spare Aborts in Transactional Memory", Prof. Idit Keidar and Dmitri Perelman show that transactions, when recorded correctly in the objects' read lists and write lists, point to each other and form a precedence graph.

The precedence graph reflects the dependencies among the transactions as they are created during the run. The vertices of the precedence graph are transactions, and its edges are the dependencies between them; an edge (T1,T2) means that T1 must precede T2. For example, consider two transactions T1 and T2 and an object O1. If T2 reads the version of O1 written by T1, the precedence graph contains an edge (T1,T2) labeled RaW (read after write). If T2 overwrites the version of O1 written by T1, the graph contains an edge (T1,T2) labeled WaW (write after write). If T1 reads a version of O1 that T2 later overwrites (T1 reads version n-1 and T2 writes version n), the edge (T1,T2) is labeled WaR (write after read).

Figure 6 - Precedence Graph
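To make the edge rules concrete, the following Java sketch derives the labeled edges that a single object's version history contributes to the precedence graph. It is a minimal illustration, not the project's code: the `ObjVersion`, `PgEdge` and `EdgeBuilder` names are hypothetical, each version is assumed to record its writer and the set of transactions that read it, and an edge (Ti,Tj) is taken to mean that Ti must be serialized before Tj.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

// Edge labels of the precedence graph.
enum DepType { RAW, WAW, WAR }

// A labeled edge: 'from' must be serialized before 'to'.
final class PgEdge {
    final String from;
    final String to;
    final DepType type;

    PgEdge(String from, String to, DepType type) {
        this.from = from;
        this.to = to;
        this.type = type;
    }

    @Override
    public String toString() {
        return "(" + from + "," + to + ") " + type;
    }
}

// Hypothetical record of one committed version of an object.
final class ObjVersion {
    final String writer;                               // transaction that wrote it
    final Set<String> readers = new LinkedHashSet<>(); // transactions that read it

    ObjVersion(String writer) {
        this.writer = writer;
    }
}

final class EdgeBuilder {
    // Derives the edges contributed by one object's versions, oldest first.
    static List<PgEdge> edgesFor(List<ObjVersion> versions) {
        List<PgEdge> edges = new ArrayList<>();
        for (int i = 0; i < versions.size(); i++) {
            ObjVersion v = versions.get(i);
            // RaW: each reader saw the value produced by this version's writer.
            for (String r : v.readers) {
                if (!r.equals(v.writer)) edges.add(new PgEdge(v.writer, r, DepType.RAW));
            }
            if (i + 1 < versions.size()) {
                String nextWriter = versions.get(i + 1).writer;
                // WaW: the next writer overwrote this version.
                if (!v.writer.equals(nextWriter)) edges.add(new PgEdge(v.writer, nextWriter, DepType.WAW));
                // WaR: readers of this version precede the transaction that overwrote it.
                for (String r : v.readers) {
                    if (!r.equals(nextWriter)) edges.add(new PgEdge(r, nextWriter, DepType.WAR));
                }
            }
        }
        return edges;
    }
}
```

For a history in which T2 read T1's version of an object and T3 later overwrote it, `edgesFor` returns the edges (T1,T2) RAW, (T1,T3) WAW and (T2,T3) WAR, in that order.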

With the precedence graph defined, we can use the following result to interpret it.


Corollary 1. Consider a TM that maintains object version lists, keeps PG acyclic, and forcefully aborts a set $S$ of live transactions only when aborting any subset $S' \subsetneq S$ of transactions creates a cycle in PG. Then this TM satisfies opacity and online opacity-permissiveness.

This is proven in the article.

In practice, this means that if the precedence graph remains acyclic after the transactions' dependencies are added to it, and a transaction still aborts, then that abort was unnecessary.

To detect unnecessary aborts, we can review the speculative run of the aborted transaction and build a speculative precedence graph as described above. In this graph we check (using DFS or other means) for cycles containing the aborted transaction. If there are no such cycles, the abort was unnecessary. Otherwise the abort was necessary, since the correctness of the run could not have been maintained had the transaction not aborted.

In short, to analyze aborts we must record the run of each transaction, place it in a precedence graph, and check the graph for cycles.
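The cycle check itself can be sketched with the standard library alone. The sketch below is a hypothetical stand-in (the project used JGraphT, as described in the implementation part). The graph is a plain adjacency map from a transaction ID to its successors. A cycle contains the aborted transaction t exactly when t is reachable from one of its own successors, and an iterative depth-first search checks this.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

final class PrecedenceGraph {
    // Adjacency map: transaction ID -> IDs of the transactions it must precede.
    private final Map<String, Set<String>> succ = new HashMap<>();

    void addEdge(String from, String to) {
        succ.computeIfAbsent(from, k -> new HashSet<>()).add(to);
        succ.computeIfAbsent(to, k -> new HashSet<>());
    }

    // True if some cycle passes through t, i.e. t is reachable from its successors.
    boolean hasCycleThrough(String t) {
        Deque<String> stack = new ArrayDeque<>();
        Set<String> visited = new HashSet<>();
        for (String s : succ.getOrDefault(t, Set.of())) stack.push(s);
        while (!stack.isEmpty()) {
            String v = stack.pop();
            if (v.equals(t)) return true;        // returned to t: cycle found
            if (!visited.add(v)) continue;       // already explored
            for (String w : succ.getOrDefault(v, Set.of())) stack.push(w);
        }
        return false;
    }
}
```

An abort of `t` would then be classified as unnecessary when `hasCycleThrough(t)` returns false after the aborted transaction's dependencies have been added. Only cycles through `t` matter: a cycle elsewhere in the graph (for example T1→T2→T3→T1 when checking T4) does not make T4's abort necessary.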

We also want more information than the abort ratio and the ratio of unnecessary aborts; these additional metrics are discussed further in the evaluation part.

2.4.4 Example: Aborts in TL2

We now review the TL2 algorithm and point out where aborts can occur:

For Write Transactions:

1. Sample global version-clock: Load the current value of the global version clock and store it in a thread-local variable called the read-version number (rv). This value is later used to detect recent changes to data fields, by comparing it to the version fields of their versioned write-locks.


2. Run through a speculative execution: Execute the transaction code. Locally maintain a read-set of the addresses loaded and a write-set of the address/value pairs stored. This logging functionality is implemented by augmenting loads with instructions that record the read address, and by replacing stores with code that records the address and the value to be written. The transactional load first checks whether the load address already appears in the write-set; if so, it returns the last value written to that address. A load instruction that samples the associated lock is inserted before each original load, which is then followed by post-validation code checking that the location's versioned write-lock is free and has not changed. Additionally, it makes sure that the lock's version field is smaller than or equal to rv and that the lock bit is clear. If the version is greater than rv, the memory location has been modified after the current thread performed step 1, and the transaction is aborted.

3. Lock the write-set: Acquire the locks in a way that avoids indefinite deadlock (for example, using bounded spinning). If not all of these locks are successfully acquired, the transaction fails.

4. Increment global version-clock: Upon successfully acquiring all the locks in the write-set, perform an increment-and-fetch (using a CAS operation) of the global version-clock, and record the returned value in a local write-version number variable wv.


5. Validate the read-set: For each location in the read-set, validate that the version number associated with its versioned write-lock is smaller than or equal to rv, and verify that the location has not been locked by another thread. If the validation fails, the transaction is aborted. Re-validating the read-set guarantees that its locations were not modified while steps 3 and 4 were being executed. In the special case where rv + 1 = wv, it is not necessary to validate the read-set, as it is guaranteed that no concurrently executing transaction could have modified it.

6. Commit and release the locks: For each location in the write-set, store the new value from the write-set to the location, and release the location's lock by setting its version to the write-version wv and clearing the write-lock bit.
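Steps 3 to 6 can be condensed into a single-process Java sketch. It is hypothetical and simplified, not TL2's or Deuce's actual code: each `Location` packs its version and lock bit into one `AtomicLong` lock word (`version << 1 | lockBit`, an assumption of this sketch), lock acquisition makes a single CAS attempt per lock instead of bounded spinning, and the read-set and write-set are assumed to have been filled by the speculative execution of step 2.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.concurrent.atomic.AtomicLong;

// A shared location guarded by a versioned write-lock.
// Assumed lock-word layout for this sketch: (version << 1) | lockBit.
final class Location {
    final AtomicLong lockWord = new AtomicLong(0);
    volatile long value;

    static boolean isLocked(long w) { return (w & 1L) != 0; }
    static long version(long w) { return w >>> 1; }
}

final class Tl2Commit {
    static final AtomicLong globalClock = new AtomicLong(0);

    // Steps 3-6 for a writing transaction that sampled rv in step 1.
    // Returns true on commit, false on abort.
    static boolean commit(long rv, Set<Location> readSet, Map<Location, Long> writeSet) {
        List<Location> locked = new ArrayList<>();
        // Step 3: one CAS attempt per lock, so the transaction never waits indefinitely.
        for (Location loc : writeSet.keySet()) {
            long w = loc.lockWord.get();
            if (Location.isLocked(w) || !loc.lockWord.compareAndSet(w, w | 1L)) {
                release(locked);
                return false;                          // lock acquisition failed
            }
            locked.add(loc);
        }
        // Step 4: atomic increment-and-fetch of the global version clock.
        long wv = globalClock.incrementAndGet();
        // Step 5: validate the read-set unless rv + 1 == wv.
        if (rv + 1 != wv) {
            for (Location loc : readSet) {
                long w = loc.lockWord.get();
                boolean lockedByOther = Location.isLocked(w) && !writeSet.containsKey(loc);
                if (lockedByOther || Location.version(w) > rv) {
                    release(locked);
                    return false;                      // read-set validation failed
                }
            }
        }
        // Step 6: write back, then release each lock with version wv and the lock bit cleared.
        for (Map.Entry<Location, Long> e : writeSet.entrySet()) {
            e.getKey().value = e.getValue();
            e.getKey().lockWord.set(wv << 1);
        }
        return true;
    }

    // Clears the lock bit of every acquired lock, keeping its old version.
    private static void release(List<Location> locked) {
        for (Location loc : locked) loc.lockWord.set(loc.lockWord.get() & ~1L);
    }
}
```

The two `return false` paths correspond to the lock-acquisition failure of step 3 and the read-set validation failure of step 5; on either path the acquired locks are released with their old versions, so the aborted transaction leaves no trace.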

Read-Only Transactions

1. Sample the global version-clock: Load the current value

of the global version-clock and store it in a local variable

called read-version (rv).

2. Run through a speculative execution: Execute the transaction code. Each load instruction is post-validated by checking that the location's versioned write-lock is free and that the lock's version field is smaller than or equal to rv. If it is greater than rv, the transaction is aborted; otherwise it commits.
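The post-validated load (step 2 in both lists) can be sketched in the same style. Again the lock-word layout and the `VersionedCell`, `Tl2Read` and `AbortException` names are assumptions of this sketch, and the write-set lookup that a writing transaction performs first is omitted.

```java
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical location: lock word = (version << 1) | lockBit.
final class VersionedCell {
    final AtomicLong lockWord = new AtomicLong(0);
    volatile long value;
}

// Thrown when post-validation fails.
final class AbortException extends RuntimeException {
    AbortException(String reason) { super(reason); }
}

final class Tl2Read {
    // Transactional load for a transaction that sampled read-version rv.
    static long read(VersionedCell cell, long rv) {
        long before = cell.lockWord.get();   // sample the lock before the load
        long v = cell.value;                 // the original load
        long after = cell.lockWord.get();    // post-validation sample
        boolean locked = (after & 1L) != 0;
        long version = after >>> 1;
        // Abort if the lock is held, changed during the load, or is newer than rv.
        if (locked || before != after || version > rv) {
            throw new AbortException("version " + version + " vs rv " + rv);
        }
        return v;
    }
}
```

A read-only transaction that completes every such load without an `AbortException` commits without any further validation, which is why its only abort point is this check.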

We can see that the TL2 algorithm has three abort types. The first is the "Version Incompatibility Abort", meaning that an object's version was changed by a writing transaction that overlaps this one. The second is the "Locks Abort", in which the transaction cannot acquire all the locks it needs to perform the operation (and fails rather than waiting, which prevents deadlocks). The last is the "Read-Set Invalidation Abort", which occurs when commit-time validation finds that a location in the read-set has changed or is locked by another thread.

These three abort types are the places in which the algorithm aborts. If we can measure how often each of them occurs, we may be able to offer a treatment that avoids these aborts at a low cost to performance.


3. Project Goal

Our goals are the following:

Analyze a given run statistically: check the number of aborts, the percentage of unnecessary aborts, the percentage of wasted work, and the impact of aborts and unnecessary aborts on performance and on load.

Analyze aborts by type: determine the main causes of aborts in several algorithms, and categorize and compare the results under different contention levels and run conditions.

Check general performance.

Inspect the collected data and answer the following question: "Will it pay off to add designs that stop the unnecessary aborts?" Meaning: are they worth addressing? Is their impact on performance so large that solving some of them would bring a practical, visible improvement in performance, speedup, and load?


4. Implementation

4.1 Overview

To accomplish our goals, we had to find a way to gather information about a program's run. The information we looked for was the order of transactional events (e.g. transaction starts, reads, writes, commits and aborts). Since analyzing the data while the program is still running may hurt the program's parallelism, we decided to split the implementation into three parts:

Designing the log file generated during the run for later analysis.

The offline analysis part – reading the log file written by the online part, and calculating the various statistics we attempt to measure.

The online logging part – running the program in an STM environment, and writing the transactional events into a log file.

This section focuses on the implementation of the classes we used to log and analyze TL2. Throughout the implementation we used the standard Java libraries, including their concurrency packages; the JGraphT library, which supplies a graph theory interface; the XML format and Java's SAX library; and object-oriented design patterns such as singleton, abstract factory and visitor.

4.2 The log file and parser

The first step in implementing our abort analyzer was planning what data would be written to the log file during the program's run. To know the cause of each and every abort, we needed data on the chronological order of the transactions' reads and writes, as well as the order in which transactions start, commit and abort.

To ease the parsing of the log file by our analyzer, and to impose a strict format on it, we decided to use XML. Using Java's SAX (Simple API for XML), we can access the log file with relative ease.


The XML log is built with a main "log" tag, which is the container for every action the online part logs. The log tag contains a number of records. Each record is called a "LogLine", is marked with a "line" tag, and represents a single action of a single transaction: start, read, write or commit.

The data we save for each action:

For transaction starts: the global version clock at that time.

For reads: an ID of the read object, the object's lock version, and the result of the read: a "version" tag containing the read object's current version on success, or, on abort, an "abort" tag (which we also use in commit lines). The abort tag also contains the read object's current version, and additionally has the "reason" for the abort as an attribute. While modifying the algorithm to log for us, we gave different names to the different causes of aborts, to distinguish between their types.

For writes: the object's ID.

For commits: on success, the write version for the written objects (0 if no objects were overwritten); on abort, an abort tag similar to the one described for read lines. This time, the abort tag's content is the version of the object preventing the transaction from committing.

Finally, the log's format is:


<log>
    <line type="start" txn=(transaction ID)>
        <rv>(global version clock value on transaction start)</rv>
    </line>
    <line type="read" txn=(transaction ID)>
        <obj>(object ID)</obj>
        <result>
            [On success: <version>0</version>]
            [On abort: <abort reason=(a string describing the reason for the abort)>(object current version)</abort>]
        </result>
    </line>
    <line type="write" txn=(transaction ID)>
        <obj>(object ID)</obj>
    </line>
    <line type="commit" txn=(transaction ID)>
        <result>
            [On success:
            <write-set>
                <wv>(transaction write version)</wv>
            </write-set>]
            [On abort: <abort reason=(a string describing the reason for the abort)>0</abort>]
        </result>
    </line>
</log>

Source Code 1 - Log Formation

This log structure is designed specifically for the TL2 algorithm. No "abort" tags are possible in start lines or write lines, since TL2 only samples the global version clock on start, and adds objects to the write-set on write. For other algorithms, the log's format may need to change.

The structure of the classes representing each line is as follows. The base class LogLine holds name: String, result: boolean, reason: String and opType: Enum Type. Four classes extend it (IS A): Log_StartLine (rv: long), Log_CommitLine (wv: Long), Log_ReadLine (object: String, version: Long) and Log_WriteLine (object: String).

Figure 7 - Structure of the classes representing the log

To store all the actions that were performed, we created a general "LogLine" class and four classes that extend it, one per action type. Each of these classes contains the data we wanted to save for its action type.
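The LogLine hierarchy and its visitors can be sketched as follows. The subclass and field names here are simplified and hypothetical (the report's classes are Log_StartLine, Log_ReadLine and so on, with the fields of Figure 7). The sketch only shows how `accept` dispatches each line to the matching `visit` overload, with an example visitor that counts aborts.

```java
// Visitor over the four line types (simplified, hypothetical signatures).
interface LogLineVisitor {
    void visit(StartLine l);
    void visit(ReadLine l);
    void visit(WriteLine l);
    void visit(CommitLine l);
}

abstract class LogLine {
    final String txn;                        // transaction name, e.g. "1_T0"
    LogLine(String txn) { this.txn = txn; }
    abstract void accept(LogLineVisitor v);  // double dispatch to the right visit()
}

final class StartLine extends LogLine {
    final long rv;
    StartLine(String txn, long rv) { super(txn); this.rv = rv; }
    @Override void accept(LogLineVisitor v) { v.visit(this); }
}

final class ReadLine extends LogLine {
    final String object;
    final long version;
    final boolean result;                    // false when the read aborted
    final String reason;                     // abort reason, null on success
    ReadLine(String txn, String object, long version, boolean result, String reason) {
        super(txn);
        this.object = object;
        this.version = version;
        this.result = result;
        this.reason = reason;
    }
    @Override void accept(LogLineVisitor v) { v.visit(this); }
}

final class WriteLine extends LogLine {
    final String object;
    WriteLine(String txn, String object) { super(txn); this.object = object; }
    @Override void accept(LogLineVisitor v) { v.visit(this); }
}

final class CommitLine extends LogLine {
    final long wv;
    final boolean result;
    final String reason;
    CommitLine(String txn, long wv, boolean result, String reason) {
        super(txn);
        this.wv = wv;
        this.result = result;
        this.reason = reason;
    }
    @Override void accept(LogLineVisitor v) { v.visit(this); }
}

// Example visitor: in TL2 aborts can only appear in read and commit lines.
final class AbortCounter implements LogLineVisitor {
    int aborts;
    @Override public void visit(StartLine l) { }
    @Override public void visit(ReadLine l) { if (!l.result) aborts++; }
    @Override public void visit(WriteLine l) { }
    @Override public void visit(CommitLine l) { if (!l.result) aborts++; }
}
```

Adding a new analysis then means writing one more visitor class, without touching the LogLine classes themselves.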


For each type of LogLine we created a SingleLineFactory – a class with a single method, "createLine", which receives an XML node and, using the XML DOM, returns the matching LogLine. To determine which factory to use, we created a general "LogLineFactory" singleton class, which reads the type of the LogLine from the "type" attribute of each "line" tag and calls the matching factory, using a Map with strings as keys and SingleLineFactories as values.

With these classes, we can read an XML log file using the LogLineFactory.parseLogFile method, which takes one XML node at a time and calls the matching factory. The method produces a list of LogLines; we assume the list maintains the order of insertion.
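A minimal sketch of the factory dispatch follows, using the JDK's DOM parser since `createLine` is described as receiving an XML node. To keep it self-contained, each hypothetical factory returns a short summary string instead of a LogLine. The attribute values are quoted, as well-formed XML requires.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical single-line factory: builds one parsed line from a <line> element.
interface SingleLineFactory {
    String createLine(Element line);
}

final class LineFactoryRegistry {
    private static final LineFactoryRegistry INSTANCE = new LineFactoryRegistry();
    // Keyed by the value of the "type" attribute.
    private final Map<String, SingleLineFactory> factories = new HashMap<>();

    private LineFactoryRegistry() {
        factories.put("start", e -> "start " + e.getAttribute("txn") + " rv="
                + e.getElementsByTagName("rv").item(0).getTextContent().trim());
        factories.put("read", e -> "read " + e.getAttribute("txn") + " obj="
                + e.getElementsByTagName("obj").item(0).getTextContent().trim());
        factories.put("write", e -> "write " + e.getAttribute("txn"));
        factories.put("commit", e -> "commit " + e.getAttribute("txn"));
    }

    static LineFactoryRegistry getInstance() { return INSTANCE; }

    // Parses the whole log; the result preserves document order.
    List<String> parseLog(String xml) {
        Document doc;
        try {
            doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        } catch (Exception ex) {
            throw new IllegalArgumentException("malformed log", ex);
        }
        NodeList nodes = doc.getElementsByTagName("line");
        List<String> out = new ArrayList<>();
        for (int i = 0; i < nodes.getLength(); i++) {
            Element e = (Element) nodes.item(i);
            SingleLineFactory f = factories.get(e.getAttribute("type"));
            if (f == null) {
                throw new IllegalArgumentException("unknown line type: " + e.getAttribute("type"));
            }
            out.add(f.createLine(e));
        }
        return out;
    }
}
```

Because `getElementsByTagName` returns elements in document order, the resulting list preserves the chronological order of the log.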

After the factory finishes the parsing of the file, we have no further use

for it since we have all the data in our list. The list will now be processed

by the analyzer.

4.3 The offline analysis

There are two stages to the offline analysis:

Parsing the log file.

Analyzing the statistics.

Using a "divide and conquer" approach, we designed a separate package for each of these stages: the "ofersParser" package, which contains the means to parse the log file (the LogLine classes and the factory classes discussed earlier), and the "analyzer" package, which is called by the parser once the log's data is stored in classes rather than in the XML file. The analyzer package maintains a database of transactions, dependencies and actions performed, as well as the statistics we are attempting to measure.


[Figure: XML log → Parser → run description → Analyzer → run description data structure → Abort analyzer]

Figure 8 - A schematic description of the offline analysis part

In this scheme, the "Parser" unit receives the log file and generates a run description. The run description is the log lines as they were written to the log, this time as Java classes. The Parser passes it to the Analyzer unit, which reads the data and maintains a "Run Description Data Structure", which is a precedence graph. That precedence graph is passed to the "Abort analyzer" unit, which checks each abort's necessity.

The analyzer functions as an interpreter – it reads the lines one by one without looking ahead, and reacts to each line as it arrives.

The analyzer package contains three data collecting classes and three information storage classes. The storage classes are:

TxnDsc – contains general information about the transaction at the current stage of the run, as interpreted by the analyzer so far (since the analysis is offline, "so far" refers to the analyzer's progress through the list, not to the actual state of the program's run). These objects are the vertices of the precedence graph. A TxnDsc contains the transaction's ID, status (active, committed or aborted), read version, read-set and write-set.


ObjectVersion – contains information about a specific version of a single object: its version, the previous and next versions, the writer of this version and the set of readers of this version. While building the precedence graph, we access the ObjectVersions to determine which edges to add to the graph.

ObjectHandle – contains information about the object itself: its ID, the first and last versions, and the number of versions so far. Using the object handles, we can easily traverse an object's versions.

The other three classes are "LogLineVisitors" – they use the Visitor design pattern to distinguish between the LogLine types. Each of these classes has a different role in the analysis of the log:

Analyzer – keeps basic statistics of the run, and a database of TxnDscs that can be accessed for general purposes. The statistics available in the Analyzer are the abort and commit counts, the read and wasted-read counts, and the write and wasted-write counts (the latter are not meaningful for TL2, since its writes are only speculative and are not performed until commit). It also contains an "abort map" that counts the aborts of each type.

In general, the Analyzer tracks every statistic except unnecessary aborts, which are handled by the other classes. The Analyzer has the method "analyzeRun", which iterates over the LogLines list and calls all three data collecting classes to visit each line.

RunDescriptor – maintains the precedence graph. As recalled, the precedence graph is a graph whose vertices are the transactions and whose edges represent Read after Write (RaW), Write after Read (WaR) and Write after Write (WaW) dependencies. An enumerated type was created for the edges, while the vertices are TxnDscs. The RunDescriptor also visits each line and modifies the graph accordingly: it adds vertices on transaction starts, updates TxnDscs, ObjectHandles and ObjectVersions on reads, adds ObjectVersions and edges on commits, and does nothing on aborts.


The graph is built using the JGraphT package. The RunDescriptor class also has an "exportToVisio" method, which writes the precedence graph to a CSV file.

AbortAnalyzer – the missing piece of the RunDescriptor. This class's job is to detect unnecessary aborts. On each abort, it speculatively adds the matching dependency edges to the graph and tries to detect cycles containing the aborting transaction's vertex (using the RunDescriptor.hasCycle method). As recalled, a cycle in the graph means that the abort was necessary. The AbortAnalyzer counts the number of unnecessary aborts.
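The AbortAnalyzer's speculative check can be sketched as follows. This is a hypothetical, stdlib-only illustration rather than the project's JGraphT-based code. It adds the aborted transaction's dependency edges, checks for a cycle through its vertex, and then removes only the edges it added. That last step rests on our assumption that the graph should keep describing committed dependencies only.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

final class SpecGraph {
    final Map<String, Set<String>> succ = new HashMap<>();

    // Returns true only if the edge was not already present.
    boolean addEdge(String from, String to) {
        succ.computeIfAbsent(to, k -> new HashSet<>());
        return succ.computeIfAbsent(from, k -> new HashSet<>()).add(to);
    }

    void removeEdge(String from, String to) {
        Set<String> s = succ.get(from);
        if (s != null) s.remove(to);
    }

    // True if t lies on a cycle (iterative DFS from t's successors).
    boolean onCycle(String t) {
        Deque<String> stack = new ArrayDeque<>(succ.getOrDefault(t, Set.of()));
        Set<String> visited = new HashSet<>();
        while (!stack.isEmpty()) {
            String v = stack.pop();
            if (v.equals(t)) return true;
            if (visited.add(v)) {
                for (String w : succ.getOrDefault(v, Set.of())) stack.push(w);
            }
        }
        return false;
    }
}

final class SpeculativeAbortCheck {
    int unnecessary;

    // deps: the {from, to} edges the aborted transaction would have added.
    // Returns true if the abort is classified as unnecessary.
    boolean onAbort(SpecGraph g, String txn, List<String[]> deps) {
        List<String[]> added = new ArrayList<>();
        for (String[] e : deps) {
            if (g.addEdge(e[0], e[1])) added.add(e);       // remember only new edges
        }
        boolean necessary = g.onCycle(txn);
        for (String[] e : added) g.removeEdge(e[0], e[1]); // undo the speculation
        if (!necessary) unnecessary++;
        return !necessary;
    }
}
```

Removing only the newly added edges matters: an edge that was already present belongs to committed history and must survive the rollback.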

In summary (class – role):

TxnDsc – holds data concerning a single transaction.

ObjectVersion – holds data concerning one version of a certain object, and references to the previous and next versions.

ObjectHandle – holds data concerning a single object and all of its versions.

Analyzer – holds a transactions database and basic statistics.

RunDescriptor – holds the precedence graph describing the run.

AbortAnalyzer – when it encounters an abort, detects cycles in the precedence graph to determine the abort's necessity.


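[Figure: XML log → Parser → Analyzer (Run Descriptor; its output is a precedence graph showing the transactions and their actions) → Abort Analyzer → Matlab histograms and final analysis]

Figure 9 - Another View of the Offline Design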

Combining these three classes, we may measure:

Abort rate

Unnecessary abort rate

Wasted reads

Each abort type's impact on performance.
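The measurements listed above reduce to a few counters. The sketch assumes definitions the report does not spell out here: the abort rate is taken as aborts / (aborts + commits), and the unnecessary-abort rate as unnecessary aborts / aborts. The `AbortType` names mirror the three TL2 abort types of Section 2.4.4 but are our own, as are `RunStats` and its methods.

```java
import java.util.EnumMap;
import java.util.Map;

// The three TL2 abort types (hypothetical enum names).
enum AbortType { VERSION_INCOMPATIBILITY, LOCKS, READ_SET_INVALIDATION }

final class RunStats {
    int commits;
    int unnecessaryAborts;
    // Per-type abort counts, in the spirit of the Analyzer's "abort map".
    final Map<AbortType, Integer> abortsByType = new EnumMap<>(AbortType.class);

    void onCommit() { commits++; }

    void onAbort(AbortType type, boolean unnecessary) {
        abortsByType.merge(type, 1, Integer::sum);
        if (unnecessary) unnecessaryAborts++;
    }

    int aborts() {
        return abortsByType.values().stream().mapToInt(Integer::intValue).sum();
    }

    // Fraction of transaction attempts that ended in an abort.
    double abortRate() {
        int total = aborts() + commits;
        return total == 0 ? 0 : (double) aborts() / total;
    }

    // Fraction of aborts that were unnecessary.
    double unnecessaryAbortRate() {
        int a = aborts();
        return a == 0 ? 0 : (double) unnecessaryAborts / a;
    }
}
```

An `EnumMap` keeps the per-type counts compact and iterates them in declaration order, which is convenient when exporting histograms.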

In conclusion, we have three classes which iterate over every LogLine,

and maintain a wide database of transactions and statistics, along with

the matching precedence graph. These classes allow us to read a log

generated by a program and reach the statistics we need. Our final step

is to make an STM environment do that for us.

4.4 The online logging

As recalled, the online logging part's aim is to monitor a running program

and generate a log file, matching the format we discussed earlier.

The online logging part also consists of two stages. The first is creating the means for logging an algorithm in the XML format: building a "Logger" mechanism to which the STM environment reports every transaction's actions. The second is modifying the TL2 algorithm with logging actions so that it reports every action to the logger.

The STM framework we modify is Deuce STM, discussed in the

background part.

[Figure: the Deuce framework runs the TL2 algorithm; the logging code passes each transaction's start, read, write and commit actions to the Logger, which writes them to the XML log file]

Figure 10 - A schematic description of the online logging part

The Logger mechanism was very problematic to implement. First, we had to build it with a minimal number of actions, since long insertions into highly complex data structures may distort the concurrency levels in the program. Second, we had to make sure the LogLines reach the log in their chronological order.

The most basic class in the Logger implementation is the LogCreator class. The LogCreator is a singleton, and is in charge of receiving data and writing it directly to the log file. To pass the data to the LogCreator, we reused the LogLine classes from the ofersParser package, so LogCreator is a LogLine visitor. When we call LogCreator.getInstance().visit(LogLine), the LogCreator writes the LogLine directly to the file in XML format. For this purpose we wrote the XML manually, rather than using the XML DOM.
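Writing the XML by hand can be sketched as below, for a read line in the format of Source Code 1. The `XmlLineWriter` class is hypothetical and writes to any `java.io.Writer`. Unlike the format listing, it quotes attribute values and escapes XML special characters, which a well-formed log requires.

```java
import java.io.IOException;
import java.io.StringWriter;
import java.io.UncheckedIOException;
import java.io.Writer;

final class XmlLineWriter {
    private final Writer out;

    XmlLineWriter(Writer out) { this.out = out; }

    // Escapes XML special characters; '&' must be replaced first.
    static String esc(String s) {
        return s.replace("&", "&amp;").replace("<", "&lt;").replace(">", "&gt;")
                .replace("\"", "&quot;").replace("'", "&apos;");
    }

    // Writes one read line; on abort the <abort> tag carries the reason attribute.
    void writeRead(String txn, String obj, long version, boolean ok, String reason) {
        StringBuilder sb = new StringBuilder();
        sb.append("<line type=\"read\" txn=\"").append(esc(txn)).append("\">")
          .append("<obj>").append(esc(obj)).append("</obj><result>");
        if (ok) {
            sb.append("<version>").append(version).append("</version>");
        } else {
            sb.append("<abort reason=\"").append(esc(reason)).append("\">")
              .append(version).append("</abort>");
        }
        sb.append("</result></line>\n");
        try {
            out.write(sb.toString());   // one write call per line
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

For example, a `StringWriter` passed to the constructor collects the lines in memory, which is convenient for checking the output format before writing to a file.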

LogCreator also has the "flush" and "quit" methods. Both of them close the log tag in the XML file and write out the data accumulated in the LogCreator's Writer. The quit method also waits until all the data has been flushed, and then closes the log file.


The Logger interface implementation is in charge of holding the

LogLines generated by each thread with TL2 in order of instantiation. It

has the following methods:

public interface Logger {
    public void startTxn(String txnId, long rv);
    public void readOp(String txnId, String objId, long verNum,
            boolean result, String reason);
    public void writeOp(String txnId, String objId);
    public void commitOp(String txnId, long wv, boolean result,
            String reason);
    public void stop();
}

Source Code 2 - Logger Interface

Each method receives the data required to generate a LogLine, and then sends it to the LogCreator for writing in the log.

The Logger is thread-local, because making the threads take turns using a single shared Logger may be costly in terms of parallelism. With thread-local Loggers, each thread writes independently to its own private Logger.

To take load off the running threads, we decided that, alongside them, a background collector thread would collect the LogLines. The Collector is also responsible for arranging the LogLines in their chronological order.

We made two attempts at building the collector.

First, we created the LogLineHolder class. It is a container for a LogLine and an integer called serialNum, representing the line's index; a lower serialNum means an earlier appearance. The index is generated by a shared AtomicInteger. Each logger that needs to add a line reads this integer, uses its value as the line's index, and increments it, all in one atomic step (using the AtomicInteger.getAndIncrement() method).

In both of our attempts, each Logger holds a Queue of LogLineHolders. Inserting an element into the Queue takes O(1) time, and the FIFO rule ensures that each thread's lowest-indexed lines are handled first.
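The LogLineHolder scheme can be sketched as follows. The names are hypothetical, and the choice of `ConcurrentLinkedQueue` is our assumption (the report does not say which Queue implementation was used). A thread-safe queue is needed because the owning thread adds lines while the collector polls them.

```java
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.atomic.AtomicInteger;

// Pairs a logged line with its global position in the log.
final class LineHolder<T> {
    final int serialNum;
    final T line;
    LineHolder(int serialNum, T line) { this.serialNum = serialNum; this.line = line; }
}

final class PerThreadLog<T> {
    // Shared by all threads: a lower serialNum means an earlier event.
    static final AtomicInteger NEXT_SERIAL = new AtomicInteger(0);
    // Only the owning thread adds; a collector thread may poll concurrently.
    final Queue<LineHolder<T>> queue = new ConcurrentLinkedQueue<>();

    void log(T line) {
        // Take the next index and enqueue: O(1) work on the running thread.
        queue.add(new LineHolder<>(NEXT_SERIAL.getAndIncrement(), line));
    }
}
```

Because the index is taken before the line is enqueued, a collector may briefly see line n+1 before line n becomes visible; the counter check in getNextLine, described later in this section, handles this by waiting for the missing index.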

In our first attempt, the algorithm used a priority queue of LogLineHolder queues. The priority of each queue is determined by the index of its head element. In the background, a collector thread pops the top queue from the priority queue, takes its first element, and writes it to the log by calling LogCreator. If that queue is now empty, the Collector does not insert it back into the priority queue, which means each thread must reinsert its queue when it fills up again. Insertion into a PriorityQueue takes O(log n) time and may be costly, so we decided to refrain from using it.


A schematic description of the first attempt:

Threads add the loggers to the queue themselves.

Figure 11 - Online Part version 1

In our second attempt, we focused on making the running threads more passive: each thread inserts its LogLineHolders into its own queue. In the background, a new Collector thread, named BackendCollector, tries to get the next line each time and adds it to the log. The BackendCollector keeps running as long as a Boolean value called "stop" is false or there are more lines to collect:

public void run() {
    while (true) {
        LogLine line = qm.getNextLine();
        if (line == null) {
            // Nothing ready: exit only once stop was requested, otherwise poll again.
            if (stop)
                break;
            else
                continue;
        }
        try {
            // The LogCreator visitor writes the line to the XML file.
            line.accept(LogCreator.getInstance());
        } catch (IOException e) {
            e.printStackTrace();
            return;
        }
    }
    try {
        // Close the log tag and the file once every line has been written.
        LogCreator.getInstance().quit();
    } catch (IOException e) {
        e.printStackTrace();
    }
}

Source Code 3 - Background Collector Run method

The qm used at the start of the loop in run() is the QueueManager, which is explained later. Its getNextLine() method returns either the next line in the log, or null if no matching line exists. If line is null, the Collector keeps asking for the next line until stop is set. When the Collector finds a line, it sends it to the LogCreator using the line.accept(LogLineVisitor) method. At the end, the Collector closes the log.

A schematic description of our second attempt:

Collector iterates through the loggers; threads remain passive.

Figure 12 - Online Part Final Version

The BackendCollector is very inefficient, since its search function is trivial, but its performance does not matter to us. Our only requirement for it is that it interferes minimally with the running program: writing the log file may take longer, as long as concurrency remains unharmed. With this algorithm, the threads' only tasks are to access an AtomicInteger and to add a LogLineHolder to a thread-local queue.

The Logger's matching implementation is called AsyncLogger. It

contains the AtomicInteger for the indexes, and a LogLineHolder queue

for the lines. We also built an "AsyncLoggerFactory", which holds the

ThreadLocal Logger. Threads may access the factory to get their

Logger, which calls the ThreadLocal.get () method.
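A thread-local logger factory can be sketched with `ThreadLocal.withInitial`. `ThreadLogger` and `ThreadLoggerFactory` are hypothetical stand-ins for AsyncLogger and AsyncLoggerFactory. Each thread lazily receives its own instance on its first `getLogger()` call, so no synchronization is needed on the logging path.

```java
// Hypothetical stand-in for AsyncLogger: remembers which thread created it.
final class ThreadLogger {
    final long ownerThreadId = Thread.currentThread().getId();
}

// Hypothetical stand-in for AsyncLoggerFactory (a singleton).
final class ThreadLoggerFactory {
    private static final ThreadLoggerFactory INSTANCE = new ThreadLoggerFactory();
    // Each thread lazily receives its own logger on its first get().
    private final ThreadLocal<ThreadLogger> perThread = ThreadLocal.withInitial(ThreadLogger::new);

    private ThreadLoggerFactory() { }

    static ThreadLoggerFactory getInstance() { return INSTANCE; }

    ThreadLogger getLogger() { return perThread.get(); }
}
```

Repeated calls from the same thread return the same logger, while a different thread receives a different one; this is what lets each thread append to its own queue without locking.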

The final unit in the Logger mechanism is called the QueueManager. Its job is to supply the BackendCollector with the next line in the queue each time.

QueueManager is a singleton class. Its constructor is what starts the Collector thread:

BackendCollector collector = new BackendCollector(this);
Thread t;

private QueueManager() {
    t = new Thread(collector);
    t.start();
}

Source Code 4 - Queue Manager C'tor

With the thread running early on, the logger is ready to accept LogLines

from the Threads.

The QueueManager holds a map with longs (thread IDs) as keys and LogLineHolder queues as values. The AsyncLogger C'tor adds its queue to the QueueManager's map using the "addQueueIfNotExists" method, which maps the current thread's ID, obtained with Thread.currentThread().getId(), to the queue:

public void addQueueIfNotExists(Queue<LogLineHolder> q) {
    long curThreadId = Thread.currentThread().getId();
    if (!threadQueues.containsKey(curThreadId)) {
        threadQueues.put(curThreadId, q);
    }
}

Source Code 5 - Add Queue Method

The method "getNextLine", which is used by the Collector, uses a PriorityQueue of LogLineHolders, sorted by index, called "LogLineCache".

LogLineCache is filled by a method called "traverseQueues": if the cache doesn't hold the next line in order, the Collector uses this method to loop over all the queues and move the head LogLine of each non-empty queue into the cache. If none are found, the method returns false, and "getNextLine" returns null, making the Collector check the stop condition.

The "getNextLine" method identifies the next line in order using an int named "counter", which is initialized to 0 and incremented on every line returned; the lines' indexes are expected to match the counter.

public LogLine getNextLine() {
    boolean empty = false;
    while (!empty) {
        LogLineHolder cacheHead = logLinesCache.peek();
        if (cacheHead != null && cacheHead.serialNum == counter) {
            // The cached head is exactly the next line in global order.
            counter++;
            return logLinesCache.poll().line;
        }
        // Otherwise pull more lines from the per-thread queues into the cache.
        empty = !traverseQueues();
    }
    return null;
}

private boolean traverseQueues() {
    boolean smtFound = false;
    // Move the head of every thread's queue into the index-ordered cache.
    for (Map.Entry<Long, Queue<LogLineHolder>> entry : threadQueues.entrySet()) {
        LogLineHolder lineHolder = entry.getValue().poll();
        if (lineHolder != null) {
            smtFound = true;
            logLinesCache.add(lineHolder);
        }
    }
    return smtFound;
}

Source Code 6 - getNextLine & traverseQueues methods

Using these, the collector keeps taking lines from the queues as long as the "stop" Boolean value is false or there are more lines in the queues.

To stop the Collector and end the run, the user calls QueueManager.getInstance().stop(). This method sets "stop" to true and uses Thread.join() to wait for the Collector to finish; the call is required in order to complete the Collector's run.

With these interfaces in hand, the only task remaining is to embed the

Logger mechanism in the TL2 implementation.

Deuce STM has an interface called "org.deuce.transaction.Context". As recalled, the Deuce framework automatically routes a transaction's reads and writes to the Context implementation, which allows any user to implement an STM algorithm. The user has two roles: implementing the STM algorithm, and adding the "@Atomic" annotation to each method that should be a critical section. Deuce turns the method into a transaction.

We modified an existing TL2 implementation. As we discussed earlier,

we want to log each and every read and write.

Our additions to the TL2 Context, beside calls to the logger were:

final static int writtenByThis = -1;
private String name = "";
private final ThreadLocal<Integer> txnId = new ThreadLocal<Integer>() {
    @Override
    protected Integer initialValue() {
        return 0;
    }
};
private final Logger logger;

Source Code 7 - Addition to the TL2 context

In the Context's C'tor:

logger = LoggerFactory.getInstance ().getLogger ();

Source Code 8 - Change in context C'tor


And in the "init ()" method:

this.name = Thread.currentThread().getId() + "_T" + txnId.get();
txnId.set(txnId.get() + 1);
logger.startTxn(name, this.localClock);

Source Code 9 - changes in TL2 Context init()

The integer "writtenByThis" is a constant. Whenever a transaction

reads something written by it, the logger would receive a read of

the integer's value (currently -1) to indicate it.

The "name" string holds the transaction's name, calculated in the "init" method each time a new transaction starts.

The "txnId" field is actually a counter. It is ThreadLocal, so each thread has its own instance, initialized to 0. Whenever a transaction starts, its name is built from the thread's Id and this counter. Each new transaction increments the counter, so different transactions receive different Ids.
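The naming scheme can be tried on its own. This is a minimal sketch: the class TxnNaming and the method nextName are hypothetical. Only the per-thread counter starting at 0 and the "<threadId>_T<counter>" format come from Source Code 7 and Source Code 9.

```java
public class TxnNaming {
    // One counter per thread, each starting at 0 (mirrors the txnId field).
    private static final ThreadLocal<Integer> txnId = ThreadLocal.withInitial(() -> 0);

    // Builds "<threadId>_T<counter>" and advances this thread's counter.
    public static String nextName() {
        String name = Thread.currentThread().getId() + "_T" + txnId.get();
        txnId.set(txnId.get() + 1);
        return name;
    }

    public static void main(String[] args) throws InterruptedException {
        Runnable body = () -> {
            for (int i = 0; i < 2; i++) {
                System.out.println(nextName());
            }
        };
        Thread a = new Thread(body);
        Thread b = new Thread(body);
        a.start();
        b.start();
        a.join();
        b.join();
    }
}
```

Since thread Ids are distinct among live threads and each counter only grows, two transactions never receive the same name while their threads are alive.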

Within the C'tor, the Context saves a reference to its thread's logger. This way, the methods can use the "logger" field directly instead of querying the LoggerFactory on every logging operation.

When a transaction starts, the "init" method is called. The new transaction receives a new name, composed of the thread's Id and the transaction counter. As recalled, the ThreadLocal "txnId" is then incremented, and the first call to the logger within the transaction is made. This means that on each "init", the logger writes the transaction's start to the log, along with the value of the global version clock sampled at that point (localClock).

This settles the new fields.

In the original TL2 implementation, a single static TransactionException instance was stored in the LockTable class and was thrown by all transactions for every abort. Sharing one instance damaged neither concurrency nor correctness, since the exception carried no per-transaction information. However, it also carried no information about the reason for the abort. To distinguish between the different abort causes, we created a new exception type for each abort cause in the LockTable:

LockVersionException, in case the lock's version is newer than the transaction's local clock.

ObjectLockedException, in case the lock is held by another thread.

We did not need an exception for every abort type in the whole algorithm. For instance, an exception for a failed read-set validation is unnecessary, since Deuce does not throw any exception for it anyway (the check is performed in the Context class). To maintain Deuce's correctness, the new exceptions extend TransactionException, so the Deuce framework catches them the same way it caught the original exception.

Since each abort carries its own information (the object and the version that caused it), we could not share a single instance of each exception among all transactions, as was done with the old TransactionException. However, each thread runs a single transaction at a time, so a thread needs at most one instance of each exception at any moment. Therefore, to preserve memory, we made the new exceptions ThreadLocal. Each time an abort occurs, the thread takes its own instance, fills in the relevant data, and throws it as usual. When we catch it within the TL2 Context methods, we read its data, send it to the logger, and rethrow it to maintain the Deuce framework's correctness. This is safe: only the owning thread accesses its instances, and the transaction stops once the exception is thrown, so an instance is not overwritten while its data is still needed. The new exceptions reside in the LockTable class, since lock states and object versions are checked there:


private static ThreadLocal<ObjectLockedException> lockedException =
        new ThreadLocal<ObjectLockedException>() {
    @Override
    public ObjectLockedException initialValue() {
        return new ObjectLockedException("Object is locked.", 0);
    }
};

private static ThreadLocal<LockVersionException> versionException =
        new ThreadLocal<LockVersionException>() {
    @Override
    public LockVersionException initialValue() {
        return new LockVersionException("Object is locked.", 0);
    }
};

Source Code 10 - The new exceptions in LockTable
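The report does not list the exception classes themselves, so the following is a hypothetical reconstruction of how such a reusable, per-thread exception could look. Only the names getLockVersion, throwWithVersion and the (message, version) constructor come from the listings; the bodies, the stand-in TransactionException (used so the sketch compiles without Deuce) and the class ReusableExceptions are assumptions.

```java
public class ReusableExceptions {
    // Stand-in for org.deuce.transaction.TransactionException (assumption).
    public static class TransactionException extends RuntimeException {
        public TransactionException(String message) {
            super(message);
        }
    }

    // Hypothetical reconstruction of LockVersionException.
    public static class LockVersionException extends TransactionException {
        private int lockVersion;

        public LockVersionException(String message, int lockVersion) {
            super(message);
            this.lockVersion = lockVersion;
        }

        public int getLockVersion() {
            return lockVersion;
        }

        // Stores the version that caused the abort, then throws this same instance.
        public void throwWithVersion(int version) {
            this.lockVersion = version;
            throw this;
        }
    }

    // One preallocated instance per thread, created on the thread's first access.
    public static final ThreadLocal<LockVersionException> versionException =
            ThreadLocal.withInitial(() -> new LockVersionException("Lock version too high.", 0));

    public static void main(String[] args) {
        for (int v : new int[] {7, 9}) {
            try {
                versionException.get().throwWithVersion(v);
            } catch (LockVersionException e) {
                // Read the data now: the next abort on this thread overwrites it.
                System.out.println("aborted with version " + e.getLockVersion());
            }
        }
    }
}
```

Two consequences of reusing the instance: the catcher must copy the data it needs before the same thread can abort again (the Context does, since it logs immediately and rethrows), and the stack trace is the one recorded at construction time rather than at the throw site, which is acceptable for an exception that only signals an abort.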

We made additional changes to the TL2 classes, such as changing the return type of the LockTable.checkLock and LockProcedure.setAndUnlockAll methods from void to int, to obtain the lock version and the write version, respectively. We also added statements that throw the new exceptions. For instance:

if (clock < (lock & UNLOCK))
    versionException.get().throwWithVersion(lock & UNLOCK);

Source Code 11 - Exception throw instance

The if statement performs the actual lock version check. The statement within the if replaces the old TransactionException with the new versionException. UNLOCK is a mask constant, 0x7FFFFFFF (all bits set except the MSB), since a locked object is marked with 1 in the MSB of its lock word. The exception is thrown with lock & UNLOCK because passing the raw lock word of a locked object would yield a negative version. Similar changes were made to both checkLock methods.
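The masking can be checked in isolation. This is a minimal sketch, assuming the lock word layout described above: the MSB is the lock bit and the remaining 31 bits hold the version. The class LockWord and its helper methods are hypothetical names; only the UNLOCK mask and the test clock < (lock & UNLOCK) come from the listing.

```java
public class LockWord {
    static final int LOCK = 1 << 31;  // MSB set: the object is locked
    static final int UNLOCK = ~LOCK;  // 0x7FFFFFFF: clears the lock bit

    static boolean isLocked(int lock) {
        return (lock & LOCK) != 0;
    }

    // The version with the lock bit masked out; never negative.
    static int version(int lock) {
        return lock & UNLOCK;
    }

    // The TL2 check from Source Code 11: the object is newer than the transaction's clock.
    static boolean versionTooHigh(int lock, int clock) {
        return clock < version(lock);
    }

    public static void main(String[] args) {
        int lock = 42 | LOCK;                        // version 42, currently locked
        System.out.println(lock);                    // raw word is negative
        System.out.println(version(lock));           // 42
        System.out.println(isLocked(lock));          // true
        System.out.println(versionTooHigh(lock, 41)); // true
    }
}
```

Printing the raw word shows why the mask matters: with the MSB set, the int is negative, while the masked value is the plain version number.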


Since we do not want to interfere with the Deuce framework, we added another try/catch block in the "commit" method:

try {
    // pre commit validation phase
    writeSet.forEach(lockProcedure);
    readSet.checkClock(localClock);
} catch (ObjectLockedException exception) {
    lockProcedure.unlockAll();
    logger.commitOp(name, exception.getLockVersion(), false, "ReadsetInvalid");
    return false;
} catch (LockVersionException e) {
    lockProcedure.unlockAll();
    logger.commitOp(name, e.getLockVersion(), false, "ReadsetInvalid");
    return false;
}

Source Code 12 - Change in Commit method

In the "onReadAccess0" method:

try {
    // Check the read is still valid
    LockTable.checkLock(hash, localClock, lastReadLock);
} catch (ObjectLockedException e) {
    logger.readOp(name, objectId(obj, field), e.getLockVersion(), false, "ObjectLocked");
    throw e;
} catch (LockVersionException e) {
    logger.readOp(name, objectId(obj, field), e.getLockVersion(), false, "VersionTooHigh");
    throw e;
}

Source Code 13 - Changes in the onReadAccess method

And in the "beforeReadAccess" method:

try {
    // Check the read is still valid
    lastReadLock = LockTable.checkLock(next.hashCode(), localClock);
} catch (ObjectLockedException e) {
    logger.readOp(name, objectId(obj, field), e.getLockVersion(), false, "ObjectLocked");
    throw e;
} catch (LockVersionException e) {
    logger.readOp(name, objectId(obj, field), e.getLockVersion(), false, "VersionTooHigh");
    throw e;
}

Source Code 14 - Changes in beforeReadAccess method

The code we added to each method distinguishes between the abort types. Whenever the LockTable class is accessed, we attempt to catch the new exceptions. In the original implementation, onReadAccess0 had a simple catch (TransactionException), while commit had no try/catch block there at all: the TransactionException went directly back to the Deuce framework. We "intercept" the exceptions on their way and catch each of them separately. For each exception, we log the necessary data and rethrow it as usual.
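The intercept, log and rethrow pattern can be reduced to a small self-contained sketch. All names here are hypothetical: the exception classes are stand-ins for the project's exceptions, the in-memory list replaces the Logger interface, and the log line format is invented for illustration. The point it shows is that the caller (Deuce, in the real code) still receives the very same exception object after it has been logged.

```java
import java.util.ArrayList;
import java.util.List;

public class InterceptAndRethrow {
    // Stand-in base class playing the role of Deuce's TransactionException.
    public static class TransactionException extends RuntimeException {
        private final int lockVersion;

        public TransactionException(String message, int lockVersion) {
            super(message);
            this.lockVersion = lockVersion;
        }

        public int getLockVersion() {
            return lockVersion;
        }
    }

    public static class ObjectLockedException extends TransactionException {
        public ObjectLockedException(int version) {
            super("Object is locked.", version);
        }
    }

    public static class LockVersionException extends TransactionException {
        public LockVersionException(int version) {
            super("Lock version too high.", version);
        }
    }

    // Hypothetical in-memory log standing in for the Logger interface.
    public static final List<String> log = new ArrayList<>();

    // Runs a lock check, logs the outcome, and rethrows any abort unchanged.
    public static void checkedRead(String txn, String objectId, Runnable lockCheck) {
        try {
            lockCheck.run();
        } catch (ObjectLockedException e) {
            log.add(txn + " read " + objectId + " v" + e.getLockVersion() + " ObjectLocked");
            throw e; // the framework still receives the abort
        } catch (LockVersionException e) {
            log.add(txn + " read " + objectId + " v" + e.getLockVersion() + " VersionTooHigh");
            throw e;
        }
        log.add(txn + " read " + objectId + " ok");
    }

    public static void main(String[] args) {
        checkedRead("1_T0", "obj", () -> { });
        try {
            checkedRead("1_T1", "obj", () -> { throw new LockVersionException(5); });
        } catch (TransactionException e) {
            System.out.println("rethrown: " + e.getClass().getSimpleName());
        }
        log.forEach(System.out::println);
    }
}
```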

Using our Logger interface and the "logger" field we added, we inserted calls to the logging operations at two kinds of points:

When a TransactionException is thrown (to mark an abort)

When a method completes successfully (to mark a successful operation)

In each of the methods "init" (as seen earlier), "onReadAccess", "onWriteAccess" and "commit", we added calls to the matching log function, passing the necessary data found in the Context and in our new exceptions.

Another important addition we made to the Context class is the objectId

method:

private static String objectId(Object reference, long field) {
    return Long.toString(System.identityHashCode(reference) + field);
}

Source Code 15 - Change in Context objectId

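This method gives us an id for each accessed field of each object. The object's memory address could not serve as an id, since the JVM may move objects while compacting the heap during garbage collection; System.identityHashCode, by contrast, stays fixed for the object's lifetime. Adding the field offset, which Deuce passes to the Context methods, distinguishes between different fields of the same object. The id is unique in practice, though not guaranteed to be, since identity hash codes may collide.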
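A small demonstration of the objectId formula, using the same expression as Source Code 15. The class ObjectIdDemo and the field offsets 12 and 16 are hypothetical; in the real Context the offsets come from Deuce. It shows that the id of an object's field survives a garbage collection and that two fields of one object get different ids.

```java
public class ObjectIdDemo {
    // Same formula as Source Code 15: identity hash of the object plus the field offset.
    public static String objectId(Object reference, long field) {
        return Long.toString(System.identityHashCode(reference) + field);
    }

    public static void main(String[] args) {
        Object node = new Object();
        long offsetA = 12L; // hypothetical field offsets
        long offsetB = 16L;

        String before = objectId(node, offsetA);
        System.gc(); // objects may move, but the identity hash code does not change
        System.out.println(before.equals(objectId(node, offsetA)));          // true
        System.out.println(objectId(node, offsetA).equals(objectId(node, offsetB))); // false
    }
}
```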

We gathered the TL2 classes we changed into a new package, "loggingTL2", which uses the original "field" and "pool" packages. When running our benchmarks, we set loggingTL2.Context as the Deuce STM Context class. Thus, at the end of each run, we had a log file describing the run, ready for offline analysis.


5. Evaluation

5.1 Hardware

To allow a high degree of concurrency, we ran our benchmarks on the Trinity machine, which has eight Quad-Core AMD Opteron CPUs and 132GB of RAM. Using c-shell scripts, we automatically ran each benchmark about five times to get an average