
advertisement

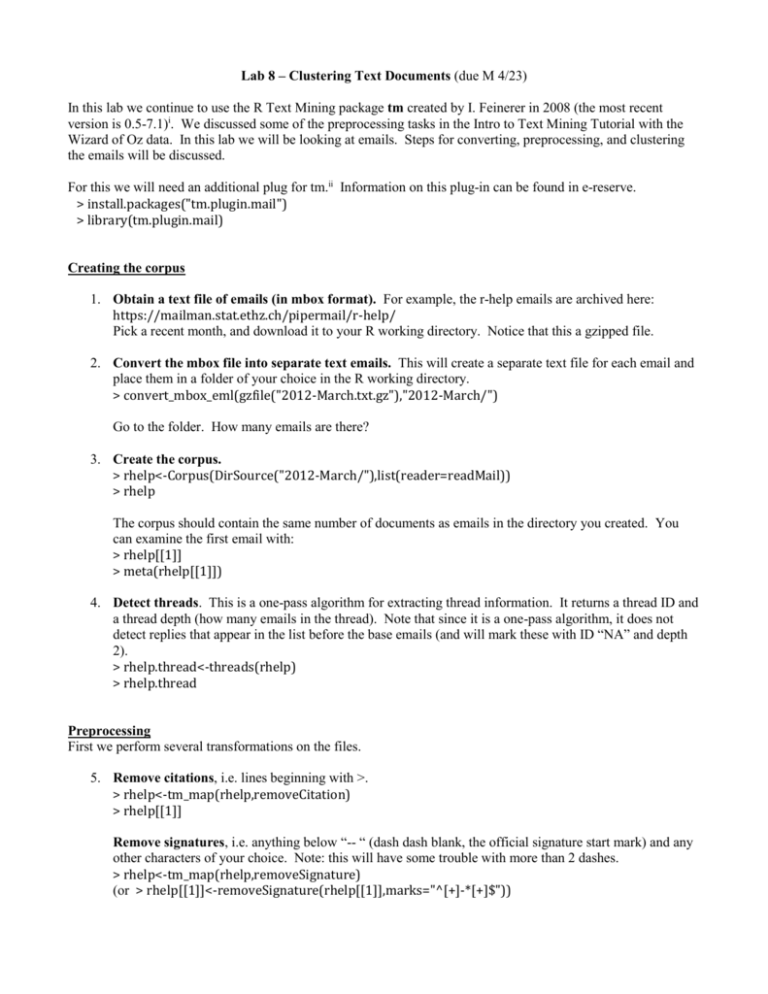

Lab 8 – Clustering Text Documents (due M 4/23)

In this lab we continue to use the R text mining package tm, created by I. Feinerer in 2008 (version 0.5-7.1 at the time of writing) [i]. We discussed some of the preprocessing tasks in the Intro to Text Mining Tutorial with the Wizard of Oz data. In this lab we will be looking at emails. The steps for converting, preprocessing, and clustering the emails are discussed below.

For this we will need an additional plug-in for tm [ii]. Information on this plug-in can be found in e-reserve.

> install.packages("tm.plugin.mail")

> library(tm.plugin.mail)

Creating the corpus

1. Obtain a text file of emails (in mbox format). For example, the r-help emails are archived here:

https://mailman.stat.ethz.ch/pipermail/r-help/

Pick a recent month and download it to your R working directory. Notice that this is a gzipped file.

2. Convert the mbox file into separate text emails. This creates a separate text file for each email and places them in a folder of your choice in the R working directory.

> convert_mbox_eml(gzfile("2012-March.txt.gz"),"2012-March/")

Go to the folder. How many emails are there?
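To see roughly what this conversion does, the sketch below splits an mbox archive into individual messages in base R. It assumes the usual mbox convention that each message begins with a line starting with "From " (the envelope line). `split_mbox` is a hypothetical helper for illustration only, not the tm.plugin.mail implementation, and it ignores details such as escaped ">From " lines in message bodies.

```r
# Hypothetical helper (not part of tm.plugin.mail): split mbox text, given as
# a character vector of lines, into messages. Assumes each message starts
# with a line beginning "From ".
split_mbox <- function(lines) {
  starts <- grepl("^From ", lines)
  # Running count of start lines assigns each line to a message number
  msg_id <- cumsum(starts)
  # Drop any preamble lines that occur before the first "From " line
  keep <- msg_id > 0
  split(lines[keep], msg_id[keep])
}

# Made-up two-message archive
mbox <- c(
  "From alice at example.org  Thu Mar  1 10:00:00 2012",
  "Subject: [R] first question",
  "",
  "How do I read a CSV file?",
  "From bob at example.org  Thu Mar  1 11:00:00 2012",
  "Subject: Re: [R] first question",
  "",
  "Use read.csv()."
)
msgs <- split_mbox(mbox)
length(msgs)  # number of messages found
```

For a real archive you could pass `readLines(gzfile("2012-March.txt.gz"))` instead of the toy vector; the count should be close to the number of files in your folder, though edge cases in real mail may make it differ.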

3. Create the corpus.

> rhelp<-Corpus(DirSource("2012-March/"),readerControl=list(reader=readMail))

> rhelp

The corpus should contain the same number of documents as there are emails in the directory you created. You can examine the first email with:

> rhelp[[1]]

> meta(rhelp[[1]])

4. Detect threads. This is a one-pass algorithm for extracting thread information. For each email it returns a thread ID and a thread depth (the email's level within its thread: 1 for the message that starts the thread, 2 for a reply to it, and so on). Note that since it is a one-pass algorithm, it does not detect replies that appear in the list before their base emails (it marks these with ID "NA" and depth 2).

> rhelp.thread<-threads(rhelp)

> rhelp.thread
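The one-pass idea, and why a reply that arrives before its parent cannot be placed, can be sketched in base R. This is a simplified illustration under stated assumptions: each email is reduced to its own ID and the ID of the email it replies to (the kind of information the reply headers provide), and `one_pass_threads` is a hypothetical helper that mirrors the behavior described above, not the tm.plugin.mail source.

```r
# Hypothetical one-pass thread labelling (not the tm.plugin.mail code).
# msg_id: character IDs in archive order; parent_id: ID replied to, or NA.
one_pass_threads <- function(msg_id, parent_id) {
  n <- length(msg_id)
  thread <- rep(NA_integer_, n)
  depth <- rep(NA_integer_, n)
  next_thread <- 1L
  for (i in seq_len(n)) {
    if (is.na(parent_id[i])) {
      # A base message starts a new thread at depth 1
      thread[i] <- next_thread
      depth[i] <- 1L
      next_thread <- next_thread + 1L
    } else {
      # One pass: only messages already seen can be matched as parents
      j <- match(parent_id[i], msg_id[seq_len(i - 1)])
      if (is.na(j)) {
        # Parent appears later in the archive: thread unknown, depth 2
        thread[i] <- NA_integer_
        depth[i] <- 2L
      } else {
        thread[i] <- thread[j]
        depth[i] <- depth[j] + 1L
      }
    }
  }
  list(ThreadID = thread, ThreadDepth = depth)
}

# "c" replies to "b", but "c" appears before "b" in the archive
res <- one_pass_threads(msg_id = c("a", "c", "b", "d"),
                        parent_id = c(NA, "b", "a", "b"))
res
```

Here "c" receives thread ID NA even though it belongs to the same conversation, which is the limitation noted above.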

Preprocessing

First we perform several transformations on the files.

5. Remove citations, i.e. lines beginning with >.

> rhelp<-tm_map(rhelp,removeCitation)

> rhelp[[1]]

Remove signatures, i.e., anything below "-- " (dash, dash, space: the standard signature delimiter) and below any additional marks of your choice. Note: this has some trouble with signature lines that use more than two dashes.

> rhelp<-tm_map(rhelp,removeSignature)

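To use an additional mark, for example a line such as "+------+", pass a regular expression through the marks argument (shown here for the first email only):

> rhelp[[1]]<-removeSignature(rhelp[[1]],marks="^[+]-*[+]$")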
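What these two transformations do to a single email can be sketched with plain string operations. The helpers `strip_citations` and `strip_signature` are hypothetical stand-ins written for illustration, operating on a character vector of lines; they are not the tm.plugin.mail implementations, and the email text is made up.

```r
# Hypothetical helpers (not tm.plugin.mail functions).
strip_citations <- function(lines) {
  # Drop quoted lines, i.e. those beginning with ">"
  lines[!grepl("^>", lines)]
}

strip_signature <- function(lines, marks = character()) {
  # "-- " is the standard delimiter; marks adds optional regex delimiters
  is_mark <- lines == "-- "
  for (m in marks) is_mark <- is_mark | grepl(m, lines)
  first <- which(is_mark)[1]
  # Keep everything above the first delimiter (or all lines if none found)
  if (is.na(first)) lines else lines[seq_len(first - 1)]
}

email <- c("> How do I sort a data frame?",
           "Use order(), e.g. df[order(df$x), ].",
           "-- ",
           "Jane Doe, Example University")
out <- strip_signature(strip_citations(email))
out

# An extra mark, using the same regular expression as above
out2 <- strip_signature(c("Thanks!", "+------+", "Jane"), marks = "^[+]-*[+]$")
out2
```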

Initial Exploration [iii]

6. Determine the most active writers. First extract all of the authors, then normalize multiple entries (collapse entries that span multiple lines into one line).

> authors<-lapply(rhelp,Author)

> authors<-sapply(authors,paste,collapse=' ')

Sort by number of emails authored and look at the top 10.

> sort(table(authors), decreasing=T)[1:10]

Who are the top 10 most active writers? How many emails did each generate?
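The collapse-then-count pattern above can be tried on made-up author fields in base R, without a corpus. The list below is invented toy data in which one author's field spans two lines, mimicking a multi-line header.

```r
# Made-up author fields; Bob's entry spans two lines
authors <- list("Alice <alice@example.org>",
                c("Bob", "<bob@example.org>"),
                "Alice <alice@example.org>",
                "Carol <carol@example.org>",
                c("Bob", "<bob@example.org>"),
                "Alice <alice@example.org>")
# Collapse each multi-line entry into a single string
authors <- sapply(authors, paste, collapse = " ")
# Count emails per author, sort by count, and keep the top 2
top <- sort(table(authors), decreasing = TRUE)[1:2]
top
```

Without the `paste(..., collapse = " ")` step, `sapply` could not simplify the list to one string per email, so multi-line entries would not be counted as a single author.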

7. Do the same thing with Heading (the email subject line) for another way to look at thread/topic information.

> headings<-lapply(rhelp,Heading)

> headings<-sapply(headings,paste,collapse=' ')

> sort(table(headings),decreasing=T)[1:10]

> big.topics<-names(sort(table(headings),decreasing=T)[1:10])

> big.topics

Then list the distinct authors who posted under each of the two most common headings:

> unique(sapply(rhelp[headings==big.topics[1]],Author))

> unique(sapply(rhelp[headings==big.topics[2]],Author))

8. Determine how many of the emails discuss a certain term. Search the emails for a specific term, say "problem," to estimate how many of the emails deal with problems in R.

> p.filter<-tm_filter(rhelp,FUN=searchFullText,"problem",doclevel=T)

> p.filter

How many of the emails contain the term “problem”?

Determine the 10 most active authors for the term “problem”.

> p.authors<-lapply(p.filter,Author)

> p.authors<-sapply(p.authors,paste,collapse=' ')

> sort(table(p.authors),decreasing=T)[1:10]

Who are they? How many emails did they author containing “problem”?

Repeat with a different term. What are your results?
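The counting in step 8 can be approximated in base R with `grepl` on made-up email bodies, which may help when checking your corpus results. This is a substitute technique, not a description of how searchFullText works internally. Note that a substring match also counts words such as "problems" or "problematic", and the match below is case-sensitive; drop `fixed = TRUE` and add `ignore.case = TRUE` for a case-insensitive search.

```r
# Made-up email bodies standing in for the corpus
bodies <- c("I have a problem with lm()",
            "Thanks, that solved it",
            "Same problem here on Windows",
            "How do I install a package?")
# TRUE/FALSE: does each email contain the literal string "problem"?
has_problem <- grepl("problem", bodies, fixed = TRUE)
n_problem <- sum(has_problem)                 # number of matching emails
share_problem <- n_problem / length(bodies)   # proportion of all emails
n_problem
share_problem
```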

Count-based Evaluation [iv]

The emails need to be in plain text format in order to create the document term matrix. It is also useful to remove common words, numbers, and punctuation before creating the document term matrix.

9. Convert the emails to plain text. Show what this does to the metadata.

> rhelp<-tm_map(rhelp,as.PlainTextDocument)

> meta(rhelp[[1]])

Convert to lowercase.

> rhelp<-tm_map(rhelp,tolower)

> rhelp[[1]]

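10. Remove common words (stopwords). The loops below run over every email in the corpus; using length(rhelp) avoids hard-coding the number of emails, which depends on the month you downloaded.

> for(i in 1:length(rhelp)){rhelp[[i]]<-removeWords(rhelp[[i]],stopwords("en"))}

Remove numbers.

> for(i in 1:length(rhelp)){rhelp[[i]]<-removeNumbers(rhelp[[i]])}

Remove punctuation.

> for(i in 1:length(rhelp)){rhelp[[i]]<-removePunctuation(rhelp[[i]])}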

> rhelp[[1]]
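To see the combined effect of these steps on one string, here is a base-R sketch using regular expressions. It is an approximation of the idea, not tm's code: the stopword list is a tiny made-up one rather than stopwords("en"), and the final whitespace cleanup corresponds to the stripWhitespace step listed under Extra Functions.

```r
txt <- "The 2 MAIN problems: lm() fails, and glm() too!"
stop_words <- c("the", "and", "too")   # illustrative list, NOT stopwords("en")

clean <- tolower(txt)                                  # lowercase
# Remove whole stopwords; \\b word boundaries leave words like "theme" intact
pattern <- paste0("\\b(", paste(stop_words, collapse = "|"), ")\\b")
clean <- gsub(pattern, "", clean)
clean <- gsub("[[:digit:]]+", "", clean)               # remove numbers
clean <- gsub("[[:punct:]]+", "", clean)               # remove punctuation
clean <- gsub("\\s+", " ", trimws(clean))              # tidy whitespace
clean
```

Lowercasing first matters: otherwise "The" would not match the lowercase stopword "the".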

11. Create the document term matrix.

> rhelp.tdm<-DocumentTermMatrix(rhelp)

> rhelp.tdm

How many non-zero entries are there? How many total terms?
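As a reminder of what the matrix holds, the sketch below builds a small document-term matrix by hand from made-up, already-preprocessed documents: one row per document, one column per term, and raw counts in the cells. It uses an ordinary dense matrix, whereas tm stores the matrix in a sparse format, but the "non-zero entries" and "terms" questions mean the same thing here.

```r
# Made-up preprocessed documents
docs <- c("lm fails glm fails", "install package", "glm package")
tokens <- strsplit(docs, " ", fixed = TRUE)
terms <- sort(unique(unlist(tokens)))
# Count each term in each document; t() puts documents in rows
dtm <- t(sapply(tokens, function(tk) table(factor(tk, levels = terms))))
dimnames(dtm) <- list(paste0("doc", seq_along(docs)), terms)
dtm
sum(dtm > 0)   # non-zero entries
ncol(dtm)      # total terms
```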

12. Find the most frequent terms. Find the terms that occur at least 200 times.

> findFreqTerms(rhelp.tdm,200)

What are the words?
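Conceptually, this frequency filter sums each term's counts over all documents and keeps the terms at or above the threshold. The base-R sketch below illustrates that idea on a small made-up matrix, with a threshold of 2 instead of 200; it is not tm's implementation.

```r
# Small made-up document-term matrix (rows = documents, columns = terms)
dtm <- matrix(c(2, 1, 0, 1, 0,
                0, 0, 1, 0, 1,
                0, 1, 0, 0, 1),
              nrow = 3, byrow = TRUE,
              dimnames = list(NULL, c("fails", "glm", "install", "lm", "package")))
freq <- colSums(dtm)          # total count of each term across documents
lowfreq <- 2                  # stand-in for the 200 used above
frequent <- names(freq)[freq >= lowfreq]
frequent
```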

13. Find associations. Find the words that are associated with "archive" (correlation coefficient of at least 0.85). Note: this may take several minutes.

> findAssocs(rhelp.tdm,"archive",.85)
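One way to think about these associations: correlate the count column of the target term with every other term's column and keep those at or above the limit. The sketch below does this with base R's `cor` (Pearson correlation) on a small made-up matrix. It illustrates the idea and is not claimed to reproduce tm's code or its rounding.

```r
# Made-up counts: 4 documents, 4 terms
dtm <- matrix(c(3, 2, 0, 1,
                1, 1, 0, 0,
                0, 0, 2, 0,
                2, 2, 0, 1),
              nrow = 4, byrow = TRUE,
              dimnames = list(NULL, c("archive", "mailing", "plot", "list")))
target <- "archive"
corlimit <- 0.85
# Pearson correlation of the target column with every other column
r <- cor(dtm[, target], dtm[, colnames(dtm) != target])[1, ]
assoc <- round(sort(r[r >= corlimit], decreasing = TRUE), 2)
assoc
```

Here "plot" is negatively correlated with "archive" and is dropped, while "mailing" and "list" pass the 0.85 limit.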

Data Mining with Text Data [iv]

Note that many of the algorithms below may be time-consuming with this particular data set. You may wish to choose a smaller one, or a sample of the current one, for the following. Another approach would be to remove sparse columns (terms that appear in only a few emails).
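tm provides removeSparseTerms(x, sparse) for this, for example `removeSparseTerms(rhelp.tdm, 0.99)`. The base-R sketch below illustrates the underlying idea on a made-up matrix: compute, for each term, the share of documents in which it does not appear, and keep only terms whose share is below the chosen `sparse` value. It is an illustration, not tm's code.

```r
# Made-up counts: 4 documents, 4 terms
dtm <- matrix(c(1, 0, 0, 2,
                1, 1, 0, 0,
                0, 0, 0, 1,
                1, 0, 1, 1),
              nrow = 4, byrow = TRUE,
              dimnames = list(NULL, c("r", "lme4", "ggplot", "data")))
sparse <- 0.6                       # maximal allowed sparsity
sparsity <- colMeans(dtm == 0)      # share of documents lacking each term
dtm_small <- dtm[, sparsity < sparse, drop = FALSE]
colnames(dtm_small)
```

Values of `sparse` close to 1 (such as 0.99) remove only the rarest terms; smaller values remove more aggressively.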

14. Determine the number of clusters. One way to determine the number of clusters is to look at the within-cluster sum of squares. Compute the within-cluster sum of squares for k-means clusterings with k = 1 to 20 and see where the sum of squares seems to level off.

> wss <- (nrow(rhelp.tdm)-1)*sum(apply(rhelp.tdm,2,var))

> for (i in 2:20) wss[i] <- sum(kmeans(rhelp.tdm, centers=i)$withinss)

> plot(1:20, wss, type="b", xlab="Number of Clusters", ylab="Within groups sum of squares")

Though there isn’t an obvious choice for k, make a selection to use for all of the methods below.
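The same elbow procedure can be tried on simulated data where the answer is known, which may help when reading the plot for the emails. The sketch below generates three well-separated groups (made-up data, not text), uses the total sum of squares for k = 1 exactly as above, and adds `set.seed` and `nstart` (not in the original commands) so that the random k-means starts are reproducible and less likely to be poor.

```r
set.seed(42)
# Three well-separated simulated groups in two dimensions
x <- rbind(matrix(rnorm(40, mean = 0), ncol = 2),
           matrix(rnorm(40, mean = 5), ncol = 2),
           matrix(rnorm(40, mean = 10), ncol = 2))
max_k <- 8
# k = 1: total sum of squares around the overall column means
wss <- (nrow(x) - 1) * sum(apply(x, 2, var))
for (k in 2:max_k) {
  # nstart = 10 keeps the best of 10 random starts
  wss[k] <- sum(kmeans(x, centers = k, nstart = 10)$withinss)
}
plot(1:max_k, wss, type = "b", xlab = "Number of Clusters",
     ylab = "Within groups sum of squares")
```

On this data the curve drops sharply up to k = 3 and flattens afterwards; with real email data the bend is usually much less obvious.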

15. Hierarchical Clustering.

> rhelp.hclust<-hclust(dist(rhelp.tdm), method="ward")

(In R 3.1.0 and later, method="ward" has been renamed method="ward.D".)

> rhelp.hclust

> rhelp.hclust.15 = cutree(rhelp.hclust,k=15)

> rhelp.hclust.10 = cutree(rhelp.hclust,k=10)

To examine the size of the clusters:

> table(rhelp.hclust.15)

> table(rhelp.hclust.10)
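A small self-contained run of the same steps on simulated data (made up for illustration) shows that the tree is built once and can then be cut at any number of clusters with `cutree`. The method name "ward.D" assumes R 3.1.0 or later; older versions use "ward".

```r
set.seed(1)
# Two simulated, well-separated groups of 10 points each
x <- rbind(matrix(rnorm(20, mean = 0), ncol = 2),
           matrix(rnorm(20, mean = 6), ncol = 2))
hc <- hclust(dist(x), method = "ward.D")   # "ward" in R versions before 3.1.0
groups2 <- cutree(hc, k = 2)               # cut the same tree into 2 clusters
groups3 <- cutree(hc, k = 3)               # ... or into 3, without refitting
table(groups2)
table(groups3)
```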

16. k-Medoids (a k-means variant). We'll use pam (partitioning around medoids) instead of kmeans, as it is more robust to outliers.

> library(cluster)

> rhelp.pam<-pam(rhelp.tdm, 15)

Examine the clusters:

> rhelp.pam$clusinfo
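The difference from k-means is that each cluster is represented by a medoid, an actual observation, rather than a mean. This makes pam less sensitive to outliers. The sketch below runs pam on simulated two-group data (made up for illustration) and shows the per-cluster summary and the row indices of the medoids.

```r
library(cluster)   # recommended package included with standard R installations
set.seed(2)
# Two simulated groups of 10 points each
x <- rbind(matrix(rnorm(20, mean = 0), ncol = 2),
           matrix(rnorm(20, mean = 6), ncol = 2))
fit <- pam(x, k = 2)
fit$clusinfo   # size, max/average dissimilarity to medoid, diameter, separation
fit$id.med     # row numbers of the observations chosen as medoids
```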

How might you change any of the steps above? Discuss ways to evaluate the clusters.

For this lab, turn in a description of the preprocessing you did and the results. Answer any questions posed above. For the exploration, the count-based evaluation, and the data mining pieces, make sure to include results/output directly from R. Additionally, discuss the results and what they mean, and answer any questions related to them. It is easiest if you cut and paste your results into a word processor as you obtain them.

Extra Functions:

Remove non-text parts from multipart e-mail messages.

> rhelp<-tm_map(rhelp,removeMultipart)

Strip extra whitespace.

> rhelp<-tm_map(rhelp,stripWhitespace)

Step 8 above creates a sub-corpus with the "problem" emails. Alternatively, you could create a TRUE/FALSE vector indicating whether each email contains the term "problem". From this you can compute the proportion of emails that contain it (multiply by 100 for a percentage).

> p<-tm_index(rhelp,FUN=searchFullText,"problem",doclevel=T)

> sum(p)/length(rhelp)

Cosine similarity

The dist function used above computes Euclidean distance by default. Cosine similarity is often used for document data. The function dissimilarity creates a dissimilarity matrix using any of the metrics/similarities available in the proxy package. To use cosine similarity as the proximity measure in clustering, download and install the proxy R package.

> install.packages("proxy")

> library(proxy)

Repeat hclust with the cosine similarity proximity measure.

> d<-dissimilarity(rhelp.tdm,method="cosine")

> rhelp.cosine<-hclust(d, method="ward")

> rhelp.cosine.15 = cutree(rhelp.cosine,k=15)
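Cosine dissimilarity (taken here as 1 minus cosine similarity) depends only on the direction of each document's term vector, not its length. A long email and a short one using words in the same proportions therefore count as identical. The sketch below computes it directly in base R on a made-up matrix. `cosine_dist` is a hypothetical helper, not the proxy package's implementation, and average linkage is used here only for illustration.

```r
# Hypothetical helper: cosine dissimilarity between the rows of a matrix.
# Assumes every row has at least one non-zero entry.
cosine_dist <- function(m) {
  norms <- sqrt(rowSums(m^2))
  unit <- m / norms            # scale each row to unit length
  sim <- unit %*% t(unit)      # pairwise cosine similarities
  as.dist(1 - sim)
}

# Made-up counts: rows 1 and 2 use the same words in the same proportions
dtm <- matrix(c(2, 0, 1,
                4, 0, 2,
                0, 3, 0,
                1, 1, 0),
              nrow = 4, byrow = TRUE)
d <- cosine_dist(dtm)
round(as.matrix(d), 3)
hc <- hclust(d, method = "average")
groups <- cutree(hc, k = 2)
groups
```

Rows 1 and 2 end up at distance 0 even though row 2 has twice the counts, while Euclidean distance would separate them.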

[i] I. Feinerer. tm: Text Mining Package, 2012. URL http://CRAN.R-project.org/package=tm. R package version 0.5-7.1.

[ii] tm.plugin.mail. URL http://cran.r-project.org/web/packages/tm.plugin.mail/index.html

[iii] I. Feinerer. An introduction to text mining in R. R News, 8(2):19-22, Oct. 2008. URL http://CRAN.R-project.org/doc/Rnews/.

[iv] I. Feinerer, K. Hornik, and D. Meyer. Text mining infrastructure in R. Journal of Statistical Software, 25(5):1-54, March 2008. ISSN 1548-7660. URL http://www.jstatsoft.org/v25/i05.
