BH_proof

advertisement

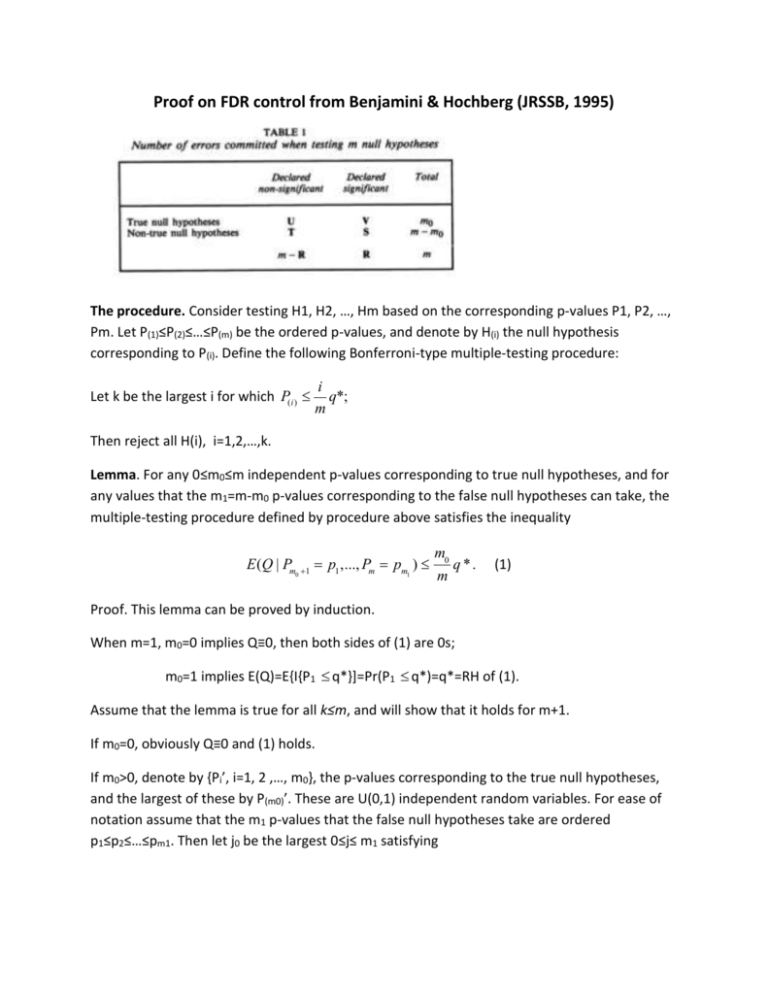

Proof on FDR control from Benjamini & Hochberg (JRSSB, 1995)

The procedure. Consider testing H1, H2, …, Hm based on the corresponding p-values P1, P2, …,

Pm. Let P(1)≤P(2)≤…≤P(m) be the ordered p-values, and denote by H(i) the null hypothesis

corresponding to P(i). Define the following Bonferroni-type multiple-testing procedure:

Let k be the largest i for which

$$P_{(i)} \le \frac{i}{m}\, q^*;$$

then reject all H(i), i=1,2,…,k.
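The step-up rule above can be sketched in a few lines of Python. This is a minimal illustration, not code from the paper: the function name `benjamini_hochberg` and the example p-values are assumptions made for this sketch.

```python
def benjamini_hochberg(pvalues, q):
    """Return the sorted indices of the hypotheses rejected at level q."""
    m = len(pvalues)
    # order[i - 1] is the index of the i-th smallest p-value, P(i).
    order = sorted(range(m), key=lambda idx: pvalues[idx])
    k = 0  # k = 0 means that no hypothesis is rejected
    for i, idx in enumerate(order, start=1):
        if pvalues[idx] <= i / m * q:
            k = i  # keep the largest i that satisfies P(i) <= (i/m) q
    # Reject H(1), ..., H(k), including any whose own bound failed.
    return sorted(order[:k])


# Hypothetical p-values, chosen only to show the step-up behaviour.
example = [0.30, 0.025, 0.028, 0.039, 0.001]
rejected = benjamini_hochberg(example, q=0.05)
print(rejected)
```

In this example the second-smallest p-value, 0.025, exceeds its own bound (2/5)(0.05) = 0.02, yet it is still rejected because larger ordered p-values satisfy theirs. That is the sense in which k is the largest qualifying i rather than the first failure.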

Lemma. For any 0≤m0≤m independent p-values corresponding to true null hypotheses, and for

any values that the m1=m-m0 p-values corresponding to the false null hypotheses can take, the

multiple-testing procedure defined above satisfies the inequality

$$E\left(Q \mid P_{m_0+1}=p_1, \dots, P_m=p_{m_1}\right) \le \frac{m_0}{m}\, q^*. \tag{1}$$
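A quick Monte Carlo sketch can make inequality (1) concrete. It holds the false-null p-values fixed, draws the true-null p-values as independent U(0,1) variables, and averages Q. The helper names `fdp` and `estimate_fdr`, the fixed p-values, and the simulation size are all assumptions of this sketch; a simulation only estimates E(Q) and does not replace the proof.

```python
import random


def fdp(null_pvalues, alt_pvalues, q):
    """Proportion Q = V / R of false rejections for the step-up rule (0 if R = 0)."""
    # Tag each p-value with whether its null hypothesis is true.
    tagged = sorted([(p, True) for p in null_pvalues] +
                    [(p, False) for p in alt_pvalues])
    m = len(tagged)
    k = 0
    for i, (p, _) in enumerate(tagged, start=1):
        if p <= i / m * q:
            k = i  # largest i with P(i) <= (i/m) q
    if k == 0:
        return 0.0
    false_rejections = sum(1 for _, is_null in tagged[:k] if is_null)
    return false_rejections / k


def estimate_fdr(m0, alt_pvalues, q, n_sim=20000, seed=0):
    """Average Q over n_sim draws of m0 independent U(0,1) null p-values."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_sim):
        nulls = [rng.random() for _ in range(m0)]
        total += fdp(nulls, alt_pvalues, q)
    return total / n_sim


# Hypothetical setting: m0 = 6 true nulls, m1 = 4 fixed false-null p-values.
m0, alt, q = 6, [0.001, 0.004, 0.02, 0.3], 0.1
estimate = estimate_fdr(m0, alt, q)
bound = m0 / (m0 + len(alt)) * q
print(f"estimated E(Q) = {estimate:.4f}, bound (m0/m) q* = {bound:.4f}")
```

Up to Monte Carlo error, the estimate should not exceed the bound (m0/m)q*.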

Proof. This lemma can be proved by induction.

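When m=1: m0=0 implies Q≡0, so both sides of (1) are 0; m0=1 implies E(Q) = E[I{P1 ≤ q*}] = Pr(P1 ≤ q*) = q*, which is the right-hand side of (1).

Assume that the lemma is true for any number of hypotheses k≤m; we show that it then holds for m+1 hypotheses, so that m0+m1 = m+1 in what follows.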

If m0=0, obviously Q≡0 and (1) holds.

If m0>0, denote by {Pi’, i=1, 2 ,…, m0}, the p-values corresponding to the true null hypotheses,

and the largest of these by P(m0)’. These are U(0,1) independent random variables. For ease of

notation assume that the m1 p-values that the false null hypotheses take are ordered

p1≤p2≤…≤pm1. Then let j0 be the largest 0≤j≤m1 satisfying

$$p_j \le \frac{m_0+j}{m+1}\, q^*, \tag{2}$$

and denote the right-hand side of (2) at j0 by

$$p'' = \frac{m_0+j_0}{m+1}\, q^*.$$
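As a small worked illustration of (2), the sketch below computes j0 and p'' for given false-null p-values. The function name `j0_and_p_double_prime` and the numbers are hypothetical. The total number of hypotheses, written m+1 in the induction step, is taken to be m0 plus the number of false nulls, and j=0 is treated as always satisfying (2).

```python
def j0_and_p_double_prime(m0, alt_pvalues, q):
    """Return (j0, p'') as defined around inequality (2)."""
    total = m0 + len(alt_pvalues)  # plays the role of m + 1 in the proof
    ordered = sorted(alt_pvalues)  # p_1 <= p_2 <= ... <= p_{m1}
    j0 = 0  # assumption of this sketch: j = 0 always qualifies
    for j, p in enumerate(ordered, start=1):
        if p <= (m0 + j) / total * q:
            j0 = j  # keep the largest j that satisfies (2)
    return j0, (m0 + j0) / total * q


# Hypothetical example: 3 true nulls, 2 false nulls, q* = 0.1.
j0, p2 = j0_and_p_double_prime(3, [0.2, 0.01], 0.1)
print(j0, p2)
```

Here p_1 = 0.01 satisfies its bound (4/5)(0.1) = 0.08 while p_2 = 0.2 exceeds (5/5)(0.1) = 0.1, so j0 = 1 and p'' is about 0.08.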

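Conditioning on $P'_{(m_0)} = p$,

$$
\begin{aligned}
E\left(Q \mid P_{m_0+1}=p_1, \dots, P_m=p_{m_1}\right)
&= \int_0^{p''} E\left(Q \mid P'_{(m_0)}=p,\ P_{m_0+1}=p_1, \dots, P_m=p_{m_1}\right) f_{P'_{(m_0)}}(p)\, dp \\
&\quad + \int_{p''}^{1} E\left(Q \mid P'_{(m_0)}=p,\ P_{m_0+1}=p_1, \dots, P_m=p_{m_1}\right) f_{P'_{(m_0)}}(p)\, dp.
\end{aligned}
\tag{3}
$$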

Because P(m0)’ is the largest one of m0 independent U(0,1) variables, we have

$$f_{P'_{(m_0)}}(p) = m_0\, p^{m_0-1}.$$
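For completeness, the density of the maximum can be derived in one step from its distribution function, using only the independence and uniformity of the true-null p-values stated above:

$$\Pr\left(P'_{(m_0)} \le p\right) = \Pr\left(P'_1 \le p, \dots, P'_{m_0} \le p\right) = \prod_{i=1}^{m_0} \Pr\left(P'_i \le p\right) = p^{m_0}, \qquad 0 \le p \le 1,$$

$$f_{P'_{(m_0)}}(p) = \frac{d}{dp}\, p^{m_0} = m_0\, p^{m_0-1}.$$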

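In the first part of (3), p ≤ p''. Then among the p-values for the m0 true nulls and the j0 smallest p-values among the false nulls, the maximum is at most

$$p'' = \frac{m_0+j_0}{m+1}\, q^*,$$

but, by the definition of j0, every later false-null p-value exceeds its own bound, starting with

$$p_{j_0+1} > \frac{m_0+j_0+1}{m+1}\, q^*.$$

Therefore only the first (m0+j0) hypotheses would be rejected, so V = m0 and Q = m0/(m0+j0).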

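Then

$$
\begin{aligned}
\int_0^{p''} E\left(Q \mid P'_{(m_0)}=p,\ P_{m_0+1}=p_1, \dots, P_m=p_{m_1}\right) f_{P'_{(m_0)}}(p)\, dp
&= \int_0^{p''} \frac{m_0}{m_0+j_0}\, m_0\, p^{m_0-1}\, dp \\
&= \frac{m_0}{m_0+j_0}\, (p'')^{m_0} \\
&= \frac{m_0}{m_0+j_0} \cdot \frac{m_0+j_0}{m+1}\, q^*\, (p'')^{m_0-1} \\
&= \frac{m_0}{m+1}\, q^*\, (p'')^{m_0-1}.
\end{aligned}
$$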

For the second component of (3), consider two scenarios:

(a) $p_{j_0} < p_j \le P'_{(m_0)} = p < p_{j+1}$, for some $j > j_0$;

(b) $p_{j_0} \le p'' < P'_{(m_0)} = p < p_{j_0+1}$.

Under (a), $P'_{(m_0)} = p \ge p_j > \frac{m_0+j}{m+1}\, q^*$, therefore the true null corresponding to $P'_{(m_0)}$ and the false nulls corresponding to $p_{j+1}, \dots, p_{m_1}$ all would be accepted. The rejection or acceptance of the other hypotheses would not depend on the actual values of $P'_{(m_0)} = p$, $p_{j+1}, \dots, p_{m_1}$. The same is true for (b) as well, except that j is replaced by j0.

Therefore, for the remaining (m0+j-1) hypotheses, the procedure is to find the largest k ≤ m0+j-1 such that P(k) ≤ {k/(m+1)}q*, where P(k) is the kth smallest p-value from the set

{Pi’, i=1, 2, …, m0-1} ∪ {p1, p2, …, pj},

or equivalently

$$\frac{P_{(k)}}{p} \le \frac{k}{m_0+j-1} \cdot \frac{m_0+j-1}{(m+1)\,p}\, q^*.$$

Conditional on $P'_{(m_0)} = p$, the ratios {Pi’/p, i=1, 2, …, m0-1} are (m0-1) independent U(0,1) variables, and the ratios 0 ≤ pi/p ≤ 1, i=1, 2, …, j, are fixed numbers corresponding to false null hypotheses. The proportion Q is therefore the same as the one obtained by applying the procedure to (m0-1) true nulls and j false nulls with p-values {Pi’/p, i=1, 2, …, m0-1; pi/p, i=1, 2, …, j}, with

$$\frac{m_0+j-1}{(m+1)\,p}\, q^*$$

playing the role of q*.

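Applying the induction hypothesis, we have

$$
\begin{aligned}
\int_{p''}^{1} E\left(Q \mid P'_{(m_0)}=p,\ P_{m_0+1}=p_1, \dots, P_m=p_{m_1}\right) f_{P'_{(m_0)}}(p)\, dp
&\le \int_{p''}^{1} \frac{m_0-1}{m_0+j-1} \cdot \frac{m_0+j-1}{(m+1)\,p}\, q^*\, f_{P'_{(m_0)}}(p)\, dp \\
&= \int_{p''}^{1} \frac{m_0-1}{(m+1)\,p}\, q^*\, m_0\, p^{m_0-1}\, dp \\
&= \frac{m_0}{m+1}\, q^* \left\{1 - (p'')^{m_0-1}\right\}.
\end{aligned}
$$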

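Summing the two parts, we get

$$
E\left(Q \mid P_{m_0+1}=p_1, \dots, P_m=p_{m_1}\right)
\le \frac{m_0}{m+1}\, q^* \left\{1 - (p'')^{m_0-1}\right\} + \frac{m_0}{m+1}\, q^*\, (p'')^{m_0-1}
= \frac{m_0}{m+1}\, q^*,
$$

which is inequality (1) for m+1 hypotheses.

End of proof.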