Power Analysis

Power is the conditional probability that one will reject the null hypothesis given that the null

hypothesis is really false.

Imagine that we are evaluating the effect of a putative memory enhancing drug. We have

randomly sampled 25 people from a population known to be normally distributed with a of 100 and

a of 15. We administer the drug, wait a reasonable time for it to take effect, and then test our

subjects’ IQ. Assume that we were so confident in our belief that the drug would either increase IQ or

have no effect that we entertained directional hypotheses. Our null hypothesis is that after

administering the drug 100; our alternative hypothesis is > 100.

These hypotheses must first be converted to exact hypotheses. Converting the null is easy: it

becomes = 100. The alternative is more troublesome. If we knew that the effect of the drug was to

increase IQ by 15 points, our exact alternative hypothesis would be = 115, and we could compute

power, the probability of correctly rejecting the false null hypothesis given that is really equal to 115

after drug treatment, not 100 (normal IQ). But if we already knew how large the effect of the drug

was, we would not need to do inferential statistics.

One solution is to decide on a minimum nontrivial effect size. What is the smallest effect

that you would consider to be nontrivial? Suppose that you decide that if the drug increases iq by 2

or more points, then that is a nontrivial effect, but if the mean increase is less than 2 then the effect is

trivial.

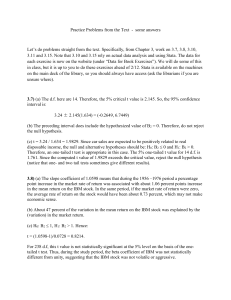

Now we can test the null of = 100 versus the alternative of = 102. Look at the figure on the

following page (if you are reading this on paper, in black and white, I recommend that you obtain an

electronic copy of this document from our BlackBoard site and open it in Word so you can see the

colors). Let the left curve represent the distribution of sample means if the null hypothesis were true,

15

3 . Let the right curve represent

= 100. This sampling distribution has a = 100 and a x

25

the sampling distribution if the exact alternative hypothesis is true, = 102. Its is 102 and,

15

3.

assuming the drug has no effect on the variance in IQ scores, x

25

The red area in the upper tail of the null distribution is . Assume we are using a one-tailed

of .05. How large would a sample mean need be for us to reject the null? Since the upper 5% of a

normal distribution extends from 1.645 above the up to positive infinity, the sample mean IQ

would need be 100 + 1.645(3) = 104.935 or more to reject the null. What are the chances of getting a

sample mean of 104.935 or more if the alternative hypothesis is correct, if the drug increases IQ by 2

points? The area under the alternative curve from 104.935 up to positive infinity represents that

probability, which is power. Assuming the alternative hypothesis is true, that = 102, the probability

of rejecting the null hypothesis is the probability of getting a sample mean of 104.935 or more in a

normal distribution with = 102, = 3. Z = (104.935 102)/3 = 0.98, and P(Z > 0.98) = .1635. That

is, power is about 16%. If the drug really does increase IQ by an average of 2 points, we have a 16%

chance of rejecting the null. If its effect is even larger, we have a greater than 16% chance.

Copyright 2015, Karl L. Wuensch - All rights reserved.

Power30.docx

2

Suppose we consider 5 the minimum nontrivial effect size. This will separate the null and

alternative distributions more, decreasing their overlap and increasing power. Now,

Z = (104.935 105)/3 = 0.02, P(Z > 0.02) = .5080 or about 51%. It is easier to detect large

effects than small effects.

Suppose we conduct a 2-tailed test, since the drug could actually decrease IQ; is now split

into both tails of the null distribution, .025 in each tail. We shall reject the null if the sample mean is

1.96 or more standard errors away from the of the null distribution. That is, if the mean is 100 +

1.96(3) = 105.88 or more (or if it is 100 1.96(3) = 94.12 or less) we reject the null. The probability of

that happening if the alternative is correct ( = 105) is: Z = (105.88 105)/3 = .29, P(Z > .29) =

.3859, power = about 39%. We can ignore P(Z < (94.12 105)/3) = P(Z < 3.63) = very, very small.

Note that our power is less than it was with a one-tailed test. If you can correctly predict the

direction of effect, a one-tailed test is more powerful than a two-tailed test.

15

1.5 .

100

With the null and alternative distributions less plump, they should overlap less, increasing power.

With x 1.5 , the sample mean will need be 100 + (1.96)(1.5) = 102.94 or more to reject the null. If

the drug increases IQ by 5 points, power is : Z = (102.94 105)/1.5 = 1.37, P(Z > 1.37) = .9147, or

between 91 and 92%. Anything that decreases the standard error will increase power. This

may be achieved by increasing the sample size or by reducing the of the dependent variable.

The of the criterion variable may be reduced by reducing the influence of extraneous variables upon

the criterion variable (eliminating “noise” in the criterion variable makes it easier to detect the signal,

the grouping variable’s effect on the criterion variable).

Consider what would happen if you increased sample size to 100. Now the x

Now consider what happens if you change . Let us reduce to .01. Now the sample mean

must be 2.58 or more standard errors from the null before we reject the null. That is, 100 +

2.58(1.5) = 103.87. Under the alternative, Z = (103.87 105)/1.5 = 0.75, P(Z > 0.75) = 0.7734 or

about 77%, less than it was with at .05, ceteris paribus. Reducing reduces power.

3

Please note that all of the above analyses have assumed that we have used a normally

X

distributed test statistic, as Z

will be if the criterion variable is normally distributed in the

x

population or if sample size is large enough to invoke the CLT. Remember that using Z also requires

that you know the population rather than estimating it from the sample data. We more often

estimate the population , using Student’s t as the test statistic. If N is fairly large, Student’s t is

nearly normal, so this is no problem. For example, with a two-tailed of .05 and N = 25, we went out

± 1.96 standard errors to mark off the rejection region. With Student’s t on N 1 = 24 df we should

have gone out ± 2.064 standard errors. But 1.96 versus 2.06 is a relatively trivial difference, so we

should feel comfortable with the normal approximation. If, however, we had N = 5, df = 4, critical t = ±

2.776, and the normal approximation would not do. A more complex analysis would be needed.

One Sample Power the Easy Way

Howell presents a simple method of doing power analyses for various designs. His method

also assumes a normal sampling distribution, so it is most valid with large sample sizes. The first

step in using Howell’s method is to compute d, the effect size in units. For the one sample test

1

d

. For our IQ problem with minimum nontrivial effect size at 5 IQ points,

d = (105 100)/15 = 1/3. We combine d with N to get , the noncentrality parameter. For the one

sample test, d N . For our IQ problem with N = 25, = 1/3 5 = 1.67. Once is obtained,

power is obtained using the power table in our textbook. For a .05 two-tailed test, power = 36% for a

of 1.60 and 40% for a of 1.70. By linear interpolation, power for = 1.67 is 36% + .7(40% 36%)

= 38.8%, within rounding error of the result we obtained using the normal curve. For a one-tailed test,

use the column in the table with twice its one-tailed value. For of .05 one-tailed, use the .10 twotailed column. For = 1.67 power then = 48% + 7(52% 48%) = 50.8%, the same answer we got

with the normal curve.

If we were not able to reject the null hypothesis in our research on the putative IQ drug, and

our power analysis indicated about 39% power, we would be in an awkward position. Although we

could not reject the null, we also could not accept it, given that we only had a relatively small (39%)

chance of rejecting it even if it were false. We might decide to repeat the experiment using an n large

enough to allow us to accept the null if we cannot reject it. In my opinion, if 5% is a reasonable

risk for a Type I error (), then 5% is also a reasonable risk for a Type II error (), so let us use power

= 1 = 95%. From the power table, to have power = .95 with = .05, is 3.60. n . For a

d

2

2

3 .6

five-point minimum IQ effect, n

116.64 . Thus, if we repeat the experiment with 117

1/ 3

subjects and still cannot reject the null, we can accept the null and conclude that the drug has no

nontrivial ( 5 IQ points) effect upon IQ. The null hypothesis we are accepting here is a “loose null

hypothesis” [95 < < 105] rather than a “sharp null hypothesis” [ = exactly 100]. Sharp null

hypotheses are probably very rarely ever true.

Others could argue with your choice of the minimum nontrivial effect size. Cohen has defined

a small effect as d = .20, a medium effect as d = .50, and a large effect as d = .80. If you defined

minimum d at .20, you would need even more subjects for 95% power.

A third approach one can take is to find the smallest effect that one could have detected with

high probability given n. If that d is small, and the null hypothesis is not rejected, then it is accepted.

For example, I used 225 subjects in the IQ enhancer study. For power = 95%, = 3.60 with .05

3.60

0.24 . If I can’t reject the null, I accept it, concluding that if the drug

two-tailed, and d

15

N

has any effect, it is a small effect, since I had a 95% chance of detecting an effect as small as .24 .

The loose null hypothesis accepted here would be that the population differs from 100 by less than

.24 .

4

Two Independent Samples

For the Two Group Independent Sampling Design, d

n

, where n = the number of scores

2

1 2

, where is the standard deviation in

either population, assuming is identical in both populations.

in one group, and both groups have the same n; d

If n1 n2 , use the harmonic mean sample size, n~

2

.

1

1

n1 n 2

For a fixed total N, the harmonic mean (and thus power) is higher the more nearly equal n1 and

n2 are. This is one good reason to use equal n designs. Other good reasons are computational

simplicity with equal n’s and greater robustness to violation of assumptions. Try computing the

effective (harmonic) sample size for 100 subjects evenly split into two groups of 50 each and

compare that with the effective sample size obtained if you split them into 10 in one group and 90 in

the other.

You should be able to rearrange the above formula for to solve for d or for n as required.

Consider the following a priori (done before gathering the data) power analysis. We wish to

compare the mean psychology achievement test score of students in Research Psychology graduate

programs with that of those in Clinical Psychology graduate programs. We decide that we will be

satisfied if we have enough data to have an 80% chance of detecting an effect of 1/3 of a standard

deviation, employing a .05 criterion of significance. How many scores do we need in each group, if

we have the same number of scores in each group? From the table in Howell, we obtain the value of

2.8

2.8 for . n 2 2

141 scores in each group, a total of 282 scores.

d

1/ 3

Consider the following a posteriori power analysis. We have available only 36 scores from

students in clinical programs and 48 scores from students in general programs. What are our

chances of detecting a difference of 40 points (which is that actually observed at ECU way back in

1981) if we use a .05 criterion of significance and the standard deviation is 98? The standardized

2

effect size, d, is 40/98 = .408. The harmonic mean sample size is n~

41.14

1

1

361 48 2

2

.408

2

41.14

1.85 . From our power table, power is 46% (halfway between .44 and .48).

2

5

Correlated Samples

The correlated samples t test is mathematically equivalent to a one-sample t test conducted

on the difference scores (for each subject, score under one condition less score under the other

condition). The greater ρ12, the correlation between the scores in the one condition and those in the

second condition, the smaller the standard deviation of the difference scores and the greater the

power, ceteris paribus. By the variance sum law, the standard deviation of the difference scores is

Diff 12 22 2 12 1 2 . If we assume equal variances, this simplifies to Diff 2(1 ) .

The 12 (rho) is the correlation between the scores in the one population and those in the other

population. With independent samples, rho = 0, and everything to the right of the minus sign drops

out. With correlated samples, the rho should be positive and, hopefully, considerable in magnitude.

This results in our subtracting from the standard error a positive magnitude, which will reduce the

standard error. Since the standard error is in the denominator – t = difference in means divided by

standard error – the absolute value of t is increased, lowering the value of p, and increasing power.

The reduction in the standard error should increase power relative to an independent samples

test with the same number of scores, but the correlated t has only half the degrees of freedom as the

independent t, which causes some loss of power. The gain in power from reducing the standard error

will generally greatly exceed the loss of power due to loss of half of the degrees of freedom, but one

could actually have less power with the correlated t than with the independent t if sample size were

low and the ρ12 low. Please see my document Correlated t versus Independent t .

When conducting a power analysis for the correlated samples design, we can take into

2

d

account the effect of ρ12 by computing dDiff, an adjusted value of d: d Diff 1

,

Diff

2(1 12 )

where d is the effect size as computed above, with independent samples. We can then compute

power via d Diff

n or the required sample size via n

d Diff

2

.

Please note that using the standard error of the difference scores, rather than the standard

deviation of the criterion variable, as the denominator of dDiff, is simply Howell’s method of

incorporating into the analysis the effect of the correlation produced by matching. If we were

computing estimated d (Hedges’ g) as an estimate of the standardized effect size given the obtained

results, we would use the standard deviation of the criterion variable in the denominator, not the

standard deviation of the difference scores. I should admit that on rare occasions I have argued that,

in a particular research context, it made more sense to use the standard deviation of the difference

scores in the denominator of g.

Consider the following a posteriori power analysis. One of my colleagues has data on level of

cortisol in humans under two conditions: 1.) in a relaxed setting, and 2.) in a setting thought to

increase anxiety. The standard deviations in her samples are 108 and 114. If the effect size in the

population were 20 points (which she considers the smallest nontrivial effect), how much power would

she have? The estimation correlation resulting from the within-subjects design is .75.

The pooled standard deviation is

.18

.5(1082 ) .5(1142 ) 111. d = 20/111 = .18.

.255 . .255 15 1.02. From the power table, power = 17%. My colleague

2(1 .75)

is not very happy about this low power. I point out that maybe she is lucky and has such a big

d Diff

6

difference in means that it is significant despite the low power. Regretfully, that is not so. She starts

planning to repeat the study with a larger sample size.

How much data will my colleague need? She wants to have enough power to be able to

detect an effect as small as 20 points of a standard deviation (d = .18) with 95% power – she

considers Type I and Type II errors equally serious and is employing a .05 criterion of statistical

significance, so she wants beta to be not more than .05. . n

d Diff

2

3.6

199.3.

.255

2

Here is another example of power analysis with matched pairs.

Pearson r

For a Pearson Correlation Coefficient, d = the size of the correlation coefficient in the

population, and d n 1 where n = the number of pairs of scores in the sample.

Consider the following a priori power analysis. We wish to determine whether or not there is a

correlation between misanthropy and support for animal rights. We shall measure these attributes

with instruments that produce scores for which it is reasonable to treat the variables as continuous.

How many respondents would we need to have a 95% probability of obtaining significant results if we

employed a .05 criterion of significance and if the true value of the correlation (in the population) was

3.6

0.2? n 1

1 325 .

d

.2

2

2

Type III Errors and Three-Choice Tests

Leventhal and Huynh (Psychological Methods, 1996, 1, 278-292) note that it is common

practice, following rejection of a nondirectional null, to conclude that the direction of difference in the

population is the same as what it is in the sample. This procedure is what they call a "directional twotailed test." They also refer to it as a "three-choice test" (I prefer that language), in that the three

hypotheses entertained are: parameter = null value, parameter < null value, and parameter > null

value. This makes possible a Type III error: correctly rejecting the null hypothesis, but incorrectly

inferring the direction of the effect - for example, when the population value of the tested parameter is

actually more than the null value, getting a sample value that is so much below the null value that you

reject the null and conclude that the population value is also below the null value. The authors show

how to conduct a power analysis that corrects for the possibility of making a Type III error. See my

summary at: http://core.ecu.edu/psyc/wuenschk/StatHelp/Type_III.htm

Links

POWER -- An overview

In Power Point format

G*Power3: Tests available

Internet Resources

Correlated t versus Independent t

Power Conventions: What effect size should I assume and how much power should I want?

Power, Retrospective -- Foolishness

Copyright 2015, Karl L. Wuensch - All rights reserved.