Lecture Notes on Binary Regression (doc)

advertisement

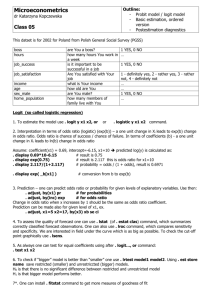

Workshop on Binary Regression

Logistic Regression + Classification Trees + Regression Trees +

Graphics + Multinomial Regression

Hyderabad, December 26-29, 2012

MB RAO

Module 1: An Introduction to Logistic Regression + Fitting the model

with R + Goodness-of-fit test

BINARY RESPONSE VARIABLE AND LOGISTIC REGRESSION

A binary variable is a variable with only two possible values. There

are many, many examples of binary variables in statistical work.

Example. A patient is admitted with abdominal sepsis (blood poisoning).

The case is severe enough to warrant surgery. The patient is wheeled to the

operation theatre. Let us speculate what will happen after the surgery. Let

Y

= 1 if death follows surgery,

= 0 if the patient survives.

The outcome Y is random. Since it is random, we want to know its

distribution.

Pr(Y = 1) = π, say, and

Pr(Y = 0) = 1 – π.

In simple terms, we want to know the chances (π) that a patient dies after

surgery. Equivalently, what are the chances (1 – π) of survival after surgery?

Are there any prognostic variables or factors which could influence the

outcome Y. Surgeons list the following variables which could have some

bearing on Y.

1. X1: Shock. Is the patient in a state of shock before the surgery?

X1

= 1 if yes,

= 0 if no.

2. X2: Malnutrition. Is the patient undernourished?

X2 = 1 if yes,

= 0 if no.

1

3. X3: Alcoholism. Is the patient alcoholic?

X3

= 1 if yes,

= 0 if no.

4. X4: Age

5. X5: Bowel infarction. Has the patient bowel infarction?

X5

= 1 if yes,

= 0 if no.

The variables X1, X2, X3, and X5 are categorical covariates and X4 is a

continuous covariate. The categorical variables are binary with only two

possible values. It is felt that the outcome Y depends on these covariates.

Response variable

Y

Covariates, predictors, or independent

variables

X1, X2, X3, X4, X5

More precisely, the probability π depends on X1 through X5. In order to

indicate the dependence, we write

π = π(X1, X2, X3, X4, X5).

We want to model π as a function of the covariates.

Why one wants to build a model? If a model is in place, one could use the

model to assess the chances of survival after surgery for a patient before he

is wheeled into the operation theatre. How? The surgeon could get

information on X1, X2, X3, X4, X5 for the patient, calculate π = π(X1, X2, X3,

X4, X5) from the postulated model, and then the chances of survival (1 – π)

after surgery.

A possible model?

π(X1, X2, X3, X4, X5) = β0 + β1X1 + β2X2 + β3X3 + β4X4 + β5X5

This is like a multiple regression model. This model is not acceptable. The

left hand side of the model π is a probability. The right hand side of the

model could be any real number.

Why not model Y directly as a function of the covariates? For example,

Y = β0 + β1X1 + β2X2 + β3X3 + β4X4 + β5X5?

2

This is not acceptable. The left hand side Y takes only two values 0 and 1,

but the right hand side could be any real number.

Logistic regression model

π(X1, X2, X3, X4, X5) =

0 1 X 1 2 X 2 3 X 3 4 X 4 5 X 5

e

1 e

0 1 X 1 2 X 2 3 X 3 4 X 4 5 X 5

.

This model looks very formidable. The left hand side of the model is a

probability and hence its value should always between zero and one. The

right hand is always a number between zero and one. Why?

This model has 6 unknown parameters β0, β1, β2, β3, β4, and β5. We need to

know the values of these parameters before it can be used. We can estimate

the parameters of the model if we have data on a sample of patients. We do

have data.

Structure of the data.

Patient

1

2

3

etc.

Y

1

1

0

X1

1

1

0

X2

1

0

1

X3

1

0

0

X4

47

53

32

X5

1

1

0

We have data on 106 patients. Present the data. Discuss the data.

A digression: The approach I presented is purely statistical. Identify the

variables of interest, designate the response variable or dependent variable,

designate the covariates or independent variables, postulate a model, fit the

model, and check it’s goodness-of-fit.

Engineers, physicists, and computer scientists will look at the problem from

a different angle. They work directly with the dependent or response

variable. I will talk about their approach later.

Problems (Back to our problem)

3

1. How does one estimate the parameters of the model using the data?

There are two standard methods available. 1. Method of maximum

likelihood. Write the likelihood of the data. Maximize the likelihood

with respect to the parameters. 2. Method of weighted least squares.

The least squares principle is used to minimize certain sum of

squares. This method is much simpler than the method of maximum

likelihood. Asymptotically, both methods are equivalent. If the

sample is large, the estimates will be more or less the same.

2. Once the model is estimated, we need to check whether or not the

model adequately summarizes the data. We need to assess how well

the model fits the data. We may have to use some goodness-of-fit

tests to make the assessment.

3. If the model fits the data well, we need to examine the impact of each

and every covariate on the response variable. It is tantamount to

identifying risk factors. We need to test the significance of each and

every covariate in the model. If a covariate is not significant, we

could remove the covariate from the model and then fit a leaner and

tighter model to the data.

4. If a particular model does not fit the data well, explore other models,

which can do a better job.

5. If an adequate model is fitted, explain how the model can be used in

practice. Spend time on interpreting the model.

Before we pursue all these objectives, let us look at the model from another

angle.

π(X1, X2, X3, X4, X5) = Probability of death after surgery for a patient with

covariate values X1, X2, X3, X4, X5

e 0 1 X1 2 X 2 3 X 3 4 X 4 5 X 5

=

0 1 X 1 2 X 2 3 X 3 4 X 4 5 X 5

1 e

1 - π(X1, X2, X3, X4, X5) = Probability of survival after surgery for a patient

with covariate values X1, X2, X3, X4, X5

=

1

1

1 e 0 1 X1 2 X 2 3 X 3 4 X 4 5 X 5

= Odds of Death versus Survival after surgery

4

ln(

= exp{β0 + β1X1 + β2X2 + β3X3 + β4X4 + β5X5}

) = log odds = logit =

1

β0 + β1X1 + β2X2 + β3X3 + β4X4 + β5X5

This is like a multiple regression model. The log odds are a linear function

of the covariates! This form of the model is very useful for interpretation.

The parameter β0 is called the intercept of the model. The parameter β1 is

called the regression coefficient associated with the variable ‘Shock.’ The

parameter β2 is called the regression coefficient associated with the variable

‘Malnutrition,’ etc. These regression coefficients indicate how much impact

the corresponding covariates have on the response variable.

The logistic regression model can be spelled out either in the form

π(X1, X2, X3, X4, X5) =

0 1 X 1 2 X 2 3 X 3 4 X 4 5 X 5

e

1 e

or in the form

ln(

1

Both are equivalent.

0 1 X 1 2 X 2 3 X 3 4 X 4 5 X 5

,

) = β0 + β1X1 + β2X2 + β3X3 + β4X4 + β5X5.

Using R and the data, I fitted the model. The following are the estimates of

the parameters along with their standard errors and p-values.

Variable Regression

Coefficient

Intercept

-9.754

Shock

3.674

Malnutrition 1.217

Alcoholism

3.355

Age

0.09215

Infarction

2.798

Standard

Error

2.534

1.162

0.7274

0.9797

0.03025

1.161

z-value p-value

3.16

1.67

3.43

3.04

2.41

0.0016

0.095

0.0006

0.0023

0.016

Let us proceed in a systematic way with an analysis of the model.

1. Adequacy of the model. The model fits the data very well. We can

say that the model is a good summarization of the data. I will talk

about this aspect when I present and discuss the relevant program.

5

2. Estimated model.

ln(

) = -9.754 + 3.674*X1 + 1.217*X2

1

+ 3.355*X3 + 0.09215*X4 + 2.798*X5

3. Impact of the covariates on the response variable. Let us look at the

covariate X1 (Shock). We want to test the null hypothesis H0: β1 = 0

(The covariate has no impact on the response variable or the covariate

X1 is not significant) against the alternative H1: β1 ≠ 0 (The covariate

has some impact on Y or the covariate is significant). An estimate of

β1 is 3.674. Is this value significant? We look at the corresponding zvalue (Estimate/(Standard Error)). If the z-value exceeds 1.96 in

absolute value, we reject the null hypothesis at 5% level of

significance. In our case, it does indeed exceed 1.96. The variable X1

is significant. There is another way to check significance of a variable.

Look at the corresponding p-value.

a. If p ≤ 0.001, the covariate is very, very significant.

b. If 0.001 < p ≤ 0.01, the covariate is very significant.

c. If 0.01 < p ≤ 0.05, the covariate is significant.

d. If p > 0.05, the covariate is not significant.

In our case, p = 0.0016. The variable X1 is very significant.

Further, the estimate 3.674 is positive. This means that X1 has a

positive significance over the response variable. This means that if the

value of X1 goes up, the probability π goes up. In our example, the

variable X1 takes only two values 1 and 0. The probability π will be

higher for a person with X1 = 1 than for a person with X1 = 0, other

factors remaining the same. I will talk about ‘how much higher’ later.

4. Let us look at the other covariates.

Malnutrition:

Not significant

Alcoholism:

Very, very significant

(Positive impact)

Age:

Very significant

(The higher the age is, the higher

the probability of death is.)

Infarction:

significant

(Positive impact)

5. Let us make the model a little tighter. Chuck out ‘Malnutrition’ from

the model. Refitting gives the following estimates.

Variable

Regression

Standard

z-value

Coefficient Error

6

Intercept

-8.895

Shock

3.701

1.103

3.355

Alcoholism

3.186

0.9163

3.477

Age

0.08983

0.02918

3.078

Infarction

2.386

1.071

2.228

6. The fit is good. Every covariate is significant. The estimated model is

ln(

1

) = -8.895 + 3.701*X1 + 3.186*X3

+ 0.08983*X4 + 2.386*X5

Data on ‘abdominal sepsis’

I have data in Excel format.

ID

Y

X1

X2

X3

1

0

0

0

0

2

0

0

0

0

3

0

0

0

0

4

0

0

0

0

5

0

0

0

0

6

1

0

1

0

7

0

0

0

0

8

1

0

0

1

9

0

0

0

0

10

0

0

1

0

11

1

0

0

1

12

0

0

0

0

13

0

0

0

0

14

0

0

0

0

15

0

0

1

0

16

0

0

1

0

17

0

0

0

0

19

0

0

0

0

20

1

1

1

0

22

0

0

0

0

102 0

0

0

0

103 0

0

0

0

104 0

1

0

0

105 1

1

0

0

106 0

0

0

0

107 0

0

0

0

X4

56

80

61

26

53

87

21

69

57

76

66

48

18

46

22

33

38

27

60

31

59

29

60

63

80

23

X5

0

0

0

0

0

0

0

0

0

0

1

0

0

0

0

0

0

0

1

0

1

0

0

1

0

0

7

108

110

111

112

113

114

115

116

117

118

119

120

122

123

202

203

204

205

206

207

208

209

210

211

214

215

217

301

302

303

304

305

306

307

308

309

401

402

501

502

0

0

1

0

0

1

0

0

0

0

0

1

0

1

0

0

0

0

0

0

0

0

0

0

0

0

0

1

0

0

0

1

0

0

0

0

0

0

1

0

0

0

1

0

0

0

1

0

0

0

0

1

0

1

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

1

0

0

0

0

0

1

0

0

0

1

1

0

0

0

0

0

0

0

0

1

1

0

0

0

1

0

0

0

1

0

0

0

1

0

1

1

1

0

0

0

0

0

1

0

0

1

0

1

0

1

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

1

1

71

87

70

22

17

49

50

51

37

76

60

78

60

57

28

94

43

70

70

26

19

80

66

55

36

28

59

50

20

74

54

68

25

27

77

54

43

27

66

47

0

0

0

0

0

0

0

0

0

0

0

1

0

0

1

0

0

0

0

0

0

0

0

0

0

0

1

1

0

1

0

0

0

0

0

0

0

0

1

0

8

503

504

505

506

507

508

510

511

512

513

514

515

516

518

519

520

521

523

524

525

526

527

529

531

532

534

535

536

537

538

539

540

541

542

543

544

545

546

549

550

0

0

1

0

0

0

0

0

1

0

0

0

0

0

0

0

1

0

1

0

1

0

0

0

1

0

0

0

0

0

0

1

0

1

1

0

0

0

0

0

0

0

1

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

1

0

0

0

0

0

0

0

0

0

0

0

0

1

0

0

0

1

1

0

0

1

0

0

0

0

0

1

0

1

0

1

1

1

0

0

1

1

0

0

0

0

0

0

0

1

0

0

0

0

1

1

0

1

0

1

1

0

0

0

0

0

1

0

1

0

1

0

0

0

0

0

0

0

1

1

1

0

0

0

1

0

0

0

0

1

0

0

0

1

1

1

0

0

1

1

37

36

76

33

40

90

45

75

70

36

57

22

33

75

22

80

85

90

71

51

67

77

20

52

60

29

30

20

36

54

65

47

22

69

68

49

25

44

56

42

0

1

0

0

0

0

0

0

1

0

0

0

0

0

0

0

0

0

0

0

0

0

0

1

0

0

1

0

0

0

0

0

0

0

0

0

0

0

0

0

9

I am working on a number of projects. In about fifty percent of these

projects the response variable is binary.

A specific example

A child gets cancer. It could be any one of the following.

Bone Cancer; Kidney (Wilms); Hodgkin’s; Leukemia; Neuroblastoma; NonHodgkin’s; Soft tissue sarcoma; CNS

Treatment begins. The child recovers and survives for five years. The child

is on Pediatric Cancer Registry. The child is followed lifelong. Periodically,

the child is examined. A number of measurements are recorded.

Some children get BCC (Basal Cell Carcinoma). Others don’t. How can one

explain this? What are the risk factors?

Data are collected. 320 children got BCC at least once during the follow up

years. 723 children never gotten BCC.

Response variable: Occurrence of BCC (Yes or No)

Covariates:

Type of Cancer (Categorical with 8 levels)

Age at diagnosis of cancer (numeric)

Follow up Years (How many years the child was followed up after he/she

enters the cancer registry?)

Gender

Race

Radiation (Yes or No)

SMN (Did the child get a different cancer during the follow up years?)

Model Fitting Using R

I want to illustrate how to use R to fit a logistic regression model for a given

data. The data I use here is different from the one I presented earlier. It is

easy to understand this data.

A particular treatment is being evaluated to cure a particular medical

condition. Introduce the response variable. Does the patient get relief when

he/she has the treatment?

10

Y = 1 if yes

= 0 if no

The response variable is binary. There are two prognostic variables: Age and

Gender. A sample of 20 male and 20 female patients are chosen to try the

treatment. Input the data.

> Age <- c(37, 39, 39, 42, 47, 48, 48, 52, 53, 55, 56, 57, 58, 58, 60, 64, 65,

68, 68, 70, 34, 38, 40, 40, 41, 43, 43, 43, 44, 46, 47, 48, 48, 50, 50, 52, 55,

60, 61, 61)

Gender is a categorical variable. Enter data on gender as a factor. Gender 0

means Female and 1 means Male.

> Gender <- factor(c(0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1))

An alternative way of inputting the data on Gender.

> Gender <- rep(0:1, c(20, 20))

Response is a categorical variable. Enter data on Response as a factor.

Response 1 means Yes and 0 means No.

> Response <- factor(c(0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 1, 1, 1, 1, 1,

1, 0, 0, 0, 1, 1, 1, 0, 0, 1, 1, 1, 0, 1, 1, 1, 1, 1, 1))

Create a data file containing data on all these variables. There is no need to

do this. It is good to have everything in a single folder.

> MB <- data.frame(Age, Gender, Response)

Look at the data.

> MB

Age Gender Response

1 37 0

0

2 39 0

0

3 39 0

0

11

4 42

5 47

6 48

7 48

8 52

9 53

10 55

11 56

12 57

13 58

14 58

15 60

16 64

17 65

18 68

19 68

20 70

21 34

22 38

23 40

24 40

25 41

26 43

27 43

28 43

29 44

30 46

31 47

32 48

33 48

34 50

35 50

36 52

37 55

38 60

39 61

40 61

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

0

0

0

1

0

0

0

0

0

0

1

0

0

1

1

1

1

1

1

0

0

0

1

1

1

0

0

1

1

1

0

1

1

1

1

1

1

Reflect on the data. The first 20 patients are female and the last 20 female.

The ages are reported in increasing order of magnitude. At lower ages of

12

females the treatment does not seem to be working. For males the treatment

seems to be working at all ages with a good probability. We need to quantify

our first impressions. Model building will help us.

Let us fit the logistic regression model. Create a folder, which will store the

output. The basic command is glm (generalized linear model). The

command ‘glm’ is available in the ‘base.’

> MB1 <- glm(Response ~ Age + Gender, family = binomial, data = MB)

Let us look at the output.

> summary(MB1)

Call:

glm(formula = Response ~ Age + Gender, family = binomial, data = Logi)

Deviance Residuals:

Min

1Q Median

3Q

Max

-1.86671 -0.80814 0.03983 0.78066 2.17061

Coefficients:

Estimate Std. Error z value

(Intercept) -9.84294

3.67576 -2.678

Age

0.15806

0.06164

2.564

Gender1

3.48983

1.19917

2.910

--Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Pr(>|z|)

0.00741 **

0.01034 *

0.00361 **

(Dispersion parameter for binomial family taken to be 1)

Null deviance: 55.452 on 39 degrees of freedom

Residual deviance: 38.917 on 37 degrees of freedom

AIC: 44.917

Number of Fisher Scoring iterations: 5

The estimated model is:

13

ln

Pr(Y 1)

= -9.84294 + 0.15806*Age + 3.48983*Gender

Pr(Y 0)

Age is a significant covariate. How can you tell? Look at its p-value

0.01304.

Gender is a very significant factor. How can you tell? Look at its p-value

0.00361.

General conclusions.

1. Look at the regression coefficients. Both are positive. The higher the

age is the higher the chances are of getting relief on the treatment.

2. Males and Females react to the treatment significantly differently.

The treatment is more beneficial to males than to females.

Justification.

Two patients on the treatment

Patient 1. Age = 50

Gender = Male

Patient 2. Age = 55

Gender = Male

Patient 1

Pr(Y = 1) = Probability of getting relief =

4.71

1 4.71

= 0.82

Patient 2.

Pr(Y = 1) = Probability of getting relief =

10.38

1 10.38

= 0.91

Another example

Two patients on the treatment

Patient 1. Age = 50

Gender = Female

Patient 2. Age = 55

Gender = Female

Patient 1.

14

Pr(Y = 1) = Probability of getting relief =

0.14

1 0.14

= 0.12

Patient 2.

Pr(Y = 1) = Probability of getting relief =

0.32

1 0.32

= 0.24

Compare the probabilities of getting relief for a male and a female with the

same age 50:

Male:

Female:

Probability of relief = 0.82

Probability of relief = 0.12

It is now time to focus on a goodness-of-fit test of the Logistic Regression

Model. We will work with the data presented above, with response variable

‘Response,’ and covariates ‘Gender’ and ‘Age.’ We want to test the

hypothesis that the Logisitc Regression Model is an adequate summary of

the data. In other words, we want to test that the response probability

Pr(Response = 1) follows the stipulated logistic regression model pattern.

The null hypothesis is

H0: Pr(Response = 1) =

exp(0 1 * Age 2 * Gender)

1 exp(0 1 * Age 2 * Gender)

for some parameters β0, β1, and β2.

The alternative is H1: H0 not true.

Hosmer and Lemeshow devised a test to test the validity of the null

hypothesis. I will not go through the rationality behind the test. We will use

the R package to conduct the test on our data. The test is available in a

package called ‘rms.’ First, we need to download the package. Download the

package. How?

Put the package ‘rms’ into service.

> library (rms)

The basic R command is ‘lrm’ (logistic regression model). Create a new

folder for the execution of ‘lrm.’

15

The package ‘rms’ also fits the logistic regression model. The command is

different.

MB2 <- lrm(Response ~ Age + Gender, data = MB, x = TRUE, y =

TRUE)

You need to ask for the output. The output is given later.

The following command gives the results of the Hosmer-Lemeshow test.

residuals.lrm(MB2, type = 'gof')

Sum of squared errors

6.4736338

Z

0.0552307

Expected value|H0 SD

6.4612280

0.2246174

P

0.9559547

You look at the p-value in the output. It is a large number. The chances of

getting the type of data we have gotten when the null hypothesis is true are

0.96. Recall what the null hypothesis is here. One cannot reject the null

hypothesis. The logistic regression model adequately summarizes the data.

Let us ask for the output of the ‘lrm’ command.

> MB2

Logistic Regression Model

lrm(formula = Response ~ Age + Gender, data = Logi, x = TRUE, y =

TRUE)

Frequencies of Responses

0 1

20 20

Obs Max Deriv Model L.R. d.f. P C

Dxy

40 7e-08

16.54

2 3e-04 0.849 0.698

Gamma

Tau-a

R2 Brier

0.703

0.358 0.451 0.162

16

Coef

S.E.

Wald Z P

Intercept -9.8429 3.67577 -2.68 0.0074

Age

0.1581 0.06164 2.56 0.0103

Gender

3.4898 1.19917 2.91 0.0036

̂ 0 = - 9.8429; ˆ1 = 0.1581; ˆ2 = 3.4898

Age is significant. Gender is very significant.

Why does one build a model? If the model is an adequate summary of the

data, the data can be thrown away. The model can be used to answer any

questions that may be raised on the experiment and the outcomes.

A model lets us assess whether or not a particular covariate is a significant

risk factor. A model lets us evaluate the extent of its influence on the

outcome variable.

Module 2: Null Hypotheses + p-values + Standard Errors + Critical Values +

LOGISTIC REGRESSION + INTERACTIONS + GRAPHS

Null hypotheses and their ilk

Let us go back to the abdominal sepsis problem. The response variable

(Dead or Alive after surgery, Y) is binary. There are five covariates (X1 =

Shock; X2 = Undernourishment; X3 = Alcoholism; X4 = Age; X5 =

Infarction). We postulated the following regression model.

π(X1, X2, X3, X4, X5) = Probability of Death After Surgery = Pr(Y = 1) =

e 0 1 X1 2 X 2 3 X 3 4 X 4 5 X 5

.

0 1 X 1 2 X 2 3 X 3 4 X 4 5 X 5

1 e

This is a population model. The population consists of all those who have

had abdominal sepsis and for whom surgery is contemplated. We believe

that the response probability has the pattern spelled out above. This belief

can be tested.

We want to test the impact of Shock on the Response. The null hypothesis is

one of skepticism. The patient being in shock has no bearing on the outcome

of the surgery. The null hypothesis is H0: β1 = 0. We have to have an

alternative. H1: β1 ≠ 0. The null hypothesis can be interpreted that Shock has

17

no impact on the Response. Another interpretation is that ‘Shock’ has no

significance. Yet, another interpretation is that ‘Shock’ is not a risk factor.

Yet, another interpretation is that there is no association between Shock and

the outcome of surgery. The alternative is interpreted as that ‘Shock’ has an

impact on the Response. Equivalently, Shock and Outcome of Surgery are

associated.

In order to test the hypotheses we need data. It means that we want to check

whether the data is consistent with the null hypothesis. Using the data, we

estimate the unknown parameter β1. If the null hypothesis is true, we would

̂1 to be close to the null value β1 = 0. Any large value of

expect the estimate 𝛽

̂1 would make us doubt the validity of the null hypothesis. In

the estimate 𝛽

̂1 =

practice, we got 𝛽

3.674. Is this large enough to cast doubt on the validity of H0? If β1 = 0, how

much it is plausible to get an estimate of β1 to be as high as 3.674?

Mathematical statisticians are able to calculate the probability of getting an

estimate at least as large as 3.674 if the null hypothesis is true. Formally, the

p-value is defined by

̂1 ⌋ ≥ 3.674 | 𝐻0 𝑖𝑠 𝑇𝑟𝑢𝑒) = 0.0016.

p = Pr(⌊𝛽

If the null hypothesis is true, the chances of observing a value at least as

large as 3.674 for β1 in a sample are very, very small. I do not think getting

the estimate 3.674 is feasible. However, we did indeed get such an estimate.

How did one calculate the probability? The probability is calculated under

the assumption that the null hypothesis is true. May be, the assumption is not

valid. Reject the null hypothesis!

In short, if the p-value is small, reject the null hypothesis. Typically, the pvalue is compared with the industry standard 0.05. Any event with the

probability of occurrence 0.05 or less is unlikely to occur.

When a p-value is deemed small?

1. One-in-twenty principle (5%): If an event has the probability of

occurrence ≤ 5%, the event is not expected to occur. (Level of

significance is 5%)

18

2. One-in-hundred principle (1%): If an event has the probability of

occurrence ≤ 1%, the event is not expected to occur. (Level of

significance is 1%)

3. One-in-ten principle (10%): If an event has the probability of

occurrence ≤ 10%, the event is not expected to occur. (Level of

significance is 10%)

4. Non-judgmental: Just report the p-value. Let the reader make up

his/her mind.

Some misconceptions!

1. Can I say that the chances that the null hypothesis is true are 0.0016?

No. Remember that the p-value is a conditional probability.

2. Is the null hypothesis true? I don’t know.

3. Is the null hypothesis false? I don’t know.

4. Is the data consistent with the null hypothesis? No, in this example.

Some theory behind the calculation of p-value

We have a model. The model is believed to be true. It has some parameters

in the model. One of the parameters is β1. We take a random sample of

subjects and collect data on the variables. Using the data, we estimate β 1. Let

̂1 . The value of the estimate would vary from

us denote the estimate by 𝛽

sample to sample. If the null hypothesis is true, it has been shown that

̂1

𝛽

~ N(0, 1).

Here SE is the standard error of the estimate. Using the standard normal

distribution, we need to calculate

𝑆𝐸

̂

𝛽

3.674

̂

𝛽

Pr(⌊ 1 ⌋ ≥

) = Pr(⌊ 1 ⌋ ≥ 3.1618) = 2*pnorm(3.1618, lower.tail = F) =

𝑆𝐸

1.162

𝑆𝐸

0.0016

I used R to calculate the p-value using the ‘pnorm’ command. Talk about

this more.

What is standard error?

A medical doctor collected data on 106 patients. Using the data, we

estimated β1. The estimate is 3.674. If another researcher collects data on the

same theme, the estimate may not come out to be 3.674. There is bound to

19

be variation from one estimate to another. Mathematical statisticians are able

to estimate the variation as measured by the standard deviation of the

estimate. This is the standard error of the estimate. This can also be called

margin of error. In this example, standard error is 1.162. One can use the

standard error to provide a 95% confidence interval for the unknown

parameter β1 of the population model. It is

3.674 ± 1.96*1.162.

This interval misses β1 = 0. Check! From this, one can conclude that the null

hypothesis can be rejected.

Logistic Regression and interactions

Let us go back to the example presented in the last lecture. A treatment is

being tested out on patients suffering from a medical condition whether or

not they get relief.

Response variable: For any randomly chosen patient on treatment, let

Y = 1 if the patient gets relief

= 0 if not.

There are two prognostic variables: Age and Gender.

Main effects model:

exp(0 1 * Age 2 * Gender)

Pr(Y = 1) =

1 exp(0 1 * Age 2 * Gender)

and

1

Pr(Y = 0) =

1 exp(0 1 * Age 2 * Gender)

for some unknown parameters β0, β1, and β2.

Interaction model:

exp(0 1 * Age 2 * Gender 3 * Age * Gender)

Pr(Y = 1) =

1 exp(0 1 * Age 2 * Gender 3 * Age * Gender)

and

1

Pr(Y = 0) =

1 exp(0 1 * Age 2 * Gender 3 * Age * Gender)

for some unknown parameters β0, β1, β2, and β3.

20

Typically, one should entertain an interaction model before gravitating

towards the main effects model.

What does an interaction model mean? In a multiple regression set-up, an

interaction model is easy to explain. In the context of a logistic regression

model, one can provide a good explanation in terms of log-odds model.

Pr(Y 1)

= β0 + β1*Age + β2*Gender + β3*Age*Gender

Pr(Y 0)

The log-odds is a linear function of the covariates!

ln

Logistic Regression Model for Males

Pr(Y 1)

ln

= (β0 + β2) + (β1 + β3)*Age

Pr(Y 0)

The log-odds is a linear function of age with intercept β0 + β2 and slope β1 +

β3.

Logistic Regression Model for Females

Pr(Y 1)

ln

= β0 + β1*Age

Pr(Y 0)

The log-odds is a linear function of age with intercept β0 and slope β1.

If interaction is present, i.e., β3 ≠ 0, the slopes are different. It is of

paramount importance to test the significance of interaction to begin with,

i.e., test the null hypothesis H0: β3 = 0. This is what we will do.

Load R with data.

> Age <- c(37, 39, 39, 42, 47, 48, 48, 52, 53, 55, 56, 57, 58, 58, 60, 64, 65,

68, 68, 70, 34, 38, 40, 40, 41, 43, 43, 43, 44, 46, 47, 48, 48, 50, 50, 52, 55,

60, 61, 61)

> length(Age)

[1] 40

> Gender <- rep(c("female", "male"), c(20, 20))

> Response <- factor(c(0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 1, 1, 1, 1, 1,

1, 0, 0, 0, 1, 1, 1, 0, 0, 1, 1, 1, 0, 1, 1, 1, 1, 1, 1))

> length(Response)

[1] 40

> MB <- data.frame(Age, Gender, Response)

21

> MB

Age Gender Response

1 37 female

0

2 39 female

0

3 39 female

0

4 42 female

0

5 47 female

0

6 48 female

0

7 48 female

1

8 52 female

0

9 53 female

0

10 55 female

0

11 56 female

0

12 57 female

0

13 58 female

0

14 58 female

1

15 60 female

0

16 64 female

0

17 65 female

1

18 68 female

1

19 68 female

1

20 70 female

1

21 34 male

1

22 38 male

1

23 40 male

0

24 40 male

0

25 41 male

0

26 43 male

1

27 43 male

1

28 43 male

1

29 44 male

0

30 46 male

0

31 47 male

1

32 48 male

1

33 48 male

1

34 50 male

0

35 50 male

1

36 52 male

1

37 55 male

1

38 60 male

1

22

39 61 male

40 61 male

1

1

Attaching package: 'rms'

Fit the main effects model.

> MB1 <- lrm(Response ~ Age + Gender, data = MB, x = T, y = T)

> MB1

Logistic Regression Model

lrm(formula = Response ~ Age + Gender, data = MB, x = T, y = T)

Frequencies of Responses

0 1

20 20

Obs Max Deriv Model L.R.

d.f.

40 7e-08 16.54

2

Gamma Tau-a

R2 Brier

0.703 0.358 0.451 0.162

Intercept

Age

Gender=male

Coef

-9.8429

0.1581

3.4898

P

3e-04

C

Dxy

0.849 0.698

S.E.

Wald Z

3.67577 -2.68

0.06164 2.56

1.19917 2.91

P

0.0074

0.0103

0.0036

Let us do a goodness-of-fit test.

> residuals.lrm(MB1, type = 'gof')

Sum of squared errors

6.4736338

Z

0.0552307

Expected value|H0

6.4612280

P

0.9559547

SD

0.2246174

The fit is excellent.

23

Let us fit the interaction model.

> MB2 <- lrm(Response ~ Age + Gender + Age*Gender, data = MB, x = T,

y = T)

> MB2

Logistic Regression Model

lrm(formula = Response ~ Age + Gender + Age * Gender, data = MB,

x = T, y = T)

Frequencies of Responses

0 1

20 20

Obs Max Deriv Model L.R.

d.f.

40 2e-05

16.97

3

Gamma Tau-a

R2 Brier

0.733 0.373 0.461 0.158

Coef S.E.

Intercept

-12.1462 5.5816

Age

0.1970 0.0935

Gender=male

7.7047 6.7598

Age * Gender=male -0.0819 0.1259

P

7e-04

C

0.864

Dxy

0.728

Wald Z P

-2.18 0.0295

2.11 0.0351

1.14 0.2544

-0.65 0.5153

The interaction is not significant. Let us do a goodness-of-fit test.

> residuals.lrm(MB2, type = 'gof')

Sum of squared errors

6.3100002

Z

-0.3503031

The fit is excellent.

Expected value|H0

6.3857317

P

0.7261113

SD

0.2161884

We better stick to the main effects model. What is its interpretation? It is

easy to explain in terms of log-odds.

24

Logistic Regression model for Males

ln

Pr(Y 1)

= β0 + β2 + β1*Age

Pr(Y 0)

Logistic Regression model for Females

ln

Pr(Y 1)

= β0 + β1*Age

Pr(Y 0)

The lines are parallel. The only difference is in the intercepts.

Let us do some plotting. Plot the logistic regression model for males and

females separately.

> curve(exp(-9.8429 + 3.4898 + 0.1581*x)/(1 + exp(-9.8429 + 3.4898 +

0.1581*x)),

+ from = 30, to = 75, xlab = "Age", ylab = "Probability", col = "red", sub =

+ "Logistic Regression Model", main = "Probability of Relief From

Treatment")

> curve(exp(-9.8429 + 0.1581*x)/(1 + exp(-9.8429 + 0.1581*x)), col =

"blue",

+ add = T)

> text(40, 0.6, "Males", col = "red")

> text(60, 0.6, "Females", col = "blue")

The output is at the end.

What else we can do? Some prediction. Prediction is useful in pattern

recognition problems. What does the output folder MB1 contain?

> names(MB1)

[1] "freq"

"sumwty"

"stats"

[4] "fail"

"coefficients" "var"

[7] "u"

"deviance"

"est"

[10] "non.slopes"

"linear.predictors" "penalty.matrix"

[13] "info.matrix"

"weights"

"x"

[16] "y"

"call"

"Design"

25

[19] "scale.pred"

[22] "na.action"

[25] "fitFunction"

"terms"

"fail"

"assign"

"nstrata"

> MB1$linear.predictors

1

2

3

4

5

6

-3.99478499 -3.67866837 -3.67866837 -3.20449343 -2.41420186 -2.25614355

7

8

9

10

11

12

-2.25614355 -1.62391030 -1.46585199 -1.14973536 -0.99167705 -0.83361874

13

14

15

16

17

18

-0.67556042 -0.67556042 -0.35944380 0.27278945 0.43084777 0.90502270

19

20

21

22

23

24

0.90502270 1.22113933 -0.97913195 -0.34689870 -0.03078207 -0.03078207

25

26

27

28

29

30

0.12727624 0.44339287 0.44339287 0.44339287 0.60145118 0.91756780

31

32

33

34

35

36

1.07562612 1.23368443 1.23368443 1.54980106 1.54980106 1.86591768

37

38

39

40

2.34009262 3.13038418 3.28844250 3.28844250

Linear predictors are the numbers calculated in the linear form of the model

for every individual in the sample. We can calculate predicted probability of

relief as per the model for every one in the sample using the subject’s

covariate values.

> Predict <- round(exp(MB1$linear.predictor)/(1 +

exp(MB1$linear.predictors)), 3)

> Predict

1

2

3

4

5

6

7

8

9

10

11

12

13

0.018 0.025 0.025 0.039 0.082 0.095 0.095 0.165 0.188 0.241 0.271 0.303

0.337

14

15

16

17

18

19

20

21

22

23

24

25

26

0.337 0.411 0.568 0.606 0.712 0.712 0.772 0.273 0.414 0.492 0.492 0.532

0.609

27

28

29

30

31

32

33

34

35

36

37

38

39

0.609 0.609 0.646 0.715 0.746 0.774 0.774 0.825 0.825 0.866 0.912 0.958

0.964

40

0.964

Put everything together.

> MB3 <- data.frame(MB, Predict)

> MB3

Age Gender Response Predict

1

37 female

0

0.018

2

39 female

0

0.025

3

39 female

0

0.025

26

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

42

47

48

48

52

53

55

56

57

58

58

60

64

65

68

68

70

34

38

40

40

41

43

43

43

44

46

47

48

48

50

50

52

55

60

61

61

female

female

female

female

female

female

female

female

female

female

female

female

female

female

female

female

female

male

male

male

male

male

male

male

male

male

male

male

male

male

male

male

male

male

male

male

male

0

0

0

1

0

0

0

0

0

0

1

0

0

1

1

1

1

1

1

0

0

0

1

1

1

0

0

1

1

1

0

1

1

1

1

1

1

0.039

0.082

0.095

0.095

0.165

0.188

0.241

0.271

0.303

0.337

0.337

0.411

0.568

0.606

0.712

0.712

0.772

0.273

0.414

0.492

0.492

0.532

0.609

0.609

0.609

0.646

0.715

0.746

0.774

0.774

0.825

0.825

0.866

0.912

0.958

0.964

0.964

Are there any graphics available to check on interaction?

27

0.6

Males

Females

0.2

0.4

Probability

0.8

1.0

Probability of Relief From Treatment

30

40

50

60

70

Age

Logistic Regression Model

Module 3: Odds, Odds ratio, and their ilk

Odds and odds ratio

A Bernoulli Trial is a random experiment, which when performed

results in one and only one of two possible outcomes. For example, tossing a

coin is a Bernoulli trial. There are only two possible outcomes: Heads or

Tails. A medical researcher devised a new medicine for curing a specific

malady. If the medicine is administered to a patient suffering from the

malady, only one of two possible outcomes results: the patient is cured or

28

the patient is not cured. It is customary to denote the outcomes as Success

and Failure.

Consider a Bernoulli trial with probability of Success being p.

Consequently, the probability of Failure is 1-p. We define

p

.

1 p

The odds of Success provide a way to assess how likely the Success to occur

in comparison with Failure. In the following table, for a given probability of

Success, we calculate the odds of Success versus Failure.

Odds of Success versus Failure = Odds of Success =

p:

Odds:

0.1

1/9

0.2

¼

0.3

3/7

0.4

2/3

0.5

1

0.6

1.5

0.7

7/3

0.8

4

0.9

9

Suppose the odds of Success are 1. It means that Success and Failure are

equally likely.

Suppose the odds of Success are 2. This means that Success is twice as

likely to occur as Failure. Solve the equation

p

= 2 for p.

1 p

Solution: p = 2/3 and 1 – p = 1/3.

Suppose the odds of Success are 8. This means that Success is eight times as

likely to occur as Failure. Suppose the odds of Success are ¼.This means

that Failure is four times as likely to occur as Success.

In Medical Research, the scientists usually talk about odds of a treatment

being successful.

If we know the probability of Success, we can work out the odds of Success.

Conversely, if we know the odds of Success (versus Failure), we can work

out the probability of Success.

Suppose the odds of Success are 4.1. Set

p

= 4.1 and solve for p.

1 p

29

As a matter of fact, p =

4.1

= 0.8039.

1 4.1

Now we come to the concept of Odds Ratio. As the name indicates, it

is indeed the ratio of two sets of odds. Another name for the Odds Ratio is

Cross Ratio.

Odds ratio can be defined for independent Bernoulli experiments. In

prospective studies, we generally compare the performance two treatments.

Prospective Studies

Suppose we have two Bernoulli trials. In one Bernoulli trial the probability

of Success is p1 and in the other it is p2. In Bernoulli Trial 1, the odds of

p1

Success versus Failure are

. In Bernoulli Trial 2, the odds of Success

1 p1

p2

versus Failure are

. The Odds Ratio is defined by

1 p2

OR = .

Interpretation. Suppose OR = 2. This means

Odds of Success versus Failure in Trial 1 are two times the Odds of Success

versus Failure in Trial 2. It also implies that the probability of Success in

Trial 1 is greater than the probability of Success in Trial 2. How much

larger? It depends on what the probability of Success is in Trial 2.

1. Suppose the Odds of Success in Trial 2 are one. This means that in Trial

2, Success and Failure occur with equal probability ½. The odds of

Success in Trial 1 are two. This implies that the probability of success in

Trial 1 is 2/3. The probability of Failure is 1/3. Successes are two times

as likely as Failures.

2. Suppose the Odds of Success in Trial 2 are two. In Trial 2, the

probability of Success is 2/3. Thus in Trial 2, Successes are two times as

likely as Failures. In Trial 1, the Odds of Success are 4. The probability

of Success in Trial 1, therefore, is 4/5. The probability of Failure is 1/5.

Thus in Trial 1, Successes are four times as likely as Failures.

Estimation of Odds Ratio

30

The population odds ratio OR is unknown. We collect data in order to

estimate and build confidence intervals for OR. Typically, the data are

collected by adopting a prospective design.

In medical research, Trial 1 corresponds to an experimental drug and Trial 2

corresponds to a standard drug (control). The drugs are designed to cure a

specific malady.

Sampling.

Select m many patients randomly and put them all on the experimental drug.

Observe them for a certain length of time. Let m1 be the number of patients

for whom the drug is successful. Let m2 be the number of patients for whom

the drug is not successful.

Select n many patients randomly and put them all on the standard drug.

Observe them for the same length of time. Let n1 be the number of patients

for whom the drug is successful. Let n2 be the number of patients for whom

the drug is not successful.

The sampling protocol described above is called a prospective design. The

data can be put in the form of a 2x2 contingency table.

Drug

Experimental

Standard

Successful

Yes

No

m1

m2

n1

n2

Sample size

m

n

The null hypothesis is that the population OR = 1 (Hypothesis of

skepticism). The alternative hypothesis is that OR ≠ 1.

H0:

H1:

OR = 1

OR ≠ 1

Null hypothesis is equivalent to the statement that the experimental and

standard drugs are equally effective. Let p1 be the probability of success on

the experimental drug and p2 the probability of success on the standard drug.

Population odds ratio is defined by

31

p1

OR =

p2

(1 p1)

(1 p2 )

p (1 p2 )

.

1

(1 p1) p2

Claim: OR = 1 if and only if p1 = p2. Prove this.

It is easier to handle OR than handling with p1 and p2.

mn

Estimate of OR = ORˆ 1 2 (Derive this estimate.)

m2n1

Standard Error of the ln ORˆ

1

1

1 1

m1 m2 n1 n2

ln ORˆ ln 1

SE(ln( ORˆ ))

Test: Reject the null hypothesis at 5% level of significance if |Z| > 1.96.

Test statistic = Z=

Note: Testing OR = 1 is equivalent to testing p1 = p2. For testing p1 = p2, one

could use the two-sample proportion test if the alternative is directional or a

chi-squared test if the alternative is non-directional. The test based on OR

has better sampling properties than the one based on the proportions.

Retrospective Studies

Odds Ratio can also be defined in the context of a retrospective study. Let us

consider the problem of examining association between smoking status of

mothers and perinatal mortality. We select at random a maternity record of a

mother from a group of hospitals. We observe the following two categorical

variables:

Smoking status of the mother

and

perinatal mortality of the baby

It is natural to expect that these response variables are correlated.

Their joint distribution can be summarized in the following table.

Mother

Smoked (X)

Yes (0)

Perinatal Mortality (Y)

Yes (0)

No (1)

a

b

Marginals

a+b

32

No (1)

Marginals

c

a+c

d

b+d

c+d

1

Odds of Death versus Life if the mother smoked during pregnancy =

[Pr(Baby Died / Mother Smoked)] [Pr(Baby Alive / Mother Smoked)]

a

( a b)

=

.

b

( a b)

Odds of Death versus Life if the mother did not smoke during pregnancy =

Pr(Baby Died/Mother did not smoke)Pr(Baby Alive/Mother did not smoke)

c

(c d )

=

.

d

(c d )

The odds ratio is the ratio of these two sets of odds.

ad

OR =

bc

Equivalently,

Odds of Death versus Life if mother smoked = OR Odds of Death versus

Life if mother did not smoke.

OR = 1 means it does not matter whether mother smokes or not. The

smoking status of the mother has no impact on mortality. This also means

that Smoking Status of Mother and Perinatal Mortality are statistically

independent.

OR > 1 implies that death probability if mother smokes is greater than the

death probability if mother does not smoke.

So far what we discussed is about population’s odds ratio. We do not know

the population odds ratio. We need to estimate the population odds ratio and

test hypothesis about the odds ratio.

Inference for Odds Ratio

The data come in the form of a 2x2 contingency table.

X /Y

0

1

33

0

1

n00

n10

n01

n11

n n

A point estimate of OR: ORˆ 00 11 .

n10 n01

In order to build a confidence interval for OR, we need its large sample

standard error. What is standard error? It is easy to obtain a large sample

standard error of

ln( ORˆ ) = ln(n00) + ln(n11) – ln(n01) – ln(n10)

using asymptotic theory. As a matter of fact, estimated standard error is

given by

ORˆ )) =

1

1

1

1

.

n00 n01 n10 n11

A large sample 95% confidence interval for ln(OR) is given by

ln( ORˆ ) 1.96SE(ln( ORˆ )).

In order to get a large sample 95% confidence interval for ln(OR), take antilogarithms. It is given by

Exp{ ln( ORˆ ) - 1.96SE(ln( ORˆ ))} OR

Exp{ln( ORˆ ) + 1.96SE(ln( ORˆ ))}

SE(ln(

Whether the study is prospective or retrospective, the concept of odds ratio

is the same. The underlying distributions are characteristically different.

Example. Back to perinatal mortality problem and smoking mothers. A

retrospective study of 48,378 mothers yielded the following data.

Mother

Smoked

Yes

No

Marginals

ORˆ =

Perinatal Mortality

Yes

No

619

20,443

634

26,682

1253

47,125

Marginals

21,062

27,316

48,378

619 26682

= 1.27

634 20443

ln( ORˆ ) = 0.2390

34

SE[ln( ORˆ )] =

1

1

1

1

619 20443 634 26682

= 0.057

A large sample 99% confidence interval for ln(OR) is given by

Ln( ORˆ ) 2.576SE[ln( ORˆ )]

0.2390 0.1475

0.0915 ln(OR) 0.3865

A large sample 99% confidence interval for OR is given by

exp{0.0915} OR exp{0.3865}.

1.10 OR 1.47

Conclusion. The number 1 is not in the interval. Smoking status of a mother

does indeed influence the perinatal mortality of the baby. The odds ratio is at

least 1.10 and at most it is 1.47.

The odds of death versus life if mother smokes is at best 1.10 times the odds

of death versus life if mother does not smoke and at worst it is 1.47 times the

odds of death versus life if mother does not smoke.

R can do all these calculations.

Download the package ‘vcd.’ Activate the package.

Enter the data into a matrix.

> MB <- matrix(c(619, 20443, 634, 26682), nrow = 2, byrow = T)

> MB

[,1] [,2]

[1,] 619 20443

[2,] 634 26682

Name the rows and columns.

> rownames(MB) <- c("Smoked", "No")

> colnames(MB) <- c("Died", "Alive")

> MB

Died Alive

Smoked 619 20443

No

634 26682

35

The command for oddsratio is ‘oddsratio.’

> oddsratio(MB)

[1] 0.2424050

This is ln(oddsratio), i.e., log of odds ratio.

We can get confidence interval of ln(OR). The default level is 95%.

> confint(MB)

lwr

upr

[1,] 0.1302128 0.3545972

> confint(MB, level = 0.95)

lwr

upr

[1,] 0.1302128 0.3545972

> confint(MB2, level = 0.90)

lwr

upr

[1,] 0.1482503 0.3365596

> confint(MB, level = 0.99)

lwr

upr

[1,] 0.09495945 0.3898505

How to get confidence intervals for OR?

> oddsratio(MB, log = F)

[1] 1.274310

> MB3 <- oddsratio(MB, log = F)

> confint(MB3)

lwr

upr

[1,] 1.139071 1.425606

Odds ratios in the context of Logistic Regression

In a multiple regression model, it is easy to examine the impact of a

covariate on the response variable. Suppose we have one response variable y

and three covariates X1, X2, and X3. Suppose we have the following

estimated multiple regression equation:

ŷ = 3 + 2X1 + 3X2 – 4X3.

36

What is the impact of X1 on the response variable? Suppose we increase the

value of X1 by one unit and keep the values of X2 and X3 the same. What

will happen to the value of y?

Scenario 1

X1 = 1, X2 = 3, X3 = 1

ŷ = 10

Scenario 2

X1 = 2, X2 = 3, X3 = 1

ŷ

= 12

What is the difference between Scenarios 1 and 2? The value of X1 has gone

up by one unit and the values of X2 and X3 have remained the same. The

value of y has gone up by two units. The number 2 is precisely the

coefficient of X1 in the multiple regression estimated equation. Thus the

impact of X1 on the response variable is positive and is measured by the

coefficient of X1 in the equation.

What is the impact of X3 on the response variable y? If the value of X3 goes

up by one unit and the values of X1 and X2 remain the same, then the value

of y goes down by four units on average.

Scenario 1

X1 = 1, X2 = 3, X3 = 1

ŷ = 10

Scenario 2

X1 = 1, X2 = 3, X3 = 2

ŷ = 6

What is the difference between Scenarios 1 and 2?

We would like to initiate a similar study in the environment of logistic

regression models. Suppose we have two covariates X1 and X2 in a logistic

regression model. Suppose the model is given by Pr(Y = 1 / X1, X2) =

exp{0 1 X1 2 X 2}

1 exp{0 1 X1 2 X 2}

and

1

Pr(Y = 0 / X1, X2) =

1 exp{0 1 X1 2 X 2}

37

What is the impact of X1 on the response variable? A multiple regression

type of interpretation is not possible here. We work with the odds ratios. Let

Y = 1 stand for success and Y = 0 stand for failure.

Odds of Success versus Failure =

Pr(Y 1 | X1, X 2 )

exp( 0 1 X1 2 X 2 ) .

Pr(Y 0 | X1, X 2 )

Look at the following scenario:

X1 = 1 and X2 = 2 Odds of Success versus Failure = exp{0 + 1 + 22}

(Check this.)

Let us increase the value of X1 by one unit and keep the value of X2 the

same, i.e.,

X1 = 2 and X2 = 2 Odds of Success versus Failure = exp{0 + 21 + 22}

Odds Ratio = [Odds of Success versus Failure when X1 = 2 and X2 = 2]

[Odds of Success versus Failure when X1 = 1 and X2 = 2] = exp{1}.

The numbers given to X1 and X2 are not special. Give any values to X1 and

X2 so that the value of X1 goes up by one unit and the value of X2 remains

the same. The odds ratio will remain the same.

Equivalently,

[Odds of Success versus Failure when X1 = 2 and X2 = 2] = (Odds Ratio)[

Odds of Success versus Failure when X1 = 1 and X2 = 2]

Value of 1 Odds Ratio Impact of X1 on Y

0

1

No impact

>0

>1

If X1 goes up, so are the

odds.

<0

<1

If X1 goes up, the odds

go down.

Example. Framingham Study: Homework

38

Module 4: Odds ratio from the logistic regression model vis-à-vis Odds ratio

from the contingency table + Biplots + How to download EXCEL onto R

Odds ratio

Let us look at the data on Response to a particular treatment with prognostic

variable Age and Gender.

> Age <- c(37, 39, 39, 42, 47, 48, 48, 52, 53, 55, 56, 57, 58, 58, 60, 64, 65,

68, 68, 70, 34, 38, 40, 40, 41, 43, 43, 43, 44, 46, 47, 48, 48, 50, 50, 52, 55,

60, 61, 61)

> Gender <- factor(rep(c("F", "M"), c(20, 20)))

> Response <- factor(c(0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 1, 1, 1, 1, 1,

1, 0, 0, 0, 1, 1, 1, 0, 0, 1, 1, 1, 0, 1, 1, 1, 1, 1, 1))

> MB <- data.frame(Age, Gender, Response)

> MB

Age Gender Response

1 37 F

0

2 39 F

0

3 39 F

0

4 42 F

0

5 47 F

0

6 48 F

0

7 48 F

1

8 52 F

0

9 53 F

0

10 55 F

0

11 56 F

0

12 57 F

0

13 58 F

0

14 58 F

1

15 60 F

0

16 64 F

0

17 65 F

1

18 68 F

1

19 68 F

1

20 70 F

1

21 34 M

1

22 38 M

1

23 40 M

0

24 40 M

0

25 41 M

0

39

26 43 M

1

27 43 M

1

28 43 M

1

29 44 M

0

30 46 M

0

31 47 M

1

32 48 M

1

33 48 M

1

34 50 M

0

35 50 M

1

36 52 M

1

37 55 M

1

38 60 M

1

39 61 M

1

40 61 M

1

Response and Gender are binary variables. We can cross-tabulate these

variables to get a 2x2 contingency table. We can calculate the odds ratio for

the table to measure the degree of association between Response and

Gender. We can also build a 95% confidence interval for the population

odds ratio. Let us activate the package ‘vcd.’

> MB1 <- table(Response, Gender)

> MB1

Gender

Response F M

0 14 6

1 6 14

> MB2 <- oddsratio(MB1, log = F)

> MB2

[1] 5.444444

Interpretation: Odds of Cure versus No Cure if the patient is Male =

5.44*Odds of Cure versus No Cure if the patient is Female. Odds of Cure

are much better for males than for females.

Suppose the odds of Cure versus No Cure for females are 1/5, i.e., No Cure

is 5 times more likely than Cure. Then the odds of Cure versus No Cure for

males is 5.44*(1/5) = 1.088, better than evens. More precisely,

Pr(Cure | Male) = 1.088/(1 + 1.088) = 0.52

In this analysis, age is not factored into.

> confint(MB2)

lwr upr

40

[1,] 1.471410 20.14528

More precisely, a 95% confidence interval for the population odds ratio is

given by

1.47 ≤ OR ≤ 20.15.

Why this confidence interval is so wide? The sample is small. Recall the

̂ ) is √

standard error of ln(𝑂𝑅

1

14

+

1

6

+

1

14

1

+ . The length of the confidence

6

intervals depends on the standard error. The smaller the standard error is the

small the length of the confidence interval is. The numbers in the four cells

of the contingency table are small. The bigger these numbers are the smaller

the standard is. Recall how the confidence interval is built.

We want to test the null hypothesis that the population odds ratio is equal to

one, i.e., there is no association between Response and Gender.

H0: OR = 1

H1: OR ≠ 1

The observed 95% confidence interval is: 1.47 ≤ OR ≤ 20.15. The interval

does not contain OR = 1. We reject the null hypothesis at 5% level of

significance. We can also calculate the p-value.

Under the null hypothesis, ln(OR) = 0. Under the null hypothesis,

theoretically,

Z=

̂ )− 0

ln(𝑂𝑅

̂ ))

𝑆𝐸(ln(𝑂𝑅

has a standard normal distribution. Observed value of the z-statistic can be

computed using R.

> SE <- sqrt(1/14 + 1/14 + 1/6 + 1/6)

> SE

[1] 0.6900656

> Z <- log(MB2)/SE

>Z

[1] 2.455703

> pvalue <- 2*pnorm(2.455703, lower.tail = F)

> pvalue

[1] 0.01406093

Based on this p-value, we can reject H0: ln(OR) = 0 or H0: OR = 1.

We can fit a logistic regression model to the data.

exp(𝛽0 + 𝛽1 ∗𝐴𝑔𝑒+ 𝛽2 ∗𝐺𝑒𝑛𝑑𝑒𝑟)

Pr(Response = 1 | Age, Gender) =

1+ exp(𝛽0 + 𝛽1 ∗𝐴𝑔𝑒+ 𝛽2 ∗𝐺𝑒𝑛𝑑𝑒𝑟)

Let us fit this model.

> MB3 <- glm(Response ~ Age + Gender, data = MB, family = binomial)

> summary(MB3)

41

Call:

glm(formula = Response ~ Age + Gender, family = binomial, data = MB)

Deviance Residuals:

Min

1Q

Median

-1.86671 -0.80814

0.03983

Coefficients:

3Q

0.78066

Max

2.17061

Estimate Std. Error z value Pr(>|z|)

(Intercept) -9.84294

3.67576 -2.678 0.00741 **

Age

0.15806

0.06164

2.564 0.01034 *

GenderM

3.48983

1.19917

2.910 0.00361 **

--Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

(Dispersion parameter for binomial family taken to be 1)

Null deviance: 55.452 on 39 degrees of freedom

Residual deviance: 38.917 on 37 degrees of freedom

AIC: 44.917

Number of Fisher Scoring iterations: 5

> OddsRatioGender <- exp(3.4898)

> OddsRatioGender

[1] 32.77939

If the Age is fixed, no matter what it is, the odds of Cure versus No Cure for

a male = 32.78*Odds of Cure versus No Cure for a female, both with the

same age.

Suppose the odds of Cure versus No Cure for females are 1/5, i.e., No Cure

is 5 times more likely than Cure females. Then the odds ratio of Cure versus

No Cure for males is 32.78*(1/5) = 6.556. This means

Pr(Cure | Male) = 6.556/(1 + 6.556) = 0.87.

This odds ratio takes into account age. One can say that this odds ratio is the

odds ratio of Response and Gender adjusted for Age. This is indeed a true

summary of the relationship between Response to the treatment and Gender.

Another great advantage of the logistic regression model, if it is a good fit, is

that we can measure association between the response variable (binary) and

a numeric covariate after adjusting for the presence of other covariates.

In our example, the continuous variable is Age. The odds ratio of Response

to Treatment and Age is exp(0.15806) = 1.17.

If the gender is fixed,

Odds of Cure versus No cure if (Age = x+1) =

1.17*Odds of Cure versus No Cure if (Age = x),

where x is any number.

42

This odds ratio is the odds ratio of Response to the Treatment and Age

adjusted for Gender. If the gender is fixed,

Odds of Cure versus No cure if (Age = x+2) =

(1.17)2*Odds of Cure versus No Cure if (Age = x)

Derive this result.

There is no way we can measure association between Response to Treatment

(binary) and Age (continuous) using contingency table approach.

Build a 95% confidence interval for the odds ratio of Gender

The coefficient of Gender in the logistic regression model is β2. Population

odds ratio is exp(β2). A 95% confidence interval for β2 is

𝛽̂

2 ± 1.96*SE.

3.49 ± 1.96*1.20

1.14 ≤ β2 ≤ 5.84

A 95% confidence interval for the odds ratio exp(β2) is obtained by

exponentiation of the above interval.

3.13 ≤ OR ≤ 343.78

Why this interval is so wide?

Biplots

Goal: I have 4 quantitative variables: X1, X2, X3, and X4. Make a graphical

presentation of the data on these four variables in a single frame.

Solution: Get a scatter plot of X1 and X2. Get the scatter plot of X3 and X4

on the same graph.

How? Let us look at an example. The data ‘iris’ is available in R. Data were

collected on Petal Length, Petal Width, Sepal Length, and Sepal Width on

three different species of iris flowers (setosa, versicolor, viriginica).

Download the data.

> data(iris)

> dim(iris)

[1] 150 5

> head(iris)

1

2

3

4

5

6

Sepal.Length Sepal.Width Petal.Length Petal.Width Species

5.1

3.5

1.4

0.2 setosa

4.9

3.0

1.4

0.2 setosa

4.7

3.2

1.3

0.2 setosa

4.6

3.1

1.5

0.2 setosa

5.0

3.6

1.4

0.2 setosa

5.4

3.9

1.7

0.4 setosa

How many flowers in each species?

> table(iris$Species)

setosa versicolor virginica

43

50

50

50

Focus on setosa flowers only.

The four measurements are: Petal.Length; Petal.Width; Sepal.Length; and

Sepal.Width.

Get the scatter plot of Petal Length and Sepal Length. Superimpose this

graph with the scatter plot of Petal Width and Sepal Width.

> setosa <- subset(iris, iris$Species == "setosa")

Using ‘par’ command, create four lines of space at the bottom, four lines on

the left, seven lines at the top, and seven lines on the right. I need space at

the top and on the right for legend. (mar = margin on the sides)

> par(mar = c(4, 4, 7, 7))

> plot(setosa$Petal.Length, setosa$Sepal.Length, pch = 16, col = "red",

+ xlab = "Petal Length", ylab = "Sepal Length")

I have been harping that a plot command will not accept another plot

command in any superimposition. We can overcome that.

> par(new = T)

> plot(setosa$Petal.Width, setosa$Sepal.Width, pch = 17, col = "blue", ann

= F,

+ axes = F)

> range(setosa$Sepal.Width)

[1] 2.3 4.4

> range(setosa$Petal.Width)

[1] 0.1 0.6

> axis(side = 3, at = c(0.1, 0.2, 0.3, 0.4, 0.5, 0.6))

> axis(side = 4, at = c(2, 3, 4, 5))

> mtext("Petal Width", side = 3, line = 2)

> mtext("Sepal Width", side = 4, line = 3)

> mtext("Setosa Flowers", side = 3, line = 5)

mtext = text on the margins

Here is the biplot.

This method of plotting can be used to plot X versus Y and X versus U,

where X, Y, and U are three quantitative variables. In this case, side = 3 is

not needed.

44

Setosa Flowers

0.2

Petal Width

0.3

0.4

0.5

0.6

Sepal Width

4

4.5

3

5.0

Sepal Length

5.5

0.1

1.0

1.2

1.4

1.6

1.8

Petal Length

How to download EXCEL data into R for MAC users?

Courtesy: Gail Pyne-Geithman, Associate Professor, Neurosurgery

1. Save the data as a comma-separated.csv file.

2. Find the precise address of this file. If you can find it, that is good. If

you cannot, R can find it for you. For example, suppose the data file is

“sepsis.csv”. Then in R console, type < rawdata <file.choose(“sepsis.csv”). Type < rawdata. This will give the address

in double quotes.

3. Type < Name <- read.csv(“Address/sepsis.csv”, header = T)

4. The folder Name contains the data.

45

Module 5: NON-PARAMETRIC REGRESSION; BINARY RESPONSE

VARIABLE; CLASSIFICATION TREES

We have been working on how to model a binary response variable in terms

of covariates or independent variables. Our approach was probabilistic in

nature. We proposed a logistic regression model. However, there are a

number of other approaches. One approach popular with engineers and

physicists is to treat the problem as a pattern recognition or classification

problem. Let us go back to the abdominal sepsis problem.

Response variable

Y = 1 if the patient dies after surgery

= 0 if the patient survives after surgery

Independent variables

X1: Is the patient in a state of shock?

X2: Is the patient suffering from malnutrition?

X3: Is the patient alcoholic?

X4: Age

X5: Has the patient bowel infarction?

In logistic regression, the probability distribution of Y is modeled in terms of

the covariates.

If we view this problem as a pattern recognition problem, we need to

identify what the patterns are. The situation Y = 1 is regarded as one pattern

and Y = 0 as the other. Once we have information on the independent

variables for a patient, we need to classify him/her into one of the two

patterns. We have to come up with a protocol, which will classify the patient

as falling into one of the patterns. In other words, we have to say whether he

will die or survive after surgery. We will not make a probability statement.

Any classification protocol one comes up can not be expected to be free of

errors. A classification protocol is judged based on its misclassification error

rate. We will make precise this concept later.

Core idea: Look at the space of predictors. We want to break up the

predictor space into boxes (5-dimensional parallelepipeds) so that each box

is identified with one pattern. For example, Shock = 1, Malnourishment = 0,

Alcoholism = 1, Age > 45, Infarction = 1 is one such box. Can we say that

46

most of the patients that fall into this box die? We want to divide the

predictor space into mutually exclusive and exhaustive boxes so that the

patients falling into each box have predominantly one pattern. The creation

of such boxes is the main objective of this lecture.

One popular method in classification or pattern recognition is the so called

the ‘classification tree methodology,’ which is a data mining method. The

methodology was first proposed by Breiman, Friedman, Olshen, and Stone

in their monograph published in 1984. This goes by the acronym CART

(Classification and Regression Trees). A commercial program called CART

can be purchased from Salford Systems. Other more standard statistical

software such as SPLUS, SPSS, and R also provide tree construction

procedures with user-friendly graphical interface. The packages ‘rpart’ and

‘tree’ do classification trees. Some of the material I am presenting in this

lecture is culled from the following two books.

L Breiman, J H Friedman, R A Olshen, and C J Stone – Classification and

Regression Trees, Wadsworth International Group, 1984.

Heping Zhang and Burton Singer – Recursive Partitioning in the Health

Sciences, Second Edition, Springer, 2008.

Various computer programs related to this methodology can be downloaded

freely from Heping Zhang’s web site: http://peace.med.yale.edu/pub

Let me illustrate the basic ideas of tree construction in the context of a

specific example of binary classification. In the construction of a tree, for

evaluation purpose, we need the concept of ENTROPY of a probability

distribution and/or Gini’s measure of uncertainty. Suppose we have a

random variable X taking finitely many values with some probability

distribution.

X:

Pr.:

1

p1

2

p2

…

…

m

pm

We want to measure the degree of uncertainty in the distribution (p1, p2, … ,

pm). For example, suppose m = 2. Look at the distributions (1/2, 1/2) and

(0.99, 0.01). There is more uncertainty in the first distribution than in the

second. Suppose some one is about to crank out X. I am more comfortable in

47

betting on the outcome of X if the underlying distribution is (0.99, 0.01) than

when the distribution is (1/2,1/2). We want to assign a numerical quantity to

measure the degree of uncertainty. Entropy of a distribution is introduced as

a measure of uncertainty.

Entropy (p1, p2, … , pm) =

m

pi ln pi

= Entropy impurity = Measure of

i 1

Chaos, with the convention that 0 ln 0 = 0.

Properties

1. 0 ≤ Entropy ≤ ln m.

2. The minimum 0 is attained for each of the distributions (1, 0, 0, … ,

0), (0, 1, 0, … , 0), … , (0, 0, … , 0, 1). For each of these

distributions, there is no uncertainty. The entropy is zero.