The effects of feedback during exploratory

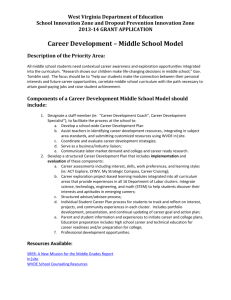
