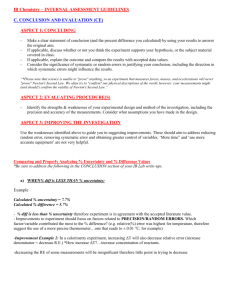

NASH Panel Discussion – Discharge Measurements: Needs for Improving the Estimate of Measurement Uncertainty

Moderator: Janice Fulford

Panelists: Frank Weber, Juan Gonzalez, Kevin Oberg, Brian Wahlin

Discharge measurements of water are used by water managers, land use planners, engineers and hydrologists to make decisions concerning water allocations, flood risks, zoning, flood structures, and bridges. The quality and uncertainty of discharge measurements affect the level of confidence in the decisions made on the basis of those discharge measurements. The panel will discuss whether alternative methods for estimating the uncertainty of discharge measurements could yield more robust estimates than those afforded by current approaches.

Why do you need an estimate of uncertainty and what do you use the estimate for?

BW: Uncertainty is important for water balances. Water gets delivered and applied to the fields. Measurements going in and measurements going out can differ by 30 to 50%, so where is the water going? In California, all water users have to reduce use by 20% by the year 2020. Estimates of uncertainty are needed to ensure that goals are met. In hydraulic modelling, the input is flow measurements. The black box of RAS produces results with discharge uncertainty amplified by model uncertainty.

FW: Experience with quantifying uncertainty in hydrologic predictions. Sometimes we have to deal with the sum of upstream flows exceeding the downstream flow: is this due to measurement uncertainty, measurement errors, a losing reach, or other processes? All dams leak. In one project, the question was whether the seepage signal could be detected in existing streamflow records, in other words whether the signal-to-noise ratio was large enough to detect the seepage. Or would the (costly) construction of a weir improve the signal-to-noise ratio enough to then detect seepage? Generation operation orders give clear instructions to hydro operations planners on how to manage a project.
Flow constraints at water compliance points downstream of facilities are inherently based on assumptions about streamflow data uncertainty and therefore have a 5% buffer built in. It is speculated that real-time streamflow data uncertainties can greatly exceed the commonly quoted 5% and that operations could therefore be further optimized. Hydrologic models can be calibrated in a way that takes the uncertainty of flow data into consideration.

KO: We use uncertainty in the development of stage-discharge and other types of ratings. The uncertainty is used to aid decisions about changing the rating. It is also used to determine when a check discharge measurement is required. After making a discharge measurement, the hydrographer computes the deviation from the rating and compares it with the measurement uncertainty and with knowledge about prior trends in shifts. If the deviation cannot be explained by prior shifts and the uncertainty of the measurement is acceptable, a check measurement is required. The decision to do a check measurement should include uncertainty. We are consumers of our own data, using them for modeling. Our immediate clients, like the weather service, need to know the uncertainty.

JG: Discharge measurements close to hydraulic structures are often subject to a variety of factors that challenge the measurement assumptions, yet those measurements are needed to calibrate the flow ratings used to estimate the flow through the structures in real time. The uncertainty of streamgauging data propagates into the uncertainty of the rating equations calibrated with it, and consequently into the uncertainty of the flows estimated with these equations. Knowing the uncertainty of the data used for calibration of ratings for hydraulic structures would greatly help in the calibration process, and ultimately add confidence to the rated flows on which water managers rely to operate the structures.
Streamgauging data are used for a variety of hydraulic problems, including water budgets in constructed wetlands, canal conveyance reduction due to vegetative resistance, and quantifying lateral flows in canals due to groundwater–surface-water interactions. These problems rely on the difference between synoptic discharge measurements made at various stations in a canal reach. Comparing only the differences of the mean flows measured at the canal stations is both less meaningful and statistically weaker than comparing the mean flows with their respective uncertainty limits. Uncertainty is also relevant in flow monitoring for environmental compliance because of the increasingly stringent laws that regulate water releases to natural environments. Uncertainty in flow is a pressing issue: mean flows are currently reported without uncertainty. Quantifying and reporting uncertainty in flow as an aid in the environmental compliance process is becoming an apparent necessity.

JF: Surprised that no one mentioned confusion amongst clients when discharge measurements may be contradictory.

FW: There is an opportunity to better quantify data uncertainty by using independent, redundant sensors. The approach is increasingly being used to improve data reliability.

JG: In South Florida we designed a set of hydrometric ADCP measurements at four stations in a 6.5-mile canal reach between a gated hydraulic structure and an index-velocity site to help explain the differences between the flows monitored at these sites as computed with their respective calibrated rating equations. These data allowed us to show that a major environmental source of uncertainty for the index-velocity site was wind recirculation, and that groundwater–surface-water interaction was not significant. We did this comparison including uncertainty bounds on the synoptic flow data we collected.
Including uncertainty helped stakeholders recognize that conventional I-V metering was biased for low flows when there was substantial wind action.

BW: In a dispute between Wyoming and Nebraska, Wyoming would measure one day, Nebraska the next, and USGS the third day, and none of the measurements agreed. The independent study showed that when uncertainty was taken into consideration the measurements did overlap; furthermore, there was a storage element in the reach that accounted for part of the differences.

How does your group or agency currently estimate discharge uncertainty? Qualitatively or quantitatively?

JG: We use quantitative methods to report the uncertainty of our streamgauging data. These methods rely on standardized approaches for propagating the systematic uncertainty of the data measured by ADCP systems (based on the Type B approach) into the systematic uncertainty of the mean flow. The precision uncertainty is estimated statistically using eight or more transects (Type A approach). The total uncertainty is then estimated by combining the precision and systematic uncertainties. At present, we do not have estimates of uncertainty from sources that the algorithms used to compute ADCP flow estimates do not account for.

BW: For Q measurements we use both quantitative and qualitative methods. Model results once submitted for a no-rise certificate showed a water level rise of 0.01 ft because the value was not rounded to zero; the no-rise certificate was rejected because the reviewer thought the result was real.

KO: A mix of both. Uncertainty of discharge measurements will always be a mix of quantitative and qualitative estimates because there are portions of the flow that aren't measured, and qualitative assessments of these areas must be made. These qualitative assessments may be assisted by computations, simple or complex, but the assumptions on which the computations are based are qualitative. The most common method is qualitative.
At present (2014), the Interpolated Variance Estimator (IVE) method is not being actively used for rating curve development but is being computed for many wading measurements. A semi-quantitative method for estimating the uncertainty of ADCP discharge measurements is being taught and used, but not by all hydrographers. There will always be some component of uncertainty that is qualitative because there are some regions of flow that we just can’t characterize that well.

JG: There is still a lot to do. Many of the sources of uncertainty have to do with the flow measurement environment, physical constraints, and suitability of the flow measurement equipment. There is a need for field experiments to get estimates of uncertainty at difficult sites from sources whose effects are not accounted for by the reduction equations, or are unknown or unavailable. Concurrent measurements with different types of flow meters can help in this type of effort. In SF, we have done some comparisons of flows measured inside a gated culvert by an ADFM with flows measured in a canal downstream of the culvert by an ADCP to estimate systematic uncertainties and identify their possible sources. Intercomparison also helps determine the applicability limits of each meter and the flow and gate settings that define them.

KO: Not happy with current methods for uncertainty. After a recent flood event, the NWS indicated that they must take into account local biases in qualitative estimates of measurement uncertainty.

FW: Unknown unknowns are important. Even wrong data can be useful.

JG: The uncertainty propagates from the measurements all the way down the decision chain. But there can be a reluctance to report uncertainty for many reasons. A possible way of dealing with the “how good are your uncertainty estimates” question is to report streamgauging uncertainty estimates with caveats on some of the limitations that one faced in estimating them.
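
The combination JG describes in the answers above (a Type A precision estimate from eight or more transects, combined with a Type B systematic estimate) can be sketched in a few lines. This is only an illustration under stated assumptions, not the South Florida procedure: the transect discharges, the 2% systematic value, the root-sum-square combination of independent components, and the coverage factor of 2 are all hypothetical choices made for the example.

```python
import math
import statistics


def type_a_relative_uncertainty(transect_discharges):
    """Relative standard uncertainty of the mean discharge (Type A)."""
    n = len(transect_discharges)
    mean_q = statistics.mean(transect_discharges)
    std_q = statistics.stdev(transect_discharges)  # sample std dev (n - 1)
    return (std_q / math.sqrt(n)) / mean_q


def combined_relative_uncertainty(u_precision, u_systematic):
    """Root-sum-square combination; assumes the components are independent."""
    return math.sqrt(u_precision**2 + u_systematic**2)


# Hypothetical discharges (m^3/s) from eight moving-boat transects.
transects = [10.2, 9.8, 10.5, 10.1, 9.9, 10.3, 10.0, 10.4]

u_a = type_a_relative_uncertainty(transects)
u_b = 0.02  # hypothetical Type B (systematic) relative standard uncertainty
u_c = combined_relative_uncertainty(u_a, u_b)
expanded = 2.0 * u_c  # assumed coverage factor k = 2

mean_q = statistics.mean(transects)
print(f"mean Q = {mean_q:.2f} m^3/s")
print(f"Type A = {100 * u_a:.2f}%, Type B = {100 * u_b:.2f}%")
print(f"combined = {100 * u_c:.2f}%, expanded (k=2) = {100 * expanded:.2f}%")
```

With these made-up numbers the systematic term dominates the combined value, which is why sources that the ADCP algorithms do not account for matter: they are absent from both terms.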
Do the current estimates of discharge measurement uncertainty capture the important sources of measurement uncertainty? If not, what’s missing?

KO: No. Proposed methods in the literature often lack the details needed to actually apply the method to real situations. Practical methods tend to be too subjective and need more objective approaches to dealing with the qualitative components. Experience has shown that the selection of the measurement section is the greatest source of uncertainty in discharge measurements. For example, it is not uncommon to measure from bridges, which are not usually good measuring sections. Location, location, location. What we don’t measure is important: edge distance, etc.

BW: Chaos, which includes human error and turbulence.

FW: Sensor uncertainty is probably the smallest source of uncertainty.

JG: The method we currently use in SF accounts for precision uncertainty and for systematic uncertainties in the fundamental data measured by the ADCP. This approach works well for measurements that follow standard best-measurement practices and quality assurance practices. However, it does not include uncertainties from sources not accounted for by the algorithms used by the ADCP software in discharge computation. It also yields less reliable estimates of the total uncertainty for flows where the portion of the flow cross-sectional area that the ADCP does not directly measure is relatively large. There is a need to develop or expand best-practice guidelines based on real field conditions that account for this in a quantitative manner. These efforts could also rely on virtual data from CFD models, but care should be taken not to treat virtual data as a true representation of the actual flow. Capturing the boundary conditions of field conditions poses a great challenge.
Capturing the time history of the flow structure is only partly possible with validated LES and DNS models, with many caveats regarding the Reynolds numbers and geometries that can actually be modeled.

KO: Another important source of measurement error is human error. The contribution of the hydrographer to measurement uncertainty will vary with method, site, and environmental conditions. This is not well known.

JG: A combination of site conditions and keeping up with best practices and guidelines.

BW: If you do a flow measurement, it is as easy to do it right as it is to do it wrong (thanks to John Replogle).

JF: Physical size (leg length) can influence the uncertainty of wading measurements.

JG: What is the statistical variability among samples? One needs to characterize the relevant time and length scales of the flow to be measured and use this information to estimate an appropriate number of samples to reach estimates with low standard error. Given an estimate of the mean from a given number of samples, as you increase the number of samples you get convergence of the estimates of the mean, but that does not mean that you are reaching statistical convergence.

KO: IVE is relatively robust for the important sources of uncertainty for panel measurements but has not been implemented for moving-boat ADCP discharge measurements.

FW: What are probably the largest sources of uncertainty in discharge measurements, the human factor and environmental/site conditions (which affect how representative the data are for monitoring the intended process), are almost impossible to characterize objectively for any kind of situation (such as stream types, river stage, training of hydrographer, etc.). In fact, it might be a liability for agencies to not admit to uncertainty in the total uncertainty estimates.
Therefore, rather than concentrating on a universally applicable calculation methodology for the total uncertainty of discharge measurements, it is suggested to change the general approach: provide uncertainty bounds and develop bracketing estimates of uncertainty for the human and environmental/site sources, and develop a methodology for the sensor accuracy sources independently. Perhaps a reference manual similar to the Manning's n exemplars could be developed, in which the gauging section could be compared to sections with known uncertainties. Or, alternatively, focus on sensor uncertainty only and publish it as such.

JG: Are you really replicating the process? Are the environmental conditions repeatable? You can never expect exactly the same result.

KO: The problem with discharge is that it is always changing, so repeatability is not necessarily proof of consistency.

JG: The variance of the samples does not reduce much with additional measurements; what changes is the confidence in the estimation of the mean.

What do you think needs to be done to improve uncertainty estimates of discharge?

KO: Personnel intimately familiar with field applications of the measurement technology need to work with personnel familiar with practical applications of uncertainty methods. The methods developed then need to be tested against very high quality and complete data sets (or synthetic data) where the answer is known, and the data sets can be modified with noise or invalid data. We need uncertainty methods that are practical and implementable by the people who will be responsible. One thing that is crucial is education about uncertainty. We teach others what uncertainty means and the implications of uncertainty for measurements and streamflow records. This will be a long process. It has big implications.

BW: It has to be a simple method.

JG: One has to look at how it will be used. Can we build a better understanding of that?
Can we build algorithms that will apply to every scenario? What are the thresholds or boundaries of applicability? Ensembles may introduce bias based on underlying assumptions about fully developed flows. Extrapolations are important. New architecture for ADCPs is something that might offer some opportunities to help understand the problems better. Regattas, flow experiments: I think that there are several things that can be done.

--Efforts at quantifying the contribution of uncertainties that cannot be propagated through the DRE to estimate total uncertainty in discharge should continue.

--An uncertainty budget will aid in estimating the relative contribution of the uncertainty from the identified sources to the total uncertainty.

--A review of the algorithms for discharge computation in the unmeasured portions of the cross section during ADCP measurements, using turbulence-averaged estimates of velocity in the measured area as reference, may shed light on whether the current approach (based on limited sample profiles) is biased due to limited sampling.

--A better understanding should be sought of the time scales of the large flow fluctuations, which may make a large contribution to the total precision variance. I believe new findings in fluid mechanics regarding the length scales of the large energy-containing eddies or very large eddy structures may bring a better understanding of how to estimate the time scales relevant to precision uncertainty.

--New ADCP architectures like that of the RiverPro ADCP may help reduce sampling biases stemming from the sampling afforded by current architectures.

--Additional laboratory and field experiments, like those done for estimating the biases due to profiler-flow interaction, aimed at quantifying the biases due to other sources of uncertainty may still offer a viable option. In addition, mining of CFD modeling results from LES and DNS may help shed some light on sampling over space and time.
However, practical LES and DNS simulations are coupled to the boundary conditions, and running simulations with the boundary conditions of realistic complex flows poses a great challenge.

FW: It would be great to have uncertainty estimates sooner rather than later, even if they are wrong, but only if they are useful. We have evidence of uncertainty but no method to aggregate that evidence into a number. Maybe we should simply publish the evidence in the interim. Methods for quantifying stage-discharge-derived streamflow data uncertainty need to clearly distinguish between the uncertainty of real-time and published (quality-controlled) data.

JF: If you can’t put a number on it, what can you say you know about it?
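
The uncertainty budget that JG suggests in the final answers could look roughly like the sketch below. It assumes the sources are independent, so that their variances add, and it uses invented source names and values purely for illustration; none of the numbers represent a panelist's estimate or a measured result.

```python
import math


def uncertainty_budget(sources):
    """Return (total, shares) for a dict of relative standard uncertainties.

    total  : root-sum-square of all sources (assumes independence)
    shares : each source's fraction of the total variance
    """
    total_variance = sum(u**2 for u in sources.values())
    shares = {name: u**2 / total_variance for name, u in sources.items()}
    return math.sqrt(total_variance), shares


# Hypothetical relative standard uncertainties, as fractions of discharge.
sources = {
    "sensor": 0.01,
    "unmeasured areas (edges, top, bottom)": 0.03,
    "site and environmental conditions": 0.04,
    "precision (transect-to-transect)": 0.02,
}

total, shares = uncertainty_budget(sources)
print(f"total relative standard uncertainty: {100 * total:.2f}%")
for name, share in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:40s} {100 * share:5.1f}% of variance")
```

Ranking sources by their share of the variance shows where further field or laboratory work would reduce the total most. A source entered as a bracketing estimate rather than a measured value can still be included, provided it is labeled as such.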