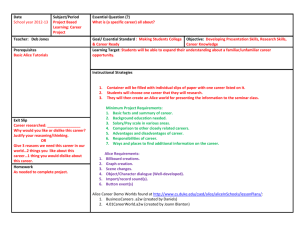

[TH]Note 3.1



1 Preserving Anonymity of Recurrent Location-based Queries - Daniele Riboni, Linda Pareschi, Claudio Bettini

Conference:

Attribute:

1.1 Key idea

Threat

o One user issues n similar requests over time (recurrent queries).

o A request contains N service parameters (e.g., kind of restaurant).

Solution: a mixed approach

o Anonymity, by generalizing STdata.

k-anonymity

l-diversity [1]: each equivalence class has at least l well-represented values of the sensitive attribute.

An equivalence class (EC) of an anonymized table is a set of records that have the same values for the quasi-identifiers. For example, Table 2 has three equivalence classes.

Definition: An equivalence class (EC) is said to have l-diversity if there are at least l “well-represented” values for the sensitive attribute. A table is said to have l-diversity if every equivalence class of the table has l-diversity.

For example, the second EC of Table 2 is 3-diverse, yet it is still open to a background knowledge attack: if the attacker knows that the target has a very low risk of Flu and Heart Disease, then the target must have Cancer. Table 2 as a whole is only 1-diverse, because its first EC is 1-diverse; this enables a homogeneity attack: if the target belongs to this EC, then the target must have Heart Disease.

Limitation: l-diversity does not take into account the semantic closeness of the values in an EC (it only counts how many distinct values there are), which leaves it open to skewness and similarity attacks.

For example, in the first EC of Table 4, all diseases are stomach problems.

t-closeness [1]:

Definition: An equivalence class is said to have t-closeness if the distance between the distribution of a sensitive attribute in this class and the distribution of the attribute in the whole table is no more than a threshold t. A table is said to have t-closeness if all equivalence classes have t-closeness.

o Obfuscation, by generalizing SSdata.
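The l-diversity and t-closeness definitions above can be checked mechanically. The following is a minimal Python sketch under stated assumptions: "well-represented" is read as distinct l-diversity (the number of distinct sensitive values in an EC), and the t-closeness distance is assumed to be the total variation distance, 0.5 * sum |p(v) - q(v)|. The t-closeness paper [1] uses the Earth Mover's Distance, which reduces to this quantity when all sensitive values are equally distant from each other. The toy table and all function names are hypothetical; the table only mimics the shape of the Table 2 example (one homogeneous EC and one EC with three different diseases).

```python
from collections import Counter, defaultdict

# Hypothetical toy table of (quasi-identifier values, sensitive value) rows.
# It mimics the situation in the text (one homogeneous EC, one EC with three
# different diseases) but is NOT the actual Table 2.
TABLE = [
    (("zip-1", "age-1"), "Heart Disease"),
    (("zip-1", "age-1"), "Heart Disease"),
    (("zip-1", "age-1"), "Heart Disease"),
    (("zip-2", "age-2"), "Flu"),
    (("zip-2", "age-2"), "Heart Disease"),
    (("zip-2", "age-2"), "Cancer"),
]


def equivalence_classes(table):
    """Group the sensitive values of rows that share the same quasi-identifiers."""
    classes = defaultdict(list)
    for qi, sensitive in table:
        classes[qi].append(sensitive)
    return dict(classes)


def distinct_l(values):
    """Distinct l-diversity of one EC: its number of distinct sensitive values."""
    return len(set(values))


def table_l(table):
    """A table is l-diverse for the smallest l over all of its ECs."""
    return min(distinct_l(vals) for vals in equivalence_classes(table).values())


def distribution(values):
    """Relative frequency of each sensitive value."""
    counts = Counter(values)
    return {v: c / len(values) for v, c in counts.items()}


def total_variation(p, q):
    """Assumed distance: 0.5 * sum over all values v of |p(v) - q(v)|."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


def max_ec_distance(table):
    """Largest distance between an EC's distribution and the whole table's."""
    overall = distribution([s for _, s in table])
    return max(total_variation(distribution(vals), overall)
               for vals in equivalence_classes(table).values())


def has_t_closeness(table, t):
    """True if every EC is within distance t of the whole-table distribution."""
    return max_ec_distance(table) <= t


ecs = equivalence_classes(TABLE)
print(table_l(TABLE))                       # 1: the first EC is homogeneous
print(distinct_l(ecs[("zip-2", "age-2")]))  # 3
print(has_t_closeness(TABLE, 0.4))          # True: the largest distance is 1/3
```

On this toy table both ECs are at distance 1/3 from the overall distribution, so the table has t-closeness for t = 0.4 but not for t = 0.3, even though one of its ECs is 3-diverse.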

1.2 Adversary’s model

A service request (R) has three parts:

o IDdata: identification data.

o STdata: spatial (location) and temporal data.

o SSdata: service parameters (e.g., object: Chinese restaurant, café, bookstore; speed; interest: playing tennis).
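The three parts of a request, and the generalized request that is forwarded to the SP, can be sketched as plain data types. This is a minimal sketch assuming that IDdata is dropped before forwarding and that STdata and SSdata are generalized by caller-supplied functions; the class names, field names, coordinate encoding and region label are hypothetical, not the paper's data model.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass(frozen=True)
class ServiceRequest:
    id_data: str                       # IDdata: identity of the issuer
    st_data: Tuple[float, float, int]  # STdata: (x, y, timestamp), hypothetical encoding
    ss_data: str                       # SSdata: service parameter, e.g. "Chinese restaurant"


@dataclass(frozen=True)
class GeneralizedRequest:
    st_data: str  # generalized STdata, e.g. a hypothetical area/time-granule label
    ss_data: str  # SSdata, possibly generalized (obfuscation)


def generalize(req: ServiceRequest,
               gen_st: Callable[[Tuple[float, float, int]], str],
               gen_ss: Callable[[str], str]) -> GeneralizedRequest:
    """Build the request forwarded to the SP; IDdata is assumed to be dropped."""
    return GeneralizedRequest(st_data=gen_st(req.st_data),
                              ss_data=gen_ss(req.ss_data))


r = ServiceRequest("Alice", (10.0, 20.0, 1000), "Chinese restaurant")
g = generalize(r, lambda st: "area-7/TG1", lambda ss: "restaurant")
print(g)  # GeneralizedRequest(st_data='area-7/TG1', ss_data='restaurant')
```

Keeping the generalization functions as parameters mirrors the split in the text: generalizing STdata provides anonymity, while generalizing SSdata provides obfuscation.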

Context assumptions

o The generalization algorithm is public.

o At each time granule, a group of generalized requests is forwarded to the SP (service provider). Each user can issue only one request per time granule.

o The adversary may obtain the generalized requests issued in one or more time granules.

o The adversary may observe, or obtain from external sources, the position of specific individuals at given times.

o Requests issued at different time granules can be correlated only by analyzing SSdata.

o The adversary has no specific prior knowledge about the association between individuals and sensitive service parameters.

Example

o Definitions

$A_C(r)$ is the anonymity set of potential issuers of request r, identified on the basis of r and of the context C. Ex: $A_C(r)$ = {Alice, Bea, Carl, Dan, Eric}.

$R(A) = (r_1, r_2, \dots, r_n)$ is the set of generalized requests issued by users in anonymity set A. Ex: R(A) is the set composed of the requests issued by Alice, Bea and Carl during TG1.

$\Theta = (v_1, v_2, \dots, v_n)$ is the set of SSdata values included in the set R of generalized requests.

$M_{v,R}$ is the number of requests in R that include the SSdata value v; this value is called the multiplicity of v in R. Ex: $M_{v_1,R(A_1)} = 2$.

Given the posterior knowledge $K_{pos}(u, TG) = (p_1, \dots, p_n)$, $K^{(i)}_{pos}(u, TG) = p_i$. Similarly, given the prior knowledge $K_{pri}(u) = (p_1, \dots, p_n)$, $K^{(i)}_{pri}(u) = p_i$. For every user u in U, $K_{pri}(u) = (1/n, \dots, 1/n)$ when there are n SSdata values.

o A1, A2, A3 and A4 are anonymity sets of 5 users each. The set of SSdata values contains 12 service parameters, $\Theta = (v_1, \dots, v_{12})$, so $K^{(i)}_{pri}(u) = 1/12$ for every i.

o TG1:

A1 (the left anonymity set, which includes Alice) has 3 requests:

2 requests ask for v1 => the probability that Alice requested v1 is 2/5.

1 request asks for v2 => the probability that Alice requested v2 is 1/5.

A2 has 1 request, which asks for v3.

o TG2:

A3 has 2 requests, one for v1 and one for v2.

A4 has 1 request, for v1 (issued by Alice), and the adversary knows that Alice is in A4. The adversary can notice that the presence of Alice in a given anonymity set is correlated with a frequency of the private value v1 that is higher than the average frequency of the same value in the whole set of requests. Hence, he can conclude that Alice probably issued requests for v1.

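o Inference method

If user u does not belong to any anonymity set at TGm, the adversary's knowledge stays the same: $K_{pos}(u, TG_m) = K_{pos}(u, TG_{m-1})$, with $K_{pos}(u, TG_0) = K_{pri}(u)$.

Otherwise, new knowledge can be inferred with

$$K^{(i)}_{pos}(u, TG_m) = \beta_i + (1 - \alpha)\, K^{(i)}_{pos}(u, TG_{m-1})$$

where:

$\beta_i$ is the probability that the user issued a request for $v_i$, i.e., $M_{v_i,R(A)}/|A|$ for the user's anonymity set A. Ex: in (TG1, A1), $\beta_1 = 2/5$ for Alice.

$\alpha$ is the probability that the user issued a request at all, i.e., $|R(A)|/|A|$. Ex: in (TG1, A1), $\alpha = 3/5$.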

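$$K^{(1)}_{pos}(\text{Alice}, TG_1) = 2/5 + (1 - 3/5) \cdot K^{(1)}_{pos}(\text{Alice}, TG_0) = 0.4 + 0.4 \cdot K^{(1)}_{pri}(\text{Alice}) = 0.4 + 0.4 \cdot 1/12 = 13/30 \approx 0.433$$

$$K^{(1)}_{pos}(\text{Alice}, TG_2) = 1/5 + (1 - 1/5) \cdot K^{(1)}_{pos}(\text{Alice}, TG_1) = 1/5 + 4/5 \cdot 13/30 \approx 0.547$$

For other users in A4, such as Hal, whose knowledge at TG1 is still the prior 1/12:

$$K^{(1)}_{pos}(\text{Hal}, TG_2) = 1/5 + (1 - 1/5) \cdot K^{(1)}_{pos}(\text{Hal}, TG_1) = 1/5 + 4/5 \cdot 1/12 \approx 0.267$$

=> The probability with which the adversary associates Alice with v1 is considerably higher than the value for the other users belonging to the same anonymity set as Alice. Hence, he can conclude that Alice probably issued requests for v1.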
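The inference step can be checked with exact fractions. This sketch assumes the update rule $K^{(i)}_{pos}(u, TG_m) = \beta_i + (1 - \alpha) K^{(i)}_{pos}(u, TG_{m-1})$ with $\beta_i = M_{v_i,R(A)}/|A|$ and $\alpha = |R(A)|/|A|$, which is the reading that matches the worked numbers for Alice and Hal; the function names are hypothetical.

```python
from collections import Counter
from fractions import Fraction


def multiplicity(requests, v):
    """M_{v,R}: number of requests in R that include SSdata value v."""
    return Counter(requests)[v]


def update(k_prev, v, requests=None, anon_size=None):
    """Compute K^(i)_pos(u, TG_m) from K^(i)_pos(u, TG_{m-1}) for SSdata value v.

    requests:  SSdata values of R(A), the requests from u's anonymity set A at
               TG_m, or None if u belongs to no anonymity set at TG_m.
    anon_size: |A|, the number of potential issuers in A.
    """
    if requests is None:  # no anonymity set at TG_m: knowledge unchanged
        return k_prev
    beta = Fraction(multiplicity(requests, v), anon_size)  # P(u asked for v)
    alpha = Fraction(len(requests), anon_size)             # P(u issued a request)
    return beta + (1 - alpha) * k_prev


prior = Fraction(1, 12)  # uniform prior over 12 SSdata values

# Alice: in A1 at TG1 (requests v1, v1, v2), in A4 at TG2 (one request, v1).
k1_alice = update(prior, "v1", ["v1", "v1", "v2"], 5)  # 13/30, about 0.433
k2_alice = update(k1_alice, "v1", ["v1"], 5)           # 41/75, about 0.547

# Hal: knowledge at TG1 equals the prior; in A4 at TG2.
k2_hal = update(prior, "v1", ["v1"], 5)                # 4/15, about 0.267
```

Using `Fraction` instead of floats keeps the chained updates exact, so the gap between Alice (41/75) and Hal (4/15) is not blurred by rounding across time granules.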

o Defense technique

For each anonymity set A, the technique ensures that the distance between the distribution of SSdata in the requests originating from A and the distribution of SSdata in the whole set of requests issued during the same time granule is below a threshold t (i.e., t-closeness applied to SSdata).

A different value of t must be used for each SSdata generalization level.

If no SSdata generalization level satisfies both k-anonymity and t-closeness (lines 13 to 15), the requests are discarded and their potential issuers are removed.

Otherwise (lines 17 to 21), the SSdata generalization level j that maximizes the QoS is chosen.
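The defense step can be sketched for a single time granule as follows. This is a simplified sketch, not the algorithm from the paper: the distance is assumed to be the total variation distance, k-anonymity is reduced to checking that each anonymity set has at least k potential issuers, the generalization levels are hypothetical functions ordered from most specific to most general, and the most specific level that passes is assumed to be the one that maximizes QoS. The category hierarchy in the usage example is invented for illustration.

```python
from collections import Counter


def distribution(values):
    """Relative frequency of each SSdata value."""
    counts = Counter(values)
    return {v: c / len(values) for v, c in counts.items()}


def total_variation(p, q):
    """Assumed distance: 0.5 * sum |p(v) - q(v)|."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


def choose_ss_level(anon_sets, levels, thresholds, k):
    """Return (j, generalized SSdata per set), or None if requests must be discarded.

    anon_sets:  {set name: (number of potential issuers, [SSdata of its requests])}
    levels:     levels[j](v) generalizes v to level j; index 0 is the most
                specific level, assumed here to give the highest QoS.
    thresholds: thresholds[j] is the t used at level j.
    """
    for j, gen in enumerate(levels):
        gen_sets = {name: [gen(v) for v in reqs]
                    for name, (_, reqs) in anon_sets.items()}
        overall = distribution([v for reqs in gen_sets.values() for v in reqs])
        ok = all(
            size >= k  # simplified k-anonymity check on the anonymity set
            and total_variation(distribution(gen_sets[name]), overall) <= thresholds[j]
            for name, (size, reqs) in anon_sets.items()
            if reqs  # sets without requests do not constrain the choice
        )
        if ok:
            return j, gen_sets
    return None  # no level works: discard requests and remove their issuers


# TG1 from the example: A1 has requests v1, v1, v2; A2 has one request, v3.
tg1 = {"A1": (5, ["v1", "v1", "v2"]), "A2": (5, ["v3"])}
category = {"v1": "food", "v2": "food", "v3": "leisure"}  # hypothetical hierarchy
levels = [lambda v: v, lambda v: category[v], lambda v: "any"]

result = choose_ss_level(tg1, levels, thresholds=[0.3, 0.3, 0.3], k=5)
# result[0] == 2: only the most general level satisfies t = 0.3 here
```

With t = 0.3 at every level, A2's single request keeps its distribution far from the overall one (distance 0.75) at both the specific and the category level, so the sketch falls back to the most general level. This illustrates the trade-off: a coarser SSdata generalization lowers QoS but brings the per-set distributions closer to the overall one.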

1.3 Reference

[1] N. Li, T. Li, and S. Venkatasubramanian, "t-Closeness: Privacy beyond k-anonymity and l-diversity," in ICDE. IEEE, 2007, pp. 106–115.