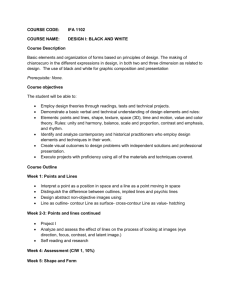

Animation & Graphics Theory

Contents
1) Graphics Drivers (TBD)
   OpenGL
   Direct3D (DirectX)
2) 2D Animation Theory
3) 3D Animation Theory
   Modeling
   Layout and animation
   Rendering
   NURBS
4) Graphics Processing
   Polygon Budgets
   Animation Frames
   Texturing
   Reasons for "Slow-down"
   Scene Design
5) Heightmaps
   Heightmap Editors
6) 3D Animation File Formats
   3D animation formats
   3D model formats

1) Graphics Drivers (TBD)
There are two main graphics APIs, OpenGL and DirectX (whose 3D component is Direct3D); the graphics card's driver implements them. The two expose different sets of functions. These days most high-end graphics cards support both (note: mine supports both).

OpenGL
Originally used for CAD design.

Direct3D (DirectX)
Used in most games.

2) 2D Animation Theory

3) 3D Animation Theory
Overview
The process of creating 3D computer graphics can be divided sequentially into three basic phases: 3D modeling, which describes the process of forming the shape of an object; layout and animation, which describe the motion and placement of objects within a scene; and 3D rendering, which produces an image of an object.

Modeling (Main article: 3D modeling)
Modeling is the process of forming the shape of an object. The two most common sources of 3D models are those originated on the computer by an artist or engineer using some kind of 3D modeling tool, and those scanned into a computer from real-world objects. Models can also be produced procedurally or via physical simulation.

Texturing
In addition to creating and animating objects that appear in the scene, 3D animators also paint and texture objects for added realism while keeping down the polygon count.
These textures are typically painted in an image-editing package such as Photoshop, which provides tools for including transparency, texture, and surface treatments (Wiki - Texture Mapping).

In 3D computer graphics, a texture is a digital image applied to the surface of a three-dimensional model by texture mapping to give the model a more realistic appearance. Often the image is a photograph of a "real" texture, such as wood grain. A texture map is applied (mapped) to the surface of a shape or polygon.[1] This process is akin to applying patterned paper to a plain white box. Every vertex in a polygon is assigned a texture coordinate (which in the 2D case is also known as a UV coordinate), either by explicit assignment or by procedural definition. Image sampling locations are then interpolated across the face of the polygon, producing a result that looks richer than could otherwise be achieved with a limited number of polygons.

Multitexturing is the use of more than one texture at a time on a polygon.[2] For instance, a light map texture may be used to light a surface as an alternative to recalculating that lighting every time the surface is rendered. Another multitexture technique is bump mapping, which lets a texture directly control the facing direction of a surface for the purposes of its lighting calculations. It can give a very good appearance of a complex surface, such as tree bark or rough concrete, which takes on lighting detail in addition to the usual detailed coloring. Bump mapping has become popular in video games as graphics hardware has become powerful enough to handle it in real time.

The way the resulting screen pixels are calculated from the texels (texture pixels) is governed by texture filtering. The fastest method is nearest-neighbour interpolation, but bilinear interpolation and trilinear interpolation between mipmaps are two commonly used alternatives that reduce aliasing (jaggies).
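To make the difference between the two cheapest filters concrete, here is a minimal Python sketch. It assumes a grayscale texture stored as a list of rows and UV coordinates in [0, 1]; the helper names (`texel`, `sample_nearest`, `sample_bilinear`) are hypothetical, not part of any graphics API, and the `wrap` flag chooses between repeating and clamping coordinates that fall outside the texture.

```python
import math

# Assumption: a texture is a list of rows of grayscale texel values.
Texture = list[list[float]]


def texel(tex: Texture, x: int, y: int, wrap: bool = True) -> float:
    """Fetch one texel, wrapping or clamping coordinates outside the texture."""
    h, w = len(tex), len(tex[0])
    if wrap:
        return tex[y % h][x % w]  # repeat the texture
    return tex[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]  # reuse edge texels


def sample_nearest(tex: Texture, u: float, v: float, wrap: bool = True) -> float:
    """Nearest-neighbour filtering: the single texel containing (u, v)."""
    h, w = len(tex), len(tex[0])
    return texel(tex, math.floor(u * w), math.floor(v * h), wrap)


def sample_bilinear(tex: Texture, u: float, v: float, wrap: bool = True) -> float:
    """Bilinear filtering: distance-weighted blend of the four nearest texels."""
    h, w = len(tex), len(tex[0])
    # Texel centres lie at half-integer positions, hence the 0.5 shift.
    x, y = u * w - 0.5, v * h - 0.5
    x0, y0 = math.floor(x), math.floor(y)
    fx, fy = x - x0, y - y0
    top = (1 - fx) * texel(tex, x0, y0, wrap) + fx * texel(tex, x0 + 1, y0, wrap)
    bottom = (1 - fx) * texel(tex, x0, y0 + 1, wrap) + fx * texel(tex, x0 + 1, y0 + 1, wrap)
    return (1 - fy) * top + fy * bottom


checker = [[0.0, 1.0], [1.0, 0.0]]
print(sample_nearest(checker, 0.5, 0.5))   # 0.0 (snaps to one texel)
print(sample_bilinear(checker, 0.5, 0.5))  # 0.5 (blends all four)
```

At the shared corner of the 2 × 2 checkerboard, nearest-neighbour picks a single texel while bilinear filtering blends all four into mid-grey, which is the smoothing that reduces jaggies at the cost of some blur.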
In the event of a texture coordinate being outside the texture, it is either clamped or wrapped. Texture coordinates are specified at each vertex of a given triangle, and these coordinates are interpolated using an extended Bresenham's line algorithm. If these texture coordinates are linearly interpolated across the screen, the result is affine texture mapping. This is a fast calculation, but there can be a noticeable discontinuity between adjacent triangles when these triangles are at an angle to the plane of the screen (see figure at right: the textures, i.e. the checker boxes, appear bent).

Perspective-correct texturing accounts for the vertices' positions in 3D space, rather than simply interpolating across a 2D triangle. This achieves the correct visual effect, but it is slower to calculate. Instead of interpolating the texture coordinates directly, the coordinates are divided by their depth (relative to the viewer), and the reciprocal of the depth value is also interpolated; dividing the one by the other at each pixel recovers the perspective-correct coordinate. This correction means that in parts of the polygon closer to the viewer the pixel-to-pixel difference between texture coordinates is smaller (stretching the texture wider), and in parts farther away this difference is larger (compressing the texture).

Classic texture mappers generally did only simple mapping with at most one lighting effect, and perspective correctness was about 16 times more expensive. To achieve two goals, faster arithmetic results and keeping the arithmetic mill busy at all times, every triangle is further subdivided into groups of about 16 pixels. For perspective texture mapping without hardware support, a triangle is broken down into smaller triangles for rendering, which improves details in non-architectural applications. Software renderers generally preferred screen subdivision because it has less overhead.
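The divide-by-depth trick can be checked numerically. In this hypothetical Python sketch, `t` is the fraction of the way across a screen-space span, `u0`/`u1` are the texture coordinates at its ends and `z0`/`z1` their view-space depths. `span_us` imitates the software-renderer compromise of doing the exact (expensive) calculation only every 16 pixels with affine interpolation in between; the 16-pixel step comes from the figure quoted above, not from any particular engine.

```python
def affine_u(t: float, u0: float, u1: float) -> float:
    """Affine mapping: interpolate u linearly in screen space (t in [0, 1])."""
    return u0 + t * (u1 - u0)


def perspective_u(t: float, u0: float, z0: float, u1: float, z1: float) -> float:
    """Perspective-correct mapping: u/z and 1/z are linear in screen space,
    so interpolate both and divide to recover u."""
    u_over_z = (1 - t) * u0 / z0 + t * u1 / z1
    one_over_z = (1 - t) / z0 + t / z1
    return u_over_z / one_over_z


def span_us(n: int, u0: float, z0: float, u1: float, z1: float, step: int = 16) -> list[float]:
    """Texture coordinates for an n-pixel span (n >= 2): exact perspective
    every `step` pixels, cheap affine interpolation in between."""
    last = n - 1
    us = []
    for a in range(0, last, step):
        b = min(a + step, last)
        ua = perspective_u(a / last, u0, z0, u1, z1)
        ub = perspective_u(b / last, u0, z0, u1, z1)
        for i in range(a, b):
            us.append(ua + (i - a) / (b - a) * (ub - ua))
    us.append(perspective_u(1.0, u0, z0, u1, z1))
    return us


# An edge running from u = 0 at depth 1 to u = 1 at depth 3:
print(affine_u(0.5, 0.0, 1.0))                 # 0.5
print(perspective_u(0.5, 0.0, 1.0, 1.0, 3.0))  # 0.25
```

Halfway across the screen the perspective-correct coordinate is only 0.25: the nearer half of the span shows just a quarter of the texture, which is the "stretching" of nearby texels described above.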
Additionally, they try to do linear interpolation along a line of pixels to simplify the set-up (compared to 2D affine interpolation) and thus, again, the overhead (also, affine texture mapping does not fit into the low number of registers of the x86 CPU; the 68000 or any RISC is much better suited). Doom, for example, restricted the world to vertical walls and horizontal floors/ceilings. This meant the walls would be at a constant distance along a vertical line and the floors/ceilings at a constant distance along a horizontal line, so a fast affine mapping could be used along those lines because it would be correct.

UV Mapping
UV mapping is the 3D modeling process of making a 2D image representation of a 3D model's surface. This process projects a texture map onto a 3D object. The letters "U" and "V" denote the axes of the 2D texture because "X", "Y" and "Z" are already used for the axes of the 3D object in model space (and "W" is the fourth component used alongside X, Y and Z in quaternions, which are commonly used for rotations).

UV texturing permits the polygons that make up a 3D object to be painted with color from an image. The image is called a UV texture map,[1] but it is just an ordinary image. The UV mapping process involves assigning pixels in the image to surface mappings on the polygon, usually done by "programmatically" copying a triangle-shaped piece of the image map and pasting it onto a triangle on the object.[2] UV is the alternative to XY: it maps only into a texture space rather than into the geometric space of the object, but the rendering computation uses the UV texture coordinates to determine how to paint the three-dimensional surface.

In the example to the right, a sphere is given a checkered texture, first without and then with UV mapping. Without UV mapping, the checkers tile XYZ space and the texture is carved out of the sphere. With UV mapping, the checkers tile UV space and points on the sphere map to this space according to their latitude and longitude.
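The sphere example can be reproduced in a few lines. This Python sketch assumes a unit sphere with +y as the polar axis and converts longitude and latitude to (u, v) in [0, 1], an equirectangular layout; `checker_uv` and `checker_xyz` are hypothetical helpers contrasting a checkerboard tiled in UV space with one tiled in XYZ space.

```python
import math


def sphere_uv(x: float, y: float, z: float) -> tuple[float, float]:
    """Map a point on the unit sphere to (u, v) in [0, 1] from its longitude
    and latitude (equirectangular layout, assuming +y is 'up')."""
    longitude = math.atan2(z, x)                  # -pi .. pi around the equator
    latitude = math.asin(max(-1.0, min(1.0, y)))  # -pi/2 .. pi/2 from pole to pole
    return 0.5 + longitude / (2 * math.pi), 0.5 + latitude / math.pi


def checker_uv(x: float, y: float, z: float, n: int = 8) -> int:
    """With UV mapping: the checkers tile UV space."""
    u, v = sphere_uv(x, y, z)
    return (math.floor(u * n) + math.floor(v * n)) % 2


def checker_xyz(x: float, y: float, z: float, n: int = 4) -> int:
    """Without UV mapping: the checkers tile XYZ space and are carved out of the sphere."""
    return (math.floor(x * n) + math.floor(y * n) + math.floor(z * n)) % 2
```

Because every line of constant u meets at the poles, the UV-space checkers narrow toward the top and bottom of the sphere, a familiar artifact of latitude/longitude mapping.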
When a model is created as a polygon mesh using a 3D modeler, UV coordinates can be generated for each vertex in the mesh. One way is for the 3D modeler to unfold the triangle mesh at the seams, automatically laying out the triangles on a flat page. If the mesh is a UV sphere, for example, the modeler might transform it into an equirectangular projection. Once the model is unwrapped, the artist can paint a texture on each triangle individually, using the unwrapped mesh as a template. When the scene is rendered, each triangle will map to the appropriate texture from the "decal sheet".

A UV map can be generated automatically by the software application, made manually by the artist, or some combination of both. Often a UV map is generated and then the artist adjusts and optimizes it to minimize seams and overlaps. If the model is symmetric, the artist might overlap opposite triangles to allow painting both sides simultaneously.

UV coordinates can be applied per face (strictly, per face corner),[2] not only per vertex. This means a shared vertex can have different UV coordinates in each of its triangles, so adjacent triangles can be cut apart and positioned on different areas of the texture map.

The UV mapping process at its simplest requires three steps: unwrapping the mesh, creating the texture, and applying the texture.[1]

Diffuse Mapping

Bump Mapping
Bump mapping is a technique in computer graphics for simulating bumps and wrinkles on the surface of an object, making a rendered surface look more realistic by modeling the interaction of a bumpy surface texture with lights in the environment. Bump mapping does this by changing the brightness of the pixels on the surface in response to a heightmap that is specified for each surface. This is achieved by perturbing the surface normals of the object and using the perturbed normals during illumination calculations.
The result is an apparently bumpy surface rather than a perfectly smooth one, although the surface of the underlying object is not actually changed. Normal mapping and parallax mapping are the most commonly used ways of making bumps.

When rendering a 3D scene, the brightness and color of the pixels are determined by the interaction of a 3D model with lights in the scene. After it is determined that an object is visible, trigonometry is used to calculate the "geometric" surface normal of the object, defined as a vector at each pixel position on the object. The geometric surface normal then defines how strongly the object interacts with light coming from a given direction, using the Phong reflection model or a similar lighting algorithm. Light arriving along the surface normal (perpendicular to the surface) interacts more strongly than light arriving at a grazing angle (more parallel to the surface). After the initial geometry calculations, a colored texture is often applied to the model to make the object appear more realistic.

Normal Mapping
In 3D computer graphics, normal mapping, or "Dot3 bump mapping", is a technique used for faking the lighting of bumps and dents. It is used to add details without using more polygons. A normal map is usually an RGB image whose channels correspond to the X, Y and Z coordinates of a surface normal from a more detailed version of the object. A common use of this technique is to greatly enhance the appearance and details of a low-polygon model by generating a normal map from a high-polygon model. The image usually has a purplish-blue look with green tints. Normal mapping's popularity for real-time rendering is due to its good ratio of quality to processing requirements compared with other methods of producing similar effects. Much of this efficiency is made possible by distance-indexed detail scaling, a technique that selectively decreases the detail of the normal map of a given texture (cf. mipmapping), meaning that more distant surfaces require less complex lighting simulation.
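A minimal Python sketch of both ideas, under simplifying assumptions: the heightmap is a list of rows of floats, normals are in tangent space with +z pointing out of the surface, and `strength` is a hypothetical scale factor. `height_to_normals` derives perturbed normals from the heightmap (bump mapping), `encode_rgb` stores them the way a normal map does, and `lambert` is the dot-product diffuse term that gives "Dot3" its name. All function names are illustrative.

```python
import math

Vec3 = tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(sum(c * c for c in v))
    return (v[0] / length, v[1] / length, v[2] / length)


def height_to_normals(height: list[list[float]], strength: float = 1.0) -> list[list[Vec3]]:
    """Bump mapping: turn a heightmap into per-texel normals using central
    differences (edge texels are clamped). A flat map yields (0, 0, 1)."""
    h, w = len(height), len(height[0])

    def at(x: int, y: int) -> float:
        return height[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    normals = []
    for y in range(h):
        row = []
        for x in range(w):
            dx = (at(x + 1, y) - at(x - 1, y)) * 0.5 * strength
            dy = (at(x, y + 1) - at(x, y - 1)) * 0.5 * strength
            # The surface z = height(x, y) has (unnormalized) normal (-dh/dx, -dh/dy, 1).
            row.append(normalize((-dx, -dy, 1.0)))
        normals.append(row)
    return normals


def encode_rgb(n: Vec3) -> tuple[int, int, int]:
    """Normal-map storage: pack a unit normal into 8-bit RGB, [-1, 1] -> [0, 255]."""
    return tuple(round((c * 0.5 + 0.5) * 255) for c in n)


def lambert(n: Vec3, light_dir: Vec3) -> float:
    """'Dot3' diffuse term: strongest when light arrives along the normal."""
    l = normalize(light_dir)
    return max(0.0, n[0] * l[0] + n[1] * l[1] + n[2] * l[2])
```

A perfectly flat region encodes to (128, 128, 255), which is why normal maps look predominantly blue-purple; slopes tilt the normal and shift the red and green channels.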
Texture Filtering
In computer graphics, texture filtering or texture smoothing is the method used to determine the texture color for a texture-mapped pixel, using the colors of nearby texels (pixels of the texture). Mathematically, texture filtering is a type of anti-aliasing, but it filters out high frequencies from the texture fill, whereas other anti-aliasing techniques generally focus on visual edges. Put simply, it allows a texture to be applied at many different shapes, sizes and angles while minimizing blurriness, shimmering and blocking. There are many methods of texture filtering, which make different trade-offs between computational complexity and image quality.

During the texture mapping process, a 'texture lookup' takes place to find out where on the texture each pixel center falls. Since the textured surface may be at an arbitrary distance and orientation relative to the viewer, one pixel does not usually correspond directly to one texel. Some form of filtering has to be applied to determine the best color for the pixel. Insufficient or incorrect filtering shows up in the image as artifacts (errors in the image) such as blockiness, jaggies or shimmering.

Given a square texture mapped onto a square surface in the world, at some viewing distance the size of one screen pixel is exactly the same as one texel. Closer than that, the texels are larger than screen pixels and need to be scaled up appropriately, a process known as texture magnification. Farther away, each texel is smaller than a pixel, so one pixel covers multiple texels. In this case an appropriate color has to be picked based on the covered texels, via texture minification. Graphics APIs such as OpenGL allow the programmer to set different choices for the minification and magnification filters.
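Mipmapping is the usual answer to minification. The Python sketch below, which assumes a square power-of-two grayscale texture, builds a mip chain by averaging 2 × 2 blocks (a simple box filter; real tools may use better filters) and picks levels from the pixel's footprint, taking log2 of the number of texels one pixel spans. The names `downsample`, `build_mip_chain` and `select_levels` are hypothetical.

```python
import math


def downsample(level: list[list[float]]) -> list[list[float]]:
    """Next mip level: average each 2x2 block of texels (a box filter).
    Assumes a square, power-of-two texture."""
    half = len(level) // 2
    return [
        [
            (level[2 * y][2 * x] + level[2 * y][2 * x + 1]
             + level[2 * y + 1][2 * x] + level[2 * y + 1][2 * x + 1]) / 4
            for x in range(half)
        ]
        for y in range(half)
    ]


def build_mip_chain(texture: list[list[float]]) -> list[list[list[float]]]:
    """Level 0 is the full texture; each later level halves it, down to 1x1."""
    chain = [texture]
    while len(chain[-1]) > 1:
        chain.append(downsample(chain[-1]))
    return chain


def select_levels(footprint: float, num_levels: int) -> tuple[int, int, float]:
    """Choose mip levels for one pixel.

    `footprint` is how many texels the pixel spans along one axis: <= 1 means
    magnification (use level 0), > 1 means minification. Returns the two
    nearest levels and the blend weight a trilinear filter would use."""
    lod = math.log2(footprint) if footprint > 1.0 else 0.0
    lod = min(lod, num_levels - 1)
    lower = math.floor(lod)
    upper = min(lower + 1, num_levels - 1)
    return lower, upper, lod - lower
```

A nearest-neighbour-with-mipmapping filter would use only the closer of the two levels; trilinear filtering samples both bilinearly and blends them by the returned weight.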
Note that even when pixels and texels are exactly the same size, one pixel will not necessarily match up exactly with one texel: it may be misaligned and cover parts of up to four neighboring texels. Hence some form of filtering is still required.

Mipmapping is a standard technique used to save some of the filtering work needed during texture minification. During texture magnification, the number of texels that need to be looked up for any pixel is always four or fewer; during minification, however, as the textured polygon moves farther away, potentially the entire texture might fall into a single pixel. This would necessitate reading all of its texels and combining their values to determine the pixel color correctly, a prohibitively expensive operation. Mipmapping avoids this by prefiltering the texture and storing it in smaller sizes down to a single pixel. As the textured surface moves farther away, the texture being applied switches to the prefiltered smaller size. The different sizes of the mipmap are referred to as 'levels', with level 0 being the largest size (used closest to the viewer) and increasing levels used at increasing distances. Common texture filtering methods, in roughly increasing order of quality and cost, are nearest-neighbor interpolation, nearest-neighbor with mipmapping, bilinear filtering, trilinear filtering and anisotropic filtering (Wiki).

Texture Synthesis
Texture synthesis is the process of algorithmically constructing a large digital image from a small digital sample image by taking advantage of its structural content. It is an object of research in computer graphics and is used in many fields, among others digital image editing, 3D computer graphics and post-production of films. Texture synthesis can be used to fill in holes in images (as in inpainting), create large non-repetitive background images and expand small pictures.

Texture synthesis algorithms are intended to create an output image that meets the following requirements: The output should have the size given by the user.
The output should be as similar as possible to the sample. The output should not have visible artifacts such as seams, blocks and misfitting edges. The output should not repeat, i.e. the same structures should not appear in multiple places in the output image. Like most algorithms, texture synthesis should be efficient in computation time and memory use.

The simplest way to generate a large image from a sample image is tiling: multiple copies of the sample are simply copied and pasted side by side. The result is rarely satisfactory. Except in rare cases, there will be seams between the tiles and the image will be highly repetitive. For more advanced methods, see (Wiki).

Animation (Main article: Computer animation)
Before objects are rendered, they must be placed (laid out) within a scene. This is what defines the spatial relationships between objects in a scene, including location and size. Animation refers to the temporal description of an object, i.e. how it moves and deforms over time. Popular methods include keyframing, inverse kinematics and motion capture, though many of these techniques are used in conjunction with each other. As with modeling, physical simulation is another way of specifying motion.

A keyframe in animation and filmmaking is a drawing that defines the starting and ending points of any smooth transition. Keyframes are called "frames" because their position in time is measured in frames on a strip of film. A sequence of keyframes defines which movement the viewer will see, whereas the position of the keyframes on the film, video or animation defines the timing of the movement. Because only two or three keyframes over the span of a second do not create the illusion of movement, the remaining frames are filled with in-betweens. The animator creates the important keyframes of a sequence and the software fills in the gaps; this is called tweening.
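In-betweening can be sketched as interpolation between the two keyframes that bracket the current frame. This Python sketch assumes keyframes are (frame number, value) pairs for a single animated parameter, such as height; the function names are hypothetical. Linear interpolation is the simplest choice, while `smoothstep` shows the kind of easing an animator might use to refine timing; production packages generally use spline curves instead.

```python
from bisect import bisect_right
from typing import Callable


def linear(t: float) -> float:
    return t


def smoothstep(t: float) -> float:
    """Ease-in/ease-out: starts and ends slowly."""
    return t * t * (3 - 2 * t)


def tween(keyframes: list[tuple[float, float]], frame: float,
          ease: Callable[[float], float] = linear) -> float:
    """Value at `frame`, in-betweened from (frame, value) keyframes sorted by frame.
    Before the first key or after the last, the nearest key's value is held."""
    frames = [f for f, _ in keyframes]
    if frame <= frames[0]:
        return keyframes[0][1]
    if frame >= frames[-1]:
        return keyframes[-1][1]
    i = bisect_right(frames, frame)          # keyframes[i-1] <= frame < keyframes[i]
    (f0, v0), (f1, v1) = keyframes[i - 1], keyframes[i]
    t = ease((frame - f0) / (f1 - f0))       # 0 at the earlier key, 1 at the later
    return v0 + t * (v1 - v0)


# A value rising to 10 over one second at 24 fps and falling back:
keys = [(0, 0.0), (24, 10.0), (48, 0.0)]
print([tween(keys, f) for f in (0, 12, 24, 36, 48)])  # [0.0, 5.0, 10.0, 5.0, 0.0]
```

Only the three keys are authored; every other frame is computed, and moving a key or swapping the easing function changes the timing without redrawing anything.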
The animator can correct the result at any point, shifting keyframes back and forth to improve the timing and dynamics of a movement, or change an in-between into an additional keyframe to further refine the movement. There is also an animation technique known as keyframing in which, unlike tweening, every frame of the computer animation is directly modified or manipulated by the creator, so no tweening actually occurs. This method is similar to drawing traditional animation and is chosen by artists who wish to have complete control over the animation.

Inverse kinematics is a subdomain of kinematics that is of particular interest in robotics and (interactive) computer animation. In contrast to forward kinematics, which calculates the position of a body after a series of motions, inverse kinematics calculates the motions necessary to achieve a desired position. Examples of problems that can be solved through inverse kinematics are: How does a robot's arm need to be moved to pick up a specific object? What motions are required to make it look like an animated character is picking up an object? Solving these problems is usually more involved than simply moving an object from one location to another. Typically, it requires the translation and rotation of a series of interconnected objects while observing the limits on the range of motion that is physically possible. A robot might damage itself if mechanical limitations are disregarded; an animation looks unrealistic if a character's hand moves through their own body to pick up an object located behind their back.

Kinematics is the formal description of motion. One of the goals of rudimentary mechanics is to identify the forces on a point object and then apply kinematics to determine the motion of the object. Ideally, the position of the object can be determined at all times.
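For the robot-arm question above, a two-joint arm moving in a plane has a closed-form answer via the law of cosines. In this Python sketch the link lengths `l1`, `l2` and the function names are hypothetical, angles are in radians, and joint limits (the range-of-motion constraint) are ignored; longer chains usually need iterative solvers instead.

```python
import math


def forward(l1: float, l2: float, theta1: float, theta2: float) -> tuple[float, float]:
    """Forward kinematics: joint angles (radians) -> position of the hand."""
    ex, ey = l1 * math.cos(theta1), l1 * math.sin(theta1)  # elbow position
    return (ex + l2 * math.cos(theta1 + theta2),
            ey + l2 * math.sin(theta1 + theta2))


def inverse(l1: float, l2: float, x: float, y: float):
    """Inverse kinematics: hand target -> (theta1, theta2), or None if unreachable.

    Uses the law of cosines and returns the solution with a positive elbow
    angle (the mirror-image solution uses -theta2)."""
    cos_t2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if not -1.0 <= cos_t2 <= 1.0:
        return None  # target too far (or too close) for these link lengths
    theta2 = math.acos(cos_t2)
    theta1 = math.atan2(y, x) - math.atan2(l2 * math.sin(theta2),
                                           l1 + l2 * math.cos(theta2))
    return theta1, theta2


angles = inverse(1.0, 1.0, 1.0, 1.0)
print(angles)                      # roughly (0.0, 1.5708): shoulder flat, elbow bent 90 degrees
print(forward(1.0, 1.0, *angles))  # roughly (1.0, 1.0): the hand reaches the target
```

Feeding the IK result back through forward kinematics is a quick way to confirm that the hand actually lands on the target.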
For an extended object (rigid body or otherwise), rotational kinematics is applied along with linear kinematics to achieve the same objective: identify the forces, develop the equations of motion, and find the position of the center of mass and the orientation of the object at all times.

Inverse kinematics is the process of determining the parameters of a jointed flexible object (a kinematic chain) in order to achieve a desired pose. Inverse kinematics is a type of motion planning. It is also relevant to game programming and 3D animation, where a common use is making sure game characters connect physically to the world, such as feet landing firmly on top of terrain.

Motion capture, motion tracking, or mocap are terms used to describe the process of recording movement and translating that movement onto a digital model. It is used in military, entertainment, sports and medical applications, and for validation of computer vision[1] and robotics. In filmmaking it refers to recording the actions of human actors and using that information to animate digital character models in 2D or 3D computer animation. When it includes the face and fingers or captures subtle expressions, it is often referred to as performance capture. In motion capture sessions, the movements of one or more actors are sampled many times per second. With most techniques (recent developments from Weta use images for 2D motion capture and project into 3D), motion capture records only the movements of the actor, not his or her visual appearance. This animation data is mapped to a 3D model so that the model performs the same actions as the actor.

Rendering (Main article: 3D rendering, Rendering (Computer Graphics))
Rendering is the process of generating an image from a model (or models, in what collectively could be called a scene file) by means of computer programs.
A scene file contains objects in a strictly defined language or data structure; it would contain geometry, viewpoint, texture, lighting and shading information as a description of the virtual scene. The data contained in the scene file is then passed to a rendering program to be processed and output to a digital image or raster graphics image file. Rendering converts a model into an image either by simulating light transport to get photorealistic images, or by applying some kind of style, as in non-photorealistic rendering. The two basic operations in realistic rendering are transport (how much light gets from one place to another) and scattering (how surfaces interact with light). This step is usually performed using 3D computer graphics software or a 3D graphics API. The process of altering the scene into a suitable form for rendering also involves 3D projection, which allows a three-dimensional image to be viewed in two dimensions.

Another important piece of the animation puzzle is compositing several separately rendered pieces together into a single image. Compositing software is typically used to accomplish this. This software is also used to edit animations and, in some cases, add special effects to the rendered animation sequence.

A rendered image can be understood in terms of a number of visible features. Rendering research and development has been largely motivated by finding ways to simulate these efficiently. Some relate directly to particular algorithms and techniques, while others are produced together:
shading: how the color and brightness of a surface vary with lighting
texture mapping: a method of applying detail to surfaces
bump mapping: a method of simulating small-scale bumpiness on surfaces
fogging/participating medium: how light dims when passing through non-clear atmosphere or air
shadows: the effect of obstructing light
soft shadows: varying darkness caused by partially obscured light sources
reflection: mirror-like or highly glossy reflection
transparency (optics), transparency (graphic) or opacity: sharp transmission of light through solid objects
translucency: highly scattered transmission of light through solid objects
refraction: bending of light associated with transparency
diffraction: bending, spreading and interference of light passing by an object or aperture that disrupts the ray
indirect illumination: surfaces illuminated by light reflected off other surfaces, rather than directly from a light source (also known as global illumination)
caustics (a form of indirect illumination): reflection of light off a shiny object, or focusing of light through a transparent object, to produce bright highlights on another object
depth of field: objects appear blurry or out of focus when too far in front of or behind the object in focus
motion blur: objects appear blurry due to high-speed motion, or the motion of the camera
non-photorealistic rendering: rendering of scenes in an artistic style, intended to look like a painting or drawing

Because tracing every particle of light in a scene is impractical, four loose families of more efficient light transport modelling techniques have emerged:
Rasterization, including scanline rendering, geometrically projects objects in the scene onto an image plane, without advanced optical effects.
Ray casting considers the scene as observed from a specific point of view, calculating the observed image based only on geometry and very basic optical laws of reflection intensity, and perhaps using Monte Carlo techniques to reduce artifacts.
Ray tracing is similar to ray casting, but employs more advanced optical simulation, and usually uses Monte Carlo techniques to obtain more realistic results at a speed that is often orders of magnitude slower.
Radiosity, the fourth type of light transport technique, is not usually implemented as a rendering technique in itself, but instead calculates the passage of light as it leaves the light source and illuminates surfaces. These surfaces are usually rendered to the display using one of the other three techniques.

The Generic Graphics Pipeline
Graphics pipelines constantly evolve. This section describes the graphics pipeline as found in OpenGL 3.2 and Direct3D 10.

Transformation: This stage consumes data about the polygons, with their vertices, edges and faces, that constitute the whole scene. A matrix controls the affine transformations (scaling, rotation, translation, etc.) and viewing transformations (world and view space) that are applied to this data.

Per-vertex lighting: (For more details on this topic, see Vertex shader.) Geometry in the complete 3D scene is lit according to the defined locations of light sources, reflectance and other surface properties. In the classic fixed-function pipeline, lighting is computed only at the vertices of the polygons being rendered, and the lighting values between vertices are then interpolated during rasterization. Per-fragment (i.e. per-pixel) lighting can be done on modern graphics hardware as a post-rasterization process by means of a shader program.

Viewing transformation or normalizing transformation: Objects are transformed from 3D world-space coordinates into a 3D coordinate system based on the position and orientation of a virtual camera. This results in the original 3D scene as seen from the camera's point of view, defined in what is called eye space or camera space.
The normalizing transformation is the mathematical inverse of the viewing transformation, and maps from an arbitrary user-specified coordinate system (u, v, w) to a canonical coordinate system (x, y, z).

Primitives generation: (For more details on this topic, see Geometry shader.) After the transformation, new primitives are generated from those primitives that were sent to the beginning of the graphics pipeline.

Projection transformation: In a perspective projection, objects that are distant from the camera are made smaller (foreshortened); in an orthographic projection, objects retain their original size regardless of distance from the camera. In this stage of the graphics pipeline, geometry is transformed from the eye space of the rendering camera into a special 3D coordinate space called "homogeneous clip space", which is very convenient for clipping. After division by the homogeneous coordinate w, visible points range over [-1, 1] in X, Y and Z, although this can vary by graphics API (Direct3D, for example, uses [0, 1] for Z). The projection transform is responsible for mapping the planes of the camera's viewing volume (or frustum) to the planes of the box that makes up clip space.

Clipping: (For more details on this topic, see Clipping (computer graphics).) Geometric primitives that now fall outside the viewing frustum will not be visible and are discarded at this stage. Clipping is not necessary to achieve a correct image, but it accelerates the rendering process by eliminating unneeded rasterization and post-processing on primitives that will not appear anyway.

Viewport transformation: The post-clip vertices are transformed once again to be in window space. In practice, this transform is very simple: applying a scale (by half the window's width and height) and a bias (a shift of half the window size plus the window's offset from the screen origin). At this point, the vertices have coordinates that directly relate to pixels in a raster.

Scan conversion or rasterization: (For more details on this topic, see Render Output unit.)
Rasterization is the process by which the 2D image-space representation of the scene is converted into raster format and the correct resulting pixel values are determined. From now on, operations are carried out on each individual pixel. This stage is rather complex, involving multiple steps often referred to as a group under the name of pixel pipeline.

Texturing, fragment shading: (For more details on this topic, see Texture mapping unit.) At this stage of the pipeline, individual fragments (or pre-pixels) are assigned a color based on values interpolated from the vertices during rasterization or from a texture in memory.

Display: The final colored pixels can then be displayed on a computer monitor or other display.

Shaders
In the field of computer graphics, a shader is a set of software instructions used primarily to calculate rendering effects on graphics hardware with a high degree of flexibility. Shaders are used to program the GPU's programmable rendering pipeline, which has mostly superseded the fixed-function pipeline that allowed only common geometry transformation and pixel-shading functions; with shaders, customized effects can be used. As GPUs evolved, major graphics software libraries such as OpenGL and Direct3D began to expose the ability to program these new GPUs by defining special shading functions in their APIs.

Shaders are simple programs that describe the traits of either a vertex or a pixel. Vertex shaders describe the traits (position, texture coordinates, colors, etc.) of a vertex, while pixel shaders describe the traits (color, z-depth and alpha value) of a pixel. A vertex shader is called for each vertex in a primitive (possibly after tessellation): one vertex in, one (updated) vertex out. Each vertex is then rendered as a series of pixels onto a surface (block of memory) that will eventually be sent to the screen.
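The geometry stages of the pipeline (transformation, viewing, projection, clipping, viewport) can be traced for a single vertex. This Python sketch assumes OpenGL conventions (camera looking down -z, window origin at the bottom-left, depth mapped to [0, 1]) and uses hypothetical helper names; clipping is reduced to rejecting a vertex outside the view volume, whereas real pipelines clip whole primitives.

```python
import math

Mat4 = list[list[float]]


def mat_vec(m: Mat4, v: list[float]) -> list[float]:
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]


def translation(tx: float, ty: float, tz: float) -> Mat4:
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]


def perspective(fov_y_deg: float, aspect: float, near: float, far: float) -> Mat4:
    """OpenGL-style projection from eye space (camera looking down -z)
    to homogeneous clip space."""
    f = 1.0 / math.tan(math.radians(fov_y_deg) / 2)
    return [
        [f / aspect, 0, 0, 0],
        [0, f, 0, 0],
        [0, 0, (far + near) / (near - far), 2 * far * near / (near - far)],
        [0, 0, -1, 0],
    ]


def vertex_stage(position, model_view: Mat4, projection: Mat4, width: int, height: int):
    """One vertex in, one vertex out: window (x, y, depth), or None if the
    vertex lies outside the view volume."""
    eye = mat_vec(model_view, [*position, 1.0])  # transformation + viewing
    x, y, z, w = mat_vec(projection, eye)        # projection to clip space
    # Simplified clipping: inside iff w > 0 and -w <= x, y, z <= w.
    if w <= 0 or not all(-w <= c <= w for c in (x, y, z)):
        return None
    nx, ny, nz = x / w, y / w, z / w             # perspective divide -> [-1, 1]
    # Viewport transformation: scale and bias into window pixels, depth into [0, 1].
    return ((nx + 1) * 0.5 * width, (ny + 1) * 0.5 * height, (nz + 1) * 0.5)


camera = translation(0.0, 0.0, -5.0)  # view matrix for a camera at z = +5 looking down -z
proj = perspective(90.0, 1.0, 1.0, 100.0)
print(vertex_stage((0.0, 0.0, 0.0), camera, proj, 400, 400))  # lands at the window centre
```

A point behind the camera ends up with w <= 0 and a point far off to the side exceeds |w|; both are rejected before the divide, which is why clipping happens in homogeneous clip space.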
Shaders replace a section of video hardware typically called the Fixed Function Pipeline (FFP), so called because it performs lighting and texture mapping in a hard-coded manner. Shaders provide a programmable alternative to this hard-coded approach.[1]

The Direct3D and OpenGL graphics libraries use three types of shaders.

Vertex shaders are run once for each vertex given to the graphics processor. Their purpose is to transform each vertex's 3D position in virtual space to the 2D coordinate at which it appears on the screen (as well as a depth value for the Z-buffer). Vertex shaders can manipulate properties such as position, colour and texture coordinates, but cannot create new vertices. The output of the vertex shader goes to the next stage in the pipeline, which is a geometry shader if present, or the rasterizer otherwise.

Geometry shaders can add and remove vertices from a mesh. They can be used to generate geometry procedurally, or to add volumetric detail to existing meshes that would be too costly to process on the CPU. If geometry shaders are being used, their output is then sent to the rasterizer.

Pixel shaders, also known as fragment shaders, calculate the color of individual pixels. The input to this stage comes from the rasterizer, which fills in the polygons being sent through the graphics pipeline. Pixel shaders are typically used for scene lighting and related effects such as bump mapping and color toning. (Direct3D uses the term "pixel shader", while OpenGL uses the term "fragment shader". The latter is arguably more accurate, because there is not a one-to-one relationship between calls to the pixel shader and pixels on the screen. The most common reason is that the pixel shader is often called many times per pixel, once for every object that covers that pixel, even if the object is occluded; the Z-buffer sorts this out later.)

The unified shader model unifies the three aforementioned shaders in OpenGL and Direct3D 10.
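The point about the Z-buffer "sorting this out later" can be illustrated with a small depth-test sketch. It assumes fragments arrive as (x, y, depth, colour) tuples that have already been shaded, and that a smaller depth means closer to the camera (a common less-than depth test; the convention is an assumption here). The `shaded` counter shows that every fragment pays for shading even when it is later discarded.

```python
import math

def resolve(fragments, width, height, clear=(0, 0, 0)):
    """Depth-test a stream of (x, y, depth, rgb) fragments.
    Smaller depth = closer to the camera (assumed less-than depth test).
    Returns (colour buffer, depth buffer, number of fragments shaded)."""
    depth = [[math.inf] * width for _ in range(height)]
    color = [[clear] * width for _ in range(height)]
    shaded = 0
    for x, y, z, rgb in fragments:
        shaded += 1  # the fragment ("pixel") shader has already run for this fragment
        if z < depth[y][x]:  # depth test: keep only the nearest fragment so far
            depth[y][x] = z
            color[y][x] = rgb
    return color, depth, shaded
```

With three overlapping fragments on one pixel, all three are shaded but only the nearest survives, which is why "fragment shader" describes the work more accurately than "pixel shader".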
Because these shader types are processed within the GPU pipeline, the following example shows how they are embedded in it:
1. The CPU sends instructions (compiled shading-language programs) and geometry data to the graphics processing unit, located on the graphics card.
2. Within the vertex shader, the geometry is transformed and lighting calculations are performed.
3. If a geometry shader is present on the GPU, some changes to the geometry in the scene are performed.
4. The calculated geometry is triangulated (subdivided into triangles).
5. Triangles are broken down into pixel quads (one pixel quad is a 2 × 2 pixel primitive).
The graphics pipeline uses these steps to transform three-dimensional (and/or two-dimensional) data into useful two-dimensional data for display. In general, this is a large pixel matrix, or "frame buffer".

NURBS
Non-uniform rational basis spline (NURBS) is a mathematical model commonly used in computer graphics for generating and representing curves and surfaces. It offers great flexibility and precision for handling both analytic and freeform shapes.

NURBS grew out of the need for a mathematically precise representation of freeform surfaces, like those used for ship hulls or car bodies, that could be exactly reproduced. Pierre Bézier and Paul de Casteljau worked on this problem independently, but because Bézier published the results of his work, the average computer graphics user today recognizes splines that are represented with control points lying off the curve itself as Bézier splines, while de Casteljau's name is known only for the algorithms he developed to evaluate parametric surfaces. In the 1960s it became clear that non-uniform rational B-splines are a generalization of Bézier splines, which can be regarded as uniform, non-rational B-splines.

Real-time, interactive rendering of NURBS curves and surfaces later became possible. NURBS allow geometrical shapes to be represented in a compact form.
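De Casteljau's evaluation algorithm and the "rational" part of NURBS can both be shown in one short sketch. A NURBS curve with no interior knots is a rational Bézier curve, so this covers only that simplest case; full NURBS evaluation uses the Cox-de Boor recursion over a knot vector, which is not shown. Control points are lifted to homogeneous coordinates (w*x, w*y, w), interpolated with de Casteljau's repeated linear interpolation, then divided by the final weight. The quarter-circle example illustrates the "exact reproduction of analytic shapes" claim: with weights (1, sqrt(2)/2, 1), every point lies exactly on the unit circle, which a plain (non-rational) Bézier curve cannot achieve.

```python
import math

def rational_bezier(control_points, weights, t):
    """Evaluate a rational Bezier curve at t in [0, 1] with de Casteljau's algorithm.
    Each control point is lifted to homogeneous coordinates (w*x, w*y, ..., w),
    interpolated, and then projected back by dividing by the final weight."""
    pts = [tuple(w * c for c in p) + (w,) for p, w in zip(control_points, weights)]
    while len(pts) > 1:
        # One de Casteljau step: linearly interpolate each pair of neighbours.
        pts = [tuple((1 - t) * a + t * b for a, b in zip(p, q))
               for p, q in zip(pts, pts[1:])]
    *coords, w = pts[0]
    return tuple(c / w for c in coords)

# A quarter of the unit circle as a rational quadratic Bezier curve.
QUARTER_CIRCLE = [(1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
QUARTER_WEIGHTS = [1.0, math.sqrt(2) / 2, 1.0]
```

With all weights equal to 1 the function reduces to an ordinary Bézier curve, the "uniform, non-rational" special case mentioned above.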
NURBS can be handled efficiently by computer programs and yet allow for easy human interaction. NURBS surfaces are functions of two parameters mapping to a surface in three-dimensional space. The shape of the surface is determined by control points. In general, editing NURBS curves and surfaces is highly intuitive and predictable: control points are always either connected directly to the curve or surface, or act as if they were.

Splines
In drafting, a spline (the more modern term is flexible curve) is a long strip fixed in position at a number of points that relaxes to form and hold a smooth curve passing through those points, so that the curve can be transferred to another material.

4) Graphics Processing

Polygon Budgets
It is generally recommended to use about 3,000 to 4,000 (sometimes a maximum of 6,000) polygons for main characters in FPS games, with about 1/3 for the head and 2/3 for the body, and approximately 100 for weapons (Oblivion used 10,000 polygons for characters). The rule of thumb for keeping polygon counts down is "only use 3D detail where necessary; use texture detail instead". For example, in the squad tactics game Company of Heroes (CoH), where there may be up to 100 characters on screen simultaneously, each model is fairly blocky: arms and legs are based on 8-sided tubes, maybe with a few extra bumps for shaping, and the extra 3D effect comes from shading the edges. As another data point, on a 2.4 GHz Pentium 4 with 1 GB of RAM and an ATI Radeon X1550 with 256 MB of graphics memory, 20 characters of 3,500 polygons each (70,000 polygons in total), each with 50 bones (enough for individual fingers, eyebrows and speech), starts to max out the machine and stutter.

You must also set a polygon budget for the game, of, say, 100,000 polygons per level. This depends on PC performance, but it is a good amount for a fairly high-end PC; someone else mentioned that a top-end PC should be able to handle roughly 1.2 million polygons (triangles) per scene (on screen at once) at 60 fps, textured and lit by a single light source.
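The budgeting arithmetic above is easy to check with a tiny helper. This is a sketch with hypothetical function names; the 100,000-polygon level budget and the 40/40/10 split are the example figures quoted in these notes, not recommendations of our own.

```python
def allocate_budget(total_polys, shares):
    """Split a per-level polygon budget according to fractional shares,
    e.g. {"characters": 0.4}. Any share left over stays unallocated."""
    if sum(shares.values()) > 1.0 + 1e-9:
        raise ValueError("shares add up to more than 100% of the budget")
    return {name: round(total_polys * share) for name, share in shares.items()}

def fits(budget, count, polys_each):
    """True if `count` models of `polys_each` polygons fit within `budget`."""
    return count * polys_each <= budget

level = allocate_budget(100_000, {"characters": 0.4, "terrain": 0.4, "horizon": 0.1})
```

With this split, the 20 characters of 3,500 polygons each from the example above (70,000 polygons) would not fit in the 40,000-polygon character share, while 10 of them (35,000 polygons) would.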
The budget will depend on the game type, but you must ensure a balanced look (don't overspend on characters to the detriment of the surroundings). For example: 40% characters, 40% terrain detail and 10% horizon; or 90% level and 10% characters. You can also start by using half the number of polygons that you budget, then increase model polygon counts for extra detail if required. Backgrounds can also simply be JPEG images. You can also have several stages of LOD (level of detail): models with drastically reduced polygon counts that are used when things get smaller in screen space (further away).

Animation Frames
Model file size is very important. If you have a 1,000-polygon model but the file is 6 MB in size, your game will perform poorly; take a 2,500-polygon model that is 1 MB and it will run circles around the 6 MB model. Models that are inflated in frames and size by a factor of about 160 can shrink to around 250 KB when re-rigged with 500 frames of animation, and then make excellent NPCs.

Good animation is very important. People tend to add too many frames: a typical animation should be around 20 frames, not 100. DirectX interpolates between frames, and increasing the number of frames directly increases the size of the model. I re-rigged the FPSC thug model with my own skeleton. It had tons of bones and about 2,400 frames of animation, all of which were FPS-focused. I changed it to 500 frames of quality RPG animation and fewer bones, and it shrank to 250 KB. I can now have tons of NPCs on screen and still have a playable game. Moral of the story: polygon counts are important, but they are not the only factor to consider. Animate using as few "flattening joints"(?) as possible.

Texturing
You need to take into account file size, the number of textures (nothing beats one good UV-mapped texture), texture tiling (the more you tile a texture, the slower it will run), and the number of limbs (each limb gets a draw call of its own).
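The LOD idea mentioned in the budget discussion (switching to lower-polygon models as objects get smaller in screen space) can be sketched by estimating an object's on-screen height and comparing it against thresholds. This is a minimal sketch assuming a perspective camera with a vertical field of view and a bounding sphere per model; the pixel thresholds in the example are hypothetical tuning values.

```python
import math

def projected_height_px(radius, distance, fovy_deg, screen_height_px):
    """Approximate on-screen height (pixels) of a bounding sphere of `radius`
    at `distance` from a perspective camera with vertical FOV `fovy_deg`."""
    half_view = distance * math.tan(math.radians(fovy_deg) / 2.0)  # half the visible height at that distance
    return (2.0 * radius) * screen_height_px / (2.0 * half_view)

def select_lod(pixel_height, min_pixels):
    """min_pixels: descending screen-height thresholds. LOD i (0 = full detail)
    is used while the object is at least min_pixels[i] pixels tall; anything
    smaller than every threshold gets the last, coarsest level."""
    for level, limit in enumerate(min_pixels):
        if pixel_height >= limit:
            return level
    return len(min_pixels)
```

For example, a model with a 1-unit bounding radius seen 10 units away through a 90° field of view on a 600-pixel-tall screen is about 60 pixels tall, so with thresholds [200, 80, 30] it would use LOD 2.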
You must also choose your texture resolutions (512x512 or 1,024x1,024?). Texture site: www.mayang.com

Reasons for "Slow-down"
Remember that a game has more going on than just the visual aspect: you'll probably also have AI, networking, collision, dynamics (physics), shaders, etc. Remember also that you don't just have a polygon limit, but also a pixel limit and a memory limit. While 1024x1024 textures might look really nice and give your models high-resolution detail, they take up quite a bit of graphics and system memory, take longer to move between the two, and generally take more power to render because they eat up your pixel throughput. So using lower-resolution textures helps.

Also keep track of what each texture is doing. For example, shaders now generally use a diffuse map (colouring), a normal map (lighting) and a specular map (highlighting), so that's 3 textures for a single model. This uses a lot of graphics memory across multiple objects, especially with high-resolution models. It is also worth noting that "a single texture = multiple draw passes" over the entire model just to render a single material area (especially with shaders). By splitting your textures into several smaller ones, you can often get more detail, waste less space, and put each aspect in its own draw queue. This can cut down on the number of draws (the fewer, the better the performance), memory space and overall processing power needed.

Animation-wise, "fewer bones = less CPU power needed" and "more keyframes = less CPU power needed for interpolation"; the flip side is that the model requires more space in memory. A very good idea is to let a physics system take over the animation and only use basic keyframes.
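Using only a few basic keyframes works because the engine fills in the poses between them. The sketch below samples a sparse, time-indexed track with linear interpolation; it is a simplification, since real skeletal systems usually interpolate joint rotations with quaternion slerp (and the notes below suggest DBP may use a higher-order interpolation). The hip-swing track is a hypothetical example of a 4-pose walk cycle.

```python
from bisect import bisect_right

def sample(keyframes, t):
    """Sample a sparse, time-indexed keyframe track at time t (seconds).
    keyframes: list of (time, value) pairs sorted by time. Values between keys are
    linearly interpolated; times outside the track are clamped to the end keys."""
    times = [time for time, _ in keyframes]
    if t <= times[0]:
        return keyframes[0][1]
    if t >= times[-1]:
        return keyframes[-1][1]
    i = bisect_right(times, t)  # keyframes[i-1] is at or before t, keyframes[i] after it
    (t0, v0), (t1, v1) = keyframes[i - 1], keyframes[i]
    u = (t - t0) / (t1 - t0)
    return v0 + u * (v1 - v0)

# Hypothetical hip-swing angle (degrees) for a 1-second walk cycle:
# 4 poses plus a final key that closes the loop.
HIP_SWING = [(0.0, 0.0), (0.25, 30.0), (0.5, 0.0), (0.75, -30.0), (1.0, 0.0)]
```

Only five numbers are stored per joint here, yet `sample(HIP_SWING, t)` returns a smooth-enough angle at any time t, which is the memory-versus-CPU trade-off described above.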
For example, it is good to remember that DirectX skin-and-bone animation allows you to set a time index for each keyframe, so a walk animation only needs about 4 keyframes. Combined with a physics ragdoll and IK, you retain a fairly realistic walk at very little memory overhead, with more reliance on the CPU during the physics calculation cycle (if TGC ever makes Dark Physics multi-threaded, this would end up costing practically nothing over normal physics). If left to a simple interpolation system, though (I believe DBP uses bicubic interpolation), you'll need to set keyframes at regular intervals. Try to keep animations to around 6-15 keyframes per 30 seconds.

If you're using shaders, make sure you know their rough throughput. For example, anything using vertices (even just reading them) will mean you can only use half the polygons you originally wanted to. As far as the pixel side goes, remember that a shader runs again for every rendered frame, so its cost is multiplied by the frame rate. If you have a full-screen shader doing something like bloom at 800x600, you'll render 28.8 million pixels per second (at 60 fps) just doing that on everything; depending on how well it's written, it might take double that if it uses 2 passes for blur, plus some calculation time. The more complex the shader, the more calculation time. If you have a shadow shader on all 20 on-screen characters at once, and you're using shadow mapping, you'll probably output to a 512x512 texture per character so that it is smooth enough. So you'll be doing 512x512x20x60 (about 315 million pixels per second) when you have a budget of 1.1 billion per second, and you've just spent nearly a third of it on shadows alone. You can start to see how careful you have to be. Microsoft's PIX tool (shipped with the DirectX SDK) will go a long way toward showing you the bottlenecks and what is happening.
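The fill-rate arithmetic above generalizes to a one-line formula: width × height × frames per second × passes × number of targets. This sketch reproduces the bloom and shadow-map figures against the 1.1 billion pixels/second budget quoted above; the function name is our own.

```python
def fill_rate(width, height, fps, passes=1, count=1):
    """Pixels written per second by `count` render targets of width x height,
    each drawn `passes` times per frame at `fps` frames per second."""
    return width * height * fps * passes * count

BUDGET = 1_100_000_000  # pixels/second, the figure quoted above

bloom = fill_rate(800, 600, 60)                 # full-screen bloom, one pass
bloom_blur = fill_rate(800, 600, 60, passes=2)  # two passes for the blur
shadows = fill_rate(512, 512, 60, count=20)     # one 512x512 shadow map per character

for name, cost in [("bloom", bloom), ("bloom, 2 passes", bloom_blur), ("shadows", shadows)]:
    print(f"{name}: {cost:,} px/s = {cost / BUDGET:.1%} of budget")
```

The shadow maps alone come to 314,572,800 pixels per second, about 29% of the budget, before any real scene pixels are drawn.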
But if you think about 20 people on your screen with 10,000 polygons and 5 materials (= draw calls) each, you can calculate that it could go badly.

Terrain
As with anything, it depends on how much of the terrain is on screen at one time. As far as I know, you can only properly import models with fewer than about 65K vertices, but I too have dropped a 100K terrain in without issues. Someone else will have to tell you the exact limits. It would be best to break the terrain up into several pieces so Unity can cull it more efficiently. For me, a 100K+ terrain with a 4-layer shader on a GeForce FX 5200 (64 MB) in a dual G5 machine runs at around 25 fps. Considering my card is horrible, that's not too shabby. Edit: In my test level, I could also see about two-thirds of all that on screen at once.

Your image doesn't load, so I can't really say whether there is an issue with your terrain density, but I can advise you on what may work for you in terms of scale. There are a few settings you want to look at. "Num Patches X" and "Num Patches Y" increase the size of the terrain; you could then increase or decrease the "Draw Scale" in X or Y, which stretches or compacts the patches into a larger or smaller area. Another thing to consider is terrain LOD, which is controlled by "Min Tesselation Level" and "Max Tesselation Level". These values create a range of LOD levels: I think by default Min is set to 1 and Max to 4, which gives 4 LOD levels, with the furthest level using the largest patches (away from the camera) and the closest using the smallest. Finally, "Tesselation Distance Scale" controls the LOD size: if you increase it, LOD transitions occur closer to the camera, and the patches further away from the camera become larger.
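Breaking a large terrain into pieces helps both with per-mesh vertex limits and with culling, since each chunk gets its own bounding box. Our assumption (not stated in the forum post) is that the "about 65K" limit comes from 16-bit index buffers, which can address at most 65,536 vertices. The sketch below splits a square vertex grid into chunks that share border vertices, so neighbouring chunks at the same level of detail meet without cracks.

```python
MAX_VERTS_16BIT = 2 ** 16  # a 16-bit index buffer can address at most 65,536 vertices

def chunk_ranges(size, chunk_cells):
    """Split a size x size vertex grid into square chunks of chunk_cells x chunk_cells
    cells. Returns (x0, x1, y0, y1) inclusive vertex ranges. Neighbouring chunks
    share their border row/column of vertices, so chunks at the same level of
    detail meet without gaps."""
    starts = range(0, size - 1, chunk_cells)
    return [(x0, min(x0 + chunk_cells, size - 1), y0, min(y0 + chunk_cells, size - 1))
            for y0 in starts for x0 in starts]

def vertex_count(chunk):
    """Number of vertices in one (x0, x1, y0, y1) chunk."""
    x0, x1, y0, y1 = chunk
    return (x1 - x0 + 1) * (y1 - y0 + 1)
```

A 513x513 terrain (263,169 vertices) is far over the limit as one mesh, but `chunk_ranges(513, 128)` yields 16 chunks of 129x129 = 16,641 vertices each, and the engine can skip any chunk whose bounding box is outside the view.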
I hope this helps. As for the question of what a good terrain density is, I think it depends on what you are trying to do, which is why I have given you a rundown of these settings, so you can figure out what works best for your map.

Scene Design
Make the budget suit the point in the story and the level of detail you want in the game. Smaller environments mean a more concentrated area to fill with great graphics (more polygons to play with). A larger area means less frequent loading and more seamless gameplay, but may suffer from 'blandness'. Try something relatively unique; we've seen waterfalls and trees thousands of times already. Push the imagination: for example, if you're making an FPS game and you're at a factory, ask yourself: What is the factory mining? Who owns the factory? What's the history behind it? What would be the most exciting way to play through it? Is the factory functional? Would it be better if it were on fire, dilapidated, a secret hideout or about to collapse?

5) Terrain (Landscapes)

Heightmaps
In computer graphics, a heightmap or heightfield is a raster image used to store values such as surface elevation data for display in 3D computer graphics. A heightmap can be used in bump mapping to calculate where this 3D data would create shadow in a material, in displacement mapping to displace the actual geometric position of points over the textured surface, or for terrain, where the heightmap is converted into a 3D mesh. A heightmap contains one channel interpreted as a distance of displacement or “height” from the “floor” of a surface, sometimes visualized as the luma of a grayscale image, with black representing minimum height and white representing maximum height. When the map is rendered, the designer can specify the amount of displacement for each unit of the height channel, which corresponds to the “contrast” of the image.
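Converting a heightmap into a terrain mesh, as described above, amounts to placing one vertex per pixel and two triangles per grid cell. This is a minimal sketch assuming a Y-up coordinate system and a heightmap given as rows of 8-bit values; `height_scale` plays the role of the per-unit displacement ("contrast") the designer chooses, and the triangle winding is one arbitrary but consistent choice.

```python
def heightmap_to_mesh(heights, cell_size=1.0, height_scale=1.0):
    """Convert a heightmap (list of rows of 0..255 values) into a triangle mesh.
    Returns (vertices, triangles): vertices are (x, y, z) with Y up, triangles are
    index triples. height_scale is the displacement per unit of the height channel."""
    rows, cols = len(heights), len(heights[0])
    vertices = [(x * cell_size, heights[z][x] * height_scale, z * cell_size)
                for z in range(rows) for x in range(cols)]
    triangles = []
    for z in range(rows - 1):
        for x in range(cols - 1):
            i = z * cols + x  # index of this grid cell's corner vertex at (x, z)
            # Two triangles per cell, using the same winding for every cell.
            triangles.append((i, i + cols, i + 1))
            triangles.append((i + 1, i + cols, i + cols + 1))
    return vertices, triangles
```

A 3x3 heightmap yields 9 vertices and 8 triangles; an N x N map yields N² vertices and 2(N-1)² triangles, which is why heightmap resolution drives polygon count directly.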
Heightmaps can be stored by themselves in existing grayscale image formats, with or without specialized metadata, or in specialized file formats such as Daylon Leveller, GenesisIV and Terragen documents. Heightmaps are widely used in terrain rendering software and modern video games. They are an ideal way to store digital terrain elevations: compared to a regular polygonal mesh, they require substantially less memory for a given level of detail. In most newer games, the elements represent the height coordinate of polygons in a mesh. Heightmaps are usually complemented by texture maps that are then applied to the terrain in-game for extra detail and realism.

I'd use a grey-scale image and save it as 8-bit, or just use one colour channel, i.e. the red one. A third solution could be to convert the colour information into grey scale, using the following commonly used formula: grey = red * 0.3 + green * 0.59 + blue * 0.11. The drawback is that 8 bits only give 256 distinct heights, and creating higher-precision heightmaps in an image editor is not easy; but with a special editor you could use all 32 bits of a pixel and store up to 4,294,967,296 distinct heights per heightmap. You'd read each pixel as an int or uint and define its height as h = i * scaling. For example, a pixel value of 4500 with a scaling of 0.1 m gives 4500 * 0.1 m = 450.0 m; in an ARGB layout, 4500 is stored as 17 in the green channel and 148 in the blue channel.

The shapes of the terrains that you can create with the New Terrain Tool are defined by height-maps. A height-map is usually a square gray-scale image whose black pixels represent the lowest heights (0%) and whose fully white pixels represent the highest heights (100%). The gray values in between represent intermediate heights: the brighter a pixel, the higher the resulting terrain at that spot. CaWE and Cafu support height-maps in all the image file formats that are also supported for textures (jpg, png, tga and bmp), as well as Terragen (ter) and portable graymap (pgm) files.
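The grey-scale formula and the 32-bit ARGB height encoding above can be checked directly. The 0.3/0.59/0.11 weights are a rounded form of the classic Rec. 601 luma weights. This sketch treats a pixel's four bytes as one unsigned 32-bit integer, with alpha as the most significant byte; the function names are hypothetical.

```python
def grey(r, g, b):
    """Collapse an RGB colour to one grey value with the common 0.3/0.59/0.11 weights."""
    return r * 0.3 + g * 0.59 + b * 0.11

def pack_argb(value):
    """Split a 32-bit unsigned height sample into (a, r, g, b) bytes of an ARGB pixel."""
    if not 0 <= value <= 0xFFFFFFFF:
        raise ValueError("height sample must fit in 32 bits")
    return ((value >> 24) & 0xFF, (value >> 16) & 0xFF, (value >> 8) & 0xFF, value & 0xFF)

def unpack_argb(a, r, g, b):
    """Reassemble the 32-bit unsigned integer from its ARGB bytes."""
    return (a << 24) | (r << 16) | (g << 8) | b

def height_m(a, r, g, b, scaling):
    """Height in metres: h = i * scaling, where i is the pixel read as a uint."""
    return unpack_argb(a, r, g, b) * scaling
```

`pack_argb(4500)` gives (0, 0, 17, 148), matching the example above, and `height_m(0, 0, 17, 148, 0.1)` gives 450 m (up to floating-point rounding).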
The image file formats usually have a precision of 8 BPP (bits per pixel), while the ter and pgm formats have a much higher precision of 16 BPP and are therefore the preferred choice for height-maps. Height-maps must also have side lengths of (2^n+1) x (2^n+1) pixels, where n is an integer between 1 and 15. Examples of typical height-map dimensions are 129x129, 257x257, 513x513 and 1025x1025.

Heightmap Editors
Terragen: terrain renderer. Import your heightmap and render a texture using its orthographic camera mode, which you can then apply to the entire terrain.
Picogen: terrain renderer and heightmap creation tool.
OpenTTD scenario editor.
AC3D.
EarthSculptor: edit or create a terrain, then generate a heightmap.
Myth OutcastGrome: advanced outdoor editor that uses multiple layers, brushes and procedural generation (fractals, erosion) to operate on terrain heightmaps.
L3DT: has tools to create a terrain, then generates a heightmap from the designed terrain.
Unreal Development Kit: uses heightmaps to create terrain as of its June 2011 build.
CaWE: the obvious and best choice for editing the height-maps of terrain entities.
L3DT (Free/Commercial): under development for many years, Large 3D Terrain is an application made for game developers. The basic version is free, but there is also a Pro version that costs about $34 for an indie license. http://www.bundysoft.com/L3DT/
Earth Sculptor (Commercial/outdated): a small application that works fine and allows decent manipulation. Unfortunately its development stopped in 2008, but it is still worth noting. http://www.earthsculptor.com/index.htm
Gimp (Freeware): probably the most common freeware 2D application, which mimics the commercial Adobe Photoshop in many ways and goes beyond it in some. It has matured over the years into a reliable 2D package and should be considered a professional tool. Using filters and custom brushes, it is a very good way to start a decent heightmap.
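Since 16-bit PGM files are the preferred height-map format in the CaWE notes above, here is a sketch that reads a binary (P5) PGM and checks the (2^n+1) side-length rule. It follows the Netpbm convention that samples take 2 bytes, most significant byte first, when the maximum value exceeds 255; the function names are hypothetical and the parser handles only the single-image case.

```python
def is_valid_side(side):
    """True if side == 2**n + 1 for an integer n in 1..15 (e.g. 129, 257, 513, 1025)."""
    m = side - 1
    return 2 <= m <= 2 ** 15 and (m & (m - 1)) == 0

def read_pgm(data):
    """Parse a binary (P5) PGM image from bytes. Returns (width, height, maxval, rows).
    Samples are 2 bytes, most significant byte first, when maxval > 255, else 1 byte."""
    tokens, pos = [], 0
    while len(tokens) < 4:  # magic number, width, height, maxval
        while pos < len(data) and data[pos:pos + 1].isspace():
            pos += 1
        if data[pos:pos + 1] == b"#":  # a header comment runs to the end of the line
            while pos < len(data) and data[pos:pos + 1] != b"\n":
                pos += 1
            continue
        start = pos
        while pos < len(data) and not data[pos:pos + 1].isspace():
            pos += 1
        if start == pos:
            raise ValueError("truncated PGM header")
        tokens.append(data[start:pos])
    if tokens[0] != b"P5":
        raise ValueError("not a binary PGM (P5) file")
    width, height, maxval = (int(t) for t in tokens[1:])
    pos += 1  # a single whitespace byte separates the header from the samples
    size = 2 if maxval > 255 else 1
    if len(data) < pos + width * height * size:
        raise ValueError("truncated PGM raster")
    rows = []
    for y in range(height):
        row = []
        for x in range(width):
            i = pos + (y * width + x) * size
            row.append(int.from_bytes(data[i:i + size], "big"))
        rows.append(row)
    return width, height, maxval, rows
```

After loading, checking `is_valid_side(width) and width == height` rejects files such as 256x256 or 1024x1024 before they reach the terrain tool.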
In CaWE, it is possible to edit and modify height-maps directly in the 3D view, in a “live” manner that naturally and immediately shows the interactions with other elements of the map, such as brush-based buildings and walls, other entities, etc. Note that although CaWE is the ideal place for height-map editing and modification, it is not necessarily the best place for height-map creation. Although that is (and will remain) possible too, programs that specialize in height-map creation may do a much better job of creating the initial height-map, especially if very large, natural-looking shapes are desired. In such cases, you would use another program to create the initial height-map, add it to your map in “rough” form using the New Terrain Tool, and then continue to edit the fine details in CaWE, for example the alignment with buildings, other brushes, etc. You may want to have a look at the [ Link to tutorial about how the TechDemo terrain was made. ] tutorial in this regard.

Terrain Texturing
Texturing in computer graphics is used to add detail, colour and effects to otherwise plain geometry. Textures are applied to three-dimensional terrain meshes to create the illusion of a rich landscape: grassy plains, sandy shores, snowy mountain peaks and rocky cliff faces. The first terrain texturing techniques used 2D tiles: each land type had its own set of tiles (grass, sand, mud, snow), and these tiles were textured per quad at the relevant positions on the terrain mesh. Harsh seams between different tiles were visible; the solution was to create a set of blending tiles to use between areas of adjoining texture types. Later and more effective techniques, such as the one outlined by Bloom, C. (2000), blend textures using hardware multi-texturing to create seamless, unique blending between the different textures applied to the terrain mesh.
Many of these techniques use multi-pass rendering to achieve their desired effects, which is not well suited to modern programmable GPU architectures. This article extends the article by Glasser, N. (2005) and describes a simple and fast way of implementing terrain texture blending on modern programmable graphics hardware. Terrain texture blending is achieved by overlaying different textures on top of each other depending on the height of the terrain mesh, or on another attribute such as the gradient of the terrain at that particular point. This overlaying is achieved using alpha blending: a base texture is first applied over the whole chunk, with further textures blended on top of the base texture according to their alpha maps. Figure 1 shows this texture combination.

Splatting is a technique for texturing a terrain using high-resolution, localized tiling textures that transition nonlinearly through the use of an alpha blend map (sometimes via mesh vertex colouring, or RGB maps). There is a tradition of "texture layers" that are alpha blended together. Splatting requires very little CPU work, but relies heavily on the graphics card, which is asked to render each triangle many times to combine textures in the frame buffer, making heavy use of fill rate and triangle rate.

Possibly break up the tiling of one type of surface material by having several variations: instead of just dirt and grass, you have dirt, dirt 2, a dirt/grass mix, grass 1 and grass 2. Then use a combination of masks and vertex colours to control blending.

Company of Heroes: basically standard vertex-based texture blending, plus dynamically created detail textures overlaid on top. It allows you to define polygons (using splines or just regular quads) and assign separate textures to each. These polygons exist only in 2D space (X, Z) and are associated with each world tile (which contains 33x33 cells).
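The height- and gradient-driven blending described above (a base texture, with further layers alpha-blended on top) can be sketched per pixel as follows. This is our own illustration, not the code from the cited article: the colours stand in for texture samples, and the height band (60 to 80) and slope thresholds are hypothetical tuning values. In a real implementation this logic would run in a pixel shader.

```python
def smoothstep(edge0, edge1, x):
    """Smooth 0->1 ramp between edge0 and edge1 (same formula as the GLSL built-in)."""
    t = min(max((x - edge0) / (edge1 - edge0), 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)

def blend_layers(base_rgb, layers):
    """Alpha-blend (rgb, alpha) layers over the base in order:
    result = result * (1 - alpha) + layer * alpha."""
    out = list(base_rgb)
    for rgb, alpha in layers:
        out = [o * (1.0 - alpha) + c * alpha for o, c in zip(out, rgb)]
    return tuple(out)

# Stand-ins for texture samples (hypothetical flat colours).
GRASS, ROCK, SNOW = (0.2, 0.6, 0.2), (0.5, 0.5, 0.5), (1.0, 1.0, 1.0)

def terrain_color(height, normal_y):
    """normal_y: up component of the unit surface normal (1 = flat, 0 = vertical)."""
    rock_alpha = 1.0 - smoothstep(0.6, 0.8, normal_y)  # steep slopes become rock
    snow_alpha = smoothstep(60.0, 80.0, height)        # high ground becomes snow
    return blend_layers(GRASS, [(ROCK, rock_alpha), (SNOW, snow_alpha)])
```

Flat low ground returns pure grass, steep faces return rock, and anything above height 80 is fully covered by snow, with smooth transitions in between rather than hard tile seams.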
During runtime, these polygons are rendered to a 1024x1024 texture (blended with alpha), and then this newly created texture is blended on top. (The id Software/Splash Damage MegaTexture technique is apparently awesome, but expensive?) It looks to me like they use vertex colour blending to mix tiles together, along with a decal system and possibly some floating geometry. Company of Heroes' texture transitions look fairly soft overall, probably indicating vertex colour blends.

ETM Ogre add-in (looks a bit like the NeoAxis Map Editor?): supports splatting with up to 12 textures in a single pass, is very easy to use, and offers terrain shaping, editing, etc. I have been using it for quite a while and am quite happy with its features and performance. Homepage: http://www.oddbeat.de/wiki/etm Ogre Wiki: http://www.ogre3d.org/wiki/index.php/ETM Ogre Addons Board: http://www.ogre3d.org/phpBB2addons/viewforum.php?f=16

Put some overlays on top of the terrain and blend them nicely, without actually baking them into the texture. If your terrain is flat, you don't have to bake them into anything.

Another method seems to be to use a single big coverage texture stretched over the entire map, overlaid with a 4-channel (RGBA) splat map dictating the location and strength of 4 splat textures (generally 256x256 or 512x512 for a good-sized terrain). These are repeated over the whole terrain many times, but are blended via the splat map in the shader (e.g. grass, mud/dirt, rock, snow). You can also use the vertex colour to store the blend percentages for every texture (R for grass, G for mud, B for rock and A for snow, or any other arrangement). My coverage texture has no lighting on it because I do lighting elsewhere, but if you weren't changing the terrain's lighting at runtime, you'd pre-render the lighting into the texture.

For texturing models, you need to UV map them, then paint the textures for the UVs in a paint program like Photoshop, Gimp or Paint Shop Pro. I use BodyPaint or DeepPaint for painting directly on the models.
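The RGBA splat-map method above can be sketched for a single pixel: the splat map is stretched once over the terrain, while the four layer textures are tiled many times and weighted by the splat channels. This is a minimal CPU-side sketch with nearest-neighbour lookups; the textures are tiny lists of RGB tuples, and normalizing the weights to sum to 1 (falling back to the first layer when all channels are zero) is our own design choice.

```python
import math

def sample_tiled(texture, u, v):
    """Nearest-neighbour lookup with wrap-around tiling.
    texture is a 2D list of RGB tuples; u and v are measured in texture repeats."""
    h, w = len(texture), len(texture[0])
    return texture[math.floor(v * h) % h][math.floor(u * w) % w]

def splat(splat_rgba, layers, u, v, repeats):
    """Blend four tiled layer textures at terrain coordinates (u, v) in [0, 1].
    splat_rgba is the splat-map texel for this point (the splat map itself is
    stretched once over the whole terrain); each layer repeats `repeats` times."""
    total = sum(splat_rgba)
    weights = [c / total for c in splat_rgba] if total else [1.0, 0.0, 0.0, 0.0]
    color = [0.0, 0.0, 0.0]
    for weight, tex in zip(weights, layers):
        texel = sample_tiled(tex, u * repeats, v * repeats)
        color = [c + weight * t for c, t in zip(color, texel)]
    return tuple(color)

# Hypothetical 1x1 "textures": grass, mud, rock, snow (R, G, B, A channels of the splat map).
LAYERS = [[[(0.0, 1.0, 0.0)]], [[(0.5, 0.3, 0.1)]], [[(0.5, 0.5, 0.5)]], [[(1.0, 1.0, 1.0)]]]
```

A splat texel of (255, 0, 0, 0) gives pure grass, while (128, 0, 0, 128) gives an even grass/snow mix, regardless of how many times the layer textures repeat.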
For terrain I use 3D World Studio, which paints directly on the terrain with your own scanned textures or from .jpg files; get the demo and test it out.

That implementation also textures the mesh with a 512x512 'colormap' and a 512x512 detail texture. The colormap is basically a huge texture stretched over the entire mesh. This yields blurry colouring on the terrain: you can distinguish rock, dirt, grass, etc. from each other, but it is extremely blurry. So I tile the detail texture over the entire terrain. I am not using multitexturing at the moment, so I am doing two passes.

Methods of generating a terrain texture:
Tiling
1. Vertex height mapping: the texture applied is selected using the vertex height information.
2. Vertex normal mapping: the texture applied is selected using the vertex normal (angle from horizontal) information. This is related to, but not the same as, tri-planar texturing, which projects textures along the three world axes and blends them according to the normal; tri-planar texturing almost completely removes texture stretching on steep slopes.
3. Texture overlay map: the overlay map covers the entire terrain and specifies how to blend between textures.
4. Alpha blend splatting: several textures can be specified, each one assigned its own layer (or channel). These textures are then painted (rendered) onto the terrain according to the alpha specified for a particular piece of terrain (requires an alpha map?).

For adding terrain feature textures (e.g. roads, or special features such as detritus around a camp fire):
1. Decals: 2D textures painted onto the terrain vertices.
2. Splines: a flat polygon mesh (floating geometry) that can be textured and overlaid onto the terrain mesh (the edges can be curved down so they are underneath ground level, or the texture can have transparent sides to blend into the surroundings). You need to manually mould the spline to the terrain (or vice versa?).
3. If the features are numerous enough, you can use a texture layer, although there is no way to add directional information without difficulty.
So, in conclusion, it seems that most games today use texture splatting and pixel shaders for their terrain, laying down multiple layers of blended textures, then spline overlays for roads etc., and finally decals for special localized features. Simple vegetation and feature objects can then be scattered about to give the final look.

PolyBoost's tool for laying floating geometry onto a terrain mesh? MegaTexture still amazes me, though: you get great-looking terrains with near 100% unique textures.

You can use splines, since they generate UVs for you already. Though if you decide to go the PolyBoost route, Max 2009+ has something called spline mapping: if you make a spline down the center of your "road" and apply an Unwrap to the mesh, you can pick the spline as a guideline for the UV map. It works amazingly well for things like that. When you draw splines on top of a terrain and then use the Sweep modifier (I don't know another way), the place where the road sticks to the terrain is in the middle (that's rather obvious), which causes the borders to sometimes be 'buried' in the ground. That wouldn't be the case with the strip-laying tool. Though using automatic mapping coordinates with spline/sweep may end up being an unbeatable combination. Look for a checkbox that says "Generate Mapping Coords" under Rendering in the spline menu; it also exists in Sweep. Then set the spline to renderable and set it to rectangular. Probably apply an Edit Poly on top and delete the bottom part of the geometry so you're left with just a plane. You can also use a Conform compound object, but it will smash that object completely flat onto the surface, so it works best on flat objects; if you need height afterwards, you can use the Shell modifier. You could also use a spline painter script to paint a spline on the surface of the terrain; it should follow the surface pretty closely. Neil Blevins has a great one in his pack of scripts.
http://www.neilblevins.com/soulburnscripts/soulburnscripts.htm

This is a great question. The main focus of my work is creating realistic terrain for exterior scenes, and while learning how to create good terrain, I've run into obstacles similar to yours. There are a couple of different ways you can go about texturing terrain for believable results. One, which is the way you are currently using, is to put a high-res bitmap on a ground plane. I applaud your use of a distance blend to simulate a realistic perspective. However, I believe this is only part of what you need to create a realistic terrain. You are on the right track, but I would use the bitmap as a base to build upon. If you want the ground to look more three-dimensional, here are a few tips that might help:
- In 3ds Max you can use Composite maps to layer different textures together. In your diffuse slot you can layer your bitmap with "Noise" textures to break up the tiling effect, and then blend them together.
- You can apply this same composite principle to a bump map as well. This will help break up the tiling texture, and it can also give your ground a bit more dimension. I would look at using a "Cellular" map for cracks and the like.
- If you really want to pull things up and out, look into using a displacement map or a normal map. A displacement map will subdivide your model based on a texture and pull it in the normal direction of the mesh. This is good for creating a more organic environment; however, if your scene already has a high poly count, the subdivisions might overload your memory. If you don't like that option, try sculpting some organic curves and bumps into your mesh to make it uneven.
- You could also add to the terrain by modelling some quick rocks and debris as well.
Here are some links that might help explain some of these points a little better:
Modeling a Natural Environment 1 - http://max.cgcookie.com/2009/11/06/modeling-an-naturalenvironment/
Modeling a Natural Environment 2 - http://max.cgcookie.com/2009/12/11/modeling-a-naturalenvironment-part-2/
Creating a Rock Shader - http://max.cgcookie.com/2009/08/31/creating-a-rock-shader/
I hope this helps.

1. The tiling effect is a common problem in all 3D software if you're working with large-scale objects like terrain. There are several solutions: you can either use plugin software that makes terrain and corresponding textures (like Vue or Terra, though I don't think there are any for SketchUp), or make your own Mix maps and Blend materials. Basically it goes like this: you make two or more materials to use in your main Blend material (for example, sand as the basic material and something else to mix it with: gravel, grass, mud or similar). After that you need a Mix map: a black-and-white texture that will separate these materials in an irregular manner. You can use either a large-scale Noise map, or a Falloff map set to the Z axis to function as a height map. Finally, you'll have to spend some time playing with the settings to get the right result. Experience is crucial here, but you can also look for tutorials online to help you (I remember seeing a good terrain texturing tutorial online some time ago, but I wasn't able to find it). Hope it helps.

6) 3D Animation File Formats
There seem to be two main methods of 3D game animation: file formats that support 3D animation that can be run by the game engine, and formats that import a sequence of static 3D models that the game engine runs in sequence (similar to sub-images in a sprite).

3D animation formats:
.md2: (Quake II engine character animation)
.3ds: (rigid objects)
.an8: (Anim8or)
COLLADA (.dae): defines an XML-based schema to make it easy to transport 3D assets between applications.
COLLADA enables diverse 3D authoring and content-processing tools to be combined into a production pipeline. The intermediate language provides comprehensive encoding of visual scenes, including geometry, shaders and effects, physics, animation, kinematics, and even multiple version representations of the same asset. COLLADA defines an open-standard XML schema for exchanging digital assets among various graphics applications; COLLADA documents that describe digital assets are XML files. It is designed to be useful to the widest possible audience (authoring tools: 3ds Max, Adobe Photoshop, Blender, Carrara, LightWave, Maya, Softimage XSI; engines: C4, Irrlicht, Unreal, Torque, Unity).

As of version 1.4, physics support was added to the COLLADA standard. The goal is to allow content creators to define various physical attributes in visual scenes. For example, one can define surface material properties such as friction. Furthermore, content creators can define the physical attributes of the objects in the scene by defining the rigid bodies that should be linked to the visual representations. More features include support for ragdolls, collision volumes, physical constraints between physical objects, and global physical properties such as gravity. Physics middleware products that support this standard include the Bullet Physics Library, Open Dynamics Engine, PAL and NVIDIA's PhysX.

3D model formats:
.obj: dsf
.d3d: sdf
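Because COLLADA documents are plain XML, standard XML tooling can read them. This sketch uses Python's built-in ElementTree to report the document's up axis and each geometry's vertex positions, following the `<vertices>` POSITION input to its `<source>`. It assumes the COLLADA 1.4.x namespace and positions stored in a `float_array`; real exporter output often also contains normals, texture coordinates and several primitive blocks, which are ignored here. The embedded sample file is a hand-written one-triangle example, not output from any particular tool.

```python
import xml.etree.ElementTree as ET

NS = {"c": "http://www.collada.org/2005/11/COLLADASchema"}  # COLLADA 1.4.x namespace

def summarize_collada(xml_text):
    """Return (up_axis, {geometry id: [position tuples]}) for a COLLADA document."""
    root = ET.fromstring(xml_text)
    up_axis = root.findtext("c:asset/c:up_axis", default="Y_UP", namespaces=NS)
    geometries = {}
    for geom in root.findall("c:library_geometries/c:geometry", NS):
        mesh = geom.find("c:mesh", NS)
        # <vertices> names the <source> that holds the POSITION data.
        pos_input = mesh.find("c:vertices/c:input[@semantic='POSITION']", NS)
        source_id = pos_input.get("source").lstrip("#")
        source = mesh.find(f"c:source[@id='{source_id}']", NS)
        floats = [float(x) for x in source.findtext("c:float_array", namespaces=NS).split()]
        accessor = source.find("c:technique_common/c:accessor", NS)
        stride = int(accessor.get("stride", "1"))
        geometries[geom.get("id")] = [tuple(floats[i:i + stride])
                                      for i in range(0, len(floats), stride)]
    return up_axis, geometries

SAMPLE = """<COLLADA xmlns="http://www.collada.org/2005/11/COLLADASchema" version="1.4.1">
  <asset><up_axis>Z_UP</up_axis></asset>
  <library_geometries>
    <geometry id="tri-mesh" name="tri">
      <mesh>
        <source id="tri-positions">
          <float_array id="tri-positions-array" count="9">0 0 0 1 0 0 0 1 0</float_array>
          <technique_common>
            <accessor source="#tri-positions-array" count="3" stride="3">
              <param name="X" type="float"/><param name="Y" type="float"/><param name="Z" type="float"/>
            </accessor>
          </technique_common>
        </source>
        <vertices id="tri-vertices">
          <input semantic="POSITION" source="#tri-positions"/>
        </vertices>
        <triangles count="1">
          <input semantic="VERTEX" source="#tri-vertices" offset="0"/>
          <p>0 1 2</p>
        </triangles>
      </mesh>
    </geometry>
  </library_geometries>
</COLLADA>"""
```

Calling `summarize_collada(SAMPLE)` returns the axis convention ("Z_UP") alongside the triangle's three positions; checking the up axis matters because authoring tools and engines disagree on whether Y or Z points up.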