DennisProposedOutline

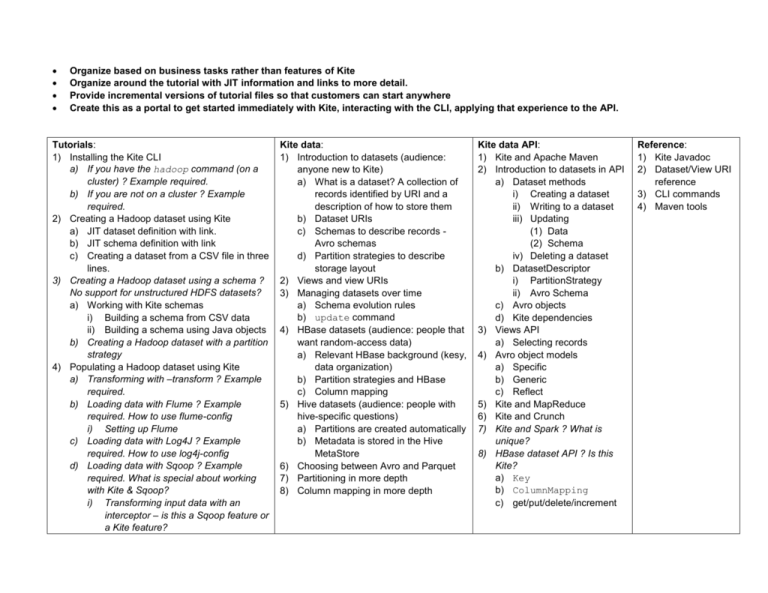

Organize based on business tasks rather than features of Kite. Organize around the tutorial, with just-in-time (JIT) information and links to more detail. Provide incremental versions of tutorial files so that customers can start anywhere. Create this as a portal to get started immediately with Kite, interacting with the CLI, then applying that experience to the API.

Tutorials:

1) Installing the Kite CLI
   a) If you have the hadoop command (on a cluster) [Example required.]
   b) If you are not on a cluster [Example required.]
2) Creating a Hadoop dataset using Kite
   a) JIT dataset definition with link
   b) JIT schema definition with link
   c) Creating a dataset from a CSV file in three lines
3) Creating a Hadoop dataset using a schema [No support for unstructured HDFS datasets?]
   a) Working with Kite schemas
      i) Building a schema from CSV data
      ii) Building a schema using Java objects
   b) Creating a Hadoop dataset with a partition strategy
4) Populating a Hadoop dataset using Kite
   a) Transforming with --transform [Example required.]
   b) Loading data with Flume [Example required. How to use flume-config]
      i) Setting up Flume
   c) Loading data with Log4J [Example required. How to use log4j-config]
   d) Loading data with Sqoop [Example required. What is special about working with Kite & Sqoop?]
      i) Transforming input data with an interceptor [Is this a Sqoop feature or a Kite feature?]

Kite data:

1) Introduction to datasets (audience: anyone new to Kite)
   a) What is a dataset?
      A collection of records identified by URI and a description of how to store them
   b) Dataset URIs
   c) Schemas to describe records
      Avro schemas
   d) Partition strategies to describe storage layout
2) Views and view URIs
3) Managing datasets over time
   a) Schema evolution rules
   b) update command
4) HBase datasets (audience: people who want random-access data)
   a) Relevant HBase background (keys, data organization)
   b) Partition strategies and HBase
   c) Column mapping
5) Hive datasets (audience: people with Hive-specific questions)
   a) Partitions are created automatically
   b) Metadata is stored in the Hive MetaStore
6) Choosing between Avro and Parquet
7) Partitioning in more depth
8) Column mapping in more depth

Kite data API:

1) Kite and Apache Maven
2) Introduction to datasets in the API
   a) Dataset methods
      i) Creating a dataset
      ii) Writing to a dataset
      iii) Updating
         (1) Data
         (2) Schema
      iv) Deleting a dataset
   b) DatasetDescriptor
      i) PartitionStrategy
      ii) Avro Schema
   c) Avro objects
   d) Kite dependencies
3) Views API
   a) Selecting records
4) Avro object models
   a) Specific
   b) Generic
   c) Reflect
5) Kite and MapReduce
6) Kite and Crunch
7) Kite and Spark [What is unique?]
8) HBase dataset API [Is this Kite?]
   a) Key
   b) ColumnMapping
   c) get/put/delete/increment

Reference:

1) Kite Javadoc
2) Dataset/View URI reference
3) CLI commands
4) Maven tools
5) Investigating/Interrogating a Hadoop dataset using Kite
   a) Kite and Impala [What is unique about Kite when working with Impala?]
   b) HBase get/put [Example required. What is unique about Kite with HBase?]
   c) Bulk-processing data
      i) Using Kite with the CrunchDatasets API
      ii) Using Kite with the org.kitesdk.data.mapreduce API
      iii) Kite and Spark [What is unique to Kite?]
      iv) Kite and Hive [What is unique to Kite?]
6) Updating a Hadoop dataset using Kite
   a) Adding records to a Hadoop dataset using Kite
   b) Updating a dataset schema using Kite
      i) Special considerations when using Flume [Example required.]