Bananas in pyjamas?

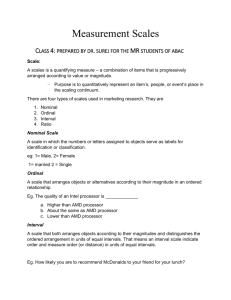

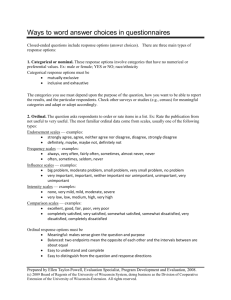

Research News, September 2010

Statistics: Bananas in pyjamas? No, not B1 and B2, rather L1 and L2

In the July edition of Research News, Ingrid Burrowes wrote of ‘our complex language landscape’ and espoused the view that to ‘truly engage with Australia's population necessitates speaking a multitude of languages’ and further that ‘market researchers must take heed of (the associated) trends’.

While attending the INFORMS Marketing Science Conference in Köln, Germany, last June, amongst some 600 (!) individual presentations, I came across one that dealt with what would seem to be an important aspect of this topic.

Stefano Puntoni and his colleagues at the Rotterdam School of Management, Erasmus University, reflect on the fact that, relative to a few decades ago, a much larger share of marketing research data are now collected from ‘multilingual or multicultural’ respondents. And although marketing research agencies often translate surveys into respondents' native (L1) language, there are many instances in which data are collected in a respondent's second (L2) language (typically English).

Hardly a path-breaking finding! However, what the authors have also established, through the conduct of nine separate investigative studies covering more than 1,000 respondents, is a strong and systematic tendency for individuals to report more intense emotions when answering questions using L2 rating scales than when using L1 rating scales. That is, L2 rating scales yield more extreme responses than L1 rating scales in the case of emotion-laden items. This phenomenon is known as the ‘Anchor Contraction Effect’ (ACE).

A couple of examples taken from the paper serve to illustrate:

- Amazon.com offers customers the opportunity to rate any product using the emotional statements ‘I hate it’ and ‘I love it’. Regardless of their native language, people around the world contribute ratings to the website, and the status of the customer (i.e. whether L1 or L2) may exert a significant influence on such ratings.
- Hotels and other sites visited by international travellers typically field self-completion customer satisfaction questionnaires. The emotional anchor point ‘Happy’ (or similar) is often employed in the response scales used. In such cases, ACE could lead foreign (L2) visitors to express more positive opinions than local (L1) residents.

How should we correct for this introduced bias? Well, one approach (which one well-known and respected academic told me was his practice) might be to simply exclude from the data any respondent who was too overtly L2, which (depending on the context) some might justifiably view as being a somewhat extreme remedy. Another obvious approach, offered by Puntoni et al., would be to make sure all respondents answer items in their native language, although the authors acknowledge that providing L1 rating scales to everyone could be too costly or impractical.

In fact, the authors claim that ACE can be accounted for a priori with ‘corrective techniques’. Two approaches that they claim to be ‘simple’ are based on the ‘concomitant presentation of verbal and non-verbal cues’:

- Emoticons can be used when measuring basic emotions that can easily be portrayed with stylised facial expressions, and are particularly appropriate in online interview settings, and also with children, those with low L2 proficiency, and those with low literacy.
- Colours are claimed to be especially suitable in the case of abstract or complex emotional concepts (e.g. by placing visual cues, such as colour dots of increasing intensity, under the response points along with the verbal labels), although they may be vulnerable to cross-cultural differences in interpretation.

If neither of these approaches is deemed suitable (or if you think they are rubbish), the authors suggest that researchers can adopt an a posteriori approach and use information about respondents' L1 as a control variable (e.g. adding a dummy variable in regression models), but even this has drawbacks.

Whether or not you agree with any of the above (and I would urge you to read the full paper* yourselves), it is clear that a respondent's L1/L2 status will impact on how (s)he responds. It would seem to behove us as researchers to be cognisant of the ACE impact and either control it at the point of data collection, or take it into account in our analysis (e.g. via separate analyses for the L1 and L2 subgroups in the sample).

Scott MacLean, Nulink Analytics

Footnote: Whilst it is easy to say that academics can have a somewhat simplified view of the real world, at least they do have the time to look at issues that we practitioners sometimes feel inclined or compelled to gloss over. And whilst academic research can be a little unrealistic (e.g. to the point of basing findings on convenience samples of at most a few hundred paid students), it often raises issues that subsequently hit the research mainstream and help us in what we do in our day-to-day work.

* The full text of the paper can be found here (free to download but not to print): http://www.marketingpower.com/AboutAMA/Pages/AMA Publications/AMA Journals/Journal of Marketing Research/JMRForthcomingArticles.aspx
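
For readers who want to see what the a posteriori remedy mentioned above (a dummy variable in a regression model) might look like in practice, here is a minimal sketch in plain Python. Everything in it is an assumption made for illustration and none of it comes from the Puntoni et al. paper: the eight respondents, the `service` driver score, the `is_l2` flag and the ratings are all invented, and the ratings were deliberately built without noise so that the L2 shift is exactly 0.8 scale points. The `ols` helper is a hypothetical hand-rolled routine, not a library function.

```python
def ols(X, y):
    """Ordinary least squares via the normal equations (X'X) b = X'y."""
    k = len(X[0])
    # Build the augmented matrix [X'X | X'y].
    A = [
        [sum(row[i] * row[j] for row in X) for j in range(k)]
        + [sum(row[i] * yi for row, yi in zip(X, y))]
        for i in range(k)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]


# Invented data: (service score, is_l2 dummy, emotional rating).
# is_l2 = 1 means the respondent answered in a second language (assumption).
# Ratings follow 1.0 + 0.5 * service + 0.8 * is_l2 exactly, with no noise.
respondents = [
    (1, 0, 1.5), (2, 0, 2.0), (3, 0, 2.5), (4, 0, 3.0),
    (2, 1, 2.8), (3, 1, 3.3), (4, 1, 3.8), (5, 1, 4.3),
]

# Design matrix: intercept, service score, L2 dummy.
X = [[1.0, service, is_l2] for service, is_l2, _ in respondents]
y = [rating for _, _, rating in respondents]

b_const, b_service, b_l2 = ols(X, y)
print(f"intercept={b_const:.2f}  service={b_service:.2f}  L2 shift={b_l2:.2f}")
```

In this sketch `b_l2` estimates how much higher L2 respondents rate once the service score is held constant. With real survey data the estimate would carry sampling error, and the drawbacks the authors mention would still apply.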