Exam 2 answers

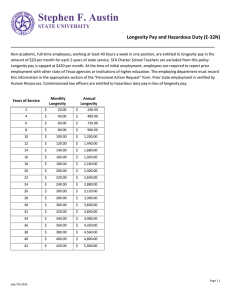

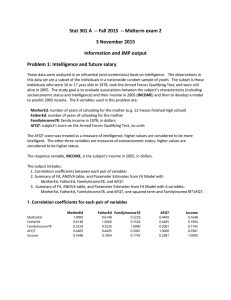

Stat 301: Midterm 2 answers and notes

1. Intelligence and future salary

a. Second plot in the row.
Notes: r for income and AFQT is 0.298, so you’re looking for a weak to moderate positive relationship.

b. 0.088
Notes: the r² (r-squared) for the simple linear regression is the square of the correlation coefficient: 0.088 = (0.298)².

c. F, T, I, F, F, T
Notes:
- The p-value for the test of r = 0 is < 0.0001, so there is strong evidence of a linear association.
- The estimated r is not 0, so the estimated slope in the simple linear regression is not 0.
- Remember the four Anscombe data sets. Same r, but in 1, the line fits; in 2, the quadratic fits better.
- As I said in lecture, I hate r² because it means much less than folks believe it does.
- The regression may be useful (e.g., estimating β2) even though observations are very variable (which will give you a small r²).
- Estimating the population correlation requires that the observations be a simple random sample from the population. That is an assumption about both X and Y. Regression only makes assumptions about the errors (Y given X). The X values can be arbitrary.

d. Increasing family income in 1978 by 1 increases average salary in 2005 by 0.056, when all other variables are held constant.
Notes: various other wordings accepted, but the idea of ‘all others held constant’ was crucial. Because this is an observational study, “increasing” really means comparing two youth that have family incomes in 1978 that differ by 1.

e. 126.09. Computed as β for FatherEd + β for MotherEd.

f. No. You expect the simple and multiple linear regression slopes to differ because they estimate different things.

g. p < 0.0001. At least one of the four regression slopes is not 0.
Notes: An incorrect conclusion is that ALL slopes are not 0.

h. Not possible. The rMSE is 9255, so the sd of a predicted observation is always > 9255.
Notes: the rMSE is the s in the formula for the sd of a predicted observation.

i. AFQT; it has the largest standardized beta.
Notes: You don’t have the range for each X or the sd of each X, so you can’t use those approaches. You do have the std beta values. The t statistics and standard errors answer different questions, not the importance of a variable.

j. Yes, the p-value for the interaction (AFQT*FamilyIncome78) is 0.018, which is < 0.05.
Notes: That the interaction is in the model is not sufficient, because the interaction coefficient could be 0.

k. Increasing AFQT by 1 increases average 2005 salary by $112.7 when familyincome78 = 0 and all other variables are held constant.
Notes: Various wordings acceptable. The key idea is that 112.7 is the slope for AFQT only when familyincome78 = 0. Because this is an observational study, “increasing” really means comparing two youth that have AFQT scores that differ by 1.

l. A more complicated model is not necessary. The p-value for the quadratic term is 0.54.
Notes: Some argued that a more complicated model is necessary because this model has such a large rMSE. Perhaps, but the results from the model with 6 predictor variables clearly indicate that the obvious ‘more complicated than a straight line’ relationship (i.e., quadratic) isn’t needed.

2. Longevity of mammal species

a. E log longevity = 1.74 - 0.00888 mass + 0.000175 metab

b. Longevity multiplied by 0.41, OR longevity decreases by 59%.
Notes: My calculations: the estimated change in log longevity is 100 × (-0.00888) = -0.89. The multiplicative effect on longevity is exp(-0.89) = 0.41. Some folks predicted longevity at two masses that differed by 100 kg. Good approach. You just have to remember that the change is a ratio, not a difference. A different pair of masses will give you a different difference, but the same ratio.

c. E log longevity = 3.719 + 0.535 log mass - 0.316 log metab

d.
Longevity multiplied by 3.42, OR longevity increased by 242%.
Notes: My calculations: log mass increases by log(10), so the change in log longevity is log(10) × 0.535 = 1.23. The multiplicative effect on longevity is exp(1.23) = 3.42.
Common mistakes include:
- reporting a 71% decrease: you switched signs and computed exp(-1.23)
- calculating 3.719 + 0.535 log(10), which is predicting log longevity for an individual with mass 10
- calculating 0.535 * 10, which is forgetting that the X variable is log mass
- calculating log mass using log base 10, not natural log
- forgetting to exponentiate the change in log longevity

e. No. The data are a random sample and there is one row of data (ou) per species (equiv. of eu).
Notes: You can’t tell independence from the residual plot, which quite a few tried to do.

f. No. The residual plot shows no sign of a cone or trumpet shape.
Notes: If you saw some sort of change in vertical spread and concluded Yes, that was accepted. To my eye, the change is minimal, at best.

g. No. The residual plot shows no sign of a smile or frown.
Notes: various other answers accepted if they described something that indicates lack of fit.

h. Yes. The VIF values exceed 10.

i. 22.8 years.
Notes: My calculation: predicted log longevity = 3.719 + 0.5346 log(65) - 0.3161 log(7560) = 3.13, hence longevity = exp(3.13) = 22.8. Various other values accepted because they reflect different amounts of rounding. If you got 32.6 years, you used log base 10 for mass and metab, not natural log. If you got a large negative estimate, you forgot to log transform both X values.
An aside: the mammal with mass = 65 kg and metabolic rate = 7560 is Homo sapiens. The value in this data set is 75.6 years; the 2015 estimate of US life expectancy at birth is 79 years for men and 82 for women. The difference between reality and the prediction based on other mammal species has many potential causes, but two big ones are the 20th-century reduction in infant mortality and current medical care.

j.
No. The prediction point is sufficiently close to the middle of the data. The se of the prediction is only 1.6 times the se of a prediction at the mean point.
Notes: saying that 65 is within the range of mass and 7560 is within the range of metabolic rates is true, but not sufficient to avoid an extrapolation. Remember my lecture example where a prediction point was far from the observed data, even though each individual variable was not.

k. No concerns about prediction.
Notes: Your answer here was calibrated against the issues you identified in previous questions. Many were concerned about multicollinearity, but that is not an issue for prediction.
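
For anyone who wants to check the log-scale arithmetic in question 2 (parts b, d, and i), here is a minimal Python sketch. It is not part of the original key. The coefficients are the ones quoted in the answers above; the function and variable names are made up for illustration, and part d uses the four-digit slope 0.5346 quoted in part i rather than the rounded 0.535. All logs are natural logs.

```python
import math

# Coefficients quoted in the answers to question 2 (natural-log scale).
SLOPE_MASS = -0.00888       # 2a: slope for mass; response is log longevity
INTERCEPT_LOGLOG = 3.719    # 2c/2i: intercept of the log-log model
SLOPE_LOG_MASS = 0.5346     # 2i: slope for log mass (0.535 when rounded)
SLOPE_LOG_METAB = -0.3161   # 2i: slope for log metab


def multiplicative_effect(change_in_log_y):
    """Turn a change in log(Y) into a ratio (multiplier) on Y."""
    return math.exp(change_in_log_y)


def predict_longevity(mass, metab):
    """Back-transformed prediction from the log-log model (hypothetical helper)."""
    log_longevity = (INTERCEPT_LOGLOG
                     + SLOPE_LOG_MASS * math.log(mass)
                     + SLOPE_LOG_METAB * math.log(metab))
    return math.exp(log_longevity)


# 2b: mass increases by 100 units; X is on the raw scale.
effect_2b = multiplicative_effect(100 * SLOPE_MASS)

# 2d: mass is multiplied by 10, so log mass increases by log(10).
effect_2d = multiplicative_effect(math.log(10) * SLOPE_LOG_MASS)

# 2i: prediction at mass = 65, metab = 7560.
pred_2i = predict_longevity(65, 7560)

print(f"2b multiplier: {effect_2b:.2f}")
print(f"2d multiplier: {effect_2d:.2f}")
print(f"2i predicted longevity: {pred_2i:.1f} years")
```

Because the effects are ratios, the 2b and 2d multipliers do not depend on the starting mass. That is why predicting at two different pairs of masses gives different differences but the same ratio.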