CGCC - SUNY Council on Assessment (SCoA)

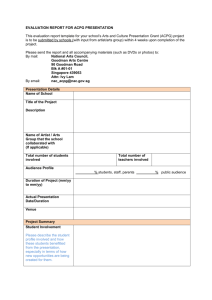

2015-2016 Fourth Edition
Non-Academic Assessment Resource Guide
Helpful hints, tips and ideas for Unit Assessment
Non-Academic Assessment Committee

Table of Contents
C-GCC College Assessment
Timeline and Schedule for Assessment
Units At-A-Glance
Developing Outcomes
Assessment Measures
Establishing Standards
Data Collection
Development and Implementation of Action Plans
CGCC Non-Academic Assessment Plan 2014-2015
Resources and Tools
Non-Academic Assessment Related Terms
Appendix: Strategic Plan, Forms and Reports
End of the Year Committee Report
Unit Assessment Plan
Survey Approval Form

NAC RESOURCE GUIDE Page 1

Columbia-Greene Community College Assessment

A. What do we mean by assessment?

Assessment at Columbia-Greene “begins with the end in mind.” Like a college’s manuscript, it tells the story of the operational data and plan. With this information at hand we can play the role of manuscript editors, examining areas in need of refinement, revision, or efficiencies.

This resource guide will serve as a helpful tool as you carry out assessment: gaining information about ongoing work throughout the college, finding overlaps, targeting potential areas for integration, matching assessment standards and reviewing timelines. Your unit serves as a pathway to decision-making, as the work that you carry out directly supports the college mission.

Although we may all work together in the same building for years, we may not necessarily have detailed knowledge about what goes on in each other’s units or departments. For example, faculty in offices next door to one another may have only partial knowledge of what goes on in each other’s classrooms. This is normal, as we often work independently as part of a cohesive whole institution. The books, concepts, assignments and exams that one faculty member uses are often unfamiliar to another. Likewise, although faculty work closely with the academic support center, we have limited information as to how each unit operates.
If there are gaps among units within buildings, then there are virtual Grand Canyons across the entire school. When little real-time data is available, two polarized tendencies result. One is to become rigid and lock step with what we are already doing, giving the impression that everything is under control exactly the way that it is. The second is to become so loose and vague that no one has a clue what is going on. To make sense of our students and our work experience over time, we need to zoom into our practices without losing sight of the wide-angle perspective. With data from assessment we can efficiently and sustainably understand, make changes to and improve our experiences. This guide describes the procedures for that assessment process.

The fourth edition of the resource guide is designed to directly assist you as you develop and refine your unit plans, collect data and use your assessment results in a variety of ways. Budget justifications, improvements, unit efficiency and, where applicable, a connection to enrollment, retention and completion serve as a general or very focused snapshot of your work. The guide has been updated to include our focus from the year and collaboration from the IAPG, AAC and Strategic Planning committees. We have also included examples from past CGCC Unit Plans within the guide. The updates reflect your suggestions as well. Please feel free to contact a NAC member to assist in your efforts along the way. Thank you for your efforts.

B. Procedure and Protocol and Timeline for Non-Academic Assessment

1. August to June: NAC Committee meets monthly.
2. Late August through September: All College Meeting Day. Draft Unit Plan and VP/Dean review (collaboration).
3. September until October: Unit Plan (Page One) is completed in September in conjunction with your VP/Dean. Submit it to the Non-Academic Assessment Committee in October as an email attachment.
4. November: NAC approves the Unit Plan. NAC will notify the respective VP/Dean and provide a copy of your Unit Plan (Page One).
5. December through April: Collect data and complete Unit Plan Results (Page 2). NAC will support your efforts; this is the time to reach out to the committee for information.
6. May 6th 2016: Submit Unit Plan Results (Page 2) to the Non-Academic Assessment Committee as an email attachment.

C. Using the Guide

Refer to this resource as a helpful guide in completing Non-Academic Assessments. You may refer to sections while drafting your Unit Assessment Plans or glance at past models. In addition, the Non-Academic Assessment Related Terms may help you decipher reports that you find helpful in understanding assessment, or broaden your assessment related vocabulary. Referencing the guide may also enable you to relate to others’ Non-Academic Assessment Plans or provide future ideas. Please feel free to contribute ideas or suggestions to future guides by contacting the Non-Academic Assessment Committee on campus.

D. What is an Assessment Ally?

NAC committee members intentionally represent a diverse range of institutional departments and services. In each of your units and areas you may find a NAC committee member best prepared to assist you: in other words, an “ally”. Allies may assist and support the creation and implementation of your unit plan by providing additional ideas as a “second set of eyes”, and by helping with proofreading, editing and wording. We also have knowledge of what other units are working on, how your plan fits within the strategic plan, and what is happening on the academic side of the college. To get the best assistance through the steps of completing your unit plan, please reach out to your Assessment Ally. He or she will gladly help!
NAC Committee Members 2014-2015

Nicole Strevell, Assistant Professor of History, Chair/Coordinator: President’s Area Assessment Ally
Diana Smith, Research Analyst, Technical Assistant II for Institutional Research: President’s Area Assessment Ally
Jen Colwell, Business Office: Academic Affairs Assessment Ally
Karen Fiducia, Clerk Typist for Student Activities: Academic Affairs Assessment Ally
Leslie Rousseau, Advising Counselor: Administration Assessment Ally
Jan Winig, Coordinator/Programmer for CIS: Administration Assessment Ally
Casey O’Brien, Institutional Research Director: Student Services/Enrollment Management Assessment Ally
Harold Lansing, Jr., Head Maintenance Worker: Student Services/Enrollment Management Assessment Ally

Non-Academic Assessment Units At-A-Glance

President’s Area: President, Board of Trustees, Foundation, Public Relations
Academic Affairs: Vice President, Academic Support Center, Advising, Community Services, Computer Information Systems, Institutional Research, Library
Administration: Vice President, Facilities, Accounting, Bursar, Security, Human Resources, Purchasing, Association: Bookstore or Daycare
Student Services/Enrollment Management: Vice President, Accessibility Services, Admissions, Athletics, Counseling/Career, Financial Aid, Health Services, Registrar, Student Activities

It is anticipated that each unit listed above will conduct ongoing and meaningful assessment each year. Please contact your Assessment Ally to review past assessment in your area if needed.

Columbia-Greene Community College
Non-Academic Assessment Committee Resource Guide
“Begin with the end in mind.”

Identification of Unit Outcomes

Unit outcomes are the long-range, general statements of what the unit intends to deliver. They provide the basis for determining more specific outcomes and objectives of the unit.
The chief function of unit outcomes is to provide a conduit between specific outcomes in any particular area and the general statements of the college mission statement. Thus, unit outcomes should be crafted to reflect the goals of the college mission statement/strategic plan and also be connected to budget requests.

How to develop unit outcomes:

Outcomes should describe current services, processes, or instruction. It is often helpful to create a list of the most important things your unit does. This is the first step as you draft your plan. Then, create a master list of the key services, processes or instruction. From that list, a set of outcomes can be created. Consider what problems you are trying to solve. The number of outcomes is unique to each unit. For each assessment cycle, it is recommended that one or two outcomes are assessed, so that over a three to four year period all of your unit outcomes are assessed.

10 Characteristics of good outcomes:

● focus on a current service, process, or instruction
● under the control of or responsibility of the unit
● meaningful and not trivial
● measurable, ascertainable and specific
● lend itself to improvements
● singular, not bundled
● not lead to “yes/no” answers
● describe current services, processes or instruction
● use active verbs in the present tense (unless a learning outcome)
● measure the effectiveness of the unit (using descriptive words)

Unit Outcomes Should:

● Be clearly and succinctly stated. Make the unit outcome clear and concise; extensive detail is not needed at this stage.
● Be under the control or responsibility of the unit.
● Be ascertainable/measurable.
● Lend itself to improvements. The purpose of assessment is to make improvements, not simply to look good. The assessment process is about learning how the unit can be better, so do not choose an outcome that will measure something the unit is already doing well.
● Focus on an outcome that is meaningful. Although it can be tempting to measure something because it is easy to measure, the objective is to measure that which can make a difference in how the unit functions and performs.
● Focus on outcomes that measure effectiveness. If the answer to the outcome is a “yes/no” response, the outcome has not been written correctly and, when measured, may not yield actionable data. We recommend use of descriptive words regarding the service or function.
● Be phrased with action verbs in the present tense that relate directly to objective measurement.

Example of a Problematic Unit Outcome:

Example 1: The Office of Institutional Research will ensure that 90 percent of departments will submit their annual Institutional Effectiveness plan on time.

Problems:
a) The unit does not have control over this outcome. While we certainly hope this goal can be achieved, and it is important, the outcome itself is not appropriate for the assessment of this unit’s outcomes because there is no direct control.
b) In addition, this outcome is stated in the future tense, implying that it may be a future goal or initiative, rather than a current service or process.

Example of a Suitable Unit Outcome:

Online course evaluations have an overall response rate of at least 60%.

Questions to Consider When Reviewing the Design of Outcomes

● Is the outcome stated in terms of current services, processes, or instruction?
● Does the unit have significant responsibility for the outcome with little reliance on other programs?
● Will the outcome lead to meaningful improvement?
● Is the outcome distinct, specific, and focused?

Any answer other than “yes” to the above questions is an indication that the outcome should be re-examined and redesigned.

Identification, Design, and Implementation of Assessment Tools that Measure the Unit Administrative Outcomes

What should an assessment measure do?
An assessment measure should provide meaningful, actionable data that leads to improvements. Therefore, one should not choose to assess something with which one is satisfied. The purpose of assessment is to look candidly and even critically at one’s unit or department to measure and collect data that will lead to improvements.

The purpose of assessment measures is to gather data to determine achievement of the unit outcomes selected during the specific assessment cycle. Consider the method as a tool to gather information to help solve a problem.

An assessment method should answer the questions:

● What data will be collected?
● When will the data be collected?
● What assessment tool will be used?
● How will the data be analyzed?
● Who will be involved?

It is important that the assessment be directly related to the outcome. For example, if an outcome is designed to measure community satisfaction with community continuing education, but the assessment measure counts the number of continuing education courses, there is a misalignment between the outcome and the measure. In this case, the measure should be an evaluation survey.

An assessment method should include:

● A clear and specific description of what data will be collected.
● A definitive and specific timeframe for when and by whom the data will be collected. Will it be measured and collected during one specific month? A full year? By whom?
● A clear and specific description of the assessment tool which will be used. Will it be a systems log? Or will it be a survey? Other?
● A clear and specific description of how the data will be analyzed.
Examples of types of assessment methods:

● Quantitative Data: response time, accuracy, cost savings, efficiency
● Student or Staff Satisfaction Level: surveys, focus groups, observation of client behavior
● External or peer comparisons: auditors, fire marshal, other outside agencies

A Note about Using Surveys: Be sure to complete the proper forms and notification from Institutional Research before distributing a survey. Attach your survey instrument to the Unit Assessment Plan.

Example of an Outcome and Appropriate Assessment Measure:

Outcome: Keep computer hardware current to support teaching and learning.
Assessment Measure: Calculate the age of each computer. Send a survey to all the faculty whose program majors are up for review.

Checklist for an Assessment Measure

An Assessment Measure should:

● Be directly related to the outcome
● Consider all aspects of the outcome
● Be designed to measure/ascertain effectiveness
● If possible, be complemented by a second assessment measure
● Provide adequate data for analysis
● Provide actionable results
● Outline in detail a systematic way to assess the outcome (who, what, when, and how)
● Be manageable and practical

Questions to Consider when Reviewing the Design of Assessment Measures:

● Are assessment measures for each outcome clearly appropriate and do they measure all aspects of the outcome?
● Have multiple (at least two) direct assessment measures been identified?
● Are the assessment measures clear and detailed descriptions of the assessment activity (who, when, what, and how)?
● Do the assessment measures clearly indicate a specific time frame for conducting assessment and collecting data?
● Does the measure reflect different campuses and locations, if appropriate?

Any answer other than “yes” to the above questions is an indication that the assessment measure should be re-examined and redesigned.
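The “calculate the age of each computer” measure from the hardware example above can be sketched in a few lines of Python. This is only an illustration, not a CGCC procedure: the asset tags, purchase dates, the as-of date and the five-year limit are all hypothetical values chosen for the example.

```python
from datetime import date

def computer_ages(purchase_dates, as_of):
    """Return the age in whole years of each computer, keyed by asset tag."""
    ages = {}
    for tag, purchased in purchase_dates.items():
        years = as_of.year - purchased.year
        # Subtract a year if the purchase anniversary has not yet occurred.
        if (as_of.month, as_of.day) < (purchased.month, purchased.day):
            years -= 1
        ages[tag] = years
    return ages

def share_within_age(ages, max_age_years):
    """Return (count within the limit, total count, percent within the limit)."""
    total = len(ages)
    within = sum(1 for age in ages.values() if age <= max_age_years)
    percent = 100.0 * within / total if total else 0.0
    return within, total, percent

# Hypothetical inventory: asset tags and purchase dates are made up.
inventory = {
    "LAB-001": date(2010, 9, 1),
    "LAB-002": date(2013, 1, 15),
    "LAB-003": date(2015, 6, 30),
    "LAB-004": date(2009, 3, 20),
}

ages = computer_ages(inventory, as_of=date(2016, 5, 6))
within, total, percent = share_within_age(ages, max_age_years=5)
print(f"{within} of {total} computers ({percent:.0f}%) are 5 years old or newer")
```

Reporting both the count and the percentage keeps the result in the form the guide asks for in findings (actual numbers plus percentages), and the age limit can be changed to match whatever standard the unit sets.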
Assessment measures are usually divided into two broad classes: direct and indirect measures.

Direct Measures: those in which actual behavior is observed or recorded and the measure is derived from that information. These are generally preferable for the assessment of specific objectives, although some objectives may only be measurable with more indirect methods.

Indirect Measures: those in which participants report their attitudes, perceptions or feelings about something, usually in the form of a survey.

Establishment of a Standard

A standard is the benchmark for determining the level of success for the unit outcome. Setting the standard determines the criterion or level that is acceptable. The standard is the target that the unit seeks to achieve. You may determine your standard through the use of internal data and knowledge, or external research and benchmarks.

How standards should be expressed:

● Standards should be specific. The standard should be clearly stated with actual numbers.
● Standards should avoid words such as “most,” “all,” or “the majority.” Specific and actual numbers should be utilized.
● Standards should not utilize target goals of 100 percent. If a target of 100 percent is set, the standard is either unrealistically high or there is an implication that staff has selected a target they already know can be universally achieved. If a unit is expected to consistently attain 100 percent due to legal or financial regulations or guidelines, it is recommended that the unit state that in the standard.

Example of a Unit Outcome, Appropriate Assessment Measure and Standard:

Outcome: The Academic Dean’s Office is responsible for the academic offerings at the College, including credit courses, programs, curricula, and course and program assessment. It will ensure that students are knowledgeable and prepared to make informed decisions regarding their courses and schedules.
Goal 4: Student Centered
Objective 1: The College will foster an atmosphere where students feel connected to the learning environment.
Assessment Methods: S.O.S. Data 2013 (Academic Experience #4 & #5). Review feedback from students and advisors.
Standard: 85% of students will indicate having a year of schedules is helpful to planning.

Checklist for a standard:

A standard should:

● Be specific
● Avoid vague words such as “most” or “majority”
● Generally not be stated in terms of “all” or “100%”
● Directly relate to the outcome and assessment measure
● Use item analysis where appropriate, not averages

Questions to Consider when Reviewing the Design of Standards:

● Have appropriate achievement targets been clearly stated for each measure?
● Do achievement targets address different campuses and locations?
● Has a brief rationale been offered for the selection of the achievement target?
● Is the achievement target specific and devoid of vague words?
● Is the achievement target directly related to the outcome and assessment method?

Any answer other than “yes” to the above questions is an indication that the standard should be re-examined and redesigned.

Review of Assessment Plans for Non-Academic Units

Steps 1 through 4, as outlined and explained above, constitute the Unit Assessment Plan. Members of the NAC committee will review all Assessment Plans for all Non-Academic units. Please see timelines and check-in dates attached.

Results & Findings

After the outcome, assessment measures and standards have been identified and implemented, data from that implementation must be collected and the findings analyzed. In this regard, the shift is from planning the assessment to conducting it.

What data collection and findings should include:

A summary of the findings should be reported in specific detail using actual numbers, not vague words such as “most” or “a majority.” It is necessary to report findings in terms of percentages and actual numbers.
Because reviewers will not be experts in your field, avoid the use of technical or field-specific language, and be certain that the findings are reported clearly and succinctly. Most importantly, be certain that the findings are reported in a manner that indicates whether the achievement target was met and that aligns with the actions the unit personnel will decide to implement in order to make improvements. On your unit plan, you will be asked to check whether you exceeded, met or did not meet your standard.

Example of a meaningful and ongoing report on Results and Findings:

Based on an average attendance of 50 students.

Fall 2013, New Programs
Sept. 17, 13: Dash for Dollars/Game Show. Attendance 63. Result +13 students.
Sept. 24, 13: Levi Stephen Band/Singer. Attendance 60. Result +10 students.

Spring 2014, New Program
Feb. 25, 14: Tom Krieglstein/Speaker. Attendance 62. Result +12 students.
Mar. 25, 14: Mieka Pauley. Attendance 45. Result -5 students.
April 1, 14: Jessica Kirson. Attendance 65. Result +15 students.

Standard Met

Checklist for Data Collection/Findings

Data Collection/Findings should:

● Indicate whether you have exceeded, met or did not meet the standard
● Provide detailed data that connects to your action plan
● Include sample size in the description
● Use specific numbers
● Avoid technical language
● Align with outcome and standard
● Be clearly and succinctly presented
● Support actions taken later to improve

Questions to Consider when Reviewing the Findings:

● Does the data analysis yield information that can be used to determine to what extent the outcome is being achieved?
● Is the data reported in sufficient detail to effectively describe and document the outcome assessment results?
● Is the analysis linked to the specified standard?
● Does the analysis take into consideration different campuses and locations, if applicable?

Any answer other than “yes” to the above questions is an indication that the data collection/findings should be re-examined and redesigned.

Development and Implementation of an Action Plan Based on Assessment Results to Improve Attainment of Unit Outcomes

This last step in the assessment process is often referred to as “closing the loop.” However, assessment is ongoing at CGCC. The chief aim of assessment is improvement. Keep in mind that assessment is cyclical. The previous assessment activities are of little importance unless the results are utilized to improve services, processes or instruction.

If a unit implements changes in response to the assessment results, it is vital to have a mechanism for assessing whether those changes have the desired effect. The timeline for making that determination will vary depending upon the changes put into place. The method may be as simple as repeating the previous assessment measures. Thus, the assessment process is cyclical and ongoing in nature as it moves through assessment, review, identification of changes needed, implementation of those changes and a subsequent phase of assessment.

What Action Plans Should Accomplish:

1. Address gaps or weaknesses identified by the assessment results
2. Demonstrate a relationship between the outcome and the results from the data collected
3. Set forth a plan that is described in detail and not in general terms
4. Set forth a substantive, specific and non-trivial plan of action
5. Set forth a plan that does not include words such as “continue” or “maintain.” The goal of assessment is to effect improvement, and words such as continue and maintain indicate that no improvement will be effected
6. Set forth a plan that is manageable and practical

Example of a Unit Outcome, Results, Findings and Action Plan:

Outcome: Students will report that the communication from the OAS Office, through the Blackboard course, was helpful in terms of facilitating access to college programs and activities.
Results: The standard was met for students that expressed satisfaction with the OAS Blackboard presence as it facilitates equal access to college programs and activities.
Findings: Standard Met
Action Plan: Those students that make a practice of using Blackboard for their course work will continue to access the OAS course for guidance and follow through with requests for accommodations. Those students that don’t access Blackboard will continue to find that practice to be a hindrance to their educational success on many levels. A few responses to the Student survey indicated that confirmation of appointments with OAS should be posted to their Blackboard account. OAS is exploring on-line request forms.
Checklist for an Action Plan

Action Plans Should:

● Be included, even if the target was met
● Address gaps identified by assessment results
● Provide details of improvement made
● Indicate how likely the action taken will improve achievement of the outcome
● Relate the outcome and the findings
● Be substantive, not trivial
● Avoid words like “continue” or “maintain”
● Be manageable and practical

Questions to Consider when Reviewing the Action Plan:

● Are the decisions set forth in the action plan based on assessment results and analysis?
● Are the action steps clearly stated and easily understood by someone outside of the program?
● Does the action plan directly relate to accomplishing the intended outcomes?
● Does the plan reflect improvements at the different campuses and locations, if appropriate?

Any answer other than “yes” to the above questions is an indication that the action plan should be re-examined and redesigned.

Review of Assessment Reports for Non-Academic Units

Steps 5 and 6, as outlined and explained above, together with some overall analysis and questions about the annual process, constitute the Assessment Report. Members of the Non-Academic Assessment Committee will review and assess Assessment Reports for Non-Academic units. Your assessment plan will be posted on the intranet for quick reference and kept on file at the Institutional Research Office.

Non-Academic Assessment Plan (as of 2014-Aug 2016)

Step 1: The President shares the annual report card and strategic priorities with the campus community at “All College Day” in August of each year. Non-academic units participate in “Assessment Day” activities.

Step 2: Department heads and directors review progress on action plans and align their program and department goals with the strategic goals and priorities. Assessment plans for this cycle are formed.

Step 3: The Unit and VP determine which activity(s), opportunity(s), program(s) or service(s) to assess in this cycle.**

Step 4: The Unit creates an assessment plan and a timeline for completion (based on type of assessment, when and how data would be collected, etc.).

Step 5: The VP approves the assessment plan and timeline.

Step 6: Units attend a NAC meeting during the fall semester to discuss their plans and timeline. NAC co-chairs present this information to the IAPG.

Step 7: The Unit performs the assessment, compiles and interprets the results, and submits them to the VP. The VP reviews the results and has a discussion with the Unit about using them in planning, resource allocation, budget requests, etc. An “Action Plan” is created.

Step 8: The Unit submits the assessment plan, results, action plan and timeline for implementation and reassessment to the Non-Academic Assessment Committee (NAC) during a NAC meeting in the spring semester or soon after, depending on the assessment timeline. NAC chairs present this information to the IAPG.

Step 9: The Unit implements the action plan, and then reassesses the outcome to measure improvement (closing the loop). The Unit submits a final report to the VP for approval. The approved assessment is shared at the next NAC meeting. The NAC chair presents the information to the IAPG.

Step 10: Go back to Step 1.

(Note: Some assessment plans and re-assessment activities may overlap, depending on the timeline for collecting data for each.)

**Ideally, all Units should be assessing at least one activity, program or service related to the Strategic priorities set by the College President each year.
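The comparison at the heart of Steps 7 through 9 (report the result against the standard, then reassess after the action plan) can be sketched as a small Python helper. This is a minimal illustration under stated assumptions, not a NAC form or tool: the function names, the survey counts and the rule that “met” means exactly equal to the standard while “exceeded” means strictly above it are all assumptions made for the example.

```python
def compare_to_standard(count_meeting, sample_size, standard_percent):
    """Return (percent, status) for a result measured against a percentage standard.

    Assumption for this sketch: "exceeded" means strictly above the standard,
    "met" means exactly equal to it, and anything lower is "did not meet".
    standard_percent is expected to be a whole number such as 85.
    """
    if sample_size <= 0:
        raise ValueError("sample_size must be positive")
    percent = 100.0 * count_meeting / sample_size
    # Compare whole numbers so the status does not depend on float rounding.
    achieved = count_meeting * 100
    required = standard_percent * sample_size
    if achieved > required:
        status = "exceeded"
    elif achieved == required:
        status = "met"
    else:
        status = "did not meet"
    return percent, status

def finding(label, count_meeting, sample_size, standard_percent):
    """Format a finding with actual numbers, percentage and sample size."""
    percent, status = compare_to_standard(count_meeting, sample_size, standard_percent)
    return (f"{label}: {count_meeting} of {sample_size} respondents "
            f"({percent:.1f}%) against a standard of {standard_percent}%: {status}")

# Hypothetical survey counts before and after an action plan.
first = finding("Initial assessment", 96, 120, 85)
second = finding("Reassessment", 108, 120, 85)
print(first)
print(second)
```

Stating the count, the sample size and the percentage together follows the guide's checklist for findings, and running the same check on the reassessment data is one simple way to show whether the action plan had the desired effect.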
09/2012 08/2014 08/2015

Assessment Related Resources and Tools

On the Web:

● ANNY Assessment Network of New York: http://www.oneonta.edu/anny/
● SUNY Council on Assessment: http://www.sunyassess.org/
● Middle States Commission on Higher Education: http://www.msche.org/
● National Center for Post-Secondary Improvement: http://www.stanford.edu/group/ncpi/unspecified/assessment_states/instruments.html
● Vanderbilt University Assessment Best Practices: http://virg.vanderbilt.edu/AssessmentPlans/Best_Practices.aspx

At CGCC:

NAC is on the Intranet!
Columbia-Greene Planning and Assessment: http://employees.sunycgcc.edu/default.aspx

Non-Academic Assessment Related Terms

Accreditation: The designation that an institution earns indicating that it functions appropriately with respect to its resources, programs, and services. The accrediting association, often comprised of peers, is recognized as the external monitor. Maintaining fully accredited status ensures that the university remains in compliance with federal expectations and continues to receive federal funding.

Assessment: A systematic, ongoing process to identify, collect, analyze, and report on data that is used to determine program achievement. Results are used for understanding and improving student learning and administrative services and operations.

Assessment instrument: A tool used to evaluate assignments, activities, artifacts, or events that support outcomes or objectives. These can be measurement tools such as standardized tests, locally designed examinations, rubrics, exit interviews, or student, alumni, or staff surveys.

Assessment plan: A document that outlines and describes assessment activities, including identifying learning outcomes or program objectives, methods, and criteria. The plan should include enough detail that anyone could read it and know exactly what to do to implement the plan. The plan should be reviewed frequently and revised any time new learning or operational goals are identified. Generally, programs update assessment plans early each academic year and submit results, analyses, and action plans by the following fall.

Benchmark: A point of reference for measurement; a standard of achievement against which to evaluate or judge performance. A unit may use its own past-performance data as a baseline benchmark against which to compare future data/performance. Additionally, data from other (comparable, exemplary) institutions may be used as a target benchmark.

Close the loop: The phrase indicates the ability to demonstrate, through a cycle of collecting, analyzing, and reporting on data, continuous improvement of curricular, programmatic, or operational efforts.

Criterion: Identifies the target or minimum performance standard. For non-academic units, the criterion establishes a target in terms of a number or percentage.

Culture of assessment: An institutional characteristic that shows evidence for valuing and engaging in assessment for ongoing improvement.

Direct measures: Those in which actual behavior is observed or recorded and the measure is derived from that observation. Direct measures are generally preferable for the assessment of specific objectives, but some objectives may only be measurable with more indirect methods.

Effectiveness: The degree to which programs, events, or activities achieve intended results. Effectiveness indicates how well the curriculum, the program, and even the university achieve their purpose.

Embedded assessment: Denotes a way to gather effectiveness information that is built into regular activities. When assessment is embedded, it is routine, unobtrusive, and an ongoing part of an operational process.

Evaluation of results: The process of interpreting data. The evaluation compares the results to the intentions and explains how they correlate.
Feedback: Providing assessment results and analysis to interested constituents in order to increase transparency. Information can be communicated to students, faculty, staff, administrators, and outside stakeholders. Goal: A broad and un-measurable statement about what the program is trying to accomplish to meet its mission. Indirect measures: include those in which participants report their attitudes, perceptions, or feelings about something, usually in the form of a survey. Institutional Effectiveness (IE): The term used to describe how well an institution is accomplishing its mission and how it engages in continuous improvement. Instrument: An assessment tool that is used for the purpose of collecting data. Measure or Method: Describes the procedures used to collect data for assessing a program, including identifying the activity and the process for measuring it. Middle States Commission on Higher Education-The Middle States Commission on Higher Education is a voluntary, non-governmental, membership association that is dedicated to quality assurance and improvement through accreditation via peer evaluation. Middle States accreditation instills public confidence in institutional mission, goals, performance, and resources through its rigorous accreditation standards and their enforcement. Mission statement: Explains why a program or department exists and identifies its purpose. It articulates the organization’s essential nature, its values, and its work and should be aligned with institutional mission. NAC RESOURCE GUIDE Page 18 Non-Academic Assessment Committee: Works with the Director of Institutional Research for advisement regarding internal and external data sources and for assistance with communicating this information to the non-academic units. Provide information, training, and guidance to non-academic units regarding assessment processes. Seek out opportunities for professional development training and assessment activities. 
Promote use of assessment data as a mechanism to support the College planning, assessment, and budget cycle. Oversee progress of unit-level assessment as per the schedules developed for the offices of the president and vice-presidents. Collect and disseminate information concerning assessment activities to the C-GCC intranet. Collect and disseminate assessment plan information to the Institutional Assessment Planning Group (IAPG).

Objective measure: A score, grade, or evaluation that relies on a consistent, valid, and predetermined range. It does not depend on a subjective opinion.

Peer assessment: The process of evaluating or assessing the work of one’s peers.

Qualitative data: Non-numeric information such as conversation, text, audio, or video.

Quantitative data: Numeric information including quantities, percentages, and statistics.

Reliability: The extent to which an assessment method produces consistent and repeatable results. Reliability is a precondition for validity.

Results: Report the qualitative or quantitative findings of the data collection in text or table format. They convey whether the outcomes or objectives were achieved at desired levels of performance.

Sample: A defined subset of the population chosen based on 1) its ability to provide information; 2) its representativeness of the population under study; and 3) factors related to the feasibility of data gathering, such as cost, time, participant accessibility, or other logistical concerns.

Triangulation: The use of a combination of assessment methods, such as surveys, interviews, and observations, to measure a unit outcome.

Unit outcome: An intended outcome that reflects the area or service that can be improved using current resources and personnel and that is assessable within one assessment cycle. Unit outcomes should be under the direct control of the unit and in line with a Strategic Plan goal, objective, and strategy.
For units, outcomes are primarily process-oriented, describing the support process/service the unit intends to address.

Validity: The extent to which an assessment method measures or assesses what it claims to measure or assess. A valid assessment instrument or technique produces results that can lead to valid inferences.

Appendix

Strategic Plan
End of Year Committee Report
Forms and Reports
Unit Assessment Plan Template

STRATEGIC PLAN – SEPTEMBER 1, 2012 – AUGUST 31, 2016

Focus: 2011, 2012, 2013, 2014, 2015: Enrollment & Retention; 2016: Enrollment, Retention, and Completion

Objective = Outcome; Standard = Indicator; Measurement = Data Source
( ) Information in brackets gives examples only and is not an inclusive list.

Goal 1: Quality Education (VP/Dean Academics)

Objective 1: Students will attain core academic proficiencies as defined in our Academic Philosophy.
Standard: All programs are in alignment with the Academic Philosophy.
Measurement: Assessment of the Major, with SUNY GED review, Student Opinion Survey (S.O.S.) with local questions for Academic Philosophy, graduate survey, N.C.C.B.P., non-academic assessments.

Objective 2: Student Academic Support Services will reflect the College’s commitment to excellence.
Standard: The College’s Academic Support Services will compare favorably with peer institutions. Students and faculty will report that Student Academic Support Services meet or exceed expectations.
Measurement: S.O.S., Graduate Survey, N.C.C.B.P., non-academic assessments, retention rate, SUNY reports.

Objective 3: The teaching and learning environment will meet or exceed student expectations.
Standard: Students report they are satisfied with the teaching and learning environment. The transferability of the College’s Academic Programs will compare favorably with peer institutions. College graduation rates will compare favorably with peer institutions.
Measurement: S.O.S., student evaluations of teaching, Transfer and Graduate rates, Academic Performance of Transfers, graduate survey.

Goal 2: Accessibility (VP/Deans Administration, Academics, Student)

Objective 1: Affordability.
Standard: Maintain tuition and fees comparable to SUNY peers. Maintain or increase scholarship opportunities for students. Students will report satisfaction with cost of College.
Measurement: SUNY peer benchmarking, S.O.S., non-academic assessment (Foundation, Business Office).

Objective 2: Prepare academically challenged students for College success.
Standard: Increase long-term academic performance of students taking transitional courses.
Measurement: N.C.C.B.P., SIRIS reporting of grades, and course assessments.

Objective 3: Diversify the student population.
Standard: Maintain optimum level of students by recruiting in new markets.
Measurement: SIRIS reporting of number of registered students (who are early admits, distance learning, veterans, international, etc.); DOL referrals, Enrollment Management non-academic assessment, monthly admissions reports, S.O.S., non-traditional student population.

Goal 3: Excellent Facilities (VP/Deans Administration, Academics, Student)

Objective 1: Provide a physical infrastructure that supports the College’s commitment to educational excellence.
Standard: Maintain adequate availability of teaching environments.
Measurement: Room usage statistics, OAS (Office of Accessibility Services) reports, equipment in classroom data, community use satisfaction survey.

Objective 2: Provide effective technology that supports teaching and learning outcomes.
Standard: Adhere to the academic and administrative technology plans. Students will report satisfaction with technology.
Measurement: Assessment of the Major, Program Accreditations (for example Automotive, Computer Science), Non-academic assessments (CIS, Library, ASC), S.O.S.

Objective 3: Maintain a safe and secure campus.
Standard: Students report they are satisfied with the College environment in that they feel safe.
Measurement: Campus safety statistics, Incident Reports, Emergency Management Plan, S.O.S., Non-academic assessments.

Goal 4: Student Centered (VP/Deans Academics, Student)

Objective 1: The College will foster an atmosphere where students feel connected to the learning environment (in achieving goals for graduation and/or career and/or successful transfer).
Standard: Maintain current faculty/student contact structure.
Measurement: Retention data, IPEDS, SIRIS reporting on Faculty/Student ratio; Transfer and Graduate rates, Academic Performance of Transfers, N.C.C.B.P., S.O.S.

Objective 2: The College will foster an atmosphere where students feel connected to the College in a personal way.
Standard: College services will remain accessible and provide effective service.
Measurement: S.O.S., Graduate Survey, Non-academic assessments (Student Development).

Goal 5: Service to the Community (VP/Deans Administration, Academics, Student)

Objective 1: The College will effectively serve the social and cultural needs of its local community.
Standard: The College will offer a variety of programs and services responsive to the community (Non-credit course offerings, Lectures, Concerts, Outside Group Facilities Use, Summer Camps, etc.).
Measurement: Board of Trustees Facilities Use Report, Non-academic assessments (CS, AV Media, Library, PR, Student Services), non-credit instructional activities survey (NCIA).

Objective 2: The College will meet the business and industry training needs of its local community.
Standard: The College will offer a variety of training responsive to the community.
Measurement: Non-academic assessments.

Goal 6: Sound Management (President)

Objective 1: The College will maintain its public trust.
Standard: Continuous “no-exception” Third-Party Review Audit Reports/State & Federal Regulation Compliance.
Measurement: Audit Reports Compliance, Non-academic assessments (Business Office, Financial Aid).

Objective 2: The College will meet its mission efficiently and effectively.
Standard 2.1: Maintain Positive Fund Balance equivalent to the Government Standard Board of 5-15%.
Measurement: Fund Balance, consistent positive votes on the College budget by the two County sponsors.
Standard 2.2: The College will maintain compliance with the standards set forth by relevant accrediting bodies.
Measurement: Accreditation Reports; maintain accreditations.

Objective 3: The College will increase development efforts for outside and non-traditional resources.
Standard: Adhere to the College Foundation’s strategic plan to create a major fundraising event. The event will generate $50,000 in new revenue.
Measurement: Foundation board minutes related to discussion of the fundraising events, Non-academic assessments, comparable financial statements.

End of Year Committee Report

Each committee is asked to complete a year-end report that documents the major actions taken throughout the year. This summary will serve as a reflective exercise, and the information it provides may aid our assessment efforts. During the last committee meeting, please take the time to reflect and make note of the committee’s major accomplishments. Then, consider future goals or ideas related to the charge of the committee. You may also make additional notes for future reference. Once completed, this report may be submitted to the President. This information will also be posted on the intranet for reference by other institutional units at C-GCC. In addition, these reports will enable us to easily reference actions taken across the campus by various non-academic units. Thank you for your time and dedication to Columbia-Greene Community College.

END OF YEAR COMMITTEE REPORT

Name of Committee________________________ Year____________
Committee Chair(s)_________________________________________
Names of Members:
________________________________ ____________________________
________________________________ ____________________________
________________________________ ____________________________
________________________________ ____________________________
________________________________ ____________________________

Charge or Mission:

Please List Major Actions Taken:

Outstanding Goals or Future Plans:

Additional Notes:

Columbia-Greene Community College Survey Approval Form

Completion of this form is required to conduct survey research at C-GCC. Please return this form to the Office of Institutional Research at least 2 weeks prior to your projected survey start date.

Name
Department
Survey Start Date
Survey End Date

Please provide a brief description of your survey. Include information regarding the survey’s:
1. objective (e.g., to measure student satisfaction with a particular course topic)
2. target population (e.g., students from a particular class)
3. mode of administration (e.g., in-person, SurveyMonkey, email, phone, etc.)

Has this survey been administered at C-GCC in the past?
Yes   No   Not Sure

***Please also attach your survey to this form***

Dean Approval
________________________________ Signature   __________________________ Date

Survey Request Approved
________________________________ Signature   __________________________ Date

2015 – 2016 UNIT ASSESSMENT PLAN AND REPORTING FORM

AREA: Select your Area
UNIT(S): Select your Unit
AUTHOR: Enter your name
Specify any additional units here

PART 1

OUTCOME(S): Select Strategic Goal, Select Objective. Enter a brief, clear statement describing your desired outcome in relation to broader goals.
ASSESSMENT METHOD: Describe how you intend to measure the proposed outcome.
STANDARD(S): Specify a criterion or target that will indicate successful achievement of the proposed outcome.

To propose additional outcomes, click the (+) symbol at the bottom-right corner of this table.

PART 2

RESULTS: Briefly describe the results and indicate whether the standard(s) were met, unmet, or exceeded.
ACTION PLAN: What actions were taken or will be taken based on the results?
BUDGETING AND RESOURCE ALLOCATION: Will these results be used to support or justify an upcoming budget proposal? Please explain.