Multiple Regression with Two Independent Variables


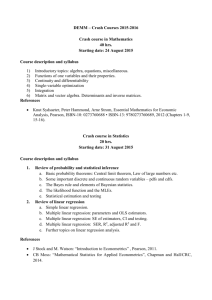

CHAPTER 6 Multiple Regression

1. The Model
2. The Least Squares Method of Determining Sample Regression Coefficients
3. Using Matrix Algebra to Solve the System of Least Squares Normal Equations
4. Standard Error of Estimate
5. Sampling Properties of the Least Squares Estimators
5.1. The Variances and Covariances of the Least Squares Estimators
6. Inferences about the Population Regression Parameters
6.1. Interval Estimates for the Coefficients of the Regression
6.2. Confidence Interval for the Mean Value of y for Given Values of xⱼ
6.3. Prediction Interval for the Individual Value of y for Given Values of xⱼ
6.4. Interval Estimation for a Linear Combination of Coefficients
6.5. Hypothesis Testing for a Single Coefficient
6.5.1. Two-Tail Test of Significance
6.5.2. One-Tailed Tests
6.5.2.1. Example 1: Testing for Price Elasticity of Demand
6.5.2.2. Example 2: Testing for Effectiveness of Advertising
6.5.2.3. Example 3: Testing for a Linear Combination of Coefficients
7. Extension of the Regression Model
7.1. The Optimal Level of Advertising
7.2. Interaction Variables
7.3. Log-Linear Model
8. Measuring Goodness of Fit—R²
8.1. Adjusted R²

1. The Model

In multiple regression models we may include two or more independent variables. We start with the model that includes two independent variables, x₂ and x₃. The main features of, and assumptions regarding, the simple regression model extend to multiple regression models. Here the dependent variable is influenced by variations in the independent variables through the three parameters β₁, β₂, and β₃. The disturbance term u is still present, because variations in y continue to be influenced by a random component. Thus,

$$y_i = \beta_1 + \beta_2 x_{2i} + \beta_3 x_{3i} + u_i$$

Given that E(uᵢ) = 0, the following holds (dropping the i subscript):

$$E(y) = \beta_1 + \beta_2 x_2 + \beta_3 x_3$$

The sample regression equation is

$$y = b_1 + b_2 x_2 + b_3 x_3 + e$$

To estimate the parameters from the sample data we need formulas that give values for the estimators of the population parameters.
The estimators are the sample regression coefficients b₁, b₂, and b₃.

Chapter 6—Multiple Regression 1 of 26

2. The Least Squares Method of Determining Sample Regression Coefficients

We want to find the values of b₁, b₂, and b₃ that minimize the sum of the squared deviations of the observed y from the fitted plane. The deviation takes the same familiar form as in the simple regression model,

$$e = y - \hat{y}$$

where ŷ is the predicted value, which lies on the regression plane:

$$\hat{y} = b_1 + b_2 x_2 + b_3 x_3$$

Substituting for ŷ in the residual equation, squaring both sides, and then summing over all i, we have

$$e = y - b_1 - b_2 x_2 - b_3 x_3$$

$$\sum e^2 = \sum (y - b_1 - b_2 x_2 - b_3 x_3)^2$$

Taking three partial derivatives, one for each coefficient, and setting them equal to zero, we obtain three normal equations:

$$\begin{aligned}
\frac{\partial \sum e^2}{\partial b_1} &= -2\sum (y - b_1 - b_2 x_2 - b_3 x_3) = 0 \\
\frac{\partial \sum e^2}{\partial b_2} &= -2\sum x_2 (y - b_1 - b_2 x_2 - b_3 x_3) = 0 \\
\frac{\partial \sum e^2}{\partial b_3} &= -2\sum x_3 (y - b_1 - b_2 x_2 - b_3 x_3) = 0
\end{aligned}$$

The normal equations are:

$$\begin{aligned}
\sum y - n b_1 - b_2 \sum x_2 - b_3 \sum x_3 &= 0 \\
\sum x_2 y - b_1 \sum x_2 - b_2 \sum x_2^2 - b_3 \sum x_2 x_3 &= 0 \\
\sum x_3 y - b_1 \sum x_3 - b_2 \sum x_2 x_3 - b_3 \sum x_3^2 &= 0
\end{aligned}$$

To solve for the three b's, we move the terms that do not involve the bⱼ to the right-hand side:

$$\begin{aligned}
n b_1 + \left(\sum x_2\right) b_2 + \left(\sum x_3\right) b_3 &= \sum y \\
\left(\sum x_2\right) b_1 + \left(\sum x_2^2\right) b_2 + \left(\sum x_2 x_3\right) b_3 &= \sum x_2 y \\
\left(\sum x_3\right) b_1 + \left(\sum x_2 x_3\right) b_2 + \left(\sum x_3^2\right) b_3 &= \sum x_3 y
\end{aligned}$$

This is a system of three equations in three unknowns, b₁, b₂, and b₃. To solve it we need a brief introduction to matrix algebra (see the appendix at the end of this chapter).

3. Using Matrix Algebra to Solve the System of Least Squares Normal Equations

We can write the system of normal equations in matrix form:

$$\begin{bmatrix} n & \sum x_2 & \sum x_3 \\ \sum x_2 & \sum x_2^2 & \sum x_2 x_3 \\ \sum x_3 & \sum x_2 x_3 & \sum x_3^2 \end{bmatrix}
\begin{bmatrix} b_1 \\ b_2 \\ b_3 \end{bmatrix} =
\begin{bmatrix} \sum y \\ \sum x_2 y \\ \sum x_3 y \end{bmatrix}$$

with the shorthand notation

$$\mathbf{X}\mathbf{b} = \mathbf{c}$$

where

$$\mathbf{X} = \begin{bmatrix} n & \sum x_2 & \sum x_3 \\ \sum x_2 & \sum x_2^2 & \sum x_2 x_3 \\ \sum x_3 & \sum x_2 x_3 & \sum x_3^2 \end{bmatrix}, \qquad
\mathbf{b} = \begin{bmatrix} b_1 \\ b_2 \\ b_3 \end{bmatrix}, \qquad
\mathbf{c} = \begin{bmatrix} \sum y \\ \sum x_2 y \\ \sum x_3 y \end{bmatrix}$$

The solutions for the bⱼ are obtained by premultiplying c by the inverse of X, X⁻¹:

$$\mathbf{b} = \mathbf{X}^{-1}\mathbf{c}$$

Example: We want to regress monthly sales (y), in $1,000s, on price (x₂), in dollars, and advertising (x₃), in $1,000s, for a fast food restaurant. The data are in the Excel file "CH6 DATA", worksheet tab "burger". Using Excel to compute the elements of the matrix X and the vector c gives:

$$\begin{bmatrix} n & \sum x_2 & \sum x_3 \\ \sum x_2 & \sum x_2^2 & \sum x_2 x_3 \\ \sum x_3 & \sum x_2 x_3 & \sum x_3^2 \end{bmatrix} =
\begin{bmatrix} 75 & 426.5 & 138.3 \\ 426.5 & 2445.707 & 787.381 \\ 138.3 & 787.381 & 306.21 \end{bmatrix}, \qquad
\begin{bmatrix} \sum y \\ \sum x_2 y \\ \sum x_3 y \end{bmatrix} =
\begin{bmatrix} 5803.1 \\ 32847.7 \\ 10789.6 \end{bmatrix}$$

Thus, our system of normal equations can be written as:

$$\begin{bmatrix} 75 & 426.5 & 138.3 \\ 426.5 & 2445.707 & 787.381 \\ 138.3 & 787.381 & 306.21 \end{bmatrix}
\begin{bmatrix} b_1 \\ b_2 \\ b_3 \end{bmatrix} =
\begin{bmatrix} 5803.1 \\ 32847.7 \\ 10789.6 \end{bmatrix}$$

Since b = X⁻¹c, we need to find X⁻¹. The appendix explains what the inverse of a matrix is and how to find it; here we let Excel do the hard work. In Excel, use the array function =MINVERSE(). First highlight a 3 × 3 block of cells, then enter =MINVERSE(). When it asks for "array", select the block of cells containing the elements of the X matrix, then press Ctrl+Shift+Enter. The result is the matrix X⁻¹:

$$\mathbf{X}^{-1} = \begin{bmatrix} 1.689828 & -0.28462 & -0.03135 \\ -0.28462 & 0.050314 & -0.00083 \\ -0.03135 & -0.00083 & 0.019551 \end{bmatrix}$$
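The MINVERSE step and the product b = X⁻¹c can be reproduced outside Excel. The sketch below is a minimal pure-Python illustration, not the chapter's method: it inverts X by Gauss-Jordan elimination (a standard technique swapped in for Excel's internal routine) and then multiplies the inverse by c. The helper names `invert` and `mat_vec` are hypothetical. One assumption matters: the solution is very sensitive to rounding in the sums, and the first normal equation balances with the reported coefficients only if Σx₂ ≈ 426.54, so the sketch uses that value instead of the rounded 426.5 displayed above.

```python
# Sketch: reproduce the MINVERSE step and b = X^-1 c for the burger example.
# Pure Python, no third-party libraries.


def invert(matrix):
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    # Augment each row with the matching row of the identity matrix: [M | I].
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        # Swap in the row with the largest absolute pivot to limit round-off.
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        # Scale the pivot row so that the pivot element becomes 1.
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        # Eliminate the current column from every other row.
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    # The right half of the augmented matrix is now the inverse.
    return [row[n:] for row in aug]


def mat_vec(matrix, vector):
    """Multiply a matrix by a column vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


# Cross-product sums for SALES on PRICE and ADVERT (n = 75).
# Sum of PRICE entered as 426.54; the display in the text rounds it to 426.5.
X = [[75.0, 426.54, 138.3],
     [426.54, 2445.707, 787.381],
     [138.3, 787.381, 306.21]]
c = [5803.1, 32847.7, 10789.6]

X_inv = invert(X)
b = mat_vec(X_inv, c)

for row in X_inv:
    print("  ".join(f"{v:10.6f}" for v in row))
print("b =", [round(v, 4) for v in b])
```

Run as a script, it should print an inverse that agrees with the MINVERSE output to about four significant digits and coefficients close to 118.91, −7.908, and 1.863; small differences remain because the other sums are rounded too.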
Then, premultiplying c by X⁻¹, we have

$$\begin{bmatrix} b_1 \\ b_2 \\ b_3 \end{bmatrix} =
\begin{bmatrix} 1.689828 & -0.28462 & -0.03135 \\ -0.28462 & 0.050314 & -0.00083 \\ -0.03135 & -0.00083 & 0.019551 \end{bmatrix}
\begin{bmatrix} 5803.1 \\ 32847.7 \\ 10789.6 \end{bmatrix} =
\begin{bmatrix} 118.9136 \\ -7.90785 \\ 1.862584 \end{bmatrix}$$

$$\hat{y} = 118.9136 - 7.90785x_2 + 1.862584x_3$$

$$\widehat{SALES} = 118.914 - 7.908\,PRICE + 1.863\,ADVERT$$

b₂ = −7.908 implies that for each $1 increase in price (advertising held constant), monthly sales fall by $7,908. Equivalently, a 10¢ increase in price would reduce monthly sales by $790.80.

b₃ = 1.863 implies that (holding price constant) each additional $1,000 of advertising increases sales by $1,863.

The following Excel regression output shows the estimated coefficients.

SUMMARY OUTPUT

| Regression Statistics | |
|---|---|
| Multiple R | 0.669521 |
| R Square | 0.448258 |
| Adjusted R Square | 0.432932 |
| Standard Error | 4.886124 |
| Observations | 75 |

| ANOVA | df | SS | MS | F | Significance F |
|---|---|---|---|---|---|
| Regression | 2 | 1396.5389 | 698.26946 | 29.247859 | 5.04086E-10 |
| Residual | 72 | 1718.9429 | 23.874207 | | |
| Total | 74 | 3115.4819 | | | |

| | Coefficients | Standard Error | t Stat | P-value | Lower 95% | Upper 95% |
|---|---|---|---|---|---|---|
| Intercept | 118.91361 | 6.35164 | 18.72172 | 0.00000 | 106.25185 | 131.57537 |
| PRICE | -7.90785 | 1.09599 | -7.21524 | 0.00000 | -10.09268 | -5.72303 |
| ADVERT | 1.86258 | 0.68320 | 2.72628 | 0.00804 | 0.50066 | 3.22451 |

4. Standard Error of Estimate

In simple regression we learned that the disturbance term u for each given x is normally distributed about the population regression line, with expected value E(u) = 0 and variance var(u). The unbiased estimator of var(u), denoted var(e), was obtained from the least squares residuals e = y − ŷ:

$$\text{var}(e) = \frac{\sum e^2}{n-2} = \frac{\sum (y - \hat{y})^2}{n-2}$$

The square root of the estimated variance was called the standard error of estimate, se(e). With two independent variables, the least squares residuals take the same form:

$$e = y - \hat{y} = y - (b_1 + b_2 x_2 + b_3 x_3)$$

Thus the estimated variance of the disturbance (error) term is

$$\text{var}(e) = \frac{\sum e^2}{n-k} = \frac{\sum (y - \hat{y})^2}{n-k}$$

where k is the number of regression parameters being estimated.
Here k = 3, for estimating β₁, β₂, and β₃. Given the estimated regression equation from the example above,

$$\hat{y} = 118.9136 - 7.90785x_2 + 1.862584x_3$$

the predicted value of SALES (ŷ) for price x₂ = $6.20 and advertising x₃ = 3.0 ($3,000), which is observation #10, is:

$$\hat{y} = 118.9136 - 7.90785(6.2) + 1.862584(3) = 75.473$$

that is, $75,473. The observed value of sales is y₁₀ = 76.4, so the residual is e = 76.4 − 75.473 = 0.927. Computing such residuals for all 75 observations and summing their squares, the estimated variance and the standard error of estimate are, respectively,

$$\text{var}(e) = \frac{\sum (y - \hat{y})^2}{n-k} = \frac{1718.943}{72} = 23.874$$

$$\text{se}(e) = \sqrt{23.874} = 4.886$$

5. Sampling Properties of the Least Squares Estimators

As in simple regression, the least squares estimators (the coefficients of the estimated regression) are sample statistics obtained from a random sample. They are therefore random variables, each with its own expected value and variance. Like the simple regression coefficients, these estimators are BLUE (best linear unbiased estimators): b₁, b₂, and b₃ are each a linear function of the dependent variable y; they are unbiased, E(b₁) = β₁, E(b₂) = β₂, and E(b₃) = β₃; and var(b₁), var(b₂), and var(b₃) are the smallest variances attainable by any linear unbiased estimator.

Once again, linearity matters for inferences about the parameters of the regression. If the disturbance terms u, and correspondingly the y values, are normally distributed about the regression plane, then the coefficients, being linear functions of y, are also normally distributed. If the disturbance terms are not normal, the central limit theorem implies that the coefficients are approximately normal in large samples.

5.1. The Variances and Covariances of the Least Squares Estimators

Since the least squares estimators are random variables, their variances show how closely or widely they tend to scatter around their respective population parameters.
In a multiple regression model with three parameters β₁, β₂, and β₃, the variance of each estimator b₁, b₂, and b₃ is estimated from the sample data. We can determine the variances and covariances of the least squares estimators with elementary matrix algebra: multiplying the inverse matrix X⁻¹ determined above by var(e) gives the covariance matrix

$$\begin{bmatrix} \text{var}(b_1) & \text{covar}(b_1,b_2) & \text{covar}(b_1,b_3) \\ \text{covar}(b_1,b_2) & \text{var}(b_2) & \text{covar}(b_2,b_3) \\ \text{covar}(b_1,b_3) & \text{covar}(b_2,b_3) & \text{var}(b_3) \end{bmatrix} = \text{var}(e)\,\mathbf{X}^{-1}$$

$$\text{var}(e)\,\mathbf{X}^{-1} = 23.87421 \times
\begin{bmatrix} 1.6898 & -0.2846 & -0.0313 \\ -0.2846 & 0.0503 & -0.0008 \\ -0.0313 & -0.0008 & 0.0196 \end{bmatrix} =
\begin{bmatrix} 40.3433 & -6.7951 & -0.7484 \\ -6.7951 & 1.2012 & -0.0197 \\ -0.7484 & -0.0197 & 0.4668 \end{bmatrix}$$

From the covariance matrix on the far right we have the following variances and covariances:

$$\begin{aligned}
\text{var}(b_1) &= 40.3433 & \text{covar}(b_1,b_2) &= -6.7951 \\
\text{var}(b_2) &= 1.2012 & \text{covar}(b_1,b_3) &= -0.7484 \\
\text{var}(b_3) &= 0.4668 & \text{covar}(b_2,b_3) &= -0.0197
\end{aligned}$$

6. Inferences about the Population Regression Parameters

To build an interval estimate or perform a hypothesis test about the β's in the population regression, the least squares estimators of these parameters must be normally distributed. Normality holds if the disturbance terms, and correspondingly the y values for the various values of the explanatory variables, are normally distributed: because the least squares estimators are linear functions of y, they are then also normally distributed.

6.1. Interval Estimates for the Coefficients of the Regression

Following the same reasoning as in simple regression, the interval estimate for each β parameter at confidence level 1 − α takes the form

$$\begin{aligned}
\beta_1&: \quad L, U = b_1 \pm t_{\alpha/2,\,df}\,\text{se}(b_1) \\
\beta_2&: \quad L, U = b_2 \pm t_{\alpha/2,\,df}\,\text{se}(b_2) \\
\beta_3&: \quad L, U = b_3 \pm t_{\alpha/2,\,df}\,\text{se}(b_3)
\end{aligned}$$

where df = n − k. The standard error of each regression coefficient is the square root of the corresponding diagonal element of the variance-covariance matrix.
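Sections 4, 5.1, and 6.1 chain together: var(e) = SSE/(n − k), the covariance matrix var(e)X⁻¹, standard errors as square roots of its diagonal, and intervals b ± t·se. A minimal pure-Python sketch of that chain, using the ANOVA residual sum of squares and the X⁻¹ reported above; the critical value 1.993 is copied from the text rather than computed, since the Python standard library has no t-distribution quantile function.

```python
# Sketch: standard error of estimate, covariance matrix var(e) * X^-1,
# coefficient standard errors and 95% interval estimates (burger example).
import math

n, k = 75, 3             # observations, estimated parameters
sse = 1718.9429          # residual sum of squares (ANOVA table)
var_e = sse / (n - k)    # estimated error variance
se_e = math.sqrt(var_e)  # standard error of estimate

# X^-1 as returned by MINVERSE in section 3
X_inv = [[1.689828, -0.28462, -0.03135],
         [-0.28462, 0.050314, -0.00083],
         [-0.03135, -0.00083, 0.019551]]

# Covariance matrix: every element of X^-1 scaled by var(e)
cov = [[var_e * v for v in row] for row in X_inv]
# Standard errors are the square roots of the diagonal elements
se_b = [math.sqrt(cov[j][j]) for j in range(k)]

b = [118.91361, -7.90785, 1.86258]
t_crit = 1.993           # t(0.025, 72), copied from the text

intervals = [(bj - t_crit * sj, bj + t_crit * sj) for bj, sj in zip(b, se_b)]

print(f"var(e) = {var_e:.3f}, se(e) = {se_e:.3f}")
for name, bj, sj, (lo, hi) in zip(("b1", "b2", "b3"), b, se_b, intervals):
    print(f"{name} = {bj:9.4f}  se = {sj:.3f}  95% CI = [{lo:.3f}, {hi:.3f}]")
```

The printed intervals for b₂ and b₃ should agree with [−10.093, −5.723] and [0.501, 3.225] to within rounding.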
The 95% interval estimates for the slope coefficients in the example above are based on the following information:

$$b_1 = 118.914 \qquad b_2 = -7.908 \qquad b_3 = 1.863 \qquad t_{0.025,(72)} = 1.993$$

$$\text{se}(b_1) = 6.352 \qquad \text{se}(b_2) = 1.096 \qquad \text{se}(b_3) = 0.683$$

The 95% interval estimate for the response of SALES to a PRICE change, β₂, is

$$L, U = b_2 \pm t_{0.025,(n-3)}\,\text{se}(b_2) = -7.908 \pm (1.993)(1.096) = [-10.093,\ -5.723]$$

This implies that a $1 decrease in price will increase sales revenue by somewhere between $5,723 and $10,093.

The 95% interval estimate for the response of SALES to a change in ADVERT, β₃, is

$$L, U = b_3 \pm t_{0.025,(n-3)}\,\text{se}(b_3) = 1.863 \pm (1.993)(0.683) = [0.501,\ 3.225]$$

An increase in advertising expenditure of $1,000 would increase sales by between $501 and $3,225. This is a relatively wide interval, so it conveys little precise information about how sales respond to advertising. The width arises because the sampling variability of b₃ is large. One way to reduce this variability is to increase the sample size, but in many cases this is impractical because of limited data availability. An alternative is to introduce some kind of non-sample information about the coefficient, to be explained later.

6.2. Interval Estimate for the Mean Value of y for Given Values of xⱼ

The mean value of y for given values of the independent variables is estimated by ŷ₀, the predicted value of y for the given values x₀₂, x₀₃, .... The confidence interval for the mean value of y is therefore

$$L, U = \hat{y}_0 \pm t_{\alpha/2,(n-k)}\,\text{se}(\hat{y}_0)$$

To determine se(ŷ₀), start with var(ŷ₀).
For a model with two independent variables,

$$\hat{y}_0 = b_1 + b_2 x_{02} + b_3 x_{03}$$

$$\begin{aligned}
\text{var}(\hat{y}_0) &= \text{var}(b_1 + b_2 x_{02} + b_3 x_{03}) \\
&= \text{var}(b_1) + x_{02}^2\,\text{var}(b_2) + x_{03}^2\,\text{var}(b_3) + 2x_{02}\,\text{covar}(b_1,b_2) + 2x_{03}\,\text{covar}(b_1,b_3) + 2x_{02}x_{03}\,\text{covar}(b_2,b_3)
\end{aligned}$$

To build a 95% interval for the mean value of SALES in the model under consideration,

$$\widehat{SALES} = 118.914 - 7.908\,PRICE + 1.863\,ADVERT$$

let x₀₂ = PRICE₀ = 5 and x₀₃ = ADVERT₀ = 3. Then

$$\hat{y}_0 = 118.914 - 7.908(5) + 1.863(3) = 84.96$$

From the covariance matrix

$$\begin{bmatrix} 40.3433 & -6.7951 & -0.7484 \\ -6.7951 & 1.2012 & -0.0197 \\ -0.7484 & -0.0197 & 0.4668 \end{bmatrix}$$

we have

$$\text{var}(\hat{y}_0) = 40.3433 + (5^2)(1.2012) + (3^2)(0.4668) + 2(5)(-6.7951) + 2(3)(-0.7484) + 2(5)(3)(-0.0197) = 1.5407$$

(With the four-decimal entries shown, the sum evaluates to about 1.542; the small difference is rounding.)

$$\text{se}(\hat{y}_0) = \sqrt{1.5407} = 1.241$$

$$L, U = 84.96 \pm (1.993)(1.241) = [82.49,\ 87.44]$$

6.3. Prediction Interval for the Individual Value of y for a Given x

For given values x₀ⱼ of the independent variables, an individual value y₀ differs from the estimated mean value ŷ₀ by the prediction error y₀ − ŷ₀, which combines the random disturbance with the sampling error in ŷ₀. The variance of the prediction error is therefore

$$\text{var}(y_0 - \hat{y}_0) = \text{var}(e) + \text{var}(\hat{y}_0)$$

In the example above,

$$\text{var}(y_0 - \hat{y}_0) = 23.8742 + 1.5407 = 25.4149$$

$$\text{se}(y_0 - \hat{y}_0) = 5.0413$$

$$L, U = 84.96 \pm (1.993)(5.0413) = [74.91,\ 95.01]$$

6.4. Interval Estimation for a Linear Combination of Coefficients

In the example above, suppose we want to increase advertising expenditure by $800 and reduce the price by $0.40. Build a 95% interval estimate for the change in mean (expected) sales.
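Sections 6.2 and 6.3, and the change in mean sales just posed in 6.4, all rest on one quadratic form: for weights a, var(a₁b₁ + a₂b₂ + a₃b₃) = Σᵢ Σⱼ aᵢaⱼ covar(bᵢ, bⱼ), with covar(bᵢ, bᵢ) = var(bᵢ). The sketch below (pure Python; the helper names `lin_comb` and `interval` are hypothetical) evaluates it with the four-decimal covariance entries shown above, so its results can differ from the text in the third or fourth decimal place. The critical value is copied from the text.

```python
# Sketch: variance of a linear combination a1*b1 + a2*b2 + a3*b3 of the
# burger-model coefficients, used for the intervals in sections 6.2 to 6.4.
import math

# Covariance matrix var(e) * X^-1 from section 5.1 (four decimals)
V = [[40.3433, -6.7951, -0.7484],
     [-6.7951, 1.2012, -0.0197],
     [-0.7484, -0.0197, 0.4668]]
b = [118.914, -7.908, 1.863]
var_e = 23.8742
t_crit = 1.993  # t(0.025, 72), copied from the text


def lin_comb(a):
    """Return (estimate, variance) of sum(a[j] * b[j]); variance = a' V a."""
    estimate = sum(aj * bj for aj, bj in zip(a, b))
    variance = sum(a[i] * a[j] * V[i][j] for i in range(3) for j in range(3))
    return estimate, variance


def interval(estimate, se):
    """Two-sided 95% interval: estimate +/- t * se."""
    return estimate - t_crit * se, estimate + t_crit * se


# 6.2: mean SALES at PRICE = 5, ADVERT = 3 -> weights (1, 5, 3)
y0, var_mean = lin_comb([1, 5, 3])
ci_mean = interval(y0, math.sqrt(var_mean))

# 6.3: an individual SALES value also carries the error variance var(e)
ci_pred = interval(y0, math.sqrt(var_e + var_mean))

# 6.4: PRICE down 0.40 and ADVERT up 0.8 -> weights (0, -0.4, 0.8)
delta, var_delta = lin_comb([0, -0.4, 0.8])
ci_delta = interval(delta, math.sqrt(var_delta))

print(f"mean value: y0 = {y0:.2f}, CI = [{ci_mean[0]:.2f}, {ci_mean[1]:.2f}]")
print(f"individual: CI = [{ci_pred[0]:.2f}, {ci_pred[1]:.2f}]")
print(f"change:     {delta:.3f}, CI = [{ci_delta[0]:.3f}, {ci_delta[1]:.3f}]")
```

With weights (0, −0.2, −0.5), the same `lin_comb` gives a standard error of about 0.401, the figure used in Example 3 of section 6.5.2.3.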
The change in mean sales is:

$$\begin{aligned}
\Delta\hat{y} &= \hat{y}_1 - \hat{y}_0 \\
&= [b_1 + b_2(x_{02} - 0.4) + b_3(x_{03} + 0.8)] - [b_1 + b_2 x_{02} + b_3 x_{03}] \\
&= -0.4b_2 + 0.8b_3
\end{aligned}$$

The interval estimate is:

$$L, U = \Delta\hat{y} \pm t_{0.025,df}\,\text{se}(\Delta\hat{y}) = (-0.4b_2 + 0.8b_3) \pm t_{0.025,df}\,\text{se}(-0.4b_2 + 0.8b_3)$$

$$\begin{aligned}
\text{var}(-0.4b_2 + 0.8b_3) &= 0.4^2\,\text{var}(b_2) + 0.8^2\,\text{var}(b_3) - 2 \times 0.4 \times 0.8\,\text{covar}(b_2,b_3) \\
&= 0.4^2(1.2012) + 0.8^2(0.4668) - 2 \times 0.4 \times 0.8\,(-0.0197) \\
&= 0.5036
\end{aligned}$$

$$\text{se}(-0.4b_2 + 0.8b_3) = 0.7096$$

$$\Delta\hat{y} = -0.4b_2 + 0.8b_3 = -0.4(-7.908) + 0.8(1.8626) = 4.653$$

$$L, U = 4.653 \pm 1.993 \times 0.7096 = [3.239,\ 6.068]$$

We are 95% confident that the mean increase in sales will be between $3,239 and $6,068.

6.5. Hypothesis Testing for a Single Coefficient

6.5.1. Two-Tail Test of Significance

Computer output such as the Excel regression output generally reports the t test statistic for the null hypothesis H₀: βⱼ = 0 against H₁: βⱼ ≠ 0. If the null hypothesis is not rejected, we conclude that the data provide no significant evidence that the independent variable xⱼ influences y. The test statistic for such a test is

$$TS = |t| = \left|\frac{b_j}{\text{se}(b_j)}\right|$$

and, since this is a two-tail test, the critical value at significance level α is

$$CV = t_{\alpha/2,(n-k)}$$

For example,

H₀: β₂ = 0   H₁: β₂ ≠ 0

$$TS = \frac{7.908}{1.096} = 7.215, \qquad CV = t_{0.025,(72)} = 1.993$$

Since TS > CV, we reject the null hypothesis and conclude that sales revenue is related to price. Equivalently, the p-value of the test statistic is 2 × P(t > 7.215) ≈ 0, which is less than α = 0.05, so we reject the null.

The test for β₃ is as follows:

H₀: β₃ = 0   H₁: β₃ ≠ 0

$$TS = \frac{1.86258}{0.68320} = 2.726, \qquad CV = 1.993$$

Since TS > CV, we reject the null hypothesis and conclude that sales revenue is related to advertising expenditure. The p-value of the test statistic is 2 × P(t > 2.726) = 0.0080. All these figures appear in the Excel regression output shown above.

6.5.2. One-Tailed Tests

We use the current example to illustrate one-tailed tests of hypotheses in regression.

6.5.2.1. Example 1: Testing for Price Elasticity of Demand

Suppose we want to test the hypothesis that demand is price inelastic against the alternative that demand is price elastic. According to the total-revenue test of elasticity, if demand is inelastic, a decrease in price leads to a decrease in revenue; if demand is elastic, a decrease in price leads to an increase in revenue.

H₀: β₂ ≥ 0 (When demand is price inelastic, price and revenue change in the same direction.)

H₁: β₂ < 0 (When demand is price elastic, price and revenue change in opposite directions.)

Note that here we give the benefit of the doubt to inelasticity: if demand is elastic, we want strong evidence of that. Also note that the regression result already provides some evidence that demand is elastic (b₂ = −7.908 < 0). But is this evidence significant? The test statistic is

$$TS = \frac{b_2 - (\beta_2)_0}{\text{se}(b_2)}$$

Evaluating the test at the boundary of the null hypothesis, (β₂)₀ = 0, the test statistic simplifies to TS = b₂/se(b₂). Because this is a one-tailed (left-tail) test, the critical value is the negative of t with α in one tail:

$$TS = \frac{-7.908}{1.096} = -7.215 < CV = -t_{0.05,(72)} = -1.666$$

We therefore reject the null hypothesis and conclude that there is strong evidence that β₂ is negative, and hence that demand is elastic.

6.5.2.2. Example 2: Testing for Effectiveness of Advertising

We can also test the effectiveness of advertising. Does an increase in advertising expenditure raise total revenue by more than the amount spent on the advertising? Since SALES and ADVERT are both measured in $1,000s, the question is whether ∂E(y)/∂x₃ > 1. Again, the sample regression gives b₃ = 1.863 > 1, but we want to test whether this evidence is significant.
Thus,

H₀: β₃ ≤ 1   H₁: β₃ > 1

The test statistic is

$$t = \frac{b_3 - (\beta_3)_0}{\text{se}(b_3)} = \frac{1.863 - 1}{0.683} = 1.263$$

Since

$$t = 1.263 < CV = t_{0.05,(72)} = 1.666$$

we do not reject the null hypothesis. The p-value for the test is P(t > 1.263) = 0.1053, which exceeds α = 0.05. The test does not provide significant evidence that advertising is effective; that is, β₃ is not significantly greater than 1.

6.5.2.3. Example 3: Testing for a Linear Combination of Coefficients

Test the hypothesis that dropping the price by $0.20 will be more effective for increasing sales revenue than increasing advertising expenditure by $500, that is:

$$-0.2\beta_2 > 0.5\beta_3$$

Note that the estimated regression gives −0.2b₂ = 1.582 > 0.5b₃ = 0.931. The test asks whether this difference is significant. The null and alternative hypotheses are therefore:

$$H_0: -0.2\beta_2 - 0.5\beta_3 \le 0 \qquad H_1: -0.2\beta_2 - 0.5\beta_3 > 0$$

The test statistic is

$$t = \frac{-0.2b_2 - 0.5b_3 - (-0.2\beta_2 - 0.5\beta_3)_0}{\text{se}(-0.2b_2 - 0.5b_3)}$$

which, at the null boundary value of zero, becomes

$$TS = \frac{-0.2b_2 - 0.5b_3}{\text{se}(-0.2b_2 - 0.5b_3)}$$

The task here is to find the standard error of the linear combination of the two coefficients in the denominator. First determine var(−0.2b₂ − 0.5b₃):

$$\text{var}(-0.2b_2 - 0.5b_3) = (-0.2)^2\,\text{var}(b_2) + (-0.5)^2\,\text{var}(b_3) + 2(-0.2)(-0.5)\,\text{covar}(b_2,b_3)$$

From the covariance matrix above, we obtain:

$$\begin{aligned}
\text{var}(-0.2b_2 - 0.5b_3) &= (0.2)^2(1.2012) + (0.5)^2(0.4668) + 2(0.2)(0.5)(-0.0197) = 0.1608 \\
\text{se}(-0.2b_2 - 0.5b_3) &= 0.4010
\end{aligned}$$

Then

$$t = \frac{(-0.2)(-7.9079) - (0.5)(1.8626)}{0.4010} = 1.622, \qquad CV = t_{0.05,(72)} = 1.666$$

Since t = 1.622 < CV = 1.666, we do not reject the null hypothesis. The p-value for the test is P(t > 1.622) = 0.055, which exceeds α = 0.05. The test does not provide significant evidence that −0.2β₂ > 0.5β₃.

7. Extension of the Regression Model

We can extend a regression model by transforming the existing independent variables and treating the results as new variables. Let's use the current example of the sales, price, and advertising expenditure model.
We want to address the fact that sales do not rise indefinitely, at a constant rate, in response to increases in advertising expenditure. As advertising expenditure rises, revenues may rise at a decreasing (rather than a constant) rate, implying diminishing returns to advertising. One way to capture diminishing returns is to include the squared value of advertising, x₃², in the model:

$$y = \beta_1 + \beta_2 x_2 + \beta_3 x_3 + \beta_4 x_3^2 + u$$

$$E(y) = \beta_1 + \beta_2 x_2 + \beta_3 x_3 + \beta_4 x_3^2$$

Thus,

$$\frac{\partial E(y)}{\partial x_3} = \beta_3 + 2\beta_4 x_3$$

We expect revenues to increase with additional advertising expenditure; therefore β₃ > 0. We also expect the rate of increase in revenues to fall as advertising increases; therefore β₄ < 0. With these properties in mind, we can treat x₃² as a new variable, x₄. Using the same data, the Excel regression output is shown below.

SUMMARY OUTPUT

| Regression Statistics | |
|---|---|
| Multiple R | 0.7129061 |
| R Square | 0.5082352 |
| Adjusted R Square | 0.4874564 |
| Standard Error | 4.645283 |
| Observations | 75 |

| ANOVA | df | SS | MS | F | Significance F |
|---|---|---|---|---|---|
| Regression | 3 | 1583.397408 | 527.79914 | 24.459316 | 5.59996E-11 |
| Residual | 71 | 1532.084459 | 21.578654 | | |
| Total | 74 | 3115.481867 | | | |

| | Coefficients | Standard Error | t Stat | P-value | Lower 95% | Upper 95% |
|---|---|---|---|---|---|---|
| Intercept | 109.719 | 6.799 | 16.137 | 1.87E-25 | 96.162 | 123.276 |
| PRICE | -7.640 | 1.046 | -7.304 | 3.236E-10 | -9.726 | -5.554 |
| ADVERT | 12.151 | 3.556 | 3.417 | 0.0010516 | 5.060 | 19.242 |
| ADVERT² | -2.768 | 0.941 | -2.943 | 0.0043927 | -4.644 | -0.892 |

From the regression table, the coefficient of ADVERT² is b₄ = −2.768. It has the anticipated sign, and it is also significantly different from zero (p-value = 0.0044 < 0.05). There are diminishing returns to advertising.

To interpret the role of the coefficients b₃ = 12.151 and b₄ = −2.768, consider the following table, where ŷ (SALES) is computed for a fixed value of x₂ (PRICE) = $5 and for various values of x₃ (ADVERT).
Starting from (x₃)₀ = 1.8 and increasing advertising by the increment Δx₃ = 0.10 to x₃ = 1.9, predicted sales increase by Δŷ = 0.19 (that is, $190). As advertising expenditure is increased again by the same increment, the increase in predicted sales falls to 0.14 and then to 0.08.

| x₃ | ŷ | Δŷ |
|---|---|---|
| 1.8 | 84.42 | |
| 1.9 | 84.61 | 0.19 |
| 2.0 | 84.75 | 0.14 |
| 2.1 | 84.83 | 0.08 |

7.1. The Optimal Level of Advertising

What is the optimal level of advertising? In economics, optimality requires that the marginal benefit of an action equal its marginal cost. If marginal benefit exceeds marginal cost, the action should be expanded; if marginal benefit is less than marginal cost, the action should be curtailed. The optimum is therefore where the two are equal. The marginal benefit of advertising is the contribution of each additional $1 (thousand) of advertising expenditure to total revenue. From the model

$$y = \beta_1 + \beta_2 x_2 + \beta_3 x_3 + \beta_4 x_3^2 + u$$

the marginal benefit of advertising is:

$$\frac{\partial E(y)}{\partial x_3} = \beta_3 + 2\beta_4 x_3$$

Ignoring the cost of producing the additional sales, the marginal cost of advertising is simply each additional $1 (thousand) spent on it. Thus, the optimality requirement MB = MC is:

$$\beta_3 + 2\beta_4 x_3 = 1$$

Using the estimated least squares coefficients, we have:

$$12.151 + 2(-2.768)x_3 = 1$$

which yields x₃ = 2.014, that is, about $2,014 of advertising.

We want to build an interval estimate for the optimal level of advertising:

$$(x_3)_o = \frac{1 - \beta_3}{2\beta_4}$$

Note that the sample statistic (1 − b₃)/(2b₄) is a nonlinear function of the two coefficients b₃ and b₄. Therefore, we cannot use the formula we used for the variance of a linear combination of coefficients. The (approximate) variance of a nonlinear function of two random variables is obtained using the delta method.
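The delta-method calculation worked out below for d = (1 − b₃)/(2b₄) can be sketched in a few lines of plain Python. The inputs are copied from the quadratic-model output and from the expanded covariance matrix derived below (12.646, 0.885, −3.289), and t = 1.994 is taken from the text. Because var(d) is a small difference of much larger terms, rounding in these inputs moves the last digits, so expect se(d) near 0.129 rather than exactly 0.12872.

```python
# Sketch: delta-method standard error for the optimal advertising level
# d = (1 - b3) / (2 * b4) in the quadratic-advertising model.
import math

b3, b4 = 12.151, -2.768          # ADVERT and ADVERT^2 coefficients
var_b3, var_b4 = 12.646, 0.885   # diagonal entries of var(e) * X^-1
cov_b3_b4 = -3.289               # off-diagonal entry
t_crit = 1.994                   # t(0.025, 71), copied from the text

d = (1 - b3) / (2 * b4)          # optimal ADVERT, in $1,000s

# Partial derivatives of d with respect to b3 and b4
dd_db3 = -1 / (2 * b4)
dd_db4 = -(1 - b3) / (2 * b4 ** 2)

# First-order (delta-method) approximation of var(d)
var_d = (dd_db3 ** 2 * var_b3
         + dd_db4 ** 2 * var_b4
         + 2 * dd_db3 * dd_db4 * cov_b3_b4)
se_d = math.sqrt(var_d)
lo, hi = d - t_crit * se_d, d + t_crit * se_d

print(f"d = {d:.3f}, var(d) = {var_d:.5f}, se(d) = {se_d:.4f}")
print(f"95% CI: ({lo:.3f}, {hi:.3f})")
```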
Let

$$d = \frac{1 - b_3}{2b_4}$$

Then

$$\text{var}(d) = \left(\frac{\partial d}{\partial b_3}\right)^2 \text{var}(b_3) + \left(\frac{\partial d}{\partial b_4}\right)^2 \text{var}(b_4) + 2\left(\frac{\partial d}{\partial b_3}\right)\left(\frac{\partial d}{\partial b_4}\right)\text{covar}(b_3, b_4)$$

Using the partial derivatives

$$\frac{\partial d}{\partial b_3} = -\frac{1}{2b_4} \qquad \text{and} \qquad \frac{\partial d}{\partial b_4} = -\frac{1 - b_3}{2b_4^2}$$

we have

$$\text{var}(d) = \left(-\frac{1}{2b_4}\right)^2 \text{var}(b_3) + \left(-\frac{1 - b_3}{2b_4^2}\right)^2 \text{var}(b_4) + 2\left(-\frac{1}{2b_4}\right)\left(-\frac{1 - b_3}{2b_4^2}\right)\text{covar}(b_3, b_4)$$

We can obtain var(b₃) and var(b₄) by squaring the standard errors from the regression output. Unfortunately, the Excel regression output does not report the covariance. We can, however, still use Excel to compute covar(b₃, b₄). Recall that matrix algebra gives the variance-covariance matrix var(e)X⁻¹. Adding x₃² to the model, we now have three independent variables. The extension is simple because the X matrix can be expanded to incorporate any number of independent variables. For a model with three independent variables (x₂, x₃, and x₄ = x₃²) we have

$$\mathbf{X} = \begin{bmatrix} n & \sum x_2 & \sum x_3 & \sum x_4 \\ \sum x_2 & \sum x_2^2 & \sum x_2 x_3 & \sum x_2 x_4 \\ \sum x_3 & \sum x_2 x_3 & \sum x_3^2 & \sum x_3 x_4 \\ \sum x_4 & \sum x_2 x_4 & \sum x_3 x_4 & \sum x_4^2 \end{bmatrix}$$

Using Excel we compute these quantities as the elements of the X matrix:

$$\mathbf{X} = \begin{bmatrix} 75.0 & 426.5 & 138.3 & 306.2 \\ 426.5 & 2445.7 & 787.4 & 1746.5 \\ 138.3 & 787.4 & 306.2 & 755.0 \\ 306.2 & 1746.5 & 755.0 & 1982.6 \end{bmatrix}$$

Then we determine the inverse matrix X⁻¹:

$$\mathbf{X}^{-1} = \begin{bmatrix} 2.1423 & -0.2978 & -0.5376 & 0.1362 \\ -0.2978 & 0.0507 & 0.0139 & -0.0040 \\ -0.5376 & 0.0139 & 0.5861 & -0.1524 \\ 0.1362 & -0.0040 & -0.1524 & 0.0410 \end{bmatrix}$$

and find the variance-covariance matrix as the product var(e)X⁻¹ = 21.579X⁻¹:

$$\text{var}(e)\,\mathbf{X}^{-1} = \begin{bmatrix} 46.227 & -6.426 & -11.601 & 2.939 \\ -6.426 & 1.094 & 0.300 & -0.086 \\ -11.601 & 0.300 & 12.646 & -3.289 \\ 2.939 & -0.086 & -3.289 & 0.885 \end{bmatrix}$$

Thus, with b₃ = 12.151 and b₄ = −2.768,

$$\frac{\partial d}{\partial b_3} = -\frac{1}{2(-2.768)} = 0.18064, \qquad \frac{\partial d}{\partial b_4} = -\frac{1 - 12.151}{2(-2.768)^2} = 0.72773$$

$$\begin{aligned}
\text{var}(d) &= (0.18064)^2(12.646) + (0.72773)^2(0.885) + 2(0.18064)(0.72773)(-3.289) \\
&= 0.41265 + 0.46857 + 2(0.18064)(0.72773)(-3.28875) \approx 0.01657
\end{aligned}$$

$$\text{se}(d) = 0.12872$$

The 95% confidence interval for the optimal level of advertising then is

$$L, U = d \pm t_{\alpha/2,(n-4)}\,\text{se}(d) = 2.014 \pm (1.994)(0.12872) = (1.757,\ 2.271)$$

that is, between about $1,757 and $2,271.

7.2. Interaction Variables

An example that illustrates the use of interaction variables is the life-cycle model of consumption behavior. Here the model describes the response of PIZZA consumption (y) to the AGE (x₂) and INCOME (x₃) of the consumer. First consider the simple model without the interaction variable:

$$y = \beta_1 + \beta_2 x_2 + \beta_3 x_3 + u$$

Using the data in the Excel file "CH6 DATA", tab "pizza", the estimated regression equation is

$$\hat{y} = 342.88 - 7.576x_2 + 1.832x_3$$

Thus, holding INCOME constant, each additional year of AGE decreases expenditure on PIZZA by $7.58:

$$b_2 = \frac{\partial \hat{y}}{\partial x_2} = -7.576$$

And, holding AGE constant, each additional $1,000 of INCOME increases expenditure on PIZZA by $1.83.

However, we would not expect people of different ages to spend similar amounts on pizza out of each additional $1,000 of income. It is reasonable to expect older persons to spend less of their additional income on pizza than younger persons. Thus, we expect an interaction between the age and income variables. This gives rise to an interaction variable in the model, represented by the product of AGE and INCOME, AGE × INCOME (x₂x₃):

$$y = \beta_1 + \beta_2 x_2 + \beta_3 x_3 + \beta_4 x_2 x_3 + u$$

The estimated regression equation is now:

$$\hat{y} = 161.465 - 2.977x_2 + 6.980x_3 - 0.123x_2 x_3$$

$$\frac{\partial \hat{y}}{\partial x_2} = b_2 + b_4 x_3 = -2.977 - 0.123x_3$$

$$\frac{\partial \hat{y}}{\partial x_3} = b_3 + b_4 x_2 = 6.98 - 0.123x_2$$

For x₃ = 30 ($1,000):

$$\frac{\partial \hat{y}}{\partial x_2} = -2.977 - 0.123(30) = -6.67$$

For x₂ = 25 years:

$$\frac{\partial \hat{y}}{\partial x_3} = 6.98 - 0.123(25) = 3.90$$

When income is $30,000, each additional year of age reduces expenditure on pizza by $6.67. When age is 25, each additional $1,000 of income increases expenditure on pizza by $3.90.
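The two marginal-effect formulas above are easy to evaluate at any age or income. A plain-Python sketch using the rounded coefficients of the interaction model (the function names are hypothetical): for INCOME = 30 and AGE = 25 it gives −6.667 and 3.905, matching the values above; for INCOME = 80 and AGE = 50 it gives −12.817 and 0.830, slightly different from the −12.84 and 0.82 reported next, presumably because those come from unrounded estimates.

```python
# Sketch: marginal effects in the PIZZA model with an AGE x INCOME term,
# yhat = 161.465 - 2.977*AGE + 6.980*INCOME - 0.123*AGE*INCOME (rounded).
b2, b3, b4 = -2.977, 6.980, -0.123


def effect_of_age(income):
    """d yhat / d AGE = b2 + b4 * INCOME, with INCOME in $1,000s."""
    return b2 + b4 * income


def effect_of_income(age):
    """d yhat / d INCOME = b3 + b4 * AGE, with AGE in years."""
    return b3 + b4 * age


for income in (30, 80):
    print(f"INCOME = {income}: d yhat / d AGE = {effect_of_age(income):.3f}")
for age in (25, 50):
    print(f"AGE = {age}: d yhat / d INCOME = {effect_of_income(age):.3f}")
```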
For x₃ = 80 ($1,000):

$$\frac{\partial \hat{y}}{\partial x_2} = -2.977 - 0.123(80) = -12.84$$

For x₂ = 50 years:

$$\frac{\partial \hat{y}}{\partial x_3} = 6.98 - 0.123(50) = 0.82$$

(With the rounded coefficients shown, the arithmetic gives −12.82 and 0.83; the reported values presumably reflect unrounded estimates.)

When income is $80,000, each additional year of age reduces expenditure on pizza by $12.84. When age is 50, each additional $1,000 of income increases expenditure on pizza by $0.82.

7.3. Log-Linear Model

Let's start with a model in which the natural logarithm of WAGE (y) is a stochastic linear function of two independent variables, EDUCATION (x₂) and years of EXPERIENCE (x₃), so that each slope coefficient measures the approximate proportional change in WAGE for a one-unit change in its variable:

$$\ln(y) = \beta_1 + \beta_2 x_2 + \beta_3 x_3 + u$$

Now, if we believe that the effect of each additional year of experience also depends on the level of education, we may include the interaction variable EDUCATION × EXPERIENCE (x₂x₃) in the model as a third variable:

$$\ln(y) = \beta_1 + \beta_2 x_2 + \beta_3 x_3 + \beta_4 x_2 x_3 + u$$

Using the data in the tab "wage", the estimated regression equation is

$$\widehat{\ln(y)} = 1.3923 + 0.09494x_2 + 0.006329514x_3 - 0.0000364x_2 x_3$$

The effect of another year of EDUCATION, holding EXPERIENCE constant, is

$$\frac{1}{\hat{y}}\frac{\partial \hat{y}}{\partial x_2} = b_2 + b_4 x_3$$

For example, given EXPERIENCE x₃ = 5 years, the increase in WAGE from an extra year of EDUCATION is 9.48%:

$$\frac{\partial \hat{y}/\partial x_2}{\hat{y}} = 0.09494 - 0.000036(5) = 0.09476 \quad (9.476\%)$$

At a higher level of EXPERIENCE, say x₃ = 10 years, the percentage increase in WAGE for an additional year of EDUCATION decreases slightly to 9.46%:

$$\frac{\partial \hat{y}/\partial x_2}{\hat{y}} = 0.09494 - 0.000036(10) = 0.09458 \quad (9.458\%)$$

Note that these percentage changes in WAGE refer to a very small (marginal) change in EDUCATION. The results of a discrete change in EDUCATION, where Δx₂ = 1, are shown in the following table. They show that at a higher level of EXPERIENCE, an additional year of education has a slightly smaller impact on WAGE.
| | Intercept | EDUC (x₂) | EXPER (x₃) | EDEX (x₂x₃) | fitted ln(y) | ŷ | Δŷ | (Δŷ/ŷ)% |
|---|---|---|---|---|---|---|---|---|
| bⱼ | 1.39232 | 0.09494 | 0.00633 | -0.000036 | | | | |
| A: x₀ | 1 | 16 | 5 | 80 | 2.94 | 18.917 | | |
| A: x₁ | 1 | 17 | 5 | 85 | 3.03 | 20.797 | 1.880 | 9.94% |
| B: x₀ | 1 | 16 | 10 | 160 | 2.97 | 19.469 | | |
| B: x₁ | 1 | 17 | 10 | 170 | 3.06 | 21.400 | 1.931 | 9.92% |

The effect of another year of EXPERIENCE, holding EDUCATION constant, is

$$\frac{1}{\hat{y}}\frac{\partial \hat{y}}{\partial x_3} = b_3 + b_4 x_2$$

Holding EDUCATION constant at x₂ = 8,

$$\frac{\partial \hat{y}/\partial x_3}{\hat{y}} = 0.00633 - 0.000036(8) = 0.00604 \quad (0.604\%)$$

Holding EDUCATION constant at x₂ = 16,

$$\frac{\partial \hat{y}/\partial x_3}{\hat{y}} = 0.00633 - 0.000036(16) = 0.00575 \quad (0.575\%)$$

The results of a discrete change in EXPERIENCE, where Δx₃ = 1, are shown in the following table.

| | Intercept | EDUC (x₂) | EXPER (x₃) | EDEX (x₂x₃) | fitted ln(y) | ŷ | Δŷ | (Δŷ/ŷ)% |
|---|---|---|---|---|---|---|---|---|
| bⱼ | 1.39232 | 0.09494 | 0.00633 | -0.000036 | | | | |
| A: x₀ | 1 | 8 | 10 | 80 | 2.212 | 9.136 | | |
| A: x₁ | 1 | 8 | 11 | 88 | 2.218 | 9.192 | 0.055 | 0.617% |
| B: x₀ | 1 | 16 | 10 | 160 | 2.969 | 19.469 | | |
| B: x₁ | 1 | 16 | 11 | 176 | 2.975 | 19.585 | 0.116 | 0.598% |

The greater the number of years of education, the less valuable an extra year of experience is.

8. Measuring Goodness of Fit—R²

The coefficient of determination R² in a multiple regression measures the combined effect of all the independent variables on y: it is the proportion of the total variation in y that is explained by the regression model.

$$R^2 = \frac{SSR}{SST} = \frac{\sum (\hat{y} - \bar{y})^2}{\sum (y - \bar{y})^2}$$

These quantities are easily calculated, and they also appear in the ANOVA part of the regression output for the BURGER example:

| ANOVA | df | SS |
|---|---|---|
| Regression | 2 | 1396.539 |
| Residual | 72 | 1718.943 |
| Total | 74 | 3115.482 |

$$R^2 = \frac{1396.539}{3115.482} = 0.4483$$

This implies that nearly 45% of the variation in y (monthly sales) is explained by the variation in price and advertising expenditure. In Chapter 3 it was shown that R² is a measure of goodness of fit, that is, of how well the estimated regression fits the data:

$$R^2 = r_{y\hat{y}}^2$$

The same argument applies here. A high R² value means there is a close association between the predicted and observed values of y.
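The R² arithmetic, together with the adjusted R² introduced in section 8.1, needs only the ANOVA sums of squares. A plain-Python sketch with hypothetical helper names, applied to the two burger models in this chapter (PRICE and ADVERT, k = 3; and the same model plus ADVERT², k = 4):

```python
# Sketch: R^2 and adjusted R^2 from ANOVA sums of squares.


def r_squared(sse, sst):
    """Share of the total variation explained: 1 - SSE/SST."""
    return 1 - sse / sst


def adjusted_r_squared(sse, sst, n, k):
    """1 - [SSE/(n - k)] / [SST/(n - 1)]; k counts all estimated parameters."""
    return 1 - (sse / (n - k)) / (sst / (n - 1))


n, sst = 75, 3115.4819

# Burger model with PRICE and ADVERT (k = 3)
r2_base = r_squared(1718.9429, sst)
ra2_base = adjusted_r_squared(1718.9429, sst, n, 3)

# Same model plus ADVERT^2 (k = 4)
r2_quad = r_squared(1532.0845, sst)
ra2_quad = adjusted_r_squared(1532.0845, sst, n, 4)

print(f"k = 3: R2 = {r2_base:.4f}, adjusted R2 = {ra2_base:.4f}")
print(f"k = 4: R2 = {r2_quad:.4f}, adjusted R2 = {ra2_quad:.4f}")
```

Both pairs agree with the R Square and Adjusted R Square lines of the two Excel outputs (0.4483 and 0.4329; 0.5082 and 0.4875).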
8.1. Adjusted R²

In multiple regression, R² is affected by the number of independent variables: as we add explanatory variables to the model, R² never falls and usually rises. This can artificially "improve" the model. To see this, consider the regression model

$$\hat{y} = b_1 + b_2 x_2 + b_3 x_3$$

and the formula

$$R^2 = 1 - \frac{\sum (y - \hat{y})^2}{\sum (y - \bar{y})^2}$$

If we add another variable to the regression model, the quantity SSE = Σ(y − ŷ)² cannot increase (it usually becomes smaller), so R² = 1 − SSE/SST becomes larger. An alternative measure devised to address this problem is the adjusted R²:

$$R_A^2 = 1 - \frac{SSE/(n-k)}{SST/(n-1)}$$

For our example,

$$R_A^2 = 1 - \frac{1718.943/72}{3115.482/74} = 0.4329$$

This figure is shown in the computer regression output right below the regular R².

The adjusted measure comes from R² = 1 − SSE/SST by dividing the numerator and the denominator of the quotient on the right-hand side by their respective degrees of freedom. To show why R²_A does not necessarily increase as k (the number of estimated parameters) increases, we can rewrite it as

$$R_A^2 = 1 - \frac{SSE}{SST}\cdot\frac{n-1}{n-k} = 1 - (1 - R^2)\frac{n-1}{n-k}$$

As k increases, the factor (n − 1)/(n − k) rises, which pushes R²_A down; adding a variable raises R²_A only if it reduces SSE enough to offset this penalty.

Appendix: A Brief Introduction to Matrix Algebra

1. Introduction
1.1. The Algebra of Matrices
1.1.1. Addition and Subtraction of Matrices
1.1.1.1. Scalar Multiplication
1.1.1.2. Matrix Multiplication
1.1.2. Identity Matrices
1.1.3. Transpose of a Matrix
1.1.4. Inverse of a Matrix
1.1.4.1. How to Find the Inverse of a Matrix
1.1.4.1.1. The Determinant of a Matrix
1.1.4.1.2. Properties of Determinants
1.1.5. How to Use the Inverse of Matrix A to Find the Solutions for an Equation System

1. Introduction

Matrix algebra enables us to write an equation system in a compact way, to develop a method for testing whether a solution exists by evaluating a "determinant", and to devise a method for finding the solution.
For example, consider the following equation system with three variables $x_1$, $x_2$, and $x_3$:

$$\begin{aligned} 6x_1 + 3x_2 + 1x_3 &= 22 \\ 1x_1 + 4x_2 - 2x_3 &= 12 \\ 4x_1 - 1x_2 + 5x_3 &= 10 \end{aligned}$$

This equation system can be written in matrix format as follows:

$$\begin{bmatrix} 6 & 3 & 1 \\ 1 & 4 & -2 \\ 4 & -1 & 5 \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix} = \begin{bmatrix} 22 \\ 12 \\ 10 \end{bmatrix}$$

The lead matrix on the left-hand side is the coefficient matrix, denoted by $A$. The lag matrix is the variable matrix $x$, and the matrix on the right-hand side is the matrix of constant terms, $d$:

$$A = \begin{bmatrix} 6 & 3 & 1 \\ 1 & 4 & -2 \\ 4 & -1 & 5 \end{bmatrix} \qquad x = \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix} \qquad d = \begin{bmatrix} 22 \\ 12 \\ 10 \end{bmatrix}$$

Thus, the shorthand version of the equation system is:

$$Ax = d$$

Any given matrix is characterized by its dimension: the number of rows ($m$) and the number of columns ($n$). The dimension of $A$ is $3 \times 3$; the dimensions of $x$ and $d$ are both $3 \times 1$.

1.1. The Algebra of Matrices

1.1.1. Addition and subtraction of matrices

Two matrices can be added (or subtracted) if and only if they are conformable for addition, that is, they have the same dimension. The operation is carried out element by element. For example:

$$A = \begin{bmatrix} 4 & 5 \\ 6 & 12 \end{bmatrix} \qquad B = \begin{bmatrix} 6 & 10 \\ 3 & 15 \end{bmatrix} \qquad A + B = \begin{bmatrix} 4+6 & 5+10 \\ 6+3 & 12+15 \end{bmatrix} = \begin{bmatrix} 10 & 15 \\ 9 & 27 \end{bmatrix}$$

$$C = \begin{bmatrix} 6 & 8 \\ 12 & 19 \end{bmatrix} \qquad D = \begin{bmatrix} 8 & 5 \\ 3 & 11 \end{bmatrix} \qquad C - D = \begin{bmatrix} 6-8 & 8-5 \\ 12-3 & 19-11 \end{bmatrix} = \begin{bmatrix} -2 & 3 \\ 9 & 8 \end{bmatrix}$$

1.1.1.1. Scalar Multiplication

When every element of a matrix is multiplied by a number (a scalar), we are performing a scalar multiplication.

$$4\begin{bmatrix} 4 & 5 \\ 6 & 12 \end{bmatrix} = \begin{bmatrix} 16 & 20 \\ 24 & 48 \end{bmatrix}$$

1.1.1.2. Matrix Multiplication

To multiply two matrices, they must be conformable for multiplication. This requires that the number of columns of the lead matrix equal the number of rows of the lag matrix. For example, let the dimension of $A$ be $3 \times 2$ and that of $B$ be $2 \times 1$. Then $A$ and $B$ are conformable for multiplication because $A$ has two columns and $B$ has two rows. The resulting product matrix has dimension $3 \times 1$:

$$\underset{(3\times 2)}{A}\;\underset{(2\times 1)}{B} = \underset{(3\times 1)}{AB}$$

The following example shows how the multiplication rule applies to two matrices.
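$$A = \begin{bmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \\ a_{31} & a_{32} \end{bmatrix} \qquad B = \begin{bmatrix} b_{11} \\ b_{21} \end{bmatrix} \qquad AB = \begin{bmatrix} a_{11}b_{11} + a_{12}b_{21} \\ a_{21}b_{11} + a_{22}b_{21} \\ a_{31}b_{11} + a_{32}b_{21} \end{bmatrix}$$

$$A = \begin{bmatrix} 5 & 4 \\ 6 & 1 \\ 2 & 9 \end{bmatrix} \qquad B = \begin{bmatrix} 7 \\ 5 \end{bmatrix} \qquad AB = \begin{bmatrix} 5(7) + 4(5) \\ 6(7) + 1(5) \\ 2(7) + 9(5) \end{bmatrix} = \begin{bmatrix} 55 \\ 47 \\ 59 \end{bmatrix}$$

Each element of the product is the sum of the products of the elements in a row of the lead matrix and the corresponding elements in a column of the lag matrix.

Note that if $B$ is used as the lead matrix and $A$ as the lag matrix, the two are no longer conformable for multiplication: $B$ is $2 \times 1$ and $A$ is $3 \times 2$, so the number of columns of the lead matrix (1) is not the same as the number of rows of the lag matrix (3). Even when switching the lead and lag matrices preserves conformability, the resulting product matrix is in general not the same. That is, $AB \neq BA$: matrix multiplication is not commutative. Another example:

$$\underset{(2\times 3)}{A} = \begin{bmatrix} 1 & 2 & -1 \\ 3 & 1 & 4 \end{bmatrix} \qquad \underset{(3\times 2)}{B} = \begin{bmatrix} -2 & 5 \\ 4 & -3 \\ 2 & 1 \end{bmatrix}$$

$$\underset{(2\times 2)}{AB} = \begin{bmatrix} 4 & -2 \\ 6 & 16 \end{bmatrix} \qquad \underset{(3\times 3)}{BA} = \begin{bmatrix} 13 & 1 & 22 \\ -5 & 5 & -16 \\ 5 & 5 & 2 \end{bmatrix}$$

Also note that we have used the matrix multiplication rule to write an equation system in matrix format:

$$\begin{bmatrix} 6 & 3 & 1 \\ 1 & 4 & -2 \\ 4 & -1 & 5 \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix} = \begin{bmatrix} 22 \\ 12 \\ 10 \end{bmatrix} \quad\rightarrow\quad \begin{aligned} 6x_1 + 3x_2 + 1x_3 &= 22 \\ 1x_1 + 4x_2 - 2x_3 &= 12 \\ 4x_1 - 1x_2 + 5x_3 &= 10 \end{aligned}$$

$$\underset{(3\times 3)}{A}\;\underset{(3\times 1)}{x} = \underset{(3\times 1)}{d}$$

1.1.2. Identity Matrices

An identity matrix is a matrix with the same number of rows and columns (a square matrix) that has 1's on its principal diagonal and 0's everywhere else. The identity matrix is denoted by $I$. A numeric subscript, if shown, indicates the dimension.

$$I_2 = \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix} \qquad I_3 = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}$$

Pre- or post-multiplying a matrix by a conformable identity matrix leaves the matrix unchanged:

$$AI = IA = A$$

Example:

$$A = \begin{bmatrix} 2 & 4 & 6 \\ 3 & 2 & 3 \\ 1 & 4 & 9 \end{bmatrix}$$

$$AI = \begin{bmatrix} 2 & 4 & 6 \\ 3 & 2 & 3 \\ 1 & 4 & 9 \end{bmatrix}\begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix} = \begin{bmatrix} 2 & 4 & 6 \\ 3 & 2 & 3 \\ 1 & 4 & 9 \end{bmatrix} = A$$

$$IA = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}\begin{bmatrix} 2 & 4 & 6 \\ 3 & 2 & 3 \\ 1 & 4 & 9 \end{bmatrix} = \begin{bmatrix} 2 & 4 & 6 \\ 3 & 2 & 3 \\ 1 & 4 & 9 \end{bmatrix} = A$$

1.1.3. Transpose of a Matrix

A matrix is transposed by interchanging its rows and columns. The transpose of the matrix $A$ is denoted by $A'$.

$$A = \begin{bmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \\ a_{31} & a_{32} \end{bmatrix} \qquad A' = \begin{bmatrix} a_{11} & a_{21} & a_{31} \\ a_{12} & a_{22} & a_{32} \end{bmatrix}$$

For example,

$$A = \begin{bmatrix} 5 & 4 \\ 6 & 1 \\ 2 & 9 \end{bmatrix} \qquad A' = \begin{bmatrix} 5 & 6 & 2 \\ 4 & 1 & 9 \end{bmatrix}$$

1.1.4. Inverse of a Matrix

The inverse of the square matrix $A$ (if it exists) is another matrix, denoted by $A^{-1}$, such that if $A$ is pre- or post-multiplied by $A^{-1}$, the resulting product is an identity matrix:

$$AA^{-1} = A^{-1}A = I$$

If $A$ does not have an inverse, it is called a singular matrix. Otherwise it is a nonsingular matrix.

1.1.4.1. How to Find the Inverse of a Matrix

Finding the inverse of a matrix is a fairly involved process. First we must understand several concepts required in determining the inverse of a matrix.

1.1.4.1.1. The Determinant of a Matrix

The determinant of a square matrix $A$ is a scalar quantity (a number) and is denoted by $|A|$. It is obtained by summing various products of the elements of $A$. For example, the determinant of a $2 \times 2$ matrix is defined to be:

$$|A| = \begin{vmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{vmatrix} = a_{11}a_{22} - a_{12}a_{21}$$

The determinant of a $3 \times 3$ matrix is obtained as follows:

$$|A| = \begin{vmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{vmatrix} = a_{11}\begin{vmatrix} a_{22} & a_{23} \\ a_{32} & a_{33} \end{vmatrix} - a_{12}\begin{vmatrix} a_{21} & a_{23} \\ a_{31} & a_{33} \end{vmatrix} + a_{13}\begin{vmatrix} a_{21} & a_{22} \\ a_{31} & a_{32} \end{vmatrix}$$

$$|A| = a_{11}a_{22}a_{33} - a_{11}a_{23}a_{32} - a_{12}a_{21}a_{33} + a_{12}a_{23}a_{31} + a_{13}a_{21}a_{32} - a_{13}a_{22}a_{31}$$

In the latter case, each element in the top row is multiplied by a "subdeterminant". The first subdeterminant,

$$\begin{vmatrix} a_{22} & a_{23} \\ a_{32} & a_{33} \end{vmatrix}$$

which multiplies $a_{11}$, is obtained by deleting the first row and the first column. The subdeterminant associated with $a_{11}$ is called the minor of that element and is denoted by $m_{11}$. The minor of $a_{12}$, $m_{12}$, is obtained by deleting the first row and the second column, and so on. If the sum of the subscripts of the element is odd, the term enters with a negative sign. The calculation of the determinant of $A$ can then be presented as:

$$|A| = a_{11}m_{11} - a_{12}m_{12} + a_{13}m_{13}$$

For example,

$$\begin{vmatrix} 2 & 1 & 3 \\ 4 & 5 & 6 \\ 7 & 8 & 9 \end{vmatrix} = 2\begin{vmatrix} 5 & 6 \\ 8 & 9 \end{vmatrix} - 1\begin{vmatrix} 4 & 6 \\ 7 & 9 \end{vmatrix} + 3\begin{vmatrix} 4 & 5 \\ 7 & 8 \end{vmatrix} = 2(5 \times 9 - 6 \times 8) - (4 \times 9 - 6 \times 7) + 3(4 \times 8 - 5 \times 7) = -9$$

Here the determinant is obtained by expanding along the first row. The same determinant can be found by expanding along any other row or column.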
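The operations introduced so far (addition, scalar multiplication, conformable multiplication, the identity matrix, transposition, and the cofactor expansion of a determinant) can be sketched in plain Python, storing a matrix as a list of rows. This is a teaching sketch that assumes small matrices; the helper names are hypothetical, and for real work a numerical library would be the usual choice. Subtraction can be written as `add(C, scale(-1, D))`.

```python
def dim(a):
    """Dimension (rows, columns) of a matrix stored as a list of rows."""
    return len(a), len(a[0])


def add(a, b):
    """Element-by-element sum; requires identical dimensions."""
    if dim(a) != dim(b):
        raise ValueError("not conformable for addition")
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def scale(k, a):
    """Scalar multiplication: multiply every element by k."""
    return [[k * x for x in row] for row in a]


def matmul(a, b):
    """Product AB; columns of the lead matrix must equal rows of the lag matrix."""
    (m, n), (p, q) = dim(a), dim(b)
    if n != p:
        raise ValueError(f"not conformable: {m}x{n} times {p}x{q}")
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(q)]
            for i in range(m)]


def transpose(a):
    """Interchange rows and columns."""
    return [list(col) for col in zip(*a)]


def identity(n):
    """n x n identity matrix: 1's on the principal diagonal, 0's elsewhere."""
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]


def minor(a, i, j):
    """Submatrix left after deleting row i and column j (0-based)."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(a) if r != i]


def det(a, row=0):
    """Determinant by cofactor expansion along `row` (0-based).

    With 0-based indices, (-1)**(row + j) gives the same sign pattern as
    (-1)**(i + j) with the 1-based subscripts used in the text.
    """
    if len(a) == 1:
        return a[0][0]
    return sum((-1) ** (row + j) * a[row][j] * det(minor(a, row, j))
               for j in range(len(a)))


if __name__ == "__main__":
    A = [[5, 4], [6, 1], [2, 9]]
    print(matmul(A, [[7], [5]]))   # [[55], [47], [59]]
    print(transpose(A))            # [[5, 6, 2], [4, 1, 9]]
    D = [[2, 1, 3], [4, 5, 6], [7, 8, 9]]
    print(det(D), det(D, row=2))   # -9 -9
```

Expanding along a different row (here the third) gives the same value, as the text states; calling `matmul` with the $2 \times 1$ matrix as the lead matrix raises a `ValueError`, mirroring the conformability rule.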
In the previous example, find the determinant by expanding along the third column:

$$|A| = a_{13}m_{13} - a_{23}m_{23} + a_{33}m_{33}$$

$$\begin{vmatrix} 2 & 1 & 3 \\ 4 & 5 & 6 \\ 7 & 8 & 9 \end{vmatrix} = 3\begin{vmatrix} 4 & 5 \\ 7 & 8 \end{vmatrix} - 6\begin{vmatrix} 2 & 1 \\ 7 & 8 \end{vmatrix} + 9\begin{vmatrix} 2 & 1 \\ 4 & 5 \end{vmatrix} = 3(4 \times 8 - 5 \times 7) - 6(2 \times 8 - 1 \times 7) + 9(2 \times 5 - 1 \times 4) = -9$$

Now let's introduce a concept related to the minor, called the cofactor. A cofactor, denoted by $c_{ij}$, is a minor with a prescribed algebraic sign attached. If the sum of the two subscripts of the minor $m_{ij}$ is odd, the cofactor is the negative of the minor:

$$c_{ij} = (-1)^{i+j} m_{ij}$$

In short, the value of a determinant $|A|$ of order $n$ can be found by expansion along any row or any column as follows:

$$|A| = \sum_{j=1}^{n} a_{ij}c_{ij} \quad \text{[expansion by the } i\text{th row]}$$

$$|A| = \sum_{i=1}^{n} a_{ij}c_{ij} \quad \text{[expansion by the } j\text{th column]}$$

For example, for $n = 3$:

Expansion by the first row:

$$|A| = \sum_{j=1}^{3} a_{1j}c_{1j} = a_{11}c_{11} + a_{12}c_{12} + a_{13}c_{13}$$

Expansion by the first column:

$$|A| = \sum_{i=1}^{3} a_{i1}c_{i1} = a_{11}c_{11} + a_{21}c_{21} + a_{31}c_{31}$$

1.1.4.1.2. Properties of Determinants

1) Transposing a matrix does not affect the value of the determinant: $|A| = |A'|$.

$$|A| = \begin{vmatrix} 1 & 2 \\ 3 & 4 \end{vmatrix} = 1 \times 4 - 2 \times 3 = -2 \qquad |A'| = \begin{vmatrix} 1 & 3 \\ 2 & 4 \end{vmatrix} = 1 \times 4 - 3 \times 2 = -2$$

2) Interchanging any two rows (or any two columns) reverses the sign of the determinant, leaving its absolute value unchanged.

$$\begin{vmatrix} 1 & 2 \\ 3 & 4 \end{vmatrix} = 1 \times 4 - 2 \times 3 = -2 \qquad \begin{vmatrix} 3 & 4 \\ 1 & 2 \end{vmatrix} = 3 \times 2 - 4 \times 1 = 2$$

3) Multiplying any one row (or any one column) by a scalar multiplies the value of the determinant by that scalar.

$$\begin{vmatrix} 1 \times 5 & 2 \times 5 \\ 3 & 4 \end{vmatrix} = 5 \times 4 - 10 \times 3 = -10 = 5(-2)$$

4) Adding (subtracting) a multiple of any row (or column) to (from) another row (or column) leaves the value of the determinant unchanged. In the determinant $\begin{vmatrix} 1 & 2 \\ 3 & 4 \end{vmatrix}$, multiply the first row by $-3$ and add it to the second row:

$$\begin{vmatrix} 1 & 2 \\ 3 - 3 & 4 - 6 \end{vmatrix} = \begin{vmatrix} 1 & 2 \\ 0 & -2 \end{vmatrix} = -2$$

5) If one row (or column) is a multiple of another row (or column), the value of the determinant is zero; the determinant vanishes.
In other words, if two rows (or columns) are linearly dependent, the determinant vanishes. In the following example the second row is the first row multiplied by 4:

$$\begin{vmatrix} 1 & 2 \\ 4 & 8 \end{vmatrix} = 1 \times 8 - 2 \times 4 = 0$$

This leads to a very important conclusion relating the value of the determinant to the existence of a unique solution for an equation system: the system $Ax = d$ has a unique solution if and only if $|A| \neq 0$.

Consider the equation system shown at the beginning of the discussion of matrices:

$$\begin{aligned} 6x_1 + 3x_2 + 1x_3 &= 22 \\ 1x_1 + 4x_2 - 2x_3 &= 12 \\ 4x_1 - 1x_2 + 5x_3 &= 10 \end{aligned}$$

The coefficient matrix of the equation system is

$$A = \begin{bmatrix} 6 & 3 & 1 \\ 1 & 4 & -2 \\ 4 & -1 & 5 \end{bmatrix}$$

This equation system has a unique solution because its determinant does not vanish: $|A| = 52$. The matrix $A$ is a nonsingular matrix.

Now consider the following equation system:

$$\begin{aligned} 6x_1 + 3x_2 + 1x_3 &= 22 \\ 12x_1 + 6x_2 + 2x_3 &= 11 \\ 4x_1 - 1x_2 + 5x_3 &= 10 \end{aligned}$$

The determinant of the coefficient matrix is

$$|A| = \begin{vmatrix} 6 & 3 & 1 \\ 12 & 6 & 2 \\ 4 & -1 & 5 \end{vmatrix} = 6[6 \times 5 - 2 \times (-1)] - 3(12 \times 5 - 2 \times 4) + [12 \times (-1) - 6 \times 4] = 192 - 156 - 36 = 0$$

The determinant vanishes because rows 1 and 2 are linearly dependent: the second row is the first row multiplied by 2. Thus the equation system does not have a unique solution. (In fact, since $2 \times 22 \neq 11$, the first two equations contradict each other, so this particular system has no solution at all.)

6) The expansion of a determinant by alien cofactors (the cofactors of a "wrong" row or column) always yields a value of zero. Expand the following determinant using the first-row elements but the cofactors of the second-row elements:

$$|A| = \begin{vmatrix} 6 & 3 & 1 \\ 1 & 4 & -2 \\ 4 & -1 & 5 \end{vmatrix}$$

$$c_{21} = -[3 \times 5 - (-1) \times 1] = -16 \qquad c_{22} = 6 \times 5 - 1 \times 4 = 26 \qquad c_{23} = -[6 \times (-1) - 3 \times 4] = 18$$

$$a_{11} = 6 \qquad a_{12} = 3 \qquad a_{13} = 1$$

$$a_{11}c_{21} = -96 \qquad a_{12}c_{22} = 78 \qquad a_{13}c_{23} = 18$$

$$\sum_{j=1}^{3} a_{1j}c_{2j} = a_{11}c_{21} + a_{12}c_{22} + a_{13}c_{23} = -96 + 78 + 18 = 0$$

This last property of determinants finally leads us to the method for finding the inverse of a matrix. Finding the inverse of

$$A = \begin{bmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{bmatrix}$$

involves the following steps:

1) Replace each element $a_{ij}$ of $A$ by its cofactor $c_{ij}$:

$$C = \begin{bmatrix} c_{11} & c_{12} & c_{13} \\ c_{21} & c_{22} & c_{23} \\ c_{31} & c_{32} & c_{33} \end{bmatrix}$$

2) Find the transpose of $C$.
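This transpose matrix, $C'$, is called the adjoint matrix of $A$:

$$C' \equiv \operatorname{adj} A = \begin{bmatrix} c_{11} & c_{21} & c_{31} \\ c_{12} & c_{22} & c_{32} \\ c_{13} & c_{23} & c_{33} \end{bmatrix}$$

3) Multiply $A$ by $\operatorname{adj} A$:

$$AC' = \begin{bmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{bmatrix}\begin{bmatrix} c_{11} & c_{21} & c_{31} \\ c_{12} & c_{22} & c_{32} \\ c_{13} & c_{23} & c_{33} \end{bmatrix} = \begin{bmatrix} \sum_j a_{1j}c_{1j} & \sum_j a_{1j}c_{2j} & \sum_j a_{1j}c_{3j} \\ \sum_j a_{2j}c_{1j} & \sum_j a_{2j}c_{2j} & \sum_j a_{2j}c_{3j} \\ \sum_j a_{3j}c_{1j} & \sum_j a_{3j}c_{2j} & \sum_j a_{3j}c_{3j} \end{bmatrix}$$

Now note that each element on the principal diagonal of the product matrix is an expansion of the determinant of $A$ by one of its rows, so each equals $|A|$. All other elements, off the principal diagonal, are expansions of the determinant by alien cofactors. Thus, they are all zeros:

$$AC' = \begin{bmatrix} |A| & 0 & 0 \\ 0 & |A| & 0 \\ 0 & 0 & |A| \end{bmatrix} = |A|\begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix} = |A|I$$

Now divide both sides of $AC' = |A|I$ by the determinant $|A|$:

$$\frac{AC'}{|A|} = I$$

Premultiply both sides by $A^{-1}$:

$$A^{-1}A\frac{C'}{|A|} = A^{-1}I$$

Since $A^{-1}A = I$, $IC' = C'$, and $A^{-1}I = A^{-1}$, then:

$$A^{-1} = \frac{C'}{|A|} = \frac{\operatorname{adj} A}{|A|}$$

The inverse of matrix $A$ is obtained by dividing the adjoint of $A$ by its determinant. The division requires $|A| \neq 0$: a matrix whose determinant vanishes is singular and has no inverse.

Example: find the inverse of

$$A = \begin{bmatrix} 6 & 3 & 1 \\ 1 & 4 & -2 \\ 4 & -1 & 5 \end{bmatrix}$$

First find $|A|$:

$$|A| = 6\begin{vmatrix} 4 & -2 \\ -1 & 5 \end{vmatrix} - 3\begin{vmatrix} 1 & -2 \\ 4 & 5 \end{vmatrix} + 1\begin{vmatrix} 1 & 4 \\ 4 & -1 \end{vmatrix} = 6(18) - 3(13) + (-17) = 52$$

Next find the cofactor matrix:

$$C = \begin{bmatrix} \begin{vmatrix} 4 & -2 \\ -1 & 5 \end{vmatrix} & -\begin{vmatrix} 1 & -2 \\ 4 & 5 \end{vmatrix} & \begin{vmatrix} 1 & 4 \\ 4 & -1 \end{vmatrix} \\ -\begin{vmatrix} 3 & 1 \\ -1 & 5 \end{vmatrix} & \begin{vmatrix} 6 & 1 \\ 4 & 5 \end{vmatrix} & -\begin{vmatrix} 6 & 3 \\ 4 & -1 \end{vmatrix} \\ \begin{vmatrix} 3 & 1 \\ 4 & -2 \end{vmatrix} & -\begin{vmatrix} 6 & 1 \\ 1 & -2 \end{vmatrix} & \begin{vmatrix} 6 & 3 \\ 1 & 4 \end{vmatrix} \end{bmatrix} = \begin{bmatrix} 18 & -13 & -17 \\ -16 & 26 & 18 \\ -10 & 13 & 21 \end{bmatrix}$$

Find $\operatorname{adj} A$:

$$C' = \begin{bmatrix} 18 & -16 & -10 \\ -13 & 26 & 13 \\ -17 & 18 & 21 \end{bmatrix}$$

$$A^{-1} = \frac{1}{|A|}C' = \frac{1}{52}\begin{bmatrix} 18 & -16 & -10 \\ -13 & 26 & 13 \\ -17 & 18 & 21 \end{bmatrix}$$

With each element of $A^{-1}$ rounded to three decimal places,

$$A^{-1} = \begin{bmatrix} 0.346 & -0.308 & -0.192 \\ -0.250 & 0.500 & 0.250 \\ -0.327 & 0.346 & 0.404 \end{bmatrix}$$

1.1.5. How to use the Inverse of Matrix A to find the solutions for an equation system

Note that in the previous example the matrix $A$ was the coefficient matrix of the equation system

$$\begin{aligned} 6x_1 + 3x_2 + 1x_3 &= 22 \\ 1x_1 + 4x_2 - 2x_3 &= 12 \\ 4x_1 - 1x_2 + 5x_3 &= 10 \end{aligned}$$

$$\begin{bmatrix} 6 & 3 & 1 \\ 1 & 4 & -2 \\ 4 & -1 & 5 \end{bmatrix}\begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix} = \begin{bmatrix} 22 \\ 12 \\ 10 \end{bmatrix} \qquad Ax = d$$

Now premultiply both sides of the matrix form of the equation system by $A^{-1}$:

$$A^{-1}Ax = A^{-1}d$$

which, since $A^{-1}A = I$ and $Ix = x$, results in

$$x = A^{-1}d$$

$$\begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix} = \begin{bmatrix} 0.346 & -0.308 & -0.192 \\ -0.250 & 0.500 & 0.250 \\ -0.327 & 0.346 & 0.404 \end{bmatrix}\begin{bmatrix} 22 \\ 12 \\ 10 \end{bmatrix} = \begin{bmatrix} 2 \\ 3 \\ 1 \end{bmatrix}$$

Computing with the exact fractions avoids rounding error: $\frac{1}{52}C'd = \frac{1}{52}(104, 156, 52)' = (2, 3, 1)'$.

Thus, $x_1 = 2$, $x_2 = 3$, and $x_3 = 1$.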
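The adjoint method and the solution $x = A^{-1}d$ can also be sketched in Python. This minimal sketch uses the standard-library `fractions.Fraction` type so that $A^{-1} = C'/|A|$ is computed exactly, without the rounding in the three-decimal matrix above; the function names are illustrative. It is only meant for small textbook examples: cofactor expansion becomes very slow as the matrix grows (its cost grows factorially with the size), and elimination-based solvers are normally used instead.

```python
from fractions import Fraction


def minor(a, i, j):
    """Submatrix left after deleting row i and column j (0-based)."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(a) if r != i]


def det(a):
    """Determinant by cofactor expansion along the first row."""
    if len(a) == 1:
        return a[0][0]
    return sum((-1) ** j * a[0][j] * det(minor(a, 0, j)) for j in range(len(a)))


def cofactor_matrix(a):
    """C: each a_ij replaced by its cofactor c_ij = (-1)**(i+j) * m_ij."""
    n = len(a)
    return [[(-1) ** (i + j) * det(minor(a, i, j)) for j in range(n)]
            for i in range(n)]


def transpose(a):
    """Interchange rows and columns."""
    return [list(col) for col in zip(*a)]


def inverse(a):
    """A^{-1} = adj A / |A|, where adj A = C' (transpose of the cofactor matrix)."""
    d = det(a)
    if d == 0:
        raise ValueError("singular matrix: the determinant vanishes")
    return [[Fraction(c, d) for c in row] for row in transpose(cofactor_matrix(a))]


def solve(a, rhs):
    """x = A^{-1} d for a nonsingular coefficient matrix A."""
    return [sum(r * v for r, v in zip(row, rhs)) for row in inverse(a)]


if __name__ == "__main__":
    A = [[6, 3, 1], [1, 4, -2], [4, -1, 5]]
    d = [22, 12, 10]
    print(det(A))              # 52
    print(cofactor_matrix(A))  # [[18, -13, -17], [-16, 26, 18], [-10, 13, 21]]
    adj = transpose(cofactor_matrix(A))
    # A times adj A should be |A| I (alien-cofactor terms vanish off the diagonal).
    print([[sum(A[i][k] * adj[k][j] for k in range(3)) for j in range(3)]
           for i in range(3)])
    print(solve(A, d))         # [Fraction(2, 1), Fraction(3, 1), Fraction(1, 1)]
```

Applied to the singular coefficient matrix from the properties section (second row twice the first), `inverse` raises a `ValueError`, matching the conclusion that such a system has no unique solution.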