3.6 What is momentum used for?


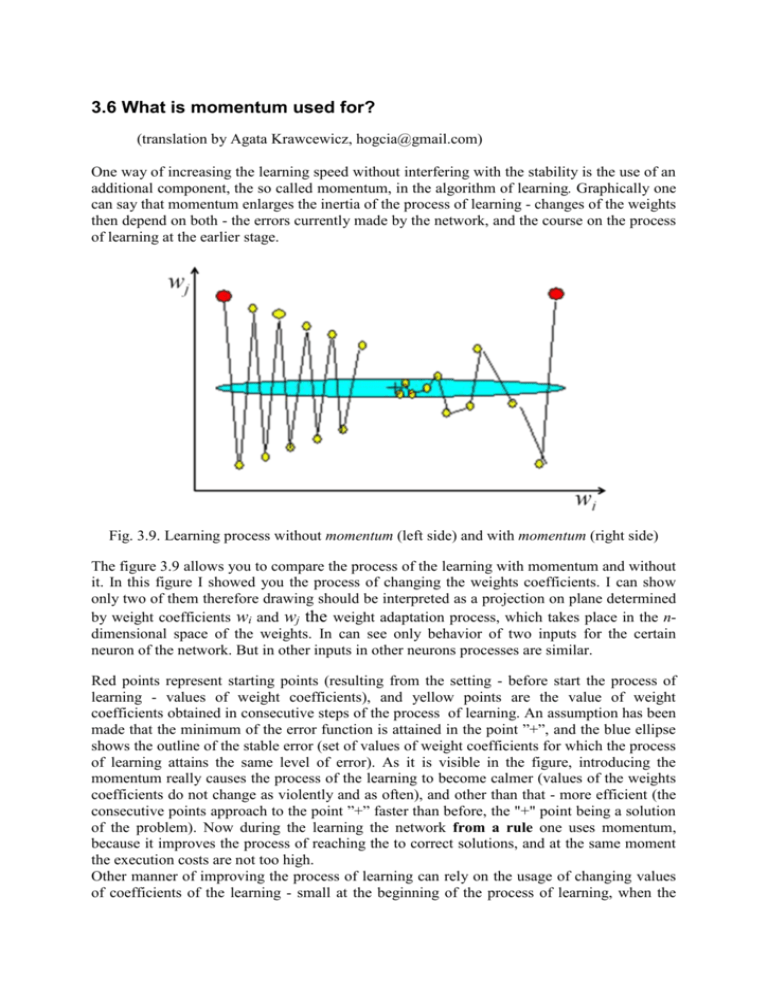

(Translation by Agata Krawcewicz, hogcia@gmail.com)

One way to increase the learning speed without compromising stability is to add an extra component to the learning algorithm: the so-called momentum. Figuratively speaking, momentum increases the inertia of the learning process. Each change of the weights then depends both on the errors the network is currently making and on the course the learning process has taken at earlier stages.

Fig. 3.9. Learning process without momentum (left side) and with momentum (right side)

Figure 3.9 compares learning with and without momentum by showing how the weight coefficients change. Only two of them can be drawn. The figure should therefore be read as a projection of the weight adaptation process onto the plane spanned by the weight coefficients w_i and w_j, because the process actually takes place in the n-dimensional weight space. It shows the behavior of only two inputs of one particular neuron, but the processes for the other inputs and the other neurons are similar. Red points mark the starting points (the values of the weight coefficients set before learning begins). Yellow points mark the values of the weight coefficients obtained in consecutive steps of learning. The minimum of the error function is assumed to lie at the point "+". The blue ellipse shows a contour of constant error, that is, the set of weight values for which the learning process attains the same level of error. As the figure shows, introducing momentum does make the learning process calmer, because the weight coefficients change less violently and less often. It also makes learning more efficient: the consecutive points approach the point "+", which is the solution of the problem, faster than before.
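This section describes momentum only in words. A common way to write it is the classical ("heavy ball") rule Δw(t) = −η·∂E/∂w + α·Δw(t−1), where η is the learning rate and α (0 ≤ α < 1) is the momentum coefficient. Treat that formula as an assumption here, because the text does not state it. The minimal sketch below mimics the setting of Fig. 3.9 on a hypothetical two-weight error surface: an elongated quadratic bowl whose elliptical contours and minimum at (0, 0) stand in for the blue ellipse and the "+" point. The curvatures, the starting point, η = 0.09 and α = 0.5 are arbitrary illustrative choices, not values taken from the figure.

```python
# Hypothetical error surface E(w_i, w_j) = 0.5 * (1 * w_i**2 + 20 * w_j**2):
# an elongated bowl with elliptical contours and its minimum ("+") at (0, 0).
CURVATURE = (1.0, 20.0)  # assumed curvature along w_i and along w_j


def error(w):
    """Error value at the weight vector w."""
    return 0.5 * sum(c * x * x for c, x in zip(CURVATURE, w))


def gradient(w):
    """Partial derivatives dE/dw_i and dE/dw_j at w."""
    return [c * x for c, x in zip(CURVATURE, w)]


def train(eta, alpha, start, tol=1e-6, max_steps=1000):
    """Gradient descent with classical momentum (alpha=0 gives plain descent).

    Returns the list of visited weight vectors and the number of steps
    needed to push the error below tol (or max_steps if it never does).
    """
    w = list(start)
    delta = [0.0] * len(w)  # previous weight change, Delta w(t-1)
    path = [tuple(w)]
    for step in range(1, max_steps + 1):
        g = gradient(w)
        # New change = error-driven term + a fraction of the previous change
        delta = [-eta * gi + alpha * di for gi, di in zip(g, delta)]
        w = [wi + di for wi, di in zip(w, delta)]
        path.append(tuple(w))
        if error(w) < tol:
            return path, step
    return path, max_steps


start = (-4.0, 1.0)  # hypothetical starting point (the "red point")
plain_path, plain_steps = train(eta=0.09, alpha=0.0, start=start)
mom_path, mom_steps = train(eta=0.09, alpha=0.5, start=start)
print("without momentum:", plain_steps, "steps")
print("with momentum:   ", mom_steps, "steps")
```

With these settings the run without momentum needs noticeably more steps than the run with it. Plotting `plain_path` and `mom_path` gives pictures in the spirit of the two panels of Fig. 3.9. The gain depends on the chosen values: a momentum coefficient too close to 1 can make the trajectory circle around the minimum for a long time and can even slow convergence down.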
Momentum is now used as a rule when training networks, because it helps the network reach the correct solution while adding little computational cost. Another way to improve learning is to vary the learning rate coefficient during training. The rate is small at the beginning, when the network is only choosing the general directions of its behavior. It is larger in the middle phase, when the parameters must be adapted forcefully, though still roughly, to the rules the network is meant to follow. It is small again at the end, when the network fine-tunes the final values of its parameters and overly vigorous corrections could destroy the structure of knowledge built up earlier. Note that these techniques were derived mathematically and their usefulness was verified experimentally, yet they vividly resemble the refined methods of an experienced teacher working with pupils who have little mental stamina! This is undoubtedly a striking convergence between the behavior of a neural network and the human mind, and after all, it is neither the first nor the last.
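The section does not prescribe a formula for the changing learning rate. One simple way to get the "small, then large, then small" shape it describes is a piecewise-linear schedule with three phases, similar in spirit to the warm-up and decay schedules used in modern practice. The function `three_phase_lr` below and all of its parameter values are hypothetical and serve only to illustrate the idea.

```python
def three_phase_lr(step, total_steps, warmup_steps, cooldown_steps,
                   lr_min=0.01, lr_max=0.5):
    """Hypothetical three-phase learning-rate schedule.

    Phase 1 (first warmup_steps steps): rise linearly from lr_min toward lr_max.
    Phase 2 (middle): hold lr_max for forceful but rough adaptation.
    Phase 3 (last cooldown_steps steps): fall linearly back to lr_min.
    """
    if not 0 <= step < total_steps:
        raise ValueError("step must lie in [0, total_steps)")
    if warmup_steps + cooldown_steps > total_steps:
        raise ValueError("warm-up and cool-down phases do not fit")
    cooldown_start = total_steps - cooldown_steps
    if step < warmup_steps:
        # Beginning: the network is only choosing directions, so steps stay small
        return lr_min + (lr_max - lr_min) * step / warmup_steps
    if step < cooldown_start:
        # Middle: largest corrections
        return lr_max
    # End: fine tuning, shrink the rate so earlier structure is not destroyed
    remaining = total_steps - 1 - cooldown_start
    frac = (step - cooldown_start) / remaining if remaining > 0 else 1.0
    return lr_max - (lr_max - lr_min) * frac


schedule = [three_phase_lr(t, 100, warmup_steps=20, cooldown_steps=30)
            for t in range(100)]
print(schedule[0], schedule[50], schedule[99])
```

The value returned for each step would be used as η in the weight update, for example in the momentum rule sketched for the previous paragraph. The linear ramps are only one choice; any curve that starts low, peaks in the middle and ends low matches the description in the text.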