Test Creation Project - Martha Fisher's Portfolio

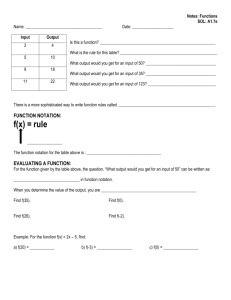

Martha Fisher
Test Creation Project
March 3, 2013

Course Description and Test Overview

A. Course Overview

This test is designed for a General Biology class of sophomores at New Kent High School. One global aim of the class is to help students become scientifically literate citizens who understand the nature of science, who can use scientific reasoning and knowledge to make decisions about their lives, actions, and health, and who can contribute as informed citizens to discussions of science policy. A second aim of the class is to help students understand the main themes of biology and appreciate how these principles and themes are relevant to their own lives. The main educational goals of the course are for students to understand and use the processes of scientific experimentation and research; to gain an overview of, and an appreciation for, the diversity of life and the diverse strategies that organisms have evolved to survive; and to recognize the interdependence of biological and earth systems and predict the consequences of changes to them.

B. Description of Unit

This test follows a seven-period unit on evolution. The students spend two days investigating the evidence for evolution and then learn about the mechanisms of evolution, spending three days on adaptations and natural selection. They then spend one day on speciation and large-scale patterns of evolution (macroevolution) and conclude the unit with a one-day lesson on classification and taxonomy. This allocation of time aligns with the curriculum, which places the most emphasis on evidence for evolution and natural selection. Students are pre-assessed before the unit to determine their prior knowledge of and attitudes toward the topic. They are also pre-assessed on concepts during the unit to identify potential misconceptions.
Throughout the unit, students are also assessed with several other activities and projects, which are included in the table of specifications below. In addition to enriching student learning and motivation, these activities assess students more authentically than a pen-and-paper test allows.

C. Description of Intended Learning Outcomes

The ILOs for this unit are derived partly from the Biology SOL Framework. They address Biology SOLs 6 and 7, which cover evidence for evolution, mechanisms of evolution, and classification. I modified some of the objectives and added some of my own to better reflect the importance of certain essential concepts. The objectives primarily address the comprehension and application levels of Bloom's taxonomy, and several address the analysis level as well. This range suits a unit on evolution, in which students must apply the concepts in order to understand the material well.

In addition to the unit test, the unit's assessments are an adaptations project, a dichotomous key activity, an evidence for evolution stations activity, a goldfish variation lab, and a speciation lab. Most of these activities serve as learning experiences as well as assessments. The labs, for instance, are used to introduce concepts and mainly assess student participation, though they also cover specific learning objectives from the unit. In the dichotomous key activity, students create products that would not be feasible to produce on a test. In the adaptations project, students investigate an animal that interests them and apply the concepts of evolution to that animal's adaptations. This project is intended to deepen students' understanding of the material as well as to motivate them. For greater detail about the learning objectives and where they will be assessed, see the tables below.

D. Overview of the Classroom/School Context

This test is designed for three general and collaborative Biology courses at New Kent High School in New Kent, Virginia. New Kent is a rural community. The majority of the school population is white and middle or working class. The school as a whole is successful in meeting state standards; however, there is not a strong focus on advanced academic tracks. Most students in the collaborative classroom are eligible for special education services. Many of these students have learning disabilities, processing disorders, or ADHD, and many have testing accommodations for read-aloud and small-group testing.

Because many students in both the collaborative and general classrooms have difficulty reading and writing, I have tried to make the test clear and concise, using clear, direct language so that it assesses science concepts rather than reading skills. Because students struggle with writing, the short-answer questions are all scaffolded so that students write one to two sentences at a time rather than five to six sentences at once, which would overwhelm many students.

E. Explicit Purposes of the Test and Test Results

This test is summative and is intended to give me and each student information about the student's mastery of the unit content. Each student will have the opportunity to make test corrections afterward to improve their understanding of the material, although they may not receive full credit for these corrections. The test shows which students may need remediation and which topics may need to be covered again before the end-of-course test. I will also analyze the test to identify particular areas of strength and weakness among students in order to improve my teaching of the material next time.
To improve my future instruction and future tests, I will try to distinguish student errors that were likely caused by poor wording or formatting from errors that resulted from inadequate instruction.

Design Elements of the Test

A. Standards and Intended Learning Outcomes

SOL Standards:

BIO.6 The student will investigate and understand bases for modern classification systems. Key concepts include a) structural similarities among organisms; b) fossil record interpretation; c) comparison of developmental stages in different organisms; d) examination of biochemical similarities and differences among organisms; and e) systems of classification that are adaptable to new scientific discoveries.

BIO.7 The student will investigate and understand how populations change through time. Key concepts include a) evidence found in fossil records; b) how genetic variation, reproductive strategies, and environmental pressures impact the survival of populations; c) how natural selection leads to adaptations; d) emergence of new species; and e) scientific evidence and explanations for biological evolution.

Intended Learning Objectives (grouped by topic, with content and cognitive level):

Evidence for Evolution
1. Describe relationships based on homologous structures, analogous structures, and vestigial structures. Content: homologous, analogous, and vestigial structures. Level: Comprehension.
2. Infer evolutionary relationships based on similarities in embryonic stages and biochemical evidence. Content: embryology; DNA evidence. Level: Application.
3. Compare structural characteristics of an extinct organism, as evidenced by its fossil record, with present, familiar organisms. Content: structural similarities (fossils and present). Level: Application.

Fossil Record
4. Differentiate between relative and absolute dating, and determine the relative age of a fossil given information about its position in the rock and dating by radioactive decay. Content: relative and absolute dating. Level: Comprehension, Application.

Natural Selection
5. Explain how genetic mutations and variation are the basis for selection and how natural selection leads to changes in the gene frequency of a population over time. Content: variation, inheritance, survival of the fittest. Level: Comprehension.
6. Predict the impact of environmental pressures on populations. Content: patterns of natural selection (stabilizing, directional, disruptive). Level: Application.
7. Describe how the work of Lamarck and Darwin shaped the theory of evolution, and compare and contrast their theories. Content: Lamarck, Darwin, Galapagos. Level: Comprehension, Analysis.
8. Analyze the impact of reproductive and survival strategies on the fitness of a population. Content: sexual selection, kin selection. Level: Analysis.

Speciation
9. Describe the mechanisms by which new species emerge. Content: emergence of new species, types of speciation. Level: Comprehension.
10. Apply the biological species concept to novel scenarios and discuss problems with the definition. Content: biological species concept. Level: Comprehension, Application.

Macroevolution
11. Compare and contrast punctuated equilibrium with gradual change over time. Content: punctuated equilibrium, gradualism. Level: Comprehension.
12. Compare and contrast convergent evolution with divergent evolution. Content: convergent and divergent evolution. Level: Comprehension.

Classification
13. Construct and utilize dichotomous keys to classify groups of objects and organisms. Content: dichotomous keys. Level: Application, Synthesis.
14. Interpret a cladogram or phylogenetic tree showing evolutionary relationships among organisms. Content: cladograms, taxonomy. Level: Analysis.
15. Investigate flora and fauna in field investigations and apply classification systems. Content: Virginia plants and animals. Level: Application.

B. Table of Specifications and Unit Plan

Content areas and relative emphasis:
- Evidence for Evolutionary Relationships (30%): homologous, analogous, and vestigial structures; embryology; DNA evidence; structural similarities (fossils and present)
- Fossil Record (10%): relative and absolute dating
- Natural Selection (30%): variation, inheritance, survival of the fittest; impact of the environment; patterns of natural selection (stabilizing, directional, disruptive)
- History of Evolutionary Theory (5%): Lamarck; Darwin and the Galapagos
- Speciation and Macroevolution (20%): biological species concept; emergence of new species; punctuated equilibrium and gradualism; convergent and divergent evolution
- Classification (15%): dichotomous keys; cladograms; flora and fauna of Virginia; taxonomy

The table crosses these content areas with the levels of Bloom's taxonomy (Knowledge, Comprehension, Application, Analysis, Synthesis, Evaluation). Its cells list the objective verbs assessed at each intersection (Describe, Infer, Compare, Differentiate between, Determine, Explain how, Predict, Analyze, Describe how, Discuss, Compare and Contrast, Apply, Utilize, Investigate and Apply, Interpret, Construct) together with the test question numbers, which cover all 27 questions. The unit activities listed in the table are the Evidence Stations Activity, Half-life Lab, Goldfish Lab, Adaptations Project, Speciation Lab, and Dichotomous Key Activity.

C. Discussion of Construct Validity

This test has high construct validity. It assesses the objectives that were emphasized in the curriculum and in instruction, so it should accurately measure student knowledge of the intended topic. The test also has face validity: a colleague looking at it would clearly see that it assesses content knowledge and skills related to evolution.

D. Discussion of Content Validity

This test has high content validity because it samples the intended learning objectives at the appropriate content and cognitive levels.
A table of specifications was used in creating the test to ensure good sampling validity. I used this table to plan questions that assessed each content standard at the appropriate cognitive level, and I tried to include at least two to three questions for each intersection on the table. I assigned each content category a relative level of emphasis and attempted to mirror this emphasis on the test. The synthesis-level ILO for dichotomous keys and cladograms is omitted from the test because it is not feasible to assess in this format, but these concepts are assessed with a separate activity in the unit. The ILOs on natural selection are also slightly underemphasized on the test, but students complete a major project on these concepts that counts as a test grade, so I felt that they would demonstrate their understanding sufficiently through that project. The test itself could have higher content validity if there were more questions for each intersection, but the time allowed for the test and the students' endurance were limiting factors. I am fairly confident that there are enough questions at each intersection to draw inferences about student knowledge and skills.

E. Rationale for My Choice of Test Item Types

Most of the test consists of multiple-choice items. This format is the most efficient and suits most of the learning objectives, which are at the comprehension and application levels. It is also important for students to practice taking multiple-choice tests because their end-of-course SOL test uses that format. Short-answer questions are included to allow students to demonstrate deeper knowledge of important concepts. However, because many students struggle with reading and writing and feel intimidated by the prospect of composing an essay, the short-answer questions are scaffolded so that students can break each question down and build confidence in their knowledge.
Scaffolding also makes it easier for me to divide partial credit on these answers objectively.

Sources of Test Items:
Question 1: SOL Release (2007)
Question 2: SOL Release (2008)
Question 3: Self-Written
Question 4: SOL Release (2006)
Question 5: Self-Written
Question 6: MCAS Release
Question 7: Kate Ottolini
Question 8: SOL Release (2008)
Question 9: SOL Release (2008)
Question 10: SOL Release (2008)
Question 11: Self-Written
Question 12: MCAS Release
Question 13: Self-Written
Question 14: Self-Written
Question 15: SOL Release
Question 16: Kate Ottolini
Question 17: Self-Written
Question 18: Self-Written
Question 19: Self-Written
Question 20: Self-Written
Question 21: Self-Written
Question 22: Self-Written
Question 23: Self-Written
Question 24: Self-Written
Question 25: Self-Written
Question 26: Self-Written
Question 27: MCAS Release

F. Potential Threats to Reliability

I have tried to make this test as reliable as possible. In reviewing the test, I found no evidence of cultural bias. The test has clear directions and has been proofread for grammatical errors and bias by me and by a colleague. I am confident in the reliability of the items I wrote because I followed established guidelines for writing assessment items. This was especially important for the multiple-choice items, which make up most of the test: I ensured that each stem contained the question, that the choices were in random or alphabetical order, that the choices had similar formats, and that the distractors were plausible. I screened the SOL and other adapted questions that I did not write myself against the same guidelines and modified them slightly where I found problems. Many test items include supplementary information such as graphs, images, or diagrams. I attempted to explain this information adequately so that students who understand the concepts being assessed have everything they need to answer each question. Of course, this test is not perfectly reliable; its greatest weakness is probably the small number of questions relative to the number of ILOs. Some questions are also bound to contain errors that I have not anticipated.
I will analyze items after students have taken the test to determine whether questions that many students answer incorrectly are flawed or whether the concepts were not adequately addressed in instruction. To do this, I will compare each such item with other items that assess the same ILO to see whether students were weak in the whole area or on just that one question. During the test, I will give only information that clarifies what a question is asking or what a word means, unless the word is a vocabulary term that students are being assessed on. If a question or concern seems likely to affect more than one student, I will make the clarification available to everyone by writing it on the board and announcing that students who have trouble with that question should read it.

G. Potential for and Cautions About Predictive Validity

I believe that this test has fairly good potential for predictive validity for the end-of-course assessment, the Biology SOL test. The test samples the ILOs of the unit fairly, based on the table of specifications that I created. Although I modified the ILOs, they were originally drawn from the SOL framework and so reflect essential knowledge and skills on which students may be assessed on the SOL test. In addition, many questions were drawn from released SOL tests, which increases the predictive validity of this test for student performance on the SOL. However, I changed the wording or answer choices of some SOL questions that I believed would mislead or confuse students unnecessarily, so students may find the original SOL questions harder than the versions on this test. My greatest caution about the predictive validity of this test is that there are relatively few questions for a large set of ILOs. The SOL test likewise has relatively few questions (only 50) for a very large number of objectives.
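The item comparison described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the author's procedure: it assumes each student's response to each item is recorded as correct or incorrect, that each item is tagged with a single ILO number (a hypothetical mapping), and it borrows the 50% cutoff from the grading policy as a stand-in for "many students." The names `miss_rate`, `diagnose_item`, and `THRESHOLD` are hypothetical.

```python
from statistics import mean

# Hypothetical cutoff for "many students": borrowed from the 50% omission
# rule in the grading policy.
THRESHOLD = 0.5


def miss_rate(responses: list[bool]) -> float:
    """Fraction of students who got an item wrong (True means correct)."""
    return 1 - sum(responses) / len(responses)


def diagnose_item(item: int, item_to_ilo: dict[int, int],
                  results: dict[int, list[bool]]) -> str:
    """Compare a frequently missed item with other items on the same ILO."""
    if miss_rate(results[item]) <= THRESHOLD:
        return "not flagged"
    ilo = item_to_ilo[item]
    peers = [q for q, o in item_to_ilo.items() if o == ilo and q != item]
    if not peers:
        return "no other items on this ILO"
    peer_rate = mean([miss_rate(results[q]) for q in peers])
    if peer_rate > THRESHOLD:
        # Students also missed related items: the whole area is weak.
        return "whole ILO weak: revisit instruction"
    # Students did well on related items: suspect this item's wording.
    return "isolated miss: check item wording"


# Hypothetical data: items 1 and 2 both assess the same ILO.
item_to_ilo = {1: 5, 2: 5}
results = {1: [False, False, True, False], 2: [True, True, False, True]}
print(diagnose_item(1, item_to_ilo, results))  # isolated miss: check item wording
```

Here item 1 is missed by 75% of students while item 2, on the same ILO, is missed by only 25%, so the sketch points to item 1's wording rather than to instruction. Averaging the peer items' miss rates is one simple choice; with only two or three items per ILO, any such comparison is a rough signal.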
Because both tests sample only a small share of their objectives, the questions on the two tests may not match well in content and cognitive level, which may limit the predictive validity of this test.

H. Scoring and Grading Procedures

This test consists of 24 multiple-choice items and 3 short-answer items, each of which has three parts. Each multiple-choice item is worth 3 points, and each short-answer question is worth 9 points (3 points per part), so each short-answer part carries the same weight as one multiple-choice item. I believe the two are about equal in value, so I think this is a fair grading system. The test totals 99 points (24 × 3 = 72 points for multiple choice plus 3 × 9 = 27 points for short answer). This test will be averaged with each student's other test and project grades, which together account for 50% of the student's total grade, a policy mandated by the school system.

Selected-response items will be graded with a key. These items will then be analyzed, and if more than 50% of students miss any one question, that question will be omitted from the test. Supply-response items will be graded with rubrics created beforehand to make grading as objective as possible. These rubrics contain sample acceptable answers as well as the essential information that a student must include to receive full credit. Partial credit will be awarded for answers that are partially correct but incomplete. To limit personal bias, I will grade the short-answer questions without knowing which student's test I am grading. I may also review students' answers a second time to ensure that my grades were not affected by outside factors at the time of the original grading. If time allows, I will also have a colleague double-check my grades on the short-answer questions using the same rubrics.
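The point total and the 50% omission rule can be checked with a short Python sketch. The constants come from the scoring description; the `miss_counts` input format and the function names are hypothetical. The sketch only flags items for omission and assumes nothing about how the remaining points are rescaled afterward.

```python
# Constants taken from the scoring description: 24 multiple-choice items at
# 3 points each, and 3 short-answer items with 3 parts worth 3 points each.
MC_ITEMS, MC_POINTS = 24, 3
SA_ITEMS, SA_PARTS, POINTS_PER_PART = 3, 3, 3


def total_points() -> int:
    """Maximum possible score: 24 * 3 + 3 * (3 * 3) = 72 + 27 = 99."""
    return MC_ITEMS * MC_POINTS + SA_ITEMS * SA_PARTS * POINTS_PER_PART


def items_to_omit(miss_counts: dict[int, int], n_students: int) -> list[int]:
    """Return selected-response items missed by MORE than 50% of students.

    miss_counts maps a (hypothetical) item number to how many students
    missed it. Integer comparison avoids floating-point edge cases at 50%.
    """
    return sorted(item for item, missed in miss_counts.items()
                  if 2 * missed > n_students)


print(total_points())                                        # 99
print(items_to_omit({1: 10, 2: 16, 3: 15}, n_students=30))   # [2]
```

In this made-up example, item 3, missed by exactly half of the 30 students, is kept because the policy applies only to items missed by more than 50%.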