Evaluators should do what they can 1

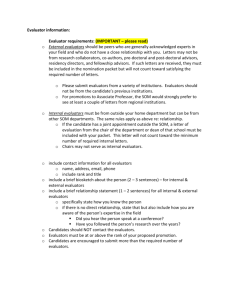

advertisement

(in tribute to Barry MacDonald)
Saville Kushner
University of Auckland

1 Paper presented at the ANZ Evaluation Association annual conference, Auckland, July 2013.

Barry MacDonald was a pragmatist, which is to say that for him an idea or proposition with no implications for action was meaningless: meaningless in the sense that it did not inform intent or motivation to act. This is why he was drawn to educational evaluation. In fact, he distinguished evaluation from research with two basic observations. First, evaluation in essence has consequences for action (mostly for resource distribution), whereas research may have only theoretical implications. Second, evaluation works on the practical dilemmas of others, not on the priorities of the researcher. Evaluation is service; research is discovery. Both generate original knowledge, but with different intent.

He was also drawn to titles. For him, titles were mini-summations of the orientation of the paper he was writing. Most often he was preoccupied with the dilemmas we all face in confronting the challenge and the paradox of action, so his titles were often expressed as paradoxes, double entendres or juxtapositions, but always as challenges: ‘Hard Times’ (evaluation and accountability), ‘Mandarins and Lemons’ (evaluation and the bureaucracy), ‘Bread and Dreams’ (civil rights policy and bilingual education). One of his minor offerings was the title of this introduction, a double entendre to be sure.

His preoccupation was with taking a realistic view of what evaluation can achieve. In this he saw some things evaluators were asked to do that were unreasonable, and other things evaluators could do that they were not asked to do. This is the theme of the discussion I suggest we have. Let us start with competing statements of the possible and the impossible.

First, the impossible. Here is Michael Scriven’s (1996) view of evaluator competencies:

1. Basic qualitative and quantitative methodologies (including survey and observation skills, bias control procedures, practical testing and measurement procedures, judgment and narrative assessment, standard-setting models, presupposition identification, definition theory and practice, case study techniques).
2. Validity theory, generalizability theory, meta-analysis (a.k.a. external synthesis), and their implications (especially as they apply to instruments and indicators, to dissemination practice, and to the synthesis of evaluation studies, respectively).
3. Legal constraints on data control and access, funds use, and personnel treatment (including the rights of human subjects). This includes an understanding of the professional program evaluation standards and the testing standards.
4. Personnel evaluation (since a program can hardly be said to be good if its evaluation of personnel is incompetent or improper), and the personnel evaluation standards.
5. Ethical analysis (e.g., of the extent to which services to clients require a judgment about the legitimacy of program goals; and with respect to confidentiality, discrimination, abuse, triage).
6. Needs assessment, including the distinctions between needs and wants, between performance needs and treatment needs, between needs and ideals, between met and unmet needs, and so on.
7. Cost analysis, including standard procedures such as time discounting of the value of money, but also including the ability to determine opportunity costs and non-money costs (since they are often the most important ones).
8. Internal synthesis models and skills (i.e., models for pulling together sub-evaluations into an over-all evaluation, sub-scores into sub-evaluations, and the evaluations of multiple judges into an over-all rating or standard).
9. Conceptual geography, as in understanding (1) the difference between the four fundamental logical tasks for evaluation [of either (a) merit, (b) worth, or (c) significance], namely grading, ranking, scoring, and apportioning, and their impact on evaluation design; (2) the technical vocabulary of evaluation (including an understanding of commonly discussed methodologies, such as performance measurement and TQM); (3) various models of evaluation as a basis for justifying various evaluation designs; and (4) the validity and utility of evaluation itself (i.e., meta-evaluation), since that issue often comes up with clients and program staff (it includes psychological impact of evaluation).
10. Evaluation-specific report design, construction, and presentation.

He goes on, hardly comfortingly: “This is a formidable set of competencies, although it is meant to be minimal, and one that (if valid) raises a serious question about how many people who describe themselves as evaluators deserve the name.”

Well, that rules me out. Let’s take a more comforting view. Here is Lee Cronbach:

“No one is…fully equipped…The political scientist and the economist find familiar the questions about social processes that evaluation confronts, but they lack preparation for field observation. Sociologists, psychologists and anthropologists know ways to develop part of the data needed for evaluations, but they are not accustomed to drawing conclusions in fast-changing political contexts. The scale and uncontrollability of contemporary field studies tax the skills of statisticians. Even at their best, statistical summaries fall short because evaluative enquiry is historical investigation more than it is a search for general laws and explanations….The intellectual and practical difficulties of completing a credible evaluation are painfully evident…” (Cronbach et al, 1980: 13)

Now I feel a little better! But these are old writings, and contexts have changed substantially.
Both Scriven and Cronbach were writing in what were still expansive times, with robust public sectors and, in advanced industrial countries, a political and fiscal focus on program development as a means of advancing social policy. We live in different times, with a widespread intent to dismantle welfare states and to shrink public sectors and systems of social protection. In the intervening years we have also seen a widespread transfer of evaluation responsibility into the administrative system and the close alignment of evaluation with the preferences of the government of the day. Government administrators increasingly see evaluation as a means of solving their immediate problems: essentially as an instrument of their own accountability and as management information. As the bureaucracy itself shrinks, the range of individual responsibilities broadens and program management becomes more complex.

So, as a generalisation, evaluation contracts are drying up, shrinking in scale but widening in scope. This means fewer resources to hold public policy accountable and burgeoning expectations of evaluators. Evaluators are increasingly asked to do more than is practicable, and to shorter deadlines.

On the other hand, evaluators are an increasingly embattled community whose economy is in decline. Whether in a university or in private practice, evaluators live within tight commercial realities and are only as good, and as viable, as their last contract. Contracts represent acts of faith, and conforming to minimum contract specifications is the best many can do to secure a foothold in the funding process. There is little room left to theorise across evaluation tasks or to address the bigger picture, what House (REF) called ‘big policy’ as opposed to ‘little policy’. Neither of these is a healthy state of affairs, and in the end neither serves the interests of the administrative system, civil society or, obviously, evaluators.
I have signalled before (Kushner, 2011) that treating evaluation as an internal management-information and accountability service has left us bereft of narratives with which to defend what is publicly cherished in public service, and vulnerable to damaging narratives of inefficiency, waste and irrelevance.

So here is the challenge. We need to enter into negotiations with the sponsoring community over what it is worthwhile and reasonable to expect of evaluation. At the same time, we need to be more confident about addressing the big picture as we see it reflected in our evaluation data. To start the conversation, here are some measures we need to engage with:

- Build a post-reporting phase into the evaluation, dedicating resources to deliberation.
- Grow our confidence in working with small samples to address the bigger picture. This means loosening the ties of validity. Evaluation reports are most useful when they serve to focus attention on program and policy development and on building consensus. The pressure to report on ‘what works’ and on ‘impact’ will not go away, but nor will the need to report constructively on:
  - qualities to be found in programs;
  - key issues, especially differences of views and experiences across program constituencies;
  - constraints on, and enablers of, change;
  - views of change (prospective rather than retrospective evaluation);
  - options and their accompanying consequences.
- Focus on problems and issues before method. Evaluation is generally more vulnerable to getting the question wrong than to failing to validate the answer.
- Distinguish more closely between evaluation and research. Measuring effects, documenting practices and outputs, and identifying causal mechanisms are not, in themselves, evaluation. Evaluation adds the requirement of placing these things in a judgement framework and subjecting them to the test of political feasibility.
- Include data on program interactions in our accounts.
Evaluation reports should invite challenge and reinterpretation.

References

Cronbach, L. et al. (1980) Toward Reform of Program Evaluation. San Francisco: Jossey-Bass.

Kushner, S. (2011) ‘A Return to Quality’, Presidential Address to the UK Evaluation Society Annual Conference, November; published in Evaluation, 17(3), pp. 309-312.

Scriven, M. (1996) ‘Types of evaluation and types of evaluator’, Evaluation Practice, 17(2), pp. 151-161.