Appendix 1


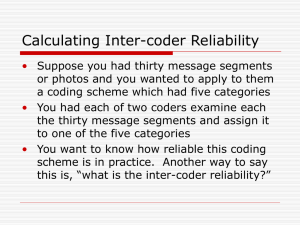

Appendix 1 Literature Review

Method

We conducted a literature review to determine the proportion of papers that provided a written description of the iterative method, showed examples from real pulsed wave Doppler traces, showed more than the bare minimum of three traces (too short, too long and just right), and showed data from more than one example patient.

The review was performed systematically using PubMed, with the following search criteria:

(1) "Echocardiography"[MeSH] AND "Cardiac Resynchronization Therapy"[MeSH]

(2) "Echocardiography"[MeSH] AND "Cardiac Resynchronization Therapy Devices"[MeSH]

(3) "analysis"[All Fields] OR "determination"[All Fields] AND "optimal"[All Fields] AND "atrioventricular"[All Fields] AND "delay"[All Fields]

(4) "CRT" OR "Biventricular" AND "echocardiography" AND "optimization" OR "optimisation"

(5) "CRT" OR "Biventricular" AND "echocardiography" AND "atrioventricular"

Publications returned from the search were screened and excluded if the title did not include at least one of the following phrases: optimization, cardiac resynchronization (CRT), atrioventricular (AV) delay. The remaining publications were screened and excluded if the abstract did not describe a method of AV delay (AVD) optimization using transmitral Doppler traces. Identified publications were included in the final analysis if they gave a written description of the iterative method. The following criteria were used to assess how each publication described the iterative method: written description, cartoon representation, and exemplar Doppler traces from real data (including the number of patient examples and the number of trace examples from each patient).

Results

Of the 392 publications returned by the search criteria described, 169 were retained after screening by title. After reading the abstract, 66 publications remained that described echocardiographic methods for optimization of the AV delay.
On reading the full text, a further 47 publications were excluded as per Methods, leaving a total of 19 publications included in the analysis.

Appendix 2 Kappa

This study uses kappa to assess agreement between 3 observers on the optimal transmitral Doppler inflow pattern from a selection of images showing traces captured across a range of atrioventricular (AV) delays. We provide all images and a detailed description of the kappa score in this appendix.

Kappa scores range from 0 to 1, where a score of 0 indicates that the agreement is only due to chance (i.e. bad agreement) and a score of 1 indicates perfect agreement (100%). The kappa scale depends on how many choices observers begin with. If there are ten choices, there is a 1 in 10 chance that observers will select the same thing: 10% agreement is expected by chance, so the maximum agreement beyond chance is 90%. The observed agreement beyond chance is the amount by which the observers' actual agreement exceeds the agreement expected by chance. The kappa score is calculated as the observed agreement beyond chance divided by the maximum agreement beyond chance.

Interpretation of kappa scores has been suggested as <0.20 = poor, 0.21-0.40 = fair, 0.41-0.60 = moderate, 0.61-0.80 = good, and >0.81 = very good [1].
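As a minimal sketch, the calculation described above can be written in a few lines of Python. It assumes the simplified model used in this appendix, in which every choice is equally likely so that chance agreement is 1 divided by the number of choices; it is not a general kappa implementation based on observed marginal frequencies. The function names and the 55% observed agreement in the usage example are hypothetical, chosen only for illustration.

```python
def kappa_equal_chance(observed_agreement: float, n_choices: int) -> float:
    """Kappa under the simplified model where all choices are equally likely.

    observed_agreement is a proportion between 0 and 1.
    n_choices is the number of options each observer can pick from.
    """
    if n_choices < 2:
        raise ValueError("n_choices must be at least 2")
    if not 0.0 <= observed_agreement <= 1.0:
        raise ValueError("observed_agreement must be between 0 and 1")

    chance_agreement = 1.0 / n_choices            # e.g. 10 choices -> 0.10
    max_beyond_chance = 1.0 - chance_agreement    # e.g. 0.90
    observed_beyond_chance = observed_agreement - chance_agreement
    return observed_beyond_chance / max_beyond_chance


def interpret_kappa(kappa: float) -> str:
    """Map a kappa score to the descriptive bands quoted in this appendix."""
    # Round to two decimals first: the bands are stated to two decimals,
    # and this avoids floating-point noise at the band edges.
    k = round(kappa, 2)
    if k <= 0.20:
        return "poor"
    if k <= 0.40:
        return "fair"
    if k <= 0.60:
        return "moderate"
    if k <= 0.80:
        return "good"
    return "very good"


if __name__ == "__main__":
    # Hypothetical illustration: ten choices, observers agree 55% of the time.
    k = kappa_equal_chance(0.55, 10)
    print(f"kappa = {k:.2f} ({interpret_kappa(k)})")
```

Because the sketch takes the number of choices as its only description of chance, it makes explicit why the same raw agreement yields a different kappa when observers start with more or fewer options.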
Two simple examples of calculating kappa, also represented diagrammatically in Figure 1, are:

(1) Tossing coins

There are 2 possible results from tossing a coin; therefore the chance that 2 people will return the same result is 1 in 2 or, as a percentage, 100/2 (50%). So the maximum agreement beyond chance is 100 - 50 = 50%.

Each person tosses a coin 100 times, and 80 times the result is the same. That is 80% observed agreement, which is 30% observed agreement above chance (80% - 50%).

Kappa = observed agreement above chance / maximum agreement above chance

Kappa = 30/50 = 0.6

(2) Dice

There are 6 possible results from the roll of a die; therefore the chance that 2 rollers will roll the same is 1 in 6 or, as a percentage, 100/6 (16.67%). So the maximum agreement beyond chance is 100 - 16.67 = 83.33%.

They roll 100 times each, and 20 times they roll the same. That is 20% observed agreement, which is 3.33% observed agreement above chance (20% - 16.67%).

Kappa = observed agreement above chance / maximum agreement above chance

Kappa = 3.33/83.33 = 0.04

Appendix 3 Reviewer comment

“It is clear that the authors of the manuscript and I have a different opinion on the value of the iterative technique for CRT optimization in clinical practice. Irrespectively who of us is right, I remain concerned about sending out a message that the iterative technique is most of the time useless and should even be omitted from the guidelines. I will try to explain better my point: I completely agree with the authors that defining one "optimal" AV delay with the iterative method is impractical. As the authors have nicely demonstrated in their manuscript observer variability is too substantial to allow this. I would like to point out, and I think the authors will agree, that any other method, quantitative or qualitative, is very unlikely to provide this one and only "optimal" setting.
Moreover, as I pointed out in the previous revision, the one and only ideal AV delay when a patient is at rest in the supine position is unlikely to be the optimal AV delay for each situation of the patient during daily life. The purpose of CRT optimization, to my opinion, is therefore not to identify the one and only optimal AV delay. In clinical practice, it is probably more important to choose an AV delay that provides an acceptable transmitral filling pattern at rest, that is preserved during exercise. I fully realize this personal opinion is beyond the scope of the article, but I think it is essential for the authors to understand why I think they overemphasize the importance of the one and only AV delay.

The true value of the iterative technique, when performed systematically (and this can be done even by well-trained paramedics in my experience), is that it can identify a suboptimal AV delay in 47% of CRT non-responders (Mullens et al. J Am Coll Cardiol. 2009;53:765-73.). This means identifying patients with a clearly suboptimal transmitral filling pattern that is amenable to improvement by changing the device settings. I think it would be a wrong message to send out to practicing clinicians that they should stop to do that or use a method that is much more difficult.”

Appendix 4 Reviewer comment

“The authors state in the abstract and discussion that "more than half of real-world patients have E-A fusion or full E-A separation at all AVDs, making iterative optimization challenging or impossible". I completely disagree with this statement and would like to highlight Figure 2 of the article to illustrate my case. If one looks at the example of the "class A" patient, indeed this patient demonstrates E- and A-waves that always appear merged, irrespectively of the AVD. However, iterative optimization still identifies an ideal AVD of around 100ms. Few experts would argue that the AVD of 40ms results in better filling as the A-wave is clearly truncated.
It might be more challenging to appreciate the differences between 100ms and 160ms. However, at 100ms, the ventricle continues to fill (end of A-wave) long after the start of electrical systole (start of QRS complex), while this is not the case at 160ms. Moreover, diastolic filling time seems longer at 100ms although I must admit that it is difficult to assess by eye-balling. Similar, the class D patient, who is also not suited for iterative optimization according to the authors, demonstrates an A-wave which is still truncated at 140ms, but less at 160ms. Therefore, again, iterative optimization offers useful information on the optimal AVD. In fact, I can't see the point of using this classification as it offers no benefit to clinical practice.”

Supplemental Figures

Figure 1. Top: kappa scale versus raw agreement for tossing a coin. Bottom: kappa scale versus raw agreement for rolling dice.

References

1. Altman DG. Practical Statistics for Medical Research. London: Chapman and Hall; 1991.