D2.4-review1


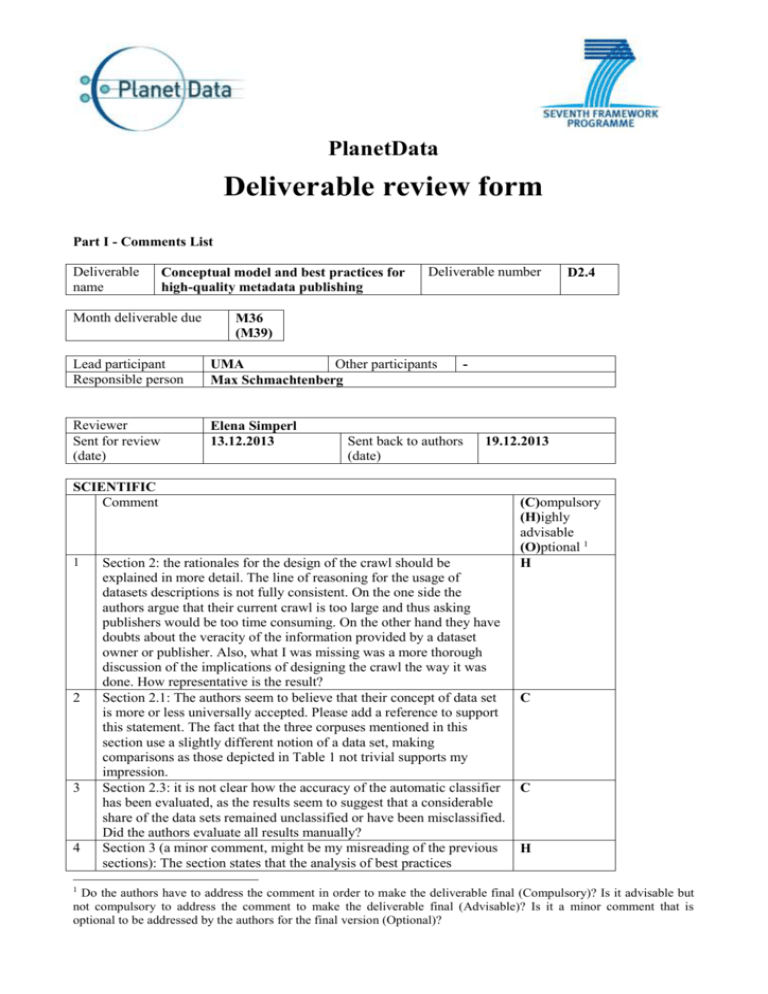

PlanetData Deliverable review form

Part I - Comments List

| Field | Value |
| --- | --- |
| Deliverable name | Conceptual model and best practices for high-quality metadata publishing |
| Month deliverable due | M36 (M39) |
| Deliverable number | D2.4 |
| Lead participant | UMA |
| Responsible person | Max Schmachtenberg |
| Other participants | - |
| Reviewer | Elena Simperl |
| Sent for review (date) | 13.12.2013 |
| Sent back to authors (date) | 19.12.2013 |

SCIENTIFIC

| No. | Comment | (C)ompulsory / (H)ighly advisable / (O)ptional |
| --- | --- | --- |
| 1 | Section 2: the rationales for the design of the crawl should be explained in more detail. The line of reasoning for the usage of datasets descriptions is not fully consistent. On the one side the authors argue that their current crawl is too large and thus asking publishers would be too time consuming. On the other hand they have doubts about the veracity of the information provided by a dataset owner or publisher. Also, what I was missing was a more thorough discussion of the implications of designing the crawl the way it was done. How representative is the result? | H |
| 2 | Section 2.1: The authors seem to believe that their concept of data set is more or less universally accepted. Please add a reference to support this statement. The fact that the three corpuses mentioned in this section use a slightly different notion of a data set, making comparisons as those depicted in Table 1 not trivial supports my impression. | C |
| 3 | Section 2.3: it is not clear how the accuracy of the automatic classifier has been evaluated, as the results seem to suggest that a considerable share of the data sets remained unclassified or have been misclassified. Did the authors evaluate all results manually? | C |
| 4 | Section 3 (a minor comment, might be my misreading of the previous sections): The section states that the analysis of best practices conformance refers to the LOD cloud, but it is not clear to me as a reviewer what this cloud now contains. Is it the 961 data sets you classified by domain? | H |
| 5 | I was wondering whether considering only VoID as a dataset vocabulary is too restrictive. Could the authors elaborate on possible alternatives used in repositories such as CKAN? | H |
| 6 | In a future version of this analysis it might be interesting to combine the results of data and vocabulary interlinking and to see whether some types of entities tend to be more interlinked than others when the corresponding classes are interlinked or the other way around. This could also inform ontology matching and data interlinking strategies. | O |
| 7 | My biggest concern about the deliverable refers to the implications of the quantitative analysis. The conclusions merely scratch the surface there and I’d appreciate a more in-depth discussion of each criterion that was analysed in Section 3. Also, it would be nice to be able to see a table showing which criteria have been subjected in previous surveys and what the difference is with respect to the findings. | H |

Note on the rating column: Do the authors have to address the comment in order to make the deliverable final (Compulsory)? Is it advisable but not compulsory to address the comment to make the deliverable final (Advisable)? Is it a minor comment that is optional to be addressed by the authors for the final version (Optional)?

ADMINISTRATIVE (e.g., layout problems such as empty pages, track changes/comments visible, broken links, missing sections, incomplete TOC, spelling/grammar mistakes)

| No. | Comment | (C)ompulsory / (H)ighly advisable / (O)ptional |
| --- | --- | --- |
| 1 | Cover page, abstract and executive summary missing or incomplete | C |
| 2 | Atypical use of capitalization in nouns, sometimes inconsistently used throughout the document. Examples: Design Issues, Project, Vocabulary | C |
| 3 | Inconsistent capitalization of section headers, see, for example, Section 2 vs the rest of the document. Please also check captions of figures and tables. | C |
| 4 | The name of the project is spelled as ‘PlanetData’ (one word) and not ‘Planet Data’ (two words) | C |
| 5 | There is a missing reference on page 32 | C |

Part II - Summary

| Field | Value |
| --- | --- |
| Overall marking: VG (very good) / G (good) / S (generally satisfactory) / P (poor) | S |

Comments

The deliverable presents a brief summary of Complex event processing concepts and comments on possible future issues in this domain. It also introduces a use case aggregating events in the context of a smart city. In general the main contribution seems to be the application of CEP technologies to this type of use cases, although this has already been seen in the literature (e.g. IoT and sensor related projects). The authors mention some interesting open issues including mixing CEP and mining in a coherent way, for example. However this is not explored in the use case presented.

It might be useful to indicate clearly in the beginning the contributions made. In the case of the rules and queries used in the use cases, it might be useful to see the rules (or queries in Esper or StreamInsight) and illustrate with more detail the steps followed. This can actually be useful for potential readers of this document.

After addressing the Quality Assessor’s comments, report back to him/her re-using this review form.

© INSEMTIVES consortium 2009 - 2012