Introduction to Statistics

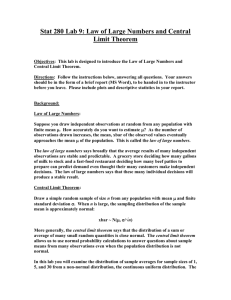

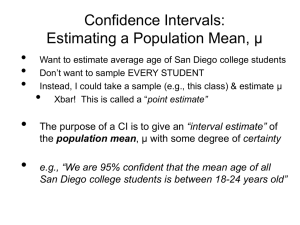

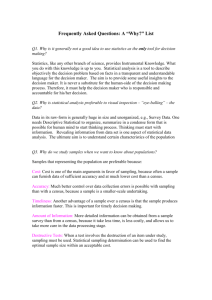

Bus 221 Notes

Legend
1. Grey: Particularly important information.
2. Yellow: Particularly important procedures.
3. Blue: Refers to graphics (tables, charts, transparencies of pages, etc.) from the textbook.
4. Black: Supplemental material – not covered in the exams or text.

Chapter 1: Picturing Distributions with Graphs
1. Individuals and Variables
   a. Let’s collect some data from some members of this class.
   b. Individuals: “The objects described by a set of data.”
   c. Variables: “Any characteristic of an individual.” For example, the height (variable 1) and weight (variable 2) of the students in our class (the individuals).
      i. Quantitative variables: “takes numerical values … [and] recorded in a unit of measurement …”
      ii. Categorical variables: individuals are placed “into one of several groups or categories.”
   d. Sample: a subset of a population, where the population is the full group of interest.
      i. Often data set construction begins with a question that needs to be answered: What is the mean GPA of a CWU student?
         1. The population is defined by the question.
      ii. Why might we prefer gathering information from a sample rather than the full population?
      iii. Is there ever a problem with gathering data from a sample?
         1. The importance of sample size.
   e. Observation: the value that a variable takes for a particular individual.
   f. Data: any collection of observations.
   g. Example: Transparency: p. 6
      i. Individuals: make & model
      ii. Variables: vehicle type, transmission type, number of cylinders, city mpg, highway mpg
2. Categorical Variables: Pie Charts and Bar Graphs
   a. Categorical variable: individuals are placed “into one of several groups or categories” and hence the value of the variable represents a certain category.
   b. Distribution of a variable: It “tells us what values [a variable] takes and how often it takes these values.” That is, it tells us how the data are spread (i.e., distributed) across different ranges.
   c.
      Distribution of a categorical variable: It “lists the categories and gives either the count or the percent of individuals who fall in each category.”
      i. Frequency: the number of observations in a range
      ii. Percent: the proportion of observations in a range x 100
         1. Proportion: number of observations in range / total number of observations
         2. Roundoff error: when the sum of the percents across the different categories doesn’t equal 100% because of rounding.
      iii. Slide: Example 1.2
         1. What are the individuals?
         2. What is the variable?
   d. Pie chart: the size of the wedge represents the percent of individuals that fit into a certain category in the sample.
      i. Slide: Figure 1.2, p. 8
   e. Bar chart: the height of each bar represents the percent or count (number) of individuals that fit into a given category in the sample.
      i. Slide: Figure 1.3, p. 8
      ii. The bars are drawn with a space between them.
      iii. The height of each bar can represent percent or count.
3. Quantitative Variables: Histograms
   a. Quantitative variable: a variable where the value of the variable represents a quantitative level, rather than a category.
   b. Histogram: Very similar to a bar chart. Data are placed into one of a number of equal-sized classes (similar to categories), and then the height of each bar represents the percent or frequency (number) of individuals that fit into each class.
      i. Similar to a bar chart, except:
         1. Rather than categories, there are ranges for the variable.
         2. The bars in a histogram do not have any space between them.
      ii. Example
         1. The raw data: Slide: Table 1.1, p. 12
            a. What are the individuals?
            b. What is the variable?
         2. One histogram: Slide: Figure 1.5, p. 13
         3. Another histogram: Slide: Figure 1.6, p. 16
            a. “Histograms with more classes show more detail but may have a less clear pattern.”
4. Interpreting histograms:
   a. Overall pattern:
      i. Shape
         1. Skewed: When “the histogram extends much farther out” in one direction than another.
            a.
               The distribution can be right skewed or left skewed.
            b. Slide: Figure 1.6, p. 16
         2. Symmetric: When “the right and left sides of the histogram are approximately mirror images of each other.”
            a. Slide: Figure 1.7, p. 17
      ii. Center: For now, “the value with roughly half the observations taking smaller values and half taking larger values.”
      iii. Spread: “For now, we will describe the spread of a distribution by giving the smallest and largest values.”
   b. Deviations from the overall pattern:
      i. Outlier: “An individual that falls outside the overall pattern.”
         1. Slide: Figure 1.9, p. 19
5. Quantitative Variables: Stemplots:
   a. Stemplot: very similar to a histogram (it looks like a sideways histogram), but it reveals the exact numerical value of the sample data in each range.
      i. The rightmost digit is the leaf, and the remaining digits are the stem.
   b. Slide: Figure 1.10, p. 20
      i. A distribution of the percent of foreign-born residents in states using data from Table 1.1.
   c. When making stemplots we often first round the data to the nearest one, ten, hundred, or thousand. We then put the rounded data into the stemplot.
      i. Slide: Example 1.9, p. 21 - 22
   d. We will also often split stems, which means dividing each stem into two.
      i. Slide: Figure 1.11 (and figure under Figure 1.11)
6. Summary – Graphically Representing Distributions
   a. Categorical data: Bar Chart or Pie Chart
   b. Quantitative data: Histogram or Stem Plot
7. Time Plots
   a. Time series data: Sometimes data (on a certain variable) for an individual or group are collected over time. These types of data are called time series data.
      i. Cross-sectional data: The name for the data that we have been discussing up to this point.
         1. Cross-sectional data on a certain variable are data collected across a section of individuals at a certain point in time.
         2. Bar graphs, pie charts, and histograms plot cross-sectional data.
   b.
      Time plots: a time plot of a variable graphs the level of the variable (on the y axis) against the time period when the variable was collected (on the x axis).
      i. Slide: Figure 1.12, p. 24
      ii. Cycles: regular or cyclical up and down movements in data over time.
      iii. Trends: a long term movement in one direction over time.
8. Using Statistical Applets at the Course Website
   a. The One Variable Statistical Calculator

Chapter 2: Describing Distributions with Numbers
1. Measuring Center: Mean
   a. Mean: Xbar = (1/n) ∑xi
      i. Xbar: the mean of the variable at which we are looking (the mean of the observations on that variable for the different individuals in our sample), where x represents the variable at which we are looking.
      ii. n: the number of individuals in the data.
      iii. ∑: the sum
      iv. x: the variable at which we are looking
      v. xi: the observation on the variable at which we are looking, for individual i.
         1. The “observations for the individuals” are otherwise known simply as the “observations.”
      vi. ∑xi: the total sum of the observations on the variable for each individual (individual 1 through individual n).
         1. ∑xi ≡ x1 + x2 + … + xn
      vii. Notation allows us to represent formulas easily.
      viii. We can calculate the mean of a sample, or of a full population.
2. Measuring Center: Median
   a. Median (M): the midpoint of a distribution
      i. “The number such that half the observations are smaller and the other half are larger”
      ii. Finding the Median
         1. “Arrange all observations in order of size, from smallest to largest.”
         2. “If the number of observations n is odd, the median M is the center observation in the ordered list. If the number of observations n is even, the median M is the [mean of]… the two center observations in the ordered list.”
         3. “You can always locate the median in the ordered list of observations by counting up (n + 1)/2 observations from the start of the list.”
      iii. Slide: Example 2.3, p. 42
         1. This stemplot allows us to find the middle number/s.
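
The mean formula and the median-finding steps above can be sketched in Python. This is only a minimal illustration and not part of the course materials: the function names and the sample heights are hypothetical, and the median is located with the (n + 1)/2 counting rule quoted above.

```python
def mean(observations):
    """Xbar = (1/n) * sum of the observations x1 ... xn."""
    n = len(observations)
    return sum(observations) / n


def median(observations):
    """Midpoint of the ordered list, located at position (n + 1) / 2."""
    ordered = sorted(observations)      # step 1: arrange from smallest to largest
    n = len(ordered)
    position = (n + 1) / 2              # 1-based location of the median
    if n % 2 == 1:
        # n odd: the median is the single center observation
        return ordered[int(position) - 1]
    # n even: position ends in .5, so average the two center observations
    lower = ordered[int(position) - 1]
    upper = ordered[int(position)]
    return (lower + upper) / 2


# Hypothetical heights (in inches) for five students
heights = [71, 60, 65, 62, 70]
print(mean(heights))          # sample mean
print(median(heights))        # center observation of the ordered list
print(median(heights[:4]))    # even n: mean of the two center observations
```

With an even number of observations, (n + 1)/2 falls halfway between two positions, which is why the two center observations are averaged.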
3. Comparing the Mean and Median
   a. In a skewed distribution, the mean is usually further out in the tail of the distribution (the side of the distribution that extends further).
4. Measuring Spread: The Quartiles, five-number summary, & boxplots
   a. Quartiles
      i. First quartile (Q1): the median of the ordered observations to the left of the median.
      ii. Third quartile (Q3): the median of the ordered observations to the right of the median.
   b. Five number summary: Minimum, Q1, M, Q3, Maximum
   c. Boxplot:
      i. Slide: Figure 2.1, p. 46
5. Spotting suspected outliers:
   a. Interquartile range (IQR): Q3 – Q1
   b. Interquartile Rule for outliers:
      i. An observation is a suspected outlier if it falls more than 1.5 x IQR above the third quartile or below the first quartile.
6. Measuring Spread: Standard Deviation
   a. Mean absolute deviation
   b. s (standard deviation) = sqrt [ 1/(n-1) ∑(xi – Xbar)^2 ]
      i. Here xi represents the value of the variable for the i’th individual.
   c. Slide: Figure 2.2, p. 51
   d. s^2 (variance) = 1/(n-1) ∑(xi – Xbar)^2
   e. Calculating s by hand.
      i. Make a column of observations
      ii. Make a column of deviations: (xi – Xbar)
      iii. Make a column of squared deviations: (xi – Xbar)^2
      iv. Sum the last column and divide by (n – 1) to calculate s^2
      v. Take the square root of s^2 to calculate s.
      vi. Example 2.7, p. 50
7. Choosing Measures of Center & Spread
   a. The five-number summary is preferred with a skewed distribution or with strong outliers, while Xbar and s are convenient for somewhat symmetric distributions.

Chapter 3: The Normal Distribution
1. Density curves:
   a. A density curve is another way of representing a distribution for a quantitative variable. A density curve is just a line (a continuous function).
      i. See Figure 3.1, p. 70
      ii. For a density curve, the y axis represents proportion, which is just “percent / 100.”
      iii. For a density curve, the x axis represents different possible values for the variable at which we are looking.
         1.
            For a histogram, the x axis also represents different possible values (or ranges) for the variable at which we are looking.
      iv. Proportion of data falling between two values is now represented by the area under the curve (and above the x axis).
         1. For a histogram, the proportion of data falling between two values is represented by the height of the bar.
      v. The total area under the curve is 1.
         1. For a histogram, the heights of the bars sum to 1.
      vi. The proportion for any single value is 0, because the area between any single value and itself is just 0.
   b. Note, contrary to all of the histograms we have been looking at, which show the distribution of a sample, a density curve will typically be used to estimate the distribution of a full population.
   c. Questions
      i. How do we calculate the proportion of observations below or above a certain level?
         1. How would you do it for a histogram?
            a. See Figure 3.2a, p. 71, for how to do it with a histogram.
         2. How would you do it for a normal distribution?
            a. See Figure 3.2b, p. 71, for how to do it with a density curve.
      ii. How do we calculate the proportion of observations between two values?
         1. How would you do it for a histogram?
         2. How would you do it for a normal distribution?
      iii. What is the height of a horizontal density curve over the region (0, 1)? How about over the region (0, 2)?
         1. See Figure 3.4, p. 73
2. Describing density curves
   a. Median: the equal areas point.
      i. See Figure 3.5a and 3.5b, p. 73
   b. Mean: “the mean is pulled away from the median toward the long tail.”
      i. See Figure 3.5a and 3.5b, p. 73
3. Normal distributions (Normal density curves)
   a. A Normal distribution is a symmetric bell shaped density curve described by the following equation.
      i. $f(x) = \frac{1}{\sigma\sqrt{2\pi}} \, e^{-\frac{1}{2}\left(\frac{x-\mu}{\sigma}\right)^{2}}$ for $-\infty < x < \infty$
      ii. You do not need to know this equation for the exam.
      iii. This is the equation for a whole family of normal density curves in the same way that f(x) = mx + b is an equation for a whole family of straight lines.
         1.
            This might look more familiar as y = mx + b.
      iv. I will use the terms “density curve” and “distribution” interchangeably.
   b. There is a whole family of Normal density curves. In fact, there are infinitely many normal density curves. By altering μ and σ the density curve can be made to take a wide variety of shapes. However, it will always be symmetric and bell shaped.
   c. It turns out that μ is the mean (the center) of the distribution, and σ is the standard deviation of the distribution.
   d. N(μ, σ) is notation for a Normal distribution with mean μ and standard deviation σ.
      i. For example, N(0, 1) would refer to a normal distribution with a mean of 0 and a standard deviation of 1.
      ii. μ, the mean, determines the location of the distribution on the x axis.
         1. Note that the mean and median are located at the center of a Normal distribution (at the same point).
            a. See Figure 3.5a, p. 73
         2. Later we will use μ to describe the mean of the population, which is the full set of data from which a sample is taken. Xbar is the mean of a sample.
      iii. σ, the standard deviation, determines the spread of the distribution.
         1. See Figure 3.8, p. 75
         2. The change of curvature points “are located at distance σ on either side of the mean (μ).”
            a. See Figure 3.8, p. 75
         3. Does it make sense that a more spread Normal density curve would have a greater standard deviation σ? Remember, the analogy is a histogram with a greater spread.
         4. Later we will use σ to describe the standard deviation of the population, which is the full set of data from which a sample is taken. s is the standard deviation of the sample.
   e. Note, contrary to all of the histograms we have been looking at, which show the distribution of a sample, the Normal distribution will be used to estimate the distribution of a full population.
4. Motivation for using the Normal distribution
   a. First: “Normal distributions are good descriptions for some distributions of real data.”
      i. However, it is not perfect.
         It implies that there are some values for x which are extremely high and extremely low.
   b. Second: “Normal distributions are good approximations to the results of many kinds of chance outcomes, such as the proportion of heads in many tosses of a coin.”
   c. Third: “We will see that many statistical inference procedures based on Normal distributions work well for other roughly symmetric distributions.”
5. The 68-95-99.7 rule
   a. “In [a] Normal distribution with mean μ and standard deviation σ:
      i. Approximately 68% of the observations fall within σ of the mean μ.
      ii. Approximately 95% of the observations fall within 2σ of μ.
      iii. Approximately 99.7% of the observations fall within 3σ of μ.”
      iv. This analogizes to a histogram.
      v. Figure 3.9, p. 77
      vi. Figure 3.10, p. 78
      vii. Example 3.3, p. 79
6. Cumulative proportion
   a. The “cumulative proportion for” a given variable is the “proportion of individuals whose observed value is equal to or less than” some specified value for the variable in question.
      i. P(x < “specified value”)
      ii. Where P is the proportion of data less than the “specified value.”
   b. The cumulative proportion is just the area below the density curve to the left of the “specified value.”
      i. Figure, top of page 82
   c. The proportion of individuals whose observed value is greater than x for the variable in question is just “1 – the cumulative proportion of x.”
      i. Example 3.5, p. 82
7. The standard Normal distribution
   a. The standard normal distribution is a normal distribution with the below properties. That is, it is a distribution for a variable that has the following characteristics:
      i. μ = 0
      ii. σ = 1
   b. Figure 3.9, p. 77
   c. Note that the value of any observation tells us exactly how many standard deviations that observation is from the mean.
      i. For example, imagine the observation 2.5 in the standard normal distribution. Since μ = 0 and σ = 1 it is implied that the value 2.5 in the standard normal distribution is 2.5 standard deviations from the mean.
   d.
      Conveniently, there is a standard normal table in the back of our book on p. 690-691 that tells us the cumulative proportion for any observation in a data set that has a standard normal distribution.
   e. Unfortunately, this table only applies to a standard normal distribution, which is just one of the infinitely many normal distributions.
8. Using the standard Normal table to find proportions for any Normal distribution.
   a. It turns out that the cumulative proportion for a specific point (observation) in any and every Normal distribution is determined by the number of standard deviations that point is from the mean.
   b. Therefore, to find the cumulative proportion of a point (x value) in any and every Normal distribution, we can simply calculate how many standard deviations the x value is from the mean, z = (x - μ) / σ, and then look up the number in the standard normal table on p. 676-677.
      i. z = (x – μ) / σ
      ii. z is called the “z value” or “z score.” It tells us how many standard deviations a specific point (x value, that is, a specific observed value of a variable) falls from the mean for a Normally distributed variable.
      iii. Example 3.7
   c. Summary - Finding cumulative proportions for values in any Normal distribution:
      i. Convert the value (observation) to a z value using the formula: z = (x – μ) / σ
      ii. Look up the z value in Table A (p. 676-677) to find the corresponding proportion.
   d. Finding the proportion of data that lie between two particular values. That is, finding the proportion of data that fall within a certain range, say between x1 and x2.
      i. Convert the x values (x1 and x2) to z values.
      ii. Look up the z values in Table A and use “the fact that the total area under the curve is 1 to find the required area under the standard Normal curve.”
      iii. Example 3.8, p. 85.
9. A general approach for finding a cumulative proportion for a certain value in a normal distribution.
   a. Draw a picture of a distribution, showing the area for which you are looking.
   b.
      Write out the problem in the following form.
      i. P(x < “some value”)
         1. When determining the proportion below some value.
      ii. 1 – P(x < “some value”)
         1. When determining the proportion above some value.
      iii. P(x < “some value”) – P(x < “some other value”)
         1. When determining the proportion between two values (where “some value” is the larger of the two).
   c. Calculate the z-score (the number of standard deviations away from the mean):
      i. Convert an x value from the above step to a z value using the formula: z = (x – μ) / σ
   d. Use the table to look up the corresponding proportion(s).
   e. Use the proportions to calculate your answer.
10. A general approach for finding a value for a certain proportion in a normal distribution.
   a. Draw a picture displaying the relevant cumulative proportion (the area to the left of a certain value).
      i. This may be 1 – “the proportion given in the problem” if the problem asks you to find the value corresponding to a certain proportion above that value.
   b. Find the z-score: Look up the relevant proportion in Table A and find the corresponding z value.
   c. Calculate the x value using the formula.
      i. x = μ + z σ
11. Example of the usefulness of the normal distribution
   a. If you had sample data on a certain variable for a group of individuals, you could calculate proportions you were interested in using the following steps:
      i. Assume (following a quick confirming glance at the histogram for the sample) that the variable is distributed Normally.
      ii. Estimate the mean and standard deviation of the Normal distribution using the sample mean and standard deviation.
      iii. Use the z formula and the standard Normal table to do such things as calculate the proportion of individuals in the full population whose value (for the variable being considered) falls below a certain level.

Chapter 4: Scatterplots and Correlation
1. Explanatory and response variables
   a. Explanatory variable (causal or independent variable): a variable which “may explain or influence changes in the response variable.”
   b.
      Response variable (dependent variable): a variable which “measures an outcome of a study.” Alternatively, a variable that responds to changes in the explanatory variable.
   c. One way to identify when there is a true explanatory-response relationship is when a treatment of the explanatory variable causes changes in the response variable.
   d. Example 4.1
   e. It is not always obvious whether there is an explanatory-response relationship between variables.
      i. Example: television and health in cross country data
      ii. Even when we suspect there is an explanatory-response relationship between two variables, it is sometimes not obvious which is the explanatory and which is the response variable.
      iii. Example: police and crime in cross city data
   f. Correlation, the emphasis of this chapter, measures how two variables are related, but not whether the relationship is due to an explanatory-response relationship.
2. Displaying relationships between data: scatterplots
   a. Up to now we have focused on describing one variable: mean, standard deviation, histogram (distribution), etc. In the next few chapters we will focus on the relationship between two different variables.
      i. Table 4.1
   b. A scatterplot is drawn using observations on two variables from a group of individuals. Each point on the graph represents the following ordered pair for each individual: (observation on first variable for a given individual, observation on second variable for that same individual).
      i. Figure 4.2
   c. Example – height vs. weight: utilize a table with observations on two variables for a group of individuals to “fill in” a graph where one variable is represented on the x axis and the other variable is represented on the y axis.
   d. A scatterplot will often give insight into the relationship between two variables. (That is, a scatterplot will often give insight into how two variables are related.)
      i. Positive relationship: if a rise in one variable is associated with a rise in the other.
      ii.
         Negative relationship: if a rise in one variable is associated with a fall in the other.
      iii. No relationship: if a rise in one variable is associated with no particular change in the other variable.
2. Interpreting scatterplots
   a. Overall pattern of relationship
      i. Direction of relationship
         1. Positive association
         2. Negative association
         3. Note: if the line is sloped upwards, the relationship is positive. If the line is sloped downwards, the relationship is negative.
      ii. Form of relationship (e.g., linear)
      iii. Strength of relationship (strong or weak)
         1. The closer the pattern of the data is to a curve / line (that is, the more compactly the data cluster around a curved or straight line), the stronger the relationship.
   b. Deviations from the pattern
      i. Outliers
3. Adding categorical variables to scatterplots
   a. This is done by using a different plot color or symbol for individuals in each category.
   b. Doing so can provide information useful in understanding data. For example, it can provide information useful when trying to determine whether the relationship between variables is causal or not.
      i. Graph: % white versus AFDC payments.
   c. Figure 4.3.
4. Measuring linear association: Correlation
   a. $r = \frac{1}{n-1} \sum_{i=1}^{n} \left(\frac{x_i - \bar{x}}{s_x}\right)\left(\frac{y_i - \bar{y}}{s_y}\right)$, where $\bar{x}$ and $\bar{y}$ are the sample means (Xbar and Ybar) and $s_x$ and $s_y$ are the sample standard deviations of the two variables.
   b. Correlation tells us about the direction and strength of the LINEAR relationship between two variables. (Two variables may be strongly related, but if the relationship is not close to linear, the correlation will have a low absolute value.)
      i. r will always be between -1 and +1.
      ii. A positive r implies a positive relationship between the variables and a negative r implies a negative relationship between the variables.
      iii. The more closely the pattern of the data seen in a scatterplot resembles a straight line, the greater the absolute value of r.
   c. Figure 4.5.
   d. Chapter 4 example (Excel spreadsheet at course website)
5. Facts about correlation
   a.
      “Correlation makes no distinction between explanatory and response variables.”
   b. “Because r uses the standardized values of the observations, r does not change when we change the units of measurement of x, y, or both.”
   c. “Positive r indicates positive association between the variables, and negative r indicates negative association.”
   d. “The correlation r is always a number between -1 and 1.”
   e. “Correlation requires that both variables be quantitative, so that it makes sense to do the arithmetic indicated by the formula for r.”
   f. “Correlation measures the strength of only the linear relationship between two variables. Correlation does not describe curved relationships between variables, no matter how strong they are.”
   g. “Like the mean and standard deviation, the correlation is not resistant: r is strongly affected by a few outlying observations.”
   h. “Correlation is not a complete summary of two variable data, even when the relationship between the variables is linear.”
6. When a scatterplot (and a correlation calculation) reveals a relationship between two variables, it is not necessarily implied that the relationship is causal. Therefore, a scatterplot (and a correlation calculation) cannot tell us whether the relationship between the two variables is causal.
   a. One variable has a causal effect on another variable when in a properly done experiment an increase in the causal variable results in a change in the average level of the other variable.
   b. One example of why two variables would be correlated even though there is no causal relationship is when a third variable omitted from the analysis has a causal impact on both variables.
   c. When making a scatterplot and a causal relationship between the two variables is suspected, the explanatory variable (causal variable) is placed on the horizontal axis and the response variable (other variable) is placed on the vertical axis.
      i.
         It is not implied that a variable is explanatory just because it is placed on the x axis and declared by a person doing a study to be an explanatory variable. If the “explanatory” variable does not have a causal impact on the response variable, then it is not an explanatory variable.
   d. Examples:
      i. Height plotted against weight
         1. Which is the explanatory variable and which is the response variable?
      ii. Price of house plotted against price of car
         1. Is there “another variable” which is driving this correlation?
      iii. Shoe size plotted against number of basketball games played.
         1. Is there “another variable” which is driving this correlation?

Chapter 5: Regression
1. Regression Line: “A straight line that describes how a response variable y changes as an explanatory variable x changes.”
   a. Explanatory variable: the x variable.
   b. Response variable: the y variable.
   c. Figure 5.1.
   d. Basically, a regression line is the equation of the line which comes as close as possible to matching the data in the scatterplot.
   e. The explanatory variable does not necessarily cause changes in the response variable.
      i. Example:
         1. Explanatory variable: expensive restaurant dinners per month.
         2. Response variable: price of car
2. The Least-Squares Regression Line: the least squares regression line of y on x is the line that makes the sum of the squares of the vertical distances of the data points from the line as small as possible.
   a. Figure 5.5.
   b. y-hat = a + bx
      i. This is a linear relationship because it has the general form of a line: y = mx + b. The a in y-hat = a + bx corresponds to the b in y = mx + b, and bx corresponds to mx.
   c. a: mathematically, a is the y axis intercept of the line. Conceptually, it is the value of the response variable y when the explanatory variable x = 0.
   d. b: mathematically, b is the slope of the line. Conceptually, it is the amount that the response variable y changes in response to a 1 unit change in the explanatory variable x.
   e.
      For any level of x, the height of the line tells us the predicted level of y. y-hat from the above equation tells us the same thing.
   f. We use a linear regression to do the following:
      i. b: Estimate the amount that y changes in response to a 1 unit change in x.
      ii. y-hat: Predict the level of y for any given level of x.
         1. To get a prediction of y, just plug x into the least-squares regression equation: y-hat = a + bx
   g. See Chapter 5 Example Problem at course website.
3. Facts about least-squares regression
   a. Fact 1: “The distinction between explanatory and response variables is essential in regression.”
      i. If we switch the variables on the x and y axis, we get a different b.
   b. Fact 3: r^2 is the square of the correlation. It is the fraction of the variation in the y values that is explained by the regression of y on x.
      i. A higher r^2 implies that we have a “better” prediction of y.
      ii. However, r^2 does not tell us anything about how accurate the estimate of b is, where b is the estimated impact of a one unit change of x on y.
      iii. If there were two sets of data that both had the same regression line, the data set with a scatterplot that had a pattern of data more closely resembling a line would have the higher r^2.
4. Residuals: “The difference between an observed value of the response variable and the value predicted by the regression line.”
   a. residual = observed y – predicted y
   b. residual = y – y-hat
   c. Figure 5.2.
   d. Figure 5.5.
   e. On the graph, this is just the vertical distance between the predicted level of Y for a given level of X (Y-hat, which is the height of the line which most closely matches the data, for a given level of X: Y-hat = a + bX), and the actual level of Y for that same level of X.
   f. “The mean of the least squares residuals is always zero.”
      i. This is one of the conditions for the least squares minimization.
   g. “A residual plot is a scatterplot of the regression residuals against the explanatory variable.
      Residual plots help us assess how well a regression line fits the data.”
      i. Figure 5.6.
5. Influential observation: an observation that noticeably changes the level of b when removed.
   a. Note that not all outliers are influential.
      i. Figure 5.7.
      ii. Figure 5.8.
6. Cautions about correlation and regression
   a. “Correlation and regression lines describe only linear relationships.”
   b. “Correlation and least-squares regression lines are not resistant to outliers.”
   c. Beware of predictions made from extrapolation
      i. Extrapolation: using a regression line to predict the value of a y variable when the level of the x variable is far outside the range of the actual x data.
7. Association Does Not Imply Causation
   a. “An association between an explanatory variable x and a response variable y, even if it is very strong, is not by itself good evidence that changes in x actually cause changes in y.”
      i. That is, “a strong association between two variables is not enough to draw conclusions about cause and effect.”
      ii. “The best way to get good evidence that x causes y is to do an experiment.”
   b. “The relationship between two variables can often be understood only by taking other variables into account. Lurking variables can make a correlation or regression misleading.”
      i. Lurking variable: “A lurking variable is not among the explanatory or response variables in a study and yet may influence the interpretation of relationships among those variables.”
      ii. “You should always think about possible lurking variables before you draw conclusions [on causality] based on correlation or regression.”

Chapter 8: Sampling (to get accurate data from which estimates can be made)
1. Introduction
   a. Before we can answer any statistical questions or perform any statistical analysis (like calculating a regression estimate of b, or calculating the mean for a variable) we need to collect (or have someone else collect) data. This chapter is about the process by which we collect data.
2.
   Population versus sample
   a. “The Population in a statistical study is the entire group of individuals about which we want [to acquire] information.”
   b. A Sample is a subset of the population from which we actually collect information.
      i. The sample is typically utilized as an alternative to the population because it is too difficult or costly to collect data on the entire population.
   c. Sampling design: the process by which a sample is chosen from the population.
   d. Sample survey: a survey given to a sample that is used to acquire information about a population.
   e. The goal of a sample is for it to be reflective of a population so that it can yield accurate information about the population. However, many samples, because of the way in which they are chosen, are not reflective of the population.
3. Sample Bias – How to sample badly
   a. Biased Sample: A sample that systematically favors certain individuals from the population and hence systematically favors certain outcomes – for example, systematically favors a higher or lower mean of a variable.
      i. A sample that is drawn from a non-representative subset of the population is often biased.
   b. Convenience sample: “A sample selected by taking the members of the population that are easiest to reach.”
      i. Convenience samples are often biased samples.
4. Simple random Samples
   a. Simple Random Sample (SRS): A sample where each member of the population has an equal probability of being selected, and every possible sample has an equal probability of being selected.
      i. An SRS is one type of probability sample.
   b. A random sample is unbiased. It does not systematically favor any individuals from the population. That is, we expect that a random sample is not biased towards (i.e., does not contain “too much” of) any particular type of individual from the population.
   c. Note, however, that even random samples may not be representative of the population.
The reason is that even if a sample is chosen randomly, by chance the sample still may end up over-representing certain types of individuals from the population, even if we didn’t expect the sample to over-represent any group. This is particularly true with small samples. 5. Inference about the population a. “The purpose of a sample is to give us information about a larger population. The process of drawing conclusions about a population on the basis of sample data is called inference because we infer information about the population from what we know about the sample.” b. Unfortunately, “it is unlikely that results from a random sample are exactly the same as for the entire population … the sample results will differ somewhat, just by chance.” c. “Properly designed samples avoid systematic bias, but their results are rarely exactly correct and they vary from sample to sample.” d. “One point is worth making now: larger random samples give more accurate results than smaller samples.” 6. Other sampling designs a. Stratified random sample: “First classify the population into groups of similar individuals, called strata. Then choose a separate SRS in each stratum and combine these SRSs to form the full sample.” i. This is another kind of probability sample. ii. Stratified random samples are often used to get a more accurate description of small groups. By “oversampling” a small group, we can get a more accurate description of that group. 1. Note that before the oversampled group (stratum) is combined with the other strata, it must be weighted appropriately. 7. Cautions about sample surveys (Potential sources of bias in a sample) a. Undercoverage: When the chosen sample excludes a group (or groups) from the population. b. Nonresponse: “when an individual chosen for the sample can’t be contacted or refuses to participate.” c. Response bias: When an individual’s responses to questions are biased. Examples of response bias include the following. i. 
“People know that they should take the trouble to vote, for example, so many who didn’t vote in the last election will tell an interviewer that they did.” ii. “The race or sex of the interviewer can influence responses to questions about race relations or attitudes toward feminism.” iii. “Answers to questions that ask respondents to recall past events are often inaccurate because of faulty memory.” d. Wording of questions: “Confusing or leading questions can introduce strong bias, and changes in wording can greatly change a survey’s outcome.” Chapter 9: Experiments (to measure causal effects) 1. Introduction a. The principles in this chapter pertain only to samples taken to analyze the effect of one variable on another (for example, the effect of education on earnings). b. The principles in chapter 8 pertain to both samples taken to analyze certain variables (for example, the mean height of a CWU student) and samples taken to analyze the effect of one variable on another (for example, the effect of education on earnings). 2. Experimental studies versus observational studies a. Explanatory variable: The treatment is referred to as the explanatory variable. b. Response Variable: The effect being measured is referred to as the response variable. c. Experimental study: a group of study participants is divided up into a treatment group (or groups) and a non-treatment group (control group). The treatment group is then given a treatment. The effect of the treatment on the response variable is then compared across the two groups. i. The experimenter decides who receives the treatment. d. Observational study: data are collected from members of the population who have received varying degrees of the treatment variable, but where the treatment decision was not made by the experimenter. The effect of the treatment (explanatory variable) on the response variable is then analyzed. i. The experimenter does not decide who receives the treatment.
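The defining feature of an experimental study, that the experimenter decides who receives the treatment, can be illustrated with a small sketch. This is only an illustration under assumptions: the subject labels, the seed, and the function name randomly_assign are hypothetical and do not come from the textbook, and the split uses chance assignment, which is one common way for an experimenter to make the treatment decision.

```python
import random


def randomly_assign(subjects, seed=None):
    """Split subjects by chance into a treatment group and a control group."""
    rng = random.Random(seed)      # a seed makes the illustration repeatable
    shuffled = list(subjects)      # copy so the caller's list is left unchanged
    rng.shuffle(shuffled)          # put the subjects in a random order
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


# Hypothetical study participants (labels invented for illustration).
subjects = [f"subject_{i}" for i in range(1, 11)]
treatment, control = randomly_assign(subjects, seed=221)

print("Treatment group:", sorted(treatment))
print("Control group:  ", sorted(control))
```

In an observational study there is no step like randomly_assign: the groups are whatever the subjects' own choices or circumstances produced.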
3. Control: The Key Element Behind The Difference Between Experimental and Observational Studies a. The Relationship Between Confounding Lurking Variables and Control 4. Potential problems with observational studies a. Lurking variable: “A lurking variable is not among the explanatory or response variables in a study and yet may influence the interpretation of relationships among those variables.” b. Confounding: “Two variables (for example, an explanatory variable and a lurking variable) are confounded when their effects on a response variable cannot be distinguished from each other.” c. Observational studies often have confounding lurking variables due to differences in characteristics between the treatment and control group. When there is a confounding lurking variable, the measured effect of the explanatory variable on the response variable is biased. d. Figure 9.1 5. Experiments a. Vocabulary i. Subjects: the individuals studied in an experiment. ii. Factors: the explanatory variables in an experiment. 1. Factors can be combined to make up a treatment. 2. Different degrees of a factor (for example, different amounts of a drug) can be used to create different treatments. iii. Treatment: “Any specific experimental condition applied to the subjects. If an experiment has several factors, a treatment is a combination of specific values for each factor.” 1. In the last chapter, a treatment meant either receiving a factor or not receiving it. In this chapter, a treatment can consist of different combinations of factors/non-factors. b. Visual representation of combining factors to get different treatments. i. Example 9.3. ii. Figure 9.2 c. Advantages i. Well-run experiments often do not have confounding lurking variables. Therefore, they avoid the bias created by confounding lurking variables. ii. “We can study the combined effects of several factors simultaneously.” 6. How to experiment badly a. Don’t randomly select who receives the treatment (or the different treatments). 
When individuals aren’t randomly chosen to receive the treatment, experiments become susceptible to the bias created by confounding lurking variables. i. “A simple design often yields worthless results because of confounding with lurking variables.” 7. The logic of randomization in comparative experiments a. “Random assignment of subjects forms groups that should be similar in all respects before the treatments are applied.” b. “Comparative design ensures that influences other than the experimental treatments operate equally on all groups.” c. “Therefore, differences in average response must be due either to the treatments or to the play of chance in the random assignment of subjects to the treatments.” i. Statistical significance: “An observed effect so large that it would rarely occur by chance is called statistically significant.” ii. “If we assign many subjects to each group … the effects of chance will average out and there will be little difference in the average responses in the two groups unless the treatments themselves cause a difference.” 8. How to experiment well: Randomized comparative experiments: “An experiment that uses both comparison of two or more treatments and chance assignment of subjects to treatments…” a. Completely randomized experiment: “All the subjects are allocated at random among all the treatments” i. Visual representation of a completely randomized experiment: 1. Figure 9.3 b. Control group: The group in an experiment that receives no treatment, or that receives an alternative treatment to which the treatment being analyzed is being compared. i. Another visual representation of a completely randomized experiment: 1. Figure 9.4 9. Cautions about experimentation a. “The logic of a randomized comparative experiment depends on our ability to treat all the subjects identically in every way except for the actual treatments being compared.” i. Placebo: a placebo is a dummy, or fake, treatment. 
Placebos are useful in experiments because individuals given a treatment often show an effect just because of the belief that an effect should occur. To control for this effect, the control group is given a placebo. ii. Double-blind: an experiment is double-blind when neither the individuals receiving the treatments, nor the scientist analyzing the effects of the treatment, are aware of who received the actual treatment and who received the placebo. Double-blind experiments are useful because scientists recording effects will often record an effect if they expect to see one. iii. Lack of realism: When “the subjects or treatments or setting of an experiment … [do] not realistically duplicate the conditions we really want to study.” An unrealistic environment often influences the degree of the effect of a treatment. 10. Principles of experimental design a. Randomize b. Control Group (for comparison purposes) c. Use enough subjects (i.e., have a large sample size) d. Other i. Placebo ii. Double-blind iii. Realism 11. Matched pairs and other block designs a. Matched pair design: “A matched pairs design compares just two treatments. Choose pairs of subjects that are as closely matched as possible. Use chance to decide which subject in a pair gets the first treatment. The other subject in that pair gets the other treatment. That is, the random assignment of subjects to treatments is done within each matched pair, not for all subjects at once. Sometimes each ‘pair’ in a matched pairs design consists of just one subject, who gets both treatments one after the other. Each subject serves as his or her own control. The order of the treatments can influence the subject’s response, so we randomize the order for each subject.” b. Block design: There is non-random assignment into blocks, or groups (for example men and women), before there is random assignment of treatments. i. Figure 9.5 12. Homework a. 
Note, any time randomization is required for a problem, please use the Simple Random Sample applet at the textbook website. Chapter 10: Introducing Probability 1. The idea of probability a. Random: “We call a phenomenon random if individual outcomes are uncertain but there is nonetheless a regular distribution of outcomes in a large number of repetitions.” i. Alternatively: We call a phenomenon random if individual outcomes are uncertain but there is a distribution which describes the probability of different possible outcomes. ii. Example 10.2: the proportion of heads is random. It is uncertain what the number of heads will be. 1. Nonetheless there is a distribution over the different possible proportions for a given number of tosses. b. Random variable: “A variable whose value is a numerical outcome of a random phenomenon.” c. Probability: The likelihood that something will occur. i. “The probability of any outcome of a random phenomenon is the proportion of times the outcome would occur in a very long series of repetitions.” ii. Example 10.2: the probability of getting a “head” is approximately 0.5. But what is it exactly? 1. Figure 10.1 2. How do you know the probability of getting a head is 0.50? Is it really 0.50? 3. What is the probability of getting a head with a weighted coin? 4. Note the great degree of variability in the percentage of heads at smaller sample sizes. 5. Note that the degree of variability in the percentage of heads diminishes with larger sample sizes. 6. How many repetitions (how large a sample size) do you need to know whether you have an accurate estimate of the probability? iii. The law of large numbers: the idea that the proportion/percentage approaches the probability as the number of trials increases. 1. Figure 10.1 d. Digression: The law of large numbers applies to the sample mean: i. Vegetable ratings: How should we sort? ii. 
Finding a lawn and garden gift for your parents at Amazon.com: why does Amazon filter out products with small sample sizes? e. Probability distribution: “The probability distribution of a random variable X tells us what values X can take [think of the different values as different events] and how to assign probabilities to those values.” 2. A new interpretation of distributions. a. Probability vs proportion/percentage: i. Probability and proportion/percentage are closely linked. If the proportion/percentage of times that the event occurred in the population was PROB, then the probability of the event occurring in a random sample would be PROB. 1. For example, if the proportion of CWU students below 68 inches in height is 0.50, then if we randomly selected one CWU student there would be a 50 percent chance (0.50 probability) of the student having a height below 68 inches. b. In chapter 1, distributions represented the percent of data in a certain range or category. c. Now, a distribution represents the probability of getting a certain outcome FOR ONE OBSERVATION. d. This new interpretation is the most practical application of distributions for you personally. It has the most relevance to your everyday decisions. The outcome associated with virtually every decision that you make is a random variable. e. Examples: i. Medical decisions and surgical outcomes. ii. Purchase decisions and product quality outcomes (in an environment of poor quality control). iii. The college decision and your salary outcome? 3. Continuous probability models a. Definition: A probability model with a continuous sample space is called continuous. i. “A continuous probability model assigns probabilities as areas under a density curve. The area under the curve and above any range of values is the probability of an outcome in that range.” ii. Example: the uniform density curve. 1. “The uniform density curve spreads probability evenly between 0 and 1.” 2. Figure 10.5 3. 
Calculate the probability of being between 0 and 0.5. iii. Note: “The probability model for a continuous random variable assigns probabilities to intervals of outcomes rather than to individual outcomes. In fact, all continuous probability models assign probability 0 to every individual outcome. Only intervals of values have positive probability.” iv. Example: The Normal density curve. 1. “Normal distributions are probability models.” 2. Figure 10.6 Chapter 11: Sampling Distributions 1. Parameters and statistics a. Parameter: “A number that describes the population. In statistical practice, the value of a parameter is not known because we cannot examine the entire population.” i. μ ii. p b. Statistic: “A number that can be computed from the sample data without making use of any unknown parameters.” i. Statistics are often used to estimate unknown population parameters. For example, we use the sample mean to estimate the unknown population parameter – the population mean. ii. Xbar iii. Phat c. The emphasis in this class is using the statistic Xbar (the sample mean) to estimate the parameter μ (the population mean). 2. Statistical estimation and the law of large numbers. a. “Because [even] good samples are chosen randomly, statistics such as Xbar are random variables.” b. Law of large numbers: “Draw observations at random from any population with finite mean μ. As the number of observations drawn increases, the mean Xbar of the observed values gets closer and closer to the mean μ of the population.” i. Figure 11.1 3. Sampling Distributions – The Intuition a. The Objective – Estimating the population mean: The ultimate objective in this course is to acquire an estimate of the population mean (the parameter). We will use Xbar (a statistic) as an estimate of the population mean. But it is only an estimate. b. Xbar is a random variable: we don’t know who will end up in our sample used to calculate Xbar. Therefore Xbar is a random variable. c. 
Xbar has a distribution: as a random variable, Xbar has a distribution. We call Xbar’s distribution a “sampling distribution.” d. The center of the distribution of Xbar: Where do you think it is? e. The spread (standard deviation) of the distribution of Xbar: what do you think happens to it if the sample size increases? f. The shape of the distribution of Xbar: How would we go about determining the shape of the distribution of Xbar? i. How did we find the shape of the distribution of X in chapter 1? ii. How should we find the shape of the distribution of Xbar now? g. The shape of the distribution of Xbar: The Central Limit Theorem (see below). 4. Sampling distributions a. Definition: “The distribution of values taken by the statistic in all possible samples of the same size from the same population.” b. Example: Xbar i. Xbar is a statistic; it is the sample mean. ii. There is a distribution on Xbar. That is, Xbar can take any one of a number of possible values, each with a certain probability of occurring. c. Figure 11.2 5. The distribution (sampling distribution) of Xbar: shape, center, & spread. a. “Suppose that Xbar is the mean of an SRS [simple random sample] of size n drawn from a large population with mean μ and standard deviation σ. Then the sampling distribution of Xbar has mean μ and standard deviation σ/√n.” i. Mean of Xbar (μxbar): μ 1. Where μ is the mean of x. 2. “Because the mean of Xbar is equal to μ, we say that the statistic Xbar is an unbiased estimator of the parameter μ.” ii. Standard Deviation of Xbar (σxbar): σ/√n 1. Where σ is the standard deviation of x. 2. Figure 11.3 3. The likelihood that the sample mean falls close to the population mean is determined by the spread of the sampling distribution. 6. The shape of the distribution of Xbar – 3 cases. a. Case 1: If x has the N(μ, σ) distribution, then the sample mean Xbar of an SRS of size n has the N(μ, σ/√n) distribution. b. 
Case 2: In reality, for small samples, the shape of the distribution of Xbar is similar to the distribution of X, whatever that distribution looks like, normal or not. c. Case 3: It turns out that the larger the sample, the more the shape of the distribution looks like a Normal distribution, regardless of the distribution of X. d. Figure 11.4 7. The Central Limit Theorem and the shape of the sampling distribution a. If n is large for an SRS from any population with mean μ and finite standard deviation σ, Xbar is approximately N(μ, σ/√n), regardless of the distribution of x. i. Figure 11.4 ii. Figure 11.5 iii. Figure 11.6 8. Chapter 3 Review a. It is very useful to review Chapter 3 before doing your homework assignment, as many of the principles in the chapter are applications of the same principles learned in Chapter 3. Chapter 14: Confidence Intervals – The Basics 1. Introduction a. Statistical inference: “Statistical inference provides methods for drawing conclusions about a population from sample data.” i. Statistical inference allows us to “infer” information about the population from the sample data. b. Simple Conditions For Inference About A Mean. i. “We have an SRS from the population of interest. There is no nonresponse or other practical difficulty.” ii. “The variable we measure has a perfectly Normal distribution N(μ, σ) in the population.” iii. “We don’t know the population mean μ. But we do know the population standard deviation σ.” 2. The Reasoning of Statistical Estimation a. The 68-95-99.7 rule for Normal distributions says that in 95% of samples: i. Xbar will fall between μ – 2 × σxbar and μ + 2 × σxbar 1. Another way of writing this range is: a. μ ± 2 × σxbar b. Another way of saying the same thing is that in 95% of samples, μ will fall within the range: i. Xbar ± 2 × σxbar ii. Figure 14.1: sample means fall somewhere in the sampling distribution. iii. 
Figure 14.2: approximately 95% of the above defined intervals around the sample means will capture the unknown mean μ of the population. 3. Margin of Error and Confidence Interval a. A confidence interval captures the idea that the mean from a sample is a less accurate estimate (has more variability) when the sample size is smaller. b. See Figure 10.1. c. The form of a confidence interval: i. estimate ± margin of error 1. “A confidence level C, … gives the probability that the interval will capture the true parameter value in repeated samples.” 2. Xbar ± 2 × σxbar is an example of a 95% confidence interval. d. Interpreting a confidence interval: i. “The confidence level is the success rate of the method that produces the interval.” ii. “We got these numbers using a method that gives correct results 95% of the time.” 4. Confidence Intervals for a Population Mean: a. Xbar ± z* × (σx / √n) i. Note: σxbar = σx / √n b. z*, called the critical value, is the number of standard deviations on either side of the mean that a confidence interval of size C extends. i. Figure 14.3 c. To find z*, do the following: i. The easiest way to find z* is to look it up in the z* row near the bottom of Table C. 5. Confidence intervals: the four-step process to solving confidence interval problems a. State i. “We are estimating the mean …” (Identify the variable here.) b. Formulate i. “We will estimate the mean μ using a confidence interval of size C.” (C is whatever size confidence interval you are asked to calculate.) c. Solve i. Check the conditions for the test you plan to use. 1. SRS 2. Normally distributed random variable 3. Known σ ii. Calculate the confidence interval. 1. Xbar ± z* × (σx / √n) a. Find z* from the z* row near the bottom of Table C. d. Conclude i. “We are C% confident that the mean … is between … and …” (Use the range implied from the confidence interval calculated above.) 6. How Confidence Intervals Behave a. Margin of error i. 
z* × (σx / √n) is called the margin of error. Chapter 15: Tests of Significance (Hypothesis Tests) – The Basics 1. The Reasoning of Tests of Significance (Hypothesis Tests) a. We make a hypothesis on a population parameter, and then test whether the sample statistic (e.g., Xbar) is consistent with that hypothesis. i. Make a hypothesis ii. Center the hypothesized distribution of Xbar on μ. iii. Accept or reject the hypothesis based on how far Xbar lies from the center of the sampling distribution. 2. Stating Hypotheses a. Start with some finding for which you are trying to find evidence. That is, start with a claim about the population for which you are trying to find evidence. b. Formulate an Alternative Hypothesis: Frequently, but not always, “… the claim about the population that we are trying to find evidence for…” i. For example, if you are trying to find evidence that something has a non-zero effect, the alternative hypothesis is that the effect is not zero (and the null hypothesis is that there is zero effect). ii. Denoted Ha iii. A claim about a population parameter. iv. An alternative hypothesis always takes one of the following forms: 1. μ ≠ “hypothesized value” a. This is a two-sided hypothesis. b. Example: testing whether pizza the night before an exam has any effect on exam performance. 2. μ > “hypothesized value” a. This is a one-sided hypothesis. b. Example: testing whether a college education has a positive effect on earnings. 3. μ < “hypothesized value” a. This is a one-sided hypothesis. v. “The alternative hypothesis is one-sided if it states that a parameter is larger than or smaller than the null hypothesis value. It is two-sided if it states that the parameter is different from the null value.” c. Formulate a Null Hypothesis: “The statement being tested…” i. Denoted Ho ii. It is a claim about a population parameter. iii. It is the opposite of Ha. iv. The book always uses the first form below for the null hypothesis; however, more typically a null hypothesis takes one of the following forms. 1. 
Ho: μ = “hypothesized value” (when Ha is μ ≠ “hypothesized value”) 2. Ho: μ ≤ “hypothesized value” (when Ha is μ > “hypothesized value”) 3. Ho: μ ≥ “hypothesized value” (when Ha is μ < “hypothesized value”) d. Note: when the alternative hypothesis is the claim about the population for which you are trying to find evidence, rejecting the null is evidence in favor of your claim. 3. P values & Statistical Significance a. P Value i. Definition: “The probability, computed assuming that Ho is true, that the test statistic would take a value as extreme or more extreme than that actually observed is called the P-value of the test. The smaller the P-value, the stronger the evidence against Ho provided by the data.” ii. Alternative Definition: the probability, assuming Ho is true, of getting a value for Xbar at least as far from the hypothesized value as the one actually observed. b. Statistical Significance i. Definition: “If the P-value is as small or smaller than α, we say that the data are statistically significant at level α.” ii. When we say a result is “statistically significant at level α” we are saying that our rejection of the null hypothesis is significant at level α. iii. Note that “significant in the statistical sense does not mean important. It means simply not likely to happen by chance.” 4. Tests for a population mean a. Test Statistic i. Definition: a statistic that we construct to test the null hypothesis. ii. “The test statistic for hypotheses about the mean μ of a Normal distribution is the standardized version of xbar.” It is known as the z statistic. 1. z = (Xbar – μo) / (σx / √n) a. μo, the hypothesized value of μ in the null, is used because the test statistic is calculated under the assumption that the null hypothesis is correct. 2. The z statistic tells us how many standard deviations the observed value of Xbar is from the hypothesized value for μ. 5. Tests of significance: the four step process a. State i. State the problem in terms of a specific question about the mean. 1. “We are interested in knowing whether there is evidence that the mean … is …” b. Formulate i. 
Identify what μx is the mean of: 1. μx is the mean … (Identify the variable here.) ii. State the alternative hypothesis Ha. 1. Note: Ha will be the claim about the population for which we are trying to find evidence. It should be a restatement of the “State” step above, and should take one of the forms below. 2. Ha: a. μx ≠ “some number,” or b. μx > “some number,” or c. μx < “some number” iii. State the null hypothesis Ho. 1. Ho: a. μx = “same number in Ha” b. μx ≤ “same number in Ha” c. μx ≥ “same number in Ha” 2. Note: the book always puts μx = “same number in Ha”, but this can be confusing for students so you should not do it. c. Solve i. Check the conditions for the test you plan to use. 1. SRS 2. Normally distributed random variable 3. Known σx ii. Calculate the test statistic. 1. z = (Xbar – μo) / (σx / √n) iii. Draw a graph of the sampling distribution assuming that the null hypothesis is true and identify your value for Xbar in the distribution. iv. Find the p value. 1. If Ho is μx = “same number in Ha” a. and z > 0, then p value = 2 × (1 – “value in Table A”) b. and z < 0, then p value = 2 × “value in Table A” 2. If Ho is μx ≥ … a. p value is “value in Table A” 3. If Ho is μx ≤ … a. p value is 1 – “value in Table A” d. Conclude i. Describe your results in the context of the stated question. 1. If p ≤ α: “We find evidence against the null hypothesis that …” 2. If p > α: “We do not find evidence against the null hypothesis that …” 3. Note: α is the significance level. When α is not given, use 0.05. Chapter 18: Inference about a population mean 1. Conditions for inference a. The data are an SRS (simple random sample) from the population. b. The population distribution for the underlying random variable, x, is approximately Normal. i. The larger the sample size, the less important the Normality condition. ii. “In practice, it is enough that the distribution be symmetric and single-peaked unless the sample is very small.” 2. 
Estimating the standard deviation of the sampling distribution a. When we don’t know the population distribution standard deviation σ, we use s, the sample standard deviation, as an estimate. 3. The new test statistic: t rather than z a. t = (Xbar – μo) / (s / “square root n”) b. t has the t distribution with (n – 1) degrees of freedom. 4. Matched pairs t procedures a. Definition: “To compare the responses to the two treatments in a matched pairs design, find the difference between the responses within each pair.” This will create a new variable. You should use this new variable when doing your test of significance. 5. Robustness of t procedures a. “A confidence interval or significance test is called robust if the confidence level or P-value does not change very much when the conditions for use of the procedure are violated.” i. For example, even with outliers, the confidence level and significance test results will still be robust if the sample size is large enough. 6. Rules of thumb regarding robustness of t procedures a. “Except in the case of small samples, the condition that the data are an SRS from the population of interest is more important than the condition that the population distribution is Normal. b. Sample size less than 15: Use t procedures if the data appear close to Normal (roughly symmetric, single peak, no outliers). If the data are skewed or if outliers are present, do not use t. c. Sample size at least 15: The t procedures can be used except in the presence of outliers or strong skewness. d. Large samples: The t procedures can be used even for clearly skewed distributions when the sample is large, roughly n > 40.” 7. Tests of significance: the four step process a. State i. State the problem in terms of a specific question about the mean. 1. “We are interested in knowing whether there is evidence that the mean … is …” 2. 
Note, for matched pair sample designs, the specific question will be about the mean of a new variable you must create from the matched pair data. The new variable you create will typically be the difference between two variables for which you have observations. b. Formulate i. Identify what μx is the mean of: 1. μx is the mean … (Identify the variable here.) ii. State the alternative hypothesis Ha. 1. Note: Ha will be the claim about the population for which we are trying to find evidence. It should be a restatement of the “State” step above, and should take one of the forms below. 2. Ha: a. μx ≠ “some number,” or b. μx > “some number,” or c. μx < “some number” iii. State the null hypothesis Ho. 1. Ho: a. μx = “same number in Ha” b. μx ≤ “same number in Ha” c. μx ≥ “same number in Ha” 2. Note: the book always puts μx = “same number in Ha”, but this can be confusing for students so you should not do it. c. Solve i. Check the conditions for the test you plan to use. 1. SRS 2. Robustness of hypothesis tests using t. (In order to determine robustness, a histogram or stemplot must often be drawn for sample sizes less than 40.) a. For a sample size less than 15: t procedures (like hypothesis tests) are robust when the data appear close to Normal (roughly symmetric, single peak, no outliers). If the data are skewed or if outliers are present, t procedures are not robust. b. For a sample size from 15 to 39: t procedures are robust except in the presence of outliers or strong skewness. c. For a sample size of 40 or above: t procedures are robust even for clearly skewed distributions. ii. Calculate the test statistic. 1. t = (Xbar – μo) / (sx / √n) iii. Find the p value. 1. Find the p value from Table C. First, you need to find the row that is closest to the number of “degrees of freedom”, where the degrees of freedom are n – 1. Then find the two columns which the absolute value of your t value falls between.
The p value will be between the numbers in one of the last two rows of the table which correspond with those two columns. Of the two last rows, choose the one that corresponds with your Ha. If Ha has a > or < in it, then use the 2nd to last row. Alternatively, if Ha has a “≠” in it, use the last row. d. Conclude i. Describe your results in the context of the stated question. 1. If p ≤ α: “We find evidence against the null hypothesis that …” 2. If p > α: “We do not find evidence against the null hypothesis that …” 3. Note: α is the significance level. When α is not given, use 0.05. 8. The new confidence interval a. “A level C confidence interval for μ is:” i. Xbar ± t* × (s / √n) ii. t* is the critical value for confidence level C from the t distribution with (n – 1) degrees of freedom. 9. Confidence intervals: the four-step process to solving confidence interval problems a. State i. “We are estimating the mean …” (Identify the variable here.) b. Formulate i. “We will estimate the mean μ using a confidence interval of size C.” (C is whatever size confidence interval you are asked to calculate.) c. Solve i. Check the conditions for the test you plan to use. 1. SRS 2. Robustness of confidence intervals using t. (In order to determine robustness, a histogram or stemplot must often be drawn for sample sizes less than 40.) a. For a sample size less than 15: t procedures (like confidence intervals) are robust when the data appear close to Normal (roughly symmetric, single peak, no outliers). If the data are skewed or if outliers are present, t procedures are not robust. b. For a sample size from 15 to 39: t procedures are robust except in the presence of outliers or strong skewness. c. For a sample size of 40 or above: t procedures are robust even for clearly skewed distributions. ii. Calculate the confidence interval. 1. Xbar ± t* × (sx / √n) 2. Find t* from the appropriate row in Table C.
You need to find the row that is closest to the number of “degrees of freedom”, where the degrees of freedom are n – 1. (n is the sample size) d. Conclude i. “We are C% confident that the mean … is between … and …” (Use the range implied from the confidence interval calculated above.)
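The Chapter 18 calculations above, the t statistic and the confidence interval Xbar ± t* × (sx / √n), can be sketched in a few lines of Python. This is a minimal sketch, not the textbook's procedure: the data values, the hypothesized mean of 4.5, and the function names are invented for illustration, and t* is typed in by hand after being looked up in a t table (2.262 is the 95% critical value for 9 degrees of freedom), just as the notes look it up in Table C.

```python
import math
import statistics


def t_statistic(data, mu_0):
    """t = (Xbar - mu_0) / (s / sqrt(n)), with n - 1 degrees of freedom."""
    n = len(data)
    xbar = statistics.mean(data)
    s = statistics.stdev(data)          # sample standard deviation (divides by n - 1)
    return (xbar - mu_0) / (s / math.sqrt(n))


def t_confidence_interval(data, t_star):
    """Return (low, high) for Xbar +/- t* x (s / sqrt(n))."""
    n = len(data)
    xbar = statistics.mean(data)
    s = statistics.stdev(data)
    margin = t_star * s / math.sqrt(n)  # the margin of error
    return xbar - margin, xbar + margin


# Hypothetical sample of n = 10 observations (invented for illustration).
data = [4.0, 5.0, 6.0, 5.0, 4.0, 6.0, 5.0, 5.0, 4.0, 6.0]

# Assumption: 95% confidence, df = n - 1 = 9, so t* = 2.262 from a t table.
T_STAR_95_DF9 = 2.262

low, high = t_confidence_interval(data, T_STAR_95_DF9)
t = t_statistic(data, mu_0=4.5)         # mu_0 = 4.5 is a made-up null value

print(f"95% confidence interval: {low:.3f} to {high:.3f}")
print(f"t statistic: {t:.3f} with {len(data) - 1} degrees of freedom")
```

The sketch stops at the t statistic on purpose: the p value still has to be bracketed from Table C as described in the four-step process, and the robustness conditions should be checked before trusting either result.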