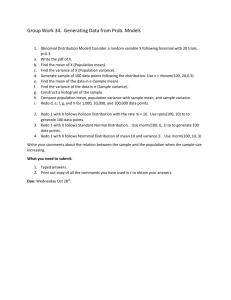

One Factor ANOVA Handout

EDF 802 Dr. Jeffrey Oescher

One Factor ANOVA

I.

Introduction

A.

Description

1.

An analysis of variance (ANOVA) is a procedure for examining the variance in some dependent variable in terms of the portion of variance that can be attributed to a specific independent variable. The independent variable is discussed as a factor in the analysis, and since there is only one independent variable the analysis is frequently referred to as a one factor ANOVA or a one-way ANOVA. In this analysis total variance is partitioned into 1) variation due to different levels of the independent variable, called between-group variance, and 2) variation within the levels of the independent variable, called within-group or random variance.

2.

The basic arrangement for a one-way ANOVA is shown below.

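| Subjects | Group 1 | Group 2 | Group 3 | Group k |
|---|---|---|---|---|
| 1 | $X_{11}$ | $X_{12}$ | $X_{13}$ | $X_{1k}$ |
| 2 | $X_{21}$ | $X_{22}$ | $X_{23}$ | $X_{2k}$ |
| 3 | $X_{31}$ | $X_{32}$ | $X_{33}$ | $X_{3k}$ |
| i | $X_{i1}$ | $X_{i2}$ | $X_{i3}$ | $X_{ik}$ |

where i indicates subjects, and k indicates group membership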

B.

Application

1.

Commonly used to test hypotheses about whether differences in performance across groups are statistically significant

2.

Groups typically represent different levels of some treatment

C.

Data - levels of measurement

1.

The dependent variable must be interval or ratio.

2.

The independent variable is categorical and can be nominal, ordinal, or, in some cases, a set of categories derived from interval or ratio data.

D.

Comments

1.

Error rates

a.

Comparison-wise error rate

i.

The probability of committing a Type I error for the comparison of two groups

ii.

This error rate is α (i.e., alpha)

b.

Experiment-wise error rate

i.

The probability of committing at least one Type I error across all comparisons of more than two groups

ii.

Multiple t-test example

iii.

The actual experiment-wise error rate can be calculated using the following formula

a) $\alpha_e \approx 1 - (1 - \alpha_c)^c$, where $\alpha_c$ is the alpha level for each comparison and $c$ is the number of pair-wise comparisons

b) Note that for a comparison of three groups (i.e., three pair-wise comparisons) using a comparison-wise error rate of .05, the approximate experiment-wise error rate is .14.

iv.

An adjustment can be made to the comparison-wise error rate used for each comparison using the following formula.

a) $\alpha_c \approx \alpha_e / c$, where $c$ is the number of comparisons and $\alpha_e$ is the desired experiment-wise error rate

b) Note that a comparison-wise error rate of .017 (i.e., .05 / 3) is needed for each of the three comparisons of groups to maintain a .05 experiment-wise error rate
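The two error-rate formulas above can be checked with a few lines of Python. This is a minimal sketch; the function names are illustrative and not part of the handout, and the first formula treats the comparisons as if they were independent.

```python
def n_pairwise_comparisons(k: int) -> int:
    """Number of pair-wise comparisons among k groups."""
    return k * (k - 1) // 2


def experimentwise_error_rate(alpha_c: float, c: int) -> float:
    """Approximate experiment-wise rate for c comparisons, each tested at alpha_c."""
    return 1 - (1 - alpha_c) ** c


def adjusted_comparisonwise_alpha(alpha_e: float, c: int) -> float:
    """Comparison-wise alpha that keeps the experiment-wise rate near alpha_e."""
    return alpha_e / c


c = n_pairwise_comparisons(3)                             # three groups -> 3 comparisons
print(round(experimentwise_error_rate(0.05, c), 2))       # 0.14
print(round(adjusted_comparisonwise_alpha(0.05, c), 3))   # 0.017
```

Dividing the desired experiment-wise rate by the number of comparisons is commonly called the Bonferroni adjustment.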

II.

Concepts underlying ANOVA

A.

Partitioning of variance

1.

Intuitive presentation

a.

Total variation

i.

The difference between an individual's score (i.e., $X_{11}$) and the grand mean (i.e., $\bar{X}$)

ii.

$X_{11} - \bar{X}$

b.

Within group variation

i.

The differences among subjects exposed to the same treatment

ii.

$X_{11} - \bar{X}_1$

iii.

Attributed to random sampling fluctuations

iv.

ANOVA is based on an assumption that within group variation is the same across all groups (i.e., homogeneity of variance)

c.

Between group variation

i.

The differences among group means

ii.

$\bar{X}_k - \bar{X}$

iii.

Attributed to variation from treatment effects and random sampling fluctuations

d.

The model

i.

The variation of all individual scores around the grand mean (i.e., total variation) can be described as the sum of the variation of the individuals around their group mean (i.e., within group variation) and the variation of the groups around the grand mean (i.e., between group variation).

ii.

$(X_{11} - \bar{X}) = (X_{11} - \bar{X}_1) + (\bar{X}_1 - \bar{X})$, where $k = 1$ for this score
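The partition described above can be verified numerically. The following sketch uses a small hypothetical data set (three groups of three scores, not taken from the handout) to show that each score's total deviation equals its within-group deviation plus its group's between-group deviation, and that the corresponding sums of squares add up in the same way.

```python
from statistics import mean

# Hypothetical scores (not from the handout): three groups of three subjects.
groups = {1: [4, 5, 6], 2: [7, 8, 9], 3: [1, 2, 3]}

all_scores = [x for scores in groups.values() for x in scores]
grand_mean = mean(all_scores)
group_means = {k: mean(scores) for k, scores in groups.items()}

ss_total = ss_within = ss_between = 0.0
for k, scores in groups.items():
    for x in scores:
        total_dev = x - grand_mean               # score vs. grand mean
        within_dev = x - group_means[k]          # score vs. its group mean
        between_dev = group_means[k] - grand_mean  # group mean vs. grand mean
        # The deviation identity holds for every individual score.
        assert abs(total_dev - (within_dev + between_dev)) < 1e-12
        ss_total += total_dev ** 2
        ss_within += within_dev ** 2
        ss_between += between_dev ** 2

print(ss_total, ss_within, ss_between)  # 60.0 6.0 54.0
```

The sums of squares are additive because the cross-product of the within and between deviations sums to zero within each group.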

2.

Linear model

$X_{ik} = \mu + \alpha_k + e_{ik}$

where

$X_{ik}$ = the $i$th score in the $k$th group

$\mu$ = the grand mean for the population

$\alpha_k$ = the effect of belonging to group $k$

$e_{ik}$ = random error associated with this score

3.

Algebraic perspective for generalizing across groups and individuals within groups (see handout from Hinkle, Wiersma, and Jurs)

B.

Variance estimates

1.

Sums of squares

a.

Total variation

b.

Within group variation

c.

Between group variation

2.

Mean squares

a.

Sums of squares divided by the appropriate degrees of freedom

i.

$MS_w = \dfrac{SS_w}{N - k}$

ii.

$MS_b = \dfrac{SS_b}{k - 1}$

3.

Expected mean squares

a.

Expected mean squares within

$E(MS_w) = \sigma_e^2$

b.

Expected mean squares between

$E(MS_b) = \sigma_e^2 + \dfrac{n \sum \alpha_k^2}{k - 1}$, where $n$ is the number of subjects in each group
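The expected mean squares can be illustrated by simulation. The sketch below generates many data sets from the linear model $X_{ik} = \mu + \alpha_k + e_{ik}$ and averages the resulting mean squares. All parameter values (grand mean 50, effects of -2, 0, and 2, error standard deviation 3, ten subjects per group) are hypothetical choices made for illustration, and the effects are assumed to sum to zero.

```python
import random
from statistics import mean


def mean_squares(groups):
    """Return (MS_between, MS_within) for a list of groups of scores."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    ss_b = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_w = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    return ss_b / (k - 1), ss_w / (n_total - k)


def simulate(mu, effects, sigma_e, n, reps, seed=0):
    """Average MS_between and MS_within over many simulated experiments."""
    rng = random.Random(seed)
    ms_b_values, ms_w_values = [], []
    for _ in range(reps):
        # One experiment: n scores per group drawn from mu + alpha_k + e_ik.
        groups = [[mu + a + rng.gauss(0, sigma_e) for _ in range(n)] for a in effects]
        ms_b, ms_w = mean_squares(groups)
        ms_b_values.append(ms_b)
        ms_w_values.append(ms_w)
    return mean(ms_b_values), mean(ms_w_values)


effects, sigma_e, n = [-2, 0, 2], 3, 10
expected_ms_w = sigma_e ** 2                                               # 9
expected_ms_b = sigma_e ** 2 + n * sum(a ** 2 for a in effects) / (len(effects) - 1)  # 49.0

avg_ms_b, avg_ms_w = simulate(50, effects, sigma_e, n, reps=4000)
print("MS_w: simulated", round(avg_ms_w, 2), "expected", expected_ms_w)
print("MS_b: simulated", round(avg_ms_b, 2), "expected", expected_ms_b)
```

Setting every effect to zero in `effects` makes both averages approach the error variance, which is the situation assumed under the null hypothesis.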

C.

Hypotheses

1.

$H_0: \mu_1 = \mu_2 = \dots = \mu_k$

2.

$H_1$: At least two $\mu_k$ are different

D.

Sampling distributions

1.

If $H_0$ is true

a.

Expected mean squares within - error variance

i.

$E(MS_w) = \sigma_e^2$

b.

Expected mean squares between - error variance plus no group effect based on the assumption the null hypothesis is true

i.

$E(MS_b) = \sigma_e^2$

c.

The ratio of $MS_b$ to $MS_w$ is distributed as an F-distribution

i.

$\dfrac{MS_b}{MS_w} \approx \dfrac{\sigma_e^2}{\sigma_e^2} = 1$

2.

If $H_0$ is false

a.

Expected mean squares within - error variance

i.

$E(MS_w) = \sigma_e^2$

b.

Expected mean squares between - error variance plus some group effect based on the assumption the null hypothesis is false

i.

$E(MS_b) = \sigma_e^2 + \dfrac{n \sum \alpha_k^2}{k - 1}$

c.

The ratio of $MS_b$ to $MS_w$ is distributed as a (noncentral) F-distribution

i.

$\dfrac{MS_b}{MS_w} \approx \dfrac{\sigma_e^2 + \dfrac{n \sum \alpha_k^2}{k - 1}}{\sigma_e^2} > 1$

3.

Statistical significance

a.

Determined by comparing the observed F-statistic to the underlying distribution of F with k - 1 and N - k degrees of freedom that was generated under the assumption the null hypothesis was true

b.

If the observed test statistic is typical of those F-statistics in this distribution, the null hypothesis is retained (i.e., not rejected)

c.

If the observed test statistic is atypical of those F-statistics in this distribution, the null hypothesis is rejected
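The idea of comparing an observed F-statistic to a distribution generated under a true null hypothesis can be sketched by simulation. The code below is an illustration rather than the procedure a statistics package uses: it draws every group from the same normal population, computes F each time, and reports the proportion of simulated F-statistics at least as large as the observed one. The group sizes are hypothetical, chosen only so that the degrees of freedom are 4 and 26 as in the example later in this handout.

```python
import random


def f_statistic(groups):
    """One-way ANOVA F-ratio (MS_between / MS_within) for a list of groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    ss_b = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_w = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    return (ss_b / (k - 1)) / (ss_w / (n_total - k))


def simulated_p_value(observed_f, group_sizes, reps=5000, seed=1):
    """Proportion of F-statistics simulated under H0 that are >= observed_f."""
    rng = random.Random(seed)
    count = 0
    for _ in range(reps):
        # Under H0 every group comes from the same population (here, N(0, 1)).
        groups = [[rng.gauss(0, 1) for _ in range(n)] for n in group_sizes]
        if f_statistic(groups) >= observed_f:
            count += 1
    return count / reps


sizes = [7, 6, 6, 6, 6]                  # hypothetical sizes: N = 31, k = 5
print(simulated_p_value(11.19, sizes))   # an atypical F: very small proportion
print(simulated_p_value(1.00, sizes))    # a typical F: large proportion
```

A small proportion means the observed F is atypical of the null distribution, which corresponds to rejecting the null hypothesis.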

III.

Assumptions underlying ANOVA

A.

Independence of samples

1.

Random samples require observations within groups to be uninfluenced by each other (e.g., individually administered treatments vs. classrooms in which treatments are occurring; two group counseling treatments where the interaction of group members is very high and quite important)

a.

Violation of this assumption increases the Type I error rate (e.g., a nominal .05 is more like .10)

b.

Compensation

i.

Decrease the nominal α (e.g., .05 → .01), knowing the actual α level is closer to .05

ii.

Use the group mean as the unit of analysis

c.

While this is a statistical concern, it is rarely considered in analyses in educational contexts

B.

Populations from which the samples are drawn are normally distributed

1.

The procedure (i.e., ANOVA) is robust with respect to the violation of this assumption

2.

The term robust means the results of the analysis will be minimally affected by the violation of the assumption

C.

Population variances are equal across groups (i.e., homogeneity of variance)

1.

Homogeneity of variance - the variances of the distributions in the populations are equal

a.

Levene's test for homogeneity of variance

i.

If the result is non-significant, the assumption has not been violated

ii.

If the result is significant, compensate through the interpretation of the results

b.

Sample size

i.

If sample sizes are equal or approximately equal (i.e., the ratio of the size of the largest sample to the size of the smallest sample is less than 1.5), the procedure is robust with respect to the violation of the homogeneity of variance assumption

ii.

If the sample sizes are not equal (i.e., the ratio of the size of the largest sample to the size of the smallest sample is greater than 1.5), there can be a problem

a) If the larger variance corresponds to the smaller sample, the F is liberal (e.g., alpha is .10 in reality when it was set at .05)

b) If the larger variance corresponds to the larger sample, then the F is conservative (e.g., alpha is .01 in reality when it was set at .05)
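The sample-size rule of thumb above can be written as a small decision function. This is only a sketch of the handout's rule for the case where the variances are already known to differ (e.g., after a significant Levene's test). The function name and return labels are illustrative, and treating a ratio of exactly 1.5 as "not robust" is an assumption, since the rule does not cover that boundary.

```python
def f_test_tendency(sizes, variances, max_ratio=1.5):
    """Classify the F test as robust, liberal, or conservative under unequal variances.

    sizes and variances are parallel lists, one entry per group.
    """
    ratio = max(sizes) / min(sizes)
    if ratio < max_ratio:
        return "robust"                       # sample sizes approximately equal
    size_of_most_variable = sizes[variances.index(max(variances))]
    if size_of_most_variable == min(sizes):
        return "liberal"                      # actual alpha exceeds the nominal alpha
    if size_of_most_variable == max(sizes):
        return "conservative"                 # actual alpha is below the nominal alpha
    return "unclear"                          # pattern not covered by the rule


print(f_test_tendency([10, 10, 12], [4, 9, 25]))   # robust
print(f_test_tendency([10, 20, 30], [25, 9, 4]))   # liberal
print(f_test_tendency([10, 20, 30], [4, 9, 25]))   # conservative
```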

IV.

Example

A.

D2 data set - see the file on Bb

B.

SPSS-Windows procedures

1.

ONEWAY ANOVA procedure

2.

GLM UNIVARIATE procedure

C.

Printout interpretation

1.

Assumptions

a.

Homogeneity of variance - Levene's test is non-significant ($F_{4,26} = 0.25$, p = .906)

b.

The procedure is robust with respect to the normality assumption

c.

Independence of samples is assumed

2.

Results

a.

Statistical significance

i.

The results are statistically significant ($F_{4,26} = 11.19$, p < .001)

ii.

$H_0$ is rejected - there is at least one pair of means that reflects a statistically significant difference

iii.

Since $H_0$ is rejected, power is not an issue. However, the computed level of power is 1.00

b.

Practical significance

i.

Partial eta squared is .63

ii.

This is interpreted as a large effect size

D.

Summarizing the analyses

The computed F-statistic is $F_{4,26} = 11.19$ (p < .001). The assumption of normality was not tested as the procedure is robust to its violation. The assumption of independence of observations is assumed to be true. The homogeneity of variance assumption was tested using Levene's statistic. The observed value was $F_{4,26} = 0.25$ (p = .906), indicating the equivalency of variances across groups. The observed level of power was 1.00. A large effect size was present (partial eta squared = .63). The following summary data are provided for the analysis.

Table 1

ANOVA Results

| Source | SS | df | MS | F | Sig |
|---|---|---|---|---|---|
| Between Groups | 150.50 | 4 | 37.63 | 11.19 | .000 |
| Within Groups | 87.43 | 26 | 3.36 | | |
| Total | 237.94 | 30 | | | |

The null hypothesis of equal mean scores across groups was rejected; there are statistically significant differences between at least two means.
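The mean squares, F-statistic, and effect size reported above follow directly from the sums of squares and degrees of freedom in Table 1. The short sketch below recomputes them. It relies on the fact that in a one factor ANOVA partial eta squared equals the between-groups sum of squares divided by the sum of the between-groups and within-groups sums of squares. The variable names are illustrative.

```python
# Values taken from Table 1.
ss_between, df_between = 150.50, 4
ss_within, df_within = 87.43, 26

ms_between = ss_between / df_between                   # mean square between
ms_within = ss_within / df_within                      # mean square within
f_ratio = ms_between / ms_within                       # observed F-statistic
eta_squared = ss_between / (ss_between + ss_within)    # effect size

print(round(ms_between, 3), round(ms_within, 2), round(f_ratio, 2), round(eta_squared, 2))
# 37.625 3.36 11.19 0.63
```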