Data mining, dummy variables

advertisement

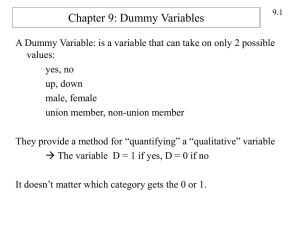

SPS 580 Lecture 7: Data Mining, Dummy Variables (notes)

I. THE LINEARITY ASSUMPTION

A. It's called multiple LINEAR regression because Y is assumed to be a linear function of each X variable:

    Y = a + B1(X1) + B2(X2) + B3(X3) + ...

   a. We like linear models because they give us an intuitive way to talk about the effect of each variable (intervening, control) and about the difference between the zero order and the partial.

B. Violations of the linearity assumption: curvilinearity
   a. Look for it in the zero order relationship.
   b. If the relationship is curvilinear, the linear slope doesn't predict Y as well as some other alternatives.
   c. In most cases the linear model is a pretty accurate predictor, so this is not usually the end of the world.
   d. We're about to learn a way to deal with a curvilinear relationship.

C. Violations of the linearity assumption: interactions
   a. Look for them by examining the conditional slopes in the three-variable graph.
   b. If there is an interaction, the effect of an X variable on Y is not linear, because the magnitude of the slope DEPENDS on a third variable. In most cases interactions are not significant.
   c. But when they are, it IS the end of the world. You have to do the analysis separately for the groups involved in the interaction, or incorporate an interaction term in the linear regression model.
   d. We'll learn how to deal with them in a couple of weeks.

II. SUPPRESSOR EFFECT
   a. Not a violation of linearity, but an unusual outcome of causal analysis.

    Three-variable path diagram:
      direct path:    X1 --B1--> Y
      indirect path:  X1 --B2--> X2 --B3--> Y

   b. It happens when the SIGN of the indirect path (B2 * B3) is opposite to the SIGN of the direct path (B1).
   c. If this happens, the partial (B1) comes out larger in magnitude than the zero order, and you get an estimate of a suppression effect rather than an explanation.

III. WHY IS CAUSAL ANALYSIS IMPORTANT?

Intervening variables often show points of policy input. Let's say you knew higher income people were moving out of a neighborhood.
Suppose also that they often explain their reasons for moving in terms of neighborhood pessimism, and that you want to reduce neighborhood turnover:

    Income --> Neighborhood pessimism --> Move out
    ??? --> Neighborhood pessimism

You can't do much about income, but you might be able to find things that cause pessimism (the ???) that you can affect.

IV. HOW TO FIND GOOD INTERVENING VARIABLES

X1 --> Y (pretend data, not PQ):

                          0 Low income    1 High income
    0 Low pessimism       460             580
    1 High pessimism      540 (54%)       420 (42%)
    N                     1,000           1,000
    B = -12%

How do you find variables that, when you put them inside the causal chain X1 --> Y, make the partial smaller than the zero order?

A. Reflect on your own experience, or talk to people.
B. The literature: an article or a report.
C. Data mining: there will be certain statistical relationships between X1, X2 and Y.

V. DATA MINING

For an intervening variable to explain part or all of the X1 --> Y relationship, two conditions have to be met.

CONDITION 1: The explanatory variable X2 has a significant impact on Y.

X2 --> Y (pretend data, not PQ):

                          0 Low fear      1 High fear
    0 Low pessimism       560             480
    1 High pessimism      240 (30%)       720 (60%)
    N                     800             1,200
    B = 30%; the X2 --> Y relationship is significant.

Fear is a cause of pessimism. This is the intervening CAUSAL process. It comes from psychological theory, the literature, or observational studies, and it reflects a social process, which is the reason you like it.

CONDITION 2: Groups that differ on the independent variable X1 differ on the explanatory variable X2.

X1 --> X2 (pretend data, not PQ):

                          0 Low income    1 High income
    0 Low fear            200             600
    1 High fear           800 (80%)       400 (40%)
    N                     1,000           1,000
    B = -40%

Income groups differ on fear. For fear to be a reason that income causes pessimism, higher income people have to be less fearful than lower income people. In order for X2 to explain X1 --> Y, X1 has to be a cause of X2.

VI. OUTCOME OF SUCCESSFUL DATA MINING
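This outcome follows from the two conditions in section V. As an illustration only (not the course's SPSS procedure), the Python sketch below uses the pretend counts from sections IV and V and the three-way table in A. It computes the zero order, both conditions, the conditional slopes within each fear group, and the partial. `pct_diff` and `partial` are hypothetical helpers. B is taken as the difference in the percentage "high" between group 1 and group 0. The partial is a weighted average of the conditional slopes. The weights are an assumption of this sketch: N * p * (1 - p) per fear group, where p is the share of high-income respondents in that group. These are the weights implied by least squares with a single dummy control. With these pretend data both conditional slopes are 0, so any weighting gives a partial of 0.

```python
# Sketch only: helper names are hypothetical; the counts are the pretend data in the notes.


def pct_diff(high0, n0, high1, n1):
    """B for a 2x2 crosstab: share 'high' in group 1 minus share 'high' in group 0."""
    return high1 / n1 - high0 / n0


def partial(strata):
    """Weighted average of the conditional slopes across categories of the control.

    strata: one (high0, n0, high1, n1) tuple per category of the control variable.
    Weight = N * p * (1 - p), with p the share of the stratum in group 1
    (an assumed weighting; it matches least squares with one dummy control).
    """
    num = den = 0.0
    for high0, n0, high1, n1 in strata:
        n = n0 + n1
        p = n1 / n
        weight = n * p * (1 - p)
        num += weight * pct_diff(high0, n0, high1, n1)
        den += weight
    return num / den


# Zero order and the two conditions (group 0 listed first, group 1 second).
zero_order = pct_diff(540, 1000, 420, 1000)   # income --> % high pessimism
condition1 = pct_diff(240, 800, 720, 1200)    # fear --> % high pessimism
condition2 = pct_diff(800, 1000, 400, 1000)   # income --> % high fear

# Three-way table: (high pessimism, N) for low income, then high income, in each fear group.
low_fear = (60, 200, 180, 600)
high_fear = (480, 800, 240, 400)
conditional = [pct_diff(*low_fear), pct_diff(*high_fear)]
partial_b = partial([low_fear, high_fear])

print(f"zero order X1 --> Y:    {zero_order:+.0%}")   # -12%
print(f"condition 1, X2 --> Y:  {condition1:+.0%}")   # +30%
print(f"condition 2, X1 --> X2: {condition2:+.0%}")   # -40%
print("conditional slopes:", ", ".join(f"{s:+.0%}" for s in conditional))
print(f"partial X1 --> Y:       {partial_b:+.0%}")    # +0%
```

Both conditions give non-zero differences (+30% and -40%). Within each fear group the income difference is 0, which is the pattern that A describes.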
A. Mechanically, when you control for X2, the partial is lower than the zero order.

X1 --> Y controlling for X2 (pretend data, not PQ):

                          X2 = 0 Low fear               X2 = 1 High fear
                          0 Low income  1 High income   0 Low income  1 High income
    0 Low pessimism       140           420             320           160
    1 High pessimism      60 (30%)      180 (30%)       480 (60%)     240 (60%)
    N                     200           600             800           400
    Conditional slope     0%                            0%
    Partial = 0%

In this case the partial = 0.

B. Intuitively, in the X1 --> Y relationship you think you're looking at groups that differ on X1:

                                        0 Low pessimism   1 High pessimism   N
    0 Low income (and higher fear)      460               540 (54%)          1,000
    1 High income (and lower fear)      580               420 (42%)          1,000
    B = -12%

But actually we're looking at groups that differ on X1 and also on X2. So you need to control for X2 to see the impact of X1 alone (the partial).

VII. SO HOW DO YOU DATA MINE FOR (OTHER) INTERVENING VARIABLES?

A. Get a list of candidate intervening variables from the same survey years:
   1. Read a book in the past month: readers are less pessimistic.
   2. Frequency of using the local park in the past month: park users are less pessimistic.
   3. Employment status: the unemployed are more pessimistic.

    emp94  Employment Status Of Respondent
    1      Working Full Time            56.9%
    2      Working Part Time            11.5%
    3      Temporary Layoff              0.3%
    4      Temporary Illness, D          0.9%
    5      On Vacation                   0.6%
    6      On Strike                     0.0%
    7      Unemployed, Between           1.6%
    8      Looking For Work              0.8%
    9      Permanent Layoff              0.2%
    10     Retired                      12.8%
    11     Long Term Ill, Unabl          0.4%
    12     Long Term Disabled,           1.1%
    13     Taking Care Of Home          10.0%
    14     Going To School               2.3%
    15     Other                         0.4%
    98     Don't Know                    0.1%
           Total                       100.0%

    Coding EMPSTAT:
    IN THE LABOR FORCE            72.9%
       Currently employed         96.5%
       Currently unemployed        3.5%
    NOT IN THE LABOR FORCE        27.1%

B. Recode the candidate variables, then look at the crosstabs to see whether the two conditions are met:
   1. First check X2 --> Y, to see whether the explanatory variable actually causes pessimism.

    Size of the X2 --> Y relationship among candidate intervening variables:

    Candidate variable        Category                       Pessimism %   B
    Reading habits            0 No reading                   37%
                              1 Read book past month         34%           -2%    doesn't make the cut
    Neighborhood use          0 No park use                  40%
                              1 Used local park past month   30%           -10%   makes the cut, weakly
    Employment, labor         0 In LF, working               31%
      force status            1 In LF, unemployed            45%           15%    makes the cut
                              2 Not in LF, retired           36%
                              3 Not in LF, other             43%

   2. Then check X1 --> X2, to see whether income groups actually differ on it.

    Size of the X1 --> X2 relationship among candidate intervening variables:

    Neighborhood use (% used local park past month):
    0 Below median income     50%
    1 Above median income     60%
    B                         10%   weak

    Employment, labor force status (X1 = income):
                       0 In LF,   1 In LF,     2 Not in LF,   3 Not in LF,
                       working    unemployed   retired        other
    0 Below median     60%        4%           18%            18%
    1 Above median     84%        1%           5%             10%
    B                  23%        -2%
                       sweet      fails

Park use might be OK; unemployment fails; working vs. other seems important.

    Labor force status --> pessimism, controlling for income:
                          X1 = 0 Below median          X1 = 1 Above median
                          0 Working   1 Not working    0 Working   1 Not working
    Pessimism %           39%         47%              25%         24%
    Conditional slope     7.6%                         -1.0%
    Partial = 4.2%

BOTTOM LINE: Go with LF status as a working vs. other dichotomy (0 = working, 1 = not working, as in the table above).

Since Y is a (0,1) dichotomy, the regression reproduces the xtab results:

    Pessimism = .40 - .166(Income) + .042(Labor force status)

The slope for LF status is significant.

    Impact of household income on neighborhood pessimism:
                                                    B      Share
    Zero order                                    -.18     100%
    Partial (direct effect)                       -.17      94%
    Intervening effect of non-LF participation    -.01       6%

But the impact of the control variable isn't very great. Worse yet, there might be an interaction effect: the conditional slopes above (7.6% and -1.0%) differ in sign.

VIII. DEALING WITH CURVILINEARITY
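As a preview of the technique this section develops, here is a minimal Python sketch of (k-1) dummy coding. It is an illustration only, not the SPSS workflow used in the course. The helper names are hypothetical. Education is assumed to be coded 0 to 3, as in the RECODE syntax in E, and the numbers come from the tables in B, C, E and H. The sketch does four things:

- builds the dummies for one respondent;
- reproduces the dummy frequency table in E;
- computes each contrast against the reference category, which is what a regression on the dummies alone estimates when it is run on the same cases;
- evaluates the prediction equation in H.

```python
# Illustrative sketch; helper names are hypothetical.
# Education codes follow the RECODE syntax in section E:
# 0 = 0-11 yrs (reference), 1 = HSG/Trade, 2 = Some college, 3 = College Grad.
CATEGORIES = [0, 1, 2, 3]
REFERENCE = 0


def make_dummies(code):
    """Return the k-1 dummy values (0/1) for one respondent's education code."""
    if code not in CATEGORIES:
        raise ValueError(f"unknown education code: {code}")
    return {c: int(code == c) for c in CATEGORIES if c != REFERENCE}


def dummy_frequencies(counts):
    """For each dummy, return (N coded 0, N coded 1); each dummy covers the whole sample."""
    total = sum(counts.values())
    return {c: (total - n, n) for c, n in counts.items() if c != REFERENCE}


def contrasts(group_means):
    """Dummy-only regression slopes: each group's mean minus the reference group's mean."""
    ref = group_means[REFERENCE]
    return {c: round(m - ref, 3) for c, m in group_means.items() if c != REFERENCE}


def predicted_pessimism(income, code):
    """Prediction equation from section H (income: 0 = below median, 1 = above)."""
    slopes = {1: -0.077, 2: -0.093, 3: -0.200}
    d = make_dummies(code)
    y = 0.513 - 0.130 * income + sum(slopes[c] * d[c] for c in d)
    return round(y, 3)


counts = {0: 4112, 1: 8974, 2: 10023, 3: 13374}     # frequencies from section E
pessimism = {0: 0.51, 1: 0.39, 2: 0.36, 3: 0.23}    # percentages from sections B and C

print(make_dummies(2))              # {1: 0, 2: 1, 3: 0}
print(dummy_frequencies(counts))    # {1: (27509, 8974), 2: (26460, 10023), 3: (23109, 13374)}
print(contrasts(pessimism))         # {1: -0.12, 2: -0.15, 3: -0.28}
for income in (0, 1):
    print(income, [predicted_pessimism(income, code) for code in CATEGORIES])
```

The contrasts computed from the rounded percentages in C (-.12, -.15, -.28) are close to, but not identical to, the zero-order coefficients reported in F (-.106, -.138, -.269).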
A. Start by looking at how to deal with ordinal (3+) variables.

    resped  Highest Level Of Education Completed          Recoded
    1       4th Grade Or Less                0.8%         0-11 yrs
    2       5th-8th Grade                    3.2%         0-11 yrs
    3       9-12th Grade, No Diploma         7.2%         0-11 yrs
    4       High School Graduate            17.8%         HSG, Trade
    5       Trade Or Vocational              6.7%         HSG, Trade
    10      Hs Grad, Non Spec.               0.1%         HSG, Trade
    6       Some College                    27.4%         Some college
    7       College Graduate                20.0%         College Grad
    8       Some Graduate Study              3.9%         College Grad
    9       Graduate Degree                 12.7%         College Grad
    98      Don't Know                       0.1%         missing
            Total                          100.0%

Education is a very important variable for a lot of public policy analysis. It's not really usable as an interval variable: not across the full range, and not in the US context. But you don't want to lose the gradient, so it's usually best to treat it as an ordinal variable.

B. Recode the variable into (k) ordinal categories, as shown above. Do a crosstab or a table of means, depending on whether Y is dichotomous (0,1) or interval (3+), and look at the pattern in the data.

    Figure: neighborhood pessimism by education: 0-11 yrs 51%, HSG/Trade 39%, Some college 36%, College Grad 23%.

The line goes down: higher education, lower pessimism. There is not much difference between some college and HSG/Trade.

C. Don't think of the pattern in the data as a line.

[WARNING: DATA ANALYSIS METHOD AHEAD]

Think of the pattern in the data as (k-1) separate CONTRASTS:

    CONTRASTS       Neighborhood   HSG/Trade   Some College   College Grad
                    pessimism      vs. 0-11    vs. 0-11       vs. 0-11
    0-11 yrs        51%            51%         51%            51%
    HSG, Trade      39%            39%
    Some college    36%                        36%
    College Grad    23%                                       23%
    Difference                     -12%        -15%           -28%

D. Think of each of the (k-1) contrasts as something that is measured with a (0,1) dichotomous variable. (0,1) dichotomies created this way are known as DUMMY VARIABLES.

    DUMMY VARIABLE CODING
    Education       HSG/Trade vs. 0-11   Some College vs. 0-11   College Grad vs. 0-11
    0-11 yrs        0                    0                       0
    HSG, Trade      1                    0                       0
    Some college    0                    1                       0
    College Grad    0                    0                       1

With (k) categories of education, we need (k-1) dummy variables to estimate the available contrasts. The "left out" category is called the reference category (in this case, 0-11 yrs of education). A dummy variable measures the difference between the contrast category and the reference category.

E. Creating (k-1) dummy variables to analyze the impact of an ordinal variable (SPSS syntax; education is coded 0 = 0-11 yrs, 1 = HSG/Trade, 2 = Some college, 3 = College Grad):

    RECODE education (0=0) (1=1) (2=0) (3=0) (ELSE=9) INTO educHSG.
    VARIABLE LABELS educHSG 'dummy var HSG vs 0-11'.
    RECODE education (0=0) (1=0) (2=1) (3=0) (ELSE=9) INTO educANYCOLL.
    VARIABLE LABELS educANYCOLL 'dummy any coll vs 0-11'.
    RECODE education (0=0) (1=0) (2=0) (3=1) (ELSE=9) INTO educCOLLGRAD.
    VARIABLE LABELS educCOLLGRAD 'dummy coll grad vs 0-11'.
    MISSING VALUES educHSG educANYCOLL educCOLLGRAD (9).
    EXECUTE.

The result is (k-1) variables, each of which codes the ENTIRE SAMPLE:

    Original data
    Education       Frequency
    0-11 yrs        4,112
    HSG, Trade      8,974
    Some college    10,023
    College Grad    13,374
    Total           36,483

    Dummy variables
    Coding                    0         1         Total
    HSG/Trade vs. 0-11        27,509    8,974     36,483
    Some College vs. 0-11     26,460    10,023    36,483
    College Grad vs. 0-11     23,109    13,374    36,483

Regression works the same as before, except that instead of one education variable there are now 3 dummy variables measuring the effects of education. Whenever you estimate the effect of education, put all (k-1) dummy variables in the regression equation together.

F. For the ZERO ORDER, there are now (k-1) slopes and t-tests:

    Unstandardized coefficients                  B       Std. Error   t         Sig.
    (Constant)                                  .494     .018         27.971    .000
    educHSG       dummy var HSG vs 0-11        -.106     .021         -4.950    .000
    educANYCOLL   dummy any coll vs 0-11       -.138     .021         -6.600    .000
    educCOLLGRAD  dummy coll grad vs 0-11      -.269     .020        -13.338    .000

All 3 are significant. D1 and D2 are pretty similar to each other.

G. To test education as a control variable, enter ALL (k-1) dummy variables together in the multiple regression equation along with income:
    Unstandardized coefficients      B       Std. Error   t        Sig.
    (Constant)                      .513     .018         27.917   .000
    Income (0,1)                   -.130     .013         -9.931   .000
    HSG/Trade vs. 0-11             -.077     .022         -3.440   .001
    Some College vs. 0-11          -.093     .022         -4.204   .000
    College Grad vs. 0-11          -.200     .022         -9.080   .000

    Impact of household income on neighborhood pessimism:
                                         B      Share
    Zero order                         -.18     100%
    Partial (direct effect)            -.13      74%
    Intervening effect of education    -.05      26%

The income effect is reduced substantially: education makes a pretty big difference as an explanatory variable.

H. The prediction equation works the same way too:

    Predicted avg(Y) = .513 - .130(Income) - .077(educHSG) - .093(educANYCOLL) - .200(educCOLLGRAD)

    Figure: predicted neighborhood pessimism by education (0-11 yrs, HSG/Trade, Some college, College Grad), with separate lines for 0 = below median income and 1 = above median income.

IX. DUMMY VARIABLES ARE THE MAIN TECHNIQUE FOR DEALING WITH CURVILINEARITY

A. Example: the client, WBEZ, wants to target its fundraising. It commissions research to explore the extent to which education affects listening to public radio, and the reasons why this might be the case.

B. ZERO ORDER RESULTS

    Education      Don't listen   Listen to radio,       Listen to radio,     Listen to   Total
                   to radio       not familiar w/ WBEZ   familiar with WBEZ   WBEZ
    0-11 yrs       18%            57%                    8%                   16%         100%
    HSG, Trade     11%            67%                    10%                  13%         100%
    Some college   4%             58%                    15%                  23%         100%
    College Grad   5%             46%                    16%                  34%         100%

    Figure: % who listen to WBEZ by education: 0-11 yrs 16%, HSG/Trade 13%, Some college 23%, College Grad 34%.

The curvilinear relationship is significant: Chi sq(3) = 135, p < .04, phi = .204.

Examine the contrasts:

    HSG/Trade vs. 0-11       minimal difference
    Some College vs. 0-11    small difference
    College Grad vs. 0-11    large difference

The listenership variable is nominal (4 categories), so to proceed with causal analysis, I'm going to recode it into a dichotomy (listen to WBEZ vs. all other categories):

    Unstandardized coefficients    B       Std. Error   t        Sig.
    (Constant)                    .159     .022         7.331    .000
    HSG/Trade vs. 0-11           -.037     .026        -1.395    .163
    Some College vs. 0-11         .069     .026         2.667    .008
    College Grad vs. 0-11         .179     .025         7.181    .000

Conclusion: one of the DUMMIES (HSG/Trade vs. 0-11) is not significant.

C. INTERVENING VARIABLE

Theory: Education (X1) --> Politically independent (X2) --> Listen to public radio (Y)

    Party Affiliation
    1  Republican       23%
    2  Democrat         38%
    3  Independent      25%
    4  Other             3%
    5  No Preference    11%
    8  Do Not Know       1%
       Total           100%

Recode X2 to Independent vs. other.

REGRESSION ANALYSIS

    Unstandardized coefficients    B       Std. Error   t        Sig.
    (Constant)                    .155     .022         7.064    .000
    HSG/Trade vs. 0-11           -.038     .026        -1.442    .149
    Some College vs. 0-11         .067     .026         2.584    .010
    College Grad vs. 0-11         .176     .025         7.026    .000
    Independent vs. other         .023     .017         1.370    .171

The education effect is still curvilinear. Independence isn't significant.

X. HOW TO SUMMARIZE THE ZERO ORDER AND PARTIAL EFFECTS OF AN ORDINAL/NOMINAL VARIABLE MEASURED WITH DUMMY VARIABLES

    Impact of education on public radio listening
                             Zero order   Partial   Explained by political independence
    HSG/Trade vs. 0-11       -.037        -.038     -4%
    Some College vs. 0-11    .069         .067      3%
    College Grad vs. 0-11    .179         .176      2%

Independence doesn't explain much of the relationship between education and listenership.
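The summaries in this section, and the income decompositions in sections VII and VIII.G, all use the same arithmetic:

    intervening effect = zero order - partial
    share explained    = intervening effect / zero order

A minimal Python sketch follows. `decompose` is a hypothetical helper, and the inputs are the rounded coefficients printed in the tables. The sketch also flags a suppression effect in the sense of section II, that is, when the intervening (indirect) part and the partial (direct) part have opposite signs. Because the inputs are rounded, some shares differ by a point or two from the tables: 72% rather than 74% for the education control, and about -3% rather than -4% for HSG/Trade. The tables were presumably computed from unrounded estimates.

```python
# Sketch only: decompose() is a hypothetical helper; the inputs are the rounded
# coefficients printed in the tables of sections VII, VIII.G and X.


def decompose(zero_order, partial):
    """Split a zero-order slope into its direct (partial) and intervening parts.

    Returns (intervening, share_direct, share_explained, suppression), where
    intervening = zero order - partial and both shares are relative to the zero order.
    suppression is True when the intervening (indirect) part and the partial
    (direct) part have opposite signs, as in section II.
    """
    if zero_order == 0:
        raise ValueError("zero-order slope is 0; shares are undefined")
    intervening = zero_order - partial
    suppression = intervening * partial < 0
    return intervening, partial / zero_order, intervening / zero_order, suppression


rows = [
    ("Income, controlling LF status", -0.18, -0.17),
    ("Income, controlling education", -0.18, -0.13),
    ("HSG/Trade vs. 0-11, controlling independence", -0.037, -0.038),
    ("Some College vs. 0-11, controlling independence", 0.069, 0.067),
    ("College Grad vs. 0-11, controlling independence", 0.179, 0.176),
]

for label, zero, part in rows:
    intervening, direct, explained, suppression = decompose(zero, part)
    flag = "  suppression" if suppression else ""
    print(f"{label:<48} intervening {intervening:+.3f}  "
          f"direct {direct:7.1%}  explained {explained:6.1%}{flag}")
```

With these inputs only the HSG/Trade row is flagged. Its partial (-.038) is slightly larger in magnitude than its zero order (-.037), a tiny suppression effect. That is why its "explained" share in the table above is negative.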