Supplementary Information - Journal of the American Medical

advertisement

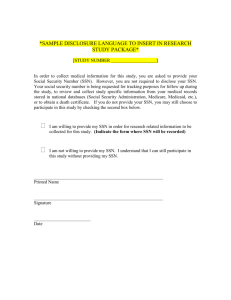

Supplementary Information

S1. Hash functions and usage in medical informatics

A hash function is an algorithm that takes a block of data and returns a fixed-length string. For a well-designed cryptographic hash function it is computationally infeasible to find two different inputs with the same output, so in practice each distinct input yields a distinct output. Hash functions are well suited to securely de-identifying data: identical inputs always produce identical outputs, but even slight variations in the input (e.g., a one-character difference) produce entirely different output strings. Cryptographic hash functions are also one-way; that is, the output cannot feasibly be reverse-engineered to recover the input. Hash functions are the core method for creating a de-identified synthetic derivative of clinical records for linking to a biobank (BioVU) [1]. In an early pilot, we validated that the use of hashes of patient identifiers is acceptable to IRBs and viable for linking patients across participating sites [2]. In the current project, we developed and distributed a software application that standardizes how participating institutions create hashes for any given set of patients.

S2. DCIFIRHD algorithm performance in the face of data corruption

Because patients often provide their own demographic details, “corrupt” data may exist when records are merged across sites and over time. Such corruption could arise from a patient omitting fields on an intake form, from typing or transcription errors when written responses are entered, or from any number of other causes. Although the percentage of patient data that is incomplete or grossly inaccurate is unknown (the expectation is that it is rather low), we sought to characterize the performance of the optimized DCIFIRHD algorithm in this context.

CORRUPTION ALGORITHM

We developed an algorithm that randomly corrupts patient information in order to test the performance of the DCIFIRHD algorithm.
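The corruption procedure specified below (three error types, the parameters n and p, and a final shuffle) can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names are hypothetical; key adjacency is approximated by an unstaggered QWERTY letter grid (which reproduces the “e” example of w, s, d, f, r); digits are assumed to shift to the neighboring digit on the number row; “change the field” is assumed to replace every letter and digit; n is interpreted as the maximum total number of rows per patient; and a ground-truth key such as `true_id` is assumed to be kept outside the corruptible `fields`.

```python
import random
import string

# ASSUMPTION: keys are adjacent if they touch on an unstaggered QWERTY letter grid
# (8-neighborhood). This reproduces the "e" -> w, s, d, f, r example but only
# approximates a real keyboard.
LETTER_ROWS = ["qwertyuiop", "asdfghjkl", "zxcvbnm"]
# ASSUMPTION: a digit shifts to the digit immediately left or right of it.
DIGIT_ROW = "1234567890"


def build_neighbors():
    """Map each lower-case letter and each digit to the keys treated as adjacent."""
    neighbors = {}
    for r, row in enumerate(LETTER_ROWS):
        for c, ch in enumerate(row):
            near = []
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    if (dr, dc) == (0, 0):
                        continue  # a key is not its own neighbor
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < len(LETTER_ROWS) and 0 <= cc < len(LETTER_ROWS[rr]):
                        near.append(LETTER_ROWS[rr][cc])
            neighbors[ch] = near
    for c, ch in enumerate(DIGIT_ROW):
        neighbors[ch] = [DIGIT_ROW[i] for i in (c - 1, c + 1) if 0 <= i < len(DIGIT_ROW)]
    return neighbors


NEIGHBORS = build_neighbors()


def shift_element(value, rng):
    """Keystroke error: replace one randomly chosen character with an adjacent key."""
    positions = [i for i, ch in enumerate(value) if ch.lower() in NEIGHBORS]
    if not positions:
        return value  # nothing shiftable (e.g., only punctuation)
    i = rng.choice(positions)
    new = rng.choice(NEIGHBORS[value[i].lower()])
    if value[i].isupper():
        new = new.upper()  # keep the original capitalization
    return value[:i] + new + value[i + 1:]


def change_field(value, rng):
    """Replace every letter with a random letter and every digit with a random digit."""
    out = []
    for ch in value:
        if ch.isdigit():
            out.append(rng.choice(string.digits))
        elif ch.isalpha():
            letter = rng.choice(string.ascii_lowercase)
            out.append(letter.upper() if ch.isupper() else letter)
        else:
            out.append(ch)  # spaces and punctuation are left unchanged
    return "".join(out)


def delete_field(value, rng):
    """Replace the value with the literal "NULL" (rng unused; kept for a uniform signature)."""
    return "NULL"


OPERATIONS = [shift_element, change_field, delete_field]
WEIGHTS = [0.70, 0.15, 0.15]  # shift / change / delete, as in the test described here


def corrupt_dataset(records, fields, n, p, rng):
    """Duplicate each record 1..n times, corrupt each row with probability p, then shuffle."""
    rows = []
    for record in records:
        for _ in range(rng.randint(1, n)):  # row count drawn uniformly from [1, n]
            row = dict(record)
            if rng.random() < p:
                field = rng.choice(fields)  # one field per corrupted row
                op = rng.choices(OPERATIONS, weights=WEIGHTS)[0]
                row[field] = op(str(row[field]), rng)
            rows.append(row)
    rng.shuffle(rows)  # remove any matching signal carried by row order
    return rows
```

For example, `corrupt_dataset(records, ["first", "last", "ssn"], n=5, p=0.3, rng=random.Random(1))` would produce one corrupted dataset at p=30%; repeating the call with p in 10% steps mirrors the sweep summarized in Figure S2.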
The corruption algorithm applies one of three operations to a field of the patient information: shift an element, randomly change the field, or delete the field. Shifting an element simulates a keystroke error during data entry: if a patient’s name was “Kevin” and the letter “e” was randomly chosen to shift, it would be changed to “w”, “s”, “d”, “f”, or “r” with equal probability. Randomly changing a field substitutes characters of the same type (i.e., letters are replaced with letters and digits with digits). Deleting a field replaces its value with “NULL”.

The corruption algorithm has two parameters: n, the maximum number of duplicated rows, and p, the probability of corrupting a row of patient data. Each patient’s data is duplicated a random number of times, with that number drawn uniformly from [1, n]. Each row is then selected for corruption with probability p. For each selected row, a field and an error type are chosen at random; in the current test the error types were chosen with probabilities of 70% (shift an element), 15% (change the field), and 15% (delete the field). After corruption, all rows are randomly shuffled so that no matching information can be inferred from row proximity.

TEST CORRUPTION DATASET

The North Carolina voter registration dataset is a publicly available resource composed of records for current registered voters in the state [3]. The records are maintained by the North Carolina State Board of Elections and are updated weekly; at the time of download, the dataset contained records for 4.9 million individuals. As in previous work with this dataset, we used a random subset of the data for testing [4]. We randomly selected 50,000 individuals from the entire dataset and generated a random, unique SSN for each individual.
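The subset selection and SSN assignment described above might be implemented along the following lines. This is a minimal sketch under assumptions: the function name `sample_with_unique_ssns` and the record layout are hypothetical, and SSNs are drawn uniformly from all nine-digit numbers without following Social Security Administration issuance rules.

```python
import random


def sample_with_unique_ssns(records, k, rng):
    """Draw k records without replacement and attach a distinct random 9-digit SSN to each."""
    subset = rng.sample(records, k)
    # Sampling from range(10**9) without replacement guarantees pairwise-distinct SSNs.
    # ASSUMPTION: any 9-digit string is acceptable; real issuance rules are ignored.
    ssn_numbers = rng.sample(range(10**9), k)
    out = []
    for record, number in zip(subset, ssn_numbers):
        row = dict(record)  # copy so the source records are not modified
        row["ssn"] = f"{number:09d}"  # zero-pad to nine digits
        out.append(row)
    return out
```

A call such as `sample_with_unique_ssns(voters, 50_000, random.Random(seed))`, where `voters` is a hypothetical list of parsed voter records, corresponds to the 50,000-person test set; because no SSN is reused, no two selected individuals can share one.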
This generation of SSNs, together with our assumption that individuals who do not share the same SSN are distinct, ensures that there are no duplicated individual records.

DCIFIRHD PERFORMANCE ON CORRUPTED DATA

To test the performance of the DCIFIRHD algorithm on imperfect data, we generated corrupted versions of the 50,000 voter records. The corrupted datasets were generated with n=5 and with p varied in 10% increments (Figure S2). For comparison, we also implemented two naïve matching algorithms: one that matches on SSN alone and one that matches on full name. More complex deterministic algorithms based on combinations of demographic attributes (such as SSN + full name) would be expected to perform similarly to, but worse than, either attribute alone, because requiring agreement on every attribute makes the matching criterion stricter.

The DCIFIRHD algorithm outperforms the name-based matching algorithm on every metric at all levels of corruption. It also outperforms the SSN matching algorithm in accuracy, balanced accuracy (the arithmetic mean of sensitivity and specificity), and sensitivity until p=50%. Specificity was equivalent at all levels of corruption: 0.99922±0.00011 for DCIFIRHD and 0.99917±0.00012 for SSN matching. However, corruption of more than fifty percent of patient data is highly unlikely to represent a real-world situation. More realistic scenarios would instead likely involve missing SSNs or incorrect SSNs (one SSN corresponding to two distinct individuals), neither of which is present in our input dataset. To examine the effect of missing SSNs, we removed the SSN field from 0.001% of the initial 50,000 records and re-ran the analysis. The accuracy of the SSN matching algorithm decreased in proportion to the percentage of records with missing SSNs, while the performance of DCIFIRHD was unaffected.

Figure S2.
Comparison of matching algorithm performance on simulated datasets with increasing amounts of patient information corruption. The corrupted datasets were created from an initial set of 50,000 individuals with n=5 at each corruption percentage. The DCIFIRHD algorithm outperforms a naïve algorithm that matches on the individual’s name at every level of corruption, and one that matches on SSN alone until the corruption percentage reaches 50 percent.

References

1. Pulley, J., et al., Principles of Human Subjects Protections Applied in an Opt-Out, De-identified Biobank. Clinical and Translational Science, 2010. 3(1): p. 42-48.
2. Galanter, W.L., et al., Migration of Patients Between Five Urban Teaching Hospitals in Chicago. Journal of Medical Systems, 2013. 37(2): p. 1-8.
3. Violan, C., et al., Comparison of the information provided by electronic health records data and a population health survey to estimate prevalence of selected health conditions and multimorbidity. BMC Public Health, 2013. 13: p. 251.
4. Kuzu, M., et al., A practical approach to achieve private medical record linkage in light of public resources. Journal of the American Medical Informatics Association, 2013. 20(2): p. 285-292.