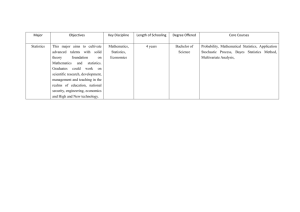

Random number generators

9. Monte Carlo Methods

Monte Carlo (MC) methods use repeated random sampling to solve computational problems when there is no affordable deterministic algorithm. They are most often used in physics, chemistry, finance and risk analysis, and engineering.

MC is typically used in high dimensional problems where a lower dimensional approximation is inaccurate. Example: an $n$ year mortgage paid once a month. The risk analysis is in $12n$ dimensions, so a 30 year mortgage gives a 360 dimensional problem. Integration with quadrature rules above 8 dimensions is impractical.

The main drawback is the addition of statistical errors to the systematic errors. The two error types have to be balanced intelligently, which is neither easy nor obvious.

Short history: MC methods have been used since 1777, when the Comte de Buffon and Laplace each solved problems with them. In the 1930s Enrico Fermi used MC to estimate what lab experiments would show for neutron transport in fissile materials. Metropolis and Ulam first called the method MC in the 1940s. In the 1950s MC was expanded to use any probability distribution, not just Gaussian. In the 1960s and 1970s, quantum MC and variational MC methods were developed.

MC Simulations: The problem being solved is stochastic and the MC method mimics the stochastic properties well. Example: neutron transport and decay in a nuclear reactor.

MC Calculations: The problem is not stochastic, but is solved using a stochastic MC method. Example: high dimensional integration.

Quick review of probability

An event $B$ is a set of possible outcomes that has probability $\Pr(B)$. The set of all possible outcomes is denoted by $\Omega$ and particular outcomes are $\omega$. Hence, $B\subseteq\Omega$. Suppose $B,C\subseteq\Omega$. Then $B\cap C$ represents outcomes in both $B$ and $C$. Similarly, $B\cup C$ represents outcomes that are in $B$ or $C$.

Some axioms of probability are

1. $\Pr(B)\in[0,1]$
2. $\Pr(A\cup B)=\Pr(A)+\Pr(B)$ if $A\cap B=\emptyset$
3. $B\subseteq C\Rightarrow\Pr(B)\le\Pr(C)$
4. $\Pr(\Omega)=1$

The conditional probability that a $C$ outcome is also a $B$ outcome is given by Bayes' formula,

$$\Pr(B\mid C)=\frac{\Pr(B\cap C)}{\Pr(C)}.$$

Frequently, we already know both $\Pr(B\mid C)$ and $\Pr(C)$ and use Bayes' formula to calculate $\Pr(B\cap C)$.

Events $B$ and $C$ are independent if $\Pr(B\cap C)=\Pr(B)\Pr(C)$, which implies $\Pr(B)=\Pr(B\mid C)$.

If $\Omega$ is either finite or countable, we call $\Omega$ discrete. In this case we can specify all probabilities of possible outcomes as $f_k=\Pr(\omega=\omega_k)$, and an event $B$ has probability

$$\Pr(B)=\sum_{\omega_k\in B}\Pr(\omega_k)=\sum_{\omega_k\in B}f_k.$$

A discrete random variable is a number $X(\omega)$ that depends on the random outcome $\omega$. As an example, in coin tossing, $X(\omega)$ could represent how many heads or tails came up. For $x_k=X(\omega_k)$, define the expected value by

$$E[X]=\sum_{\omega\in\Omega}X(\omega)\Pr(\omega)=\sum_{\omega_k\in\Omega}x_k f_k.$$

The probability distribution of a continuous random variable is described using a probability density function (PDF) $f(x)$. If $X\in\mathbb{R}^n$ and $B\subseteq\mathbb{R}^n$, then

$$\Pr(B)=\int_{x\in B}f(x)\,dx\quad\text{and}\quad E[X]=\int_{\mathbb{R}^n}x f(x)\,dx.$$

The variance in 1D is given by

$$\sigma^2=\operatorname{var}(X)=E[(X-E[X])^2]=\int(x-E[X])^2 f(x)\,dx.$$

The notation is identical for discrete and continuous random variables. For 2 or higher dimensions, there is a symmetric $n\times n$ variance/covariance matrix given by

$$C=E[(X-E[X])(X-E[X])^T],$$

where the matrix elements $C_{jk}$ are given by

$$C_{jk}=E[(X_j-E[X_j])(X_k-E[X_k])]=\operatorname{cov}[X_j,X_k].$$

The covariance matrix is positive semidefinite.

Common random variables

The standard uniform random variable $U$ has a probability density of

$$f(u)=\begin{cases}1 & \text{if } 0\le u\le 1\\ 0 & \text{otherwise.}\end{cases}$$

We can create a uniform random variable in $[a,b]$ by $Y=(b-a)U+a$. The PDF for $Y$ is

$$g(y)=\begin{cases}\dfrac{1}{b-a} & \text{if } a\le y\le b\\ 0 & \text{otherwise.}\end{cases}$$

The exponential random variable $T$ with rate constant $\lambda>0$ has a PDF

$$f(t)=\begin{cases}\lambda e^{-\lambda t} & \text{if } 0\le t\\ 0 & \text{otherwise.}\end{cases}$$

The standard normal is denoted by $Z$ and has a PDF

$$f(z)=\frac{1}{\sqrt{2\pi}}e^{-z^2/2}.$$

The general normal with mean $\mu$ and variance $\sigma^2$ is given by $X=\sigma Z+\mu$ and has PDF

$$f(x)=\frac{1}{\sqrt{2\pi\sigma^2}}e^{-(x-\mu)^2/2\sigma^2}.$$

We write $X\sim N(\mu,\sigma^2)$ in this case.
The standard normal is the case $X\sim N(0,1)$.

If an $n$ component random variable $X$ is a multivariate normal with mean $\mu$ and covariance $C$, then it has a probability density

$$f(x)=\frac{1}{Z}e^{-(x-\mu)^T C^{-1}(x-\mu)/2},\quad\text{where } Z=\sqrt{(2\pi)^n\det(C)}.$$

Multivariate normals possess a linear transformation property: suppose $L$ is an $m\times n$ matrix with rank $m$, so $L:\mathbb{R}^n\to\mathbb{R}^m$ is onto. If $X\in\mathbb{R}^n$ is multivariate normal and $Y=LX\in\mathbb{R}^m$, then $Y$ is multivariate normal with covariance matrix $C_Y=LC_XL^T$, assuming that $\mu=0$.

Finally, there are two probability laws/theorems that are crucial to believing that MC is relevant to any problem:

1. Law of large numbers
2. Central limit theorem

Law of large numbers: Suppose $A=E[X]$ and $\{X_k\mid k=1,2,\ldots\}$ are independent samples of $X$. The approximation of $A$ is

$$\hat{A}_n=\frac{1}{n}\sum_{k=1}^n X_k\to A\quad\text{as } n\to\infty.$$

All estimators satisfying the law of large numbers are called consistent.

Central limit theorem: If $\sigma^2=\operatorname{var}[X]$, then $R_n=\hat{A}_n-A\approx N(0,\sigma^2/n)$. Hence, recalling that $A$ is not random, $\operatorname{Var}(R_n)=\operatorname{Var}(\hat{A}_n)=\frac{1}{n}\operatorname{Var}(X)$. The estimator is unbiased because $E[\hat{A}_n]=A$; the law of large numbers makes it consistent. The variance formula follows from the independence of the $X_k$. When $n$ is large enough, $R_n$ is approximately normal, independent of the distribution of $X$, as long as $\operatorname{var}[X]<\infty$.

Random number generators

Beware simple random number generators. For example, never, ever use the UNIX/Linux function `rand`. It repeats much too quickly. The function `random` repeats less frequently, but is not useful for parallel computing. Matlab has a very good random number generator that is operating system independent. Look for the digital codes developed 20 years ago by Michael Mascagni for good parallel random number generators. These are the state of the art even today.

However, the best ones are analog: they measure the deviations in the electrical line over time and normalize them to the interval $[0,1]$. Some CPUs do this as a hardware instruction for sampling the deviation. These are the only true random number generators available on computers.
The Itanium2 CPU line has this built in. Some other chips have this, too, but operating systems that will sample this instruction are hard to find.

Sampling

A simple sampler produces an independent sample of $X$ each time it is called. The simple sampler turns standard uniforms into samples of some other random variable. MC codes spend almost all of their time in the sampler. Optimizing the sampler code to reduce its execution time can have a profound effect on the overall run time of the MC computation. In the discussion below `rng()` is a good random number generator that returns standard uniforms.

Bernoulli coin tossing

A Bernoulli random variable with parameter $p$ is a random variable $X$ with $\Pr(X=1)=p$ and $\Pr(X=0)=1-p$. If $U$ is a standard uniform, then $p=\Pr(U\le p)$. So we can sample $X$ using the code fragment

    if ( rng() <= p ) X = 1; else X = 0;

For a random variable with a finite number of values, $\Pr(X=x_k)=p_k$ with $\sum_k p_k=1$, we sample it by dividing the unit interval into subintervals of length $p_k$ and returning the $x_k$ whose subinterval contains the uniform sample. This works well with Markov chains.

Exponential

If $U$ is a standard uniform, then $T=-\lambda^{-1}\ln(U)$ is an exponential with rate parameter $\lambda$ with units 1/Time. Since $0<U<1$, $\ln(U)<0$ and $T>0$. We can sample $T$ with the code fragment

    T = -(1/lambda)*log(rng());

The PDF of the random variable $T$ is given by $f(t)=\lambda e^{-\lambda t}$ for $t>0$.

Cumulative distribution function (CDF)

Suppose $X$ is a one component random variable with PDF $f(x)$. Then the CDF is

$$F(x)=\Pr(X\le x)=\int_{x'\le x}f(x')\,dx'.$$

We know that $0\le F(x)\le 1$ for all $x$, and for any $u\in(0,1)$ there is an $x$ such that $F(x)=u$. The simple sampler can be coded with

1. Choose $U$ = `rng()`
2. Find $X$ such that $F(X)=U$

Note that step 2 can be quite difficult and time consuming. Good programming reduces the time.

There is no elementary formula for the cumulative normal $N(z)$. However, there is software available to compute it to approximately double precision. The inverse cumulative normal $z=N^{-1}(u)$ can also be approximated.
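
The samplers described above can be sketched in Python as follows. This is only an illustration under stated assumptions: Python's built-in Mersenne Twister stands in for the notes' `rng()`, and the function names (`sample_bernoulli`, `sample_discrete`, `sample_exponential`, `sample_by_bisection`, `normal_cdf`) are hypothetical. Step 2 of the CDF sampler is solved here by plain bisection, which is one simple choice among many and not necessarily the fastest.

```python
import math
import random

# Assumption: a seeded Mersenne Twister plays the role of the notes' rng().
_gen = random.Random(12345)

def rng():
    """Return a standard uniform sample in [0, 1)."""
    return _gen.random()

def sample_bernoulli(p):
    """Return 1 with probability p, else 0."""
    return 1 if rng() < p else 0

def sample_discrete(values, probs):
    """Split [0, 1) into subintervals of length probs[k] and return the
    value whose subinterval contains the uniform sample."""
    u = rng()
    cumulative = 0.0
    for x, p in zip(values, probs):
        cumulative += p
        if u < cumulative:
            return x
    return values[-1]  # guard against round-off in the cumulative sum

def sample_exponential(lam):
    """T = -ln(U)/lam, with U taken in (0, 1] so that log(0) cannot occur."""
    u = 1.0 - rng()
    return -math.log(u) / lam

def normal_cdf(z):
    """Cumulative standard normal N(z), expressed through math.erf."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def sample_by_bisection(cdf, lo, hi, tol=1e-10):
    """Step 1: choose U = rng(). Step 2: solve F(X) = U on [lo, hi]."""
    u = rng()
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if cdf(mid) < u:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

if __name__ == "__main__":
    n = 10_000
    print(sum(sample_bernoulli(0.3) for _ in range(n)) / n)      # near 0.3
    print(sum(sample_exponential(2.0) for _ in range(n)) / n)    # near 1/2
    print(sample_by_bisection(normal_cdf, -10.0, 10.0))          # one normal sample
```

The bisection sampler needs a few dozen CDF evaluations per sample, which illustrates why step 2 can dominate the run time.
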

The Box Muller method

We can generate two independent standard normals from two independent standard uniforms using the formulas

$$R=\sqrt{-2\ln(U_1)},\quad \Theta=2\pi U_2,\quad Z_1=R\cos(\Theta),\quad Z_2=R\sin(\Theta).$$

We can make $N$ independent standard normals by making $N$ standard uniforms and then using them in pairs to make $N/2$ pairs of independent standard normals.

Multivariate normals

Let $X\in\mathbb{R}^n$ be a multivariate normal random variable with mean 0 and covariance matrix $C$. We sample $X$ using the Cholesky factorization $C=LL^T$, where $L$ is lower triangular. Let $Z\in\mathbb{R}^n$ be a vector of $n$ independent standard normals generated by the Box Muller method (or similarly). Then $\operatorname{cov}[Z]=I$. If $X=LZ$, then $X$ is multivariate normal and has $\operatorname{cov}[X]=LIL^T=C$.

There are many more methods that can be studied, e.g., rejection.

Testing samplers

All scientific software should be presumed wrong until demonstrated to be correct. Simple 1D samplers are tested using tables and histograms.

Errors

Estimating the error in a MC calculation is straightforward. Normally a result with an error estimate is given when using a MC method.

Suppose $X$ is a scalar random variable. Approximate $A=E[X]$ by

$$\hat{A}_n=\frac{1}{n}\sum_{k=1}^n X_k.$$

The central limit theorem states that $R_n=\hat{A}_n-A\approx\sigma_n Z$, where $\sigma_n$ is the standard deviation of $\hat{A}_n$ and $Z\sim N(0,1)$. It can be shown that

$$\sigma_n=\frac{1}{\sqrt{n}}\sigma,\quad\text{where } \sigma^2=\operatorname{var}[X]=E[(X-A)^2],$$

which we estimate using

$$\hat{\sigma}^2=\frac{1}{n}\sum_{k=1}^n(X_k-\hat{A}_n)^2,\quad\text{then take } \hat{\sigma}_n=\frac{1}{\sqrt{n}}\sqrt{\hat{\sigma}^2}.$$

Since $Z$ is of order 1, $R_n$ is of order $\hat{\sigma}_n$. We typically report the MC data as $A=\hat{A}_n\pm\hat{\sigma}_n$. We can plot each estimate as a circle with a line through it; the line is called the (standard deviation) error bar. We can think of $k$ standard deviation error bars

$$[\hat{A}_n-k\hat{\sigma}_n,\ \hat{A}_n+k\hat{\sigma}_n],$$

which are confidence intervals. The central limit theorem can be used to show that

$$\Pr\left(A\in[\hat{A}_n-\hat{\sigma}_n,\ \hat{A}_n+\hat{\sigma}_n]\right)\approx 68\%\quad\text{and}\quad\Pr\left(A\in[\hat{A}_n-2\hat{\sigma}_n,\ \hat{A}_n+2\hat{\sigma}_n]\right)\approx 95\%.$$

It is common in MC to report one standard deviation error bar.
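
As a minimal sketch of the Box Muller formulas and the error estimate above, the following Python fragment generates standard normals and reports an estimate with its one standard deviation error bar. The names `box_muller`, `normal_samples`, and `mc_estimate` are hypothetical, the seed is arbitrary, and Python's `random.Random` is assumed as the source of standard uniforms. The test quantity $X=Z^2$, for which $A=E[Z^2]=1$, is chosen only for illustration.

```python
import math
import random

def box_muller(uniform):
    """Turn two standard uniforms into two independent standard normals."""
    u1 = 1.0 - uniform()              # in (0, 1], so log(u1) is finite
    u2 = uniform()
    r = math.sqrt(-2.0 * math.log(u1))
    theta = 2.0 * math.pi * u2
    return r * math.cos(theta), r * math.sin(theta)

def normal_samples(n, seed=2024):
    """Return n standard normals, built in pairs with Box Muller."""
    gen = random.Random(seed)         # assumption: stands in for rng()
    out = []
    while len(out) < n:
        out.extend(box_muller(gen.random))
    return out[:n]

def mc_estimate(samples):
    """Return (A_hat, sigma_hat_n): the sample mean and its error bar."""
    n = len(samples)
    a_hat = sum(samples) / n
    var_hat = sum((x - a_hat) ** 2 for x in samples) / n   # sigma_hat^2
    return a_hat, math.sqrt(var_hat / n)                   # sigma_hat / sqrt(n)

if __name__ == "__main__":
    z = normal_samples(100_000)
    a_hat, err = mc_estimate([v * v for v in z])   # X = Z^2, so A = 1
    print(f"A = {a_hat:.4f} +/- {err:.4f}")
```

Quadrupling the number of samples should roughly halve the reported error bar, in line with $\sigma_n=\sigma/\sqrt{n}$.
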
To interpret the data correctly, one has to understand that the true value lies outside of the one standard deviation error bar roughly one-third of the time.

Integration (quadrature)

We want to approximate a $d$ dimensional integral to an accuracy of $\epsilon>0$. Assume we can do this using $N$ quadrature points. Consider Simpson's rule. For a function $f:\mathbb{R}^d\to\mathbb{R}$, $\epsilon\propto N^{-4/d}$. MC integration can be done so that $\epsilon\propto N^{-1/2}$, independent of $d$, as long as the variance of the integrand is finite.

MC integration

Let $V$ be the domain of integration. Define $I(f)=\int_V f(x)\,dx$ and for uniform $x_i\in V$ let

$$\langle f\rangle=\frac{1}{N}\sum_{i=1}^N f(x_i)\quad\text{and}\quad\langle f^2\rangle=\frac{1}{N}\sum_{i=1}^N f^2(x_i).$$

Then, for a domain of unit volume,

$$I(f)\approx\langle f\rangle\pm\sqrt{\frac{\langle f^2\rangle-\langle f\rangle^2}{N-1}}.$$

For a general domain, both terms are multiplied by the volume of $V$.
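
The MC integration estimate can be sketched in Python as below. This is an illustration under assumptions, not a tuned implementation: the domain is taken to be an axis-aligned box, the name `mc_integrate` and its parameters are hypothetical, and Python's `random.Random` supplies the uniform points. The estimate and error bar are multiplied by the volume of the box, which equals 1 for the unit cube.

```python
import math
import random

def mc_integrate(f, lower, upper, n, seed=0):
    """Estimate the integral of f over the box [lower[j], upper[j]] in each
    coordinate j. Returns (estimate, one standard deviation error)."""
    gen = random.Random(seed)          # assumption: stands in for rng()
    volume = 1.0
    for a, b in zip(lower, upper):
        volume *= (b - a)
    total = 0.0
    total_sq = 0.0
    for _ in range(n):
        # Uniform point in the box: each coordinate is (b - a) U + a.
        x = [a + (b - a) * gen.random() for a, b in zip(lower, upper)]
        fx = f(x)
        total += fx
        total_sq += fx * fx
    mean = total / n                   # <f>
    mean_sq = total_sq / n             # <f^2>
    # max(..., 0) guards against a tiny negative value from round-off.
    err = math.sqrt(max(mean_sq - mean * mean, 0.0) / (n - 1))
    return volume * mean, volume * err

if __name__ == "__main__":
    d = 10
    # Integrand sum_j x_j^2 on the unit cube; the exact integral is d/3.
    est, err = mc_integrate(lambda x: sum(v * v for v in x),
                            [0.0] * d, [1.0] * d, 20_000)
    print(f"I = {est:.4f} +/- {err:.4f}  (exact {d / 3:.4f})")
```

The cost per sample grows only linearly with the dimension $d$, while the error bar still shrinks like $N^{-1/2}$.
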