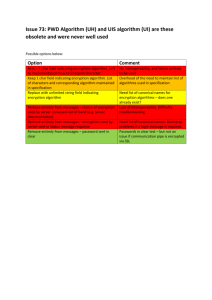

HSS-EncryptionAdvantage

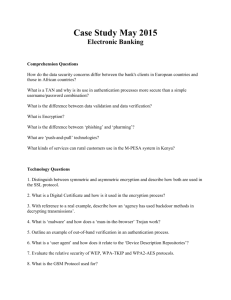
