

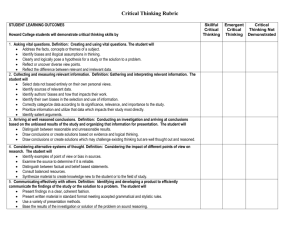

PREVALENCE AND CORRELATIONS OF BIASES IN MANAGERIAL DECISIONS: A CASE STUDY OF THAI MBA STUDENTS

Chinnawut Jedsadayanmeta1,*, Dolchai La-ornual2,#
1 MBA in Business Modeling and Analysis, Mahidol University International College, Mahidol University, Thailand
2 Business Administration, Mahidol University International College, Mahidol University, Thailand
*e-mail: chinnawut.jed@student.mahidol.ac.th, #e-mail: dolchai.lar@mahidol.ac.th

Abstract

People often display judgmental or cognitive biases when making decisions, which can result in suboptimal outcomes. For organizations and businesses, these biases may lead to losses in profits and resources. In this study, we test 10 common cognitive biases for their prevalence and correlations among 71 Thai MBA students with work experience. The results show that overconfidence and regression to the mean are the two most prevalent biases. We also find that participants who exhibit the conjunction fallacy are also likely to display insensitivity to base rate. Our findings can help organizations and businesses design corrective and preventive actions to minimize potentially adverse effects from these common biases in managerial decisions.

Keywords: cognitive bias, managerial decisions, prevalence, correlation, Thailand

Introduction

We all make decisions every day. However, we do not always explore every detail and evaluate all possibilities before arriving at a conclusion. Thus, our decision-making processes may not always yield optimal solutions.[1] Researchers have identified several biases as the causes of suboptimal decision making. In particular, people generally use a process of “natural assessment”[2] to perceive or understand a message or an event by comparing and estimating. Many biases occur because of underlying heuristics, which are mental shortcuts derived from one’s experience or prior knowledge that help a person make decisions, solve problems, learn, and discover new things.
[3] These “shortcuts” save the decision maker time and money, but possibly at the cost of the accuracy and quality of the decisions.[1] Although biases rarely lead to optimal decisions, they frequently lead to ‘good’ decisions.[4] And because daily resources such as time and effort are limited, it is difficult, if not impossible, for people to avoid using biases in their lives.

Biases occur in almost every field, including business. They occur in managerial decisions in the form of performance evaluations, sales forecasts, investment choices, and strategic interactions. Biases and heuristics can lead to both gains and losses in business. For example, heuristics can help eliminate excessive information when screening new projects.[5] Conversely, flawed decisions can lead to losses for firms, such as when decisions are made under unsuitable circumstances, with inappropriate emotion, or under public scrutiny.[6] Losses can be in terms of money or opportunities. An overestimated inventory level can lead to higher holding costs, and relying on the first impression of a job candidate can lead to hiring an ill-suited employee.

Previous researchers have identified many biases in decision making. The 10 common ones studied in this paper are (1) ease of recall, (2) retrievability, (3) insensitivity to base rates, (4) insensitivity to sample size, (5) misconceptions of chance, (6) regression to the mean, (7) the conjunction fallacy, (8) anchoring, (9) conjunctive- and disjunctive-events, and (10) overconfidence. Although these biases are well known and widely accepted by researchers in the field of judgment and decision making, the authors of the current paper are not aware of research that has investigated them comparatively. In particular, no study has compared the prevalence of each bias and the possible correlations among them, especially in the context of managerial decisions.
Furthermore, understanding the behavior of local managers in a particular country should benefit foreign entities with economic relations to it. This is particularly relevant for Thailand, as the ASEAN Economic Community (AEC), of which it will be a part, is planned to be officially established in 2015.

Common Biases

In general, a bias in decision making occurs as a result of an underlying heuristic or mental shortcut that is influenced by the decision maker’s existing experience or prior knowledge. The three heuristics that cause the 10 cognitive biases tested in this study are the availability, representativeness, and confirmation heuristics.

The first heuristic is availability, which is based on how easily people can think of something. It is a mental shortcut used in estimating the probabilities of events by judging how easy it is to come up with examples.[7] It rests on the presumption that if examples of an event can be recalled or retrieved easily, the event must also be more probable.[8] Two biases emanating from the availability heuristic that we examine in this paper are ease of recall and retrievability.

The second heuristic is representativeness, which is based on how similar things are. As put forth by Tversky and Kahneman,[9] it is “an assessment of the degree of correspondence between a sample and a population, an instance and a category, an act and an actor or, more generally, between an outcome and a model.” For example, if a person experiences a particular event more often than another, that individual will think that the former is more common than the latter.[9] Five biases emanating from the representativeness heuristic that we test in the current study are insensitivity to base rate, insensitivity to sample size, misconception of chance, regression to the mean, and the conjunction fallacy.

The third and final related heuristic is confirmation, which is based on how someone’s beliefs measure up against new information.
When people are presented with information that is consistent with their beliefs, they are more likely to accept that information.[10] For example, a person who believes that stricter gun control in the United States is mandatory will mostly agree with any study pointing to the same conclusion and will dismiss or turn antagonistic toward information that says otherwise.[11] Three biases emanating from the confirmation heuristic that we examine in this paper are anchoring, conjunctive- and disjunctive-events, and overconfidence.

Based on the three heuristics described above, the following are the 10 common biases in decision making that are tested in this study.

Ease of recall

This bias occurs when an event that is more vivid, is covered more by the media, or has happened recently is easier to recall than events that are not.[8] Consequently, people assign higher probabilities to such events than to events that are more difficult to recall. For example, research has shown that organizations tend to buy natural disaster insurance after they have experienced such an event rather than before it has occurred.[12-13]

Retrievability

This bias is based on how human memory is structured and how that structure affects the search process. In an experiment, Tversky and Kahneman[9] found that participants estimated the number of seven-letter words with the letter “n” in the sixth position to be smaller than the number of seven-letter words ending in “ing”, even though the latter is a subset of the former. With respect to management, human resources managers, even without intending to discriminate, are more likely to hire people who are similar to them than people who are not.[14]

Insensitivity to base rate

In this bias, people ignore the base rate when they are given descriptive information, no matter how irrelevant the information is.
As an illustration, Kahneman and Tversky[15] found that giving participants an engineer-resembling description of an individual randomly selected from a group strongly influenced the judged probability of that person being an engineer, regardless of the stated proportion of engineers in the group. From an organizational perspective, new entrepreneurs spend more time thinking of success than considering failure because they think that the base rate of failure does not apply to them.[16]

Insensitivity to sample size

This bias occurs when people, using the representativeness heuristic, incorrectly assume that every sample size provides information of the same value.[17] In particular, the researchers showed that participants judge the proportion observed in a small sample to be as close to the actual population proportion as that observed in a large sample. In an organization, the marketing department often plays on consumers’ insensitivity by giving numbers in percentage form in advertising messages without disclosing the sample size.[10]

Misconception of chance

In this bias, people expect a sequence generated by a random process to look random even when the sequence is too short for it to do so.[15] Just as getting a head on an earlier coin flip has no effect on the current flip, prior performance has no influence on the present one.[18] Fans and gamblers are often susceptible to this fallacy, betting too heavily on an athlete who is perceived to be on a hot streak.

Regression to the mean

Regression to the mean is a concept many people ignore when they assess what is likely to follow an extreme event. For example, a business or an employee who performs really well this year cannot be expected to perform at the same level again next year.[7, 15] However, in some extreme cases, people do anticipate regression to the mean.
[10] For example, if an employee with historically low performance performs exceptionally well in one year, the manager will not expect the employee to achieve the same high performance again in the future.

Conjunction fallacy

This bias occurs when people violate the conjunction rule, which states that “the probability of a conjunction, P(A&B), cannot exceed the probabilities of its constituents, P(A) and P(B), because the extension (or the possibility set) of the conjunction is included in the extension of its constituents.”[9] Yates and Carlson[19] explained that the vivid representativeness of a conjunction that matches the given description makes P(A&B) seem more probable than either P(A) or P(B).

Anchoring

This phenomenon occurs when people’s judgments are distorted by existing or new information (an “anchor”), whether it is externally given or self-generated, and even if it is irrelevant.[17] From an organizational perspective, employees face anchoring when estimating quantities such as sales, inventory, and expenses. Two explanations are given. First, Epley and Gilovich[20] explain that, when the anchor is self-generated, people adjust upward or downward from the initial anchor. In contrast, when the anchor is externally given, people arrive at an estimate by thinking of or searching for information that is related to the anchor.[21] The confirmation heuristic triggers this tendency toward selective searching.[22] This process is similar to how a first impression influences a hiring decision,[23] how racial stereotypes arise when people perceive others of the same race as similar to those they have met before,[24] or how judges’ sentencing decisions can be influenced by anchors as irrelevant as a roll of dice.[25]

Conjunctive- and disjunctive-events

This is the bias where people misjudge the probabilities of the two types of events.
More precisely, they overestimate the probability of events that must occur in conjunction with one another (conjunctive), such as in project planning,[26] and underestimate the probability of events that occur independently (disjunctive), such as landing at least one head in 10 coin flips.[17]

Overconfidence

People can be too self-assured in their beliefs and judgments when facing moderately to extremely difficult questions.[27] Employees in an organization, especially those in higher-level positions, can make wrong strategic decisions when estimating business figures because they are too sure of their abilities. Bazerman and Moore[10] explain that, because of the confirmation heuristic, people find it easier to search for or generate confirming evidence than disconfirming evidence. Affirmatively, Plous[28] states, “No problem in judgment and decision making is more prevalent and more potentially catastrophic than overconfidence.” Thus, to prevent this bias, Griffin, Dunning, and Ross[29] suggest that seeking contradictory information can be more helpful than seeking information that supports one’s theory.

Methodology

Participants

The study involved 71 participants who were Master of Business Administration (MBA) students in international programs at Mahidol University and Thammasat University in Thailand. The participants had an average work experience of more than 3 years and were employed in entry- or mid-level management positions. Participants were given a class score as an incentive to complete the test.

Design

There were 10 questions in the test, each corresponding to a bias presented in the previous section. These questions were adapted from Bazerman and Moore,[10] Judgment in Managerial Decision Making, 7th edition (New York: John Wiley & Sons). Participants had to respond to all questions, which were presented in random order to minimize sequencing effects. The test was conducted in English.
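
As a hedged illustration of the conjunctive- and disjunctive-events bias described earlier, the following Python sketch computes both kinds of compound probability for independent events. The coin-flip case comes from the text; the 10-step project with a 90% chance of success per step is a hypothetical example, and the function names are illustrative assumptions.

```python
def prob_all(p: float, n: int) -> float:
    """Conjunctive event: all n independent events, each with probability p, occur."""
    return p ** n


def prob_at_least_one(p: float, n: int) -> float:
    """Disjunctive event: at least one of n independent events occurs."""
    return 1 - (1 - p) ** n


# From the text: at least one head in 10 fair coin flips.
print(f"At least one head in 10 flips: {prob_at_least_one(0.5, 10):.4f}")

# Hypothetical project: 10 independent steps, each 90% likely to finish on time.
print(f"All 10 steps on time: {prob_all(0.9, 10):.4f}")
```

The disjunctive probability is very close to 1, while the conjunctive probability is only about 0.35. Both sit far from the single-event probabilities (0.5 and 0.9), which is the direction in which the bias predicts intuitive estimates will err.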
Procedure

Participants were invited to join the research project in class. All participants were informed of their rights and gave their informed consent before being given the test. Instructions were given on how to complete the test. The test was administered online in a computer lab setting using an interactive questionnaire. No time limit was set for the test; however, most participants finished it in under 40 minutes. Once all the test results had been recorded, it was possible to determine the prevalence and the correlation of each bias in managerial decisions. Statistical tests were performed to determine the significance of the results.

Results

Prevalence

[Figure 1: bar chart; vertical axis from 0% to 100%; bar values 100%, 90%, 83%, 82%, 83%, 76%, 49%, 45%, 27%, 20%]

Figure 1. Prevalence of the common biases among Thai managers, with the histogram displaying the percentage of participants exhibiting each type of bias.

Performing a Pearson’s chi-squared test, we find a significant violation of the biases, χ²(9, N = 71) = 222.02, p < .001. The number of biases exhibited per participant ranges from two to nine, with a mean of 6.55. The most prevalent bias (Fig. 1) is overconfidence, which all participants (100%) exhibit. The second most prevalent bias is regression to the mean. The conjunction fallacy and conjunctive- and disjunctive-events rank as the third and fourth most common biases. For the conjunction fallacy, this result is similar to that reported by Tversky and Kahneman.[9] Insensitivity to base rate and insensitivity to sample size come fifth and sixth. Participants exhibit them by responding to the questions with the biased answers. Both biases emanate from the same heuristic: the representativeness heuristic causes people to ignore background information (the base rate) in favor of specific information and to estimate probabilities incorrectly when dealing with sample sizes.
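
The reported chi-squared statistic can be approximately reproduced from the Figure 1 percentages. The sketch below is not necessarily the procedure used in the study: it assumes that the per-bias counts can be recovered by rounding each percentage to whole participants out of 71, and that the test is a test of homogeneity on the 10 x 2 table of biases by biased or unbiased response. The function name is illustrative. It also treats responses to different biases as independent, which is a simplification because the same participants answered all 10 questions.

```python
N = 71  # number of participants, from the text

# Figure 1 percentages; the per-bias counts below are reconstructed by rounding
# to whole participants, which is an assumption rather than reported data.
percentages = [100, 90, 83, 82, 83, 76, 49, 45, 27, 20]
biased = [round(p / 100 * N) for p in percentages]


def chi_squared_homogeneity(biased_counts, n):
    """Pearson chi-squared statistic for a (number of biases) x 2 table,
    where each bias has n responses split into biased and unbiased."""
    k = len(biased_counts)
    expected_biased = sum(biased_counts) / k
    expected_unbiased = n - expected_biased
    stat = 0.0
    for observed in biased_counts:
        stat += (observed - expected_biased) ** 2 / expected_biased
        stat += ((n - observed) - expected_unbiased) ** 2 / expected_unbiased
    dof = (k - 1) * (2 - 1)
    return stat, dof


chi2, dof = chi_squared_homogeneity(biased, N)
mean_biases = sum(biased) / N
print(f"chi-squared({dof}, N = {N}) = {chi2:.2f}")
print(f"mean number of biases per participant = {mean_biases:.2f}")
```

Under these assumptions the statistic and the mean agree with the reported χ²(9, N = 71) = 222.02 and 6.55, which suggests that the test compares prevalence across the 10 biases.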
In contrast, the least common biases are, in order of decreasing prevalence, retrievability, ease of recall, misconception of chance, and anchoring. Retrievability and ease of recall are both triggered by the availability heuristic, whereby instances that are easily retrieved or events that are easier to recall cause participants to believe that they are more common. Most participants answer correctly in the test of misconception of chance, and they rarely display the anchoring bias.

Correlation

A Pearson’s correlation test was performed to determine the correlation of each bias with the others. Table 1 displays the correlation coefficient of each pair of biases.

Table 1. Correlation matrix for the prevalence of biases in Thailand

| Bias | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 1.00 | 0.01 | -0.01 | -0.09 | -0.23* | 0.11 | 0.03 | -0.02 | 0.11 | N/A |
| 2 | | 1.00 | -0.04 | 0.29** | 0.10 | 0.23* | -0.08 | -0.06 | -0.08 | N/A |
| 3 | | | 1.00 | -0.18 | -0.04 | -0.03 | 0.37*** | -0.13 | 0.08 | N/A |
| 4 | | | | 1.00 | -0.03 | 0.15 | 0.01 | 0.28** | -0.08 | N/A |
| 5 | | | | | 1.00 | 0.09 | 0.10 | -0.14 | 0.10 | N/A |
| 6 | | | | | | 1.00 | 0.23* | -0.07 | 0.10 | N/A |
| 7 | | | | | | | 1.00 | -0.15 | 0.10 | N/A |
| 8 | | | | | | | | 1.00 | 0.13 | N/A |
| 9 | | | | | | | | | 1.00 | N/A |
| 10 | | | | | | | | | | 1.00 |

Notes:
*** Correlation is significant at the 0.01 level (two-tailed)
** Correlation is significant at the 0.05 level (two-tailed)
* Correlation is significant at the 0.10 level (two-tailed)
1. Ease of recall; 2. Retrievability; 3. Insensitivity to base rate; 4. Insensitivity to sample size; 5. Misconception of chance; 6. Regression to the mean; 7. The conjunction fallacy; 8. Anchoring; 9. Conjunctive- and disjunctive-events; 10. Overconfidence

The pair with the highest level of significance is the conjunction fallacy and insensitivity to base rate, r(69) = 0.37, p < .01, a moderate positive relationship: participants who exhibit the conjunction fallacy are moderately likely to also exhibit insensitivity to base rate.
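
To make the correlation figures concrete, the following Python sketch shows how a Pearson coefficient between two binary bias indicators can be computed and how the coefficient converts to a t statistic with df = N - 2 = 69. The participant-level data shown are hypothetical (the study's raw responses are not given here), and the function names are illustrative assumptions.

```python
import math


def pearson_r(x, y):
    """Pearson correlation of two equal-length numeric sequences.
    Returns None when either sequence has zero variance."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return None  # correlation is undefined for a constant variable
    return cov / math.sqrt(var_x * var_y)


def t_statistic(r, df):
    """t value for testing a correlation r against zero, with df = n - 2."""
    return r * math.sqrt(df / (1 - r ** 2))


# Hypothetical indicators for 8 participants (1 = biased answer, 0 = unbiased).
conjunction_fallacy = [1, 1, 1, 0, 1, 0, 1, 1]
base_rate = [1, 1, 0, 0, 1, 0, 1, 1]
overconfidence = [1] * 8  # every participant biased, as in the 100% result

print(pearson_r(conjunction_fallacy, base_rate))
print(pearson_r(conjunction_fallacy, overconfidence))  # None
print(round(t_statistic(0.37, 69), 2))  # t for the reported r(69) = 0.37
```

A variable that never varies has no defined correlation with anything else, which would be consistent with the N/A entries for overconfidence in Table 1, since every participant exhibited that bias.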
The conjunction fallacy also displays a weak positive relationship with the regression to the mean bias, r(69) = 0.23, p < .10, although at a lower level of significance. Since all three of these biases emanate from the representativeness heuristic, they are likely to occur together. To avoid being distorted by the representativeness heuristic, it is important not to give too much weight to a single specific piece of information and to consider other information that may be relevant as well.[30] An organization can use teamwork to help reduce these biases, with brainstorming techniques used to come up with more cases and ideas that are not conventionally representative of the case at hand.

In addition, at a lower level of significance, anchoring has a weak positive relationship with insensitivity to sample size, r(69) = 0.28, p < .05. The former bias emanates from the confirmation heuristic, while the latter comes from the representativeness heuristic. This reveals that more than one heuristic can operate at the same time in decision-making processes.[10] To reduce the effect of the confirmation heuristic, organizations benefit more when employees look for disconfirming evidence, rather than confirming evidence, when making managerial decisions.

Lastly, the remaining three significant correlations each involve at least one bias arising from the availability heuristic. Retrievability has a weak positive relationship with insensitivity to sample size, r(69) = 0.29, p < .05, and with regression to the mean, r(69) = 0.23, p < .10. Conversely, ease of recall has a weak negative relationship with misconception of chance, r(69) = -0.23, p < .10: participants who exhibit ease of recall are less likely to exhibit misconception of chance. The availability heuristic can be avoided or reduced by understanding what information is truly relevant rather than what information is readily or easily available at hand.
[30] Again, brainstorming can help in this case by allowing more ideas to surface before any decision is made.

With this result, the question for organizations moves from what biases exist to how to reduce them. The first step in dealing with biases is to acknowledge that they exist. A strong corporate culture can help raise awareness that biases occur naturally and that it is easier to see biases in others than in oneself. In this case, using a management concept called “devil’s advocate” (letting someone take a strong stand on a particular belief and engage other employees in discussion and constructive argument) will help reduce the biases. In addition, some biases can be alleviated by questioning decision makers frequently to avoid major business errors based on short-sighted decisions. Nonetheless, some counterintuitive biases are harder to avoid. In this instance, an objective third party, such as a management consulting firm, can provide insights into decision-making processes and offer new ideas that are not influenced by the organization’s past experience. Finally, as a general recommendation, organizations should have contingency or back-up plans to prepare for unanticipated events and to help them recover faster if a catastrophic event occurs. In an effort to reduce biases, organizations have to consider how much to spend to achieve the goal, but doing so can be worth the effort.

Discussion and Conclusion

This paper comparatively tests 10 common biases in managerial decision making by examining the prevalence and correlations of these biases. We find that overconfidence and regression to the mean are the most common biases among Thai managers. We also find that people who are susceptible to the conjunction fallacy are also prone to be insensitive to the base rate. This latter finding may be because both biases are due to the same representativeness heuristic.
There are certain limitations to the findings in this paper, however. First, because we specifically studied decision making among Thai managers, the results may not pertain to business people of other nations. This may simply be due to differences in culture, tradition, and general way of thinking. Thus, it may be beneficial for future studies to examine the behavior of managers from other nations. The second limitation is that this paper is based on 10 specific cognitive biases. Although these biases are among the most common in decision making, the list is not exhaustive. Thus, other biases, including culture- or nationality-related ones, could be identified and tested in further research. The third limitation of this paper is that it examines only the decision making of individuals. People in organizations often make collective decisions. This could be particularly true given the collectivist nature of Asian cultures. Therefore, studying how participants decide as a group may yield different results. And because research on biases in group decision making is scarce, exploration in this area may provide valuable contributions to the field of judgment and decision making.

References

1. Gilovich T, Griffin D. Introduction - heuristics and biases: Then and now. In Gilovich T, Griffin D, Kahneman D, editors. Heuristics and biases: The psychology of intuitive judgment. New York: Cambridge University Press; 2002. p. 1-7.
2. Kahneman D, Tversky A. On the study of statistical intuitions. Cognition. 1982; 11(2):123-41.
3. Simon HA. Models of man: Social and rational. New York: Wiley; 1957.
4. Nisbett RE, Ross L. Human inference: Strategies and shortcomings of social judgment. New Jersey: Prentice-Hall; 1980.
5. Albar FM, Jetter AJ. Fast and frugal heuristics for new product screening - is managerial judgment 'good enough?'. Int J Manage Decis. 2013; 12(2):165-89.
6. Wade OL. Instant wisdom: a study of decision making in the glare of publicity.
Int J Manage Decis. 2002; 3(2):203-10.
7. Tversky A, Kahneman D. Availability: A heuristic for judging frequency and probability. Cognitive Psychol. 1973; 5(2):207-32.
8. Sherman SJ, Cialdini RB, Schwartzman DF, Reynolds KD. Imagining can heighten or lower the perceived likelihood of contracting a disease: The mediating effect of ease of imagery. In Gilovich T, Griffin DW, Kahneman D, editors. Heuristics and biases: The psychology of intuitive judgment. New York: Cambridge University Press; 2002. p. 98-119.
9. Tversky A, Kahneman D. Extensional versus intuitive reasoning: The conjunction fallacy in probability judgment. Psychol Rev. 1983; 90(4):293-315.
10. Bazerman MH, Moore DA. Judgment in managerial decision making. 7th ed. New York: John Wiley & Sons; 2008.
11. Taber CS, Lodge M. Motivated skepticism in the evaluation of political beliefs. Am J Polit Sci. 2006; 50(3):755-69.
12. Kunreuther H. Disaster insurance protection: Public policy lessons. New York: Wiley; 1978.
13. Palm R. Catastrophic earthquake insurance: Patterns of adoption. Econ Geogr. 1995; 71(2):119-31.
14. Petersen T, Saporta I, Seidel MDL. Offering a job: Meritocracy and social networks. Am J Sociol. 2000; 106(3):763-816.
15. Kahneman D, Tversky A. Subjective probability: A judgment of representativeness. Cognitive Psychol. 1972; 3(3):430-54.
16. Moore DA, Oesch JM, Zietsma C. What competition? Myopic self-focus in market entry decisions. Organ Sci. 2007; 18(3):440-54.
17. Tversky A, Kahneman D. Judgment under uncertainty: Heuristics and biases. Science. 1974; 185(4157):1124-31.
18. Gilovich T, Vallone RP, Tversky A. The hot hand in basketball: On the misperception of random sequences. Cognitive Psychol. 1985; 17:295-314.
19. Yates JF, Carlson BW. Conjunction errors: Evidence for multiple judgment procedures, including "signed summation". Organ Behav Hum Dec. 1986; 37(2):230-53.
20. Epley N, Gilovich T.
Putting adjustment back in the anchoring and adjustment heuristic: Differential processing of self-generated and experimenter-provided anchors. Psychol Sci. 2001; 12(5):391-6.
21. Mussweiler T, Strack F. Hypothesis-consistent testing and semantic priming in the anchoring paradigm: A selective accessibility model. J Exp Soc Psychol. 1999; 35(2):136-64.
22. Mussweiler T, Strack F. The use of category and exemplar knowledge in the solution of anchoring tasks. J Pers Soc Psychol. 2000; 78(6):1038-52.
23. Dougherty TW, Turban DB, Callender JC. Confirming first impressions in the employment interview: A field study of interviewer behavior. J Appl Psychol. 1994; 79(5):656-65.
24. Duncan BL. Differential social perception and attribution of intergroup violence: Testing the lower limits of stereotyping of Blacks. J Pers Soc Psychol. 1976; 34(4):590-8.
25. Englich B, Mussweiler T, Strack F. Playing dice with criminal sentences: The influence of irrelevant anchors on experts' judicial decision making. Pers Soc Psychol B. 2006; 32(2):188-200.
26. Bar-Hillel M. On the subjective probability of compound events. Organ Behav Hum Perf. 1973; 9(3):396-406.
27. Alpert M, Raiffa H. A progress report on the training of probability assessors. In Kahneman D, Slovic P, Tversky A, editors. Judgment under uncertainty: Heuristics and biases. New York: Cambridge University Press; 1982.
28. Plous S. The psychology of judgment and decision making. New York: McGraw-Hill; 1993.
29. Griffin DW, Dunning D, Ross L. The role of construal processes in overconfident predictions about the self and others. J Pers Soc Psychol. 1990; 59(6):1128-39.
30. Klein JG. Five pitfalls in decisions about diagnosis and prescribing. Br Med J. 2005; 330(7494):781-3.