
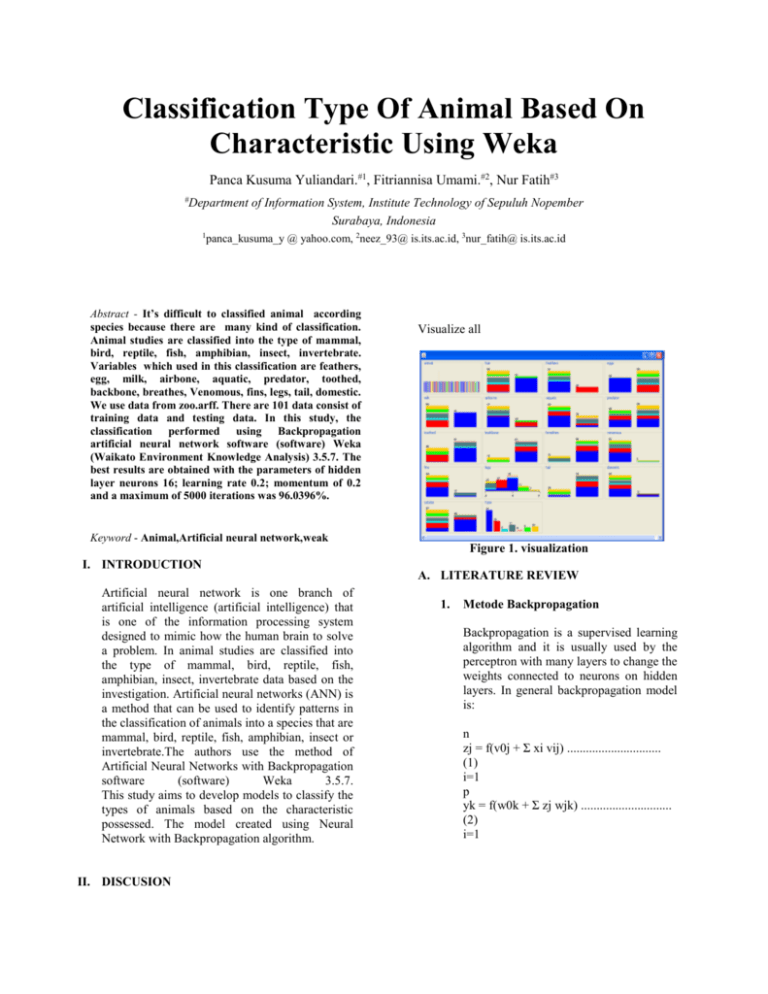

Classification Type Of Animal Based On Characteristic Using Weka

Panca Kusuma Yuliandari #1, Fitriannisa Umami #2, Nur Fatih #3

# Department of Information System, Institute Technology of Sepuluh Nopember Surabaya, Indonesia

1 panca_kusuma_y@yahoo.com, 2 neez_93@is.its.ac.id, 3 nur_fatih@is.its.ac.id

Abstract - It is difficult to classify animals by species because there are many kinds of classification. In this study, animals are classified into the types mammal, bird, reptile, fish, amphibian, insect, and invertebrate. The variables used in this classification are feathers, egg, milk, airborne, aquatic, predator, toothed, backbone, breathes, venomous, fins, legs, tail, and domestic. We use data from zoo.arff, which contains 101 instances used as training data and testing data. The classification is performed with a backpropagation artificial neural network in the Weka (Waikato Environment for Knowledge Analysis) 3.5.7 software. The best result, 96.0396%, is obtained with 16 hidden layer neurons, a learning rate of 0.2, a momentum of 0.2, and a maximum of 5000 iterations.

Keyword - Animal, Artificial neural network, Weka

I. INTRODUCTION

An artificial neural network (ANN) is a branch of artificial intelligence: an information processing system designed to mimic how the human brain solves a problem. In this study, animals are classified into the types mammal, bird, reptile, fish, amphibian, insect, and invertebrate, based on the data under investigation. An ANN is a method that can identify patterns in order to classify an animal as a mammal, bird, reptile, fish, amphibian, insect, or invertebrate. The authors use an artificial neural network with backpropagation in the Weka 3.5.7 software. This study aims to develop a model that classifies the type of an animal based on the characteristics it possesses.
The model is created using a neural network with the backpropagation algorithm.

II. DISCUSSION

Figure 1. Visualization

A. LITERATURE REVIEW

1. Backpropagation Method

Backpropagation is a supervised learning algorithm. It is usually used by multilayer perceptrons to change the weights connected to the neurons in the hidden layers. In general, the backpropagation model is:

$$z_j = f\left(v_{0j} + \sum_{i=1}^{n} x_i v_{ij}\right) \tag{1}$$

$$y_k = f\left(w_{0k} + \sum_{j=1}^{p} z_j w_{jk}\right) \tag{2}$$

Description: (1) is the activation function applied to the weighted sum of the input signals $x_i$, sent to the hidden layer. (2) is the activation function applied to the weighted sum of the signals $z_j$, sent to the output layer.

The backpropagation algorithm uses the output error to change the weights in the backward direction:

1. Starting with the input layer, calculate the output of each processing element through to the output layer.
2. Calculate the error at the output layer, which is the difference between the actual and the target data.
3. Transform this error into the corresponding error on the input side of the processing elements.
4. Propagate these errors at the output of each processing element to the error contained in its input. This process repeats until the input is reached.
5. Change the weights by using the errors on the input side of the outer elements and of the connected processing elements.

2. Animal Classification

The classification system groups animal species by the features the animals have. They are grouped by whether or not they have hair, feathers, egg, milk, airborne, aquatic, predator, toothed, backbone, breathes, venomous, fins, tail, and domestic, and by how many legs they have. Based on the characteristics it possesses, a new animal can be assigned to one of the broad animal types. Animals are classified into several types, namely:

1. Mammal
2. Bird
3. Reptile
4. Fish
5. Amphibian
6. Insect
7. Invertebrate

3. Network Architecture

The neural network consists of 3 layers: an input layer consisting of 1 unit for the input variable, a hidden layer consisting of 16 units, and an output layer consisting of 7 units. The input layer ($x_i$) accommodates the input variable, that is, 1 animal, while the 7 units of the output layer ($y_k$) represent the classification of the animal as mammal, bird, reptile, fish, amphibian, insect, or invertebrate.

Description:

x = input
j = 1 to n (n = 10)
v = weight of the hidden layer
w = weight of the output layer
n = number of processing units in the hidden layer
b = bias in the hidden layer and output layer
k = number of processing units in the output layer
Y = output result: mammal, bird, reptile, fish, amphibian, insect, or invertebrate

B. RESEARCH METHODOLOGY

1. Research Procedure

The data used in this study consist of 101 instances, used as training data and testing data and collected in an arff file. Preprocessing in the Weka software has three parts: load, analyze, and filter. In the load step, the data, already computed and stored in an arff file, are loaded: the zoo data are opened through the Weka open file panel. The analyze step is used to analyze the animal variables. This classification does not use filters for preprocessing, because the data are already suitable for processing. Next, the Classify tab is used with the backpropagation algorithm (multilayer perceptron). The parameters can be modified in this tab.

The backpropagation neural network algorithm is analyzed through its weights. Each input unit ($x_i$, i = 1) receives the signal $x_i$ and delivers it to the hidden layer units. The input unit ($x_i$) is the animal. $v_{ij}$ is the weight multiplied by the value of the input unit ($x_i$). Each hidden unit ($z_j$, j = 1, 2, ..., 16) sums its weighted input signals, and $v_{0j}$ is the bias of the hidden unit. The activation function computes the output signal, which is sent to all units in the output layer.
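The layer-by-layer computation of equations (1) and (2) can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the Weka implementation: the logistic sigmoid stands in for the activation function f, the 14 inputs correspond to the variables listed in the abstract, and the weights and the feature vector `x` are hypothetical random or made-up values rather than trained or measured ones.

```python
import math
import random

CLASSES = ["mammal", "bird", "reptile", "fish", "amphibian", "insect", "invertebrate"]


def sigmoid(a):
    """Logistic activation; an assumed choice for f in equations (1) and (2)."""
    return 1.0 / (1.0 + math.exp(-a))


def layer_forward(signals, weights, biases):
    """Return f(bias_j + sum_i signals[i] * weights[i][j]) for every unit j."""
    outputs = []
    for j, bias in enumerate(biases):
        net = bias + sum(s * weights[i][j] for i, s in enumerate(signals))
        outputs.append(sigmoid(net))
    return outputs


def forward(x, v, v0, w, w0):
    """Equation (1) gives the hidden signals z, equation (2) the outputs y."""
    z = layer_forward(x, v, v0)
    y = layer_forward(z, w, w0)
    return z, y


def random_matrix(rows, cols, rng):
    """Small random weights (illustrative only, not trained values)."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
n_inputs, n_hidden, n_outputs = 14, 16, 7  # 14 inputs is an assumption

v = random_matrix(n_inputs, n_hidden, rng)               # input -> hidden weights v_ij
v0 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]   # hidden biases v_0j
w = random_matrix(n_hidden, n_outputs, rng)              # hidden -> output weights w_jk
w0 = [rng.uniform(-0.5, 0.5) for _ in range(n_outputs)]  # output biases w_0k

x = [1, 0, 1, 0, 0, 1, 1, 1, 1, 0, 0, 4, 1, 0]  # hypothetical feature vector
z, y = forward(x, v, v0, w, w0)
predicted = CLASSES[y.index(max(y))]  # the output unit with the largest signal
print(len(z), len(y), predicted)
```

With untrained weights the predicted class is arbitrary. The point is only the shape of the computation: 16 hidden signals feed 7 output signals, and the largest output selects the animal type.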
Each output unit ($y_k$, k = 1, 2, ..., 7) sums its weighted input signals, and $w_{0k}$ is the bias of the output unit. The activation function computes the output signal, which determines the output value: mammal, bird, reptile, fish, amphibian, insect, or invertebrate.

2. Research Technique

The zoo data are in arff format. The parameters used to classify the animal type consist of hidden layer neurons 1, 2, 3, 4, 5; learning rates 0.01, 0.03, 0.05, 0.1, and 0.2; a momentum of 0.2; and 5000 iterations. The activation function used is that of the Multilayer Perceptron. Training the neural network with MultilayerPerceptron uses the cross-validation test mode, in which the training and testing data are split into 10 parts (folds) and processed repeatedly. From the 101 instances, Weka takes 9/10 as training data and 1/10 as testing data in each round. The process stops when the tenth fold has been processed.

Figure 4. Output weight

C. Output Classification

The results of the animal classification with the backpropagation artificial neural network in Weka are assessed by the percentage of correctly classified instances, the kappa statistic, and the confusion matrix.

Table 1. Correctly Classified (rows: learning rate LR, columns: hidden layer neurons HL)

| LR \ HL | 2 | 4 | 6 | 8 | 10 |
|---|---|---|---|---|---|
| 0.01 | 60.396% | 69.3069% | 73.2673% | 82.1782% | 84.1584% |
| 0.03 | 60.396% | 74.2574% | 88.1188% | 90.099% | 90.099% |
| 0.05 | 74.2574% | 87.1287% | 89.1089% | 94.0594% | 94.0594% |
| 0.1 | 79.2079% | 89.1089% | 94.0594% | 96.0396% | 95.0495% |
| 0.2 | 86.1386% | 92.0792% | 94.0594% | 96.0396% | 96.0396% |

Figure 2. Classifier output

When tested in Weka, 16 hidden layer neurons and a learning rate of 0.2 give the largest percentage, 96.0396%. So, of the 101 observed instances, 97 are classified correctly, while the remaining 4 are misclassified.

As a second consideration, the kappa statistic of the experiments is shown in Table 2.

Figure 3. Output weight

Table 2. Kappa Statistic (rows: learning rate LR, columns: hidden layer neurons HL)

| LR \ HL | 2 | 4 | 6 | 8 | 10 |
|---|---|---|---|---|---|
| 0.01 | 0.4481 | 0.5775 | 0.6382 | 0.7625 | 0.7876 |
| 0.03 | 0.4198 | 0.6468 | 0.8419 | 0.8674 | 0.8683 |
| 0.05 | 0.6386 | 0.8257 | 0.8556 | 0.9215 | 0.9215 |
| 0.1 | 0.715 | 0.8542 | 0.9216 | 0.9476 | 0.9346 |
| 0.2 | 0.8147 | 0.8953 | 0.9216 | 0.9477 | 0.9477 |

Table 2 shows that with 16 neurons and a learning rate of 0.2 the neural network reaches a kappa of 0.9477. The kappa statistic takes values between -1 and +1. A kappa value >= 0.75 indicates a very decent result. 0 < kappa < 0.4 indicates a result that is reasonable only to some degree. Kappa = 0 indicates a result equal to random chance. Kappa < 0 indicates a result worse than random chance, which has no meaning. So a kappa value of 0.9477 indicates a very decent result for the correctness of the classification.

As a third consideration, the confusion matrices of the experiments are shown in Table 3.

Table 3. Confusion Matrix

Rows are the actual classes; columns a to g are the classes assigned by the classifier (a = mammal, b = bird, c = reptile, d = fish, e = amphibian, f = insect, g = invertebrate).

HL = 2

| LR | Actual class | a | b | c | d | e | f | g |
|---|---|---|---|---|---|---|---|---|
| 0.01 | a = mammal | 41 | 0 | 0 | 0 | 0 | 0 | 0 |
| 0.01 | b = bird | 0 | 20 | 0 | 0 | 0 | 0 | 0 |
| 0.01 | c = reptile | 0 | 5 | 0 | 0 | 0 | 0 | 0 |
| 0.01 | d = fish | 0 | 13 | 0 | 0 | 0 | 0 | 0 |
| 0.01 | e = amphibian | 0 | 4 | 0 | 0 | 0 | 0 | 0 |
| 0.01 | f = insect | 0 | 8 | 0 | 0 | 0 | 0 | 0 |
| 0.01 | g = invertebrate | 0 | 10 | 0 | 0 | 0 | 0 | 0 |
| 0.03 | a = mammal | 41 | 0 | 0 | 0 | 0 | 0 | 0 |
| 0.03 | b = bird | 0 | 20 | 0 | 0 | 0 | 0 | 0 |
| 0.03 | c = reptile | 3 | 2 | 0 | 0 | 0 | 0 | 0 |
| 0.03 | d = fish | 4 | 9 | 0 | 0 | 0 | 0 | 0 |
| 0.03 | e = amphibian | 2 | 2 | 0 | 0 | 0 | 0 | 0 |
| 0.03 | f = insect | 5 | 3 | 0 | 0 | 0 | 0 | 0 |
| 0.03 | g = invertebrate | 3 | 7 | 0 | 0 | 0 | 0 | 0 |
| 0.05 | a = mammal | 41 | 0 | 0 | 0 | 0 | 0 | 0 |
| 0.05 | b = bird | 0 | 20 | 0 | 0 | 0 | 0 | 0 |
| 0.05 | c = reptile | 1 | 2 | 0 | 1 | 0 | 0 | 1 |
| 0.05 | d = fish | 0 | 0 | 0 | 13 | 0 | 0 | 0 |
| 0.05 | e = amphibian | 2 | 2 | 0 | 0 | 0 | 0 | 0 |
| 0.05 | f = insect | 5 | 2 | 0 | 0 | 0 | 0 | 1 |
| 0.05 | g = invertebrate | 2 | 3 | 0 | 4 | 0 | 0 | 1 |
| 0.1 | a = mammal | 41 | 0 | 0 | 0 | 0 | 0 | 0 |
| 0.1 | b = bird | 0 | 20 | 0 | 0 | 0 | 0 | 0 |
| 0.1 | c = reptile | 1 | 1 | 0 | 3 | 0 | 0 | 0 |
| 0.1 | d = fish | 0 | 0 | 0 | 13 | 0 | 0 | 0 |
| 0.1 | e = amphibian | 2 | 1 | 0 | 0 | 0 | 0 | 1 |
| 0.1 | f = insect | 2 | 2 | 0 | 0 | 0 | 2 | 2 |
| 0.1 | g = invertebrate | 1 | 1 | 0 | 1 | 0 | 3 | 4 |
| 0.2 | a = mammal | 41 | 0 | 0 | 0 | 0 | 0 | 0 |
| 0.2 | b = bird | 0 | 20 | 0 | 0 | 0 | 0 | 0 |
| 0.2 | c = reptile | 0 | 1 | 0 | 1 | 0 | 0 | 3 |
| 0.2 | d = fish | 0 | 0 | 0 | 13 | 0 | 0 | 0 |
| 0.2 | e = amphibian | 1 | 1 | 0 | 1 | 0 | 1 | 0 |
| 0.2 | f = insect | 0 | 0 | 0 | 0 | 0 | 7 | 1 |
| 0.2 | g = invertebrate | 0 | 1 | 0 | 1 | 0 | 2 | 6 |

HL = 4

| LR | Actual class | a | b | c | d | e | f | g |
|---|---|---|---|---|---|---|---|---|
| 0.01 | a = mammal | 41 | 0 | 0 | 0 | 0 | 0 | 0 |
| 0.01 | b = bird | 0 | 20 | 0 | 0 | 0 | 0 | 0 |
| 0.01 | c = reptile | 1 | 4 | 0 | 0 | 0 | 0 | 0 |
| 0.01 | d = fish | 0 | 4 | 0 | 9 | 0 | 0 | 0 |
| 0.01 | e = amphibian | 0 | 0 | 0 | 4 | 0 | 0 | 0 |
| 0.01 | f = insect | 0 | 7 | 0 | 1 | 0 | 0 | 0 |
| 0.01 | g = invertebrate | 1 | 4 | 0 | 5 | 0 | 0 | 0 |
| 0.03 | a = mammal | 41 | 0 | 0 | 0 | 0 | 0 | 0 |
| 0.03 | b = bird | 0 | 20 | 0 | 0 | 0 | 0 | 0 |
| 0.03 | c = reptile | 0 | 1 | 0 | 4 | 0 | 0 | 0 |
| 0.03 | d = fish | 0 | 0 | 0 | 13 | 0 | 0 | 0 |
| 0.03 | e = amphibian | 2 | 0 | 0 | 2 | 0 | 0 | 0 |
| 0.03 | f = insect | 2 | 3 | 0 | 3 | 0 | 0 | 0 |
| 0.03 | g = invertebrate | 1 | 0 | 0 | 8 | 0 | 0 | 1 |
| 0.05 | a = mammal | 41 | 0 | 0 | 0 | 0 | 0 | 0 |
| 0.05 | b = bird | 0 | 20 | 0 | 0 | 0 | 0 | 0 |
| 0.05 | c = reptile | 1 | 1 | 0 | 3 | 0 | 0 | 0 |
| 0.05 | d = fish | 0 | 0 | 0 | 13 | 0 | 0 | 0 |
| 0.05 | e = amphibian | 2 | 0 | 0 | 1 | 0 | 0 | 1 |
| 0.05 | f = insect | 0 | 1 | 0 | 0 | 0 | 5 | 2 |
| 0.05 | g = invertebrate | 1 | 0 | 0 | 0 | 0 | 0 | 9 |
| 0.1 | a = mammal | 41 | 0 | 0 | 0 | 0 | 0 | 0 |
| 0.1 | b = bird | 0 | 20 | 0 | 0 | 0 | 0 | 0 |
| 0.1 | c = reptile | 0 | 1 | 1 | 3 | 0 | 0 | 0 |
| 0.1 | d = fish | 0 | 0 | 0 | 13 | 0 | 0 | 0 |
| 0.1 | e = amphibian | 2 | 0 | 1 | 1 | 0 | 0 | 0 |
| 0.1 | f = insect | 0 | 0 | 0 | 0 | 0 | 6 | 2 |
| 0.1 | g = invertebrate | 0 | 0 | 0 | 0 | 0 | 1 | 9 |
| 0.2 | a = mammal | 41 | 0 | 0 | 0 | 0 | 0 | 0 |
| 0.2 | b = bird | 0 | 20 | 0 | 0 | 0 | 0 | 0 |
| 0.2 | c = reptile | 0 | 1 | 2 | 1 | 1 | 0 | 0 |
| 0.2 | d = fish | 0 | 0 | 0 | 13 | 0 | 0 | 0 |
| 0.2 | e = amphibian | 0 | 0 | 2 | 0 | 1 | 1 | 0 |
| 0.2 | f = insect | 0 | 0 | 0 | 0 | 0 | 7 | 1 |
| 0.2 | g = invertebrate | 0 | 0 | 0 | 0 | 0 | 1 | 9 |

HL = 6

| LR | Actual class | a | b | c | d | e | f | g |
|---|---|---|---|---|---|---|---|---|
| 0.01 | a = mammal | 41 | 0 | 0 | 0 | 0 | 0 | 0 |
| 0.01 | b = bird | 0 | 20 | 0 | 0 | 0 | 0 | 0 |
| 0.01 | c = reptile | 0 | 1 | 0 | 4 | 0 | 0 | 0 |
| 0.01 | d = fish | 0 | 0 | 0 | 13 | 0 | 0 | 0 |
| 0.01 | e = amphibian | 0 | 0 | 0 | 4 | 0 | 0 | 0 |
| 0.01 | f = insect | 0 | 4 | 0 | 4 | 0 | 0 | 0 |
| 0.01 | g = invertebrate | 1 | 0 | 0 | 9 | 0 | 0 | 0 |
| 0.03 | a = mammal | 41 | 0 | 0 | 0 | 0 | 0 | 0 |
| 0.03 | b = bird | 0 | 20 | 0 | 0 | 0 | 0 | 0 |
| 0.03 | c = reptile | 0 | 3 | 0 | 2 | 0 | 0 | 0 |
| 0.03 | d = fish | 0 | 0 | 0 | 13 | 0 | 0 | 0 |
| 0.03 | e = amphibian | 0 | 0 | 1 | 2 | 0 | 0 | 1 |
| 0.03 | f = insect | 0 | 0 | 0 | 0 | 0 | 8 | 0 |
| 0.03 | g = invertebrate | 0 | 0 | 0 | 0 | 0 | 3 | 7 |
| 0.05 | a = mammal | 41 | 0 | 0 | 0 | 0 | 0 | 0 |
| 0.05 | b = bird | 0 | 20 | 0 | 0 | 0 | 0 | 0 |
| 0.05 | c = reptile | 0 | 1 | 0 | 3 | 1 | 0 | 0 |
| 0.05 | d = fish | 0 | 0 | 0 | 13 | 0 | 0 | 0 |
| 0.05 | e = amphibian | 0 | 0 | 2 | 2 | 0 | 0 | 0 |
| 0.05 | f = insect | 0 | 0 | 0 | 0 | 0 | 8 | 0 |
| 0.05 | g = invertebrate | 0 | 0 | 0 | 0 | 0 | 2 | 8 |
| 0.1 | a = mammal | 41 | 0 | 0 | 0 | 0 | 0 | 0 |
| 0.1 | b = bird | 0 | 20 | 0 | 0 | 0 | 0 | 0 |
| 0.1 | c = reptile | 0 | 1 | 3 | 1 | 0 | 0 | 0 |
| 0.1 | d = fish | 0 | 0 | 0 | 13 | 0 | 0 | 0 |
| 0.1 | e = amphibian | 0 | 0 | 2 | 0 | 2 | 0 | 0 |
| 0.1 | f = insect | 0 | 0 | 0 | 0 | 0 | 8 | 0 |
| 0.1 | g = invertebrate | 0 | 0 | 0 | 0 | 0 | 2 | 8 |
| 0.2 | a = mammal | 41 | 0 | 0 | 0 | 0 | 0 | 0 |
| 0.2 | b = bird | 0 | 20 | 0 | 0 | 0 | 0 | 0 |
| 0.2 | c = reptile | 0 | 1 | 2 | 1 | 1 | 0 | 0 |
| 0.2 | d = fish | 0 | 0 | 0 | 13 | 0 | 0 | 0 |
| 0.2 | e = amphibian | 0 | 0 | 1 | 0 | 3 | 0 | 0 |
| 0.2 | f = insect | 0 | 0 | 0 | 0 | 0 | 8 | 0 |
| 0.2 | g = invertebrate | 0 | 0 | 0 | 0 | 0 | 2 | 8 |

HL = 8

| LR | Actual class | a | b | c | d | e | f | g |
|---|---|---|---|---|---|---|---|---|
| 0.01 | a = mammal | 41 | 0 | 0 | 0 | 0 | 0 | 0 |
| 0.01 | b = bird | 0 | 20 | 0 | 0 | 0 | 0 | 0 |
| 0.01 | c = reptile | 0 | 2 | 0 | 2 | 0 | 0 | 1 |
| 0.01 | d = fish | 0 | 0 | 0 | 13 | 0 | 0 | 0 |
| 0.01 | e = amphibian | 0 | 0 | 0 | 1 | 0 | 0 | 3 |
| 0.01 | f = insect | 0 | 0 | 0 | 0 | 0 | 0 | 8 |
| 0.01 | g = invertebrate | 0 | 0 | 0 | 0 | 0 | 1 | 9 |
| 0.03 | a = mammal | 41 | 0 | 0 | 0 | 0 | 0 | 0 |
| 0.03 | b = bird | 0 | 20 | 0 | 0 | 0 | 0 | 0 |
| 0.03 | c = reptile | 0 | 2 | 0 | 2 | 1 | 0 | 0 |
| 0.03 | d = fish | 0 | 0 | 0 | 13 | 0 | 0 | 0 |
| 0.03 | e = amphibian | 2 | 0 | 1 | 1 | 0 | 0 | 0 |
| 0.03 | f = insect | 0 | 0 | 0 | 0 | 0 | 8 | 0 |
| 0.03 | g = invertebrate | 0 | 0 | 0 | 0 | 0 | 1 | 9 |
| 0.05 | a = mammal | 41 | 0 | 0 | 0 | 0 | 0 | 0 |
| 0.05 | b = bird | 0 | 20 | 0 | 0 | 0 | 0 | 0 |
| 0.05 | c = reptile | 0 | 1 | 3 | 1 | 0 | 0 | 0 |
| 0.05 | d = fish | 0 | 0 | 0 | 13 | 0 | 0 | 0 |
| 0.05 | e = amphibian | 0 | 0 | 3 | 0 | 1 | 0 | 0 |
| 0.05 | f = insect | 0 | 0 | 0 | 0 | 0 | 8 | 0 |
| 0.05 | g = invertebrate | 0 | 0 | 0 | 0 | 0 | 1 | 9 |
| 0.1 | a = mammal | 41 | 0 | 0 | 0 | 0 | 0 | 0 |
| 0.1 | b = bird | 0 | 20 | 0 | 0 | 0 | 0 | 0 |
| 0.1 | c = reptile | 0 | 1 | 3 | 1 | 0 | 0 | 0 |
| 0.1 | d = fish | 0 | 0 | 0 | 13 | 0 | 0 | 0 |
| 0.1 | e = amphibian | 0 | 0 | 1 | 0 | 3 | 0 | 0 |
| 0.1 | f = insect | 0 | 0 | 0 | 0 | 0 | 8 | 0 |
| 0.1 | g = invertebrate | 0 | 1 | 0 | 0 | 0 | 0 | 9 |
| 0.2 | a = mammal | 41 | 0 | 0 | 0 | 0 | 0 | 0 |
| 0.2 | b = bird | 0 | 20 | 0 | 0 | 0 | 0 | 0 |
| 0.2 | c = reptile | 0 | 1 | 3 | 1 | 0 | 0 | 0 |
| 0.2 | d = fish | 0 | 0 | 0 | 13 | 0 | 0 | 0 |
| 0.2 | e = amphibian | 0 | 0 | 1 | 0 | 3 | 0 | 0 |
| 0.2 | f = insect | 0 | 0 | 0 | 0 | 0 | 8 | 0 |
| 0.2 | g = invertebrate | 0 | 0 | 0 | 0 | 0 | 1 | 9 |

HL = 10

| LR | Actual class | a | b | c | d | e | f | g |
|---|---|---|---|---|---|---|---|---|
| 0.01 | a = mammal | 41 | 0 | 0 | 0 | 0 | 0 | 0 |
| 0.01 | b = bird | 0 | 20 | 0 | 0 | 0 | 0 | 0 |
| 0.01 | c = reptile | 1 | 1 | 0 | 2 | 0 | 1 | 0 |
| 0.01 | d = fish | 0 | 0 | 0 | 13 | 0 | 0 | 0 |
| 0.01 | e = amphibian | 0 | 0 | 0 | 1 | 0 | 2 | 1 |
| 0.01 | f = insect | 0 | 0 | 0 | 0 | 0 | 2 | 6 |
| 0.01 | g = invertebrate | 1 | 0 | 0 | 0 | 0 | 0 | 9 |
| 0.03 | a = mammal | 41 | 0 | 0 | 0 | 0 | 0 | 0 |
| 0.03 | b = bird | 0 | 20 | 0 | 0 | 0 | 0 | 0 |
| 0.03 | c = reptile | 0 | 1 | 0 | 2 | 1 | 0 | 1 |
| 0.03 | d = fish | 0 | 0 | 0 | 13 | 0 | 0 | 0 |
| 0.03 | e = amphibian | 1 | 0 | 2 | 0 | 0 | 1 | 0 |
| 0.03 | f = insect | 0 | 0 | 0 | 0 | 0 | 8 | 0 |
| 0.03 | g = invertebrate | 0 | 0 | 0 | 0 | 0 | 1 | 9 |
| 0.05 | a = mammal | 41 | 0 | 0 | 0 | 0 | 0 | 0 |
| 0.05 | b = bird | 0 | 20 | 0 | 0 | 0 | 0 | 0 |
| 0.05 | c = reptile | 0 | 1 | 3 | 1 | 0 | 0 | 0 |
| 0.05 | d = fish | 0 | 0 | 0 | 13 | 0 | 0 | 0 |
| 0.05 | e = amphibian | 0 | 0 | 3 | 0 | 1 | 0 | 0 |
| 0.05 | f = insect | 0 | 0 | 0 | 0 | 0 | 8 | 0 |
| 0.05 | g = invertebrate | 0 | 0 | 0 | 0 | 0 | 1 | 9 |
| 0.1 | a = mammal | 41 | 0 | 0 | 0 | 0 | 0 | 0 |
| 0.1 | b = bird | 0 | 20 | 0 | 0 | 0 | 0 | 0 |
| 0.1 | c = reptile | 0 | 1 | 3 | 1 | 0 | 0 | 0 |
| 0.1 | d = fish | 0 | 0 | 0 | 13 | 0 | 0 | 0 |
| 0.1 | e = amphibian | 0 | 0 | 1 | 0 | 3 | 0 | 0 |
| 0.1 | f = insect | 0 | 0 | 0 | 0 | 0 | 7 | 1 |
| 0.1 | g = invertebrate | 0 | 0 | 0 | 0 | 0 | 1 | 9 |
| 0.2 | a = mammal | 41 | 0 | 0 | 0 | 0 | 0 | 0 |
| 0.2 | b = bird | 0 | 20 | 0 | 0 | 0 | 0 | 0 |
| 0.2 | c = reptile | 0 | 1 | 3 | 1 | 0 | 0 | 0 |
| 0.2 | d = fish | 0 | 0 | 0 | 13 | 0 | 0 | 0 |
| 0.2 | e = amphibian | 0 | 0 | 1 | 0 | 3 | 0 | 0 |
| 0.2 | f = insect | 0 | 0 | 0 | 0 | 0 | 8 | 0 |
| 0.2 | g = invertebrate | 0 | 0 | 0 | 0 | 0 | 1 | 9 |

Table 3 shows that with 16 hidden layer neurons and a learning rate of 0.2 the confusion matrix gives a very clear view of how the classification errors are distributed. In the confusion matrix, the number of correct classifications is given by the entries on the diagonal of the matrix.

REFERENCES

Nafisah, Sari, Puspitodjati Sulistyo, and Wulandari Sri, “Pengklasifikasian jenis tanah menggunakan jaringan saraf tiruan dengan menggunakan algoritma backpropagation”, 2008.
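As a worked check on the three considerations above, the percentage of correctly classified instances and the kappa statistic can both be recomputed from a single confusion matrix. The sketch below is a plain-Python illustration rather than Weka's own code. It uses the standard Cohen's kappa formula (observed agreement against the agreement expected by chance from the row and column totals) and takes as input one of the Table 3 matrices whose diagonal sums to 97. The function names are chosen here for illustration only.

```python
def accuracy(matrix):
    """Fraction of instances on the diagonal (correctly classified)."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total


def kappa(matrix):
    """Cohen's kappa: (observed - expected) / (1 - expected)."""
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    observed = accuracy(matrix)
    row_totals = [sum(row) for row in matrix]                       # actual class counts
    col_totals = [sum(matrix[i][j] for i in range(n)) for j in range(n)]  # predicted counts
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / (total * total)
    return (observed - expected) / (1.0 - expected)


# Rows: actual class, columns: predicted class, in the order
# mammal, bird, reptile, fish, amphibian, insect, invertebrate.
best = [
    [41, 0, 0, 0, 0, 0, 0],
    [0, 20, 0, 0, 0, 0, 0],
    [0, 1, 3, 1, 0, 0, 0],
    [0, 0, 0, 13, 0, 0, 0],
    [0, 0, 1, 0, 3, 0, 0],
    [0, 0, 0, 0, 0, 8, 0],
    [0, 0, 0, 0, 0, 1, 9],
]

acc = accuracy(best)
kap = kappa(best)
print(f"{acc * 100:.4f}% {kap:.4f}")  # prints "96.0396% 0.9477"
```

The diagonal holds 97 of the 101 instances, which reproduces the 96.0396% and 0.9477 values reported for the best configuration.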