Artifact 5d

Go Eun Chung

Ngongose Bilikha

Nigina Boltaeva

ENG 686

Dr. Glen Poupore

04.21.2015

Assessing Interactive Speaking Mini-Research

Section 1- Purpose and Description of the Participants and the Setting: Why, Who, Where, and When?

This is a speaking assessment that was conducted at Minnesota State University, Mankato, MN on April 6, 2015. The purpose of this assessment was to make us more aware of the various criteria that are available for assessing speaking. Speaking assessment is a complicated task, and most teachers find it challenging to do properly. When done properly, however, it can give students and teachers vital information about the progress being made and the areas that need further development. We thought that the best way to assess someone's oral ability is to assess their speaking directly by focusing on their fluency, accuracy, syntax, vocabulary, and capacity to explicitly respond to a specific listening task.

Our participants were a male Nepalese student (19) and a female Saudi Arabian student (28). Both are enrolled in ESL 102, a class geared towards helping students prepare for academic life in the US. We were aware of our participants' limited proficiency level before we conducted the assessment. The assessment took place in AH room 209 on April 6th between 5:10 and 5:30 pm. We allotted 30 minutes for this activity but found that it took a little longer to complete the entire assessment.

Section 2- Presentation of the Two Speaking Assessment Instruments

Rating Scale

Student A

Student B

Rubric

| Score | Fluency | Use of Descriptive and Vivid Vocabulary | Pronunciation | Grammar | Task Accomplishment |
|---|---|---|---|---|---|
| 1 | Speech is fragmentary and makes interaction virtually impossible. | Vocabulary limitations so extreme as to make conversation virtually impossible. | Pronunciation problem so severe as to make speech virtually unintelligible and wrong intonation patterns prevalent. | More than 11 errors in grammar and word order that make speech virtually unintelligible. | The response is not connected to the task. |
| 2 | Frequent hesitations interfere with interaction and comprehensibility. | Misuses words, has very limited vocabulary, comprehension quite difficult. | Very hard to understand because of pronunciation problems. Must frequently repeat in order to make him/herself understood, and most intonation patterns not consistent. | Makes 9-10 grammatical and/or word order errors throughout the speech that greatly interferes with meaning. | The response is very limited in content and/or coherence or is only minimally connected to the task. |
| 3 | Hesitations sometimes interfere with interaction. | Frequently uses wrong words, conversation somewhat limited because of inadequate vocabulary. | Pronunciation problems necessitate concentration on the part of the listener and occasionally lead to misunderstanding. Several inconsistent intonation patterns. | Makes 6-8 grammatical and/or word order errors throughout the speech that begin to interfere with meaning. | The response addresses the task, but development of the idea is limited. |
| 4 | Speaks with a certain degree of ease and confidence. Hesitations, although present, do not interfere with interaction. | Occasionally uses inappropriate terms and/or must rephrase ideas because of lexical inadequacies. | Most of the time intelligible, although the listener is conscious of different and inappropriate intonation patterns. | Makes 3-5 grammatical and/or word order errors throughout the speech that do not interfere with meaning. | The response addresses the task appropriately, but may fall short of being fully developed. |
| 5 | Speaks with natural ease and confidence when interacting. | Uses all vocabulary with full flexibility and precision. | Always intelligible and intonation patterns are appropriate. | Makes 1-2 grammatical and/or word order errors throughout the speech. | The response fulfills the demands of the task, with at most minor lapses in completeness. |

Comments:

Total: /25

The first assessment tool (rating scale) seems fairly easy to create and implement. It has a limited number of set criteria, degrees of frequency, and skills. It also states the criteria or expectations of the assignment, but it does so in very vague or general terms. For example, the categories used are fluency, complexity, and accuracy, and underneath these general categories are subcategories such as interaction, syntax, vocabulary, and inaccurate grammar. The rating scale does identify those crucial components and categories, but it lacks precision. For example, it does not explicitly state the expectations or requirements for a score of 5 on fluency or a 5 on accuracy. How will the student know what the expectations are? Assessing each student's performance is also challenging for the teacher because it is hard to compare student scores accurately. How would we define inaccurate grammar as opposed to accurate grammar? The scale does not give us a precise answer, and the problem grows if we have multiple raters. We do not see how multiple raters could salvage this situation, since there are no set expectations. As far as washback and authenticity are concerned, we do not believe that the rating scale is very beneficial. How do we adjust instruction based on this kind of tool? Its results are not explicit or precise enough, so all we have are general results for these categories.

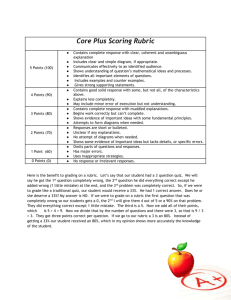

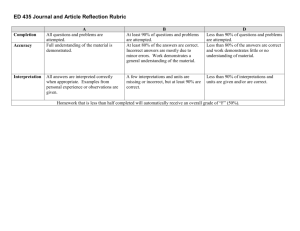

On the other hand, the analytic rubric that we created seems able to solve the issues that lingered after the rating scale assessment. Our analytic rubric has several components or expectations that cater to this assessment activity: fluency, use of descriptive and vivid vocabulary, pronunciation, grammar, task accomplishment, and a comments section. We not only identified each of these components but also identified what a 5 looks like at each competence level. For example, in order to score a 5 on the fluency aspect of the rubric, the student has to speak with natural ease and have few or no pauses or hesitations when interacting. The analytic rubric not only has specific outcomes or expectations, but it can also systematically distinguish one student's performance from another's. For example, we can easily tell which specific areas have been mastered and, at the same time, figure out the areas that still need improvement. We initially had a section that included a vocabulary component, but we decided to make this section more precise by adding a component for descriptive and vivid words. This was added in order to measure the student’s ability to communicate events in a detailed, precise, and coherent manner. We also decided to expand the grammatical and syntactic piece in order to clearly distinguish the students who were able to communicate fluently from those who were emerging learners. We also specified the number of errors made within the grammatical category in order to make the scoring more reliable. Finally, we added a comments section to give the rater a chance to include more extensive feedback on aspects that are not covered by the rubric.

Section 3- Assessment Results / Comparing the Two Raters / Comparing the Two Instruments

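Table 1: Provided Rating Scale

| ESL Learners | Teacher Raters | Fluency a) | Fluency b) | Complexity a) | Complexity b) | Accuracy a) | Accuracy b) |
|---|---|---|---|---|---|---|---|
| Laxman | Ngongose | 3 | 3 | 4 | 4 | 4 | 4 |
| Laxman | Nigina | 4 | 4 | 4 | 4 | 3 | 4 |
| Laxman | Go Eun | 1 | 2 | 1 | 1 | 1 | 2 |
| Omra | Ngongose | 3 | 3 | 3 | 3 | 4 | 4 |
| Omra | Nigina | 4 | 5 | 4 | 3 | 4 | 4 |
| Omra | Go Eun | 2 | 2 | 2 | 2 | 2 | 2 |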

Table 2: Created Rubric

| ESL Learners | Teacher Raters | Fluency | Use of Descriptive and Vivid Vocabulary | Pronunciation | Grammar | Task Completion | Raters’ Feedback Comments to Students |
|---|---|---|---|---|---|---|---|
| Laxman | Ngongose | 4 | 4 | 4 | 3 | 4 | Student seemed to respond to questions accurately and explicitly, but was not audible most of the time. |
| Laxman | Nigina | 3 | 4 | 3 | 4 | 4 | The student was trying to negotiate meaning; however his voice was very low. He is trying to think critically and find the reasons for change, though it is not a requirement for the task. |
| Laxman | Go Eun | 2 | 1 | 2 | 1 | 3 | He finished the task in the end, but his talk was hard to understand. There were mumbling, hesitation, and simple words only. |
| Omra | Ngongose | 4 | 5 | 3 | 5 | 4 | Student seemed to respond to most questions, but paused frequently, as she negotiated meaning with her partner. |
| Omra | Nigina | 3 | 3 | 4 | 4 | 4 | The student is good at negotiating meaning, but has difficulties with choosing specific terms. She is trying to think critically and find the reasons for change, though it is not a requirement for the task. |
| Omra | Go Eun | 2 | 2 | 2 | 2 | 3 | She spoke English better than the other, but still there were simple words and sentences only. |

When we compared the scores we gave the students on the rating scale, we found that Nigina and Ngongose had evaluated the students differently. On hesitation, Ngongose thought that both Laxman and Omra were quite hesitant and gave them both 3 out of 5, whereas Nigina gave them 4 out of 5, indicating that she found them less hesitant. Ngongose gave both students 3 out of 5 for interaction. Nigina thought that Omra was very good at interaction because she negotiated meaning well, so she gave Omra 5 and Laxman 4. Both Ngongose and Nigina agreed that Laxman’s syntax was quite complex, and both gave him 4 out of 5. In contrast to Nigina’s 4 out of 5 for Omra’s syntax, Ngongose thought that Omra’s syntax was less complex and gave her 3 out of 5. Both Nigina and Ngongose agreed that Omra’s use of vocabulary was more limited than Laxman’s. Moreover, both raters agreed that Omra’s pronunciation and grammar were quite developed, and both gave her 4 out of 5 in each category. Nigina thought that Laxman’s accent was heavier than Omra’s and gave him 3 out of 5. However, Ngongose and Nigina agreed that Laxman’s use of grammar was quite impressive, and both gave him 4 out of 5.
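The comparison of the two raters on the rating scale can also be tallied criterion by criterion. The following is a minimal sketch, not part of our original analysis, that lists the criteria on which Ngongose and Nigina gave identical scores. The scores are copied from Table 1. The criterion labels follow the discussion above (Fluency a/b as hesitation and interaction, Complexity a/b as syntax and vocabulary, Accuracy a/b as pronunciation and grammar), and the names `RATING_SCALE` and `exact_agreement` are illustrative choices of ours.

```python
# Rating-scale criteria in Table 1 column order (labels taken from the discussion).
CRITERIA = ["hesitation", "interaction", "syntax",
            "vocabulary", "pronunciation", "grammar"]

# Scores copied from Table 1 for the two raters compared in the text.
RATING_SCALE = {
    "Laxman": {"Ngongose": [3, 3, 4, 4, 4, 4], "Nigina": [4, 4, 4, 4, 3, 4]},
    "Omra": {"Ngongose": [3, 3, 3, 3, 4, 4], "Nigina": [4, 5, 4, 3, 4, 4]},
}


def exact_agreement(scores_a, scores_b):
    """Return the criteria on which two raters gave the same score."""
    return [c for c, a, b in zip(CRITERIA, scores_a, scores_b) if a == b]


for student, by_rater in RATING_SCALE.items():
    agreed = exact_agreement(by_rater["Ngongose"], by_rater["Nigina"])
    print(f"{student}: same score on {len(agreed)} of {len(CRITERIA)} "
          f"criteria ({', '.join(agreed)})")
```

With only two students and six criteria, a tally like this is descriptive only; it is not a formal reliability statistic.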

When scoring the students with the rubric, Ngongose thought that both Laxman’s and Omra’s hesitations did not interfere with interaction, while Nigina thought that their hesitations sometimes interfered with interaction. Both Ngongose and Nigina thought that Laxman used descriptive and vivid vocabulary quite successfully. However, Nigina thought that Omra was not always able to use appropriate vocabulary and gave her 3 out of 5, while Ngongose gave Omra 5 out of 5 in the same category. Ngongose found Laxman’s pronunciation more comprehensible, while Nigina thought that Omra had a lighter accent. Nigina thought that neither student’s grammar errors interfered with meaning, while Ngongose thought that Laxman’s grammar errors were starting to interfere with meaning and gave him 3 out of 5. Both raters agreed that both students’ responses addressed the task appropriately but might fall short of being fully developed.
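Because the rubric is scored out of 25, each rater's five criterion scores can be summed into a total and the raters compared criterion by criterion. The sketch below is only an illustration under that assumption, not a calculation we carried out in the original study. The scores are copied from Table 2, and the names `RUBRIC`, `total`, and `score_gaps` are hypothetical.

```python
# Rubric criteria in Table 2 column order.
RUBRIC_CRITERIA = ["fluency", "vocabulary", "pronunciation", "grammar", "task"]

# Scores copied from Table 2 (each criterion is scored 1-5, total out of 25).
RUBRIC = {
    "Laxman": {"Ngongose": [4, 4, 4, 3, 4],
               "Nigina": [3, 4, 3, 4, 4],
               "Go Eun": [2, 1, 2, 1, 3]},
    "Omra": {"Ngongose": [4, 5, 3, 5, 4],
             "Nigina": [3, 3, 4, 4, 4],
             "Go Eun": [2, 2, 2, 2, 3]},
}


def total(scores):
    """Sum the five criterion scores into a total out of 25."""
    return sum(scores)


def score_gaps(scores_a, scores_b):
    """Return the absolute score difference between two raters per criterion."""
    return {c: abs(a - b)
            for c, a, b in zip(RUBRIC_CRITERIA, scores_a, scores_b)}


for student, by_rater in RUBRIC.items():
    for rater, scores in by_rater.items():
        print(f"{student} / {rater}: {total(scores)}/25")
    gaps = score_gaps(by_rater["Ngongose"], by_rater["Nigina"])
    print(f"{student}: Ngongose vs. Nigina gaps per criterion: {gaps}")
```

A per-criterion gap makes it easy to see where the raters diverge most, which is where the rubric descriptors may need tightening.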

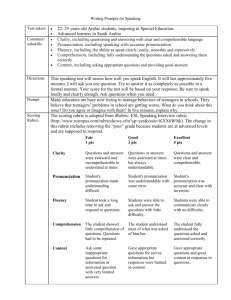

We believe that both the rating scale and the rubric have strengths and weaknesses. The rating scale is quite practical because it does not take much time to create, which gives the teacher more time to spend on actual grading. Its validity is also quite high, as fluency, complexity, and accuracy each have subsections that ensure different targeted aspects of the students’ responses are assessed. Beyond that, we could not find any other strengths. The rating scale is not reliable because the expectations for each category are not precise. It is hard to score the students based only on a scale when there is no explanation of what each number means, and this makes the rater less reliable. The authenticity of the rating scale is also low: it contains very little descriptive language, which is why it may be difficult for some raters to follow. The rating scale may not provide much positive washback because the students may not be able to translate the numbers into an explanation of how they performed on the task.

The rubric, for its part, is also high in practicality. It may take longer to design than the rating scale. However, once it is ready to use, it is very practical because the expectations are clear and precise, so it takes less time and effort to grade each student. This also speaks to rater reliability, which is higher because, as we mentioned earlier, clear and precise expectations for each section make scoring less subjective. The rubric provides higher washback because when students receive the rubric before the task, they know what they will be graded on, and after receiving the grade, it is easier for them to interpret it.

After analyzing the scores that we gave to the students separately, we thought that the last criterion of the rubric, namely task accomplishment, needs more precision. When we were scoring the students, it was harder to decide on a score for this criterion. We thought that the explanation of each score for task accomplishment could have been elaborated more, and that we could add parts that reflect the requirements of the task. We are also thinking of changing the name of that criterion. Some of the options could be "Task Completion" or "Task Performance".

Section 4- What did you learn by doing the assignment?

Assessing our ESL students by utilizing the rating scale and the analytic rubric has been very beneficial to each and every one of us in terms of practicality, washback, reliability, and validity.

We also learned how to adjust the content of our rubric based on the expectations of our assignment.

Our group consisted of three members, and this assignment required that we, as multiple raters, come to a general consensus on the final results. Each rater therefore did not just assign a numeric score but also had to justify the score and then be open to suggestions from the other team members. Although some of us had strong opinions about the scores, we learned to work cooperatively as we negotiated meaning and came to a final score for each participant.

We also learned how to adjust our rubric to suit our teaching expectations, objectives, and goals. When we utilized the rating scale, we found that it was easy to use but lacked precision, so we decided to eliminate its ambiguity by creating a rubric that covered the different components of the listening task. When we discovered that the rubric lacked a task completion section, we decided to add that to the rubric as well. We all learned that even our assessment tools can be custom made, so in a way we are actually in charge of assessment in our classrooms. We learned that although the analytic rubric can be challenging to create, it ends up being more authentic than the rating scale. We thought the rating scale does have its place, but when teaching core content listening skills, it is best to utilize rubrics. For future reference, if one of us is placed in an ESL speaking class and is required to assess a particular speaking task, we will have an idea of what the major criteria should be based on the nature of the task and assignment.

The most important thing we all learned is that speaking can actually be assessed. Prior to this task, our assessment was based only on students' ability to form complete sentences. Now we can examine syntax, vocabulary, and the other crucial components that we implemented here. We can also design our own speaking assessments based on the interests of the learners.