January 10 - Department of Psychology


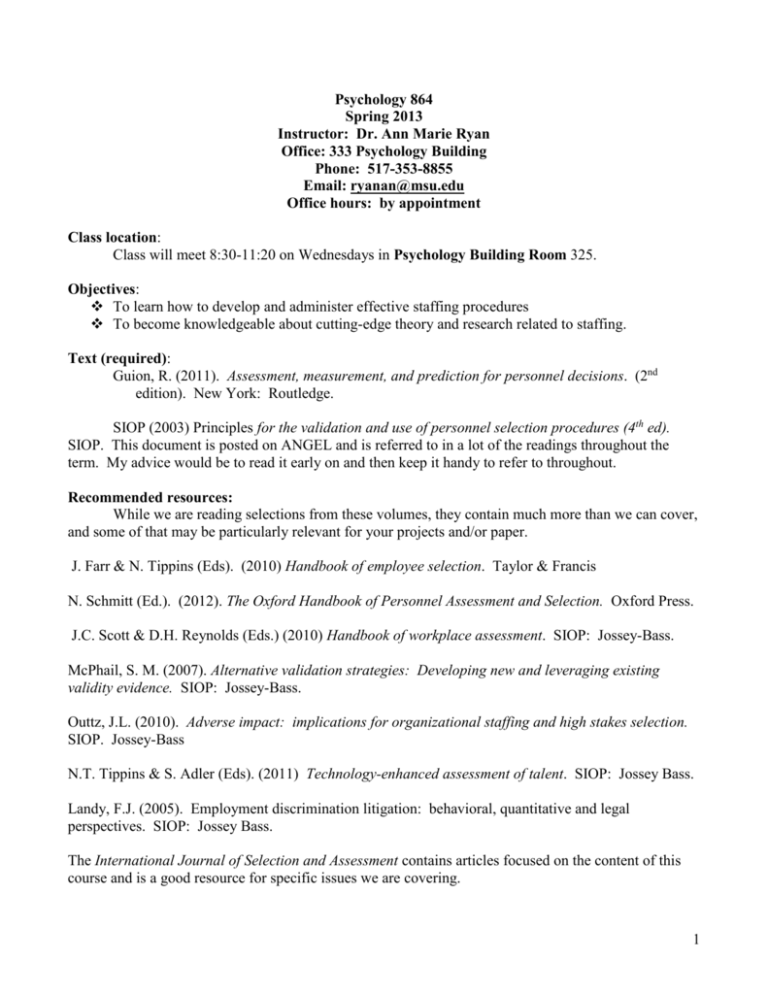

Psychology 864, Spring 2013

- Instructor: Dr. Ann Marie Ryan
- Office: 333 Psychology Building
- Phone: 517-353-8855
- Email: ryanan@msu.edu
- Office hours: by appointment
- Class location: Class meets 8:30-11:20 on Wednesdays in Psychology Building Room 325.

Objectives:

- To learn how to develop and administer effective staffing procedures.
- To become knowledgeable about cutting-edge theory and research related to staffing.

Text (required):

- Guion, R. (2011). Assessment, measurement, and prediction for personnel decisions (2nd ed.). New York: Routledge.
- SIOP (2003). Principles for the validation and use of personnel selection procedures (4th ed.). SIOP. This document is posted on ANGEL and is referred to in many of the readings throughout the term. My advice is to read it early and then keep it handy as a reference for the rest of the term.

Recommended resources: We are reading selections from these volumes, but they contain much more than we can cover, and some of that material may be particularly relevant for your projects and/or paper.

- J. Farr & N. Tippins (Eds.). (2010). Handbook of employee selection. Taylor & Francis.
- N. Schmitt (Ed.). (2012). The Oxford Handbook of Personnel Assessment and Selection. Oxford Press.
- J.C. Scott & D.H. Reynolds (Eds.). (2010). Handbook of workplace assessment. SIOP: Jossey-Bass.
- McPhail, S. M. (Ed.). (2007). Alternative validation strategies: Developing new and leveraging existing validity evidence. SIOP: Jossey-Bass.
- Outtz, J.L. (Ed.). (2010). Adverse impact: Implications for organizational staffing and high stakes selection. SIOP: Jossey-Bass.
- N.T. Tippins & S. Adler (Eds.). (2011). Technology-enhanced assessment of talent. SIOP: Jossey-Bass.
- Landy, F.J. (2005). Employment discrimination litigation: Behavioral, quantitative, and legal perspectives. SIOP: Jossey-Bass.

The International Journal of Selection and Assessment publishes articles focused on the content of this course and is a good resource for the specific issues we are covering.
Website: I rely on the ANGEL website to communicate with students about the class. All required readings are posted there (for your use only), along with additional resources of interest. Grades and class announcements are also posted on ANGEL.

Grading criteria:

- Participation (class attendance, preparation, discussion): 15%
- Validation data analysis: 5%
- Reflection assignments: 15%
- Research proposal: 25%
- Applied project (deliverable and organizational feedback): 40%

Information on specific assignments can be found on ANGEL under the Lessons tab, in the Assignments folder.

Attendance policy: Graduate courses involve a great deal of in-class exchange of ideas and discussion of readings. Missing class is therefore problematic and will be considered in awarding participation points. Absences will be excused only in accordance with the attendance policy on the University Ombudsman's website (see www.msu.edu/unit/ombud).

Academic integrity: Article 2.3.3 of the Academic Freedom Report states that “The student shares with the faculty the responsibility for maintaining the integrity of scholarship, grades, and professional standards.” In addition, the Psychology Department adheres to the policies on academic honesty specified in General Student Regulations 1.0, Protection of Scholarship and Grades; the all-University Policy on Integrity of Scholarship and Grades; and Ordinance 17.00, Examinations (see the MSU website). Therefore, unless specifically directed otherwise, you are expected to complete all course assignments, including homework, papers, and exams, without assistance from any source. You are expected to develop original work for this course; you may not submit work you completed for another course to satisfy the requirements of this course. Students who violate MSU rules will receive a failing grade in this course.
Consistent with MSU’s efforts to enhance student learning, foster honesty, and maintain integrity in our academic processes, instructors may use a tool called Turnitin to compare a student’s work with multiple sources. The tool compares each student’s work with an extensive database of prior publications and papers, providing links to possible matches and a ‘similarity score’. The tool does not determine whether plagiarism has occurred; instead, the instructor must make a complete assessment and judge the originality of the student’s work. Research proposal submissions in this course will be checked using this tool. Submit papers to the Turnitin Dropboxes without identifying information in the paper (e.g., name or student number); the system automatically shows this information to the course faculty when they view the submission, but Turnitin does not retain it. Student submissions are retained only in the MSU repository hosted by Turnitin. This means that you cannot turn in the same paper in multiple courses, but you can submit the paper to an outlet outside of MSU (e.g., a journal, a grant proposal, a conference) and it will not be flagged as previously submitted.

If you require special accommodations with regard to a disability, please discuss them with me.

Commercialization of lecture notes and university-provided course materials is not permitted in this course.

Readings: Guion Chapters 5 and 6 are a review of 818. Guion Chapter 13 should also be a review if you have taken 818 and 860. Review these chapters on your own, but feel free to talk with me about any questions on their content; basic measurement principles are foundational to selection research, so it is important that you understand these concepts.

January 9: Course introduction

- Guion Ch. 1
- Bangerter, A., Roulin, N. & König, C.J. (2012). Personnel selection as a signaling game. Journal of Applied Psychology, 97, 719-738.

RANKING OF SITES DUE JAN 11

January 16: Job analysis

- Guion Ch. 2
- Schippmann, J.S. (2010). Competencies, job analysis, and the next generation of modeling (pp. 197-231). In J.C. Scott & D.H. Reynolds (Eds.), Handbook of workplace assessment. SIOP: Jossey-Bass.
- Sanchez, J. & Levine, E. (2012). The rise and fall of job analysis and the future of work analysis. Annual Review of Psychology, 63, 397.
- Campion, M. A., Fink, A. A., Ruggeberg, B. J., Carr, L., Phillips, G. M., & Odman, R. B. (2011). Doing competencies well: Best practices in competency modeling. Personnel Psychology, 64(1), 225-262.
- Schumacher, S., Kleinmann, M., & König, C. J. (2012). Job analysis by incumbents and laypersons: Does item decomposition and the use of less complex items make the ratings of both groups more accurate? Journal of Personnel Psychology, 11(2), 69-76.

ASSIGNMENT: DISCUSSION BOARD ON ORGANIZATIONAL ASSESSMENT; SET UP FIRST MEETING WITH SITE

January 23: Criteria

- Guion Ch. 3
- Cleveland, J.N. & Colella, A. (2010). Criterion validity and criterion deficiency: What we measure well and what we ignore. In J. Farr & N. Tippins (Eds.), Handbook of employee selection (pp. 551-567). Taylor & Francis.
- Borman, W. C. & Smith, T. N. (2012). The use of objective measures as criteria in I/O psychology. In N. Schmitt (Ed.), The Oxford Handbook of Personnel Assessment and Selection. Oxford Press.
- Murphy, K. (2010). How a broader definition of the criterion domain changes our thinking about adverse impact. In J.L. Outtz (Ed.), Adverse impact: Implications for organizational staffing and high stakes selection.
- O’Boyle, E. & Aguinis, H. (2012). The best and the rest: Revisiting the norm of normality of individual performance. Personnel Psychology, 65, 79-119.

ASSIGNMENT: JOBS AND CRITERIA REFLECTION

January 30: Validation basics

- Guion Ch. 7
- Sackett, P.R., Putka, D.J. & McCloy, R.A. (2012). The concept of validity and the process of validation. In N. Schmitt (Ed.), The Oxford Handbook of Personnel Assessment and Selection. Oxford Press.
- Jeanneret, P.R. & Zedeck, S. (2010). Professional guidelines/standards. In J. Farr & N. Tippins (Eds.), Handbook of employee selection. Taylor & Francis.
- Focal article: Murphy, K. R. (2009). Content validation is useful for many things, but validity isn’t one of them. Industrial and Organizational Psychology: Perspectives on Science and Practice, 2, 465-468.
- Commentaries (pp. 469-516); READ AT LEAST 3 OF THE COMMENTARIES:
  - Guion, R. M. (2009). Was this trip necessary?
  - Thornton, G. C. (2009). Evidence of content matching is evidence of validity.
  - Putka, D. J., McCloy, R. A., Ingerick, M., O’Shea, P. G., & Whetzel, D. L. (2009). Links among bases of validation evidence: Absence of empirical evidence is not evidence of absence.
  - Tonowski, R. F. (2009). “Content” still belongs with “validity.”
  - Binning, J. F., & LeBreton, J. M. (2009). Coherent conceptualization is useful for many things, and understanding validity is one of them.
  - Highhouse, S. (2009). Tests don’t measure jobs: The meaning of content validation.
  - Goldstein, I. L., & Zedeck, S. (2009). Content validity and Murphy’s angst.
  - Kim, B. H., & Oswald, F. L. (2009). Clarifying the concept and context of content validation.
  - Davison, H. K., & Bing, M. N. (2009). Content validity does matter for the criterion-related validity of personality tests.
  - Spengler, M., Gelleri, P., & Schuler, H. (2009). The construct behind content validity: New approaches to a better understanding.
  - O’Neill, T. A., Goffin, R. D., & Tett, R. P. (2009). Content validation is fundamental for optimizing the criterion validity of personality tests.
  - Tan, J. A. (2009). Babies, bathwater, and validity: Content validity is useful in the validation process.
- Reply: Murphy, K. R. (2009). Is content-related evidence useful in validating selection tests? (pp. 517-526).

ASSIGNMENT: OUTLINE FOR DELIVERABLE

February 6: Further issues in validation

- Guion Ch. 12, pp. 417-419
- Gibson, W. M., & Caplinger, J. A. (2007). Transportation of validation results. In S. M. McPhail (Ed.), Alternative validation strategies: Developing new and leveraging existing validity evidence (pp. 29-81). John Wiley.
- McDaniel, M. A. (2007). Validity generalization as a test validation approach. In S. M. McPhail (Ed.), Alternative validation strategies: Developing new and leveraging existing validity evidence (pp. 159-180). John Wiley.
- Newman, D. A., Jacobs, R. R., & Bartram, D. (2007). Choosing the best method for local validity estimation: Relative accuracy of meta-analysis versus a local study versus Bayes-analysis. Journal of Applied Psychology, 92, 1394-1413.
- Focal article: Johnson, J.W., Steel, P., Scherbaum, C.A., Hoffman, C.C., Jeanneret, P.R. & Foster, J. (2010). Validation is like motor oil: Synthetic is better. Industrial and Organizational Psychology, 3, 305-328.
- Commentaries (pp. 329-370); READ AT LEAST 3:
  - Oswald & Hough. Validity in a jiffy: How synthetic validation contributes to personnel selection.
  - Bartram, Warr & Brown. Let’s focus on two-stage alignment not just on overall performance.
  - Russell. Better at what?
  - Schmidt & Oh. Can synthetic validity methods achieve discriminant validity?
  - Harvey. Motor oil or snake oil: Synthetic validity is a tool not a panacea.
  - Murphy. Synthetic validity: A great idea whose time never came.
  - Vancouver. Improving I-O science through synthetic validity.
  - Hollweg. Synthetic oil is better for whom?
  - McCloy, Putka & Gibby. Developing an online synthetic validation tool.
- Response: Steel et al. At sea with synthetic validity (pp. 371-383).

ASSIGNMENT: PAPER TOPIC

February 13: U.S. legal issues, reducing adverse impact, bias analysis

- Guion Ch. 4 and 9

ADVERSE IMPACT/LEGAL READINGS

- Outtz, J.L. & Newman, D.A. (2010). A theory of adverse impact. In J.L. Outtz (Ed.), Adverse impact: Implications for organizational staffing and high stakes selection (pp. 53-94).
- Landy, F.J., Gutman, A. & Outtz, J.L. (2010). A sampler of legal principles in employment selection. In J. Farr & N. Tippins (Eds.), Handbook of employee selection. Taylor & Francis.
- Ryan, A.M. & Powers, C. (2012). Workplace diversity. In N. Schmitt (Ed.), The Oxford Handbook of Personnel Assessment and Selection. Oxford Press.
- Focal article: McDaniel, M.A., Kepes, S. & Banks, G.C. (2011). The Uniform Guidelines are a detriment to the field of personnel selection. Industrial and Organizational Psychology, 4, 494-514.
- Commentaries; READ AT LEAST 3:
  - Dunleavy et al. Guidelines, Principles, Standards, and the Courts: Why can’t they all just get along?
  - Tonowski. The Uniform Guidelines and personnel selection: Identify and fix the right problem.
  - Outtz. Abolishing the Uniform Guidelines: Be careful what you wish for.
  - Barrett, Miguel & Doverspike. The Uniform Guidelines: Better the devil you know.
  - Sharf. Equal employment versus equal opportunity: A naked political agenda covered by a scientific fig leaf.
  - Reynolds & Knapp. SIOP as advocate: Developing a platform for action.
  - Sackett. The Uniform Guidelines is not a scientific document: Implications for expert testimony.
  - Brink & Crenshaw. The affronting of the Uniform Guidelines: From propaganda to discourse.
  - Mead & Morris. About babies and bathwater: Retaining core principles of the Uniform Guidelines.
  - Jacobs, Deckert & Silva. Adverse impact is far more complicated than the Uniform Guidelines indicate.
  - Hanges, Aiken, & Salmon. The devil is in the details (and the context): A call for care in discussing the Uniform Guidelines.
- Reply: McDaniel, Kepes & Banks. Encouraging debate on the Uniform Guidelines and the disparate impact theory of discrimination.

TEST BIAS READINGS

- Kuncel, N.R. & Klieger, D.M. (2012). Predictive bias in work and educational settings. In J. Farr & N. Tippins (Eds.), Handbook of employee selection. Taylor & Francis.
- Aguinis, H., Culpepper, S.A. & Pierce, C.A. (2010). Revival of test bias research in preemployment testing. Journal of Applied Psychology, 95, 648-680.
- Focal article: Meade, A. W., & Tonidandel, S. (2010). Not seeing clearly with Cleary: What test bias analyses do and do not tell us. Industrial and Organizational Psychology: Perspectives on Science and Practice, 3, 192-205.
- Commentaries; READ AT LEAST 3:
  - Cronshaw & Chung-Yan. The need for even further clarity about Cleary.
  - Woehr. What test bias analyses do and don’t tell us: Let’s not assume we have a can opener.
  - Sackett & Bobko. Conceptual and technical issues in conducting and interpreting differential prediction analyses.
  - Putka, Trippe & Vasilopoulos. Diagnosing when evidence of bias is problematic: Methodological cookbooks and the unfortunate complexities of reality.
  - Borneman. Using meta-analysis to increase power in differential prediction analyses.
  - Colarelli, Han & Yang. Biased against whom? The problems of “group” definition and membership in test bias analyses.
- Response: Meade & Tonidandel. Final thoughts on measurement bias and differential prediction.

February 20: Score use/decision making/basic test development

- Guion Ch. 8; Ch. 11, pp. 394-398 only; Ch. 12, pp. 411-417 and 421-447; and Ch. 15, pp. 521-534 only
- Kehoe, J. (2010). Cut scores and adverse impact. In J.L. Outtz (Ed.), Adverse impact: Implications for organizational staffing and high stakes selection (pp. 289-322).
- Hattrup, K. (2012). Using composite predictors in selection. In N. Schmitt (Ed.), The Oxford Handbook of Personnel Assessment and Selection. Oxford Press.
- Johnson, J.W. & Oswald, F. (2010). Test administration and the use of test scores. In J. Farr & N. Tippins (Eds.), Handbook of employee selection. Taylor & Francis.
- Mueller, L., Norris, D., & Oppler, S. (2007). Implementation based on alternative validation procedures: Ranking, cut scores, banding, and compensatory models. In S. M. McPhail (Ed.), Alternative validation strategies: Developing new and leveraging existing validity evidence (pp. 349-405). John Wiley.
- De Corte, W., Sackett, P.R. & Lievens, F. (2011). Designing Pareto-optimal selection systems: Formalizing the decisions required for selection system development. JAP, 96, 907-926.

ASSIGNMENT: VALIDATION ANALYSIS

February 27: Ability and job knowledge predictors/technology in testing/adaptive testing

- Guion Ch. 10, pp. 335-352 only; Guion Ch. 11, pp. 375-389 and 391-394

ABILITIES

- Ones, D.S., Dilchert, S. & Viswesvaran, C. (2012). Cognitive abilities. In N. Schmitt (Ed.), The Oxford Handbook of Personnel Assessment and Selection. Oxford Press.
- Berry, C. M., Clark, M.A. & McClure, T.K. (2011). Racial/ethnic differences in the criterion-related validity of cognitive ability tests: A qualitative and quantitative review. Journal of Applied Psychology, 96, 881-906.
- Focal article: Scherbaum, Goldstein, Yusko, & Ryan (2012). Intelligence 2.0: Reestablishing a research program on g in I-O psychology. Industrial and Organizational Psychology, 5, 128-148.
- Commentaries; READ AT LEAST 3:
  - Ackerman, P.L. & Beier, M.E. The problem is in the definition: g and intelligence in I-O psychology.
  - Lievens, F. & Reeve, C.L. Where I-O psychology should really (re)start its investigation of intelligence constructs and their measurement.
  - Brouwers, S.A. & Van de Vijver, F.J.R. Intelligence 2.0 in I-O psychology: Revival or contextualization?
  - Lang, J.W.B. & Bliese, P.D. I-O psychology and progressive research programs on intelligence.
  - Cucina, J.M., Gast, I.F. & Su, C. g 2.0: Factor analysis, filed findings, facts, fashionable topics and future steps.
  - Oswald, F.L. & Hough, L. I-O 2.0 from intelligence 1.5: Staying (just) behind the cutting edge of intelligence theories.
  - Helms, J.E. A legacy of eugenics underlies racial-group comparisons in intelligence testing.
  - Weinhardt, J.M. & Vancouver, J.B. Intelligent interventions.
  - Huffcutt, A.I., Goebl, A.P. & Culbertson, S.S. The engine is important, but the driver is essential: The case for executive functioning.
  - Postlewaite, B.E., Giluk, T.L. & Schmidt, F.L. I-O psychologists and intelligence research: Active, aware, and applied.
- Response: I-O psychology and intelligence: A starting point established.

TECHNOLOGY

- Scott, J.C. & Lezotte, D. V. (2012). Web-based assessments. In N. Schmitt (Ed.), The Oxford Handbook of Personnel Assessment and Selection. Oxford Press.
- McCloy, R.A. & Gibby, R.E. (2011). Computerized adaptive testing. In N.T. Tippins & S. Adler (Eds.), Technology-enhanced assessment of talent (pp. 153-189). SIOP: Jossey-Bass.
- Fetzer, M. & Kantrowitz, T. (2011). Implementing computer adaptive tests. In N.T. Tippins & S. Adler (Eds.), Technology-enhanced assessment of talent (pp. 380-393). SIOP: Jossey-Bass.
- Focal article: Tippins, N.T. (2009). Internet alternatives to traditional proctored testing: Where are we now? Industrial and Organizational Psychology: Perspectives on Science and Practice, 2, 2-10.
- Commentaries (pp. 11-68); READ AT LEAST THREE:
  - Bartram, D. The International Test Commission guidelines on computer-based and internet-delivered testing.
  - Pearlman, K. Unproctored internet testing: Practical, legal, and ethical concerns.
  - Hense, R., Golden, J. H., & Burnett, J. Making the case for unproctored internet testing: Do the rewards outweigh the risks?
  - Kaminski, K. A., & Hemingway, M. A. To proctor or not to proctor? Balancing business needs with validity in online assessment.
  - Weiner, J. A., & Morrison, J. D. Unproctored online testing: Environmental conditions and validity.
  - Foster, D. Secure, online, high-stakes testing: Science fiction or business reality?
  - Burke, E. Preserving the integrity of online testing.
  - Arthur, W., Glaze, R. M., Villado, A. J., & Taylor, J. E. Unproctored internet-based tests of cognitive ability and personality: Magnitude of cheating and response distortion.
  - Drasgow, F., Nye, C. D., Guo, J., & Tay, L. Cheating on proctored tests: The other side of the unproctored debate.
  - Do, B. Research on unproctored internet testing.
  - Reynolds, D. H., Wasko, L. E., Sinar, E. F., Raymark, P. H., & Jones, J. A. UIT or not UIT? That is not the only question.
  - Beaty, J. C., Dawson, C. R., Fallaw, S. S., & Kantrowitz, T. M. Recovering the scientist-practitioner model: How IOs should respond to unproctored internet testing.
  - Gibby, R. E., Ispas, D., McCloy, R. A., & Biga, A. Moving beyond the challenges to make unproctored internet testing a reality.
- Reply (pp. 69-76): Tippins, N. T. Where is the unproctored internet testing train headed now?

March 6: SPRING BREAK

March 13: Issues in use of personality measures, interests, integrity tests, and emotional intelligence

- Guion Ch. 11, pp. 401-409 only

PERSONALITY

- Hough, L. & Dilchert, S. (2010). Personality: Its measurement and validity for employee selection. In J. Farr & N. Tippins (Eds.), Handbook of employee selection. Taylor & Francis.

INTERESTS

- Van Iddekinge, C.H., Roth, P.L., Putka, D. & Lanivich, S.E. (2011). Are you interested? A meta-analysis of relations between vocational interests and employee performance and turnover. JAP, 96, 1167-1194.

INTEGRITY

- Target article on integrity: Van Iddekinge, C.H., Roth, P.L., Raymark, P.H. & Odle-Dusseau (2012). The criterion-related validity of integrity tests: An updated meta-analysis. JAP, 97, 499-531.
- Commentaries; READ ALL:
  - Harris, W.G., Jones, J.W., Klion, R., Arnold, D.W., Camara, W. & Cunningham, M.R. (2012). Test publishers’ perspective on “an updated meta-analysis”: Comment on Van Iddekinge, Roth, Raymark, and Odle-Dusseau (2012). JAP, 97, 531-53
  - Ones, D.S., Viswesvaran, C. & Schmidt, F.L. (2012). Integrity tests predict counterproductive work behaviors and job performance well: Comment on Van Iddekinge, Roth, Raymark, and Odle-Dusseau (2012). JAP, 97, 537-542.
- Reply: Van Iddekinge, C.H., Roth, P.L., Raymark, P.H. & Odle-Dusseau (2012). The critical role of the research question, inclusion criteria, and transparency in meta-analyses of integrity test research: A reply to Harris et al. (2012) and Ones, Viswesvaran, and Schmidt (2012). JAP, 97, 543-549.
- Perspective: Sackett, P.R. & Schmitt, N. (2012). On reconciling conflicting meta-analytic findings regarding integrity test validity. JAP, 97, 550-556.

EMOTIONAL INTELLIGENCE

- Focal article: Cherniss, C. (2010). Emotional intelligence: Towards clarification of a concept. Industrial and Organizational Psychology: Perspectives on Science and Practice, 3, 110-126.
- Commentaries; READ AT LEAST 3:
  - Côté, S. Taking the “intelligence” in emotional intelligence seriously.
  - Gignac, G.E. On a nomenclature for emotional intelligence research.
  - Petrides, K.V. Trait emotional intelligence theory.
  - Roberts, R.D., Matthews, G. & Zeidner, M. Emotional intelligence: Muddling through theory and measurement.
  - Jordan, P.J., Dasborough, M.T., Daus, C.S. & Ashkanasy, N.M. A call to context.
  - Van Rooy, D.L., Whitman, D. & Viswesvaran, C. Emotional intelligence: Additional questions still unanswered.
  - Harms, P.D. & Credé, M. Remaining issues in emotional intelligence research: Construct overlap, method artifacts, and lack of incremental validity.
  - Newman, D.A., Joseph, D.L. & MacCann, C. Emotional intelligence and job performance: The importance of emotion regulation and emotional labor context.
  - Antonakis, J. & Dietz, J. Emotional intelligence: On definitions, neuroscience, and marshmallows.
  - Kaplan, S., Cortina, J. & Ruark, G.A. Oops…we did it again: Industrial-organizational’s focus on emotional intelligence instead of on its relationships to work outcomes.
  - Riggio, R.E. Before emotional intelligence: Research on nonverbal, emotional, and social competences.
- Reply: Cherniss, C. Emotional intelligence: New insights and further clarifications.

ASSIGNMENT: ASSESSMENT REFLECTION

March 20: Issues in use of interviews and biodata

- Guion Ch. 14

INTERVIEWS

- Dipboye, R.L., Macan, T. & Shahani-Denning, C. (2012). The selection interview from the interviewer and applicant perspectives: Can’t have one without the other. In N. Schmitt (Ed.), The Oxford Handbook of Personnel Assessment and Selection. Oxford Press.
- Melchers, K.G., Lienhardt, N., von Aarburg, M. & Kleinmann, M. (2011). Is more structure really better? A comparison of frame-of-reference training and descriptively anchored rating scales to improve interviewers’ rating quality. Personnel Psychology, 64, 53-87.
- Swider, B.W., Barrick, M.R., Harris, T.B. & Stoverink, A.C. (2011). Managing and creating an image in the interview: The role of interviewee initial impressions. JAP, 96, 1275-1288.
- Barrick, M.R., Swider, B.W. & Stewart, G. (2010). Initial evaluations in the interview: Relationships with subsequent interviewer evaluations and employment offers. JAP, 95, 1163-1172.

BIODATA

- Mumford, M.D., Barrett, J.D. & Hester, K.S. (2012). Background data: Use of experiential knowledge in personnel selection. In N. Schmitt (Ed.), The Oxford Handbook of Personnel Assessment and Selection. Oxford Press.
- Levashina, J., Morgeson, F.P. & Campion, M.A. (2012). Tell me some more: Exploring how verbal ability and item verifiability influence responses to biodata questions in a high-stakes selection context. Personnel Psychology, 65, 359-383.

ASSIGNMENT: PROPOSAL DUE

March 27: Issues in use of SJTs, work samples, simulations and assessment centers, individual assessment

- Guion Ch. 11, pp. 389-391 only; pp. 534-552
- Lievens, F. & De Soete, B. (2012). Simulations. In N. Schmitt (Ed.), The Oxford Handbook of Personnel Assessment and Selection. Oxford Press, 383.
- Lievens, F. & Sackett, P.R. (2012). The validity of interpersonal skills assessment via situational judgement tests for predicting academic success and job performance. JAP, 97, 460-468.
- Roth, P.L., Buster, M.A. & Bobko, P. (2011). Updating the trainability tests literature on Black-White subgroup differences and reconsidering criterion-related validity. JAP, 96, 34-45.
- Hoffman, B.J., Melchers, K.G., Blair, C.A., Kleinmann, M. & Ladd, R.T. (2011). Exercises and dimensions are the currency of assessment centers. Personnel Psychology, 64, 351-395.
- Focal article: Silzer, R. & Jeanneret, R. (2011). Individual psychological assessment: A practice and science in search of common ground. Industrial and Organizational Psychology, 4, 270-296.
- Commentaries; READ AT LEAST 3:
  - Hazucha, J.F., Ramesh, A., Goff, M., Crandell, S., Gerstner, C., Sloan, E., Bank, J. & van Katwyk, P. Individual psychological assessment: The poster child of blended science and practice.
  - Kuncel, N.R. & Highhouse, S. Complex predictions and assessor mystique.
  - Laser, S.A. An iconoclast’s view of individual psychological assessment: What it is and what it is not.
  - Klehe, U.-C. Scientific principles versus practical realities: Insights from organizational theory to individual psychological assessment.
  - Lowman, R.L. The question of integration and criteria in individual psychological assessment.
  - Morris, S.B., Kwaske, I.H. & Daisley, R.R. The validity of individual psychological assessments.
  - Tippins, N.T. What’s wrong with content-oriented validity studies for individual psychological assessments?
  - Miguel, R. & Miklos, S. Individual executive assessment: Sufficient science, standards, and principles.
  - Moses, J. Individual psychological assessment: You pay for what you get.
  - Doverspike, D. Lessons from the classroom: Teaching an individual psychological assessment course.
- Response: Jeanneret, R. & Silzer, R. Individual psychological assessment: A core competency for industrial-organizational psychology.

ASSIGNMENT: Tentative draft of report (optional). Schedule presentation practices (as needed) for the weeks of April 16 and April 23.

April 3: Cases week; NO CLASS MEETING THIS WEEK

- Sellman, W.S., Born, D.H., Strickland, W.J. & Ross, J.J. (2010). Selection and classification in the US military. In J. Farr & N. Tippins (Eds.), Handbook of employee selection. Taylor & Francis.
- Jacobs, R. & Denning, D.L. (2010). Public sector employment. In J. Farr & N. Tippins (Eds.), Handbook of employee selection. Taylor & Francis.
- Malamut, A., van Rooy, D.L. & Davis, V.A. (2011). Bridging the digital divide across a global business: Development of a technology-enabled selection system for low-literacy applicants. In N.T. Tippins & S. Adler (Eds.), Technology-enhanced assessment of talent. SIOP: Jossey-Bass.
- Grubb, A.D. (2011). Promotional assessment at the FBI: How the search for a high-tech solution led to a high-fidelity low-tech simulation. In N.T. Tippins & S. Adler (Eds.), Technology-enhanced assessment of talent. SIOP: Jossey-Bass.
- Hense, R. & Janovics, J. (2011). Case study of technology-enhanced assessment centers. In N.T. Tippins & S. Adler (Eds.), Technology-enhanced assessment of talent. SIOP: Jossey-Bass.
- Cucina, J.M., Busciglio, H.H., Thomas, P.H., Callen, N.F., Walker, D.D. & Schoepfer, R.J.G. (2011). Video-based testing at U.S. Customs and Border Protection. In N.T. Tippins & S. Adler (Eds.), Technology-enhanced assessment of talent. SIOP: Jossey-Bass.

ASSIGNMENT: CASES REFLECTION

April 10: Recruitment

- Ryan, A.M. & Delany, T. (2010). Attracting job candidates to organizations. In J. Farr & N. Tippins (Eds.), Handbook of employee selection. Taylor & Francis.
- Breaugh, J.A. (2012). Employee recruitment: Current knowledge and suggestions for future research. In N. Schmitt (Ed.), The Oxford Handbook of Personnel Assessment and Selection. Oxford Press.
- Earnest, D.R., Allen, D.G. & Landis, R.S. (2011). Mechanisms linking realistic job previews with turnover: A meta-analytic path analysis. Personnel Psychology, 64, 865-898.
- Uggerslev, K.L., Fassina, N.E. & Kraichy, D. (2012). Recruiting through the stages: A meta-analytic test of predictors of applicant attraction at different stages of the recruiting process. Personnel Psychology, 65, 597-660.
- Griepentrog, B.K., Harold, C.M., Holtz, B.C., Klimoski, R.J. & Marsh, S.M. (2012). Integrating social identity and the theory of planned behavior: Predicting withdrawal from an organizational recruitment process. Personnel Psychology, 65, 723-753.
- Reynolds, D. H., & Weiner, J. A. (2009). Ch. 5. Designing online recruiting and screening websites. In Online recruiting and selection: Innovations in talent acquisition (pp. 69-90). Wiley-Blackwell.

ASSIGNMENT: RECRUITMENT REFLECTION

April 17: Selection system administration, applicant perceptions, utility, and marketing selection systems

- Tippins, N.T. (2012). Implementation issues in employee selection testing. In N. Schmitt (Ed.), The Oxford Handbook of Personnel Assessment and Selection. Oxford Press.
- Kehoe, J., Brown, S. & Hoffman, C.C. (2012). The life cycle of successful selection programs. In N. Schmitt (Ed.), The Oxford Handbook of Personnel Assessment and Selection. Oxford Press.
- Cascio, W.F. & Fogli, L. (2010). The business value of employee selection. In J. Farr & N. Tippins (Eds.), Handbook of employee selection. Taylor & Francis.
- Gilliland, S.W. & Steiner, D.D. (2012). Applicant reactions to testing and selection. In N. Schmitt (Ed.), The Oxford Handbook of Personnel Assessment and Selection. Oxford Press.
- Sturman, M.C. (2012). Employee value: Combining utility analysis with strategic human resource management research to yield strong theory. In N. Schmitt (Ed.), The Oxford Handbook of Personnel Assessment and Selection. Oxford Press.
- Boudreau, J.W. (2012). “Retooling” evidence-based staffing: Extending the validation paradigm using management mental models. In N. Schmitt (Ed.), The Oxford Handbook of Personnel Assessment and Selection. Oxford Press.
- Focal article: Highhouse, S. (2008). Stubborn reliance on intuition and subjectivity in employee selection. Industrial and Organizational Psychology: Perspectives on Science and Practice, 1, 333-342.
- Commentaries (pp. 343-372); READ AT LEAST 3:
  - Kuncel, N. R. Some new (and old) suggestions for improving personnel selection.
  - Colarelli, S. M., & Thompson, M. Stubborn reliance on human nature in employee selection: Statistical decision aids are evolutionarily novel.
  - Klimoski, R., & Jones, R. G. Intuiting the selection context.
  - Choragwicka, B., & Janta, B. Why is it so hard to apply professional selection methods in business practice?
  - Martin, S. L. Managers also over rely on tests.
  - Phillips, J. M., & Gully, S. M. The role of perceptions versus reality in managers’ choice of selection decision aids.
  - Fisher, C. D. Why don’t they learn?
  - O’Brien, J. Interviewer resistance to structure.
  - Mullins, M. E., & Rogers, C. Reliance on intuition and faculty hiring.
  - Thayer, P. W. That’s not the only problem.
- Reply (pp. 373-376): Highhouse, S. Facts are stubborn things.

ASSIGNMENT: ADMINISTRATION REFLECTION

April 24: Globalization/ethics/future of staffing/project review and reflection

- Guion pp. 353-374 only
- Lefkowitz, J. & Lowman, R.L. (2010). Ethics of employee selection. In J. Farr & N. Tippins (Eds.), Handbook of employee selection. Taylor & Francis.
- Ryan, A.M. & Tippins, N.T. (2010). Global applications of assessment. In J.C. Scott & D.H. Reynolds (Eds.), Handbook of workplace assessment. SIOP: Jossey-Bass.
- Sackett et al. (2010). Perspectives from twenty-two countries on the legal environment for selection. In J. Farr & N. Tippins (Eds.), Handbook of employee selection. Taylor & Francis.

ASSIGNMENT: FINAL REPORT

April 30: Semester wrap-up; scheduled time is 7:45-9:45

ASSIGNMENT: FINAL REFLECTION

Milestones/assignments due

- Jan 11: Rankings of site choices
- Jan 16: Question ideas for first organizational meetings; schedule 1st meeting for this week if possible
- Jan 23: Jobs and criteria reflection
- Jan 30: Detailed outline of your deliverable
- Feb 6: Paper topic
- Feb 20: Validation data analysis
- March 13: Assessment exploration and reflection
- March 20: Proposal due
- March 27: Tentative draft of report (optional)
- April 3: Case reflection
- April 10: Recruitment reflection
- April 17: Administration reflection
- Weeks of April 16 and April 23: Presentation practices (as needed)
- April 24: Final report
- April 30: Final reflections