16_Malave_compiled


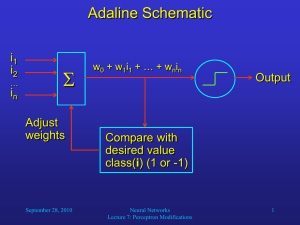

AY1. Character recognition is hard (for machines) because:
a. characters are man-made as opposed to natural objects
b. there is a lot of variability in the way each character is written
c. humans have purpose-built character detectors; machines do not
d. all of the above
e. none of the above

AY2. Linear threshold units are ______ real neurons in the brain:
a. nothing like
b. an exact replica of
c. inspired by
d. none of the above

AY3. What is a useful property of the perceptron?
a. the perceptron can learn to "program itself"
b. the perceptron can produce arbitrarily shaped boundaries that separate different classes of objects
c. the perceptron can solve XOR problems
d. the perceptron can be built via different media: chemical circuits, electrical circuits, digital computers
e. a & d

AY5. T/F: The backpropagation algorithm is a way to do credit assignment: attributing classification error to different weights in a neural network according to how much each contributed to an output error.
a. True
b. False

AY6. What does the success of neural networks in learning different tasks suggest about human cognitive development?
a. Babies are hard-wired to fear spiders
b. Babies are born with intricate and detailed innate knowledge about human languages
c. Babies are born with an internal representation of faces
d. Babies have all the cognitive skills of adults; they just lack motor coordination
e. None of the above

LS 5. When a perceptron is categorizing a series of items and it makes an error, what happens?
a. It decreases the error by moving the boundary toward classifying the incorrect item correctly
b. It starts the categorization process again from the beginning, keeps running until by chance no errors are made, and then uses that category boundary in the future
c. It skips that item and categorizes the rest
d. It uses all of the above strategies to correct errors
e. None of the above strategies are used by perceptrons to correct errors

KC2. The perceptron is
a. A simple form of a neural network
b. Used for classification
c. Able to learn linear decision boundaries for categorizing inputs
d. Both a and b

DM3. True or False: A neural network can be trained to learn what sounds to produce in response to an input of written words.
a. True
b. False

JS 1. Linear Threshold Units are similar to neurons in which of the following ways?
a. They summate inputs.
b. If inputs surpass a certain value, the unit activates. If they don’t, it remains at rest.
c. They rely on the flow of ions to relay signals.
d. a & b
e. a & c

JS 2. Both Dr. Cottrell and Mr. Malave discussed the use of a “hidden layer” in neural networks. How does this hidden layer make the neural network more powerful?
a. Reconfigures the computer’s hardware in order to adapt it to a task.
b. Helps the neural network to capture more complex and subtle statistical patterns in the input data.
c. Facilitates machine learning by making it more efficient.
d. Calculates whether a solution is possible within a finite number of steps, and then decides whether to continue the process or not.
e. None of the above.

JS 3. Which of the following is an example of an “Exclusive Or” statement?
a. For this animal to leave you alone, your height must be greater than 2m or less than 0.4m.
b. To avoid crashing after traversing this ramp, you must be travelling between 50 and 70 mph.
c. For these pants to fit, you must have waist size < 32 inches or leg length > 32 inches.
d. In order for Tom to adopt a dog, he must have owned his house for two consecutive years or owned a dog for one full year.
e. All of the above.

WC1. What types of things can we do with a decision boundary generated by a perceptron?
a. Create Venn diagrams to categorize the data.
b. Divide different categories of data by creating a threshold.
c. Separate data points that are mostly overlapping.
d. Create backpropagation through the perceptron.
e. Make a line of best fit for each category of data.

WC2. What does the Perceptron Convergence Theorem say?
a. If there is a line that can perfectly separate the data into categories, the perceptron will find it in a finite number of steps.
b. The perceptron outputs 0's and 1's based on whether the data is significant or not.
c. The data input to the perceptron always converges onto a line.
d. The perceptron will continue to cycle indefinitely if it finds a line to divide the data.
e. The perceptron analyzes input by dividing data into a grid-like pattern.

WC3. Which of the following is/are evidence that a machine can learn?
a. Machines can do very complicated math.
b. Machines can recognize patterns like categories or characters.
c. Machines can recognize speech.
d. Both a and b.
e. Both b and c.

WC4. Which of the following terms best describes adjusting a line on a graph when a dot is on the wrong side of the line, as discussed in Vicente Malave’s lecture?
a. Learning machine.
b. Random dot paradigm.
c. Error correction procedure.
d. Unit rotation.
e. Line rotation procedure.

SD4: How can neural networks be augmented to learn more complicated problems (e.g., the XOR problem)?
a. Greater memory capacity
b. Additional output units
c. More hidden units
d. Increased processing power
e. None of the above (redundant)

SD5: What helps a neural network to learn a configuration of weights that more correctly classifies input data?
a. Programmers continue to adjust the algorithm
b. Backpropagation algorithm
c. Adding more hidden units
d. The weights are preprogrammed
e. All of the above

BT4. True or False: Exclusive-Or data inputs can always be separated via a linear decision boundary.
a. True
b. False
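
Study aid for the perceptron questions above: the following is a minimal sketch, not taken from the lecture, of a single linear threshold unit trained with an error-correction update. The function name `train_perceptron`, the learning rate, the epoch limit, and the two toy data sets are all assumptions chosen for illustration. It trains the same unit once on AND-style data and once on Exclusive-Or data so the two outcomes can be compared.

```python
def train_perceptron(samples, max_epochs=100, lr=1.0):
    """Train one linear threshold unit on 2-D inputs.

    samples: list of ((x1, x2), label) pairs, label is 0 or 1.
    Returns (weights, bias, converged). The name, defaults, and
    data layout are hypothetical choices for this sketch.
    """
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(max_epochs):
        errors = 0
        for (x1, x2), target in samples:
            # Linear threshold unit: sum the weighted inputs, fire if above 0.
            output = 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0
            delta = target - output
            if delta != 0:
                # Error correction: nudge the boundary toward
                # classifying the misclassified item correctly.
                w[0] += lr * delta * x1
                w[1] += lr * delta * x2
                b += lr * delta
                errors += 1
        if errors == 0:
            # A full pass with no mistakes: the boundary separates the data.
            return w, b, True
    return w, b, False


# Toy data sets (assumed for illustration).
AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_w, and_b, and_converged = train_perceptron(AND_DATA)
xor_w, xor_b, xor_converged = train_perceptron(XOR_DATA)

print("AND converged:", and_converged)
print("XOR converged:", xor_converged)
```

Running the sketch and comparing the two printed lines is one way to check your answers to the convergence and Exclusive-Or questions. Raising `max_epochs` for the XOR case is a useful follow-up experiment.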