

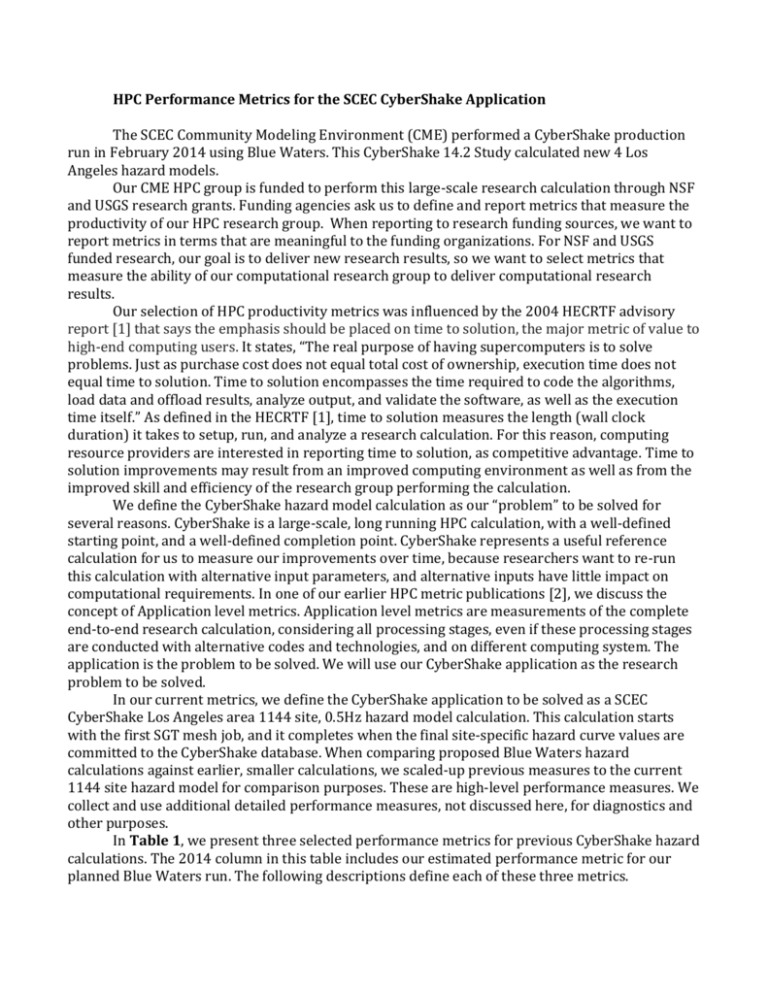

HPC Performance Metrics for the SCEC CyberShake Application

The SCEC Community Modeling Environment (CME) performed a CyberShake production run in February 2014 using Blue Waters. This CyberShake 14.2 Study calculated four new Los Angeles hazard models. Our CME HPC group is funded to perform this large-scale research calculation through NSF and USGS research grants. Funding agencies ask us to define and report metrics that measure the productivity of our HPC research group. When reporting to research funding sources, we want to report metrics in terms that are meaningful to the funding organizations. For NSF- and USGS-funded research, our goal is to deliver new research results, so we select metrics that measure the ability of our computational research group to deliver those results.

Our selection of HPC productivity metrics was influenced by the 2004 HECRTF advisory report [1], which says that emphasis should be placed on time to solution, the major metric of value to high-end computing users. It states, “The real purpose of having supercomputers is to solve problems. Just as purchase cost does not equal total cost of ownership, execution time does not equal time to solution. Time to solution encompasses the time required to code the algorithms, load data and offload results, analyze output, and validate the software, as well as the execution time itself.” As defined in the HECRTF report [1], time to solution measures the wall-clock duration it takes to set up, run, and analyze a research calculation. For this reason, computing resource providers are interested in reporting time to solution as a competitive advantage. Time to solution improvements may result from an improved computing environment as well as from the improved skill and efficiency of the research group performing the calculation.

We define the CyberShake hazard model calculation as our “problem” to be solved for several reasons.
CyberShake is a large-scale, long-running HPC calculation with a well-defined starting point and a well-defined completion point. CyberShake is a useful reference calculation for measuring our improvements over time, because researchers want to re-run this calculation with alternative input parameters, and alternative inputs have little impact on computational requirements.

In one of our earlier HPC metric publications [2], we discuss the concept of application-level metrics. Application-level metrics are measurements of the complete end-to-end research calculation, considering all processing stages, even if these stages are conducted with alternative codes and technologies and on different computing systems. The application is the problem to be solved, and we use our CyberShake application as that research problem. In our current metrics, we define the CyberShake application as a SCEC CyberShake Los Angeles area 1144-site, 0.5 Hz hazard model calculation. This calculation starts with the first SGT mesh job and completes when the final site-specific hazard curve values are committed to the CyberShake database. When comparing proposed Blue Waters hazard calculations against earlier, smaller calculations, we scaled up the earlier measures to the current 1144-site hazard model for comparison purposes.

These are high-level performance measures. We collect and use additional detailed performance measures, not discussed here, for diagnostics and other purposes. In Table 1, we present three selected performance metrics for previous CyberShake hazard calculations. The 2014 column in this table includes our estimated performance metric for our planned Blue Waters run. The following descriptions define each of these three metrics.

1. Application Core Hours (Hours): The application core hours metric provides an indication of the scale of the HPC calculation needed to solve the problem of interest, in our case a CyberShake seismic hazard model calculation. We define application core hours as the sum of all the core hours used to complete the application. For example, 1,000 cores running for 10 hours use 10,000 core hours. A reduction in the number of application core hours required to perform a hazard model calculation is considered a performance improvement. Improvements may come from improved codes, improved computational techniques, more efficient computers, and other sources. For Blue Waters, we convert XE node-hours to core hours by multiplying by 32, and XK node-hours to core hours by multiplying by 16. We count core hours as measured on the machine at the time we ran the calculation. We make no conversion from 2008 core hours to 2013 core hours to account for differences in core performance. Our metrics include only core hours for simplicity. Data I/O is another type of computational metric that might be added in the future.
2. Application Makespan (Hours): The application makespan metric describes how long it takes to run the calculation and solve the problem. We define our application makespan as the total execution duration of our application [3]. We measure it as the wall-clock time (in hours) between the start and the completion of the calculation. This measure includes time waiting in queues and any processing stops and delays. A reduction in the application makespan is considered a performance improvement. Improvements may come from many sources, including improved codes, simplified techniques, use of larger or faster computers, and automation of the calculation.
3. Application Time to Solution (Hours): The application time to solution metric includes the time to set up the problem, the time to run the calculation, and the time to analyze the results. In research terms, we might describe this metric in terms of testing a hypothesis: starting with a hypothesis, how long does it take to set up a computation to test the hypothesis, run the calculation, and analyze the results sufficiently to prove or disprove the hypothesis? The HECRTF definition of time to solution [1] includes defining a computational problem, coding a solution, setting up and checking the calculation on a computing resource, running the full-scale calculation, and analyzing the results. A reduction in time to solution is considered a performance improvement.

Because our application has working codes, we define our application time to solution as the application setup time plus the application makespan plus the application analysis time. Setup time and analysis time are vaguely defined and harder to measure in a repeatable and reliable way. For our CyberShake calculation, we can roughly define the start of the setup time as the time we get access to a new supercomputer. We define CyberShake analysis time to include standard processing such as hazard maps, disaggregation, and averaging-based factorization. Setup time measurements are particularly vulnerable to uncertainties introduced by multitasking within a group. Until we have good measurements, we will use operator estimates for setup and analysis times. Furthermore, we assume the same setup and analysis time for all machines. If these estimates introduce uncertainties too large for your intended use, consider using our makespan metric, which focuses on the execution time of the calculation.
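The three metric definitions above reduce to simple arithmetic. The following Python sketch is illustrative only: the function and variable names are hypothetical and are not part of any CyberShake tooling. It encodes the core-hour conversions and the time to solution sum as stated in the text, then copies in the Table 1 makespan and time to solution values to show the combined setup and analysis estimate that they imply for each column.

```python
# Cores per node stated in the text for converting Blue Waters node-hours.
XE_CORES_PER_NODE = 32
XK_CORES_PER_NODE = 16


def core_hours(cores: int, wallclock_hours: float) -> float:
    """Core hours used by `cores` cores running for `wallclock_hours`."""
    return cores * wallclock_hours


def blue_waters_core_hours(xe_node_hours: float, xk_node_hours: float) -> float:
    """Convert Blue Waters XE and XK node-hours into one core-hour total."""
    return xe_node_hours * XE_CORES_PER_NODE + xk_node_hours * XK_CORES_PER_NODE


def time_to_solution(setup_hours: float, makespan_hours: float,
                     analysis_hours: float) -> float:
    """Time to solution = setup time + makespan + analysis time (all in hours)."""
    return setup_hours + makespan_hours + analysis_hours


# Table 1 values in hours: year -> (makespan, time to solution).
TABLE1_HOURS = {
    2008: (70_165, 72_493),
    2009: (6_191, 8_519),
    2013: (1_467, 3_795),
    2014: (342, 2_670),
}

# Combined setup + analysis estimate implied by each column of Table 1.
setup_plus_analysis = {
    year: tts - makespan for year, (makespan, tts) in TABLE1_HOURS.items()
}

if __name__ == "__main__":
    print(core_hours(1000, 10))   # the worked example from the text
    print(setup_plus_analysis)    # implied setup + analysis hours per year
```

Every column of Table 1 yields the same difference of 2,328 hours, which is consistent with the stated assumption that setup and analysis time are the same for all machines. The table does not say how that total is split between setup and analysis, so the sketch keeps them combined.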
Although we do not have historical measures for setup and analysis time, we include them in our time to solution calculations as a reminder that setup and analysis times are important components of this metric.

Conclusions: We have identified three high-level computational performance metrics and used them to track our ability to complete a CyberShake hazard calculation over the last six years. Our metrics are application core hours, as an indicator of computational efficiency; application makespan, as an indicator of execution efficiency; and application time to solution, as an indicator of computational research efficiency. Several HPC advisory reports [1][4][5] have identified time to solution as the main figure of merit for computational scientists [6]. In these reports, time to solution includes getting a new application up and running (the programming time), waiting for it to run (the execution time), and, finally, interpreting the results (the interpretation time). We use our CyberShake hazard model calculation as the computational problem to be solved, and we report the time to solution for three previous solutions of this problem. Our current measurements show continued improvement each time we calculate a new solution. Our improvements have come from many sources, including access to faster supercomputers, improved computational methods, and increased computational efficiency gained through workflow automation.

Table 1: Measurements from four previous CyberShake hazard calculations, with the values of the two earliest scaled up to the current 1144-site scale of the last and the planned calculations. 2014 results are based on measurements taken after we completed our CyberShake 14.2 Study.

| CyberShake Application Metrics (Hours) | PSHA | 2008 (Mercury, normalized) | 2009 (Ranger, normalized) | 2013 (Blue Waters/Stampede) | 2014 (Blue Waters) |
| --- | --- | --- | --- | --- | --- |
| Application Core Hours | 0.69 | 19,448,000 (CPU) | 16,130,400 (CPU) | 12,200,000 (CPU) | 10,032,704 (CPU+GPU) |
| Application Makespan | 98 seconds | 70,165 | 6,191 | 1,467 | 342 |
| Application Time to Solution | 7 days + 7 days + 1 min | 72,493 | 8,519 | 3,795 | 2,670 |

References:

[1] Federal Plan for High-End Computing: Report of the High-End Computing Revitalization Task Force (HECRTF) (2004). Executive Office of the President, Office of Science and Technology Policy, 2004.

[2] Metrics for heterogeneous scientific workflows: A case study of an earthquake science application (2011). Scott Callaghan, Philip Maechling, Patrick Small, Kevin Milner, Gideon Juve, Thomas H. Jordan, Ewa Deelman, Gaurang Mehta, Karan Vahi, Dan Gunter, Keith Beattie, and Christopher Brooks. International Journal of High Performance Computing Applications, 2011, 25: 274. DOI: 10.1177/1094342011414743.

[3] Scientific Workflow Makespan Reduction Through Cloud Augmented Desktop Grids (2011). Christopher J. Reynolds, Stephen Winter, Gabor Z. Terstyanszky, and Tamas Kiss. Third IEEE International Conference on Cloud Computing Technology and Science, 2011.

[4] The Opportunities and Challenges of Exascale Computing (2010). Summary Report of the Advanced Scientific Computing Advisory Committee (ASCAC) Subcommittee on Exascale Computing, Department of Energy, Office of Science, Fall 2010.

[5] National Research Council. Getting Up to Speed: The Future of Supercomputing. Washington, DC: The National Academies Press, 2004.

[6] Computational Science: Ensuring America’s Competitiveness (2005). The President’s Information Technology Advisory Committee (PITAC).

Figures: CyberShake Los Angeles Region Hazard Model Calculation. Six charts plot Core Hours (Hours), Makespan (Hours), and Time To Solution (Hours) against SCEC Project Year, each metric shown once for 2007-2015 and once at a finer vertical scale for 2008-2015.