
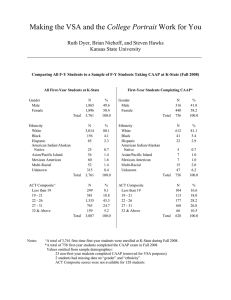


TO: Sandra Stewart, ACT/CAAP
FROM: Debbie Furlong, UW-Green Bay
DATE: August 7, 2009
RE: Research request regarding student “motivation”

Yesterday afternoon in the break out session I referred to work I have done to evaluate the importance of student motivation in low stakes testing. In my work, student effort appears to have as much impact on outcomes as entering skill levels. If I’m right, then the VSA approach will only produce valid interinstitutional comparisons if 1) all institutions are equally adept at motivating students to do their best or 2) the regression model used to predict the learning gains controls, somehow, for effort/motivation as well as entering capabilities.

I believe ACT/CAAP can make two important contributions to the VSA dialogue by analyzing the motivation item included on the CAAP. First, does student response to the CAAP motivation item vary across schools, or are all students equally motivated regardless of the school they attend? I suspect there are large variations between institutions. Second, does motivation matter? By regressing CAAP outcome scores on two variables (ACT score and the motivation item), ACT/CAAP can test whether motivation explains any of the variation in CAAP scores once capabilities are held constant. If motivation does impact outcome, how important is it?

In my work here at UW-Green Bay, motivation or effort appears to play such a significant role that excluding it from the model explaining student gains would lead us to draw many incorrect conclusions. We conduct the College BASE as a required sophomore assessment on about 800 students a year, many of whom started as freshmen and provided ACT scores. Our testing protocol requires students to remain in the testing room for 70 minutes in order to complete the requirement and register for their junior year. C-BASE allows students to take as long as 180 minutes.
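The two-variable regression proposed in this memo for the CAAP (outcome score on ACT score and the motivation item) could be set up roughly as follows. This is only a minimal sketch: the data are synthetic and generated purely for illustration, the 1 to 5 coding of the motivation item is an assumption, and the function name `fit_two_predictor_ols` is hypothetical. It is not ACT's methodology and uses no CAAP or C-BASE records.

```python
import numpy as np


def fit_two_predictor_ols(score, act, motivation):
    """Ordinary least squares for score = b0 + b1*act + b2*motivation."""
    # Design matrix: a column of ones for the intercept, then the two predictors.
    X = np.column_stack([np.ones(len(act)), act, motivation])
    coef, *_ = np.linalg.lstsq(X, score, rcond=None)
    return coef  # [intercept, ACT slope, motivation slope]


# Synthetic data only (assumption): not real test records.
rng = np.random.default_rng(0)
n = 2000
act = rng.normal(23, 3.2, n)                      # entering capability
motivation = rng.integers(1, 6, n).astype(float)  # hypothetical 1-5 item
score = 10.0 * act + 20.0 * motivation + rng.normal(0, 30, n)

b0, b_act, b_mot = fit_two_predictor_ols(score, act, motivation)
print(f"ACT slope: {b_act:.2f}, motivation slope: {b_mot:.2f}")
```

A motivation slope that stays clearly different from zero with ACT in the model would indicate that motivation explains variation in scores beyond entering capability.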
We document how long students spend testing, and I feel it gives us a meaningful proxy of their effort and motivation. The figure below plots average C-BASE composite scores (y axis) for each level of ACT Composite (x axis), with separate lines for varying amounts of time spent. At every level of ACT, greater effort results in higher outcomes. This is so self-evident that nobody will question it. It’s the statistical models that cause me concern.

The table below contains regression results for a model predicting College BASE composite scores using ACT Composite and the time spent. I ran the model for the past six years combined and then for each year separately. [For the combination of all years, C-BASE scores range from 124 to 443 with a mean of 285 and a standard deviation of 57. Our ACT Composite scores range from 13 to 33 with a mean of 23 and a standard deviation of 3.2. The time variable ranges from 1 to 5 (see the graph for definitions) with a mean of 3.1 and a standard deviation of 1.32.]

| Years | Constant | ACT Composite Score | ACT standardized estimate | Time Spent taking C-BASE | Time standardized estimate | R-Squared | Number of observations |
|---|---|---|---|---|---|---|---|
| 2003 - 08 | -21.7* (4.19) | 10.1* (0.17) | 0.57 | 24.1* (0.42) | 0.56 | 0.67 | 3363 |
| 2003 | -1.6 (10.7) | 9.7* (0.44) | 0.62 | 21.5* (1.14) | 0.53 | 0.65 | 451 |
| 2004 | -22.6 (10.2) | 10.2* (0.43) | 0.57 | 24.3* (1.08) | 0.54 | 0.69 | 541 |
| 2005 | -5.0 (9.8) | 9.2* (0.40) | 0.56 | 24.3* (1.0) | 0.58 | 0.65 | 594 |
| 2006 | -15.3 (9.8) | 9.8* (0.41) | 0.56 | 24.8* (1.0) | 0.57 | 0.69 | 577 |
| 2007 | -38.3* (10.5) | 10.5* (0.43) | 0.59 | 24.9* (1.0) | 0.59 | 0.67 | 584 |
| 2008 | -48.7* (10.5) | 11.4* (0.46) | 0.57 | 23.1* (1.0) | 0.53 | 0.68 | 616 |

Dependent variable is College BASE Composite score. Entries are coefficients with standard errors in parentheses. * indicates significance at the 99% level.

The standardized coefficients for ACT Composite and Time Spent taking the assessment have not only been quite consistent over time, they are practically equal. I interpret this to mean that student motivation and effort are as important to the model as entering capabilities.
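As a quick arithmetic check on the standardized estimates, a standardized coefficient equals the raw coefficient multiplied by the predictor's standard deviation and divided by the outcome's standard deviation. The sketch below applies that textbook relationship to the rounded all-years figures reported in this memo. The function name is hypothetical, and because the inputs are rounded, small discrepancies from the reported estimates would not be surprising.

```python
def standardized_coefficient(b, sd_predictor, sd_outcome):
    """Convert a raw regression slope to a standardized (beta) coefficient."""
    return b * sd_predictor / sd_outcome


# Rounded all-years (2003 - 08) values as reported in the memo.
SD_CBASE = 57.0   # standard deviation of C-BASE composite
SD_ACT = 3.2      # standard deviation of ACT Composite
SD_TIME = 1.32    # standard deviation of the 1-5 time variable

beta_act = standardized_coefficient(10.1, SD_ACT, SD_CBASE)
beta_time = standardized_coefficient(24.1, SD_TIME, SD_CBASE)

print(round(beta_act, 2), round(beta_time, 2))  # prints: 0.57 0.56
```

Both values agree with the standardized estimates reported for the combined years, which is what makes the two predictors directly comparable despite their very different raw scales.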
I am familiar with the work being done by the CLA on motivation and outcomes on that test. It is quite possible that the phenomenon UWGB is experiencing with the College BASE reflects something unique to multiple-choice, bubble-form, low-stakes testing. I would like for ACT to extend the research reported in the “ACT Report: Voluntary System of Accountability Learning Gains Methodology”. What kind of variance do those schools show across the motivation item? What impact does including the motivation item along with ACT Composite have on the results? Maybe it really does “all come out in the wash” in institution-level comparisons. That would be a wonderful and heartening outcome for the VSA project.

In closing, I would encourage you to share my memo freely with others at ACT, but to respect my need for it to remain confidential. This is particularly important because our C-BASE program has not gone through my local IRB and I don’t feel I have the authority to use this data as part of a national research agenda about measuring learning gains. If any of your researchers think this is something that UWGB does need to contribute to that dialogue, I will work with our IRB to explore options regarding presentation or publication.

Thank you so much for letting me speak about this at the break out session yesterday. I know there were many others there with concerns of their own, and I worry that I took too much of our group’s time.