
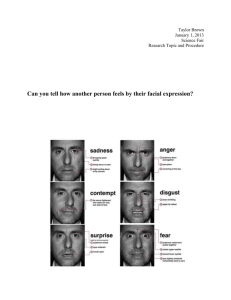

LITERATURE REVIEW

Many studies build on the Facial Action Coding System (FACS), first introduced by Ekman and Friesen in 1978 [18]. FACS is a taxonomy that describes almost all possible facial expressions; it was initially released with 44 Action Units (AUs). The Computer Expression Recognition Toolbox (CERT) has been proposed [32, 33, 1, 36, 35, 2] to detect facial expressions by analyzing the appearance of the Action Units associated with different expressions. Techniques such as Support Vector Machines (SVM), AdaBoost, Gabor filters, and Hidden Markov Models (HMM) have been used alone or in combination to achieve higher accuracy. Researchers in [6, 29] used active appearance models (AAM) to identify features of pain from facial expressions. An Eigenface-based method was deployed in [7] in an attempt to find a computationally inexpensive solution. The authors later added Eigeneyes and Eigenlips to increase the classification accuracy [8]. A Bayesian extension of SVM, the Relevance Vector Machine (RVM), was adopted in [30] to increase classification accuracy. Several papers [28, 4] relied on artificial neural networks trained with back propagation to reach a classification decision from extracted facial features. Many other researchers, including Brahnam et al. [34, 37] and Pantic et al. [16, 17], have worked in the area of automatic facial expression detection. Almost all of these approaches suffer from one or more of the following deficits: 1) reliance on a clear frontal image, 2) sensitivity to out-of-plane head rotation, 3) difficulty in selecting the right features, 4) failure to use temporal and dynamic information, 5) a considerable amount of manual interaction, 6) sensitivity to noise, illumination, glasses, facial hair, and skin color, 7) computational cost, 8) limited mobility, 9) inability to estimate the intensity of pain, and 10) limited reliability. Moreover, there has not been any work on automatic mood detection from facial images in social networks.
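To make the Eigenface idea mentioned above more concrete, the following is a minimal sketch of PCA-based feature extraction followed by a nearest-neighbor decision. It is not the implementation used in [7] or [8]: the function names, the nearest-neighbor classifier, the 16-pixel synthetic "images", and the two labels are all assumptions chosen only for illustration.

```python
import numpy as np


def fit_eigenfaces(images, n_components):
    """Return the mean face and the top principal directions ("eigenfaces")."""
    X = np.asarray(images, dtype=float)          # shape: (n_samples, n_pixels)
    mean_face = X.mean(axis=0)
    centered = X - mean_face
    # Rows of vt are the principal directions in pixel space,
    # ordered by decreasing singular value.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return mean_face, vt[:n_components]


def project(images, mean_face, eigenfaces):
    """Express each image as a short weight vector in eigenface space."""
    return (np.asarray(images, dtype=float) - mean_face) @ eigenfaces.T


def classify_nearest(train_weights, train_labels, query_weights):
    """Assign each query the label of its closest training sample (assumed classifier)."""
    diffs = query_weights[:, None, :] - train_weights[None, :, :]
    dists = np.linalg.norm(diffs, axis=2)        # shape: (n_query, n_train)
    return [train_labels[i] for i in dists.argmin(axis=1)]


# Synthetic stand-ins for flattened face images (hypothetical data, 16 pixels each).
rng = np.random.default_rng(0)
neutral_proto = np.zeros(16)
pain_proto = np.zeros(16)
pain_proto[:8] = 1.0

train = np.vstack([
    neutral_proto + 0.05 * rng.standard_normal((5, 16)),
    pain_proto + 0.05 * rng.standard_normal((5, 16)),
])
labels = ["neutral"] * 5 + ["pain"] * 5

mean_face, eigenfaces = fit_eigenfaces(train, n_components=2)
train_w = project(train, mean_face, eigenfaces)

query = np.vstack([neutral_proto, pain_proto])
predicted = classify_nearest(train_w, labels, project(query, mean_face, eigenfaces))
print(predicted)
```

The low computational cost attributed to Eigenface methods comes from the projection step: once the eigenfaces are learned, each new image is reduced to a handful of weights with a single matrix product, and classification operates on those weights rather than on raw pixels.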
We have also analyzed the mood-related applications on Facebook. Our model is fundamentally different from these simple applications: all of them require users to manually choose a symbol that represents their mood, whereas our model detects the mood without user intervention.

[Figure: taxonomy of automatic facial expression detection by classification technique (Principal Component Analysis (PCA), Linear Discriminant Analysis (LDA), Artificial Neural Network (ANN)), focus (neonates, adults, patients), and dimension (2D, 3D).]

Table I. Features of several reported facial recognition models

| Name | No. of sub or img | Learning model / classifier | 2D/3D | Computational complexity | Accuracy | Intensity |
|---|---|---|---|---|---|---|
| Eigenfaces [7] | 38 sub | Eigenface / Principal Component Analysis | 2D | Low | 90-92% | No |
| Eigenfaces + Eigeneyes + Eigenlips [8] | 38 sub | Eigenfaces + Eigeneyes + Eigenlips | 2D | Low | 92.08% | No |
| AAM [6] | 129 sub | SVM | Both | Medium | 81% | No |
| Littlewort [32, 35] | 5500 img | Gabor filter, SVM, Adaboost | Both | High | 72% | Somewhat |
| ANN [28] | 38 sub | Artificial Neural Network | 2D | Medium | 91.67% | No |
| RVM [30] | 26 sub, 204 img | RVM | 2D | Medium | 91% | Yes |
| Facial Grimace [31] | 1 sub, 1336 frame | High and low pass filter | 2D | Low | Cont. monitoring | Somewhat |
| Back propagation [29] | 100 sub | Back propagation NN | 2D | Medium to high | NA for pain | No |

SVM – Support Vector Machine, RVM – Relevance Vector Machine, ANN – Artificial Neural Network, Cont. – continuous, NA – Not Available, sub – subject, img – image.

CRITICAL AND UNRESOLVED ISSUES

Deception of Expression (suppression, amplification, simulation): The degree of control a person has over suppressing, amplifying, and simulating a facial expression has yet to be accounted for in automatic facial expression detection. Galin and Thorn [26] worked on the simulation issue, but their results are not conclusive. In several studies, researchers obtained mixed or inconclusive findings when attempting to identify suppressed or amplified pain [26, 27].
Difference in Cultural, Racial, and Sexual Perception: Multiple empirical studies have demonstrated the effectiveness of FACS. Almost all of these studies selected individuals mainly on the basis of gender and age, yet facial expressions clearly differ among people of different races and ethnicities. Culture plays a major role in how we express emotions. It shapes the learning of emotional expression (how and when) from infancy, and by adulthood that expression becomes strong and stable [19, 20]. Similarly, the same pain detection models are used for men and women, even though research [14, 15] shows notable differences in the perception and experience of pain between the genders. Fillingim [13] attributed these differences to biological, social, and psychological factors. This gender issue has so far been neglected in the literature. In Table II, we have put ‘Y’ in the appropriate column if the expression detection model deals with different genders, age groups, or ethnicities.

Table II. Comparison Table Based on the Descriptive Sample Data

Name Age Gender Ethnicity Eigenfaces [7] Eigenfaces + Eigeneyes + Eigenlips [8] AAM [6] [32, 35] Littlewort ANN [28] RVM [30] Y Y Y Y Y Y Y Y (66 F, 63 M) NM Y Y (18 hrs to 3 days) N Y Y (13 B, 13 G) Y N (only Caucasian) N Facial Grimace N [31] Back Y Y NM propagation [29]

Y – Yes, N – No, NM – Not mentioned, F – Female, M – Male, B – Boy, G – Girl.

Intensity: According to Cohn [23], occurrence/non-occurrence of AUs, temporal precision, intensity, and aggregates are the four types of reliability that need to be analyzed when interpreting the facial expression of any emotion. Most researchers, including Pantic and Rothkrantz [21] and Tian, Cohn, and Kanade [22], have focused on the first issue (occurrence/non-occurrence). The current literature has largely failed to identify the intensity level of facial expressions.
Dynamic Features: Several dynamic features, including timing, duration, amplitude, head motion, and gesture, play an important role in the accuracy of emotion detection. Slower facial actions appear more genuine [25]. Edwards [24] showed that people are sensitive to the timing of facial expressions. Cohn [23] related head motion to a sample expression, the ‘smile’: he showed that the intensity of a smile increases as the head moves down and decreases as the head moves upward and returns to its normal frontal position. These issues of timing, head motion, and gesture have been neglected, although addressing them would increase the accuracy of facial expression detection.

REFERENCES

[1] Bartlett, M.S., Littlewort, G.C., Lainscsek, C., Fasel, I., Frank, M.G., Movellan, J.R., “Fully automatic facial action recognition in spontaneous behavior”, In 7th International Conference on Automatic Face and Gesture Recognition, 2006, pp. 223-228.
[2] Braathen, B., Bartlett, M.S., Littlewort-Ford, G., Smith, E. and Movellan, J.R. (2002). An approach to automatic recognition of spontaneous facial actions. Fifth International Conference on Automatic Face and Gesture Recognition, pp. 231-235.
[3] F. Pighin, J. Hecker, D. Lischinski, R. Szeliski, and D. H. Salesin, “Synthesizing realistic facial expressions from photographs”, Computer Graphics, 32(Annual Conference Series):75–84, 1998.
[4] Jagdish Lal Raheja, Umesh Kumar, “Human facial expression detection from detected in captured image using back propagation neural network”, In International Journal of Computer Science & Information Technology (IJCSIT), Vol. 2, No. 1, Feb 2010, pp. 116-123.
[5] Paul Viola, Michael Jones, “Rapid Object Detection using a Boosted Cascade of Simple Features”, Conference on Computer Vision and Pattern Recognition, 2001.
[6] A. B. Ashraf, S. Lucey, J. F. Cohn, T. Chen, K. M. Prkachin, and P. E. Solomon. The painful face II -- Pain expression recognition using active appearance models. International Journal of Image and Vision Computing, 27(12):1788-1796, November 2009.
[7] Md. Maruf Monwar, Siamak Rezaei and Dr. Ken Prkachin, “Eigenimage Based Pain Expression Recognition”, In IAENG International Journal of Applied Mathematics, 36:2, IJAM_36_2_1. (online version available 24 May 2007)
[8] Md. Maruf Monwar, Siamak Rezaei: Appearance-based Pain Recognition from Video Sequences. IJCNN 2006: 2429-2434.
[9] Mayank Agarwal, Nikunj Jain, Manish Kumar, and Himanshu Agrawal, “Face recognition using principle component analysis, eigenface, and neural network”, In International Conference on Signal Acquisition and Processing, ICSAP, pp. 310-314.
[10] Murthy, G. R. S. and Jadon, R. S. (2009). Effectiveness of eigenspaces for facial expression recognition. International Journal of Computer Theory and Engineering, Vol. 1, No. 5, pp. 638-642.
[11] Singh, S. K., Chauhan, D. S., Vatsa, M., and Singh, R. (2003). A robust skin color based face detection algorithm. Tamkang Journal of Science and Engineering, Vol. 6, No. 4, pp. 227-234.
[12] Rimé, B., Finkenauer, C., Luminet, O., Zech, E., and Philippot, P. 1998. Social Sharing of Emotion: New Evidence and New Questions. In European Review of Social Psychology, Volume 9.
[13] Fillingim, R. B., “Sex, gender, and pain: Women and men really are different”, Current Review of Pain 4, 2000, pp. 24–30.
[14] Berkley, K. J., “Sex differences in pain”, Behavioral and Brain Sciences 20, pp. 371–80.
[15] Berkley, K. J. & Holdcroft, A., “Sex and gender differences in pain”, In Textbook of Pain, 4th edition. Churchill Livingstone.
[16] Pantic, M. and Rothkrantz, L.J.M. 2000. Expert system for automatic analysis of facial expressions. In Image and Vision Computing, 2000, pp. 881-905.
[17] Pantic, M. and Rothkrantz, L.J.M. 2003. Toward an affect sensitive multimodal human-computer interaction. In Proceedings of IEEE, September, pp. 1370-1390.
[18] Ekman, P. and Friesen, W. Facial Action Coding System: A Technique for the Measurement of Facial Movement, Consulting Psychologists Press, Palo Alto, CA, 1978.
[19] Malatesta, C. Z., & Haviland, J. M., “Learning display rules: The socialization of emotion expression in infancy”, Child Development, 53, 1982, pp. 991-1003.
[20] Oster, H., Camras, L. A., Campos, J., Campos, R., Ujiee, T., Zhao-Lan, M., et al., “The patterning of facial expressions in Chinese, Japanese, and American infants in fear- and anger-eliciting situations”, Poster presented at the International Conference on Infant Studies, Providence, RI, 1996.
[21] Pantic, M., & Rothkrantz, M., “Automatic analysis of facial expressions: The state of the art”, In IEEE Transactions on Pattern Analysis and Machine Intelligence, 22, 2000, pp. 1424-1445.
[22] Tian, Y., Cohn, J. F., & Kanade, T., “Facial expression analysis”, In S. Z. Li & A. K. Jain (Eds.), Handbook of Face Recognition, 2005, pp. 247-276. New York, New York: Springer.
[23] Cohn, J.F., “Foundations of human-centered computing: Facial expression and emotion”, In Proceedings of the International Joint Conference on Artificial Intelligence (IJCAI’07), 2007, Hyderabad, India.
[24] Edwards, K., “The face of time: Temporal cues in facial expressions of emotion”, In Psychological Science, 9(4), 1998, pp. 270-276.
[25] Krumhuber, E., & Kappas, A., “Moving smiles: The role of dynamic components for the perception of the genuineness of smiles”, In Journal of Nonverbal Behavior, 29, 2005, pp. 3-24.
[26] Galin, K. E. & Thorn, B. E., “Unmasking pain: Detection of deception in facial expressions”, Journal of Social and Clinical Psychology, 12, 1993, pp. 182–97.
[27] Hadjistavropoulos, T., McMurtry, B. & Craig, K. D., “Beautiful faces in pain: Biases and accuracy in the perception of pain”, Psychology and Health 11, 1996, pp. 411–20.
[28] Md. Maruf Monwar and Siamak Rezaei, “Pain Recognition Using Artificial Neural Network”, In IEEE International Symposium on Signal Processing and Information Technology, Vancouver, BC, 2006, pp. 28-33.
[29] A.B. Ashraf, S. Lucey, J. Cohn, T. Chen, Z. Ambadar, K. Prkachin, P. Solomon, B.J. Theobald: The Painful Face - Pain Expression Recognition Using Active Appearance Models. In ICMI, 2007.
[30] B. Gholami, W. M. Haddad, and A. Tannenbaum, “Relevance Vector Machine Learning for Neonate Pain Intensity Assessment Using Digital Imaging”, In IEEE Trans. Biomed. Eng., 2010. Note: To appear.
[31] Becouze, P., Hann, C.E., Chase, J.G., Shaw, G.M. (2007). Measuring facial grimacing for quantifying patient agitation in critical care. Computer Methods and Programs in Biomedicine, 87(2), pp. 138-147.
[32] Littlewort, G., Bartlett, M.S., and Lee, K. (2006). Faces of Pain: Automated measurement of spontaneous facial expressions of genuine and posed pain. Proceedings of the 13th Joint Symposium on Neural Computation, San Diego, CA.
[33] Smith, E., Bartlett, M.S., and Movellan, J.R. (2001). Computer recognition of facial actions: A study of co-articulation effects. Proceedings of the 8th Annual Joint Symposium on Neural Computation.
[34] S. Brahnam, L. Nanni, and R. Sexton, “Introduction to neonatal facial pain detection using common and advanced face classification techniques,” Stud. Comput. Intel., vol. 48, pp. 225–253, 2007.
[35] Gwen C. Littlewort, Marian Stewart Bartlett, Kang Lee, “Automatic Coding of Facial Expressions Displayed During Posed and Genuine Pain”, In Image and Vision Computing, 27(12), 2009, pp. 1741-1844.
[36] Bartlett, M., Littlewort, G., Whitehill, J., Vural, E., Wu, T., Lee, K., Ercil, A., Cetin, M., Movellan, J., “Insights on spontaneous facial expressions from automatic expression measurement”, In Giese, M., Curio, C., Bulthoff, H. (Eds.), Dynamic Faces: Insights from Experiments and Computation, MIT Press, 2006.
[37] S. Brahnam, C.-F. Chuang, F. Shih, and M. Slack, “Machine recognition and representation of neonatal facial displays of acute pain,” Artif. Intel. Med., vol. 36, pp. 211–222, 2006.