Optimization Methods – Summer 2012/2013 Examples of questions

advertisement

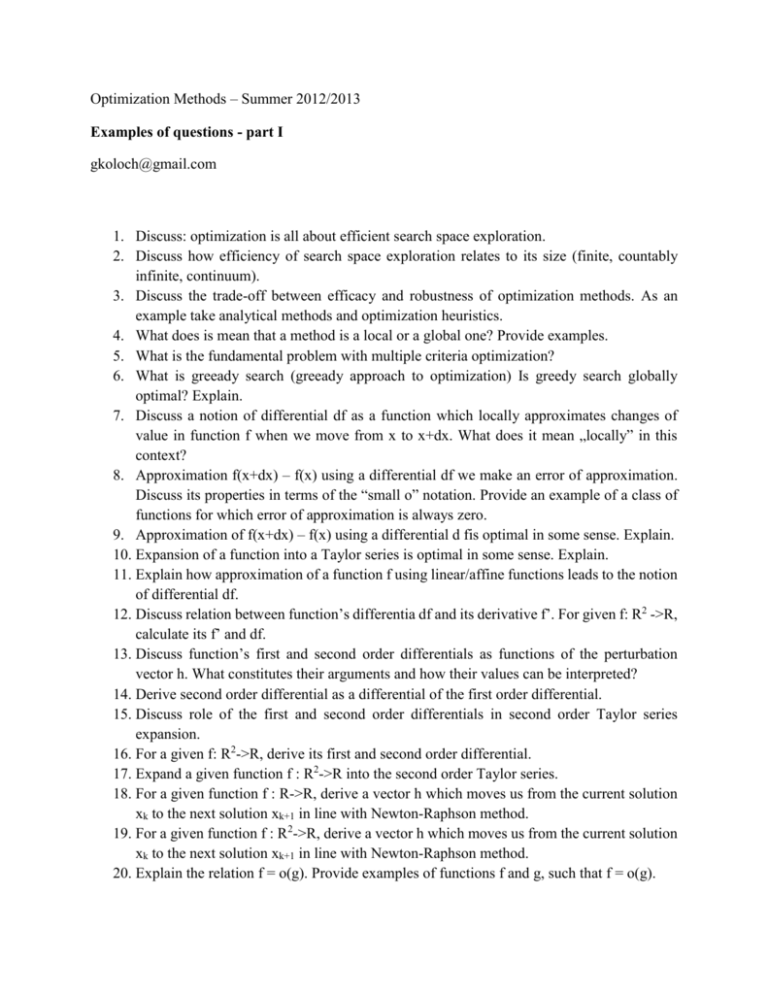

Examples of questions - part I

gkoloch@gmail.com

1. Discuss: optimization is all about efficient search space exploration.
2. Discuss how the efficiency of search space exploration relates to the size of the search space (finite, countably infinite, continuum).
3. Discuss the trade-off between efficacy and robustness of optimization methods. Take analytical methods and optimization heuristics as examples.
4. What does it mean that a method is local or global? Provide examples.
5. What is the fundamental problem with multiple-criteria optimization?
6. What is greedy search (the greedy approach to optimization)? Is greedy search globally optimal? Explain.
7. Discuss the notion of the differential df as a function which locally approximates the change in the value of f when we move from x to x+dx. What does “locally” mean in this context?
8. When f(x+dx) – f(x) is approximated by the differential df, we make an approximation error. Discuss its properties in terms of the “small o” notation. Provide an example of a class of functions for which the approximation error is always zero.
9. The approximation of f(x+dx) – f(x) by the differential df is optimal in some sense. Explain.
10. The expansion of a function into a Taylor series is optimal in some sense. Explain.
11. Explain how approximating a function f with linear/affine functions leads to the notion of the differential df.
12. Discuss the relation between a function’s differential df and its derivative f'. For a given f: R^2 -> R, calculate f' and df.
13. Discuss a function’s first- and second-order differentials as functions of the perturbation vector h. What are their arguments, and how can their values be interpreted?
14. Derive the second-order differential as the differential of the first-order differential.
15. Discuss the role of the first- and second-order differentials in the second-order Taylor series expansion.
16. For a given f: R^2 -> R, derive its first- and second-order differentials.
17. Expand a given function f: R^2 -> R into the second-order Taylor series.
18. For a given function f: R -> R, derive the step h which moves us from the current solution x_k to the next solution x_{k+1} in the Newton-Raphson method.
19. For a given function f: R^2 -> R, derive the vector h which moves us from the current solution x_k to the next solution x_{k+1} in the Newton-Raphson method.
20. Explain the relation f = o(g). Provide examples of functions f and g such that f = o(g).
21. Explain the relation f = o(g). Is it possible that f = o(g) and simultaneously g = o(f)? Explain.
22. Explain the relation f = o(g). Is it possible that f = o(g) and simultaneously f = o(g^2)? Explain.
23. Prove that the gradient is the local direction of steepest ascent of a function. What does “local” mean in this context?
24. Explain how, using the gradient, one can approximate the change in a function’s value along an arbitrary direction up to first order. What does “up to first order” mean in this context?
25. Explain how, using the differential, one can approximate the change in a function’s value along an arbitrary direction up to first order. What does “up to first order” mean in this context?
26. Discuss how the Hessian relates to the local curvature of a function. Explain the consequences for second-order conditions.
27. Explain the first- and second-order conditions for extreme points. For a given function f: R -> R, derive the first- and second-order conditions.
28. Explain the first- and second-order conditions for extreme points. For a given function f: R^2 -> R, derive the first- and second-order conditions.
29. Explain: analytical methods are efficient but lack robustness.
30. Prove that grad f(x) = 0 is necessary for x to be an extreme point. Is this condition also sufficient? Explain.
31. Discuss the graphical interpretation of the first- and second-order conditions.
32. Explain the workings of the gradient descent method (provide the necessary formulae).
33. Explain the workings of the steepest descent method (provide the necessary formulae).
34. Discuss the properties of consecutive search directions in the course of steepest descent optimization.
35. Discuss the difference between a local and a global minimum.
36. Is the Newton-Raphson method a global one? Explain.
37. Is the steepest descent method a global one? Explain.
38. Prove that the gradient is orthogonal to the tangent to the level set.
39. Explain Rayleigh’s theorem. Provide an example of its application.
40. Explain Cauchy’s theorem. Provide an example of its application.
41. How is the scalar product involved in the calculation of a differential?
42. How are line search methods used as ingredients of the steepest descent method?
43. Discuss the first-order conditions. How are second-order differentials linked to the type of an extreme point (min/max)? Explain.
44. What does it mean that a second-order differential is positive/negative definite? Provide a necessary and sufficient condition for positive/negative definiteness of a second-order differential.
45. What does it mean that a square matrix is positive/negative definite? Provide a necessary and sufficient condition for positive/negative definiteness of a square matrix.
46. Explain a line search method of your choice.
47. What does it mean that the steepest descent method utilizes first-order information?
48. Explain the workings of the Newton-Raphson method. What does it mean that the Newton-Raphson method utilizes first- and second-order information?
49. Is it true that, for a given perturbation vector, the second-order Taylor series approximation always produces a smaller approximation error than the first-order approximation? Explain.
50. Are there any functions for which the third-order Taylor series expansion coincides with the first-order expansion? Explain.
51. Are there any functions for which the first-order Taylor series approximation results in a bigger approximation error than the second-order approximation? Explain.
52. Is it true that the second-order Taylor series approximation produces a smaller limiting error than the first-order approximation? Explain.
53. Derive the formula which shifts the current solution x_k to the next solution x_{k+1} in the Newton-Raphson method for functions f: R -> R.
54. Derive the formula which shifts the current solution x_k to the next solution x_{k+1} in the Newton-Raphson method for functions f: R^n -> R.
55. What does it mean that the Newton-Raphson method utilizes information on both direction and curvature?
56. Let g_x(h) be the expansion of the function f into a Taylor series at the point x along the direction h. Show that g'_x(0) = f'(x) and g''_x(0) = f''(x).
57. Let g_x(h) be the expansion of the function f into a Taylor series at the point x along the direction h. Show that dg_x(0)(u) = df(x)(u) and d^2g_x(0)(u_1, u_2) = d^2f(x)(u_1, u_2).
58. Prove that the Newton-Raphson method converges in the first iteration for quadratic functions.
59. Prove that the Newton-Raphson method converges in the first iteration for quadratic forms.
60. Explain what it means that gradient methods and the Newton-Raphson method have the descent property.
61. Prove that the vector which shifts x_k to x_{k+1} in the Newton-Raphson method is locally a direction of improvement of the objective function’s value. What does “locally” mean in this context?
62. Prove the first-order conditions.
63. Prove the second-order conditions.
64. Is it possible that the first-order conditions are satisfied at x but x is not an extreme point? Explain.
65. Discuss how finding the extreme points of a function f relates to solving the equation g(x) = 0. How are f and g related?
66. Show how the Newton-Raphson method can be used to solve f(x) = 0.
67. What does it mean that a condition is necessary? What does it mean that a condition is sufficient? Provide a non-mathematical example.
68. Prove any formula of matrix differential calculus.
69. Discuss the Levenberg-Marquardt modification of the Newton-Raphson method.
70. What is matrix regularization? When and why can it be applied in the Newton-Raphson method?
71. Prove that when the Newton-Raphson method converges, its step length approaches zero.
72. Is the Newton-Raphson method always convergent? Explain.
73. What is search space enumeration? Discuss the positive and negative features of such a method.
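A small numerical illustration for question 8 and questions 49 to 52 (not a proof): for f = exp at x = 0, the sketch below measures the error e1 of the first-order approximation df(x)(h) and the error e2 of the second-order approximation of f(x + h) – f(x). The function name `taylor_errors` and the choice of exp are illustrative assumptions. The ratios e1/h and e2/h^2 shrinking towards zero as h decreases is what e1 = o(h) and e2 = o(h^2) describe.

```python
import math


def taylor_errors(f, df, d2f, x, h):
    """Absolute errors of the first- and second-order Taylor approximations
    of the increment f(x + h) - f(x) for f: R -> R (hypothetical helper)."""
    delta = f(x + h) - f(x)
    first = df(x) * h                        # differential df(x)(h)
    second = first + 0.5 * d2f(x) * h * h    # adds (1/2) d^2f(x)(h, h)
    return abs(delta - first), abs(delta - second)


# For f = exp at x = 0, the first and second derivatives are also exp.
results = []
for h in (1e-1, 1e-2, 1e-3):
    e1, e2 = taylor_errors(math.exp, math.exp, math.exp, 0.0, h)
    results.append((h, e1 / h, e2 / h**2))
    print(f"h = {h:g}:  e1/h = {e1 / h:.2e},  e2/h^2 = {e2 / h**2:.2e}")
```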
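For questions 23 to 25, the sketch below uses a hypothetical f(x, y) = x^2 + 3xy at the point (1, 2). It compares the first-order change along a unit direction u, computed as the scalar product of grad f with u, against a finite-difference estimate, and then scans unit directions to check that the largest first-order increase occurs along the gradient, with value equal to the gradient's norm. The function names and the 3600-direction scan are assumptions made for illustration.

```python
import math


def f(x, y):
    return x**2 + 3 * x * y              # hypothetical example function


def grad_f(x, y):
    return (2 * x + 3 * y, 3 * x)


x0, y0 = 1.0, 2.0
gx, gy = grad_f(x0, y0)                  # gradient at (1, 2): (8, 3)


def directional(theta):
    """First-order change df(x)(u) = <grad f(x), u> along u = (cos theta, sin theta)."""
    return gx * math.cos(theta) + gy * math.sin(theta)


# Finite-difference check of the first-order approximation along one direction.
t = 1e-6
u = (math.cos(0.3), math.sin(0.3))
fd = (f(x0 + t * u[0], y0 + t * u[1]) - f(x0, y0)) / t
print(fd, directional(0.3))

# Scan unit directions: the largest first-order increase is along the gradient.
best = max((k * 2 * math.pi / 3600 for k in range(3600)), key=directional)
print(best, math.atan2(gy, gx), directional(best), math.hypot(gx, gy))
```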
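Questions 18, 53, 65 and 66 connect minimisation with root finding: the Newton-Raphson update x_{k+1} = x_k - g(x_k)/g'(x_k) solves g(x) = 0, and choosing g = f' turns it into x_{k+1} = x_k - f'(x_k)/f''(x_k) for minimising f: R -> R. The sketch below assumes a hypothetical helper `newton_root` and a hypothetical test function f(x) = x^4 - 3x^2 + x; it has no safeguards beyond a zero-derivative check and an iteration cap.

```python
def newton_root(g, dg, x0, tol=1e-12, max_iter=50):
    """Solve g(x) = 0 with the Newton-Raphson update x_{k+1} = x_k - g(x_k) / g'(x_k)."""
    x = x0
    for _ in range(max_iter):
        slope = dg(x)
        if slope == 0.0:
            raise ZeroDivisionError("g'(x_k) = 0: the Newton step is undefined")
        step = -g(x) / slope
        x += step
        if abs(step) < tol:
            return x
    raise RuntimeError("no convergence within max_iter iterations")


# Root finding: sqrt(2) as the root of x^2 - 2.
root = newton_root(lambda x: x * x - 2.0, lambda x: 2.0 * x, x0=1.0)


# Minimisation: solve f'(x) = 0 for the hypothetical f(x) = x^4 - 3x^2 + x.
def df(x):
    return 4 * x**3 - 6 * x + 1          # f'(x)


def d2f(x):
    return 12 * x**2 - 6                 # f''(x)


x_star = newton_root(df, d2f, x0=2.0)
print(root, x_star, d2f(x_star) > 0)     # f''(x*) > 0 indicates a local minimum
```

Starting from a different x0 can lead to the other stationary points of this f (including a local maximum), which relates to questions 36 and 72.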
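For questions 19, 54, 58 and 59, the multivariate step h solves H(x_k) h = -grad f(x_k). The sketch below is limited to n = 2 and solves the 2x2 system with Cramer's rule to stay dependency-free; the quadratic f(x) = 0.5 x^T A x - b^T x, the matrix A, the vector b and the helper names are hypothetical. Because the Hessian of this quadratic is the constant matrix A, a single step from any starting point lands on the minimiser A^{-1} b = (0.6, -0.8).

```python
def solve_2x2(m, v):
    """Solve m h = v for a 2x2 matrix m via Cramer's rule."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if det == 0:
        raise ValueError("singular Hessian: Newton step undefined")
    return [(v[0] * d - b * v[1]) / det, (a * v[1] - c * v[0]) / det]


def newton_step(grad, hess, x):
    """One Newton-Raphson step x_{k+1} = x_k + h, where H(x_k) h = -grad f(x_k)."""
    g = grad(x)
    h = solve_2x2(hess(x), [-g[0], -g[1]])
    return [x[0] + h[0], x[1] + h[1]]


# Hypothetical quadratic f(x) = 0.5 x^T A x - b^T x with A positive definite.
A = [[3.0, 1.0], [1.0, 2.0]]
b = [1.0, -1.0]


def grad(x):
    return [A[0][0] * x[0] + A[0][1] * x[1] - b[0],
            A[1][0] * x[0] + A[1][1] * x[1] - b[1]]


def hess(x):
    return A                              # constant Hessian of a quadratic


x1 = newton_step(grad, hess, [10.0, -7.0])
print(x1, grad(x1))                       # gradient is ~0 after one step
```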
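For question 32, a minimal sketch of gradient descent with a fixed step, assuming "gradient descent" here means the update x_{k+1} = x_k - step * grad f(x_k) without a line search. The objective f(x, y) = (x - 1)^2 + 10(y + 2)^2 and the parameter values are hypothetical; the loop stops once the gradient norm falls below `tol`, i.e. when the first-order condition holds numerically.

```python
def gradient_descent(grad, x0, step=0.1, tol=1e-8, max_iter=10_000):
    """Fixed-step gradient descent x_{k+1} = x_k - step * grad f(x_k).

    Stops when ||grad f(x_k)|| < tol and returns the point and iteration count."""
    x = list(x0)
    for k in range(max_iter):
        g = grad(x)
        if sum(gi * gi for gi in g) ** 0.5 < tol:
            return x, k
        x = [xi - step * gi for xi, gi in zip(x, g)]
    return x, max_iter


# Hypothetical example: f(x, y) = (x - 1)^2 + 10 (y + 2)^2, minimum at (1, -2).
def grad_f(p):
    return [2 * (p[0] - 1), 20 * (p[1] + 2)]


x_min, iters = gradient_descent(grad_f, [0.0, 0.0], step=0.04)
print(x_min, iters)
```

For this objective the largest Hessian eigenvalue is 20, so any fixed step above 2/20 = 0.1 makes the y-component oscillate with growing amplitude; choosing the step well is exactly what a line search automates.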
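For questions 33 and 34, the sketch below assumes "steepest descent" means moving along d_k = -grad f(x_k) with an exact line search. For the hypothetical quadratic f(x) = 0.5 x^T A x - b^T x the exact step has the closed form alpha = (g^T g) / (d^T A d); this formula is specific to quadratics. Exact line search makes each new gradient orthogonal to the previous direction, so consecutive directions are orthogonal, which produces the characteristic zig-zag path.

```python
def steepest_descent_quadratic(A, b, x0, tol=1e-10, max_iter=1000):
    """Steepest descent with exact line search on f(x) = 0.5 x^T A x - b^T x.

    Returns the final point and the list of search directions d_k = -grad f(x_k)."""
    def matvec(M, v):
        return [sum(mij * vj for mij, vj in zip(row, v)) for row in M]

    def dot(u, v):
        return sum(ui * vi for ui, vi in zip(u, v))

    x = list(x0)
    directions = []
    for _ in range(max_iter):
        g = [ai - bi for ai, bi in zip(matvec(A, x), b)]   # grad f(x) = A x - b
        if dot(g, g) ** 0.5 < tol:
            break
        d = [-gi for gi in g]                              # steepest descent direction
        alpha = dot(g, g) / dot(d, matvec(A, d))           # exact minimiser of f(x + alpha d)
        x = [xi + alpha * di for xi, di in zip(x, d)]
        directions.append(d)
    return x, directions


A = [[3.0, 1.0], [1.0, 2.0]]
b = [1.0, -1.0]
x_min, dirs = steepest_descent_quadratic(A, b, [10.0, -7.0])

# Scalar products of consecutive directions d_k . d_{k+1} are ~0.
print([sum(u * v for u, v in zip(d0, d1)) for d0, d1 in zip(dirs, dirs[1:])])
```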
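For questions 42 and 46, golden-section search is one possible line search; it is shown here as an example, not as the method the course prescribes. It minimises a unimodal one-dimensional function phi on [a, b] and, inside steepest descent, is applied to phi(alpha) = f(x_k - alpha grad f(x_k)). The bracket [0, 1], the tolerance and the objective are hypothetical; for this objective the exact minimiser is alpha = 1604/32008.

```python
import math


def golden_section(phi, a, b, tol=1e-8):
    """Minimise a unimodal phi on [a, b] by golden-section search."""
    inv_phi = (math.sqrt(5) - 1) / 2          # 1 / golden ratio, about 0.618
    c = b - inv_phi * (b - a)
    d = a + inv_phi * (b - a)
    fc, fd = phi(c), phi(d)
    while b - a > tol:
        if fc < fd:                           # minimum lies in [a, d]; old c becomes new d
            b, d, fd = d, c, fc
            c = b - inv_phi * (b - a)
            fc = phi(c)
        else:                                 # minimum lies in [c, b]; old d becomes new c
            a, c, fc = c, d, fd
            d = a + inv_phi * (b - a)
            fd = phi(d)
    return (a + b) / 2


def f(p):
    x, y = p
    return (x - 1) ** 2 + 10 * (y + 2) ** 2   # hypothetical objective


x_k = (0.0, 0.0)
g_k = (2 * (x_k[0] - 1), 20 * (x_k[1] + 2))   # grad f(x_k) = (-2, 40)
alpha = golden_section(lambda t: f((x_k[0] - t * g_k[0], x_k[1] - t * g_k[1])), 0.0, 1.0)
print(alpha)
```

Each iteration reuses one of the two interior evaluations, so only one new evaluation of phi is needed per iteration while the bracket shrinks by a factor of about 0.618.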
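For questions 44 and 45, one standard necessary and sufficient test for a symmetric matrix is Sylvester's criterion: the matrix is positive definite if and only if all leading principal minors are positive, and negative definite if and only if they alternate in sign starting with a negative one. The helper names below are hypothetical, and cofactor expansion is used only because the matrices (e.g. Hessians of f: R^2 -> R) are tiny.

```python
def det(m):
    """Determinant by cofactor expansion along the first row (fine for small matrices)."""
    n = len(m)
    if n == 1:
        return m[0][0]
    return sum((-1) ** j * m[0][j] * det([row[:j] + row[j + 1:] for row in m[1:]])
               for j in range(n))


def definiteness(m):
    """Classify a symmetric matrix using Sylvester's criterion."""
    minors = [det([row[:k] for row in m[:k]]) for k in range(1, len(m) + 1)]
    if all(d > 0 for d in minors):
        return "positive definite"
    if all((-1) ** k * d > 0 for k, d in enumerate(minors, start=1)):
        return "negative definite"
    # Leading minors alone cannot separate semidefinite from indefinite.
    return "neither positive nor negative definite"


print(definiteness([[2, 1], [1, 2]]))      # Hessian of x^2 + xy + y^2
print(definiteness([[-2, 1], [1, -2]]))
print(definiteness([[1, 2], [2, 1]]))
```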
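For questions 69 and 70, the sketch below assumes the Levenberg-Marquardt modification means replacing the Hessian H with the regularised matrix H + lambda I and increasing lambda (here by a factor of 10) until H + lambda I is positive definite, detected by a successful Cholesky factorisation. The names `cholesky`, `solve_cholesky` and `lm_direction`, the starting lambda and the factor are all hypothetical; practical implementations also decrease lambda again after successful steps. For large lambda the step approaches -g/lambda, a short gradient-descent step.

```python
import math


def cholesky(m):
    """Return lower-triangular L with m = L L^T, or None if m is not positive definite."""
    n = len(m)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = m[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    return None
                L[i][i] = math.sqrt(s)
            else:
                L[i][j] = s / L[j][j]
    return L


def solve_cholesky(L, v):
    """Solve L L^T h = v by forward substitution, then back substitution."""
    n = len(L)
    y = [0.0] * n
    for i in range(n):
        y[i] = (v[i] - sum(L[i][k] * y[k] for k in range(i))) / L[i][i]
    h = [0.0] * n
    for i in reversed(range(n)):
        h[i] = (y[i] - sum(L[k][i] * h[k] for k in range(i + 1, n))) / L[i][i]
    return h


def lm_direction(H, g, lam=1e-3, factor=10.0):
    """Regularised Newton step: solve (H + lam I) h = -g, increasing lam until
    H + lam I is positive definite, so that h is a descent direction."""
    n = len(H)
    while True:
        M = [[H[i][j] + (lam if i == j else 0.0) for j in range(n)] for i in range(n)]
        L = cholesky(M)
        if L is not None:
            return solve_cholesky(L, [-gi for gi in g]), lam
        lam *= factor


# Indefinite Hessian: the pure Newton step h = -H^{-1} g = (-1, 1) gives g . h = 1 > 0 (ascent).
H = [[1.0, 0.0], [0.0, -2.0]]
g = [1.0, 2.0]
h, lam = lm_direction(H, g)
print(h, lam, g[0] * h[0] + g[1] * h[1])   # g . h < 0: descent direction
```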
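For question 73, a minimal sketch of search space enumeration over a regular grid on a box, assuming a continuous problem discretised with `points_per_dim` points per coordinate. The helper `grid_search` and the objective are hypothetical. Enumeration needs no derivatives and returns the best grid point regardless of local minima, but its cost is points_per_dim^n evaluations and its accuracy is limited by the grid spacing.

```python
import itertools


def grid_search(f, bounds, points_per_dim):
    """Enumerate a regular grid over a box and return (best point, its value, grid size)."""
    axes = [[lo + i * (hi - lo) / (points_per_dim - 1) for i in range(points_per_dim)]
            for lo, hi in bounds]
    best = min(itertools.product(*axes), key=f)
    return best, f(best), points_per_dim ** len(bounds)


def f(p):
    x, y = p
    return (x - 1) ** 2 + (y + 0.5) ** 2      # hypothetical objective


best, value, size = grid_search(f, [(-2.0, 2.0), (-2.0, 2.0)], 41)
print(best, value, size)
```

With the same 41 points per coordinate, a 10-dimensional box would already require 41^10, about 1.3 × 10^16, evaluations, which is the main drawback of the method.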