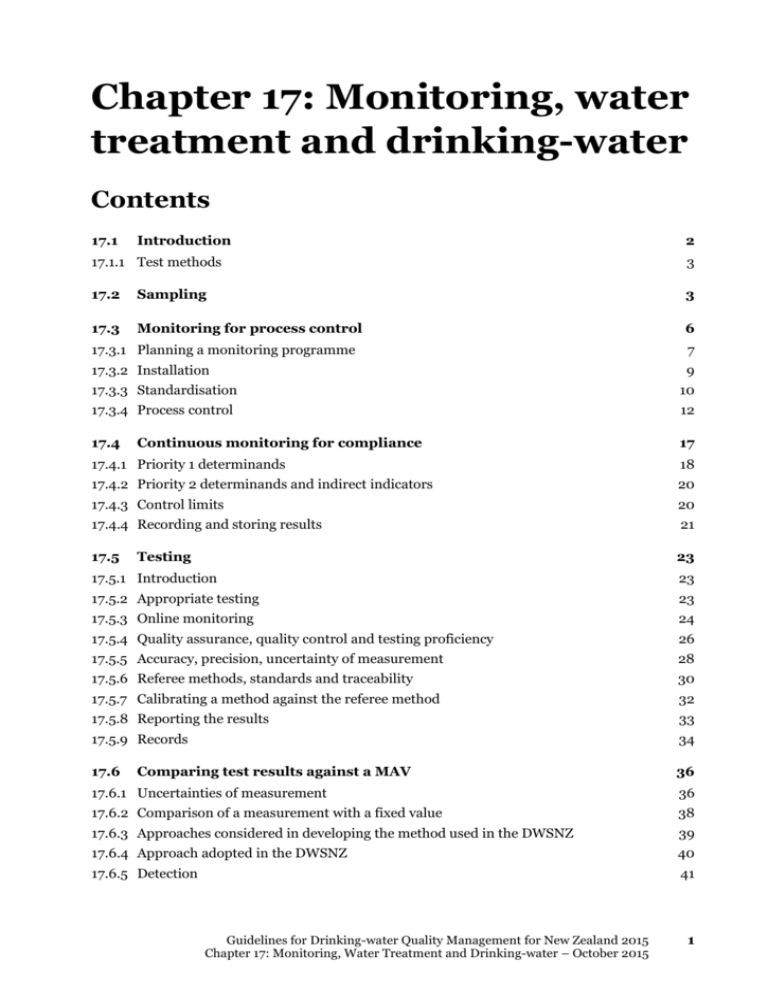

Chapter 17: Monitoring, water treatment and
