19) SuperResolutionMethods(3-1


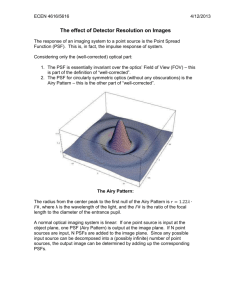

ECEN 4616/5616

3/1/2013

What is Superresolution?

1. Recovering information beyond the diffraction limit of the optics.

a. Analytic Continuation: Surprisingly, superresolution is theoretically possible for a normal optical system. The theoretical proof involves the concept of analytic continuation, described later in these notes. Unfortunately, the signal-to-noise limitations of most optical systems prevent this method from being practically useful at this time.

b. Near-field optical scanning: This involves scanning a sub-wavelength aperture in close proximity to the object being imaged. Analysis and experiment show that some light will pass through an aperture that is much smaller than the wavelength:

[Figure: near-field scanning geometry showing the incident light, a sub-λ aperture close to the object, and the detector; either the aperture or the object is scanned.]

Near-field scanning has a very limited depth of field (DOF); however, research has shown that a correctly designed array of sub-wavelength apertures can have a DOF several times larger than that of a single aperture, while maintaining sub-wavelength resolution.

c. Lucky Imaging: This refers to the process whereby an optical system imaging through a turbulent medium occasionally records spatial frequencies of objects that would normally be beyond the system’s cut-off frequency (diffraction limit). Conceptually, this works as shown below:

[Figure: a random phase distortion between the object and the camera, comparing the normal ray path with a “lucky” ray path.]

The figure shows the random distortion momentarily acting as an objective lens, with the camera serving as the “eyepiece” of a much larger (and higher-resolution) optical system. In this instance, the “lucky” image will appear in front of the normal focal plane.

Other possibilities are:

- The phase distortion creates a magnified image of the object.
- The phase distortion creates a real image of the object, closer to the camera. (In this case, the image would appear behind the normal image plane.)

Before dismissing this as extremely unlikely, note that all of the above, and much more, has been observed in mirages, a situation in which the atmosphere forms random optical systems capable of stunning effects.

2. Recovering information about a known object that is not explicitly present in the image:

For example, if it is known that the object is a point source, how accurately can the position of the PSF be determined on the detector plane? If the PSF falls on only one pixel, the answer is “one-pixel accuracy”. If the PSF is oversampled, however (say it covers an area of 10x10 pixels), then, since the form of the PSF is known (the Airy pattern), its central location can be determined to a small fraction of a pixel, given sufficient SNR.
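The claim that an oversampled PSF can be located to a fraction of a pixel can be checked numerically. The sketch below is illustrative only: it assumes a Gaussian stand-in for the Airy core (width `sigma` in pixels), additive Gaussian detector noise, and a hypothetical source position; the names `render_psf` and `centroid` are made up for this example. It uses the intensity-weighted centroid, the simplest estimator; a least-squares fit of the actual Airy model would exploit the known PSF form more fully.

```python
import math
import random


def render_psf(x0, y0, size=16, sigma=1.5, peak=1000.0, noise=5.0, seed=0):
    """Sample a point-source image on a size x size pixel grid.

    Assumption: a Gaussian of width `sigma` (pixels) stands in for the Airy
    core, sampled at pixel centres; additive Gaussian noise with standard
    deviation `noise` (counts) stands in for detector noise.
    """
    rng = random.Random(seed)
    image = []
    for y in range(size):
        row = []
        for x in range(size):
            r2 = (x - x0) ** 2 + (y - y0) ** 2
            row.append(peak * math.exp(-r2 / (2 * sigma ** 2)) + rng.gauss(0.0, noise))
        image.append(row)
    return image


def centroid(image):
    """Intensity-weighted centre (x, y) of the image, in pixel units."""
    total = sum_x = sum_y = 0.0
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            total += value
            sum_x += x * value
            sum_y += y * value
    return sum_x / total, sum_y / total


true_x, true_y = 7.30, 8.20  # hypothetical source position, in pixels
est_x, est_y = centroid(render_psf(true_x, true_y))
print(f"error: {est_x - true_x:+.3f}, {est_y - true_y:+.3f} pixels")
```

Raising `noise` (lowering the SNR) makes the error grow, which is the "given sufficient SNR" condition in the text.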

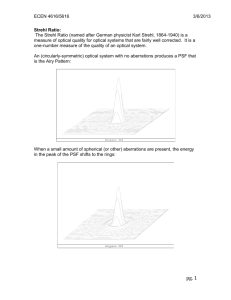

The Rayleigh criterion for the resolution of a telescope is based on this principle. The radial (angular) distance from the peak of the Airy pattern to the first null is $1.22\,\lambda/D$. Rayleigh made the judgment call that, given the SNR of then-current telescopes and the human eye as detector, two Airy patterns could just be distinguished if the peak of one fell on the first null of the other. Hence $1.22\,\lambda/D$ is the angular resolution of a telescope for point sources (and $1.22\,\lambda f/D$ is the distance by which the two patterns will be separated on a focal plane).
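The factor 1.22 comes from the first zero of the Bessel function $J_1$: the Airy intensity is $[2J_1(v)/v]^2$ with $v = \pi D \theta/\lambda$ (small-angle form), so the first null is at $\theta = (v_0/\pi)\,\lambda/D$. The sketch below recovers that factor numerically from Bessel's integral, using only the standard library, and then evaluates the angular and focal-plane separations. The wavelength, aperture, and focal length are hypothetical values chosen for illustration.

```python
import math


def bessel_j1(x, n=200):
    """J1(x) from Bessel's integral, (1/pi) * integral_0^pi cos(t - x sin t) dt.

    The integrand is even and 2*pi-periodic, so the trapezoid rule converges
    very quickly here.
    """
    def f(t):
        return math.cos(t - x * math.sin(t))

    h = math.pi / n
    total = 0.5 * (f(0.0) + f(math.pi)) + sum(f(i * h) for i in range(1, n))
    return total * h / math.pi


def first_dark_ring(lo=3.0, hi=4.5, tol=1e-12):
    """Bisection for the first positive zero of J1 (first null of the Airy pattern)."""
    f_lo = bessel_j1(lo)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = bessel_j1(mid)
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


rayleigh_factor = first_dark_ring() / math.pi  # the "1.22"

wavelength = 550e-9  # m, hypothetical
aperture_d = 0.10    # m, hypothetical
focal_len = 1.0      # m, hypothetical
theta = rayleigh_factor * wavelength / aperture_d                   # rad
separation = rayleigh_factor * wavelength * focal_len / aperture_d  # m
print(f"factor {rayleigh_factor:.4f}, theta {theta:.3e} rad, "
      f"separation {separation * 1e6:.2f} um")
```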

This method can be extended to objects other than point sources, as long as their form is known. For example, a sharp edge can be located to much less than a pixel’s width, as long as the image model is correct and the edge image extends over multiple pixels.
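A minimal sketch of sub-pixel edge location, under an assumed image model: a dark level of 0, a bright level of 1, and a symmetric Gaussian blur of width `sigma` pixels, sampled at pixel centres. With that model, the sum of the samples equals the length of the bright part of the row, so the edge position follows directly. The function names and the edge position are hypothetical; if the assumed levels or blur are wrong, the estimate is biased, which is the "image model is correct" condition in the text.

```python
import math


def blurred_edge(x_edge, n_pixels=32, sigma=1.5):
    """Samples at pixel positions 0..n_pixels-1 of a blurred dark-to-bright edge.

    Assumed model: dark level 0, bright level 1, Gaussian blur of width sigma.
    """
    return [0.5 * (1.0 + math.erf((i - x_edge) / (math.sqrt(2.0) * sigma)))
            for i in range(n_pixels)]


def locate_edge(samples):
    """Sub-pixel edge position from the area under the sampled profile.

    Pixel i covers [i - 0.5, i + 0.5], so for a symmetric blur between known
    levels 0 and 1, sum(samples) = (n - 0.5) - x_edge.
    """
    return (len(samples) - 0.5) - sum(samples)


true_edge = 13.37  # hypothetical edge position, in pixels
estimate = locate_edge(blurred_edge(true_edge))
print(f"true {true_edge}, estimated {estimate:.6f}")
```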

There is a considerable body of research on this subject, and a number of optical systems exploit it. At CU there are experimental setups that have shown the ability to locate single fluorescent molecules to $\approx \lambda/100$ in x, y, and z. (The z-location was obtained by engineering a pupil distortion that caused the PSF to be asymmetric and to rotate with defocus.)

3. Fluorescence methods: There are a number of methods of exciting fluorophores in regions smaller than a normal PSF can address. They go by an acronym salad of designations such as STED, PALM, STORM, etc.

We will describe STED (Stimulated Emission Depletion) microscopy; those interested can look up the others.

The method uses fluorophores that can be excited at one wavelength and inhibited at another. Both the exciting and inhibiting wavelengths must be distinct from the fluorescent wavelength, so that they can be separated from it before detection.

Both the exciting and inhibiting wavelengths are projected onto the sample. Their PSFs and intensities are arranged so that the effect of the exciting wavelength exceeds that of the inhibiting wavelength only in a very small region at the common center of both PSFs. Fluorescence occurs only in this region, which can be made significantly smaller than any PSF achievable through the optics.
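The shrinking of the fluorescing region can be illustrated with a deliberately simplified 1D model, which is an assumption and not a description of any particular instrument: a Gaussian excitation spot of FWHM `d0`, an inhibiting beam shaped like a doughnut with a zero at the center (approximated here by its parabolic core), and a surviving fraction $\exp(-I_{inh}/I_{sat})$. The hypothetical parameter `zeta` is the inhibiting intensity relative to saturation. In this model the effective width works out to $d_0/\sqrt{1+\zeta}$, the form commonly quoted for STED; the code finds the width numerically so the trend can be seen directly.

```python
import math

LN2 = math.log(2.0)


def sted_profile(x, d0, zeta):
    """Relative fluorescence at distance x from the centre (simplified 1D model).

    excitation: Gaussian spot with FWHM d0
    inhibition: doughnut approximated by its parabolic core,
                I_inh / I_sat = zeta * 4 ln2 * (x / d0)**2, zero at the centre
    fraction still able to fluoresce: exp(-I_inh / I_sat)
    """
    u = 4.0 * LN2 * (x / d0) ** 2
    return math.exp(-u) * math.exp(-zeta * u)


def fwhm(d0, zeta, tol=1e-9):
    """Full width at half maximum of sted_profile, found by bisection on [0, d0]."""
    lo, hi = 0.0, d0  # profile is 1 at x = 0 and 0.0625**(1 + zeta) at x = d0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if sted_profile(mid, d0, zeta) > 0.5:
            lo = mid
        else:
            hi = mid
    return lo + hi  # twice the half-width, since lo and hi have converged


d0 = 250.0  # nm, hypothetical diffraction-limited spot
for zeta in (0, 10, 100):
    print(f"I_max/I_sat = {zeta:>3}: FWHM = {fwhm(d0, zeta):6.1f} nm")
```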

[Figure: profiles of the exciting and inhibiting wavelengths, with the region of fluorescence at their common center.]


Taylor Series and Analytic Continuation:

The Taylor series expansion of a function about zero (also called the Maclaurin expansion) is defined as:

$$f(x) = f(0) + f'(0)\,x + f''(0)\,\frac{x^2}{2!} + \cdots + f^{(n)}(0)\,\frac{x^n}{n!} + \cdots$$

Any function that is equal to its Taylor expansion inside a convergence disk, $|x| < R$, is called an analytic function on that disk. If a function is equal to its Taylor expansion for all x, it is called an entire analytic function.

The functions sin(x), cos(x), and $e^x$ are all entire analytic functions.
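The difference between an entire function and one analytic only on a disk shows up directly in the partial sums. This sketch compares sin(x) (entire) at x = 10 with $1/(1-x)$, whose Taylor series $1 + x + x^2 + \cdots$ has convergence disk $|x| < 1$. The helper names are illustrative, not from any library.

```python
import math


def taylor_partial_sum(coeff, x, n_terms):
    """Sum of coeff(k) * x**k for k = 0 .. n_terms-1, where coeff(k) = f^(k)(0) / k!."""
    return sum(coeff(k) * x ** k for k in range(n_terms))


def sin_coeff(k):
    # Derivatives of sin at 0 cycle through 0, 1, 0, -1.
    return (0, 1, 0, -1)[k % 4] / math.factorial(k)


def geometric_coeff(k):
    # f(x) = 1/(1 - x) has f^(k)(0)/k! = 1 for every k; its disk is |x| < 1.
    return 1.0


for n in (10, 30, 60):
    print(n,
          taylor_partial_sum(sin_coeff, 10.0, n) - math.sin(10.0),  # entire: error -> 0
          taylor_partial_sum(geometric_coeff, 0.5, n) - 2.0,        # inside the disk
          taylor_partial_sum(geometric_coeff, 2.0, n))              # outside: diverges
```

Far from the expansion point, the sin series needs many terms before the error falls, but it always converges; the geometric series converges to 2 at x = 0.5 and grows without bound at x = 2, even though $1/(1-x) = -1$ there.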

Why does the Taylor expansion work? The Taylor expansion of a polynomial is just the polynomial itself. For example, let

$$f(x) \equiv ax^2 + bx + c.$$

Then $f(0) = c$, $f'(0) = b$, $f''(0) = 2a$, and the Taylor expansion is

$$c + bx + 2a\,\frac{x^2}{2} = f(x).$$

So, saying that a function is analytic is equivalent to saying it can be represented by a (possibly infinite) polynomial, and Taylor’s theorem tells us that knowledge of such a function’s value and derivatives at a single point is equivalent to knowledge of the entire function (within the convergence disk, or everywhere for an entire function).

Analytic Continuation and Superresolution:

From the point of view of an optical system, an object is just a pattern of light. This pattern can be decomposed (via the Fourier transform) into an expression involving only complex exponential functions.

The imaging system relays a band-limited copy of the object’s light pattern to the detector, where it is captured as an image. Compared to the object (considered as a 2D light pattern), the image contains only the lower spatial frequencies of the object; the higher spatial frequencies are not passed by the optical system.

As Goodman shows (“Introduction to Fourier Optics”, p. 134), the Fourier transform of such an image (or of the object’s light pattern) is an entire analytic function, because the pattern has finite spatial extent. The reasoning goes like this: since the pattern is confined to a finite region, its Fourier transform is a sum (an integral over that finite region) of complex exponentials in the frequency variable. Each individual exponential can be represented by its Taylor series, so the entire transform can be represented the same way, since a sum of such power series is itself a power series. Hence, the Fourier transform of a spatially bounded image is an entire analytic function.

This implies that sufficiently accurate knowledge of a portion of the image’s Fourier transform (e.g., the lower spatial frequencies) can be used to restore the unknown higher spatial frequencies, by extrapolating with a 2D Taylor polynomial (or a similar method) derived from the known data. This process is known as analytic continuation.

There are hundreds of theoretical papers on this subject, and even some actual experiments, covering many different methods of achieving resolution beyond the passband of the optics. Alas, the results are not currently spectacular: given the signal-to-noise ratios achievable with optical systems and detectors, successful extrapolation increases the resolution by only a few percent.
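A 1D toy version shows both why analytic continuation works and why noise defeats it. The object is assumed to be a unit-width rect (finite extent), whose spectrum $F(u) = \sin(\pi u)/(\pi u)$ is entire with Taylor series $\sum_k (-1)^k (\pi u)^{2k}/(2k+1)!$. We pretend the Taylor coefficients at $u = 0$ have been derived from in-band data: once exactly, and once with a hypothetical one-part-per-million error on each coefficient as a stand-in for measurement noise. This is a sketch of the principle, not one of the published superresolution algorithms.

```python
import math
import random


def sinc_taylor_coeffs(n_terms):
    """Coefficients c_k of F(u) = sin(pi u)/(pi u) = sum_k c_k * u**(2k)."""
    return [(-1) ** k * math.pi ** (2 * k) / math.factorial(2 * k + 1)
            for k in range(n_terms)]


def continue_spectrum(coeffs, u):
    """Evaluate the Taylor polynomial (the analytic continuation) at frequency u."""
    return sum(c * u ** (2 * k) for k, c in enumerate(coeffs))


def true_spectrum(u):
    return 1.0 if u == 0 else math.sin(math.pi * u) / (math.pi * u)


exact = sinc_taylor_coeffs(60)
rng = random.Random(1)
# Hypothetical noise: each coefficient perturbed by about 1 part in 10**6.
noisy = [c * (1.0 + 1e-6 * rng.gauss(0.0, 1.0)) for c in exact]

for u in (0.5, 3.0, 10.0):
    print(f"u = {u:4}: true {true_spectrum(u):+.6f}, "
          f"exact coeffs {continue_spectrum(exact, u):+.6f}, "
          f"noisy coeffs {continue_spectrum(noisy, u):+.3e}")
```

With exact coefficients the continuation reproduces the spectrum far outside any plausible passband; with 1 ppm errors it holds near $u = 0$ but fails badly by $u = 10$, because the individual terms grow enormous there and the tiny errors are amplified accordingly.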


Discrete analog to analytic continuation:

In a system where all the data is discretely sampled, such as an image from a pixelated detector or its discrete Fourier transform, the derivatives are estimated by means of finite differences between the data samples.

Finite Differences:

Let F be a sequence of numbers:

$$F = \{\cdots, x_{-3}, x_{-2}, x_{-1}, x_0, x_1, x_2, x_3, \cdots\}, \qquad F_n \equiv x_n$$

Then, using simple central differences to approximate derivatives, we have:

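$$
\begin{aligned}
F_0' &= \frac{x_1 - x_{-1}}{2} \\
F_0'' &= \frac{F_1' - F_{-1}'}{2} = \frac{x_2 - 2x_0 + x_{-2}}{4} \\
F_0^{(3)} &= \frac{F_1'' - F_{-1}''}{2} = \frac{x_3 - 3x_1 + 3x_{-1} - x_{-3}}{8} \\
F_0^{(4)} &= \frac{F_1^{(3)} - F_{-1}^{(3)}}{2} = \frac{x_4 - 4x_2 + 6x_0 - 4x_{-2} + x_{-4}}{16}
\end{aligned}
$$

and so on.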
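The pattern in these formulas is $F_0^{(n)} = 2^{-n}\sum_{j=0}^{n} (-1)^j \binom{n}{j}\, x_{n-2j}$. The sketch below computes the estimate both by literally repeating the central difference and by that closed form, and checks it on polynomial samples $x_k = k^n$, whose nth derivative is $n!$. The function names are illustrative.

```python
from math import comb


def central_difference(samples, n, i=0):
    """F_i^(n) from n repeated central differences (F[i+1] - F[i-1]) / 2.

    `samples` maps an integer index k to x_k (unit spacing between samples).
    """
    if n == 0:
        return samples(i)
    return (central_difference(samples, n - 1, i + 1)
            - central_difference(samples, n - 1, i - 1)) / 2


def central_difference_closed_form(samples, n):
    """Same estimate at index 0: 2**-n * sum_j (-1)**j * C(n, j) * x_(n - 2j)."""
    return sum((-1) ** j * comb(n, j) * samples(n - 2 * j)
               for j in range(n + 1)) / 2 ** n


# Polynomial samples x_k = k**n have n-th derivative n!, and both estimates match it.
for n in range(1, 5):
    def poly(k, n=n):
        return k ** n
    print(n, central_difference(poly, n), central_difference_closed_form(poly, n))
```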

Another, more compact way to calculate the nth finite difference is to fit a polynomial of at least order n to the data. (Since you are trying to estimate the nth derivative, the polynomial must have a non-zero nth derivative.) Such a polynomial requires knowledge of at least n+1 points of the sequence, i.e., n points in addition to the point about which you are calculating.
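The polynomial-fit route can be sketched with Newton divided differences: the unique degree-n polynomial through n+1 points has leading coefficient $f[t_0, \ldots, t_n]$, so its nth derivative is $n!$ times that divided difference. Fitting through the points $-4, -2, 0, 2, 4$ reproduces the central-difference estimate $(x_4 - 4x_2 + 6x_0 - 4x_{-2} + x_{-4})/16$. The sample values below are made up for illustration.

```python
import math


def divided_difference(ts, ys):
    """Newton divided difference f[t_0, ..., t_n] of the points (ts[i], ys[i])."""
    coef = [float(y) for y in ys]
    n = len(ts)
    for level in range(1, n):
        for i in range(n - 1, level - 1, -1):
            coef[i] = (coef[i] - coef[i - 1]) / (ts[i] - ts[i - level])
    return coef[-1]


def derivative_from_fit(ts, ys):
    """n-th derivative (a constant) of the degree-n polynomial through n+1 points."""
    return math.factorial(len(ts) - 1) * divided_difference(ts, ys)


ts = [-4, -2, 0, 2, 4]
ys = [3.0, 1.0, 4.0, 1.0, 5.0]  # x_-4, x_-2, x_0, x_2, x_4 (made-up values)
fit = derivative_from_fit(ts, ys)
central = (ys[4] - 4 * ys[3] + 6 * ys[2] - 4 * ys[1] + ys[0]) / 16
print(fit, central)
```

Because the fit also works for unevenly spaced samples, it generalizes the central-difference table to arbitrary sample positions.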

Hence, in discretely sampled data, knowledge of the higher-order finite differences about a single point in a sequence is equivalent to knowledge of other points in the sequence, distant from the evaluation point.


Field Curvature and Scan Relays:

Constructing an x-y scan relay using one-axis galvanometer-driven mirrors involves at least 4 positive lenses. This is likely to add a positive Petzval sum to the system, resulting in a scan plane with negative curvature.

The possible solutions are:

1. Design the scan lenses (as triplets, say) so that they have a negligible Petzval sum. It might be possible to purchase commercial scan lenses with these characteristics.

2. Design the scan objective to have a negative Petzval sum to counter the curvature added by the scan relay(s).

3. Put an afocal lens group in the system, at or near a relay point, designed to negate the field curvature added by the scan relays:

Here is a pair of lenses that have zero power but add positive curvature (negative Petzval sum) to a parallel beam:

These lenses were found by starting with two plano-convex lenses, using Pickups in the LDE (above) to keep the result symmetric, curving the image plane in the desired direction, and then optimizing with the Default Merit Function. Different starting points, or more lenses (perhaps with different glasses), will give different (and possibly better) solutions.
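A thin-lens calculation shows why a zero-power group can still cancel the relay's field curvature. For thin lenses the Petzval sum is $\sum_i \phi_i/n_i$, independent of spacing, while the power of two separated lenses is $\phi_1 + \phi_2 - d\,\phi_1\phi_2$. So a negative lens followed by a weaker positive lens can have zero net power yet a negative Petzval sum. The sketch assumes four hypothetical f = 100 mm relay lenses, a BK7-like index of 1.5168, and a 20 mm spacing; it illustrates the principle only and is not the lens pair designed in these notes.

```python
import math


def petzval_sum(lenses):
    """Thin-lens Petzval sum, sum(phi_i / n_i), for (power in 1/mm, index) pairs."""
    return sum(phi / n for phi, n in lenses)


def pair_power(phi1, phi2, d):
    """Power of two thin lenses in air separated by d (mm)."""
    return phi1 + phi2 - d * phi1 * phi2


def zero_power_pair(target_petzval, n, d):
    """Thin-lens powers (phi1 < 0 < phi2) with zero total power and a given Petzval sum.

    Solves phi1 + phi2 = d * phi1 * phi2 and (phi1 + phi2) / n = target_petzval,
    which requires target_petzval < 0.
    """
    s = target_petzval * n  # phi1 + phi2 (negative)
    p = s / d               # phi1 * phi2 (negative)
    disc = math.sqrt(s * s - 4 * p)
    return (s - disc) / 2, (s + disc) / 2


n_glass = 1.5168                    # hypothetical BK7-like glass
relay = [(1 / 100.0, n_glass)] * 4  # four f = 100 mm positive lenses (hypothetical)
p_relay = petzval_sum(relay)
phi1, phi2 = zero_power_pair(-p_relay, n_glass, d=20.0)

print(f"relay Petzval sum {p_relay:.5f} /mm")
print(f"pair focal lengths {1 / phi1:.1f} mm and {1 / phi2:.1f} mm, "
      f"pair power {pair_power(phi1, phi2, 20.0):.1e} /mm")
print(f"total Petzval sum "
      f"{petzval_sum(relay + [(phi1, n_glass), (phi2, n_glass)]):.1e} /mm")
```

The strong negative lens this produces hints at why a real design needs optimization (and possibly more elements) to control the other aberrations it introduces.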
