B90.3302 / C22.0015 NOTES for Wednesday 2011.FEB.16


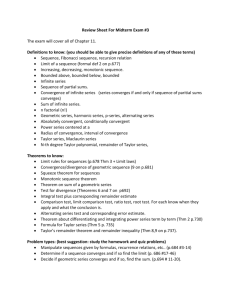

Advertised topics:

Markov and Chebyshev inequalities

Moments and moment generating function

Law of large numbers

Central Limit Theorem

Sequence of presentations:

1. Fundamental theorem of simulation

2. Existence of moments, simulations showing moment calculations

3. Results (Minitab) on long tails

4. Chebyshev (and Markov) inequalities

5. Moments and the moment generating function

6. Limit ideas: first convergence in probability, then convergence in distribution

7. Invoke the law of averages for $\bar{X}$

8. Central Limit Theorem

The material for this class is on the handouts. This document is just an outline sketch.

For some random variables, the expectation is infinite, and for others the expectation does not exist. This is on a handout (which also gets to point 3, showing simulations).

Many densities have a factor of the form $e^{-(\text{factor in } x)}$. An example is the exponential, $f(x) = \alpha e^{-\alpha x}$.

Here the exponent is a linear function of $x$. Such a random variable has all its moments. Even the gamma distribution, with a density of the form $x^{\text{power}} e^{-\alpha x}$, has all its moments, since the exponential part overwhelms any algebraic part.
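As a worked check of the claim for the exponential (the standard calculation, sketched here rather than copied from the handout), the substitution $u = \alpha x$ turns every moment into a gamma integral:

```latex
E[X^r] = \int_0^\infty x^r \,\alpha e^{-\alpha x}\,dx
       = \frac{1}{\alpha^r}\int_0^\infty u^r e^{-u}\,du
       = \frac{\Gamma(r+1)}{\alpha^r}
       = \frac{r!}{\alpha^r} < \infty, \qquad r = 1, 2, 3, \ldots
```

The same substitution handles a gamma density $x^{\text{power}} e^{-\alpha x}$: multiplying by $x^r$ only raises the power, and the integral stays finite.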

The normal density has the factor $e^{-\frac{1}{2\sigma^2}x^2}$, so the exponent is a quadratic function of $x$.

This has very short, very nice, very compact tails. We love the normal distribution.

The Cauchy distribution has a tail like $\dfrac{1}{x^2}$. The Cauchy mean does not exist. In contrast, the density $f(x) = \dfrac{1}{x^2}\, I(x \ge 1)$, where $I$ is the indicator function, has infinite mean.
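The difference between "infinite" and "does not exist" shows up directly in the integrals. A sketch of the usual argument, using the standard Cauchy density $\frac{1}{\pi(1+x^2)}$:

```latex
\text{Density } x^{-2} I(x \ge 1):\quad
E[X] = \int_1^\infty x \cdot \frac{1}{x^2}\,dx = \int_1^\infty \frac{dx}{x} = \lim_{b\to\infty} \log b = +\infty

\text{Standard Cauchy:}\quad
\int_0^\infty \frac{x}{\pi(1+x^2)}\,dx = \lim_{b\to\infty}\frac{\log(1+b^2)}{2\pi} = +\infty,
\qquad
\int_{-\infty}^0 \frac{x}{\pi(1+x^2)}\,dx = -\infty
```

For the first density the integral has a definite value, $+\infty$. For the Cauchy, $E[X]$ would have to be $\infty - \infty$, which is undefined.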

We have a handout that shows what samples from these distributions look like. These are quite amazing. They also carry serious warnings for financial people who think about long tails. The special message is that you can be looking at a very long run of data without ever seeing the “black swan.”
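A rough Python stand-in for that handout (the handout itself used Minitab; the function name `running_means`, the seed, and the sample size are illustrative choices, not from the notes) draws standard Cauchy values by the inverse-CDF method, $X = \tan(\pi(U - \tfrac12))$, and compares their running averages with those of standard normal draws.

```python
import math
import random


def running_means(xs):
    """Return the averages of the first 1, 2, ..., len(xs) values."""
    means, total = [], 0.0
    for i, x in enumerate(xs, start=1):
        total += x
        means.append(total / i)
    return means


rng = random.Random(2011)  # fixed seed so the run is reproducible
n = 100_000

# Standard Cauchy by inverse CDF; standard normal for comparison.
cauchy = [math.tan(math.pi * (rng.random() - 0.5)) for _ in range(n)]
normal = [rng.gauss(0.0, 1.0) for _ in range(n)]

cauchy_means = running_means(cauchy)
normal_means = running_means(normal)

print("largest |value|, Cauchy:", max(abs(x) for x in cauchy))
print("largest |value|, normal:", max(abs(x) for x in normal))
# The normal running mean settles near 0; the Cauchy one jumps whenever
# a huge value arrives, no matter how long the run has been.
for k in (100, 1_000, 10_000, 100_000):
    print(k, round(cauchy_means[k - 1], 3), round(normal_means[k - 1], 3))
```

Rerunning with a few different seeds is the quickest way to see how erratic the Cauchy column is compared with the normal one.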


The moment generating function is standard material for the previous course B90.3301, so we’ll just summarize the high points.

$M_X(t) = E\,e^{tX}$

$M_X^{(r)}(0) = E\,X^r$

If $X$ and $Y$ are independent, and if $Z = X + Y$, then $M_Z(t) = M_X(t)\,M_Y(t)$.
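As a worked example, here are the three facts for the exponential with rate $\alpha$ (the density used earlier in these notes). This MGF exists only for $t < \alpha$.

```latex
M_X(t) = \int_0^\infty e^{tx}\,\alpha e^{-\alpha x}\,dx = \frac{\alpha}{\alpha - t}, \qquad t < \alpha

M_X'(t) = \frac{\alpha}{(\alpha - t)^2} \;\Rightarrow\; M_X'(0) = \frac{1}{\alpha} = E\,X

M_X''(t) = \frac{2\alpha}{(\alpha - t)^3} \;\Rightarrow\; M_X''(0) = \frac{2}{\alpha^2} = E\,X^2,
\qquad \operatorname{Var}(X) = \frac{2}{\alpha^2} - \frac{1}{\alpha^2} = \frac{1}{\alpha^2}

X, Y \text{ independent exponential}(\alpha),\ Z = X + Y:\quad M_Z(t) = \left(\frac{\alpha}{\alpha - t}\right)^2
```

The last line is the MGF of a gamma distribution with shape 2 and rate $\alpha$.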

We should also mention the characteristic function. This is $C_X(t) = E\,e^{itX}$. The advantage here is that $|e^{itX}| = 1$, so the characteristic function always exists. (It's calculated as the expected value of a bounded quantity, so there's nowhere for it to run off to.)
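The Cauchy distribution shows why this matters: $E\,e^{tX}$ is infinite for every $t \ne 0$, yet the characteristic function exists, and for the standard Cauchy it is known to be $e^{-|t|}$. The sketch below (the helper `empirical_cf` is a hypothetical name) estimates $C_X(t)$ from simulated draws by averaging $e^{itX}$, each term of which has modulus 1.

```python
import cmath
import math
import random


def empirical_cf(xs, t):
    """Average of exp(i t x) over the sample: an estimate of C_X(t) = E exp(itX)."""
    return sum(cmath.exp(1j * t * x) for x in xs) / len(xs)


rng = random.Random(16)  # fixed seed for reproducibility
n = 200_000
# Standard Cauchy draws by the inverse-CDF method.
cauchy = [math.tan(math.pi * (rng.random() - 0.5)) for _ in range(n)]

for t in (0.5, 1.0, 2.0):
    est = empirical_cf(cauchy, t)
    # Every term has modulus 1, so the average never exceeds 1 in modulus,
    # even though the sample contains enormous values.
    print(t, round(est.real, 4), round(est.imag, 4), "theory:", round(math.exp(-abs(t)), 4))
```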

Some convergence notions.

1. Suppose that $a_1, a_2, a_3, \ldots$ is a sequence of numbers. There's nothing random here. Sometimes we use a notation like $\{a_n\}$ to represent the sequence. We use the notation $\{a_n\} \to a$ to indicate that the sequence converges. Formally this means the following:

Specify a positive $\varepsilon$, no matter how small.

Given this $\varepsilon$, there exists a position number in the sequence, call it $N^*$, so that $n \ge N^*$ implies $|a_n - a| < \varepsilon$.

This is saying that, for any $\varepsilon$, there is a position in the sequence beyond which all remaining terms are within $\varepsilon$ of the limit $a$.

The fact that you can do this for any $\varepsilon$ means that the sequence converges.

The sequence $\{1/n\}$ converges to zero. The sequence … converges to 1. The sequence $\{(1 + b/n)^n\}$ converges to $e^b$. The sequence $\{n\}$ diverges to infinity. The sequence $\{n(-1)^n\}$ does not converge. The sequence … does not converge, although we could note that it has accumulation points at $-1$ and $+1$.
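The definition can be tried out numerically on $\{(1 + b/n)^n\} \to e^b$. This sketch assumes $b > 0$, where the sequence is known to increase toward $e^b$; the gap $e^b - a_n$ then shrinks steadily, so the first $n$ with $|a_n - e^b| < \varepsilon$ can serve as $N^*$. The helper names `a` and `find_n_star` are hypothetical.

```python
import math


def a(n, b):
    """n-th term of the sequence (1 + b/n)^n."""
    return (1.0 + b / n) ** n


def find_n_star(b, eps):
    """Smallest n with |a_n - e^b| < eps.

    Assumes b > 0, so that a_n increases to e^b and every later term is
    also within eps; for such b this n works as N* in the definition.
    """
    if b <= 0 or eps <= 0:
        raise ValueError("this sketch assumes b > 0 and eps > 0")
    limit = math.exp(b)
    n = 1
    while abs(a(n, b) - limit) >= eps:
        n += 1
    return n


# A smaller eps forces a later position N* in the sequence.
for eps in (0.1, 0.01, 0.001):
    print(eps, find_n_star(1.0, eps))
```

The monotonicity assumption is what lets a single check stand in for "all remaining terms"; for a sequence that oscillates, the search would have to look at the whole tail.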

2. If $X_1, X_2, X_3, \ldots$ is a sequence of random variables, we use the notation $\{X_n\} \xrightarrow{p} X$ to denote “converges in probability.” Formally, this is converted to a statement about a sequence of numbers. For any positive $\varepsilon$, no matter how small, consider the sequence in which $a_n = P\big[\,|X_n - X| > \varepsilon\,\big]$. The statement $\{X_n\} \xrightarrow{p} X$ is exactly equivalent to $\{a_n\} \to 0$ (for every $\varepsilon$).

In most applications of this, the limit $X$ is just a single point, meaning $P[X = c] = 1$ (for some $c$). If $\bar{X}_n$ is the average of the first $n$ observations in a set of independent identically distributed observations, then $\{\bar{X}_n\} \xrightarrow{p} \mu$, where $\mu$ is the mean of the population from which $X$ is sampled.
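Here is a small simulation sketch of $a_n = P[\,|\bar{X}_n - \mu| > \varepsilon\,]$ for the sample mean. It assumes exponential observations with mean $\mu = 1$ and takes $\varepsilon = 0.1$ (both arbitrary illustrative choices), estimating each $a_n$ as the fraction of simulated samples whose mean misses $\mu$ by more than $\varepsilon$. The helper `estimate_a_n` is a hypothetical name.

```python
import random


def estimate_a_n(n, eps, reps, rng, mu=1.0):
    """Monte Carlo estimate of P[|Xbar_n - mu| > eps] for exponential data with mean mu."""
    misses = 0
    for _ in range(reps):
        # expovariate takes the rate, so rate 1/mu gives mean mu.
        xbar = sum(rng.expovariate(1.0 / mu) for _ in range(n)) / n
        if abs(xbar - mu) > eps:
            misses += 1
    return misses / reps


rng = random.Random(2011)
eps = 0.1
estimates = {n: estimate_a_n(n, eps, reps=1_000, rng=rng) for n in (10, 100, 1_000)}
for n, a_n in estimates.items():
    print(n, a_n)  # the estimates should fall toward 0 as n grows
```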

3. The statement $\{X_n\} \xrightarrow{ms} X$ is read “$X_n$ converges in mean square to $X$.”

This is also converted to a statement about a sequence of numbers. Let $b_n = E\big[(X_n - X)^2\big]$. The statement $\{X_n\} \xrightarrow{ms} X$ is exactly equivalent to $\{b_n\} \to 0$.

This is very important for the following two reasons:

First, the condition is often very easy to check, since $E\big[(X_n - X)^2\big] = E\big[X_n^2\big] - 2E\big[X X_n\big] + E\big[X^2\big]$. Suppose, for example, that $X_n$ is really $\bar{X}_n$, the average of the first $n$ observations in a set of independent identically distributed observations, and that $X$ is the constant $\mu$.

Then $E\big[(\bar{X}_n - \mu)^2\big] = \operatorname{Var}(\bar{X}_n) = \dfrac{\sigma^2}{n}$, where $\sigma^2$ is the population variance. This trivially converges to 0, so that $\{\bar{X}_n\} \xrightarrow{ms} \mu$.
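A quick Monte Carlo check of $E[(\bar{X}_n - \mu)^2] = \sigma^2/n$, assuming Uniform(0, 1) observations, for which $\mu = 1/2$ and $\sigma^2 = 1/12$. The function name `mse_of_mean` and the replication count are illustrative choices.

```python
import random


def mse_of_mean(n, reps, rng):
    """Monte Carlo estimate of E[(Xbar_n - mu)^2] for Uniform(0, 1) data (mu = 1/2)."""
    mu = 0.5
    total = 0.0
    for _ in range(reps):
        xbar = sum(rng.random() for _ in range(n)) / n
        total += (xbar - mu) ** 2
    return total / reps


rng = random.Random(16)
sigma2 = 1.0 / 12.0  # variance of Uniform(0, 1)
results = {}
for n in (5, 50, 500):
    results[n] = mse_of_mean(n, reps=4_000, rng=rng)
    print(n, results[n], "sigma^2/n =", sigma2 / n)
```

Each tenfold increase in $n$ should cut the estimated mean squared error by roughly a factor of ten, as $\sigma^2/n$ predicts.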

Second, mean square convergence implies convergence in probability. In symbols, $\{X_n\} \xrightarrow{ms} X$ implies $\{X_n\} \xrightarrow{p} X$.
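The implication is where the Markov inequality earns its place in the course. Applying Markov's inequality to the nonnegative random variable $(X_n - X)^2$ gives, for any $\varepsilon > 0$,

```latex
P\big[\,|X_n - X| > \varepsilon\,\big]
  \le P\big[(X_n - X)^2 \ge \varepsilon^2\big]
  \le \frac{E\big[(X_n - X)^2\big]}{\varepsilon^2}
  = \frac{b_n}{\varepsilon^2} \longrightarrow 0 .
```

With $X_n = \bar{X}_n$ and $X = \mu$, this is Chebyshev's inequality, $P[\,|\bar{X}_n - \mu| > \varepsilon\,] \le \sigma^2/(n\varepsilon^2)$.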

4. The statement $\{X_n\} \xrightarrow{d} X$ is read “$X_n$ converges in distribution to $X$.”

This is a statement about the cumulative distribution functions of the random variables. Suppose that $F_n(t) = P[X_n \le t]$ is the cumulative distribution function of $X_n$. Then let $F(t) = P[X \le t]$ be the cumulative distribution function of the limit $X$. The claim is now converted to a statement about a sequence of numbers. Specifically, $\{F_n(t)\} \to F(t)$ for any $t$.

Well… not quite any value of $t$. The convergence is only required to hold at those values of $t$ at which $F(\cdot)$ is continuous. As you are aware, if $F$ corresponds to a discrete random variable, there will be points at which $F$ jumps.
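A standard example (not from the handout) shows why the jump points have to be excluded. Let $X_n$ be the constant $1/n$ and $X$ the constant $0$. Surely $X_n$ should converge to $X$, but

```latex
F_n(t) = \begin{cases} 0, & t < 1/n \\ 1, & t \ge 1/n \end{cases}
\qquad
F(t) = \begin{cases} 0, & t < 0 \\ 1, & t \ge 0 \end{cases}

F_n(0) = 0 \text{ for every } n, \quad\text{while}\quad F(0) = 1 .
```

So $\{F_n(0)\}$ does not converge to $F(0)$. At every $t \ne 0$, where $F$ is continuous, $F_n(t) \to F(t)$ (for $t > 0$, as soon as $1/n \le t$), so the definition still gives $\{X_n\} \xrightarrow{d} X$.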

The major use of this, by far, is the Central Limit theorem. If $\bar{X}_n$ is the average of the first $n$ observations in a set of independent identically distributed observations from a population with mean $\mu$ and standard deviation $\sigma$, then $\sqrt{n}\,\dfrac{\bar{X}_n - \mu}{\sigma} \xrightarrow{d} N(0, 1)$.
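A simulation sketch of the statement, assuming exponential observations with $\mu = \sigma = 1$ (a skewed population, so the normal limit is not built in) and $n = 50$. It compares the empirical $F_n(t)$ of $\sqrt{n}(\bar{X}_n - \mu)/\sigma$ with $\Phi(t)$, computed from `math.erf`. The helper names are hypothetical.

```python
import math
import random


def std_normal_cdf(z):
    """Phi(z), the N(0, 1) cumulative distribution function, via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def standardized_means(n, reps, rng):
    """Draw reps values of sqrt(n) * (Xbar_n - mu) / sigma for exponential data."""
    mu, sigma = 1.0, 1.0  # exponential with rate 1 has mean 1 and standard deviation 1
    out = []
    for _ in range(reps):
        xbar = sum(rng.expovariate(1.0) for _ in range(n)) / n
        out.append(math.sqrt(n) * (xbar - mu) / sigma)
    return out


rng = random.Random(2011)
z = standardized_means(n=50, reps=5_000, rng=rng)
for t in (-1.0, 0.0, 1.0, 2.0):
    empirical = sum(v <= t for v in z) / len(z)  # estimate of F_n(t)
    print(t, round(empirical, 4), "Phi:", round(std_normal_cdf(t), 4))
```

Because the exponential is skewed, the agreement at $n = 50$ is good but not perfect; increasing `n` tightens it.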
