Evaluation of Formal Assessment
Reviewed by Myria Knapp


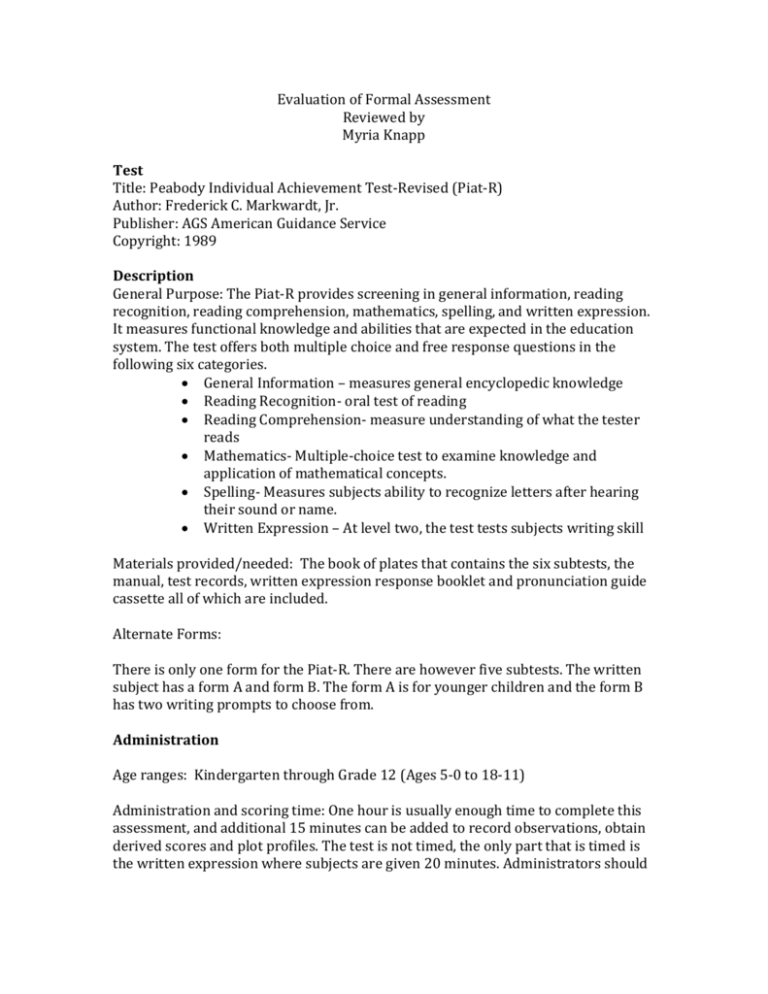

Test Title: Peabody Individual Achievement Test-Revised (PIAT-R)
Author: Frederick C. Markwardt, Jr.
Publisher: AGS (American Guidance Service)
Copyright: 1989

Description

General Purpose: The PIAT-R provides screening in general information, reading recognition, reading comprehension, mathematics, spelling, and written expression. It measures the functional knowledge and abilities expected in the education system. The test uses both multiple-choice and free-response items across the following six subtests:
General Information: measures general encyclopedic knowledge.
Reading Recognition: an oral test of reading.
Reading Comprehension: measures understanding of what the examinee reads.
Mathematics: a multiple-choice test of knowledge and application of mathematical concepts.
Spelling: measures the subject's ability to recognize letters after hearing their sound or name.
Written Expression: at Level II, measures the subject's writing skill.

Materials provided/needed: The book of test plates containing the six subtests, the manual, test records, the Written Expression response booklet, and a pronunciation guide cassette. All of these are included.

Alternate Forms: The PIAT-R has only one form for five of its six subtests. Written Expression is the exception: it has a Form A and a Form B. Form A is for younger children, and Form B has two writing prompts to choose from.

Administration

Age ranges: Kindergarten through Grade 12 (ages 5-0 to 18-11).

Administration and scoring time: One hour is usually enough to complete the assessment, and an additional 15 minutes can be allowed to record observations, obtain derived scores, and plot profiles. The test is untimed except for Written Expression, where subjects are given 20 minutes. When waiting for a response, administrators should allow 30 seconds on Mathematics items and 15 seconds on items in the other subtests.
Types of scores reported: Raw scores can be converted into grade and age equivalents, grade- and age-based standard scores, percentile ranks, normal curve equivalents, and stanines.

Starting points, basal and ceiling levels: The examinee's grade level determines the starting point for the first subtest. The starting point for each subsequent subtest is based on the raw score from the previous subtest; if a subtest is omitted, the raw score on the subtest before the omitted one determines the starting point. The basal is the highest five consecutive correct responses. The ceiling is reached when the subject makes five errors in seven consecutive items.

Standard error of measurement and confidence levels: The mean standard error of measurement (SEM) for the total test raw score is 5.8, so a band of ±5.8 points around an obtained score corresponds to the 68% confidence level. At the 95% confidence level the band widens to ±11.368 points (1.96 × 5.8), and at the 99% level to ±14.964 points (2.58 × 5.8). The SEM itself stays at 5.8; only the multiplier changes with the confidence level.

Norming Procedures

Sampling procedures: A school professional, such as a counselor, was designated for each school district. This coordinator conducted the survey, chose the examiners, and supervised the entire testing process at that location. The examiners were professionals with experience in education. Every examiner attended a two-hour workshop to make sure all examiners understood proper administration.

Size and characteristics of sample: The sample consisted of 1,563 students from kindergarten through Grade 12 in 33 communities nationwide, plus an additional 175 kindergartners. Most students (91.4%) attended public schools. Special education classes were excluded. The sample was split equally by sex and distributed across geographic regions, socioeconomic statuses, and racial/ethnic groups. Kindergartners (150) outnumbered every other grade group, and group sizes decreased with age, down to grades 9-12, in each region.

Date of Norms: Between April and June 1986.
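To make the basal, ceiling, and confidence-band rules concrete, here is a minimal Python sketch. It assumes responses are recorded as a dictionary from item number to correct/incorrect. It also assumes a raw-score rule that this review does not state (every item below the basal is credited, so the raw score is the ceiling item minus the errors between basal and ceiling); treat that rule, the function names, and the sample record as hypothetical. The band multipliers reproduce the review's figures: 11.368 and 14.964 are 1.96 and 2.58 times the 5.8 SEM.

```python
from typing import Dict, Optional, Tuple

# z multipliers that reproduce the review's bands: 1.0, 1.96, and 2.58 SEMs.
Z_FOR_LEVEL = {68: 1.0, 95: 1.96, 99: 2.58}


def find_ceiling(responses: Dict[int, bool], window: int = 7,
                 max_errors: int = 5) -> Optional[int]:
    """Last item of the lowest run of `window` consecutive items that has
    at least `max_errors` errors, or None if no ceiling was reached."""
    items = sorted(responses)
    for i in range(len(items) - window + 1):
        block = items[i:i + window]
        if block[-1] - block[0] != window - 1:
            continue  # gap in item numbers, so these are not consecutive items
        if sum(1 for n in block if not responses[n]) >= max_errors:
            return block[-1]
    return None


def find_basal(responses: Dict[int, bool], upper: int,
               run: int = 5) -> Optional[int]:
    """First item of the highest run of `run` consecutive correct items
    at or below `upper`, or None if no such run exists."""
    basal, streak, prev = None, 0, None
    for n in sorted(k for k in responses if k <= upper):
        if responses[n]:
            consecutive = prev is not None and n == prev + 1
            streak = streak + 1 if consecutive else 1
        else:
            streak = 0
        prev = n
        if streak >= run:
            basal = n - run + 1  # later (higher) runs overwrite earlier ones
    return basal


def raw_score(responses: Dict[int, bool]) -> Optional[int]:
    """HYPOTHETICAL scoring rule (not stated in the review): every item
    below the basal is credited, so raw score = ceiling item - errors."""
    ceiling = find_ceiling(responses) or max(responses)
    basal = find_basal(responses, ceiling)
    if basal is None:
        return None  # no basal established in this record
    errors = sum(1 for n, ok in responses.items()
                 if basal <= n <= ceiling and not ok)
    return ceiling - errors


def confidence_band(score: float, sem: float = 5.8,
                    level: int = 95) -> Tuple[float, float]:
    """Obtained score plus or minus z * SEM at the given confidence level."""
    half_width = Z_FOR_LEVEL[level] * sem
    return score - half_width, score + half_width


if __name__ == "__main__":
    # Hypothetical record starting at item 20: items 20-29 correct, then mostly errors.
    record = {n: True for n in range(20, 30)}
    record.update({30: False, 31: True, 32: False, 33: False,
                   34: False, 35: False})
    print(find_ceiling(record))      # 35
    print(find_basal(record, 35))    # 25
    print(raw_score(record))         # 30
    # Hypothetical total-test raw score of 200 at the 95% level.
    print(confidence_band(200, level=95))
```

Because each later subtest's starting point depends on the previous subtest's raw score, a calculation like `raw_score` has to happen between subtests, which is the pause this review later lists among the test's drawbacks.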
Reliability

Split-Half Reliability: Calculated by correlating raw scores on the odd-numbered items with raw scores on the even-numbered items. Coefficients ranged from .95 to .98 by grade and from .93 to .99 by age.

Kuder-Richardson Reliability: For all six subtests, coefficients ranged from .94 to .98 by grade and from .95 to .99 by age.

Test-Retest Reliability: To obtain these data, 50 subjects in kindergarten and in grades 2, 4, 6, 8, and 10 were randomly selected and tested twice, with an interval of two to four weeks. Coefficients ranged from .84 to .96 by grade and from .90 to .96 by age.

Item Response Theory Reliability: This reliability was based on estimated raw scores for both age and grade. Coefficients ranged from .96 to .99 by grade and from .96 to .99 by age.

Validity

Content Validity: The Kuder-Richardson and split-half reliability data helped make the case for content validity. The PIAT-R manual also describes in detail how validity was examined for every subtest, and the developers researched extensively to make sure each subtest's content accurately measures what it claims to measure.

Construct Validity: Construct validity was supported in several ways. The first is developmental change: achievement scores are expected to increase with age and grade, and the PIAT-R raw score means do increase with both grade level and age level. The second is correlation with other tests. The total mean correlation between the PIAT-R and the PPVT-R (Peabody Picture Vocabulary Test-Revised) was .72. The PIAT-R was also correlated with the original PIAT, with coefficients ranging from .83 to .97 across grades and ages. Factor analysis provided further support: subtest intercorrelations ranged from .61 to .95 by grade and from .66 to .96 by age.
Classroom uses

Suggested by Authors: The test measures the functional knowledge and abilities seen in the educational setting. The authors suggest using it when a survey of scholastic achievement is needed, and it can also serve as a diagnostic instrument for a subject's achievement level. Many kinds of school professionals can use the test in a variety of settings. It provides information on students' strengths and weaknesses and on their behavior in testing situations. According to the manual, the test offers insight into how a student learns and handles school subjects, which in turn helps guide intervention that targets the student's weaknesses. The test can also be used outside the school system, for example to measure the achievement of a candidate for a job or training program.

My Opinion of Appropriate Uses: I believe this test would be great for determining a student's overall academic level. Given enough time, you could test every student at the beginning of the year to see where each one stands in every subject area. However, that seems very unlikely because of the length of the test. It would be valuable in the early stages of intervention (RTI), when trying to figure out why a student is falling behind. It could also be used to measure progress; however, once you find exactly where the student is struggling, I believe you would give an assessment tailored to that subject area. I believe this test is best used to get an initial picture of a student's strengths and weaknesses, and from there to choose further tests or a plan of intervention.

Desirable Uses
It measures a variety of content areas.
It gives an overall picture of a student's ability, strengths, and weaknesses.
It has a strong norm reference, with a large and varied sample.
It is a great way to assess a student's total academic knowledge.
Reliability coefficients averaged in the .90s.
A lot of work seemed to go into making sure each subtest had strong validity.

Undesirable Uses
The norm sample did not include children with special needs (special education classes were excluded).
No color in the test booklet.
Long test.
No alternate form (apart from Written Expression).
Old test.
A fairly high standard error of measurement compared with other tests.
Confusing instructions: the book says the starting point is determined by chronological age, but the testing booklet says it is determined by grade.
The examiner has to stall between subtests to count the raw score, and students may lose focus during this time.