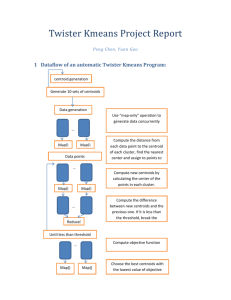


DIGITAL SIGNAL PROCESSING
Final Project 2007/08
Irene Moreno González 100039158
José Manuel Camacho Camacho 100038938
DSP08-G95
IMG/JMCC DSP08-G95

Table of Contents
Visualisation of Data
Linear Classifiers
Single Perceptron with ADALINE Learning Rule
Sequential Gradient Rule & Soft Activation
Non-Linear, Non-Parametric Classification
Reducing Costs via Clustering

VISUALISATION OF DATA
In order to have a general view of the problem, the cloud of points is represented first, with each colour showing the class the data belongs to.

LINEAR CLASSIFIERS
Single Perceptron with ADALINE Learning Rule
This machine has the same structure as the hard SLP (single-layer perceptron). The structure that will be used is as follows:

[Figure: single-layer perceptron. The inputs x1, x2 and a constant input +1 are weighted by w1, w2 and w0, summed into z, and the decider produces the output o.]

$$\mathbf{x} = [1,\ x_1,\ x_2]^T, \qquad \mathbf{w} = [w_0,\ w_1,\ w_2]^T, \qquad z = \mathbf{w}^T\mathbf{x}, \qquad o = \operatorname{sign}(z)$$

Given a set of already classified samples, the perceptron can be trained with the Widrow-Hoff algorithm, which applies the error measured at the input of the decider (that is, on z rather than on o):

$$\mathbf{w}_{k+1} = \mathbf{w}_k + \mu\,\left(d_k - \mathbf{w}_k^T\mathbf{x}_k\right)\mathbf{x}_k$$

where $d_k \in \{-1, +1\}$ is the label of the training sample $\mathbf{x}_k$ and $\mu$ is the step. In each iteration, the weights are re-calculated as shown above, using the training samples as inputs. The step controls the speed of convergence of the algorithm. Some design decisions were taken during the implementation:
- Weights are initialised randomly; for this reason, even if the training samples and the number of iterations do not change, different results are obtained each time the algorithm is run.
- A pocket algorithm with ratchet is used to obtain the optimum weights.
Although the weights are calculated with the training samples as inputs, it is the validation set that is used to select the optimum weights to keep. In this way, better generalisation is reached, since the perceptron receives more information about the distribution of the data; the disadvantage is the increase in computational complexity, since the error over the whole validation set has to be computed after each re-calculation of the weights.
- In each iteration, both the training and validation sets are randomly reordered, but the initial weights are no longer random: those obtained in the previous iteration are used. 100 iterations are run, although the algorithm converges much earlier, as shown later.
- Although it is important to know which samples are correctly classified, the MSE rather than the error percentage is considered during training, since not only the success rate has to be optimised: an intermediate position of the border between the clouds of patterns is also sought.
- The value of the step was set by trial and error: different steps were tried until the best results were reached. For the ADALINE algorithm, a final step of 0.0001 was chosen.

Step: 0.0001
Weights: w0 = 0.196, w1 = 0.519, w2 = 0.074
Error rate|TRN = 3.23 %
Error rate|VAL = 3.31 %
Error rate|TEST = 3.15 %

To obtain the error rate for each set, every point is multiplied by the weights and the decision is based on the sign of the result. The decision border and the error rates obtained are shown above. The next plot shows the evolution of the validation error rate through the iterations of the algorithm.

The fact that the test error rate is lower than the training and validation ones is not significant: the classification is linear, so depending on the position of the clouds of data in each set, the problem can be more or less separable.
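The Widrow-Hoff training with a validation-based pocket described above can be sketched in Python with NumPy. This is a minimal sketch under stated assumptions, not the authors' code: the names `augment`, `train_adaline_pocket` and `error_rate` are hypothetical, labels are assumed to be in {-1, +1}, one "iteration" is assumed to be one pass over the randomly reordered training set, and the pocket is updated whenever the validation MSE improves after a weight update.

```python
import numpy as np

def augment(X):
    """Prepend a constant 1 to every sample so that w[0] acts as the bias w0."""
    return np.hstack([np.ones((len(X), 1)), X])

def train_adaline_pocket(X_trn, d_trn, X_val, d_val, mu=1e-4, n_iter=100, seed=None):
    """Widrow-Hoff (ADALINE) training; the pocket keeps the weights with the
    lowest validation MSE seen so far. Labels d_* are assumed to be -1/+1."""
    rng = np.random.default_rng(seed)
    A_trn, A_val = augment(X_trn), augment(X_val)
    w = rng.standard_normal(A_trn.shape[1])          # random initial weights
    pocket_w = w.copy()
    pocket_mse = np.mean((d_val - A_val @ w) ** 2)
    for _ in range(n_iter):
        # Each iteration visits the training samples in a new random order,
        # starting from the weights left by the previous iteration.
        for i in rng.permutation(len(A_trn)):
            z = A_trn[i] @ w                         # value at the input of the decider
            w = w + mu * (d_trn[i] - z) * A_trn[i]   # Widrow-Hoff update
            # Validation MSE after every update: the extra cost of the pocket.
            mse = np.mean((d_val - A_val @ w) ** 2)
            if mse < pocket_mse:
                pocket_w, pocket_mse = w.copy(), mse
    return pocket_w, pocket_mse

def error_rate(w, X, d):
    """Fraction of samples whose sign(w^T x) differs from the label."""
    return np.mean(np.sign(augment(X) @ w) != d)
```

Calling `train_adaline_pocket(X_trn, d_trn, X_val, d_val, mu=1e-4)` mirrors the step of 0.0001 reported above. Evaluating the whole validation set after every single update is what makes this pocket variant noticeably slower than plain ADALINE.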
As pointed out before, these results depend on the initialisation of the weights, since it follows a random procedure. As can be seen from the figures, the algorithm reaches its minimum before the 15th iteration, so the weights stored in the pocket are no longer renewed from that moment on (right-hand figure). The MSE of the validation set starts increasing after that minimum (left-hand figure). This algorithm does not minimise the number of errors; instead, it converges towards the minimum of the MSE.

Sequential Gradient Rule & Soft Activation
The advantage of the sequential gradient rule is that the hard decision is replaced by a differentiable approximation; in this case, a soft hyperbolic tangent activation, $o = \tanh(z)$, was used. In this way, a Least Mean Squares (LMS) algorithm can be implemented for the re-calculation of the weights:

$$\mathbf{w}_{k+1} = \mathbf{w}_k + \mu\,(d_k - o_k)\,(1 - o_k^2)\,\mathbf{x}_k, \qquad o_k = \tanh(\mathbf{w}_k^T\mathbf{x}_k)$$

where $\mathbf{x}_k = [1,\ x_1,\ x_2]^T$ is the augmented training sample, $d_k \in \{-1, +1\}$ its label and $\mu$ the step. Parameters and design decisions remain the same as in the ADALINE case, but this learning rule behaves better during training, as shown in the following graphs and percentages. A step of 0.05 was used.

Step: 0.05
Weights: w0 = 3.262, w1 = 8.834, w2 = -1.292
Error rate|TRN = 3.23 %
Error rate|VAL = 3.31 %
Error rate|TEST = 3.15 %

As can be seen in the figures, convergence is fast with this rule. The algorithm reaches its minimum around the 20th iteration, but the re-calculation of the weights is more stable than in the ADALINE case, while the final error rates are the same.

NON-LINEAR, NON-PARAMETRIC CLASSIFICATION
A k-NN classifier was implemented as required in the guide. The following sections describe the classifier's properties in terms of error rates, expressiveness and computational cost; a comparison with the linear classifier results is provided as well.

a. Plot the training and validation classification error rates of this classifier as a function of k, for k = 1, 3, 5, 7, …, 25.

Fig. 1 Training and Validation Error Rates (as percentage) for each value of ‘k’, for k=1,3…25

At first glance, the training error rates are slightly lower than the validation ones for every value of k. This is because, when a training sample is classified, its k nearest neighbours always include at least one training point of the true class: the sample itself. This does not hold for validation samples; moreover, if the training points are not representative enough, the method may generalise badly and the training error rates are not a reliable estimate.

The validation set was used to estimate the optimum value of k, which resulted in k = 9. This value is employed to compute the results for the test set later on.

b. Depict the decision borders for the classifiers with k = 1, 5, 25.

Fig. 3, Fig. 2 and Fig. 4 show the classification border for three different values of k. In terms of expressiveness, this method has better properties than the linear classifier: error rates are reduced thanks to a better fit of the classification borders to the data spread all over the plane, especially the data on the right-hand side, which were always missed by the linear border.

Given a training set from which to build a classification border, the effect of the choice of k is discussed here. The 1-NN classifier has a null training error rate: as can be seen, its border separates the training set flawlessly, creating very expressive shapes. Its validation error rate, however, is not the best, so it can be concluded that the 1-NN classifier over-fits the training set, leading to rather poor generalisation in validation (and presumably in test as well).

Fig. 3 Classification Border for the 1-NN Classifier

The 25-NN classifier has exactly the opposite behaviour. Each classification takes so many training points into account that many of them introduce wrong information into the classifier. In fact, this classifier fits neither the training nor the validation set well.

In between lies the 5-NN classifier.
It does not reach such a low training error rate, but its classification border is less convoluted than that of the 1-NN classifier. In principle, this can lead to better generalisation and lower sensitivity to outliers. Furthermore, the best choice of k clearly depends on how close to or far from each other the training points of different classes are.

Fig. 2 Classification Border for the 5-NN Classifier

In order to obtain the results for this part of the assignment, several simulations with the implemented k-NN classifier were carried out, and the time consumption was much higher than in linear classification. If $c_d$ operations are needed to compute the distance between one sample and one training point, and k operations to select the k closest points and count their labels, classifying n samples against a training set of n points requires $n\,(n\,c_d + k)$ operations, i.e. a cost of order $O(n^2)$. The same computational cost is required for validation and test if those sets have a similar size to the training set.

Fig. 4 25-NN’s Classification Border

c. Obtain the optimum value for k according to the validation set, and give the test classification error rate that would be obtained in that case.

As mentioned, the value of k that minimises the validation error rate is k = 9. In that case, the test error rate of the 9-NN classifier is lower than that of the linear classifier, in agreement with its greater expressiveness. The test error of this classifier is slightly higher than the training and validation ones, which may be due to the dependence of the classification on the particular training set.

REDUCING COSTS VIA CLUSTERING
Implement the classifiers corresponding to kC = 1, 2, 3, …, 50, and compute their training and validation classification error rates.

Fig. 5 Training (orange) and Validation (blue) error rates, expressed as missed samples out of set size on the vertical axis, for kC = 1, 2, …, 50

One run of the algorithm is carried out and plotted for each possible number of centroids.
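The clustering classifier evaluated for each kC can be sketched as follows: k-means is run separately on the samples of each class, and a new sample takes the label of its closest centroid. This is a hedged sketch, not the authors' implementation; the helper names `kmeans`, `fit_centroids` and `predict_nearest_centroid` are hypothetical, the centroids are assumed to be initialised at randomly chosen samples of the class, and an empty cluster simply keeps its previous position, consistent with the report's note that empty centroids are not re-allocated.

```python
import numpy as np

def kmeans(X, k, rng, n_iter=100):
    """Plain k-means (Lloyd iterations) on the rows of X."""
    # Random initialisation: k distinct samples become the starting centroids.
    C = X[rng.choice(len(X), size=k, replace=False)].astype(float)
    for _ in range(n_iter):
        # Squared Euclidean distance from every sample to every centroid.
        dist = ((X[:, None, :] - C[None, :, :]) ** 2).sum(axis=2)
        labels = dist.argmin(axis=1)
        C_new = C.copy()
        for j in range(k):
            members = X[labels == j]
            if len(members) > 0:   # an empty centroid keeps its old position
                C_new[j] = members.mean(axis=0)
        if np.allclose(C_new, C):  # converged: no centroid moved
            break
        C = C_new
    return C

def fit_centroids(X, y, k_c, seed=None):
    """Run k-means separately on each class; return centroids and their labels."""
    rng = np.random.default_rng(seed)
    classes = np.unique(y)
    cents = [kmeans(X[y == c], k_c, rng) for c in classes]
    labs = [np.full(k_c, c) for c in classes]
    return np.vstack(cents), np.concatenate(labs)

def predict_nearest_centroid(X, cents, labs):
    """Each sample takes the label of its closest centroid."""
    dist = ((X[:, None, :] - cents[None, :, :]) ** 2).sum(axis=2)
    return labs[dist.argmin(axis=1)]
```

With `k_c = 1` each centroid settles on the centre of mass of its class after the first update. Classifying n samples then needs one distance per sample and centroid, instead of one per sample and training point as in k-NN.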
As can be seen from the figure, the error rates reach reasonable values when more than 3 centroids per class are used. Because of the shape of the cloud of points, three or fewer centroids are not enough to represent the data. In addition, the training error rates are always lower than the validation ones, since the centroids seek their optimum position with respect to the training set.

Select the value kC* that minimizes the following objective function

$$J(k_C) = T_e + \log_{10}(k_C)$$

where $T_e$ is the validation error rate. Obtain the test classification error rate when using kC*, and plot the classification border of this classifier.

Fig. 6 Objective Function J(kC) for kC = 1, 2, …, 50

In order to obtain the optimum number of centroids as a compromise between the error rate and the complexity of the problem, the objective function J is computed for each value of kC. Its minimum is reached at kC* = 20, and the test error is computed for this case.

This objective function avoids large numbers of centroids. If $T_e$ alone were used as the figure of merit, kC would tend towards the number of training points, because the error rates would converge to the 1-NN error, and the computational cost would therefore not be reduced. Adding the penalty $\log_{10}(k_C)$ to the error percentage makes the optimum kC depend not only on the error rate but also on the number of centroids, so the computational cost is kept low.

Fig. 7 shows the test points and the classification border for kC = 20. The border adapts quite well to the samples; in fact, it is the set of points equidistant from the closest centroids of different classes.

An issue found during the development of the algorithm is that, for relatively high values of kC, some centroids received no samples after a few iterations, since all the nearby samples had found a closer centroid to join. Our implementation does not re-allocate these centroids.
Several adjustments could, however, be made to the algorithm to handle these empty ("dummy") centroids: they can be assigned a new random position (nudging them), or the cluster with the greatest dispersion can be split into two groups, producing two new clusters.

Repeat now the experiment 100 times, and give the following results: Plot the average value and the standard deviation of the training and validation error rates as a function of kC.

Since the centroids are initialised randomly, different error rates and performance are obtained from different runs of the algorithm. For this reason, a good way to obtain reliable results is to repeat the experiment a given number of times and extract the average value and standard deviation of the error rates. In this case, 100 runs were performed, and the figures below show the results:

Fig. 8 Mean and Standard Deviation of Error Rates (as fractions, not percentages) for different values of kC. Although the right-hand graph is labelled v, it shows the standard deviation σ, not the variance.

From the figures it can be concluded that:
- There is a strong dependence of the error rates on the initialisation when a small number of centroids (fewer than 10) is used. This is because the data are spread all over the plane, so a few centroids cannot represent the data set; a bad initialisation may make the error grow even more (large confidence interval).
- For higher values of kC, both the mean and the standard deviation decrease steadily towards the 1-NN behaviour. As discussed later, these results show that the relatively small improvement in the error rate for large kC may not be enough to justify the increased cost of employing so many centroids.
- When there is one centroid per class, the algorithm has the worst performance. Convergence is reached at the first iteration, since each centroid is simply the centre of mass of all the samples of its class. Hence, the error is the same in all repetitions and its standard deviation is zero: the centroids are always the same, so the error rate is deterministic.

Using a histogram, give an approximate representation of the distribution of the 100 values obtained for kC*. Obtain the average value for kC*, and for its corresponding test error rate.

To carry out this section, the optimum kC* obtained from each initialisation of the centroids is viewed as a realisation of a discrete random variable. Strictly speaking, the central limit theorem guarantees that the average of the 100 values is approximately Gaussian, whatever the distribution of each value; the histogram itself is approximated here by a Gaussian p.d.f. The histogram shows the results obtained in the 100 runs: in each run a value of kC* turned out to be optimal, and the histogram represents the frequency of the selected values. Fitting a Gaussian p.d.f. to the displayed histogram yields an estimate of the expected value of kC*.

These results are consistent with the previous analysis. The average error is higher than the 1-NN error. Furthermore, the optimum kC lies at the beginning of the flat region of Fig. 8 where, as mentioned, good error properties can be achieved without increasing the complexity of the algorithm.

Regarding computational cost, as in the previous section, if $c_d$ is the cost of computing the Euclidean distance between two points and k is the cost of finding the closest centroid among k centroids, the total cost of classifying an entire n-sample set is

$$n\,(k\,c_d + k) = n\,k\,(c_d + 1).$$

Hence, for a fixed number of centroids k, the cost grows as $O(n)$. Thus, once the centroids have been computed (training itself may not be cheaper), this clustering approach reduces the classification cost to a linear cost in n, instead of the quadratic cost of k-NN, providing more scalability while keeping good performance in terms of errors. As mentioned, kC also affects the complexity.
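The statistics of this section (mean and standard deviation of the validation error for each kC, the optimum kC* of each run under J(kC) = Te + log10(kC), and the histogram of kC*) can be computed from a table of per-run error rates as in the following sketch. The function names `select_kc` and `summarise_runs` and the input layout (one row per run, one column per kC value, errors in percent) are assumptions for illustration, not the authors' code.

```python
import numpy as np

def select_kc(val_err_pct, kc_values):
    """kC minimising J(kC) = Te + log10(kC) for one run (Te assumed in percent)."""
    kc_values = np.asarray(kc_values)
    J = np.asarray(val_err_pct, dtype=float) + np.log10(kc_values)
    return int(kc_values[np.argmin(J)])

def summarise_runs(val_err_runs, kc_values):
    """val_err_runs: shape (n_runs, len(kc_values)), validation errors in percent."""
    val_err_runs = np.asarray(val_err_runs, dtype=float)
    kc_values = np.asarray(kc_values)
    mean_err = val_err_runs.mean(axis=0)   # average error curve versus kC
    std_err = val_err_runs.std(axis=0)     # standard deviation (sigma), not variance
    kc_star = np.array([select_kc(row, kc_values) for row in val_err_runs])
    # Histogram of kC*: counts[i] = number of runs whose optimum was kc_values[i].
    counts = np.array([np.sum(kc_star == k) for k in kc_values])
    return mean_err, std_err, kc_star, counts
```

The average kC* is `kc_star.mean()`; the maximum-likelihood Gaussian fitted to the 100 values has mean `kc_star.mean()` and standard deviation `kc_star.std()`.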
The classification border of one classifier, chosen at random among the 100 runs and using the optimum kC estimated above, is shown below.

CONCLUSIONS TO THIS REPORT
Linear Classifiers: they provide a simple tool for data classification, with reasonable performance that can be enough for certain applications. Their main drawbacks are the lack of expressiveness (linear borders) and the sensitivity to the step size. Soft activation has shown better behaviour (the same error rates, with a more stable re-calculation of the weights).
The k-NN classifier gave the best performance for this classification problem. Its ability to depict complex classification borders provided the best classification pattern, and a good choice of k is critical for good generalisation. The main shortcoming of this algorithm is its very high computational cost, because it sweeps the whole training set for each sample in order to choose the most suitable class.
Clustering classification makes it possible, in this particular case, to set a trade-off between computational cost and accuracy. The number of centroids calculated for each class during training determines the accuracy of the results. A small number of centroids means a low computational cost but poor performance, whereas in the extreme case where every training point becomes a centroid, the same performance as the 1-NN algorithm is achieved, at the already mentioned computational cost.