Dynamic Interface Design

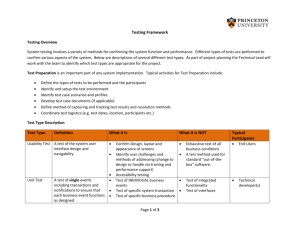

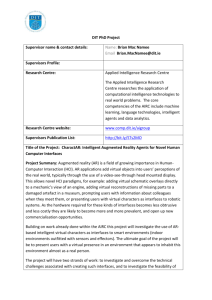

Edmond Yee
Advisor: Professor David Kirsh

General Goal

Quite a few well-regarded interface theories from the 1980s until now have failed to stress the importance of the user’s role in interface design.1 In particular, they assume that all users have constant expectations about what to do with an interface. Yet whether an interface is successful or ‘direct’ largely depends on the user’s life experience, age, cultural background, and prior experience with that interface or a similar one. This effect is especially common in interfaces built for complex task domains. For example, a user who is an expert in squash may find the feel of a tennis racket completely natural, whereas a user unfamiliar with racket sports may find the feel of a tennis racket novel and unnatural. The problem is that most interfaces for complex task domains are built for experts with a sophisticated conceptual model (and therefore offer higher expressive capacity), which makes them difficult to learn. The goal here is to give an account of how to effectively create the feeling of directness between a particular interface and a user when the user’s conceptual model is always changing. This paper explores that question.

Direct Manipulation Interface

The idea of direct manipulation was given an influential account by Edwin Hutchins et al. in the paper “Direct Manipulation Interfaces” (1985). The paper was originally written to address design techniques for what is now known as the graphical user interface, but it raises important ideas about interface design in general that should not be overlooked. The fundamental goal of direct manipulation is to create an interface that gives rise to a feeling of directness in its users. An interface can achieve this goal by reducing the distance between what the user has in mind to achieve a goal and the way the interface is designed to achieve that goal.
That is, the closer the match between the user’s expectations and the interface design, the shorter the distance and the higher the directness. The distance also refers to the gulf between the user’s intention and the meaning of expressions in the interface. Specifically, the levels of description must be matched in order to reduce the size of the gulf. Level of description refers to the level of detail at which a task is described. For instance, the highest level of description of a task might be “writing a paper”, a lower level would be typing in a word processing program, and the lowest level could be the changes of bits inside the electronics of the computer.

There are two types of distance: 1) semantic and 2) articulatory. Semantic distance is the distance between the meaning of an expression of a task in the interface language and the meaning of that task in the user’s mind. For instance, the semantic distance of an interface that is supposed to show the water level in a cup depends on whether it graphically illustrates the rate of change of the water or jumps instantly from one water level to another. Articulatory distance refers to the distance between the meaning of an expression and its form. In the cup example, articulatory distance concerns the representation of the cup: should it be triangular, rectangular, or cylindrical? If the cup is drawn in a ‘cup’ shape that most people recognize and filled with animated ‘water’, the system will be articulatorily more direct.

1. Literature including “Direct Manipulation Interfaces”, Hutchins et al. (1985); “Emerging Frameworks for TUIs”, Ullmer and Ishii (2001); “A Taxonomy for and Analysis of Tangible Interfaces” (2004).
2. “Affordance, Conventions and Design” (1999), issue of Interactions, pp. 38-43.
3. There is no study proving this, but it should be a reliable prediction based on the theory of evolution.
4. Video from http://expertvillage.com

According to Hutchins et al., direct engagement concerns whether the user engages in direct interaction with the object of interest. This requires three things. 1) The input and output domains must be seamlessly integrated, so that driving the interface feels the same as manipulating the actual object. For instance, pressing a GUI button by moving a mouse and clicking is less engaging than pressing the same button on a touch screen; in the latter case there is no (or at least a very small) intermediary layer between input and output. 2) Semantic and articulatory distances should be minimized as much as possible. 3) There should be no delay in a directly engaging system unless the delay is suggested by knowledge of the system itself.

What does Direct Manipulation Interface not answer?

The problem with the direct manipulation interface is that the theory seems to ignore the importance of the role that the user plays in an interface. Its authors define the direct manipulation principle as a feeling of directness that the user gets, which means that the user’s perception is crucial to this type of interface. There are two general problems with the theory: 1) the feeling of directness is subjective, so the fact that one person finds an interface direct does not necessarily mean that another person will, and 2) the feeling of directness can change over time within a single person. As mentioned before, one of the most important goals in direct manipulation is to match the level of description between the user and the interface.
This essentially means that either the user or the interface must make the effort to bring the levels of description closer together. Because a user must participate in using an interface, the user must always make some of that effort. Hutchins et al. acknowledge this fact by claiming that “the user must generate some information-processing structure to span the gulf” (Hutchins 1985). They go on to claim that a good interface must make every effort to bridge the gulf and thus minimize what the user has to do. However, a user’s level of description of a task is not constant, and different users think of the same task at different levels. For instance, a professional tennis player thinks of a tennis racket differently from a novice who has never played tennis before. How can a designer ensure that the design of a tennis racket matches the levels of these two different players? He cannot (or at least not simply). A tennis racket is simply designed to match the level of description of professionals, which is why it is difficult for a novice to hit a ball with it. Thus, it is impossible for one static interface to match the level of description in everyone’s mind.

Hutchins also mentions that automated behavior does not reduce the semantic distance, because the user has to do too much work to bridge the gulf. But why not? Automated behavior is crucial to how humans learn to use tools and interfaces. Driving, writing, typing, playing the piano, riding a bike, and even walking are all results of automated behavior. More importantly, the expressive power of each of these interfaces would be very limited without automatization. Imagine how many songs (or notes) one could play on a piano without automated behavior. Imagine what driving would be like without automatizing the skills involved.
Think about how different one’s life would be without automatizing, at a young age, those naturally born ‘interfaces’ called legs. Without automatization, the gulfs between many interfaces and their users simply could not be bridged, and those interfaces would never achieve their full expressive power. For instance, a user who has not achieved automated behavior could only use the piano to play individual notes. Continuous harmonies and a steady tempo could never be achieved, not to mention the possibility of controlling acoustic nuances. Thus, many good interfaces are designed to match the level of description that a user reaches once certain behaviors are automatized, in order to maximize the expressive power of the interface. It follows that an interface that is complicated and hard to learn at first is not necessarily a bad interface. Likewise, an easy-to-learn interface that minimizes the user’s effort may not be a good interface, since its expressive power can easily be limited. A toy piano with only 10 keys is much easier to learn and play than a standard 88-key keyboard, but a 10-key keyboard will never match the capability of an 88-key one.

Directions in solving the problems

However, the direct manipulation theory is not something that one can simply set aside. After all, its authors are the pioneers who recognized the importance of matching the level of description between a user’s intention and the functions of an interface in order to minimize the gulf and maximize the expressive power and possibilities.
But achieving this goal, that is, matching the levels of description, is not as simple as they suggest. As mentioned above, intentions vary among people and within a single person, so it is simply impossible to design a static interface capable of matching all levels of description. For example, an interface can either be built to match a low level of description (like a guitar), which maximizes the possibilities (and expressive power) but minimizes the feeling of directness for first-time players (making it really hard to learn), or be built as a high-level interface that is relatively easy to learn but has very limited capabilities (e.g. the specially designed guitar in the Guitar Hero game series).

One way to solve this problem is to create an interface that dynamically changes its design to match the user’s intention and level of description. The most difficult part of building such an interface is deciding where the highest level of description should be set. That is, how can a designer ensure that everyone immediately experiences a feeling of directness at that level? To achieve that, one must first find out what behavior or expectation is immediately obvious and natural to all people, with very few exceptions (people with physical or mental disabilities, young children, etc.). The study of the affordances of objects and of natural mapping will help us identify this primitive nature of interaction.

Tangible User Interface

Before starting the discussion of affordance, however, I will introduce a more recent interface theory. The Tangible User Interface (TUI) was first formally introduced in the “Tangible Bits” paper by Hiroshi Ishii and Brygg Ullmer (1997). In their view, traditional graphical user interfaces (GUIs) separate people from the physical world that we have lived in and interacted with for millions of years. Thus, it is necessary to bring elements of physical interactivity and the physical form of objects back into user interface design, and to go beyond pure GUIs.
Rather than relying heavily on visual perception as GUIs do, TUIs put a much stronger emphasis on taking advantage of all human sensory experience. It is important to note that the Tangible User Interface is not about making computers ubiquitous. Rather, it brings up two important concepts: 1) using people’s natural legibility in interacting with tangible systems when designing interfaces, and 2) “taking advantages of multiple senses and the multi-modality of human interactions with the real world” (Ishii 1997). Note that multi-modal is not necessarily the same as multi-sensory. For instance, an interface that takes advantage of the human ability to distinguish colors and shapes is an example of a multi-modal system but not a multi-sensory one. According to Ishii (1997), an example of natural legibility is the fact that we know what to expect of a flashlight. This idea of natural legibility is basically a subset of affordance. While the concept is very powerful when defined correctly, the authors made a big mistake when implementing it. I will explain this later.

Taxonomy of TUIs

“A Taxonomy for and Analysis of Tangible Interfaces” by Fishkin (2004) is an important paper because it provides one of the best frameworks for tangible interfaces. The paper starts out by giving a very broad definition of TUIs and then attempts to narrow it down.
However, Fishkin found narrowing the definition of tangible interfaces problematic, because many classical “digital desk”-type interfaces are not identified as TUIs while some interfaces with exactly the same configuration are said to be tangible interfaces. This contradiction makes it meaningless to provide a binary (yes/no) framework for deciding whether or not a system is a tangible interface. Instead, he found it useful to view tangibility as a two-dimensional, multi-valued taxonomy. The two dimensions are embodiment and metaphor, and each is divided into four levels. It is important to note that increasing tangibility does not necessarily yield a better system.

Embodiment is defined as the degree of proximity between the input focus and the output focus of a system. The four levels, from highest to lowest embodiment (tangibility), are full, nearby, environmental and distant (see fig. 1). Metaphor is defined as a measure of how closely the user’s action in manipulating a particular system is analogous to an action in the real world; the stronger the analogy, the higher the tangibility. Metaphor is measured in only two ways: noun and verb. Fishkin uses these two values because, based on studies in cognitive psychology, he considers noun and verb to be concepts innate to human beings. The four levels are built from these two scales: none, noun or verb, noun and verb, and full (see fig. 1).

This taxonomy, however, suffers from almost exactly the same problem as the Direct Manipulation Interface theory. Both theories assume that the users of an interface have constant conceptual models. As Fishkin (2004) writes when attempting to define embodiment: “To what extent does the user think of the states of the system as being ‘inside’ the object they are manipulating?
To what extent does the user think of the state of computation as being embodied within a particular physical housing?” It is clear that Fishkin himself understands that his taxonomy of TUIs depends almost entirely on the user’s conceptual model (or perceived affordance, in Norman’s terms) of an object. If this is true, how can one accurately identify the embodiment and metaphor levels of an interface or object? One could certainly attempt it, but the identification may suffer heavily from personal bias, which may lead to serious design problems. For instance, as an interface designer I would have high-level knowledge of how a certain system operates, and I might therefore think that the system operates at the ‘full’ metaphor level when that is not the case for most people. Fishkin (2004) defines the full metaphor level by claiming that “the user need make no analogy at all—to their mind, the virtual system is the physical system; they manipulate an object and the world changes in the desired way”. Notice that this is almost the same logic proposed in “Direct Manipulation Interfaces” (Hutchins 1985). Though both theories recognize the importance of the user’s conceptual model, they seem to build their accounts while almost completely ignoring it. Although much research on TUIs suffers from this same problem, the ideas of designing interfaces based on natural legibility and of taking full advantage of the human senses are powerful concepts that should not be overlooked.
I will now discuss the concept of natural legibility in terms of affordance as defined by Donald Norman in 1988.

General Concept of Affordance

According to “The Design of Everyday Things” by Donald Norman (1988), the affordance of an object refers to the possible actions embedded in and suggested by the object itself; to quote Norman, “the perceived and actual properties of the thing, primarily those fundamental properties that determine just how the thing could possibly be used” (Norman 1988, p. 9). As mentioned in the book, a useful (but informal) way to identify the affordance of an object is to complete statements like “this is for…” or “this feature is for…”. Some fundamental properties of objects can be very strong. A piece of glass affords seeing through; there is no way that a person looking at it could avoid seeing through it. The user can either not look at it or see through it. However, he cannot physically pass through that piece of glass. Fundamental properties of this type can be used as natural guidance (or constraints) that, when carefully exploited, can match the highest level of language in an interface, one that anyone can easily understand. Note that although the idea of affordance is used to describe ‘objects’, it is logical to apply it to the design of physical interfaces, because a physical interface must itself be an object. This will be discussed further later in this proposal.

Perceived Affordance vs. Real/Physical Affordance

Knowing the affordance of an object is always helpful, but it is important to note that most affordances, unlike that of the piece of glass mentioned above, are not naturally perceived in the same way by every user. That is, cultural conventions are very often involved in identifying the affordances of objects or interfaces. For instance, the affordance of twisting the cap of a water bottle counterclockwise to remove it is a cultural convention. This is a learned affordance.
That is, the value of the affordance of this little piece of plastic on a bottle is not constant but user dependent. If this is the case, affordance simply reintroduces the problem I described above without really attempting to solve it. Again, the goal is to find a guideline that helps us identify a primitive nature of interaction that is stable enough that everyone knows what to do.

In May 1999, Norman wrote an article clarifying the concept of affordance.2 He divided the term into perceived affordance and real affordance. According to him, most object affordances that people have identified are perceived affordances, which essentially refer to affordances of convention, such as the affordance of a plastic cap on a typical water bottle. More precisely, perceived affordances are what a particular user perceives or expects, and they can be independent of the real/physical affordances of an object. Physical affordance, in contrast, is the natural affordance of an object, which must still be perceived in some way. A good example is the ‘see-through’ affordance of the piece of glass mentioned above. Another example is the old-school 3.5-inch floppy disk, which can physically be inserted into a floppy drive in one and only one of eight possible ways (there are two sets of four possible directions, since a floppy disk has two sides).
Physical/real affordance is a very important and powerful concept, since it sets aside (or avoids) users’ subjective views of an object or physical interface. If real affordance is properly exploited, it should be possible to create an interface that users from any part of the world, with any cultural background, will naturally and instantly know how to use. In the paper “Technology Affordances”, William Gaver (2001) suggests a similar idea, noting that “distinguishing affordances from perceptual information about them is useful in understanding ease of use” (Gaver 2001). However, it is still difficult for a designer to accurately identify and make use of the real affordances of an object.

Constraint

One effective way to take advantage of affordance is to shape it by introducing constraints. According to Norman (1988), there are four kinds of constraint: physical, semantic, cultural and logical. Physical constraint is closely connected to real/physical affordance. Since human beings have lived in and interacted with this physical world for millions of years, every one of us is an expert in the physical laws and rules of the earth. For instance, everyone knows what to expect when a ball is released above the ground: the ball cannot fail to fall unless some external force acts against gravity. This is one example of the many physical laws that designers can use to create physical constraints on objects or interfaces, limiting the possibilities for action. Physical constraints provide extremely strong cues about how to interact with an object or an interface, without words or instructions. Consider the 3.5-inch floppy disk again. Its designers purposely made the disk slightly rectangular so that it is impossible to insert it sideways.
This physical constraint eliminates four of the eight possibilities. The diagonal corner and the sliding direction of the metal door rule out the remaining wrong possibilities and allow the user to insert the disk in one direction only. Introducing constraints reduces the real affordance of the floppy disk: the user must follow the constraints when inserting the disk into the drive, because the real affordance is now limited to one direction. It is important to note that these physical constraints greatly reduce the time required to learn to use a floppy drive. Imagine that none of these constraints were present: the user would have to insert the disk in up to eight different ways to discover which one lets the drive read the data on the disk, observing feedback from the computer each time. The user might eventually learn the right way to insert the disk, but it would take much more time (and training) to achieve the same goal.

Cultural constraints may not be as powerful as physical constraints, but they are also extremely useful in designing objects and interfaces. Cultural constraints are constraints perceived by users; they are the conventions of a group of people. They are closely connected to the idea of perceived affordance, since perceived affordances are the affordances that each person thinks an object has by looking at it. Cultural conventions can be useful in that they provide additional hints that limit or guide users to the real affordances bound to an object.
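
The way the floppy disk's physical constraints narrow eight orientations down to one can be checked with a small enumeration. This is only a sketch: the labels (`label_up`, `shutter`, and so on) and the two predicate functions are hypothetical names introduced for illustration, and treating the diagonal corner and the metal door as a single joint check is a simplifying assumption.

```python
from itertools import product

# Hypothetical labels for the eight ways to present the disk to the drive:
# two faces times four leading edges.
FACES = ("label_up", "label_down")
LEADING_EDGES = ("shutter", "back", "left", "right")


def shape_allows(face, edge):
    # The slightly rectangular case blocks sideways insertion.
    return edge in ("shutter", "back")


def keying_allows(face, edge):
    # Assumed joint effect of the diagonal corner and the metal door.
    return face == "label_up" and edge == "shutter"


orientations = list(product(FACES, LEADING_EDGES))
after_shape = [o for o in orientations if shape_allows(*o)]
accepted = [o for o in after_shape if keying_allows(*o)]

print(len(orientations), len(after_shape), len(accepted))  # 8 4 1
```

Each constraint is a filter on the set of possible actions, so a user facing the constrained disk never has to explore the orientations that the shape already excludes.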
Take the floppy disk example again: the little arrow on one side of the disk, right next to the sliding metal door, is an example of a cultural convention or constraint. People in American culture, and in most other cultures, understand what the arrow means and insert the disk in the direction it points. By convention they also know to insert the disk with the arrow visible and facing upward. The arrow provides a shortcut that lets people make use of the real affordance of the disk rather than testing up to eight possibilities through trial and error. However, this arrow is certainly not a good cultural constraint, since it has very low visibility. A better example is the ‘bar-like’ door handle that promotes pushing on the right side of the door, mentioned in Norman’s book (1988). In this example, the real affordance of the door is that it can be pushed on the right side. The cultural constraint is the ‘bar-like’ handle located on the right side of the door, which people perceive as a connection to the real affordance of that door.

Semantic constraints are constraints promoted by the situation or system. For example, tires should be fitted around wheels. This type of constraint is very similar to cultural constraint and arguably should be treated as a subset of it.

Logical constraints are limitations created by logical rules. For example, suppose there are five identical tires and four identical wheels, and one of the tires installed on a wheel has been damaged. It is logical to replace the damaged tire with the fifth tire, which is identical to all the others. This follows the rule that if A = B and A can be installed on C, then B can also be installed on C. Logical constraints can sometimes be as powerful as physical constraints, because logical rules always hold when their premises are true.
However, the problem with logical constraints is that people facing a logical problem are more likely to think inductively (about what is probably true) than deductively (following logical rules like the one described above) (Gray 2006). Because of this bias, it is very difficult for designers to use logical constraints in product design.

Visibility and Feedback

Visibility is extremely important in interface and object design. For an object to be perceived as having certain affordances, the first step is to make something visible. This is true whether the affordances are perceived or real. Note that, according to Norman, visibility here refers not only to visual sensation (what one can see) but also to other perceptual experience, such as hearing. A good design must convey clear visual messages that communicate the correct affordances of an object. In his essay, William Gaver (2001) describes the relationship between affordances and the quality of the perceptual information presented in a system (see fig. 2). The horizontal axis, real affordance, indicates whether a certain affordance is in fact present in a system. The vertical axis indicates whether visual or other perceptual information is present that suggests that affordance. For instance, a TV remote control with a curved piece of metal on one side and not the others provides visual information suggesting that the signal is transmitted from that side.
Here, signal transmission is one of the real affordances of the system, and if the curved metal is on the same side as the signal transmitter, this visual/perceptual information (the curved metal) supports the real affordance. Gaver calls such affordances perceptible affordances. Note that a perceptible affordance is different from a perceived affordance. In this example, most users’ expectation that the curved metal is a transmitter is the perceived affordance of the item. Again, the perceived affordance is not necessarily the same as the real affordance. If the actual signal transmitter is on the side opposite the metal piece, the perceptual information gives false hints about the real affordance. In this case, the user will aim the curved metal at the TV to get a better signal, only to find that the signal hardly gets any stronger. This is called a false affordance. A hidden affordance arises when some real affordance of a system is hidden from the user (or very hard to find); an example is an on/off switch located inside a computer. When there is no perceptual cue for an affordance that does not exist, the user simply expects that part of the system to afford nothing. Gaver calls this situation correct rejection by the user.

This taxonomy of visual/perceptual information and affordance is useful because it provides basic design guidance about what should and should not be made visible. With good visibility, users can figure out which functions a system does and does not support; they can learn what can and cannot be done with it. Good visibility also gives users immediate cues to the physical constraints of a system, so that trial and error can be avoided. Since trial and error can easily damage the system or interface, it is crucial to provide accurate hints about its capabilities.
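
Gaver's four cases, as summarized above, amount to a two-by-two lookup on whether an affordance really exists and whether perceptual information suggests it. The sketch below is one possible encoding, not Gaver's own notation: the `Case` enum, the `classify` function, and the boolean reading of each example are assumptions made for illustration.

```python
from enum import Enum


class Case(Enum):
    PERCEPTIBLE_AFFORDANCE = "perceptible affordance"
    HIDDEN_AFFORDANCE = "hidden affordance"
    FALSE_AFFORDANCE = "false affordance"
    CORRECT_REJECTION = "correct rejection"


def classify(affordance_exists: bool, information_present: bool) -> Case:
    """Return the case for one candidate action on a system."""
    if affordance_exists:
        if information_present:
            return Case.PERCEPTIBLE_AFFORDANCE
        return Case.HIDDEN_AFFORDANCE
    if information_present:
        return Case.FALSE_AFFORDANCE
    return Case.CORRECT_REJECTION


# Examples from the text; the (exists, information) pairs are an interpretation.
examples = {
    "remote, curved metal on the transmitter side": (True, True),
    "remote, curved metal opposite the transmitter": (False, True),
    "on/off switch inside the computer": (True, False),
    "part with no cue and no function": (False, False),
}
for name, (exists, info) in examples.items():
    print(f"{name}: {classify(exists, info).value}")
```

Read as a design checklist, the two mismatched cells (hidden and false affordance) are the ones a designer would want to eliminate by adding or removing perceptual cues.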
The side mirror of my car, for instance, does not afford flipping, and the designer purposely left no gap between the car body and the mirror, so that the false affordance is avoided at once and no one tries to push the mirror and damages it irreversibly.

Moreover, visibility is about more than showing the user the affordances of a system and their capacity. Making something visible is crucial for producing immediate, obvious and accurate feedback (or, in rare cases, delayed feedback that matches the nature of a task). Good feedback is important because it is the only way for a user to know whether he has done what he intended with the interface or object. It is also important for people to know the state of a system and, in the case of an interface, the status of whatever is being manipulated. For instance, turning a steering wheel clockwise causes the car I am sitting in to turn right. Because the car gives immediate and accurate feedback, I know that the car is turning right at that moment (the state of the system) and that no further turning is needed. Imagine that the system did not provide accurate and immediate feedback (the car would sometimes turn and sometimes not): how could one possibly rely on this interface, i.e. the steering wheel? It is very unlikely that anyone would risk their life on an interface with inaccurate and delayed feedback.

Natural Mapping

Mapping refers to a relationship from one thing to another.
For example, A maps to 1, B maps to 2, C maps to 3, etc. According to Norman (1988), natural mapping refers to mapping that people will figure out naturally and instantaneously. This is a critical idea for boosting the feeling of directness in an interface. Natural mapping relies on both cultural conventions and physical standards. One of the best examples is the steering wheel of a car. People naturally turn the wheel clockwise to make the car go right and counterclockwise to turn left. There is no thinking involved in the process; the spatial analogy is made naturally. This seems to be an easy concept to apply. However, the problem with natural mapping is that it is difficult to know for sure which mappings are cultural conventions and which are intuitive for all people in the world. This question must be answered before natural mapping can be effectively applied to interface or object design (Norman 1988, p. 23).

There is in fact a large amount of ongoing research in Cognitive Psychology trying to find a more concrete answer to which types of mapping are intuitive for people without any practice being involved (Fischer, 1999). This field of study is called Stimulus-Response Compatibility. It is a difficult research topic because it is extremely hard for researchers to differentiate between learned conventions and ‘real’ intuition. Progress in the field is largely incomplete, and there are no specific guidelines or methodology for applying its knowledge to object or interface design (Fischer, 1999). Although the field of S-R compatibility is very immature, there are still some useful research data that can give designers a basic sense of how to apply natural mapping to their designs.

According to Norman, natural mapping can be done in four possible ways: spatial, cultural, biological and perceptual (Norman 1988 & Fischer 1999). Of these, the biological effect seems to be the most powerful, since it has a very high chance of being intuitive to all human beings, so that people from any cultural background can pick up the proper hints when dealing with such a system. One biological effect worth mentioning comes from the study by Wickens et al. (Wickens, 1984). The study shows that when a stimulus is presented to the right side of the brain (for instance, an apple in the left visual field), responses using the same side of the brain (e.g. the left hand) are faster and more accurate than when stimuli and responses need to be processed across hemispheres.

The spatial effect is another very powerful mapping that can be relied on when designing interfaces. The steering wheel mentioned before is a good example of spatial mapping, in which turning the wheel in a certain direction maps to turning the car in that direction. Studies of the arrangement of four-burner stoves (the mapping between four burners and four controls) also show that spatial analogy is very intuitive and that it takes priority over cultural mappings (Chapanis, Smith, Shinar, 1967). However, some spatial analogies, especially those that can vary among cultures, may not be as stable as biological ones, since a spatial analogy that seems intuitive to us may not be intuitive to people in another cultural group. One example is the way the Aymara map the future as backwards and the past as forwards (Nunez 2006). They may therefore have difficulty with the back and forward buttons of web browsers, which all westerners find natural and intuitive.

The cultural effect is mapping that is affected by cultural conventions. For instance, most people in western culture think that turning a knob clockwise means increasing the volume.
As I have mentioned in this essay, cultural effects can be problematic since they are not universal and intuitive for all people. But they can sometimes be helpful if the designer is almost certain that the cultural conventions being applied are strong enough to be intuitive to most people.

The perceptual effect is similar to the spatial effect. It simply states that linear and direct grouping and patterning can make a task more intuitive for people to think about. In a sense, this effect forms a mental model for the user, much as the spatial effect does. Examples of perceptual effects include using the same color for similar controls, or applying sensor lines on a control-response panel, for example drawing visual lines connecting each control to the burner it corresponds to (Chapanis, 1965).

Although there is no concrete framework for applying these mapping techniques to interface design (Fischer 1999), it is still better to have some relational mapping than none. Arbitrary mapping of controls and responses makes an interface or object confusing and extremely difficult to use (Norman 1988). It may also create an extra layer that the user must learn and adapt to before achieving their intention. Even though the mapping may not be completely intuitive (as biological compatibility is), the user can offload more cognitive work (there is less to remember) onto a system with a good relationship between placement and control, along with good visibility and feedback, so that the system is easier to learn (Norman 1988, p. 23).

Interfaces, what are they?

I have been using the words interface and object interchangeably without really defining what an interface is. A detailed definition of interface is beyond the scope of this writing. A short and concise definition should be enough to lay the foundation for this discussion. An interface is the point of interaction between one entity and one or more other entities. This point of interaction can be physical or virtual. Here, interface refers to the point of interaction between a human and one or more other entities. For instance, a steering wheel is the point of interaction between a human and a vehicle. The guidelines for good and bad interfaces that I am about to discuss primarily describe physical/material interfaces, but they are common design principles that should apply equally well to designing good virtual interfaces.

Good Interfaces

The best interface is the one that is the most direct. One can achieve one's goal without obstruction from the interface and without having to work out how to do it. To write is to write; there is nothing more to it. In a sense, the best interfaces are our own body parts. Think about how you grab a book. The interface here is your hand, which is the point of interaction between your brain and the physical world (in this case, the book). It is unnatural for anyone to think about how to use their fingers, or to consciously think about how to balance the grip with the little finger while holding the book. Yet everyone can recall (at least partially) learning to use these interfaces to achieve their goals. A baby is not born able to walk or run around a football field; walking is also a learning process, an adaptive process in which one has to build a representation in the sensorimotor cortex of the brain (Squire 2008). What is even more interesting is how astronauts must re-learn the way they use their legs and feet to walk on the moon.
To picture that, imagine walking along the bottom of a swimming pool or walking on an ice rink in your bare feet. Why does getting to a desired place suddenly become so difficult in those situations? Why do your feet suddenly become more visible than you could ever imagine? It is because the environment itself creates the illusion of a different interface; that is, a pair of legs that suddenly gets a lot lighter, or a pair of extremely slippery feet. If this does not seem convincing, think about how a tennis expert would feel if all he could use were a 20 lb racket. These observations lead us to think that our limbs are interfaces that are not too different from a steering wheel, a tennis racket, a pair of ice skates, etc. Anyone can take up those interfaces and, with a considerable amount of practice, build up a feeling of directness just like the one we have for walking or holding a book. In fact, we can look at our limbs as interfaces designed by the process of natural evolution. They slowly change in a way that lets them do what they are intended to do (for instance, feet with a flat shape for better balance).

However, there is a major problem with this logic: does it mean that any interface can be learned or adapted to so as to create the feeling of directness? The answer is yes and no. No, because the human brain and body have limitations. For instance, it is certainly not possible for anyone to adapt to, and feel direct about, playing tennis with a 100 lb racket.
Yes, because the human brain and body are reasonably flexible (Squire 2008), and we can learn to adapt to almost any interface to achieve our intended goal as long as it is within the body’s limitations. Nevertheless, a framework for good interfaces is essential, because the time it takes to create the feeling of directness can be strongly reduced, and sometimes eliminated, by good design. The question then becomes what counts as good interface design.

There are a few major differences between our natural-born interfaces and interfaces that are not part of our body: 1) proprioceptive sense, 2) sensory feedback, and 3) the innateness of knowing the possible functions of those interfaces. The first two are easy to understand: proprioceptive sense gives us the feeling of where our limbs are and what they are doing (Squire 2008), and sensory feedback tells us what they are in contact with and where they are being touched. The last point, the innateness of knowing possible functions, means that people innately know what can be moved and what cannot (see footnote 3); they know the limitations of their hands and of every single joint. A baby is not taught that he has ten fingers and that each finger has three joints that can only be moved in one direction (towards the palm). He intuitively knows all the possibilities and limitations of those natural-born interfaces.

Nevertheless, those three elements are impossible to achieve in everyday objects or interfaces. For instance, a pair of scissors is an interface for cutting paper. The question is, if it just lies in front of me, how could I possibly know that it is for cutting, and what its possibilities and limitations are? There is obviously no proprioceptive sense to help me figure out the answers. What I can do is rely on the visibility of the operating parts of the interface, or what Norman calls the “system image” (Norman 1988, p. 17) (see fig. 3). Details about visibility can be found in the section above.
By looking at the pair of scissors, I can tell that it can cut things because it has two sharp blades; those are the perceived affordance and, in this case, the real affordance of the system. However, I might not be able to figure out what the holes do just by looking; that is, I might not be able to perceive the real affordance of those holes. Yet by the time I have picked up the scissors, I automatically have several fingers inside the big hole and the thumb in the small one. “The mapping between holes and fingers - the set of possible operations - is suggested and constrained by the holes.” (Norman 1988, p. 12) Finally, I must rely on the sensory feedback from my hands and the visual feedback from the moving blades to operate this interface correctly. It should now be clear that a good interface is one that has its real affordances clearly visible, takes good advantage of natural mappings and constraints, and has good, obvious and immediate feedback.

[Figure 4]

Explorable Systems

One interesting thing that people do all the time is learn by exploring. This means that in order to figure out the actual affordances of an interface, it is best to test them out. The way I try to figure out what the holes on the scissors afford is an example of explorative action. Explorative action is almost always unavoidable, and it can be extremely helpful for learning the mapping, the real affordances and the other underlying structure of an interface. According to Norman, there are three principles for designing an interface that is good for exploring.
1) Possibilities must be clear in an explorable system. In each state, the users must be able to see and act on the allowable actions, and good visibility reminds them of what they can do.
2) The feedback of each action must be clear and easy to understand. There should be no hidden feedback, and if there is, designers should find alternative ways to make the feedback direct so that people can build a clear image of the cause and effect of each action.
3) What is done to the system should be reversible; if it is irreversible, it is important for the system to have some way to signal the user before execution.

Scaffolds

Scaffolding is the temporary framework that construction workers build as an aid while constructing buildings. Scaffolds are highly structured physical aids that are modified (features are added or removed) during the construction process. In the end, they are completely removed when the building is ready to be used. When applied to learning, scaffolding is a framework that supports the learning process, much as metal and bamboo tubes support a building under construction. A good and concise description of the role that scaffolding plays in learning can be found in the book ‘Educational Psychology’ (Woolfolk, 2004). Woolfolk says that scaffolding can be: “clues, reminders, encouragement, breaking the problem down into steps, providing an example, or anything else that allows students to do more on their own.” The crucial idea here is the last part, where scaffolding “allows students to do more on their own”. Imagine applying this idea to interface design, where people could “do more on their own” solely by observing or interacting with an interface.

There is in fact a real-life example. Learning to ride a bicycle is always a difficult task. One of my friends spent four days (3 hours/day) teaching me how to ride a bike. During that time, I fell many times and injured myself at least 4 times. The bicycle is designed for people who already have a mental model of how to operate a bicycle, which is why it was so difficult for me to operate this interface. But as I mentioned early in this writing, interfaces should not be like that, i.e. they should not be designed for only one mental model held by one group of people. If training wheels are installed on a bicycle, the bike becomes an interface that fits the mental models of people who have never ridden a bicycle before. Rather than dealing with speed and balance at the same time, the user gets the direct feeling of riding a bike first, before dealing with balance. The training wheels are scaffolds that allow bicycle learners to feel the relationship between speed and balance. However, they also limit the ‘expressive power’, or the potential, of the interface. Training wheels prevent the user from turning the bike freely: the user must slow the bike down to a certain speed and turn it slowly. This way of turning is very different from turning without the scaffolds. Therefore, it is important for designers to be aware of the limitations and benefits that a given set of scaffolds brings to the user.
Once the user reaches a certain level, the scaffolds should be modified, changed or completely removed accordingly. Nevertheless, I should add that if scaffolds do not obstruct the expressive power of an interface, it is recommended to keep them so that they become part of the system and improve its visibility. The letters printed on a keyboard are one good example.

According to Lev S. Vygotsky, the distance between what a student can achieve on their own and what a student can achieve by learning from others (teachers, peers) is called the Zone of Proximal Development (Vygotsky, 1978). The main idea of my thesis is to create a dynamic interface that will completely close or invert the ZPD (i.e. the student would learn better from the interface, with equal or higher achievement within the same period of time). This idea is in fact similar to how people learn to play complex video games:

“Learning is a central part of gaming. Popular games often illustrate good instructional design, using a variety of technologies and conventions to scaffold a player through his ZPD. A game's "curriculum" is the skills and knowledge necessary to advance through the game and eventually master it. The player is initially unskilled in and ignorant of the game's curriculum, although many games share a similar curriculum. Many games include in-game tutorials, and most games ramp up difficulty over time. Such scaffolding is an appropriate approach to guiding a student through his ZPD. Commercial game designers aren't deliberate Vygotskians. Rather, game players value games that foster "playing in the zone," a state of Platonic ecstasy in which challenges are just barely surmountable, building in difficulty without halting the flow of gameplay and success. Vygotsky believed that optimal instruction keeps a learner challenged and successful, and he'd probably agree that the zone of "playing in the zone" is the ZPD in a different hat.” (Buchanan, 2003)

Although video gaming is not my interest here, it is important to recognize the role that scaffolding plays in gaming, and how a ‘good instructional design’ helps “scaffold a player through his ZPD”, much as a good interface may scaffold a user through the learning process.

Summing up

The thesis that I am about to present will focus mainly on creating a good dynamic interface that trains the user to reach a given level of achievement in a shorter period of time. Again, the idea is to reduce the Zone of Proximal Development in complex interfaces. A user should achieve a feeling of directness faster than when learning from a static interface. As I said earlier in this paper, the first thing to do is to get the user to learn the possibilities and limitations of the interface. This can be achieved by introducing the fundamental elements of a ‘good interface’; that is, one that has its real affordances clearly visible, gives accurate, immediate and obvious feedback, and takes good advantage of mapping and constraints. At the fundamental level, it is not necessary to worry too much about the expressive power of the interface. The most important thing is to get the user to know what to do and to have a rough sense of how to do it. This is where the idea of scaffolding comes into play.
The first-level set of scaffolds should match the mental model of a beginner, and temporary limitations on expressiveness are unavoidable. As the user reaches a certain level, his mental model and the way he thinks about the task change, and the scaffolding should be modified accordingly. It is important to note that those changes should still follow the guidelines for a good interface. In Norman’s terms, the system image of the interface is dynamically altered in response to the ever-changing user’s model (Norman, 1988, p. 17). By the time the user reaches the expert level, the scaffolding can be completely removed (though it does not have to be) if it obstructs the potential and expressiveness inherent in the task.

To make this thesis more concrete, I propose that a novice Theremin user (one with no experience of the Theremin) will take a shorter period of time to reach the same or a similar level of proficiency if he begins his training with a well-redesigned Theremin with modifiable scaffolding than with the classical Theremin. The classical Theremin is considered to be a bad interface mainly because of its lack of visibility and its obscure feedback. Looking at the classical Theremin, one sees a metal antenna on the right and a ring-shaped metal wire on the left, plus some knobs on the wooden (or sometimes plastic) housing. It is completely unclear what to interact with and how; what the parts imply is almost completely unknown. Since the visibility of the interface fails to give a first-time player enough information about the possibilities and limitations, it would be natural to explore the interface in the hope of understanding what it does. Although the novice player may eventually figure out that the metal ring is a controller for volume (or amplitude) and the rod-shaped antenna a controller for frequency (or pitch), it is still unclear how the instrument should be played.
That is, it is extremely difficult for an untrained player to find the exact distance corresponding to a certain octave or note. There is no physical constraint or guidance to remind the player what pitch or loudness he is playing. Natural mapping may be present (the further the distance, the higher the amplitude or the lower the pitch), which allows the user to control the sound naturally; however, the mapping is shallow and not specific enough for the user to rely upon. The feedback is also confusing rather than obvious. For instance, the electromagnetic signal is not constant across the vertical (top to bottom) plane or across the horizontal plane around the rod-shaped antenna, so it is very difficult for a first-time player to know which orientation he should rely on when changing the pitch: moving the hand in either plane changes the note, but one of the planes (the vertical) is not designed to map the musical notes accurately and proportionally. That is to say, it is not accurately tuned.

Therefore, I will attempt to redesign the Theremin according to the guidelines for a good interface that I defined earlier. Some of the elements (the system image of the Theremin) will also be modified to match the varying user’s model, so that the user can learn naturally from the interface itself and the Zone of Proximal Development diminishes (i.e. so that the distance between what users achieve by learning on their own and what they achieve when taught by someone else can be reduced).
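The dynamic scaffolding idea summarized above can be sketched as a small policy: each level carries its own set of scaffolds, and the set shrinks once the user's measured performance passes a threshold. This is only an illustration under stated assumptions. The scaffold names, the three-level table and the 0.90 promotion threshold are hypothetical placeholders, not the actual design of the modified Theremin.

```python
# Assumed promotion criterion (fraction of correct notes); illustrative only.
PROMOTION_THRESHOLD = 0.90

# Hypothetical scaffold sets: more support for beginners, less for experts.
SCAFFOLDS_BY_LEVEL = {
    1: ("pitch markers", "volume guide", "hand-position guide"),
    2: ("pitch markers", "volume guide"),
    3: ("pitch markers",),
}


def next_level(level: int, accuracy: float, max_level: int = 3) -> int:
    """Advance one level once accuracy reaches the threshold; otherwise stay."""
    if accuracy >= PROMOTION_THRESHOLD and level < max_level:
        return level + 1
    return level


def active_scaffolds(level: int) -> tuple:
    """Return the scaffolds the interface should present at this level."""
    return SCAFFOLDS_BY_LEVEL[level]


# A learner starts at level 1 and is re-evaluated after each practice session.
level = 1
for session_accuracy in (0.55, 0.92, 0.80, 0.95):
    level = next_level(level, session_accuracy)
    print(level, active_scaffolds(level))
```

The point of the sketch is that the system image is a function of the user's current level rather than a fixed property of the instrument; whether scaffolds are removed, modified or kept at each step remains a design decision.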
Methodology

The method below is designed specifically to test whether a well-designed dynamic interface significantly shortens the process of learning an interface.

a. Subjects: A minimum of 36 subjects will participate in this experiment. Half of them will be male and half female. They will be about 18 to 22 years of age, mostly undergraduate students from UCSD. One third of the subjects must be chosen very carefully so that the size of their hands is nearly identical. No subject may have prior experience with the Theremin, but all must be able to read musical notation and have basic knowledge of music; preferably they will have played an instrument before.

b. Experimental Design: The experiment will focus on three conditions: Control (C), Modified Interface (MI) and Video Control (VC) (see fig. 6). Control is the condition in which subjects have only the original, unmodified Theremin and nothing else. Subjects in the Video Control condition will have a classical Theremin and a set of video instructions in which expert Thereminist Thomas Grillo teaches the right way to use a classical Theremin. The video series will be divided into three levels; e.g. subjects in the first level are required to watch “The basic of playing a Theremin”. In the MI condition, subjects will be required to play a melody with a modified Theremin. The 36 subjects will be randomly and evenly placed into the three conditions, which means that there will be 12 subjects in each condition in the basic setting.

There are three levels: the first level spans the range from no knowledge of the interface to some basic knowledge (see section five for detail). Subjects will be required to perform a target melody within a 1 hour period. The level 1 target melody simply requires the players to play seven full steps within a certain octave five times. That is, repeat “do-re-me-fa-so-la-ti-do’-ti-la-so-fa-me-re-do” five times. Their performance will be judged by the accuracy of pitch and tempo. They will be required to play the target melody once every 20 minutes. Their progress will be video captured each time they play, for later study and evaluation of accuracy. Accuracy of pitch and tempo will be determined by a modified version of a computer guitar tuner (see fig. 5). Level 2 and level 3 will follow a similar experimental structure with increasingly complex melodies. (Complexity will be evaluated according to the frequency of note jumps, i.e. do-fa is more difficult to play than do-re, and the tempo of the piece.) Learning time will increase to two hours in level 2 and three hours in level 3.

If subjects in the MI condition (Series 1) cannot achieve the desired accuracy during the recording time (for example, 1 hour in level 1), they will be given additional training time to reach at least 90% accuracy on all variables (tempo, pitch) before the group is divided in the next level. This ensures that the subjects reach a concrete level of accomplishment before advancing to the next level. However, this will be done separately from the main experimental method. Accuracy of amplitude will also be measured in level 2 and level 3 with a similar method. In addition to amplitude, speed and accuracy, vibrato will be mandatory in level 3 as a subjective measure of expressiveness.
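The text does not define how pitch accuracy is scored, so the following is only one possible reading: a note counts as correct when it lies within a tolerance of its target, measured in cents (1200 cents per octave). The starting pitch of 261.63 Hz (C4), the equal-tempered major scale and the 50-cent tolerance are all assumptions made for illustration.

```python
import math

DO_HZ = 261.63  # assumed starting pitch (C4); the text leaves the octave open
MAJOR_SCALE_SEMITONES = [0, 2, 4, 5, 7, 9, 11, 12]  # do re me fa so la ti do'


def scale_targets(do_hz: float = DO_HZ) -> list:
    """Equal-tempered target frequencies for one ascending octave."""
    return [do_hz * 2 ** (s / 12) for s in MAJOR_SCALE_SEMITONES]


def cents_off(played_hz: float, target_hz: float) -> float:
    """Signed deviation of a played note from its target, in cents."""
    return 1200.0 * math.log2(played_hz / target_hz)


def pitch_accuracy(played, targets, tolerance_cents: float = 50.0) -> float:
    """Fraction of notes within the tolerance of their targets."""
    hits = sum(
        1 for p, t in zip(played, targets)
        if abs(cents_off(p, t)) <= tolerance_cents
    )
    return hits / len(targets)


targets = scale_targets()
# A hypothetical take in which the first note is a semitone sharp.
played = [targets[0] * 2 ** (1 / 12)] + targets[1:]
print(pitch_accuracy(played, targets))
```

Under this definition the 90% criterion corresponds to `pitch_accuracy(...) >= 0.9`; tempo accuracy could be scored in the same way against target note onsets.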
To measure the quality of vibrato, I will have to find different subjects to evaluate the original group’s level of expressiveness (how good their vibrato is) on a scale of 1-5 from the video clips captured during the last trial of level 3. Since this is purely subjective, the evaluation group should have more than 20 people in order to produce a statistically significant result. Another way to measure expressive power is to split the last trial into a few separate sub-trials and keep videotaping until whichever of the three is first achieves automatic behavior.

If time allows, this experiment will be divided into three series. Each series will focus on the learning progress of one condition. For example, in series 1, the performance of the 36 subjects across the three conditions will be compared and statistically evaluated. The 12 subjects in the ‘MI’ condition will then be divided into three groups that continue to the next level. The 4 subjects in the level 2 MI condition will in turn be divided and promoted to level 3. Series 2 and series 3 will follow the same setup, only with a different condition in focus (see fig. 7 & 8). The three-series design is helpful because it makes an obvious performance comparison possible. For instance, if the performance of subjects from the same level 1 MI condition differs significantly after they are divided into different learning groups, it is logical to conclude that the difference results from the different structure of the interface rather than from other variables.
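The allocation described above (36 subjects split evenly into three conditions, then the 12 MI subjects split again into three groups of 4 for level 2) can be sketched as follows. The subject identifiers and the helper name `assign_evenly` are hypothetical, and the fixed seed is there only to make the illustration reproducible.

```python
import random

CONDITIONS = ("C", "MI", "VC")


def assign_evenly(subjects, groups, rng):
    """Shuffle the subjects and split them into equally sized groups."""
    if len(subjects) % len(groups) != 0:
        raise ValueError("subjects cannot be split evenly across groups")
    shuffled = list(subjects)
    rng.shuffle(shuffled)
    size = len(shuffled) // len(groups)
    return {g: shuffled[i * size:(i + 1) * size] for i, g in enumerate(groups)}


rng = random.Random(0)  # fixed seed for a reproducible illustration
subjects = [f"S{i:02d}" for i in range(1, 37)]

# Level 1: 36 subjects, 12 per condition.
level1 = assign_evenly(subjects, CONDITIONS, rng)

# Series 1, level 2: the 12 MI subjects are divided across the three conditions.
level2_from_mi = assign_evenly(level1["MI"], CONDITIONS, rng)

print({c: len(members) for c, members in level1.items()})
print({c: len(members) for c, members in level2_from_mi.items()})
```

Because the level 2 groups are drawn at random from subjects with the same level 1 training, later differences between them can more plausibly be attributed to the interface than to prior differences between subjects.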
This design also allows multiple ways of evaluating subjects’ performance. For example, it is possible to analyze subjects’ general performance in the MI-MI-MI sequence (subjects who are trained with the MI interface in all three levels) against sequences like C-C-C and VC-VC-VC. It is also useful to analyze results within a level, as mentioned above. However, such a setup would be extremely time consuming for subjects, and it is very unlikely that I will find 24 people willing to spend the time to participate in my experiment. Nevertheless, series 2 is significant enough to be carried out, since it could yield interesting data when compared to series 1.

c. Stimulus design: As mentioned before, “do-re-me-fa-so-la-ti-do’-ti-la-so-fa-me-re-do” played five times in ¼ beat will very likely be the first-level stimulus. An example of a level 2 stimulus could be a melody like “me-me-me-fa-me-re-fa-so-re-so-la-ti-ti-so”. Note that these are mostly musical notes that are close to each other. In level 2, the subjects will also be required to play each note at a distinct amplitude or loudness. For instance, a very loud ‘me’ and a softer ‘me’ will be the first two notes that the subjects are required to play. The level 3 stimulus would be an actual song like ‘The Bald Eagle’ (national emblem of the United States). The user would be required to play this song with vibrato.

d. The way that the experiment will be carried out: The experiment is expected to be carried out in a sound-proofed room of 100 to 200 square feet. A digital video camera will record for 2 minutes every 20 minutes. The user will be clearly signaled before the recording starts, and will be required to perform the target piece whether or not they feel ready.

e. Predictions: It would be natural to expect subjects in the MI condition to perform the piece with much better control than subjects in the other two conditions.
It is important to realize that a better performance within a fixed period is ideally equivalent to achieving the target level within a shorter time. Thus, whichever group produces the best performance would have the shortest learning curve. Note that the MI condition should have smaller error bars and less dispersed data because of the better design of the instrument. The Control condition should have the worst performance, since there is no instruction to guide the subject through how to operate the Theremin. The Video Control condition should produce better results than the Control condition, since there is proper guidance on what should be done. However, the resulting accuracy in both pitch and tempo will still be far from perfect. After all, watching other people play is different from playing oneself; watching others creates only a weak mental model.

Here, I will assume that I have finished collecting data from all 36 subjects in level 1. Keep in mind that amplitude will also be measured in level 2 and level 3, and that vibrato will also be measured subjectively in level 3. Here are the expected results:

[Graph 1: Level 1, Video Control condition, average accuracy. Y-axis: % accuracy; x-axis: Trial 1 (20 min), Trial 2 (40 min), Trial 3 (60 min); series: avg. tempo accuracy and avg. pitch accuracy. Data labels: 30, 39, 40, 44, 52, 59 (Trial 3: tempo 59, pitch 52).]

[Graph 2: Level 1, M.I. condition, average accuracy. Y-axis: % accuracy; x-axis: Trial 1 (20 min), Trial 2 (40 min), Trial 3 (60 min); series: avg. tempo accuracy and avg. pitch accuracy. Data labels: 62, 67, 78, 80, 90, 95 (Trial 3: tempo 78, pitch 95).]

[Graph 3: Level 1, Control condition, average accuracy. Y-axis: % accuracy; x-axis: Trial 1 (20 min), Trial 2 (40 min), Trial 3 (60 min); series: avg. tempo accuracy and avg. pitch accuracy. Data labels: 25, 33, 33, 40, 41, 51 (Trial 3: tempo 51, pitch 40).]

Notice in Graph 2 that the average pitch accuracy in the MI condition will be much higher than the average tempo accuracy. This anticipated result could be explained by the good visibility of the electromagnetic field, which separates the pitches so that subjects no longer need to intuitively 'guess' where a certain note is in midair (see section six for detail). It is also logical to expect lower performance in pitch than in tempo in the Control condition, since it is definitely easier to control the tempo with the volume loop in an all-or-none fashion (i.e. move your hand all the way in to stop the Theremin from producing sound, and release so that it produces some sound) than to find the correct pitch in midair with no physical or visual aid at all.
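To make the predicted pattern concrete, the sketch below tabulates the expected Level 1, Trial 3 accuracies and computes the pitch-minus-tempo gap and an overall ranking per condition. It is a minimal illustration, not part of the planned analysis: the numbers are the predicted values read from the expected-results graphs, their assignment to tempo or pitch follows the graph legend order and the surrounding text, and the helper names are hypothetical.

```python
# Predicted Level 1, Trial 3 accuracies (%), as read from the expected-results
# graphs. These are predictions from the proposal, not measured data.
PREDICTED_TRIAL3 = {
    "C": {"tempo": 51, "pitch": 40},   # Control
    "VC": {"tempo": 59, "pitch": 52},  # Video Control
    "MI": {"tempo": 78, "pitch": 95},  # MI condition
}


def pitch_minus_tempo(scores):
    """Return pitch accuracy minus tempo accuracy for each condition."""
    return {cond: v["pitch"] - v["tempo"] for cond, v in scores.items()}


def rank_by_mean(scores):
    """Order conditions from highest to lowest mean of pitch and tempo accuracy."""
    return sorted(
        scores,
        key=lambda cond: (scores[cond]["pitch"] + scores[cond]["tempo"]) / 2,
        reverse=True,
    )


gaps = pitch_minus_tempo(PREDICTED_TRIAL3)
ranking = rank_by_mean(PREDICTED_TRIAL3)
print(gaps)     # {'C': -11, 'VC': -7, 'MI': 17}
print(ranking)  # ['MI', 'VC', 'C']
```

A positive gap appears only in the MI condition, which matches the prediction that making the field visible helps pitch more than tempo.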
Tempo control in the MI condition is also expected to be better, since the volume controller now provides physical and visual feedback. It may also be due to the fact that the cognitive resources required to find the accurate pitch in the Control condition are offloaded to the system in the MI condition, so that the excess cognitive resources can now be used to keep the tempo of a particular melody accurate.

[Graph 4: Level 1, Trial 3, pitch and tempo accuracy for all conditions (% accuracy). VC: tempo 59, pitch 52. MI: tempo 78, pitch 95. C: tempo 51, pitch 40.]

Graph 4 is an example of an overall performance comparison. Once again, subjects in the MI condition are expected to have the highest performance. Levels 2 and 3 will add measures such as amplitude accuracy and expressiveness.

The Interface

The fundamental goal of building this interface is stated in section three. Here, I will present some detail about the modified interface.

Design:
The interface is divided into three levels in the hope of taking full advantage of the user's model to speed up the learning process. The system image of the first level should aim to match the fundamental user model; that is, it should help the user understand the implications of the interface, what it does, and its limitations. Once the user acquires that mental model, he will advance to level two, in which the interface is modified to yield higher capacity and the user is expected to have the basic mental model of how to operate the Theremin.
Advancement from the second to the third level is based upon the same logic.

Classical Theremin

The Theremin has a loop antenna controlling the amplitude (loudness), a rod antenna controlling the pitch, and two knobs, one controlling the maximum amplitude and the other controlling the pitch scale. More specifically, the closer the hand is to the loop antenna, the lower the amplitude, and the closer the hand is to the rod antenna, the higher the pitch. If the user is left-handed, the Theremin can simply be turned around for them to play.

Modified Theremin:

Subjects will be given a modified Theremin and the melody that they will play, depending on their level. I will give a brief overview of the interface design at each level.

Level 1

The modified Theremin has three main parts: the amplitude controller, the pitch controller, and the posture mounts.

Since the amplitude increases when the hand is lifted above and away from the loop on the player's left-hand side, I will install a supporting platform long enough that unrelated parts will not obstruct the electromagnetic wave of the volume loop. The end above the loop will have an adjustable hand-shaped mount, which provides a strong visible hint for people to put their left hand inside. The rail will have teeth, which will give subjects tactile feedback to help keep the arm steady. A bright light will cast a shadow of the platform on the back wall, which creates a visual label of the amplitude against pre-painted scales on the wall.

The pitch controller will include a large transparent poly-board placed around the pitch antenna (see fig. 9). This board must be transparent so that it has minimal effect on the electromagnetic wave (a transparent board is transparent because electromagnetic waves such as light can pass through it). A specially designed projecting lamp will project rings of differently colored light onto the poly-board, creating a visible musical scale.
If technically possible, the knob for adjusting the range of the electromagnetic wave will be integrated into the top part of the lamp, which controls the range of the projected light. If successful, this would make the invisible electromagnetic wave visible. The poly-board will be 2.5 feet in radius, since Thereminist Barry Schwam recommended that the first note of a well-tuned Theremin should appear about 2 feet from the antenna.

The set of posture mounts will include a large supporting stage for the subject's whole body. The stage will consist of a back supporting board and a front board that covers the subject's whole body (see fig. 9). The point of the front board is to stabilize the influence of the body on the electromagnetic field. Thereminist Barry Schwam (see footnote 4) once said that the natural body area and the subtle movements caused by breathing can easily create a noticeable effect on the field. Thus, a large board should almost completely eliminate this unwanted variable. The back supporting board will give subjects some support for their body to reduce the chance of fatigue. Elbow and arm supports will be provided for the same purpose.

There will also be some minor modifications to the interface, such as a maximum volume level indicator and an on/off LED indicator.

Level 2

The interface in level 2 will be very similar to that in level 1, but with fewer physical constraints and thus higher expressive power. The rail with the adjustable hand mount controlling the volume loop will be removed.
The left hand will be free from any form of constraint, so that subjects can move their hand up and down with higher speed and flexibility, which increases the expressive power of the system. The light and shadow system will remain, and the light will cast a shadow of the hand directly onto the scales on the wall. Eliminating this constraint should allow the expressiveness required by the melody in level 2. This logic is analogous to how training wheels prevent one from turning a bike above a certain speed.

Level 3

Since the pitch control must be more flexible for the user to create vibrato, I will take out the poly-board so that the subject's hand cannot rely on the board as a physical support, which would more or less obstruct the movement. The board will be replaced by a light layer of smoke, which will serve as a substitute medium for the lamp to project the scale upon. Other elements will be inherited from level two.

[Figure 9]

Timeline

[Timeline table: Weeks 1 to 20. Recoverable entries: Review general concept (listed three times).]

Citations

Books

Norman (2002). The Design of Everyday Things. Basic Books. Reprint. Originally published: The Psychology of Everyday Things (1988). Basic Books.
Gray (2006). Psychology. Worth Publishers.
Woolfolk (2004). Educational Psychology. Allyn & Bacon.
Squire, Roberts, Spitzer, Zigmond, McConnell, Bloom (2008). Fundamental Neuroscience, Third Edition. Academic Press.
Vygotsky, Cole, John-Steiner, Scribner, and Souberman (1978). Mind in Society: The Development of Higher Psychological Processes. Cambridge: Harvard University Press.

Articles

Hutchins, Hollan, Norman. "Direct Manipulation Interfaces." Human-Computer Interaction, Volume 1, pp. 311-338 (1985)
Ishii, Ullmer. "Tangible Bits: Towards Seamless Interfaces between People, Bits and Atoms." CHI 97, 22-27 (1997)
Fishkin. "A Taxonomy for and Analysis of Tangible Interfaces." Pers Ubiquit Comput 8: 347-358 (2004)
Fischer. "Intuitive Interfaces: A Literature Review of the Natural Mapping Principle and Stimulus Response Compatibility." J.F. Schouten School for User-System Interaction Research (1999)
Norman. "Affordance, Conventions and Design." Issue of Interaction, pp. 38-43 (1999) <http://www.jnd.org/dn.mss/affordance_conv.html>
Gaver. "Technology Affordances." (2001) <http://www.cs.umd.edu/class/spring2001/cmsc434-0201/p79-gaver.pdf>
Buchanan. "The Heritage & Legacy of Vygotsky & Computer Games." College of Education, Michigan State University, Lansing, Michigan, USA (2003)