International Journal of Emerging Technology & Research

Volume 1, Issue 4, May-June, 2014

(www.ijetr.org)

ISSN (E): 2347-5900 ISSN (P): 2347-6079

Dynamic Multi Processor Task Scheduling using Hybrid Particle Swarm Optimization with Fault Tolerance

Gayathri V S (1), Sandhya S (2), Hari Prasath L (3)

(1, 2) Student, Department of Information Technology, Anand Institute of Higher Technology, Chennai

(3) Assistant Professor, Department of Information Technology, Anand Institute of Higher Technology, Chennai.

Abstract - The problem of task assignment in heterogeneous computing systems has been studied for many years with many variations. Particle Swarm Optimization (PSO) is a recently developed population-based heuristic optimization technique. The hybrid heuristic model involves the PSO algorithm and the Simulated Annealing (SA) algorithm. The PSO/SA algorithm has been developed to dynamically schedule heterogeneous tasks onto heterogeneous processors in a distributed setup. PSO with dynamically reducing inertia is implemented, which yields better results than fixed inertia.

Keywords: GA, HPSO, Inertia, PSO, TAP, Distributed System, Simulated Annealing.

1. INTRODUCTION

The problem of scheduling a set of dependent or independent tasks in a distributed computing system is a well-studied area. In this paper, the dynamic task allocation methodology is examined in a heterogeneous computing environment.

Task definition – the specification of the identity and the characteristics of a task force by the user, the compiler and based on monitoring of the task force during execution.

Task assignment – the initial placement of tasks on processors.

Task scheduling – local CPU scheduling of the individual tasks in the task force with consideration of the overall progress of the task force as a whole.

Task migration – dynamic reassignment of tasks to processors in response to changing loads on the processors and the communication network.

Dynamic allocation techniques can be applied to large sets of real-world applications that can be formulated in a manner which allows for deterministic execution. One advantage of dynamic techniques over static techniques is that a static technique must always have prior knowledge of all the tasks to be executed, whereas dynamic techniques do not require it.

© Copyright reserved by IJETR

2. RELATED WORK

Several research works have been carried out on the Task Assignment Problem (TAP). Traditional methods such as branch and bound, divide and conquer, and dynamic programming give the global optimum, but they are often time consuming or do not apply to typical real-world problems.

The works in [2,5,12] derive optimal task assignments that minimize the sum of task execution and communication costs using branch and bound methods. Traditional optimization methods are deterministic, fast and give exact answers, but often get stuck in local optima. Consequently another approach is needed when traditional methods cannot be applied; modern heuristics are general purpose optimization algorithms that serve this need. Multiprocessor scheduling methods can be divided into list heuristics and meta heuristics. In list heuristics, the tasks are maintained in a priority queue in decreasing order of priority and are assigned to the free processors in FIFO manner. A meta heuristic is a heuristic method for solving a very general class of computational problems by combining user-given black-box procedures, usually heuristics themselves, in a hopefully efficient way.

(Impact Factor: 0.997)

Xik --> set to 1 if task ‘i’ is assigned to

Available meta heuristics included SA algorithm [9],

Genetic Algorithm [2,6], Hill climbing, Tabu Search,

Neural networks, PSO and Ant Colony Algorithm.

PSO yields faster convergence when compared to

Genetic Algorithm, because of the balance between

exploration and exploitation in the search space. In

this paper a very fast and easily implemented

dynamic algorithm is presented based on Particle

Swarm Optimization [PSO] and its variant. Here a

scheduling strategy is presented which uses PSO to

schedule heterogeneous tasks on to heterogeneous

processors to minimize total execution cost. It

operates dynamically, which allows new tasks, to

arrive at any time interval. The remaining of the

paper is organised as follows. Section 3 deals with

problem definition, section 4 illustrates PSO

algorithm. The proposed methodologies are

explained Section 5.

processor ‘k’

n --> number of processor

r --> number of task

eik --> incurred execution cost if task ‘i’ is

executed on processor ‘k’

cij --> incurred communication cost if task

‘i’ & ‘j’ executed on different processor

mi --> memory requirement of task ‘i’

pi --> processor requirement of task ‘i’

Mk --> memory availability of processor ‘k’

Pk --> Processor capability of processor ‘k’

Q(x) --> objective function which combines

the total execution and communication cost

(2) --> says that each task should be

assigned exactly one processor

(3) & (4) --> are the constraints that to

assure the resource demand should never

exceed the resource capability

3. PROBLEM DEFINITION

This paper considers the TAP with the following

scenario. The system consists of a set of

heterogeneous processors (n) having different

memory and processing resources, which implies that

tasks (r) executed on different processors encounters

different execution cost. The communication links

are assumed to be identical however communication

cost between tasks will be encountered when

executed on different processors. A task will make

use of the resources from its execution processor.

The objective is to minimize the total execution and

communication cost encountered by task assignment

subject to the resource constraints. To achieve

minimum cost for the TAP, the function is

formulated as

𝑟

𝑛

𝑟−1

𝑟

𝑛

𝑀𝑖𝑛 𝑄(𝑥) = ∑ ∑ 𝑒𝑖𝑘 𝑥𝑖𝑘 + ∑ ∑ 𝑐𝑖𝑗 (1 − ∑ 𝑥𝑖𝑘 𝑥𝑗𝑘 ) −

𝑖=1 𝑘=1

𝑖=1 𝑗=𝑖+1

𝑛

𝑛

∀ 𝑖 = 1,2, … . , 𝑟−→ (2)

𝑘=1

∑ 𝑚𝑖 𝑥𝑖𝑘 ≤ 𝑀𝑘

∀ 𝑘 = 1,2, … . , 𝑛−→ (3)

𝑘=1

© Copyright reserved by IJETR

𝑘=1

∀ 𝑘 = 1,2, … . , 𝑛−→ (4)

𝑥𝑖𝑘 ∈ {0,1}

∀𝑖, 𝑘−→ (5)

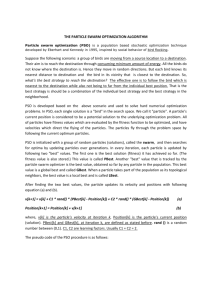

4. PARTICLE SWARM

OPTIMIZATION

PSO is a stochastic optimization technique which

operates on the principle of social behaviour like bird

flocking or fish schooling. In a PSO system, a swarm

of individuals (called particles) flow through the

swarm space. Each particle represents the candidate

solution to the optimization problem. The position of

a particle is influenced by the best particle in its

neighbourhood (ie) the experience of neighbouring

particles. When the neighbourhood of the particle of

the entire swarm, the best position in the

neighbourhood is referred to as the global best

particle and the resulting algorithm is referred to as

‘gbest’ PSO.

When the smaller neighbourhood

are used, the algorithm is generally referred to as the

‘lbest’ PSO. The performance of each particle is

measured using a fitness function that varies

depending on the optimization problem.

𝑘=1

→ (1)

∑ 𝑥𝑖𝑘 = 1

∑ 𝑝𝑖 𝑥𝑖𝑘 ≤ 𝑃𝑘
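For the TAP of Section 3, the fitness builds on the cost Q(x) of equation (1). A minimal sketch of that cost is given below, assuming the assignment is stored as a vector of 0-based processor indices (so x_ik = 1 exactly when `assign[i] == k`). The function name `total_cost` and the small cost matrices are hypothetical and serve only as illustration.

```python
from typing import Sequence


def total_cost(assign: Sequence[int],
               e: Sequence[Sequence[float]],
               c: Sequence[Sequence[float]]) -> float:
    """Q(x) of equation (1) for an assignment vector.

    assign[i] : 0-based processor index of task i (assumed encoding)
    e[i][k]   : execution cost of task i on processor k
    c[i][j]   : communication cost between tasks i and j (only i < j is read)
    """
    r = len(assign)
    # First term: execution cost of every task on its assigned processor.
    execution = sum(e[i][assign[i]] for i in range(r))
    # Second term: c_ij counts only when tasks i and j sit on different
    # processors, i.e. when 1 - sum_k x_ik * x_jk equals 1.
    communication = sum(
        c[i][j]
        for i in range(r - 1)
        for j in range(i + 1, r)
        if assign[i] != assign[j]
    )
    return execution + communication


# Hypothetical instance: 3 tasks, 2 processors (values invented).
e = [[4, 6], [3, 5], [7, 2]]
c = [[0, 2, 1], [0, 0, 3], [0, 0, 0]]
print(total_cost([0, 0, 1], e, c))  # 9 execution + 4 communication = 13
```

Storing one processor index per task satisfies constraints (2) and (5) by construction, which is why only the resource constraints (3) and (4) need separate handling.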

Each particle in the swarm is represented by the following characteristics: x_ij, the current position of the particle; v_ij, the current velocity of the particle; and pbest_ij, the personal best position of the particle. The personal best position of particle 'i' is the best position visited by particle 'i' so far. There are two versions for keeping the neighbour best vector, namely 'lbest' and 'gbest'. In the local version each particle keeps track of the best vector 'lbest' attained by its local topological neighbourhood of particles. For the global version, the best 'gbest' is determined by all particles in the entire swarm. Hence the 'gbest' model is a special case of the 'lbest' model.

The following equations are used for the velocity update and the position update:

$$v_{ij} = w \cdot v_{ij} + c_1 \cdot rand_1 \cdot (\mathit{pbest}_{ij} - \mathit{particle}_{ij}) + c_2 \cdot rand_2 \cdot (\mathit{gbest}_{ij} - \mathit{particle}_{ij}) \qquad (6.1)$$

For the local best version, the above equation can be rewritten as

$$v_{ij} = w \cdot v_{ij} + c_1 \cdot rand_1 \cdot (\mathit{pbest}_{ij} - \mathit{particle}_{ij}) + c_2 \cdot rand_2 \cdot (\mathit{lbest}_{ij} - \mathit{particle}_{ij}) \qquad (6.2)$$

Equations (6.1) and (6.2) are followed by the position update

$$\mathit{particle}_{ij} = \mathit{particle}_{ij} + v_{ij} \qquad (7)$$

Here c1 and c2 are the cognitive and social coefficients, and rand1 and rand2 are two random variables drawn from U(0,1). Thus the particle flies through potential solutions towards pbest_i and gbest in a navigated way, while still exploring new areas by the stochastic mechanism to escape from local optima. If c1 = c2, each particle is attracted to the average of pbest and gbest. Since c1 expresses how much the particle trusts its own past experience, it is called the cognitive parameter; c2 expresses how much it trusts the swarm and is called the social parameter. Most implementations use a setting with c1 roughly equal to c2. The inertia weight 'w' controls the momentum of the particle. The inertia weight can be varied dynamically by applying an annealing scheme to the w-setting of the PSO, where 'w' decreases over the whole run. In general the inertia weight is set according to equation (8):

$$w = w_{max} - \frac{w_{max} - w_{min}}{iter_{max}} \cdot iter \qquad (8)$$

A significant performance improvement is seen by varying inertia.

5. PROPOSED METHODOLOGY

This section discusses the proposed dynamic task scheduling using PSO, covering simple PSO and hybrid PSO. In PSO, each particle corresponds to a candidate solution of the underlying problem. In the proposed method each particle represents a feasible solution for the task assignment using a vector of 'r' elements, where each element is an integer value between 1 and n. Table 1 shows an illustrative example where each row represents a particle, which corresponds to a task assignment that assigns five tasks to three processors. [Particle3, T4] = P1 means that in particle 3, task 4 is assigned to processor 1.

| Particle Number | T1 | T2 | T3 | T4 | T5 |
|---|---|---|---|---|---|
| Particle1 | P3 | P2 | P1 | P2 | P2 |
| Particle2 | P1 | P2 | P3 | P1 | P1 |
| Particle3 | P1 | P3 | P2 | P1 | P2 |
| Particle4 | P2 | P1 | P2 | P3 | P1 |
| Particle5 | P2 | P2 | P1 | P3 | P1 |

Table 1: Representation of Particles
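Equations (6.1), (7) and (8) applied to such integer-vector particles can be sketched as follows. The paper does not say how a real-valued velocity is mapped back to a processor number, so the rounding and clamping to 1..n below are assumptions, as are the function names and the default values w_max = 0.9, w_min = 0.4 and c1 = c2 = 2.0.

```python
import random
from typing import List, Tuple


def inertia(it: int, iter_max: int,
            w_max: float = 0.9, w_min: float = 0.4) -> float:
    """Equation (8): w falls linearly from w_max (it = 0) to w_min (it = iter_max)."""
    return w_max - (w_max - w_min) / iter_max * it


def update_particle(position: List[int], velocity: List[float],
                    pbest: List[int], gbest: List[int],
                    w: float, n: int,
                    c1: float = 2.0, c2: float = 2.0,
                    rng=random) -> Tuple[List[int], List[float]]:
    """One gbest step: velocity by equation (6.1), position by equation (7)."""
    new_position, new_velocity = [], []
    for x, v, pb, gb in zip(position, velocity, pbest, gbest):
        v = w * v + c1 * rng.random() * (pb - x) + c2 * rng.random() * (gb - x)
        x_new = int(round(x + v))        # assumption: round to an integer
        x_new = min(max(x_new, 1), n)    # assumption: clamp to processors 1..n
        new_velocity.append(v)
        new_position.append(x_new)
    return new_position, new_velocity


# Particle1 of Table 1 moving towards hypothetical pbest and gbest vectors.
rng = random.Random(0)
pos, vel = update_particle([3, 2, 1, 2, 2], [0.0] * 5,
                           pbest=[3, 2, 1, 1, 2], gbest=[1, 2, 3, 1, 1],
                           w=inertia(10, 100), n=3, rng=rng)
print(pos, vel)
```

Passing `lbest` in place of `gbest` gives the local version of equation (6.2) without any other change.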

In the hybrid version, hybridisation is done by performing simulated annealing at the end of each iteration of simple PSO. In general, the TAP assigns 'r' tasks to 'n' processors so that the load is shared and balanced. The proposed system calculates the fitness value of each assignment and selects the best assignment from the set of solutions. The system compares the memory and processing capacity of each processor with the memory and processing requirements of the tasks assigned to it; if the requirements exceed the capacity, a penalty is applied to the calculated fitness value.

The algorithm is used for dynamic task scheduling. The particles are generated based on the number of processors used, the number of tasks that have arrived at a particular point of time and the specified population size. Initially the particles are generated at random and the fitness, which decides the goodness of the schedule, is calculated. The pbest and gbest values are determined. Then the velocity update and the position update are done. The same procedure is repeated for the specified maximum number of iterations, after which the global best solution is taken as the result. When a new task arrives, it is considered together with the tasks that are in the waiting queue and a new schedule is obtained; thus the schedule keeps changing with time based on the arrival of new tasks. The algorithm terminates when the maximum number of iterations is reached. The near-optimal solution is obtained by using Hybrid PSO.

5.1 Fitness Evaluation

The initial population is generated randomly and checked for consistency. Each particle is then assigned a velocity drawn at random from the interval [0,1]. Each solution vector in the solution space is evaluated by calculating its fitness value. The objective value of Q(x) in (1) can be used to measure the quality of each solution vector. In modern heuristics the infeasible solutions are also considered, since they may provide a valuable clue to targeting the optimal solution. A penalty function is only related to constraints (3) and (4) and it is given by

$$\mathit{Penalty}(x) = \max\left(0, \sum_{i=1}^{r} m_i x_{ik} - M_k\right) + \max\left(0, \sum_{i=1}^{r} p_i x_{ik} - P_k\right) \qquad (9)$$

The penalty is added to the objective function wherever the resource requirement exceeds the capacity. Hence the fitness function of the particle vector can finally be defined as in equation (10):

$$\mathit{Fitness}(x) = \left(Q(x) + \mathit{Penalty}(x)\right)^{-1} \qquad (10)$$

Hence, as the fitness value increases, the total cost is minimized, which is the objective of the problem.
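The penalty of equation (9) and the fitness of equation (10) can be sketched as below. Equation (9) is written for a single processor k; summing it over all processors is an assumption made here, as are the function names, the 0-based processor indices and the requirement that Q(x) + Penalty(x) be positive.

```python
from typing import Sequence


def penalty(assign: Sequence[int],
            m: Sequence[float], p: Sequence[float],
            M: Sequence[float], P: Sequence[float]) -> float:
    """Equation (9), summed over all processors (assumption).

    assign[i] : 0-based processor index of task i
    m[i], p[i]: memory and processing requirement of task i
    M[k], P[k]: memory and processing capacity of processor k
    """
    n = len(M)
    mem_used = [0.0] * n
    cpu_used = [0.0] * n
    for i, k in enumerate(assign):
        mem_used[k] += m[i]
        cpu_used[k] += p[i]
    # Only the excess over capacity is penalised, as in constraints (3) and (4).
    return sum(max(0.0, mem_used[k] - M[k]) + max(0.0, cpu_used[k] - P[k])
               for k in range(n))


def fitness(q: float, pen: float) -> float:
    """Equation (10): reciprocal of cost plus penalty (assumes q + pen > 0)."""
    return 1.0 / (q + pen)


# Hypothetical instance: processor 0 is overloaded in memory by one unit.
pen = penalty([0, 0, 1], m=[2, 3, 1], p=[1, 1, 1], M=[4, 4], P=[2, 2])
print(pen, fitness(13.0, pen))
```

Because the fitness is a reciprocal, an infeasible assignment is not discarded; it simply scores lower than a feasible assignment of the same cost.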

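5.2 Simple PSO

As we have already seen in Section 4, classical PSO is very simple. There are two versions for keeping the neighbours' best vector, namely 'lbest' and 'gbest'. The global neighbourhood 'gbest' is the most intuitive neighbourhood. In the local neighbourhood 'lbest' a particle is connected only to a fragmentary number of particles. The best particle is obtained from the best particle in each fragment.

5.2.1 gbest PSO

In the global version, every particle has access to the fitness and the best value so far of all particles in the swarm. Each particle compares its fitness value with all other particles. This gives strong exploitation of the solution space, but exploration is weak. The implementation can be depicted as a flow chart as shown in Fig. 1.

Fig: Global best PSO (flow chart: generate the initial swarm; evaluate it using the fitness function; find the personal best of each particle and the global best of the swarm; update the particle velocities using the global best; apply the velocities to the particle positions; evaluate the new positions; find the new personal and global bests; repeat until the maximum iteration is reached; get the best individual from the last iteration)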

5.2.2 lbest PSO

In the local version, each particle keeps track of the best vector lbest attained by its local topological neighbourhood of particles. Each particle compares itself with its neighbours, which are decided by the size of the neighbourhood. The groups exchange information about local optima. Here the exploitation of the solution space is weakened and exploration becomes stronger.
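A minimal sketch of how each particle could pick its lbest is given below. The paper does not name a neighbourhood topology, so the ring topology, the parameter `k` (neighbours on each side) and the function name are assumptions. Higher fitness is treated as better, in line with equation (10).

```python
from typing import List, Sequence


def ring_lbest(pbest_fitness: Sequence[float], k: int = 1) -> List[int]:
    """For each particle, return the index of the best personal best among
    itself and its k neighbours on each side of a ring (assumed topology)."""
    size = len(pbest_fitness)
    best_index = []
    for i in range(size):
        neighbours = [(i + d) % size for d in range(-k, k + 1)]
        best_index.append(max(neighbours, key=lambda j: pbest_fitness[j]))
    return best_index


scores = [0.1, 0.5, 0.2, 0.05, 0.3]   # hypothetical pbest fitness values
print(ring_lbest(scores, k=1))         # [1, 1, 1, 4, 4]
print(ring_lbest(scores, k=2))         # [1, 1, 1, 1, 1]
```

With k = 2 the neighbourhood of five particles covers the whole swarm, so every particle sees the same best particle. This is the sense in which gbest is a special case of lbest.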

6. HYBRID PSO

Modern meta-heuristics manage to combine exploration and exploitation search. The exploration search seeks new regions, and once it finds a good region, the exploitation search kicks in.

The embedded simulated annealing heuristic proceeds as follows. Given a particle vector, its r elements are sequentially examined for updating. The value of the examined element is replaced, in turn, by each integer from 1 to n, and the one that attains the highest fitness value among them is retained. While an element is examined, the values of the remaining r-1 elements remain unchanged. A neighbour of the new particle is selected. The fitness values for the new particle and its neighbour are found. They are compared and the minimum value is selected. This minimum value is assigned to the personal best of this particle. The heuristic is terminated when all the elements of the particle have been examined for updating and all the particles have been examined. The computation of the fitness value due to the element updating can be minimized: since a value change in one element affects the assignment of exactly one task, we can save fitness computation by only recalculating the system costs and constraint conditions related to the reassigned task. The flow is shown in Fig. 3.

Fig: Hybrid best PSO (flow chart: generate the initial swarm; evaluate it using the fitness function; initialize the personal best of each particle and the global best of the swarm; update the particle velocities using the personal best or local best; apply the velocities to the particle positions; evaluate the new positions and find the new personal and global bests; improve the solution quality using simulated annealing; repeat until the maximum iteration is reached; get the best individual from the last iteration)

7. DYNAMIC TASK SCHEDULING USING HYBRID PSO

Modern meta-heuristics manage to combine exploration and exploitation search. The exploration search seeks new regions, and once it finds a good region, the exploitation search kicks in. However, since the two strategies are usually intertwined, the search may be directed to other regions before it reaches the local optimum. As a result, many researchers suggest employing a hybrid strategy, which embeds a local optimizer in between the iterations of the meta-heuristic. A hybrid PSO was proposed in [24] which makes use of PSO and the Hill Climbing technique, and the author has claimed that the hybridization yields a better result than normal PSO. This paper uses the hybridization of PSO and the Simulated Annealing algorithm. PSO is a stochastic optimization technique which operates on the principle of social behaviour such as bird flocking or fish schooling. PSO has a strong ability to find the global optimum. The PSO technique can be combined with some other evolutionary optimization technique to yield an even better performance.

Simulated Annealing (SA) is a global optimization technique based on the annealing of metal. It searches for the global optimum using a probabilistic, stochastic search. The SA algorithm has a strong ability to find a locally optimal result and can escape from local optima, but its ability to find the globally optimal result is weak. Hence it can be used together with other techniques such as PSO to yield a better result than when used alone.


The HPSO shown in Fig 1 is an optimization algorithm combining PSO with SA. PSO has a strong ability to find the globally optimal result, although at times it suffers from being trapped in a local optimum. SA has a strong ability to find a locally optimal result, but its ability to find the globally optimal result is weak. Combining PSO and SA joins a good global search algorithm with a good local search algorithm, which yields a promising result. The embedded simulated annealing heuristic proceeds as follows. Given a particle vector, its r elements are sequentially examined for updating. The value of the examined element is replaced, in turn, by each integer value from 1 to n, and the one that attains the highest fitness value among them is retained. While an element is examined, the values of the remaining r-1 elements remain unchanged. A neighbour of the new particle is selected. The fitness values for the new particle and its neighbour are found. They are compared and the minimum value is selected. This minimum value is assigned to the personal best of this particle. The heuristic is terminated when all the elements of the particle have been examined for updating and all the particles have been examined. The computation of the fitness value due to the element updating can be minimized. The procedure for Hybrid PSO is as follows:

1. Generate the initial swarm.

2. Initialize the personal best of each particle and the global best of the entire swarm.

3. Evaluate the swarm using the fitness function.

4. Select the personal best and global best of the swarm.

5. Update the velocity and the position of each particle using equations (6.1) and (7).

6. Obtain the best solution of the current stage.

7. Apply the Simulated Annealing algorithm to further refine the solution.

8. Repeat step 3 to step 7 until the specified maximum number of iterations is reached.

9. Obtain the optimal solution at the end.
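The refinement of step 7, as the text describes it, can be sketched as below. Note that the description amounts to a greedy element-by-element sweep that keeps only improving values, not a temperature-based acceptance rule, so the sketch implements that sweep rather than classical simulated annealing. The function name `refine` and the toy fitness are hypothetical, and the full fitness is recomputed for every candidate instead of using the incremental update mentioned in the text.

```python
from typing import Callable, List, Tuple


def refine(particle: List[int], n: int,
           fitness: Callable[[List[int]], float]) -> Tuple[List[int], float]:
    """Examine the r elements in turn; try every processor 1..n for the
    examined element and keep the value with the highest fitness, while the
    other r-1 elements stay unchanged."""
    best = list(particle)
    best_fit = fitness(best)
    for i in range(len(best)):
        for k in range(1, n + 1):
            if k == best[i]:
                continue
            candidate = best[:i] + [k] + best[i + 1:]
            cand_fit = fitness(candidate)
            if cand_fit > best_fit:          # higher fitness is better, eq. (10)
                best, best_fit = candidate, cand_fit
    return best, best_fit


# Toy fitness (invented): closeness to a known target assignment.
target = [2, 1, 3]
toy_fitness = lambda a: 1.0 / (1 + sum(abs(x - t) for x, t in zip(a, target)))
print(refine([1, 1, 1], 3, toy_fitness))  # ([2, 1, 3], 1.0)
```

One sweep costs r * (n - 1) fitness evaluations per particle, which is why the text suggests recalculating only the costs and constraints of the reassigned task.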

8. FAULT TOLERANCE

After scheduling is done, each process is allowed to run on the processor that was allotted to it by the scheduler. If a new process arrives at run time, an interrupt is raised automatically, as specified in the steps above. If a processor fails during execution, the tasks assigned to that processor fail as well.

In order to overcome this problem we keep track of the task execution at regular time intervals.

The process of fault tolerance is as follows:

1. Task execution is done on the respective allocated processor.

2. A log is created at regular time intervals.

3. If a processor fails, an interrupt is raised and the status of each executed task is collected from the log file.

4. With the available details, rescheduling is done and the process is restarted.
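The recovery steps above can be sketched as follows. The paper does not specify the log format or the rescheduling routine, so everything here is an assumption: the log is reduced to a set of completed task indices, and a greedy re-placement on the surviving processors stands in for re-running the Hybrid PSO scheduler. The function name and the toy fitness are hypothetical.

```python
from typing import Callable, List, Set


def reschedule_after_failure(assign: List[int], failed: int,
                             completed: Set[int], n: int,
                             fitness: Callable[[List[int]], float]) -> List[int]:
    """Move every unfinished task of the failed processor to the surviving
    processor (numbered 1..n) that gives the highest fitness."""
    alive = [k for k in range(1, n + 1) if k != failed]
    if not alive:
        raise RuntimeError("no processor left to reschedule on")
    new_assign = list(assign)
    for i, k in enumerate(assign):
        # Tasks already finished according to the log are left untouched.
        if k == failed and i not in completed:
            new_assign[i] = max(
                alive,
                key=lambda a: fitness(new_assign[:i] + [a] + new_assign[i + 1:]),
            )
    return new_assign


# Processor 2 fails; the log says task 1 had already finished there.
toy_fitness = lambda a: float(sum(a))   # invented: prefers higher processor ids
print(reschedule_after_failure([1, 2, 2, 3], failed=2, completed={1},
                               n=3, fitness=toy_fitness))  # [1, 2, 3, 3]
```

In a fuller implementation the call to `max` would be replaced by a new scheduling run restricted to the surviving processors.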

9. CONCLUSION

In many problem domains, the tasks of an application must be assigned to a set of distributed processors such that the incurred cost is minimized and the system throughput is maximized. Several versions of the task assignment problem (TAP) have been formally defined but, unfortunately, most of them are NP-complete. In this paper, we have proposed a particle swarm optimization/simulated annealing (PSO/SA) algorithm which finds a near-optimal task assignment in reasonable time. The Hybrid PSO performs better than the local PSO and the global PSO.

REFERENCES

[1] Abdelmageed Elsadek.A, Earl Wells.B, A Heuristic model for task allocation in heterogeneous distributed computing systems, The International Journal of Computers and Their Applications, Vol. 6, No. 1, 1999.
[2] Annie S. Wu, Shiyun Jin, Kuo-Chi Lin and Guy Schiavone, Incremental Genetic Algorithm Approach to Multiprocessor Scheduling, IEEE Transactions on Parallel and Distributed Systems, 2004.
[3] Batainah.S and AI-Ibrahim.M, Load management in loosely coupled multiprocessor systems, Journal of Dynamics and Control, Vol. 8, No. 1, pp. 107-116, 1998.
[4] Chen Ai-ling, Yang Gen-ke, Wu Zhi-ming, Hybrid discrete particle swarm optimization algorithm for capacitated vehicle routing problem, Journal of Zhejiang University, Vol. 7, No. 4, pp. 607-614, 2006.


[5] Dar-Tzen Peng, Kang G. Shin, Tarek F. Abdelzaher, Assignment and Scheduling Communicating Periodic Tasks in Distributed Real-Time Systems, IEEE Transactions on Software Engineering, Vol. 23, No. 12, 1997.
[6] Edwin S. H. Hou, Ninvan Ansari, and Hong Ren, A genetic algorithm for multiprocessor scheduling, IEEE Transactions on Parallel and Distributed Systems, Vol. 5, No. 2, 1994.
[7] Fatih Tasgetiren.M & Yun-Chia Liang, A Binary Particle Swarm Optimization Algorithm for Lot Sizing Problem, Journal of Economic and Social Research, Vol. 5, No. 2, pp. 1-20.
[8] Maurice Clerc and James Kennedy, The Particle Swarm - Explosion, Stability, and Convergence in a Multidimensional Complex Space, IEEE Transactions on Evolutionary Computation, Vol. 6, No. 1, 2002.
[9] Osman, I.H., Metastrategy simulated annealing and tabu search algorithms for the vehicle routing problem, Annals of Operations Research, Vol. 41, No. 4, pp. 421-451, 1993.
[10] Parsopoulos.K.E, Vrahatis.M.N, Recent approaches to global optimization problems through particle swarm optimization, Natural Computing, Vol. 1, pp. 235-306, 2002.
[11] Peng-Yeng Yin, Shiuh-Sheng Yu, Pei-Pei Wang, Yi-Te Wang, A hybrid particle swarm optimization algorithm for optimal task assignment in distributed systems, Computer Standards & Interfaces, Vol. 28, pp. 441-450, 2006.
[12] Ruey-Maw Chen, Yueh-Min Huang, Multiprocessor Task Assignment with Fuzzy Hopfield Neural Network Clustering Techniques, Journal of Neural Computing and Applications, Vol. 10, No. 1, 2001.
[13] Rui Mendes, James Kennedy and José Neves, The Fully Informed Particle Swarm: Simpler, Maybe Better, IEEE Transactions of Evolutionary Computation, Vol. 1, No. 1, January 2005.
[14] Schutte.J.F, Reinbolt.J.A, Fregly.B.J, Haftka.R.T and George.A.D, Parallel global optimization with the particle swarm algorithm, International Journal for Numerical Methods in Engineering, Vol. 6, pp. 2296-2315, 2004.


[15] Tzu-Chiang Chiang, Po-Yin Chang, and Yueh-Min Huang, Multi-Processor Tasks with Resource and Timing Constraints Using Particle Swarm Optimization, IJCSNS International Journal of Computer Science and Network Security, Vol. 6, No. 4, 2006.
[16] Virginia Mary Lo, Heuristic algorithms for task assignment in distributed systems, IEEE Transactions on Computers, Vol. 37, No. 11, pp. 1384-1397, 1998.
[17] Yskandar Hamam, Khalil S. Hindi, Assignment of program modules to processors: A simulated annealing approach, European Journal of Operational Research, 122, pp. 509-513, 2000.
[18] Ioan Cristian Trelea, The particle swarm optimization algorithm: convergence analysis and parameter selection, Information Processing Letters, Vol. 85, pp. 317-325, 2003.
[19] James Kennedy, Russell Eberhart, Particle Swarm Optimization, Proc. IEEE Int'l. Conference on Neural Networks, Vol. 4, pp. 1942-1948.
[20] Shi.Y and Eberhart.R, Parameter Selection in Particle Swarm Optimization, Evolutionary Programming VII, Proceedings of Evolutionary Programming, pp. 591-600, 1998.
[21] Kennedy.J and Russell C. Eberhart, Swarm Intelligence, pp. 337-342, Morgan-Kaufmann, 2001.
[22] Graham Ritchie, Static Multi-processor scheduling with Ant Colony Optimization and Local search, Master of Science thesis, University of Edinburgh, 2003.
[23] Van Den Bergh.F, Engelbrecht.A.P., A study of particle swarm optimization particle trajectories, Information Sciences, pp. 937-97, 2006.
[24] Yuhui Shi, Particle Swarm Optimization, IEEE Neural Network Society, 2004.
[25] Multiprocessor Scheduling using HPSO with Dynamically varying Inertia, International Journal of Computer Science and Applications, Vol. 4, Issue 3, pp. 95-106, 2007.
[26] Multiprocessor Scheduling using Particle Swarm Optimization, International Journal of the Computer, the Internet and Management, Vol. 17, No. 3, pp. 11-24, 2009.
