Hawkeye: 3D visualisation and modelling with ICT


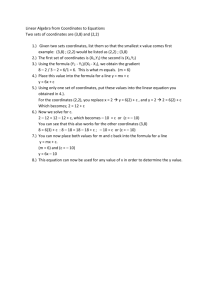

Preface

A week or so ago I circulated some ideas about using pairs of synchronised video clips, taken from different views of the same object in motion, to recreate its 3D space-time coordinates and to plot its trajectory as a space curve. The context was that I have recently become interested in some of the experimental techniques used in sports science, particularly biomechanics, and wanted to see whether some of the ideas could be made accessible to interested students and teachers without all the expensive hardware and software usually connected with them!

Thanks to helpful feedback from a number of recipients I have brushed up the ideas into the form of an article, and taken the opportunity both to correct an error and to add some material on 3D vector geometry. The error was in my choice of the viewing plane: I had assumed it would contain the “world” z-axis, which would only be true if the viewpoint V was in the ground plane. For greater generality the viewing plane should be perpendicular to OV.

In standard texts on computer graphics (including my own “Geometry with Microcomputers”) the usual approach to perspective geometry applies a succession of matrices to rotate, scale and project points, in the most general case using 4x4 matrices with homogeneous coordinates! Assuming that most interested readers would have little or no knowledge of matrices or their geometric application, I needed to find a different technique. In my original piece I used similar triangles and trigonometry to compute projections, but the more general position of the viewing plane called for a more appropriate approach.

However, the article is not just a technical one offering solutions to a problem which anyone interested should now be able to integrate into their own explorations. There is a pedagogical dimension as well.
I wanted to explore the use of CAS assistance in an area of mathematics in which I have never used it before: vector geometry. Although 2D vectors are in the KS3/4 curriculum they do not get much attention! The best we can hope for is that students might remember that the journey from A to B as a vector is the same as the journey from A to C and then from C to B:

(1) AB = AC + CB.

Perhaps also that if a and b are the position vectors of A and B then

(2) AB = AO + OB = -OA + OB = -a + b = b - a,

and that if A, B, C lie on the same straight line then

(3) AC = t.AB for some value of t.

Maybe a few students will recognise that these work in 3D as well as 2D? That’s enough for us to find the position vector r of any point R on the line VP as:

(4) r = OR = OV + VR = OV + t.VP = v + t.(p - v) = (1-t).v + t.p

which is pretty well the most useful tool for vector geometry: the vector equation of a line.

What remains are tools to help with testing and making things perpendicular. Again, from GCSE coordinate geometry, students should recognise the condition for the 2D vectors a = (xa, ya) and b = (xb, yb) to be at right angles (aka perpendicular, aka orthogonal) by considering the gradients of OA and OB: ya/xa = -xb/yb, to get

(5) xa.xb + ya.yb = 0.

So we can probably get away with defining this to be the 2D dot product of the vectors a and b, and with stating that this extends to the 3D case as well, where the dot product, dotP(a,b), is given by a.b = xa.xb + ya.yb + za.zb, and that a is perpendicular to b if (and only if) a.b = 0. (So we don’t need to raise the idea of the scalar product as |a||b| cos θ.) This enables us to show that x = (-yv, xv, 0) is perpendicular to v = (xv, yv, zv). The remaining trick we need is to produce a vector y which is perpendicular to both x and v, and I would be content to say that this is what the function y = crossP(v,x) produces! (We can always check that v.y = 0 = x.y.)
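For readers without a CAS to hand, the two vector tools just described can be sketched in a few lines of Python. The function names dot_p and cross_p are simply stand-ins for dotP and crossP, and the viewpoint (3, 4, 2) is an illustrative value, not one taken from the article.

```python
def dot_p(a, b):
    """Dot product of two 3D vectors given as (x, y, z) tuples."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross_p(a, b):
    """Cross product a x b: a vector perpendicular to both a and b."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


# Illustrative viewpoint (an assumption, not a value from the article).
v = (3.0, 4.0, 2.0)
x = (-v[1], v[0], 0.0)   # (-yv, xv, 0), chosen so that v.x = 0
y = cross_p(v, x)        # third axis, perpendicular to both v and x

# The three checks v.x, v.y and x.y should all be zero.
print(dot_p(v, x), dot_p(v, y), dot_p(x, y))
```

Working the cross product through by hand gives y = (-xv.zv, -yv.zv, xv² + yv²), so its z-component is positive whenever V is off the z-axis: the viewing y-axis points "upwards".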
I would be about as enthusiastic now about slogging through the algebra to arrive at the perspective projection functions xx and yy as I was when I was punished at primary school by copying out longer and longer psalms, and almost certainly less accurate! By applying algebraic results in known geometric situations, like the carousel, we can get immediate visual feedback (Logo style) as to whether or not we are on the right lines.

In pursuing this investigation I used just 3 different software tools: Cabri 3D, Tracker and TI-Nspire. These seem to me to represent possible elements of a basis set of software for science and mathematics experimentation, modelling and problem-solving which should be available to all schools, students and teachers. At the moment I need to add some data-logging software such as Vernier’s Logger Pro, and maybe another geometry tool like Sketchpad, Cabri 2D or GeoGebra. We just need Lord Mandelson to invest!!

Adrian Oldknow, March 2010, aoldknow@yahoo.co.uk

1. Introduction

We are now used to having TV and video cameras at major sporting events, e.g. to adjudicate contested line calls in tennis or to assist a third umpire in cricket. They permit us to see simulations of how the ball was travelling, based on 3D reconstructions of its flight, and hence to predict whether or not it would land in court or hit the wicket. In this article we will take a look at some of the mathematical techniques which make this possible. First we will use some ICT tools to establish how to map the 3D coordinates of an object in space onto the 2D coordinates of its perspective projection from a viewpoint onto a viewing plane. Then we will use the technique to show how we can obtain visualisations of geometric loci, of algebraic space curves and of 3D scatter plots. These techniques should enable the interested reader to set up and explore 3D models for themselves using appropriate ICT tools.
We will start with a 3D geometry tool (Cabri 3D), and a mathematical package containing 2D geometry, graphing, computer algebra, numeric computation and spreadsheets (TI-Nspire), to try to obtain the magic formulae.

2. Setting up the 3D scene

In order to calculate positions we need some sort of coordinate system for 3D space. We will start with a common one in which the plane defined by the origin O together with the x- and y-axes forms the “ground floor”, or horizontal plane, and so the z-axis is the line through O perpendicular to this plane, i.e. the vertical. The conventional way of sorting out positive from negative directions is to use a so-called “right triad”: using your right hand, your middle finger points along the positive x-axis (coming towards you), your first finger points up the positive z-axis (upwards) and your thumb points along the positive y-axis (in front of you from left to right). This is the representation which Cabri 3D uses.

Cabri 3D’s starting point is just to give you three short coloured vectors and the shaded ground plane (z = 0). The red and green vectors are in the ground plane and the blue one represents the vertical. We have constructed points X, Y and Z at the ends of the vectors and measured their coordinates, together with those of the origin O. We have constructed a point (x, y, 0) in the ground plane which can be freely dragged around. Next we have constructed the vertical to the ground plane through (x, y, 0) and constructed a point (x, y, z) on it. This point, together with the origin, defines one diagonal of a cuboid whose vertices are shown.

In order to investigate projective geometry we need to define an object P (xp, yp, zp) to view and a viewpoint V (xv, yv, zv) from which to view it. From these we should be able to calculate the position of the projected image point I (xi, yi, zi) in the chosen viewing plane and convert this to 2D coordinates (Xi, Yi) for use in 2D graphing and drawing.
In the figure alongside, a sphere has been constructed with centre O through a point A along the extended x-axis. The point V is taken on the surface of the sphere (hidden), and the diagram shows the actual values of the coordinates (vx, vy, vz), the length r = OA, and the angles θ = ∠NOV and φ = ∠AOS, which correspond to the spherical polar coordinates of V. The vector OV is the perpendicular (also called the “normal”) to the viewing plane through O. This establishes the connection between the 3D “Cartesian” coordinates with which we will be working, and the “spherical polar coordinates” used for example in Google Earth, SatNav and GPS systems. Later we will set up such a system for improved “feel” when manipulating 3D objects on screen.

So how, with just 6 numbers (the 3D coordinates of V and P), can we calculate the 3D and 2D coordinates of the projected image I? We need a few ideas from vector geometry. Any point X on the viewing x-axis will have 0 for its z-coordinate and be such that OX is perpendicular to OV. Two vectors are perpendicular if their “dot product” is zero. In particular the point X (-yv, xv, 0) has zero dot product with V (xv, yv, zv). The “vector product” of two vectors along OV and OX will give a vector along the third axis in the viewing plane, shown as OY.

We can write the position vector of any point J on the line VP in the form J = (1-t)V + tP, and there will be a unique value of t for which J lies in the plane XOY. This is when OJ is perpendicular to OV. So we form the dot product J.V and find the value of t for which this is zero. Substituting this value for t in the equation for J gives the vector I.

Then we can consider the viewing x-axis. Here the point Q lies on OX so that IQ is perpendicular to OX. We can write OQ = s.OX and use the previous technique to set up and solve a dot product equation for s. Finally we repeat this trick for the viewing y-axis and find the point R on OY such that IR is perpendicular to OY.
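Written out in vector form, the three dot product equations just described solve as follows. This is a sketch of the algebra rather than a copy of any CAS screen; the parameter name u for the viewing y-axis, and the labels X_I and Y_I for the 2D coordinates, are choices made here.

$$
\begin{aligned}
\mathbf{v}\cdot\big((1-t)\,\mathbf{v} + t\,\mathbf{p}\big) = 0
&\;\Longrightarrow\; t = \frac{\mathbf{v}\cdot\mathbf{v}}{\mathbf{v}\cdot\mathbf{v} - \mathbf{p}\cdot\mathbf{v}},
\qquad \mathbf{i} = (1-t)\,\mathbf{v} + t\,\mathbf{p},\\[4pt]
(s\,\mathbf{x} - \mathbf{i})\cdot\mathbf{x} = 0
&\;\Longrightarrow\; s = \frac{\mathbf{i}\cdot\mathbf{x}}{\mathbf{x}\cdot\mathbf{x}},
\qquad X_I = s\,|\mathbf{x}| = \frac{\mathbf{i}\cdot\mathbf{x}}{|\mathbf{x}|},\\[4pt]
(u\,\mathbf{y} - \mathbf{i})\cdot\mathbf{y} = 0
&\;\Longrightarrow\; u = \frac{\mathbf{i}\cdot\mathbf{y}}{\mathbf{y}\cdot\mathbf{y}},
\qquad Y_I = u\,|\mathbf{y}| = \frac{\mathbf{i}\cdot\mathbf{y}}{|\mathbf{y}|}.
\end{aligned}
$$

The first step fails only when v.v = p.v, which is when VP is parallel to the viewing plane and so never meets it.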
Of course the algebra can get pretty messy, so having a CAS, such as the Math Boxes on a TI-Nspire Notes page, is a great boon. In the screen below we have a TI-Nspire Notes page open on the left and the Cabri 3D geometry page on the right. We copy the values for the vectors v and p, and solve the equation v.((1-t)v + tp) = 0 for t. The value t = 1.8 is the ratio VI/VP of the lengths VI and VP. The vector i agrees with the coordinates of I, too! We define vector x by copying in the values of -yv, xv and 0. Vector y is defined as the cross product of v and x. For the viewing x-axis we find that OQ = 1.34 OX, and so the 2D X coordinate of I is OQ = 6.7. Repeating this for the viewing y-axis we find that OR = 0.24 OY, so the 2D Y coordinate is OR = 6.4.

Now we can run repeat trials by adjusting V and/or P in the Cabri screen, copying the changes to any independent variables to the TI-Nspire screen, and checking that the 3D and 2D viewing coordinates are computed correctly. Once we are happy that the process (or “algorithm”) is correct we can go and find algebraic functions which carry out the transformations.

In the first of the algebra screens we set up the general coordinates for the points V and P in the column vectors v and p. We enter the elements for the vector x for the point X on the viewing x-axis so that v.x = 0, and compute the vector y for the point Y on the viewing y-axis as the cross product of v with x. This ensures we have three mutually perpendicular (“orthogonal”) vectors for the viewing coordinate system. The coordinates for the image point I in the viewing plane are found by solving for where the point (1-t)v + tp meets the viewing plane, where its position vector is perpendicular to v. This value of t is substituted in the formula for i to find the coordinates xi, yi and zi as functions of our 6 independent variables xv, yv, zv, xp, yp, zp.
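The same algorithm can be tried numerically outside TI-Nspire. The following is a minimal Python sketch of the steps described above, not the author's CAS code: the function name project, the helper dot and the sample points are all assumptions made for illustration.

```python
import math


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(ai * bi for ai, bi in zip(a, b))


def project(p, v):
    """Project object point p from viewpoint v onto the plane through O
    perpendicular to OV. Returns (i, X, Y): the 3D image point and its
    2D coordinates along the viewing x- and y-axes."""
    xv, yv, zv = v
    if xv == 0 and yv == 0:
        raise ValueError("V is on the z-axis, so x = (-yv, xv, 0) is the zero vector")
    denom = dot(v, v) - dot(p, v)
    if denom == 0:
        raise ValueError("VP is parallel to the viewing plane")
    t = dot(v, v) / denom                            # solves v.((1-t)v + tp) = 0
    i = tuple((1 - t) * vc + t * pc for vc, pc in zip(v, p))
    x_axis = (-yv, xv, 0.0)                          # perpendicular to v, in the ground plane
    y_axis = (-xv * zv, -yv * zv, xv * xv + yv * yv) # cross product of v with x
    big_x = dot(i, x_axis) / math.sqrt(dot(x_axis, x_axis))
    big_y = dot(i, y_axis) / math.sqrt(dot(y_axis, y_axis))
    return i, big_x, big_y


# Illustrative values only: a point 1 unit up the z-axis seen from (4, 0, 3).
image, X, Y = project(p=(0.0, 0.0, 1.0), v=(4.0, 0.0, 3.0))
print(image, X, Y)
```

A useful sanity check is that any point already lying in the viewing plane (p.v = 0) gives t = 1 and so projects to itself.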
In the second screen we find the values of the parameters s and u which define the projections of I onto the viewing x- and y-axes. In the third screen below these are used to define the projected 2D coordinates xx and yy as functions of the 6 variables. Now we have just what we wanted: a pair of functions xx and yy which take the coordinates of the viewpoint V and an object point P and return the X and Y coordinates of the projection of P in the viewing plane. You could use these functions in other software, such as Excel, to produce perspective views of data and curves, but we will stick with TI-Nspire. With x = (-yv, xv, 0) and y = crossP(v, x), the steps of this section give:

$$xx(x_p,y_p,z_p,x_v,y_v,z_v) = \frac{(x_v^2+y_v^2+z_v^2)\,(x_v y_p - y_v x_p)}{\sqrt{x_v^2+y_v^2}\;\big(x_v^2+y_v^2+z_v^2 - x_v x_p - y_v y_p - z_v z_p\big)}$$

$$yy(x_p,y_p,z_p,x_v,y_v,z_v) = \frac{\sqrt{x_v^2+y_v^2+z_v^2}\;\big((x_v^2+y_v^2)\,z_p - z_v\,(x_v x_p + y_v y_p)\big)}{\sqrt{x_v^2+y_v^2}\;\big(x_v^2+y_v^2+z_v^2 - x_v x_p - y_v y_p - z_v z_p\big)}$$

3. Plotting a space curve

Alexander is piloting the black plane which swoops up and down as it goes round and round. Any part of him is tracing out a curve in space. Can you visualise the locus of his left ear? We will try to model this path both in Cabri 3D (as a trajectory) and in modelling software such as TI-Nspire (as a space curve), by using parametric functions to define his x-, y- and z-coordinates at any time t.

We start with a point A in the ground plane and construct the circle through A around the vertical z-axis vector. P is any point on this circle. B and C are points taken on the perpendicular to the ground plane through O. The line parallel to the x-axis through B (not shown) is taken as the axis for the circle centre B through C. Q is any point on this circle. The perpendicular to the ground plane at P is constructed, as is the plane through Q parallel to the ground plane. This plane cuts the vertical through P at S and the z-axis at R. P and Q can slide independently on their domains (the horizontal and vertical circles). As they do so, S moves on a space curve. P and Q can be made to move around their circles at different speeds using the Animation controller. The resulting trajectory of S can be traced as shown.
To model this algebraically we note that since P is describing a circle in the ground plane, the x- and y-coordinates of S will be of the form x = a cos (dt), y = a sin (dt), where a = OA is the radius of the circle and d defines the speed of rotation. Q is also describing a circle, but it is its z-coordinate OR which fixes the z-coordinate of S, and this is given by z = b + c cos (et), where b = OB, c = BC and e defines the speed of rotation.

On the fourth “CAS” screen we define the functions xe, ye and ze which convert from spherical polar coordinates (r, θ, φ) to Cartesian coordinates (x, y, z). We also define drawing parameters and functions to conform with measurements taken from Cabri 3D. Now we can plot the architect’s style plan and elevations by plotting each of the three pairs of parametric equations. The plan view is just the circle, and the elevations are known as Lissajous figures.

We should now have all we need to get to work with 3D projected displays. The first test is to create a 3D data set from which we will produce a 2D scatter plot. In a spreadsheet we set up a list of values for time t, called tv, as a sequence from 0 to 500 in steps of 5. We define the lists xcoord, ycoord and zcoord as values for the x, y, z coordinates of points on the aircraft’s flight path calculated from the functions fx(t), fy(t) and fz(t) at each value of t in tv. On a Graph page we have set up sliders by which you can adjust the coordinates of the viewing position V. These set the values of the variables px, py and pz used to convert (xcoord, ycoord, zcoord) into the projected 3D coordinates (xproj, yproj, zproj) and finally the 2D versions (xdat, ydat). The scatter plot of ydat against xdat is plotted as the red dots on the Graph page. Well, we seem to have passed that test!
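The spreadsheet test can be mimicked in Python as a rough sketch. The list names tv, xcoord, ycoord, zcoord, xdat and ydat follow the text, but the values chosen for a, b, c, d, e and for the viewing position are illustrative assumptions, and xx_yy is a closed form worked out here from the construction in section 2 (x = (-yv, xv, 0), y = v cross x) rather than a copy of the TI-Nspire definitions.

```python
import math

# Illustrative model parameters (assumptions): radius a, height b, bounce c,
# and speeds d, e in degrees per unit of t.
A, B, C, D, E = 5.0, 3.0, 1.0, 1.0, 3.0


def flight(t_deg):
    """Point S on the flight path at time t, with angles in degrees."""
    return (A * math.cos(math.radians(D * t_deg)),
            A * math.sin(math.radians(D * t_deg)),
            B + C * math.cos(math.radians(E * t_deg)))


def xx_yy(p, v):
    """2D viewing-plane coordinates of object point p seen from viewpoint v."""
    xp, yp, zp = p
    xv, yv, zv = v
    h2 = xv * xv + yv * yv      # squared distance of V from the z-axis
    v2 = h2 + zv * zv           # squared length of OV
    denom = math.sqrt(h2) * (v2 - xv * xp - yv * yp - zv * zp)
    xx = v2 * (xv * yp - yv * xp) / denom
    yy = math.sqrt(v2) * (h2 * zp - zv * (xv * xp + yv * yp)) / denom
    return xx, yy


tv = list(range(0, 501, 5))                         # t = 0, 5, ..., 500
xcoord, ycoord, zcoord = zip(*(flight(t) for t in tv))
view = (20.0, 10.0, 8.0)                            # assumed viewing position V
xdat, ydat = zip(*(xx_yy(p, view) for p in zip(xcoord, ycoord, zcoord)))
print(len(xdat), xdat[0], ydat[0])
```

Plotting ydat against xdat in any graphing tool should then give a perspective view of the flight path, and changing view plays the part of the sliders.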
We can also project the three parametric equations (fx, fy, fz) into the 2D parametric representation of the space curve: x = xx(fx(t), fy(t), fz(t)), y = yy(fx(t), fy(t), fz(t)), again with t running from 0 to 500 in steps of 5, and with the document options for angles set to degrees (not radians).

Now that we have passed that test, it just remains to check out a polar coordinate means of data entry. So we have divided a new page between Geometry (thin strip on left) and Graph, thus allowing us to rescale the graphs without upsetting the geometric constructions. Values for latitude (theta), longitude (phi) and radius (aa) are entered using the dials and slider. These control the variables ex, ey and ez used on the new spreadsheet page to compute data sets xdat2 and ydat2 using the same functions and data as before. Now we have “Google Earth-like” controls to view the space curve from different positions.

As a final bit of fun we can extend the range of the plotting parameter t to show more of the space curve, hide the scatter plot, and construct a point on the curve. This is used as the centre of a small circle constructed with the Compass tool using the small segment at the top right as radius. The point’s Attributes can be changed to animate it slowly along the curve, which brings up the animation controller. So now you can see a perspective view of Alexander’s head.

But not quite! The simple construction used at the start of this section wasn’t actually correct for the aeroplane ride, though it would be OK for the old-fashioned steam carousel ride. If you look back at the picture of the plane ride you can see that there is a point of suspension from the top and that there are hydraulic rams which push and pull the struts which support the planes. So we can model this by seeing that as D moves to H, so A moves to F and slides on an arc of a circle.
The distance CP (from the centre to Alexander’s ear) is fixed by the lengths and angles of the polygon CFGP, and so P also has a locus which is an arc of a circle in the plane CFE. So we can combine the up and down motion of P in that plane with a rotational motion around OC. If we just animate the point on the arc we get a constant speed with an abrupt change of direction at each end, which wouldn’t be comfortable. Ideally we should make a closer study of the actual mechanism used or, failing that, get some video clips of the actual motion. In the absence of that, one way to map smooth circular motion onto a circular arc is shown in the Cabri 3D model. An arbitrary circle has been drawn around C and a point on it is animated. Tangents are drawn to the circle to pass through the end points of the arc. Taking their intersection as centre we can project the point on the circle onto a point on the arc. See if you can make a simpler model, maybe using Lego, Meccano or K’Nex? Can you turn this into a set of parametric equations for the x-, y- and z-coordinates of the pilot’s ear as functions of the parameter t?

4. Capturing and interpreting 3D data with video cameras

Recently I had the pleasure of seeing a fully equipped biomechanics laboratory at the University of Chichester, with a battery of cameras, force plates, reflectors and computers to track different parts of a human body in motion. Having been working at analysing different types of 1- and 2-dimensional motion I came away inspired to see if I could set up my own little home studio and work out how to recapture 3D motion from synchronised video clips of the same motion, taken from two Casio Exilim digital video cameras mounted on tripods to view the same scene but at right angles to each other. Here is an image of the makeshift studio. So that is the basis of my home-baked Hawkeye system.
The heavy wooden elephant is suspended by a long stiff spring from the retort stand mounted on the folding projector stand, counterbalanced by a pile of books. Two metre rules are set out on the ground at right angles, and two camera tripods are lined up with them to focus on the top of the elephant’s head. My wife, Jennie, has control over the left camera (the one with the zoom lens) and I have control over the right camera (the compact one). I set the elephant in a sort of 3D oscillation, get to my camera, say “ready, steady, shoot” and we each take a few seconds of video. So as you can see the experimental conditions are a bit rough and ready!

The next step is to collect the two AVI files on the laptop and to analyse them using the Tracker 3 software (free Java download) from Doug Brown of Cabrillo College, USA. Like more expensive sports analysis software, it has the advantage of being able to auto-track a given part of the object. Here are screens from Tracker showing the apparent trajectory of Ellie in the left and right hand views, both as tracks on the video clip and as scatter plots drawn from the captured x- and y-coordinate data. A still photograph was taken from each camera position of Ellie at rest in order to have a common set of axes and scales. One of the struts of the projector stand was conveniently 50cm long. You can see the tracking on the left video was a bit rough and ready, but we can iron some of the bumps out when we do the data analysis. The auto-tracking was done using Ellie’s white eyes with the black lenses, and an offset marker placed at the hook on the top of the elephant’s head. The motion was relatively sedate, so we didn’t need to use the high speed (210 fps) capability of the cameras, and the captured video is at 30 fps in 640x480 resolution. Running two copies of Tracker side by side we can synchronise the action by skipping the first 20 or so frames from the right hand video.
Data from each table for about 7.2s is copied and pasted into Excel, where it is converted to 3 d.p. numeric data format before being copied and pasted into a TI-Nspire List & Spreadsheet page. The first three columns (ta, xl, yl) are the time, x- and y-coordinate data from the left Tracker view, and the next two (xr, yr) are the corresponding data from the right view. Now we can draw some scatter plots in TI-Nspire. Here we have plots of trajectories for each view together with views of each coordinate against time. We can see that each coordinate looks roughly like a sinusoidal function of time, and so, technically, the trajectories will be reasonably modelled by Lissajous figures.

We have estimated the centres of the cameras to be above points on the floor which are each roughly 1.65m from the point below where Ellie is suspended at rest. Using a Graph page we can ensure we have equal axes for comparison. The basic principle of the “stereo pair” technique is to treat the right and left images as the points (xr, 0, yr) and (0, xl, yl) on the coordinate planes y = 0 and x = 0, and to convert them into the 3D position (xc, yc, zc) of the object. We can use the functions already established in section 2, but as a check we can also arrive at them with simpler mathematics. We can use ratios and similar triangles or 2D coordinate geometry in the ground plane (z=0) to find xc and yc in terms of ax, by, xl, yl, xr and yr. The formulae are shown in the screen shot. The formulae can be validated by using measurements taken from a scale drawing where the point S can be dragged about. Results can then be used in the vertical right-angled triangles QBT and RAU to compute estimates zc1 and zc2 from the left and right views, and to take their average for the value of zc. The coordinates (ta, xc, yc, zc) now give the space-time positions of the trajectory of Ellie. We can also check these out with the 3D to 2D projection functions we derived earlier, this time working with 3D coordinates in Cabri 3D.
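Since the formulae themselves are only in the screen shot, here is one way the stereo calculation might be sketched in Python. It rests on assumptions that are not confirmed by the text: the left camera is taken to be at A(ax, 0, 0) and the right camera at B(0, by, 0), both at the height of the origin, and the function name stereo_point is made up for this example. The ground-plane step intersects the sight line from A through (0, xl) with the sight line from B through (xr, 0); the two height estimates then come from similar triangles.

```python
def stereo_point(xl, yl, xr, yr, ax, by):
    """Estimate (xc, yc, zc) from a left image (0, xl, yl) and a right image
    (xr, 0, yr). Assumes cameras at A(ax, 0, 0) and B(0, by, 0)."""
    denom = ax * by - xl * xr
    if denom == 0:
        raise ValueError("the two sight lines are parallel in the ground plane")
    # Intersection of the two sight lines in the ground plane z = 0.
    xc = ax * xr * (by - xl) / denom
    yc = by * xl * (ax - xr) / denom
    # Similar triangles: heights scale with horizontal distance from each camera.
    zc1 = yl * (ax - xc) / ax    # estimate from the left view
    zc2 = yr * (by - yc) / by    # estimate from the right view
    return xc, yc, (zc1 + zc2) / 2


# Round-trip check with made-up numbers: project a known point onto the two
# image planes, then recover it.
ax = by = 1.65
true_c = (0.2, 0.1, 0.3)
u = ax / (ax - true_c[0])        # stretch factor from A out to the plane x = 0
w = by / (by - true_c[1])        # stretch factor from B out to the plane y = 0
xl, yl = u * true_c[1], u * true_c[2]
xr, yr = w * true_c[0], w * true_c[2]
estimate = stereo_point(xl, yl, xr, yr, ax, by)
print(estimate)
```

With real Tracker data the two height estimates will not agree exactly, which is why the text averages them; a large disagreement would suggest the clips are not properly synchronised or the camera positions are off.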
We will now see if we can fit sinusoidal models to each of the position coordinates in a Spreadsheet. Well, we can find approximations for xc(t) and yc(t), but not for zc(t), using sinusoidal regression calculations. Instead, for the zc coordinates we can plot a sine graph and then stretch, shift and squeeze the curve whose equation is y = a.sin(b.t + c) + d to get a good “by eye” fit. Up to now we have been working in degrees throughout, but the sinusoidal models are all in radians, so you will need either to start a new file or to use a conversion factor h = 180/π. We can see that these do make reasonable approximations to a smooth motion, which the view from the top reveals to be an underlying elliptic conical pendulum while Ellie is still bouncing up and down, like some fairground rides and carousels.

We can export the parametric equations for the motion into 3D graphing software, such as Autograph, to check out alternative views. But the mathematics built in to Autograph for projecting 3D into 2D is something which, as we have seen in section 2 above, we can replicate in TI-Nspire. Now that we know the functions xx and yy which compute the horizontal and vertical coordinates of the projected image in the viewing plane, we can apply them to the parametric functions used to model the trajectory (xc, yc, zc) and so project the space curve onto any chosen plane. So again we can set up sliders to define values for the viewing point (xv, yv, zv) and plot the corresponding projected trajectory. A more convenient means of viewpoint control would be to use spherical polar coordinates (r, θ, φ). Again we can split a TI-Nspire page into a Geometry area for the viewing gadgets, and a Graph area for the projected space curve and data scatter plot.

5. Conclusion

In summary, then, we have:

- developed a method for projecting from 3D space coordinates to 2D display coordinates for any given viewing position (xv, yv, zv) based on simple vector geometry;
- developed a methodology for mathematical problem solving integrating features of geometry, measurement, data-handling and computer algebra to establish and validate symbolic results;
- developed a technique for producing 3D loci as trajectories in Cabri 3D geometry software using animation;
- developed an interactive graphical user interface using spherical polar coordinates (r, θ, φ);
- established a practical mechanism for collecting 3D data (xc, yc, zc) for the path in space of a moving object from a pair of synchronised video clips from orthogonal viewpoints;
- developed a method for modelling 3D motion with parametric functions (xc(t), yc(t), zc(t)) such as sine functions of the form a.sin(b.t + c) + d;
- applied this to displaying both parametric space curve graphing of a model and 3D scatter plots of data.

Of course, once everything can have a GPS tracker attached to it, we will be able to capture 3D coordinate data directly, but for now we can see how Hawkeye and other systems can use videos to reconstruct position. This is also the basis of some of the computer gaming devices under development. With the Nintendo Wii system you are using wireless data-capture from sensors, in that case accelerometers, but other systems will use stereo webcams to achieve the same results.

If you have some motion detectors you could experiment with setting three of them up on orthogonal axes at A(ax,0,0), B(0,by,0) and C(0,0,cz), from where each can measure the distance rA, rB, rC to an object such as a bouncing ball P(x,y,z). The intersection of the spheres centre A, radius rA etc. will determine the position and hence the coordinates of P. Then our projection techniques will allow us to display the trajectory of P from any viewpoint V. See if you can set up and use such a tracking system.
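As a starting point for that experiment, the intersection of the three spheres can be sketched in Python. This is one possible approach rather than a tested tracking system, and the function name locate and the sample numbers are assumptions. Writing S = x² + y² + z², each sphere equation gives one coordinate as a linear function of S (for example x = (S + ax² - rA²)/(2.ax)), and substituting these back into S = x² + y² + z² leaves a quadratic in S.

```python
import math


def locate(ax, by, cz, ra, rb, rc):
    """Candidate positions of P from distances ra, rb, rc measured at
    A(ax,0,0), B(0,by,0) and C(0,0,cz). Returns zero, one or two points."""
    # Each coordinate is m*S + k, where S = x^2 + y^2 + z^2.
    m = (1 / (2 * ax), 1 / (2 * by), 1 / (2 * cz))
    k = ((ax * ax - ra * ra) / (2 * ax),
         (by * by - rb * rb) / (2 * by),
         (cz * cz - rc * rc) / (2 * cz))
    # Quadratic qa*S^2 + qb*S + qc = 0 from S = sum((m*S + k)^2).
    qa = sum(mi * mi for mi in m)
    qb = 2 * sum(mi * ki for mi, ki in zip(m, k)) - 1
    qc = sum(ki * ki for ki in k)
    disc = qb * qb - 4 * qa * qc
    if disc < 0:
        return []                # the measured spheres do not meet
    roots = {(-qb + sign * math.sqrt(disc)) / (2 * qa) for sign in (1, -1)}
    return [tuple(mi * s + ki for mi, ki in zip(m, k)) for s in sorted(roots)]


# Made-up example: detectors 4 units out along each axis, ball at (1, 2, 0.5).
ball = (1.0, 2.0, 0.5)
ra = math.dist(ball, (4.0, 0.0, 0.0))
rb = math.dist(ball, (0.0, 4.0, 0.0))
rc = math.dist(ball, (0.0, 0.0, 4.0))
candidates = locate(4.0, 4.0, 4.0, ra, rb, rc)
print(candidates)
```

Three spheres generally meet in two points, mirror images of each other in the plane through A, B and C, so some extra knowledge (such as which side of that plane the ball is on) is needed to pick the right one.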