Computational methods for the detection of structural variation in the

advertisement

Master Thesis

9-2-2016

Computational methods

for the detection of

structural variation in the

human genome.

Erik Hoogendoorn

Student Number: 3620557

Master’s programme: Cancer Genomics and Developmental Biology

Utrecht Graduate School of Life Sciences

Utrecht University

Supervisor:

Dr. W.P. Kloosterman

Department of Medical Genetics

University Medical Center Utrecht

2

1 Abstract

Structural variations are genomic rearrangements that contribute significantly to evolution, natural

variation between humans, and are often involved in genetic disorders. Cellular stresses and errors in repair

mechanisms can lead to a large variety of structural variation events throughout the genome. Traditional

microscopy- and array-based methods are used for the detection of larger events or copy number variations.

Next generation sequencing has in theory enabled the detection of all types of structural variants in the human

genome at unprecedented accuracy. In practice, a significant challenge lies in the development of

computational methods that are able to identify these structural variants based on the generated data. In the

last several years, many tools have been developed based on four different categories of information that can

be obtained from sequencing experiments: read pairs, read depths, split reads and assembled sequences.

In this thesis, I first introduce the topic of structural variation by discussing its impact in various areas,

what mechanisms can lead to its formation, and the types of structural variation that can occur. Subsequently, I

describe the array-based and sequencing-based methods that can be used to detect structural variation. Finally,

I give an overview of the tools that are currently available to detect signatures of structural variants in NGS

data and their properties, and conclude by discussing the current capabilities of these tools, possible future

directions and expectations for the future.

Keywords: Structural variation; Copy Number Variation; Next-Generation Sequencing; Detection algorithms;

Read pair; Read depth; Split read; De novo assembly.

3

4

2 Contents

1

Abstract

3

2

Contents

5

1

Introduction

6

2

Structural variation

6

2.1

The importance of structural variation

6

2.2

Causes for structural variation

7

2.3

Types of structural variation

7

Detecting structural variation

8

3

3.1 Array-based methods

ArrayCGH

SNP arrays

Advantages and limitations

3.2 Sequencing-based methods

Read pair

Read depth

Split read

De novo assembly

Advantages and limitations

9

9

11

11

11

12

Computational methods

12

4

4.1

5

Read mapping

13

4.2 Read pair

Clustering-based methods

Distribution-based methods

14

14

15

4.3

Read depth

17

4.4

Split read

19

4.5 De novo assembly

Genome assembly

Identification of structural variation

20

20

21

4.6

22

Combined approaches

Discussion

The status quo

Possible improvements: integration of recent advances

Future perspectives

6

8

8

8

9

References

24

24

25

25

27

5

1 Introduction

Structural variation describes genetic variation that affects the genomic structure. Although human

genomic variation was first thought to be mostly due to SNPs (Single Nucleotide Polymorphisms), it has

become clear that human genomic and probably phenotypic differences are related more to structural variation

than SNPs1,2. Structural variation can range in size from several bp (base pairs) to entire chromosomes.

Structural variation contributes significantly to human diversity and disease occurrence, and is an important

consideration in any genetic study3,4. Structural variation studies used to be limited to the detection of larger

variants like aneuploidies and chromosomal rearrangements by using microscopic methods. The development

of array-based and, more recently, sequencing-based methods has enabled the detection smaller

submicroscopic structural variants (SVs) at greater resolution. Next generation sequencing-based (NGS)

methods are theoretically able to identify SVs of all types at previously unattainable speeds and resolution, and

several different methods have been developed to detect signals in the data that indicate structural variants,

each with their own advantages and disadvantages. However, these methods require extensive computational

analysis and the development of various types of algorithms to filter the data, compare it to reference or other

samples and detect the signals associated with structural variation. Here, I will introduce the effects structural

variation can have in humans and other species, the mechanisms that can result in the formation of SVs and the

different types of structural variation that can occur. Subsequently, I will give an overview of the methods that

can be used to detect structural variation, and provide an overview of the currently available computational

tools used for the detection of SVs in the human genome based on next-generation sequencing.

2 Structural variation

2.1 The importance of structural variation

Structural variation is now known to cover more nucleotide variation in the human population than SNPs,

and thousands of SVs are likely to be present in each genome1,2,5. Many SVs span, relocate or break encoding as

well as regulating elements in the genome. This may often have no observable effect, but can also induce

dosage effects, gene disruption, new fusion genes, new regulatory cascades, the formation of new SNPs and

differences in epigenetic regulation due to relocation5–7. Thus, although many SVs may be neutral, they still

introduce a large source of genetic and phenotypic variation not just between humans but in all species8,9.

Considering the effects of SVs on phenotypic variation, the occurrence of structural variation is also

expected to significantly affect natural selection and thus evolution5,8. Indeed, structural variation has been

suggested to be related to the evolution of new species as well as the evolution within various species9–11.

Examples exist in plants12 as well as primates13–15, also for the emergence of human specific-genes16. Several

papers have shown recent human evolution in genes related to diet, reproduction and disease-related genes

due to structural variation17–19.

Structural variation has been characterized extensively in relation to disease. Variants affecting gene

regulation or coding sequences may result in a wide variety of genomic disorders8,20,21. Two models for the

relationship between structural variation and disease have been proposed, based on rare and common

structural variation22. The first model describes rare and often de novo SVs in the population can cause various

disorders, collectively accounting for a large fraction of these disorders22. Examples are found for various birth

defects23–25, neurological disorders26–30 and predisposition to cancer31,32. The second model concerns SVs

common in the population, especially copy number variable gene families, thought to collectively contribute to

susceptibility of complex diseases, especially related to the immune system22. Examples for this model are

HIV33, malaria34 and various immune disorders 35,36. Although examples can be found for both models, these are

probably not comprehensive for all human disease in relation to structural variation. For example, a simple

division between rare and common variants may be too simplistic37. However, it is clear that the detection of

structural variation can have a large impact on the investigation of human disease, both in diagnosis and

treatment of diseases38,39.

In addition to their role in disease, SVs are also essential for normal functioning of human life. Class Switch

Recombination (CSR)40 and V(D)J recombination41 are processes that rely on structural variation that is

stimulated by our body itself. These processes are essential for the generation of diverse mature B cells in

response to antigen stimulation, and thus for the human immune system. The study of SVs may also tell us

more about genetic mechanisms that shape genome structure as well as genome evolution. Over the last years,

6

the need to take structural variation and its roles into account has become apparent4. However, essential for

each of these research areas remains the accurate and unbiased identification of structural variants.

2.2 Causes for structural variation

Although first considered to occur randomly42, structural variants form in specific situations, in response to

specific environmental and cellular triggers. Various stressors like replication, transcription and genotoxic or

oxidative stress, or combinations of these, can be the trigger for structural variation43. These stresses can result

in DNA breaks and stalled DNA replication forks sensitive to the formation of structural variants. Specific

sequences are more sensitive to structural variation due to their structure, associated proteins or epigenetic

modifications43. Furthermore, the proteins involved in generation of functional recombination in the immune

system may have off-target effects, leading to double-strand breaks. Subsequent errors in DNA repair or

recombination then cause the structural variant to be implemented locally or between two loci in physical

proximity.

For example, non-homologous end joining (NHEJ) is an error-prone repair mechanism for DNA doublestrand breaks. Individual double-strand breaks are efficiently repaired by classical NHEJ, however the presence

of two double-strand breaks can result in chromosomal translocations. Alternate end joining (A-NHEJ), is a

different pathway that is associated with genomic rearrangements. However, the precise mechanisms are

currently unknown44. Allelic homologous recombination repairs double-strand breaks using a template

sequence and is relatively error-free. However, defects in homologous recombination could result in non-allelic

homologous recombination (NAHR). In this case, non-allelic sequences, often LCRs, LINE-1 and Alu repeat

elements or pseudogenes are used as a template for repair, resulting in structural variations 8. Additionally,

repetitive and transposable elements like those involved in NAHR are considered to contribute to structural

variation through the effects of retrotransposition and microhomology, which can result in Complex

Chromosomal Rearrangements (CCRs). Several models exist for the explanation of these CCRs. The MMBIR

model (microhomology-mediated break-induced replication) posits that single DNA strands of collapsed

replication forks anneal to any single-stranded DNA in proximity. Following polymerization and template

switches result in CCRs45. A similar model, FoSTeS (Fork Stalling and Template Switching), suggests replication

fork template switching, but without breaks15,46. Finally, intra- and interchromosomal CCRs may result from

random non-homologous end joining of fragments after an event termed chromothripsis. In this model, one or

multiple chromosomes locally shatter, then fuse again randomly, possibly due to radiation or other events

resulting in widespread chromosomal breakage23,47. For more information on this topic, please see a

comprehensive review by Mani et al.43.

2.3 Types of structural variation

Structural variation can occur in many types, among which a distinction can be made between copy number

variant (CNV) and copy number balanced variants. Copy number balanced SVs include inversions and

translocations. Copy number variant SVs include deletions, insertions and duplications. Insertions may involve

a novel sequence or a mobile-element. Mobile element insertions can result from translocations or

duplications. Duplications can occur as tandem duplications, where the duplicated segment remains adjacent to

the source DNA, or interspersed, where the duplicated DNA is incorporated elsewhere in the genome. These

events may occur intrachromosomally, but also between different chromosomes, leading to interchromosomal

variants. The term is structural variant was traditionally used to refer to larger variants larger than 50 bp or 1

kb (kilobase)22. However, any variant other than a SNV (Single Nucleotide Variant) may be considered to alter

the structure of the chromosome. As some of the methods discussed here are able to identify events of sizes

from 1 to 50 bp at base pair resolution, the term structural variant is used for any non-SNV genetic variant.

Of course, one event may include combination of multiple types of SVs, resulting in more complex patterns

or CCRs, where for example an inverted fragment may contain a deletion or an insertion, or any other

combinations. Detecting CCRs is more problematic for most methods. Additionally, an insertion may

correspond to deletion elsewhere in the genome, resulting in what is essentially a translocation. However, not

all methods may detect both events and may thus infer CNVs erroneously. Accurate identification of a certain

SV may thus require comprehensive identification of all structural variation in the studied genome48. The

ability for detection of these types of variants differs with respect to the various methods used, as is discussed

below.

7

3 Detecting structural variation

As mentioned above, structural variants can differ greatly in terms of size. Larger structural variants are

considered microscopic variants, as these can be detected using traditional microscopy-based cytogenetic

techniques. That includes genome-wide techniques like karyotyping, chromosome painting and FISH-based

methods. Still commonly used, these methods can identify most types of structural variants beyond several Mb

(Megabases) and aneuploidies. Improvements based on these techniques are still developed, providing higher

resolution and sensitivity49.

For the detection of smaller, submicroscopic SVs with higher resolution and sensitivity, more recent

molecular methods are required. These methods can be classified as either array-based or sequencing-based.

Common to these methods is that SVs are identified by comparing the experimental genome to a reference or

other sample genome, inferring variants from the differences. I will briefly introduce these array- and

sequencing-based methods below.

3.1 Array-based methods

Microarrays were originally developed for RNA expression profiling, but now have a wide range of

applications, including the detection of structural variation. Microarray-based methods rely on the design of

microarray chips on glass slides, using immobilized cDNA oligonucleotides as targets for hybridization by

experimental DNA. Although sequencing-based methods for the detection of CNVs are becoming more costeffective and popular, clinical diagnostics still mainly use microarray screening50. Detection of CNVs with arraybased methods is possible using two types of microarrays: ArrayCGH (Comparative Genomic Hybridization)

and SNP arrays. Recent platforms, marketed by companies like Agilent, Illumina, Roche and Affymetrix, enable

the detection of millions of probes on one chip, and new arrays are still being developed that increase the

sensitivity and resolution even more.

ArrayCGH

ArrayCGH platforms can be used to detect relative CNVs by competitive hybridization of two fluorescently

labeled samples to the target DNA. Experimental DNA is fragmented and fluorescently labeled prior to

hybridization. By using different fluorescent dyes, for example Cy3 (green) and Cy5 (red) for each sample, the

measured fluorescence for each color can give an indication for the abundance of experimental DNA from each

sample. It is important to use known reference samples, as a gain in one sample can’t be distinguished from a

loss in the other without further information. For accurate identification of SVs, normalization is often needed

due to experimental biases for GC content in the DNA and dye imbalance.

The first ArrayCGH experiments used large inserts, for example BACs (Bacterial Artificial Chromosomes), as

targets, and were able to detect CNVs in the range of 100 kb and longer51. The current use of oligonucleotides

allows the detection of CNVs with a resolution only several kilobases52,53. An advantage of ArrayCGH is the

availability of custom arrays, allowing its use as a diagnostic platform 50,54. ArrayCGH platforms can reach high

resolutions, especially using custom solutions2, but can’t match NGS-based methods.

SNP arrays

SNP arrays were originally designed to detect single nucleotide polymorphisms, but have been adapted for

the detection of CNVs. Similarly to ArrayCGH, SNP arrays rely on hybridization to target NDA. However in SNP

arrays, only the test sample is hybridized, and no competitively hybridizing reference sample is used. The

intensity of the fluorescence upon binding is used as a measure for the matching sequences in the sample. For

the detection of CNVs, intensities measured across many spots on the slide are clustered. CNVs are detected by

comparing these sample values to (a set of) reference values from a database or from a different experiment.

Several algorithms have been developed for this analysis, and an overview of these can be found in a review by

Winchester et al.55.

Similar to ArrayCGH, SNP array resolution has increased significantly in the years since its first use, which

typed56. Currently, millions of SNPs can be interrogated on one chip. In addition to improvements in resolution,

the design of arrays has focused on incorporating more informative SNPs in regions with known CNVs,

increasing the amount of variants detected in one experiment57. However, this does have an important negative

side-effect, as it introduces a large bias towards known CNVs. SNP arrays generally tend to have lower

sensitivity in the detection of CNVs compared to ArrayCGH. However, SNP arrays provide advantages like

additional information for genotyping, parental origin of CNVs, are more accurate in the determination of copy

numbers and allow detection of LOH (Loss Of Heterozygosity)49.

8

Advantages and limitations

A major disadvantage of array-based versus sequencing-based methods is that only gains and losses

compared to a reference can be identified. Thus, balanced variants like translocations and large inversions

cannot be identified, meaning that other experiments are needed to identify the location and type of the SV

events in the test sample. Array-based methods are also unable to detect smaller variants and have a lower

resolution, and thus miss a wide range of SVs that are potentially of interest. However, array-based methods

are less costly and have a higher throughput than sequencing-based methods, so it is possible to genotype a

larger number of individuals in less time and for a lower cost. Analysis of the data also requires less

computational resources than sequencing-based methods. In addition to predesigned genome-wide solutions, it

is often possible to order custom designs, allowing studies to focus on regions of interest, or increase overall

resolution. Combinations of these two types of arrays have been used to detect CNVs. Either by integration of

results58, using SNP arrays for fine-mapping regions identified by ArrayCGH59, or using hybrid CGH+SNP

arrays49,60. These methods could provide more robust identification of structural variation as well as additional

information versus existing approaches. This seems prudent, as a recent assessment has shown relatively low

(<70%) reproducibility for repeated experiments as well as poor (<50%) concordance between platforms61.

3.2 Sequencing-based methods

Detection of multiple different types of structural variation based on sequencing methods was first

performed using paired-end mapping by Tuzun et al.62. This study was based on capillary Sanger sequencing

using fosmid-end sequences. Throughput and resolution based on this data are not optimal, but the longer read

lengths allow the reliable identification of large variants. The development of high-throughput next- generation

sequencing technologies has enabled sequencing of a full human genome within a week. Since 2005, several

companies including 454 Life Sciences, Illumina, and Life Technologies have marketed platforms with ever

increasing throughput and base-calling accuracy, longer read lengths as well as lower costs versus traditional

capillary methods. More recently, single Molecule Sequencing (SMS) has become a possibility with Helicos’

Helioscope platform and non-optical sequencing was introduced with Life Techologies’ Ion Torrent sequencer.

Among other applications, this development in sequencing technology has enabled the genome-wide

detection of structural variation at unprecedented resolution and speeds. Several methods have been employed

for the identification of SVs using NGS data. The most self-evident method would be de novo assembly of a

genome, with subsequent alignment to a reference to determine the structural differences. However, de novo

assembly of a human genome remains challenging due to the relatively short read lengths generated by NGS

platforms63. As a result, other methods were developed that use direct alignment of reads to one of the human

genome reference assemblies. These methods are read pair, read depth and split read approaches, and are

based on the identification of discordant patterns in sequencing data. I will describe the basic principles of each

of these approaches below.

Read pair

As mentioned earlier, the first sequencing-based identification of SVs used a read pair method, which was

applied to data from capillary sequencing62. The first NGS-based study on the genome-wide identification of SVs

applied a similar method, using the same algorithms as in the earlier study but without any optimizations for

the new type of data64. Most of the current sequencing technologies, excluding SMS platforms, are capable of

generating paired-end or mate-paired reads. In read pair sequencing, both ends of a linear fragment with an

insert sequence are sequenced, whereas in mate-pair sequencing a circularized fragment is used. Although the

method of generating the read pairs differs, the detection of SVs based on the generated data is essentially the

same. An important consideration in the detection of SVs is that the insert size for mate-pair libraries (1.5-40

kb) is often larger than for paired-end libraries (300-500bp)65.

Read pair methods detect SVs by mapping read pairs with a predetermined insert size back to the reference

genome. Assessing the mapping locations of the reads to the reference genome, a discordant span or

orientation of the read pair indicates the occurrence of a genomic rearrangement (Figure 1). If read pairs map

further apart than the insert size this suggests a deletion, whereas if read pairs map closer together or one read

can’t be mapped together this suggests a (novel) insertion. Furthermore, insertions of mobile elements or other

genomic regions map to the locations in which these are present on the reference genome. Inversion

breakpoints are detected by a changed orientation of one of the reads inside the inversion, as well as varying

spans for the pairs. Interspersed duplications or translocations can be detected by complex patterns where in

several pairs one of the reads maps to a different location or chromosome. Finally, tandem duplications can be

9

detected by read pairs that have a correct orientation, but are reversed in their order and have differences in

their span.

Figure 1 The four sequencing-based methods used to identify structural variation, and the signatures that can be detected for each

type of SV. The top line indicates reference DNA. Red arrows indicate breakpoints. MEI = Mobile Element Insertion. RP= Read Pair. For a

full legend see Alkan et al.22 (Copied from Nature Reviews Genetics, Alkan et al. 201122.)

As single read pairs are not reliable on their own due to possible mismapping or ambiguous mapping,

multiple read pairs belonging to the same variant are clustered to increase the reliability of detection, as well as

identify the breakpoint locations for the variant more accurately. Libraries with larger insert sizes (several

kilobases) are better at detecting larger variants, but are often not able to reliably detect smaller variants due

to the distribution of insert sizes66. In contrast, libraries with smaller insert sizes can’t reliably detect the larger

events, but have higher resolution and are able to detect smaller variants. A major disadvantage of the read pair

methods is that insertions larger than the insert size cannot be detected conventionally. Although with lower

power, algorithmic detection of these insertions is possible when considering a linked signature, as described

by Medvedev Et al.67. For example, a large insertion from a region far away in the genome (a translocation or

duplication), the read pair will be detected as spanning a huge range in the reference genome, as regions that

were first far from each other are now relatively close and used to generate the read pair. By finding this

signature for both break-ends (the newly formed sequences by colocation of the flanking sequences and the

insertion) and linking these, it is possible to determine the origin and possibly the size of the insertion. For

novel sequence insertions this is more difficult, as one of the reads from the read pair will not map to the

10

genome. In this case, additional steps like assembly or targeted sequencing of the insertion sequence would be

required.

Read depth

Analysis of read depth, also called depth of coverage (DOC), can identify structural variants by evaluating

the depth of reads to mapped to the reference genome. This approach was first used in combination with NGS

data to detect CNVs in healthy and tumor samples from the same individuals68. For this method, a uniform

distribution of reads is assumed, often according to a Poisson distribution. Sufficient deviation from this

distribution is expected to be due to copy number differences in the sequenced genome. Alternatively, the

expected copy number of a region can be derived from a comparison of read depth to reads of a control

genome. For both variants, a loss region will have less reads mapped to it than expected, whereas a gain region

will have more reads mapped (Figure 1).

The major disadvantage of read depth versus the other sequencing-based methods is that only CNVs can be

detected. The location of events can’t be retrieved, and copy number balanced events like inversions or

translocations can’t be detected. However, it’s the only sequencing-based method that can accurately predict

copy numbers69. Larger events are more reliably detected than smaller ones, as the statistical support increases

with the size. The reliability of the SVs detected is directly related to the sequencing coverage. As a result, the

sequencing biases in the different platforms affect SV detection as well. For example GC-rich or –poor regions

as well as repeat regions are sequenced less reliably, introducing biases70. These biases, as well as mismapped

reads influence the data more than in other sequencing-based methods. Algorithms based on case versus

control data suffer less from biases due to sequencing, as these are assumed to be cancelled out. However,

these are more costly as it requires additional genomes to be sequenced.

Split read

Split read mapping detects structural variation by using unmappable or only partially mappable reads. The

breakpoint of a SV is found based on a read which can only be mapped to the genome in two parts. Detection of

SVs is similar to read pair-based methods, but instead of two paired reads, two parts of one read are used

(Figure 1). A deletion will show a read mapping with alignment gaps in the reference genome, whereas

insertions will show alignment gaps in the test genome. Like with read pairs, part of a read not mapping may

indicate a novel sequence insertion, and partial mapping to a known mobile element in the reference genome

indicates a MEI (Mobile Element Insertion). Reads spanning tandem duplications will have the split read

mapping in reverse order. Interspersed duplications or interchromosomal translocations will show part of a

read mapping to the duplicated region or another chromosome. Like read pair methods, split read mapping

may use clustering of reads to increase the reliability of the findings.

Split read mapping was originally used in combination with sanger sequencing, which produces longer

reads than current NGS platforms71. The shorter reads currently generated by NGS platforms significantly

reduce the power of SV detection using this method, as the length of a split read from NGS is rarely uniquely

mappable to the genome. This results in strongly ambiguous, and often impossible mapping of reads, especially

to regions with repeats or duplications. However, it is currently still possible to map breakpoints for smaller

deletions (max. ~10 kb)as well as very small insertions (1-20 bp) at base pair resolution by using an algorithm

called Pindel72. Using this method, also called anchored split mapping67, read pairs are used to select reads

where one read maps uniquely to the genome and the other can’t be mapped. Knowing the location and

orientation of the first read, the second read can be split-mapped using local alignment based on the known

insert size, reducing the search space for possible mappings as well as ambiguous mapping significantly.

However, this does require that one of the reads is mapped uniquely, which is still not always possible.

The advantage of this method is that it can map breakpoints of SVs at base pair resolution. However, for

larger events or those involving distant genomic regions this is still problematic. Using anchored split mapping

to reduce the search space for split reads is an important step towards making split mapping useful in

combination with NGS platforms, but may be hampered by inserts or deletions in between the reads, affecting

mapping distance. Longer read lengths will make split read mapping even more powerful, as unique mapping

of at least one end may not be required.

De novo assembly

Ideally, full alignment of de novo sequenced genomes against one or multiple reference genome(s) would be

used to identify all structural variation in the genome. Depending on the algorithms and reference genome(s)

used, this would enable unbiased detection of all types and lengths of SVs. Although studies have described

11

assembly of human genomes based on short-read data, these and other approaches still require assembly to the

reference genome. Two human genome assemblies have recently been used to identify structural variation 73.

However, this study was still limited in the identification of SVs in repetitive regions and was only able to

identify homozygous SVs. Local de novo assembly is possible in more reliable genomic regions74. This allows

alignment to the reference genome and subsequent identification of structural variants using these generated

contigs. Identification of SVs is then possible using the same principles as in split read mapping, with

differences only in identification of MEIs and tandem duplications (Figure 1). As these fragments are typically

much larger than read fragments, this method is much more reliable in the identification of breakpoints and

larger SVs.

Although de novo assembly of genomes and subsequent pairwise comparison is expected to become the

standard method of SV detection, this is currently still problematic due to the limited read lengths and

assembly collapse over regions with repeats and duplications63. As these regions are especially susceptible to

the formation of structural variation, this further decreases the reliability of SV detection due to false positives

as well as false negatives. Additionally, differences in coverage between genomic regions due to biases affect

assembly, inducing gaps and complicating statistics in assembly. Finally, de novo assembly requires extensive

computational resources. In algorithms that reduce the computational requirements, tradeoffs are often

necessary in terms of sensitivity to overlaps. Although improvements in these areas have been made with

newer tools, the problems are still unsolved74. Further development of algorithms and sequencing platforms

will be required before this method will be able to detect all structural variation reliably.

Advantages and limitations

A major advantage of sequencing-based methods over array-based methods is the possibility to detect all

types of variants in a single experiment; both copy-balanced and copy-variant. Additionally, SVs of a broader

range of lengths can be detected with significantly less bias, as no the genomic regions measured are not

predetermined as is necessary for microarray probes. The resolution of sequencing enables breakpoint

detection at base pair level with high enough coverage, allowing detailed investigation of CCRs as well. NGSbased methods are expected to replace microarrays for SV discovery and genotyping. Although costs of whole

genome sequencing have declined significantly, these are currently still a large factor. This is especially true for

genome-wide detection of structural variation, as the reliability of the findings depends in a large part on the

sequencing coverage attained in the experiment. However, the decline in costs is expected to continue quickly

over the coming years, in concert with the further development of single-molecule and third-generation

sequencing platforms65. A problem common to all methods is the limited read length of current generation

sequencing platforms, causing significant ambiguity in the mapping of reads, especially in repetitive regions.

Third-generation sequencing technologies with increased read length and insert sizes are expected to alleviate

these problems at least partially. but the development of new algorithms and the integration of information will

also be an important factor.

The different sequencing-based methods each have their own strengths and weaknesses in the detection of

SVs. Read pair-based methods are efficient at detecting most types of structural variation and extensively used,

however the insert size affects the length of the detected SVs significantly. Approaches based on read depth are

able to identify sequence copy numbers, but only able to detect CNVs and at poor breakpoint resolution.

Although split read mapping can identify breakpoints at base pair resolution, its sensitivity is currently a lot

lower than other methods due to unreliability outside of unique genomic regions. Finally, de novo assembly of

genomes promises to be the method to solve most of the problems, but is currently not yet possible and

dependent on the further development of sequencing techniques and algorithms. Several tools have been

developed recently to integrate the information from the various methodologies. By combining algorithms,

several biases or deficiencies of some of these methods may be alleviated. Furthermore, several strategies seem

more suitable for the detection of certain classes or properties of structural variants. For example, read depth

information is more suitable for copy number detection than other methods, and split read information may

indicate the breakpoints most reliably. Any combination of methodologies will need to take into account these

factors.

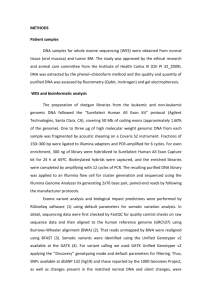

4 Computational methods

Various tools have been developed for NGS-based detection of structural variation. Here, I will give an

overview of the currently available tools for read pair-, read depth-, split read- and assembly-based methods of

genome-wide SV detection in the human genome with NGS data. Tools combining the information from several

12

detection methods to improve the results are discussed separately. As read mapping is an important first step

for the read pair-, read depth- and split read-based methods, and assembly algorithms are similarly important

in the assembly-based identification of SVs, approaches and tools used for these steps are discussed as well.

An important distinction between the tools is the strategy that is used for alignment of the reads and how

the SV identification algorithms process those alignments. The alignment processing strategies can be classified

as either ‘hard clustering’ or ‘soft clustering’75. Most approaches use hard clustering, considering only the best

mapping of each read to the genome for the identification of SVs. This works well for unique regions of the

genome, but has lower sensitivity in tandem duplication and repeat regions. Some newer approaches use soft

clustering, where reads are mapped to all possible locations, and all these mappings are considered in the

detection of putative SVs. Although this increases sensitivity, soft clustering may lead to more false positives

and often requires careful filtering of input reads. In sample-reference analyses, these false positives are offset

by an increase in true positives as more SVs in total are present. However, in related samples the false positives

may constitute higher percentage of total due to the low amount of total SVs between the samples. Thus, it is

important that the clustering strategy is appropriate for the study, and the parameters in tools using the soft

clustering strategy are well understood and set carefully. Table 1 summarizes the tools used for SV

identification as discussed here, showing which clustering approach is used, what types of SVs can be detected,

as well as their defining characteristics or advantages over other approaches.

4.1 Read mapping

Except for de novo assembly, all computational methods described here require mapping of the to the

reference genome as a first step. Many tools have been developed for this purpose, based on several different

approaches. These tools mainly differ in how they find the possible mapping locations on the genome, whereas

a final alignment step on these possible mapping locations to determine the scoring is generally performed by

using the traditional Smith-Waterman76 alignment algorithm. The first development was the classical “seed and

extend” approach77. Here, a seed DNA sequence is found based on a “hash tables” containing all DNA words of a

certain length (k-mers) present in the first DNA sequence (this can be either the reads or the reference

genome). The hash table is then used to locate the k-mer sequence in the other DNA sequence. Subsequently,

this seed is extended on both sides to complete the alignment. This approach is used in several tools, like

BLAT78, SOAP79, SeqMap80, mrFAST69, mrsFAST81 and PASS82. This implementation is simple and quick for

shorter word lengths, but becomes exponentially more memory-intensive with longer word lengths.

An improvement on this approach was introduced with PatternHunter83, which uses “spaced seeds”. This

approach is similar to the “seed and extend” approach, but requires only some of the seed sequence’s positions

to match. Thus, if a 5-mer is used, it may be that only the 1st, 3rd and 5th positions need to match the other

sequence. This approach is more sensitive and allows for mutations in the seed sequence, but may introduce

false matches that slow the mapping process down, and does not allow indels in the sequence. Many tools were

developed based on this approach, including the Corona Lite mapping tool84, ZOOM85, BFAST86 and MAQ87.

Newer tools like SHRiMP88 and RazerS89 improve on this approach by requiring multiple seed hits and allowing

indels.

Other “trie-based” approaches are aimed at reducing the memory requirements for alignment and use

“Burrows-Wheeler Transform” (BWT), an technique that was first used for data compression90. The term trie

comes from retrieval, as it can be used to retrieve entire sequences based on their position in a list. Different

data structures can be used with this approach, based on prefix trees, suffix trees, FM-indices or suffix arrays,

but the search method is essentially the same91. In trie-based approaches, the various k-mers are compressed

into one string based on their position relative to the start of the string. These can be used to directly search the

reference genome, even allowing simultaneous search of similar strings as these are compressed together. This

further decreases the memory requirements and search times, but does require more computing time for the

construction of compressed strings. Several very fast tools like SSAHA292, BWA-SW93, SOAP294, YOABS95 and

BowTie96 have been created based on this approach. Even faster alignment tools like SOAP397, BarraCUDA98

and CUSHAW99 combine trie-based approaches with GPGPU computing, taking advantage of parallel GPU cores

to accelerate the process.

Most of the newer mapping tools are specifically designed to take into account the properties of NGS

platforms; shorter reads, more data and sequencing errors. However, some tools like BLAT, SSAHA2, YOABS

and BWA-SW are useful for mapping longer reads. Additionally, some mapping tools are developed specifically

for certain platforms. For example, SHRiMP, BFAST and drFast map color-space reads associated with SOLiD

platforms, and SOAP and BowTie tools were designed for use with data from Illumina platforms. For more

extensive information on this topic, a good review was written by Li et al.91. The selection of the mapping tool is

13

an important consideration, also when selecting one specifically for certain SV detection methods. Split read

mapping requires specific strategies, and BWA-SW and MOSAIK100 are examples of only few mapping tools that

provide split mapping information. Finally, instead of alignment as a first step, some assembly-based

algorithms require (whole genome) alignment as one of the later steps in SV identification, as will be discussed

in the section on de novo assembly below.

4.2 Read pair

Many tools have been developed for SV identification based on read pair data. These use algorithms that

can be grouped into two categories: those based on clustering, and those based on distribution. Algorithms

from both categories can identify discordant read pairs by differences in span and orientation, and may group

read pairs for increased reliability. The difference lies in that clustering-based algorithms identify discordant

read pair mapping distance by a fixed distance like a certain amount of standard deviations or based on

simulations, whereas distribution-based algorithms test the mapping span distribution of a certain cluster of

read pairs and calculate the chance of these being discordant by comparison to the genome wide distribution.

Clustering-based methods

The first read pair-based approaches using capillary sequencing by Tuzun et al.62, and using NGS by Korbel

et al.64, both employed a clustering-based approach where a cluster is formed based on a minimum of two read

pairs. These approaches used hard clustering of the reads. The standard clustering strategy used here detects

SV signatures based on read pairs with discordant span and orientation, as described above in the introduction

of NGS-based methods. The span is considered discordant if it deviates four or more standard deviations (SDs)

from the mean. The limitations of these studies lie in the reduced sensitivity due to the use of hard clustering,

as well as the fixed cutoff for the read pair distance and the number of required read pairs for a cluster, which

can affect the specificity strongly based on the coverage attained66.

The VariationHunter101,102 tool improves on the previous approaches by using soft clustering, thus

increasing sensitivity. The same read pair distance cutoff (four SDs) as in earlier approaches is used. After

mapping of all reads, a read is removed from consideration if it has at least one concordant mapping. If a read

has only discordant mappings, it is classified as discordant. Each possible mapping is then assigned to each

possible cluster of reads indicating a SV. Then, two algorithms may be used for the identification of SVs based

on the clusters: VariationHunter-SC (Set Cover) or VariationHunter-Pr (Probabilistic). The first algorithm

identifies SVs based on maximum parsimony, selecting clusters so the amount of implied SVs introduced is

minimal. The second algorithm calculates the probability of a cluster representing a true SV based on the read

mappings, with a clusters above a certain probability (90% was used in the paper) identified as SV clusters.

Evaluation by the authors showed significant improvement in detecting SVs over previous methods, especially

in the repeat regions. However, sensitivity was still lacking due to GC-content affecting the distribution of

reads. Additionally, the fixed read pair distance cutoff used means that smaller differences in span with

possibly good support are still ignored.

PEMer103 is a tool combining various functions in an analysis pipeline, with the purpose of SV identification.

Reads are first pre-processed based on the sequencing platforms used, and optimally aligned to the reference

genome (using hard clustering). Subsequently, discordant read pairs are identified based on the clustering

approach. It’s possible to merge clusters obtained from different experiments and with different cutoffs in a

‘cluster-merging’ step. This is a significant improvement over other tools, as it allows the use of multiple cutoffs

for cluster formation and a custom cutoff for the calling of discordant read pairs. Furthermore, PEMer is

modular, and offers extensive customization, allowing improvements to certain modules without having to

design an entirely new pipeline. Another advantage is that PEMer can detect linked insertions as described by

Medvedev et al.67, allowing the detection of insertions longer than the library insert size. Although the

customizability is a large advantage, the parameters need to be carefully set to ensure good results.

Implementation of a soft-clustering mapping algorithm may further increase the sensitivity of this tool.

Another tool using a read pair clustering-based approach is HYDRA104. It uses soft-clustering, taking into

account multiple possible mappings to specifically improve the identification of SVs arising from multi-copy

sequences. Multiple mappings of the same read are considered to support the same SV if they span the same

interval. Based on the support for each mapping, a variant call is generated for those with the highest support.

Subsequently, SV types are identified as in a standard clustering-based approach which, in addition to the

standard signatures, is able to detect several other signatures for tandem duplications and inversions that

increase the sensitivity for these types of SVs. Although developed for the identification of structural variant

breakpoints in the mouse genome this approach should also applicable to the human genome. This approach

14

may be very useful if applied to the specific identification of SVs in repeat and duplication regions. However, a

significant risk in using this approach is that many false positives may be introduced if the mappings are not

screened properly before the HYDRA tool is used, as mapping quality is not taken into account.

SVM2105 is a recently introduced tool that uses a read pair-based approach, including non-standard

signatures typically found flanking a SV event to increase the reliability of SV detection. SV flanking regions

have defining properties for insertions larger and smaller than the insert size, as well as deletions. In addition

to the default read pair span changes, OEA read pairs (One-End Anchored, read pairs of which only one read

maps) are used. For deletions, there will be a sharp peak of OEA pairs on each strand about as long as the read

length, as these cannot be mapped in their entirety. For insertions, this peak will become broader with the size

of the insertion until the insert size is reached. Thus, the boundaries of an insertion larger than the insert size

can be detected even though no spanning read pairs are available. Statistics on the characteristics of read pairs

found around insertion and deletion regions are used in a machine-learning algorithm that detects SVs. A

Support Vector Machine (SVM) is trained to recognize each of these statistics so SVs can by classified into their

respective classes. Finally, a post-processing step combines clusters of these sites and identifies types and

lengths of insertions and deletions by standard comparison of the span of read pairs to the global mean insert

size. Although the boundaries of insertions larger than the insert size of the library are recognized, the size of

these events cannot be determined. A comparison by the authors showed an increased specificity in detecting

smaller (1-30bp) insertions and deletions versus BreakDancer. However, the detection of SVs other than

insertions and deletions was not implemented. Adapting this method to also consider read pairs that map at

great distances may also increase the sensitivity for detecting translocations or MEIs.

Distribution-based methods

Distribution-based detection of discordant read pairs was introduced with the MoDIL tool106. Using

discordant as well as concordant read pairs, this tool compares the distribution of mapping distances for read

pairs in a specific genomic locus to the genome-wide distribution to identify SVs. A shift in the distribution

towards shorter spans indicates an insertion, whereas a shift towards longer spans indicates a deletion. This

enables the identification of insertions and deletions in the range of 10-50 bp using paired-end data. An

advantage of this tool is that heterozygous variants may be identified by observing a shift of half of the read

pairs, which is not possible in clustering-based methods. As this tool only detects a very specific length of

insertions and deletions, it is far from comprehensive. However, it is useful for detecting smaller insertions and

deletions, possibly as part of a larger pipeline.

MoGUL107 was developed based on MoDIL, but uses sequencing data from multiple genomes to enable the

detection of common SVs from low-coverage genomes. After a soft clustering step, read pairs from multiple

individuals are clustered. Based on these clusters, SV calls are generated based on the span distribution in a

manner similar to MoDIL. Based on this data, indels of 20-100 bp can be detected if the minor allele frequency

(MAF) is at least 0.06. Although Rare variants cannot be detected using this method, several variants that were

not detected by MoDIL could be detected with the increased power for common variants in MoGUL. Although

this tool is not useful for studying a single genome, it is effective in situations where a group of individuals is

studied, allowing sequencing at low coverage and thus lower costs to identify common variants. This may be

useful in situations where for example a familial disease or population differences are studied.

BreakDancer108 combines clustering-based and distribution-based read pair-based SV detection by using

two different algorithms. BreakDancerMax is used to detect all types of structural variation using the standard

clustering strategy. BreakDancerMini is distribution-based and used to detect smaller insertions and deletions

that are not found by BreakDancerMax, typically in the range of 10-100 bp. In addition to the insertions,

deletions and inversions detected by previous methods, BreakDancerMax is able to identify inter- and

intrachromosomal translocations. A comparison of BreakDancer to VariationHunter and MoDIL by the authors

showed increased sensitivity and specificity due to the combination of the two methods, as well as the

algorithmic improvements enabling the detection of other SV types. However, the detection of variant zygosity

as with MoDIL is not possible using BreakDancerMini. Another possible limitation of the BreakDancer tool lies

in the detection of SVs in repeat regions, as it relies on hard clustering.

15

Table 1: Overview of computational tools used for the detection of SVs based on NGS data.

RP: Read Pair, RD: Read Depth, SR: Split Read, BP: Breakpoint, CN: Copy Number, TD: Tandem Duplications, MEI: Mobile Element Insertion, VH-SC: VariationHunter-Set Cover, VH-PR: VariationHunter-Probability,

BDMax: BreakDancerMax, BDMini: BreakDancerMini, EWT: Event-Wise Testing, CBS: Circular Binary Segmentation, MSB: Mean-shift Based HMM: Hidden Markov Model, SV: structural variant, OEA: One End

Anchored, beRD: breakend Read Depth

*Considers ambiguously mapping reads, but maps these randomly and subsequently uses only that mapping.

4.3 Read depth

Read depth methods can be grouped into two categories: those based on differences in read depth across a

single genome and those based on case versus control data. Using a single sample, reads are mapped to the

reference genome and CNVs are identified based on the average read depth or the read depth in other regions.

Using case versus control data, differences in copy number ratios after mapping to a reference genome are used

to identify copy number differences between the two genomes. Among both categories, the algorithms use

genomic ‘windows’ in which the read depths are measured that determine the resolution at which copy

number ratios are determined. Windows with similar read depths or copy number ratios are then merged to

find CNV regions. Most read depth algorithms discussed use hard clustering alignment methods, evaluating

only the best mapping of each read.

The first algorithm used to detect copy number variants from NGS read depth data was an adapted circular

binary segmentation (CBS) algorithm68 originally developed use with arrayCGH data109. This was a applied to a

case versus control (cancer) dataset to identify somatically acquired rearrangements. The copy number ratio of

the two samples was determined in genomic windows. The size of the genomic windows used was nonuniform, requiring 425 reads per window. This allows the resolution to become higher with higher sequence

coverage. After mapping the reads to the reference genome, copy number change points were found by using

the CBS algorithm for the segmentation of windows with differing copy numbers. The CBS algorithm segments

the genome by testing for change points between different parts testing whether an observation is significantly

different from the mean of a segment. This is done recursively, and stopped when no more changes can be

found.

The readDepth110 tool uses a CBS-based approach similar to those used in the first read depth-based

studies. A major difference is that readDepth does not require the sequencing of a control sample, but calls

CNVs based on a single sample. readDepth employs the CBS-based read depth strategy where the genome is

divided into windows, and the genome is segmented by the CBS algorithm until no more differences in copy

number can be detected to identify CNV regions. However, several improvements over earlier methods are

introduced. Genomic windows are calculated based on a desired FDR (False Discovery Rate) that can be input

by the user based on the number of reads. Heuristic thresholds for the detection of copy number gain and loss

events are calculated based on the desired FDR and number of reads as well. Furthermore, the readDepth tool

is able to process bisulfite-treated reads in addition to regular sequencing reads, and can thus also study

epigenetic alterations. Several corrections for biases are introduced as well: The mapability of reads is

corrected by multiplying the amount of reads in a window by the inverse of the percent mapability detected in

a mapability simulation, and regions with extremely low mapability are filtered out. Read counts in each

window are also normalized by using a LOESS method to fit a regression line to the data.

RDXplorer111 is a tool that detects CNVs based on the EWT (Event-Wise Testing) algorithm. This algorithm

uses 100 bp windows to identify CNV regions based on the differences in read depth in a single sample. As a

first step, all read counts mapped to each window are corrected for the GC content. This is done by multiplying

the read count for each window with the average deviation from the read count for all windows with the same

GC percentage. This manner of GC content correction has been adopted by many other read depth-based tools.

The amount of reads in each window is then converted into a Z-score in a two-tailed normal distribution. Based

on the desired FPR (False-Positive Rate) the upper- and lower-tail probabilities identify gains and losses

respectively. Afterwards, adjacent windows with a copy number change in the same direction are merged to

identify the range of the CNV. The correction for GC content is a positive addition as this is a significant bias in

read depth methods. The authors state that the read counts of 100 bp windows approximate the normal

distribution well at 30x coverage, but more flexible settings are preferred as these windows may be too small

or too large in experiments with better or worse overall coverage.

JointSLM112 is an algorithm that is also based on EWT, but was developed to detect common CNVs present

in multiple individuals using multivariate testing. Due to the increased statistical power by including multiple

genomes, JointSLM is able to determine smaller CNVs than the EWT algorithm alone. Although it was designed

for multivariate testing, this tool may also be used to study a single genome in a manner similar to EWT. Like

other population- or group-based algorithms, this may be useful in the detection of CNVs between populations.

CNVnator113 uses a mean shift-based (MSB) approach to identify CNVs in single genomes. This approach is

also derived from an algorithm designed for the identification of copy number shifts in ArrayCGH data114. The

optimal window size is determined as the one at which the ratio of average read depth to its standard deviation

is roughly 4:5. In the MSB approach, copy number variant regions are identified by merging each window with

flanking windows with a similar read depth. If a window with a read depth significantly different from that of

17

the merged windows is encountered, a break is detected. Subsequently, CNVs are called based on the

probability in a t-test that that the read depth of that segment is significantly different from the global read

depth. In addition to mapping of unique reads, CNVnator maps ambiguously mapping reads randomly to

clustered read placements. Thus, it is not limited to unique regions by using best mappings only, but does not

consider all possible mappings by either. Read counts are corrected for GC content in a method similar to the

one used in RDXplorer.

CNVeM115 uses read depth in single samples to determine CNVs by assigning ambiguously mapping reads to

genomic windows fractionally. It is the only read depth-based tool that explicitly uses soft clustering. After

mapping, the genome is divided into windows of 300 bp and an initial estimation of copy numbers is made

based on an EM (Expectation Maximization) algorithm. A second step then evaluates all possible mappings of

reads to calculate the posterior probability of each mapping, then assigns reads fractionally to windows based

on this probability. This algorithm differs between read assignments with differences in sequence as small as

one nucleotide, and predicts the copy numbers of each position. Instead of classifying CNVs as gains or losses,

the copy number of each base is then determined based on these assigned reads, and the CNVs are determined

from this copy number. In a comparison by the authors, this approach was found to have higher accuracy in

detecting CNVs than CNVnator. It is also able to detect whether paralog regions are copied or deleted.

BICseq116 is a tool that uses the MSB approach for the identification of CNVs, but is designed for use with

case vs. control data instead of single samples. The definition of windows, merging of windows, and calling of

CNVs is done similarly to the process in CNVnator. However, BICseq the Bayesian Information Criterion (BIC)

as the merging and stopping criterion. By using the BIC, no bias is introduced by assuming a Poisson

distribution of reads on the chromosome, increasing the reliability of the results. Furthermore, the case vs.

control approach is used to correct for the GC content bias.

CNV-seq117 is a tool for CNV detection based on the case versus control approach. This tool contains a

module for calculation of the best window size based on the desired significance level, a copy ratio threshold

and the attained coverage level. After mapping of the reads to the genome, genomic regions with potential

CNVs are identified by a sliding of non-overlapping windows across the genome, measuring the copy number

ratio in each window. The probability of a random occurrence of these ratios is calculated by a t-statistic, based

on the hypothesis that no copy number variation is present. The hypothesis is rejected if the probability of a

CNV exceeds the user-defined threshold, and a difference in copy number between the two genomes is inferred.

Segseq118 uses a strategy that focuses on the CBS-based identification of CNV breakpoints for copy number

ratios in case versus control data. Similar to CNV-seq, sliding windows are used to compare copy number

ratios. However, Segseq has a variable window size based on a user-specified amount of required reads. Segseq

identifies breakpoints by comparing the copy number ratio in each window to those in the adjacent windows.

Significant change in the ratio versus either window identifies a breakpoint and copy number change.

Subsequently, all windows with the same copy number ratio are merged to identify copy number variant and

copy number balanced regions.

rSW-seq119 is a tool that, similar to Segseq, uses case versus control read depths to identify changes in copy

number ratio. However, rSW-seq directly identifies CNV regions by registering cumulative changes in the ratio

as breakpoints of CNVs. Reads for each sample are sorted according to their mapping on the genome, and the

read depths for each sample are subtracted from each other. Local positive or negative sums indicate copy

number gains or losses. Regions with equal read depths are ignored, and regions where read depth differences

are detected are defined as CNVs. This gives a very intuitive overview of where CNVs are found, and can also

identify CNV regions within other CNVs. rSW-seq’s resolution is dependent on the sequencing depth, but seems

limited as CNVs smaller than 10 kb were not reported. It is the only read depth-based tool discussed here that

does not require the specification of genomic windows.

CNAseg120 is another tool that uses genomic windows to identify differences copy number between case

and control data. In addition to LOWESS regression normalization for GC content, CNAseg uses Discrete

Wavelet Transform (DWT) to de-noise regions using, smoothing out regions with low mapability. This is

necessary as a novel HMM-based (Hidden Markov Model) segmentation step is introduced to segment the

windows based on the read depth. An additional algorithm then uses Pearson’s x2 test to merge segments with

a similar copy number ratio, and the copy number state is estimated by comparing the log ratio of read depths.

This identifies segments of contiguous windows with similar read depth, which are then defined as CNVs. This

tool was shown by the authors to increase the specificity and lower the amount of false positives versus CNVseq without affecting sensitivity.

Unless specified otherwise, the single sample read depth-based tools discussed here assume a uniform

Poisson distribution of reads over the whole genome, thus considering any aberration in read depth an effect of

18

copy number. As read depths do in fact vary over the genome due to various biases 70, more accurate models

like the BIC better approximate the distribution of reads over the genome. Although all tools described here are

able to detect differences in copy number within or between genomes, the actual copy number of these regions

is not always automatically determined. In most studies, the copy number may be estimated by normalizing the

median of the read depth in a copy number variant region normalized to that of copy number 2 and rounding to

the nearest integer68,111,112. This has been shown to work well for most platforms by comparing to regions with

known copy numbers, however the copy numbers did not correlate well for the SOLiD platform 121. In a recent

review of read depth approaches121, it was found that the EWT-based tools provide the best results in terms of

both sensitivity and specificity. CBS- and MSB-based tools are better at detecting CNVs with a large number of

windows (50-100), but worse at detecting those with a smaller number of windows (5-10). CNASeg performs

better on high coverage data, but worse on low coverage data. CNV-seq seems to perform poorer overall. In

combination with high coverage data, the EWT-based tools detect CNVs as small as 500 bp, while the CBS- and

MSB-based tools identify CNVs with a size of 2-5 kb. Thus, there seems to be a great deal of variation between

the performance of different tools, also based on the type of data that is used.

4.4 Split read

Few tools have yet been developed for the identification of SVs using split read methods using NGS data.

Most of these rely on specific alignment strategies to identify breakpoints. Pindel72 uses a pattern growth

algorithm to identify the breakpoints of deletions and insertions. As described above, this tool uses anchored

split mapping. Read pairs are selected where one read maps uniquely and the other can’t be mapped under a

certain threshold are used. With the uniquely mapping read as the anchor point, the direction of the read as

well as the user-specified maximum deletion size are used to define a region where Pindel will attempt to map

the other read. This is done using the pattern growth algorithm which searches for minimum (to find the 5’

end) and maximum (to find the 3’ end) unique substrings to map both sides of the read. The read is then

broken into either two (deletion) or three (short intra-read insertion fragment in the middle) fragments. At

least two supporting reads are required for each event. Pindel is able to identify the breakpoints at base pair

accuracy, even for deletions as large as 10 kb. Although the sensitivity of this approach is still problematic in

repeat regions, allowing mismatches in mapping of the anchor read may increase the sensitivity in the future.

By reducing the search space, the chance of mapping partial reads to the human genome is significantly

increased and split read mapping is made possible for NGS platforms. However, the search space may be

affected by insertions or deletions in between the reads. By combining this approach with information of the

mapping distance of surrounding read pairs, the accuracy may be increased.

The AGE122 (Alignment with Gap Excision) tool adopts a strictly alignment-based approach to split read

mapping. Based on two given sequences in the approximate location of SV breakpoints, it simultaneously aligns

the 5’ and 3’ ends of both sequences similar to Smith-Waterman local alignment. The final alignment is then

constructed by tracing back the maximum position in the matrix of each alignment and then aligning the 5’ and

3’ ends. The SV region is then the unaligned region in between. This approach is able to identify SV breakpoints

with base pair accuracy, and also the exact SV length and sequence if the whole sequence is supplied. However,

it does require external identification of the SV region as well as two sequences as input. These sequences need

to be unique enough for proper alignment, which means that either the putative SV region needs to be small

enough or the provided sequences long enough. The SV type needs to be determined by additional processing

of the results. which is often difficult to obtain with current NGS platforms. Considering the input and

additional processing needed, the alignment algorithm is only useful for SV identification as part of a larger

pipeline.

ClipCrop123 detects SVs by using soft-clipping information. Soft-clipped sequences are defined as partially

unmatched fragments in a mapped read. Unmapped parts of partially mapped sequences are used, with a

minimum length of 10 bp. Subsequently, these clipped reads are mapped to the reference genome maximally

1000 bp on either side of the mapped part. Sequences mapping further ahead indicate deletions, inversely

mapping sequences indicate inversions, sequences mapping before the mapped read indicate tandem

duplications, and a cluster of unmapped reads from both sides indicates insertions. Similarly to read pair

methods, additional tandem duplications over those present in the reference genome can’t be detected.

Remapping of unmapped reads is used to differentiate between novel insertions or mobile element

insertions/translocations, with novel insertions not expected to map to the reference genome. Clipped reads

are clustered if they support the same event, and a reliability score based on this support is used to determine

the most likely event. ClipCrop is able to detect a larger variety of signatures, and is not limited by the direction

of the search space like Pindel. Furthermore, ClipCrop was shown to more efficiently detect short duplications

19

(<170 bp) than CNVnator, BreakDancer and Pindel based on simulated data. However, the detection of larger

events was worse than with other methods.

4.5 De novo assembly

Assembly-based identification of structural variation requires two steps: the assembly of the sequence, and

the alignment of this sequence against a reference genome for detection of the variants. Assembly can be

performed either completely de novo, or by using varying degrees of information from a reference assembly.

Assembly can currently be used to identify SV in two ways: local sequence assembly allows the reconstruction

of loci with possible variants, and whole genome assembly would provide the most comprehensive

identification of structural variation in a genome by aligning (large parts of) whole genomes. Alignment to the

reference genome may then identify all types of SVs as well as CCRs using similar methods as split read

mapping.

Genome assembly

The first step, genome assembly, is not a trivial task. Several recent reviews have been published on this

topic, explaining it in more detail63,74,124. Repeat sequences, read errors and heterozygosity present the greatest

challenges here. The short read length of NGS platforms complicates these challenges even more. Previous

assemblers used for the assembly of Sanger sequencing reads were insufficient for use with NGS data, so

several new assemblers have been developed to deal with these problems. NGS assemblers can be divided into

four categories: greedy algorithms, Overlap/Layout/Consensus (OLC) methods, de Bruijn Graph (DBG)

methods and String graphs74,124.

Most early assemblers used greedy algorithms. These operate by simply extending the seed sequence with

the next highest-scoring overlap to the assembly until it is no longer possible. The score is calculated based on

the amount of overlapping sequence. A problem with these algorithms is that false positives are easily added to

a contig, especially with shorter reads. Two identical overlapping sequences in the genome may lead to the

incorporation of unrelated sequences, producing a chimera. Several assemblers using greedy algorithms are

SSAKE125, SHARCGS126 and VCAKE127. This category of assemblers is generally not used for NGS platforms,

except when assembly is performed in combination with Sanger sequencing data.

Overlap/Layout/Consensus assembly was used extensively for Sanger data, but some assemblers have

been adapted for use with NGS data. OLC assembly involves three steps: first, all reads are aligned to each other

in a pair-wise comparison using the seed and extend algorithm. Then, an overlap graph can be constructed and

manipulated to get an approximate read layout. Finally, multiple sequence alignment (MSA) determines the

consensus sequence. Examples of assemblers that use this approach are Newbler 128, which is distributed by

454 Life Sciences, and the Celera Assembler129, which was first used for Sanger data and subsequently revised

for 454 data, now called CABOG. Edena130 and Shorty131 use the OLC approach for the assembly of shorter reads

from Solexa and SOLiD platforms.

The de Bruijn graph approach has been widely adopted and is mostly applied to shorter reads from Solexa

and SOLiD platforms. Instead of calculating all alignments and overlaps, this approach relies on k-mers of a

certain length that are present in any of the reads. k-mers must be shorter than the read length, and are

represented by nodes in the graph. These nodes have connections (edges) with other nodes based on which

other k-mers they are found in the same read with. Ideally, the k-mers would make one path that can be

traveled to form the entire genome. However, this method is more sensitive to repeats and sequencing errors

than OLC and many branches can be found in these graphs. Disadvantages of DBG assembly are that

information from reads longer than k-mers is lost and the choice of K-mer size also has a large effect on the

results. Some assemblers use approaches that still include read information during assembly, but require more

computational power. Euler132 was the first assembler to use the DBG approach. Velvet133 and ALLPATHS134

were introduced later, offer improved assembly speed and contig length and allow the use of read-pair data.

These assemblers are able to assemble entire bacterial genomes. ABySS135 was the first assembler used to

assemble a human genome from short reads. SOAPdenovo136 was introduced later and is also able to assemble

larger (and human) genomes.

Finally, String graphs can be used to compress read and overlap data in assembly124. The primary

advantages of String graphs over DBGs are that the data is compressed further so assembly can be performed

more efficiently, and the possibility to use the full reads instead of k-mers. String graphs are based on the

overlap between reads or k-mers. Similarly to DBG assembly, each sequence is represented by a node, these

have edges to other nodes with overlapping sequence. In this case, the edges are represented by the nonoverlapping sequence between the nodes. Thus, this constructs all possible paths while the entire sequence is

20

retrievable by following the edges. This approach is used by the String Graph Assembler (SGA)137, which is able

to assemble an entire human genome using one machine, and corrects single-base sequencing errors.

Several updated assemblers like ALLPATHS-LG138, Velvet1.1 and Euler-USR139 show significant