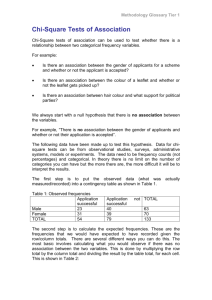

Chi-square basics

advertisement

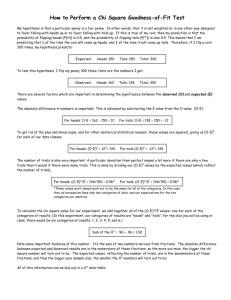

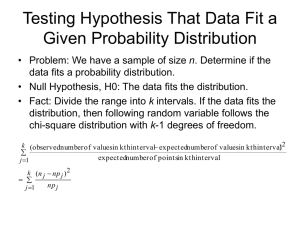

What is Chi-square? Chi-square is a statistical test commonly used to compare observed data with data we would expect to obtain according to a specific hypothesis. For example, if, according to Mendel's laws, you expected 10 of 20 offspring from a cross to be male and the actual observed number was 8 males, then you might want to know about the "goodness of fit" between the observed and expected. Were the deviations (differences between observed and expected) the result of chance, or were they due to other factors? How much deviation can occur before you, the investigator, must conclude that something other than chance is at work, causing the observed to differ from the expected? The chi-square test is always testing what scientists call the null hypothesis, which states that there is no significant difference between the expected and observed result. What do we need to conduct a Chi-square test? 1. Outcome classes (heads/tails, tall/short, purple/white, etc.) 2. Observed results (8 heads, 10 tails from an actual coin flip) 3. Expected results (9 heads, 9 tails) Remember to use numbers, not percentages 4. Chi-square formula: 5. A chi-square distribution table d.f. p=0.9 p=0.5 p=0.1 p=0.05 p=0.01 1 0.016 0.455 2.706 3.841 6.635 2 0.211 1.386 4.605 5.991 9.210 3 0.584 2.366 6.251 7.815 11.345 6. The degrees of freedom (d.f.) - simply the number of outcome classes minus one. In a coin flip we have two outcome classes ("heads" and "tails"), therefore 1 degree of freedom 7. Probabilities associated with the chi-square distributions (included in the distribution table and called p values) 8. A threshold for probability that tells us to reject the null hypothesis if p < 0.05. A threshold for probability that tells us to accept the null hypothesis if p > 0.05. In other words, when accepting the null hypothesis because p > 0.05 the deviation between observed and expected is small enough that chance alone accounts for it. Note: as the observed values diverge more from the expected values, the chisquare test statistic (X2) becomes larger. Thus, large values of chi-square are associated with large differences between observed and expected values. Example Scenario: Consider the following coin-toss experiment. We flip a coin 20 times, getting 12 "heads" and 8 "tails." There are two outcome classes, heads or tails and we would expect our probability or expected number of occurrences for each outcome to be 0.5 or 10 heads and 10 tails. Our null hypothesis states that there should be no significant difference between our observed and expected results assuming that the coin is “fair”. Outcome Observed Class Number of Occurrences of Outcome Probability of Outcome Class Expected Number of Occurrences of Outcome (obs-exp)2 / exp Heads 12 0.5 0.5 x 20 = 10.0 (12-10)2 / 10 = 0.4. Tails 8 0.5 0.5 x 20 = 10.0 (8-10)2 / 10 = 0.4. Sum 20 20 0.8 Notice that the totals for observed and expected numbers are the same (both are 20). If you ever do this test and the columns do not add up to the same total, you have done something wrong! Degrees of freedom (d.f.) = 1, using the chi-square distribution table to find where 0.8 falls we see it lies between 0.455 and 2.706 and p>0.05, so we accept our hypothesis. Try your luck using chi-square analysis on this whacky example! There are 110 houses in a particular neighborhood. Liberals live in 25 of them, moderates in 55 of them, and conservatives in the remaining 30. An airplane carrying 65 lb. sacks of flour passes over the neighborhood. For some reason, 20 sacks fall from the plane, each miraculously slamming through the roof of a different house. None hit the yards or the street, or land in trees, or anything like that. Each one slams through a roof. Anyway, 2 slam through a liberal roof, 15 slam through a moderate roof, and 3 slam through a conservative roof. Should we reject the hypothesis that the sacks of flour hit houses at random? Category Liberal Moderate Conservative Total Observed Expected Obs-Exp (Obs-Exp)2 / Exp