ch4 - Alan Moses


Statistical modeling and machine learning for molecular biology

Alan Moses

Chapter 4 – Parameter estimation and multivariate statistics

1. Parameter estimation

Fitting a model to data: objective functions and estimation

Given a distribution or “model” for data, the next step is to “fit” the model to the data. Typical probability distributions have unknown parameters: numbers that change the shape of the distribution. The technical term for the procedure of finding the values of the unknown parameters of a probability distribution from data is “estimation”. During estimation one seeks to find parameters that make the model “fit” the data the “best.” If this all sounds a bit subjective, that’s because it is. In order to proceed, we have to provide some kind of mathematical definition of what it means to fit data the best. The description of how well the model fits the data is called the “objective function.” Typically, statisticians try to find “estimators” for parameters that maximize (or minimize) an objective function, and statisticians disagree about which estimators or objective functions are the best.

In the case of the Gaussian distribution, these parameters are called the “mean” and “standard deviation”, often written as μ and σ. In using the Gaussian distribution as a model for some data, one seeks to find values of μ and σ that fit the data. As we shall see, what we normally think of as the “average” is in fact an “estimator” for the μ parameter of the Gaussian, which we refer to as the mean.

2. Maximum Likelihood Estimation

The most commonly used objective function is known as the “likelihood”, and the most well-understood estimation procedures seek to find parameters that maximize the likelihood. These maximum likelihood estimates (once found) are often referred to as MLEs.

The likelihood is defined as a conditional probability: P(data|model), the probability of the data given the model. Typically, the only part of the model that can change is the parameters, so the likelihood is often written as P(X|θ), where X is a data matrix and θ is a vector containing all the parameters of the distribution(s). This notation makes explicit that the likelihood depends on both the data and a choice of any free parameters in the model.

More formally, let’s say we have some i.i.d. observations from a pool, X1, X2, …, Xn, which we will refer to as a vector X. We want to write down the likelihood, L, which is the conditional probability of the data given the model. Because the observations are independent, we can use the joint probability rule to write:

$$L = P(X|\theta) = P(X_1|\theta)P(X_2|\theta)\cdots P(X_n|\theta) = \prod_{i=1}^{n} P(X_i|\theta)$$


Maximum likelihood estimation says: “choose the parameters so the data is most probable given the model”, or find the θ that maximizes L. In practice, this function can be very complicated, and there are many analytic and numerical techniques to maximize it.

3. The likelihood for Gaussian data

To make the likelihood specific, we have to choose the model. If we assume that each observation is described by the Gaussian distribution, we have two parameters, the mean μ and the standard deviation σ:

$$L = \prod_{i=1}^{n} P(X_i|\theta) = \prod_{i=1}^{n} N(X_i|\mu,\sigma) = \prod_{i=1}^{n} \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{(X_i-\mu)^2}{2\sigma^2}}$$

Admittedly, the formula looks complicated. But in fact, this is a *very* simple likelihood function. I’ll illustrate it by computing it directly for an example.

| Observation (i) | Value (Xi) | P(Xi\|θ) = N(Xi \| μ = 6.5, σ = 1.5) |
|---|---|---|
| 1 | 5.2 | 0.18269 |
| 2 | 9.1 | 0.059212 |
| 3 | 8.2 | 0.13993 |
| 4 | 7.3 | 0.23070 |
| 5 | 7.8 | 0.18269 |
| | | L = 0.000063798 |

Notice that in this table I have chosen values for the parameters, μ and σ, which is necessary to calculate the likelihood. We will see momentarily that these parameters are *not* the maximum likelihood estimates (the parameters that maximize the likelihood), but rather just illustrative values. The likelihood (for these parameters) is simply the product of the 5 values in the right column of the table.
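As a check on the arithmetic, here is a short Python sketch that reproduces the table (Python and the helper name `gaussian_pdf` are illustrative choices, not part of the original text). It evaluates N(Xi | μ = 6.5, σ = 1.5) for each observation and multiplies the five values together.

```python
import math

def gaussian_pdf(x, mu, sigma):
    """Density N(x | mu, sigma) of a univariate Gaussian."""
    return math.exp(-((x - mu) ** 2) / (2 * sigma ** 2)) / (sigma * math.sqrt(2 * math.pi))

# The five observations from the table, with the illustrative (non-MLE) parameters
data = [5.2, 9.1, 8.2, 7.3, 7.8]
mu, sigma = 6.5, 1.5

densities = [gaussian_pdf(x, mu, sigma) for x in data]
likelihood = math.prod(densities)  # i.i.d. observations: multiply the densities

for i, (x, p) in enumerate(zip(data, densities), start=1):
    print(f"{i}  {x:4.1f}  {p:.5f}")
print(f"L = {likelihood:.9f}")
```

The printed product should agree with the value of L in the table to about four significant figures.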

It should be clear that as the dataset gets larger, the likelihood gets smaller and smaller, but

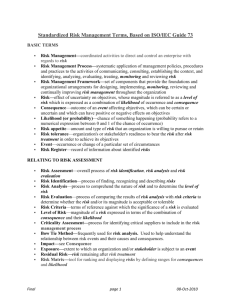

always is still greater than zero. Because the likelihood is a function of all the parameters, even

in the simple case of the Gaussian, the likelihood is still a function of two parameters (plotted in

figure X) and represents a surface in the parameter space. To make this figure I simply calculated

the likelihood (just as in the table above) for a large number of pairs of mean and standard

deviation parameters. The maximum likelihood can be read off (approximately) from this graph

as the place where the likelihood surface has its peak. This is a totally reasonable way to

calculate the likelihood if you have a model with one or two parameters. You can see that the

standard deviation parameter I chose for the table above (1.5) is close to the value that

maximizes the likelihood, but the value I chose for the mean is probably too small – the

maximum looks to occur when the mean is around 7.5.
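The grid evaluation used to draw Figure X can be sketched the same way. The grid ranges and the 0.01 step size below are assumptions chosen to roughly match the axes of the figure; the code keeps whichever (μ, σ) pair gives the largest likelihood.

```python
import math

def log_gaussian_pdf(x, mu, sigma):
    """log N(x | mu, sigma), written out term by term."""
    return -math.log(sigma) - 0.5 * math.log(2 * math.pi) - (x - mu) ** 2 / (2 * sigma ** 2)

def likelihood(data, mu, sigma):
    """L = product of the densities, computed as exp(sum of log densities)."""
    return math.exp(sum(log_gaussian_pdf(x, mu, sigma) for x in data))

data = [5.2, 9.1, 8.2, 7.3, 7.8]

# Grid of parameter pairs: mu from 5.00 to 10.00, sigma from 1.00 to 4.00, both in steps of 0.01
mus = [5.0 + 0.01 * i for i in range(501)]
sigmas = [1.0 + 0.01 * j for j in range(301)]

# Keep the (L, mu, sigma) triple with the largest likelihood
best_L, best_mu, best_sigma = max(
    (likelihood(data, m, s), m, s) for m in mus for s in sigmas
)
print(f"grid maximum: L = {best_L:.6f} at mu = {best_mu:.2f}, sigma = {best_sigma:.2f}")
```

On these data the grid maximum should land near μ ≈ 7.5 and σ ≈ 1.3, and the answer can only ever be as fine as the grid spacing.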


Figure X – numerical evaluation of the likelihood function (surface plot of the likelihood, L, against the mean, μ, and the standard deviation, σ)

This figure also illustrates two potential problems with using numerical methods to maximize the likelihood. If your model has many parameters, drawing a graph becomes hard and, more importantly, the parameter space might become difficult to explore: you might think you have found the maximum in one region, but miss a higher peak somewhere else in the high-dimensional space. The second problem is that you have to choose individual points at which to evaluate the likelihood numerically, and there might always be a point in between the points you evaluated that has a slightly higher likelihood.

4. How to maximize the likelihood analytically

Although numerical approaches are always possible nowadays, it’s still faster (and more fun!) to find the exact mathematical maximum of the likelihood function if you can. We’ll derive the MLEs for the univariate Gaussian likelihood introduced above. The problem is complicated enough to illustrate the major concepts of likelihood, as well as some important mathematical notations and tricks that are widely used to solve statistical modeling and machine learning problems. Once we have written down the likelihood function, the next step is to find the maximum of this function by taking the derivatives with respect to the parameters, setting them equal to zero and solving for the maximum likelihood estimators. Needless to say, this probably seems very daunting at this point. But if you make it through this book, you’ll look back at this problem with fondness because it was so *simple* to find the analytic solutions. The mathematical trick that makes this problem go from looking very hard to being relatively easy to solve is the following: take the logarithm. Instead of working with likelihoods, in practice we’ll almost always use log-likelihoods because of their mathematical convenience. (Log-likelihoods are also easier to work with numerically because, instead of very small positive numbers near zero, we work with large negative numbers.) Because the logarithm is monotonically increasing (it doesn’t change the ranks of numbers), the maximum of the log-likelihood is also the maximum of the likelihood. So here’s the mathematical magic:

$$\log L = \log \prod_{i=1}^{n} N(X_i|\mu,\sigma) = \sum_{i=1}^{n} \log N(X_i|\mu,\sigma) = \sum_{i=1}^{n}\left[-\log\sigma - \frac{1}{2}\log(2\pi) - \frac{(X_i-\mu)^2}{2\sigma^2}\right]$$

In the preceding we have used several properties of the logarithm: log(1/x) = −log x, log(xy) = log(x) + log(y), log(x^a) = a log(x) (so that log√(2π) = ½ log(2π)), and log(e^x) = x. This formula for the log-likelihood might not look much better, but remember that we are trying to find the parameters that maximize this function. To do so we want to take its derivative with respect to the parameters and set it equal to zero. To find the maximum likelihood estimate of the mean, μ, we take derivatives with respect to μ. Using the linearity of the derivative operator, we have

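$$\frac{\partial}{\partial\mu}\log L = \frac{\partial}{\partial\mu}\sum_{i=1}^{n}\left[-\log\sigma - \frac{1}{2}\log(2\pi) - \frac{(X_i-\mu)^2}{2\sigma^2}\right] = \sum_{i=1}^{n}\left[-\frac{\partial}{\partial\mu}\log\sigma - \frac{1}{2}\frac{\partial}{\partial\mu}\log(2\pi) - \frac{\partial}{\partial\mu}\frac{(X_i-\mu)^2}{2\sigma^2}\right] = 0$$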

Since two of the terms have no dependence on μ, their derivatives are simply zero. Taking the derivatives, we get

$$\frac{\partial}{\partial\mu}\log L = \sum_{i=1}^{n}\left[-0 - 0 + \frac{2(X_i-\mu)}{2\sigma^2}\right] = \frac{1}{\sigma^2}\sum_{i=1}^{n}(X_i-\mu) = 0$$

In the last step we moved σ² outside the sum, because it does not depend on i. Multiplying both sides of this equation by σ², we are left with

$$\sigma^2\frac{\partial}{\partial\mu}\log L = \sum_{i=1}^{n}(X_i-\mu) = \sum_{i=1}^{n}X_i - \sum_{i=1}^{n}\mu = \sum_{i=1}^{n}X_i - n\mu = 0$$

which we can actually solve:

$$\mu = \mu_{MLE} = \frac{1}{n}\sum_{i=1}^{n}X_i = m_X$$

This equation tells us the value of μ that we should choose if we want to maximize the likelihood. I hope it is clear that the suggestion is simply to take the sum of the observations divided by the total number of observations; in other words, the average, which I have written as m_X. I have written μ_MLE to remind us that this is the maximum likelihood estimator.

Notice that although the likelihood function (illustrated in Figure X) depends on both parameters, the formula we obtained for μ_MLE doesn’t. A similar (slightly more complicated) derivation is also possible for the standard deviation:


$$\frac{\partial}{\partial\sigma}\log L = 0 \;\rightarrow\; \sigma_{MLE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}(X_i-\mu)^2} = s_X$$
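For completeness, here is one way to write out the “slightly more complicated” derivation for σ, starting from the same log-likelihood as above (a worked sketch, not taken from the original text). Only the first and last terms in the sum depend on σ:

$$\frac{\partial}{\partial\sigma}\log L = \sum_{i=1}^{n}\left[-\frac{1}{\sigma} + \frac{(X_i-\mu)^2}{\sigma^3}\right] = -\frac{n}{\sigma} + \frac{1}{\sigma^3}\sum_{i=1}^{n}(X_i-\mu)^2 = 0$$

Multiplying both sides by σ³/n and rearranging gives

$$\sigma^2 = \frac{1}{n}\sum_{i=1}^{n}(X_i-\mu)^2$$

and taking the positive square root gives σ_MLE.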

In the MLE for the standard deviation, there is an explicit dependence on the mean. Because the derivatives with respect to *all* the parameters must be zero at the maximum of the likelihood, to get the maximum likelihood estimate for the standard deviation you first need to calculate the MLE for the mean and plug it into the formula above.
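A minimal Python sketch of the analytic MLEs applied to the five observations from the table. It also evaluates the two partial derivatives derived above at the estimates; both should be zero up to floating-point rounding. The variable and function names are illustrative.

```python
import math

data = [5.2, 9.1, 8.2, 7.3, 7.8]
n = len(data)

# MLE of the mean: the sample average
mu_mle = sum(data) / n
# MLE of the standard deviation: divide by n (not n - 1), plugging in the MLE of the mean
sigma_mle = math.sqrt(sum((x - mu_mle) ** 2 for x in data) / n)

def log_likelihood(mu, sigma):
    """Gaussian log-likelihood of the data, as in the formula for log L above."""
    return sum(-math.log(sigma) - 0.5 * math.log(2 * math.pi) - (x - mu) ** 2 / (2 * sigma ** 2)
               for x in data)

# Partial derivatives of log L, evaluated at the MLEs
d_mu = sum(x - mu_mle for x in data) / sigma_mle ** 2
d_sigma = -n / sigma_mle + sum((x - mu_mle) ** 2 for x in data) / sigma_mle ** 3

print(f"mu_MLE = {mu_mle:.4f}, sigma_MLE = {sigma_mle:.4f}")
print(f"dlogL/dmu = {d_mu:.1e}, dlogL/dsigma = {d_sigma:.1e}")
print(f"log L at the MLE = {log_likelihood(mu_mle, sigma_mle):.4f}")
```

For these data μ_MLE = 7.52, matching the peak in Figure X, and σ_MLE is about 1.30. Note that the MLE divides by n; some software functions (for example R’s `sd`) divide by n − 1 instead.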

In general, setting the derivatives of the likelihood with respect to all the parameters to zero leads to a system with as many equations (and unknowns) as there are parameters. In practice, very few problems of this kind can be solved analytically.
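To see the numerical advantage of log-likelihoods mentioned earlier, the sketch below simulates 1,000 Gaussian observations (simulated data with assumed μ = 7.5 and σ = 1.3, not data from the text) and computes the likelihood both as a raw product and as a sum of log densities.

```python
import math
import random

rng = random.Random(1)          # fixed seed so the run is reproducible
mu, sigma = 7.5, 1.3           # assumed parameters for the simulated data
data = [rng.gauss(mu, sigma) for _ in range(1000)]

def pdf(x):
    return math.exp(-((x - mu) ** 2) / (2 * sigma ** 2)) / (sigma * math.sqrt(2 * math.pi))

def log_pdf(x):
    return -math.log(sigma) - 0.5 * math.log(2 * math.pi) - (x - mu) ** 2 / (2 * sigma ** 2)

likelihood = math.prod(pdf(x) for x in data)      # product of 1,000 small numbers
log_likelihood = sum(log_pdf(x) for x in data)    # sum of their logarithms

print("likelihood:    ", likelihood)
print("log-likelihood:", log_likelihood)
```

In double-precision arithmetic the raw product underflows to exactly 0.0, which makes it useless for comparing parameter values, while the log-likelihood remains an ordinary (large, negative) number.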

Mathematical box – The distribution of parameter estimates for MLEs

Assuming that you have managed to maximize the likelihood of your model using either analytic or numerical approaches, it is sometimes possible to take advantage of the very well-developed statistical theory in this area to do hypothesis testing on the parameters. The maximum likelihood estimator is a function of the data, and therefore it will not give the same answer if another random sample is taken from the distribution. However, it is known that (under certain assumptions, and as the sample size becomes large) the maximum likelihood estimates will be approximately Gaussian distributed, with means equal to the true values of the parameters, and variances related to the second derivatives of the likelihood at the maximum, which are summarized in the so-called Fisher Information matrix (which I abbreviate as FI).

$$\mathrm{Var}(\theta_{MLE}) = E\left[-FI^{-1}\right]_{\theta=\theta_{MLE}}$$

This formula says that the variance of the parameter estimates is the (1) expectation of the negative of the (2) inverse of the (3) Fisher information matrix, evaluated at the maximum of the likelihood (so that all parameters have been set equal to their MLEs). I’ve written the numbers to indicate that getting the variance of the parameter estimates is actually a tedious three-step process, and it’s rarely used in practice for that reason. (Strictly speaking, statisticians define the Fisher information as the negative of the expected matrix of second derivatives, so that the approximate variance is its inverse; for the Gaussian example below, both orderings of the steps give the same answer.) However, if you have a simple model and don’t mind a little math, it can be incredibly useful to have these variances. For example, in the case of the Gaussian distribution, there are two parameters (μ and σ), so the Fisher information matrix is a 2 x 2 matrix:

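$$FI = \begin{bmatrix} \frac{\partial^2 \log L}{\partial\mu^2} & \frac{\partial^2 \log L}{\partial\mu\,\partial\sigma} \\ \frac{\partial^2 \log L}{\partial\mu\,\partial\sigma} & \frac{\partial^2 \log L}{\partial\sigma^2} \end{bmatrix} = \begin{bmatrix} -\frac{n}{\sigma^2} & 0 \\ 0 & -\frac{2n}{\sigma^2} \end{bmatrix}$$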

The first step in getting the variance of your estimator is evaluating these derivatives. In most cases, this must be done numerically, but in textbook examples they can be evaluated analytically. For the Gaussian model, at the maximum of the likelihood they have the simple formulas that I’ve given above.


The second derivatives measure the change in the slope of the likelihood function, and it makes sense that they come up here because the variance of the maximum likelihood estimator is related intuitively to the shape of the likelihood function near the maximum. If the likelihood surface is very flat around the estimate, there is less certainty, whereas if the maximum likelihood estimate is at a very sharp peak in the likelihood surface, there is a lot of certainty: another sample from the same pool is likely to give nearly the same maximum. The second derivatives measure the local curvature of the likelihood surface near the maximum.

Once you have the derivatives (using the values of the parameters at the maximum), the next step is to invert this matrix. In practice, this can be done analytically only for the simplest statistical models. For the Gaussian case, the matrix is diagonal, so the inverse is just

$$FI^{-1} = \begin{bmatrix} -\frac{\sigma^2}{n} & 0 \\ 0 & -\frac{\sigma^2}{2n} \end{bmatrix}$$

Finally, once you have the inverse, you simply take the negative of the diagonal entry in the matrix that corresponds to the parameter you’re interested in, and then take the expectation. So the variance for the mean would be σ²/n. This means that the distribution of μ_MLE is Gaussian, with mean equal to the true mean, and standard deviation equal to the true standard deviation divided by the square root of n.
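The result Var(μ_MLE) = σ²/n, and the analogous σ²/(2n) for the standard deviation from the other diagonal entry, can be checked by simulation. This Python sketch draws many samples from a Gaussian with assumed true values μ = 0 and σ = 2, computes the MLEs for each sample, and compares their spread with the formulas. The sample size and the number of repetitions are arbitrary choices.

```python
import random

def gaussian_mles(sample):
    """Return (mu_MLE, sigma_MLE) for one sample."""
    n = len(sample)
    m = sum(sample) / n
    s = (sum((x - m) ** 2 for x in sample) / n) ** 0.5
    return m, s

def variance(values):
    """Spread of a list of estimates (average squared deviation from their mean)."""
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values) / len(values)

rng = random.Random(42)                               # fixed seed for reproducibility
true_mu, true_sigma, n, reps = 0.0, 2.0, 50, 5000     # assumed simulation settings

estimates = [gaussian_mles([rng.gauss(true_mu, true_sigma) for _ in range(n)]) for _ in range(reps)]
mu_hats = [m for m, _ in estimates]
sigma_hats = [s for _, s in estimates]

print(f"Var(mu_MLE)    = {variance(mu_hats):.4f}   theory sigma^2/n     = {true_sigma ** 2 / n:.4f}")
print(f"Var(sigma_MLE) = {variance(sigma_hats):.4f}   theory sigma^2/(2n) = {true_sigma ** 2 / (2 * n):.4f}")
```

Both simulated variances should be close to the theoretical values; the agreement for σ is only approximate because the Fisher information result is a large-sample one.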

Figure X – the distribution of parameter estimates is related to the shape of the likelihood in the region near its maximum

5. Other objective functions

Despite the popularity, conceptual clarity and theoretical properties of maximum likelihood estimation, there are (many) other objective functions and corresponding estimators that are widely used.

Another simple, intuitive objective function is “least squares”: simply adding up the squared differences between the model and the data. Minimizing the sum of squared differences leads to the maximum likelihood estimates in many cases (for example, when the model assumes Gaussian errors with the same variance for every observation), but not always. One good thing about least squares estimation is that it can be applied even when your model doesn’t actually correspond to a probability distribution (or when it’s very hard to write out or compute the probability distribution).
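For the Gaussian with σ held fixed, the log-likelihood is a constant minus the sum of squares divided by 2σ², so minimizing the sum of squares over μ gives the same answer as maximizing the likelihood. A small Python sketch on the table data, using a simple scan over candidate values (the scan range and step are assumptions):

```python
data = [5.2, 9.1, 8.2, 7.3, 7.8]

def sum_of_squares(mu):
    """Least-squares objective: total squared difference between the model value mu and the data."""
    return sum((x - mu) ** 2 for x in data)

# Scan candidate values of mu from 5.00 to 10.00 in steps of 0.01 and keep the minimizer
candidates = [5.0 + 0.01 * i for i in range(501)]
mu_ls = min(candidates, key=sum_of_squares)

print(f"least-squares estimate: {mu_ls:.2f}, sample average (mu_MLE): {sum(data) / len(data):.2f}")
```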

One of the most important objective functions is the so-called posterior probability and the corresponding maximum a posteriori probability, or MAP, estimates/estimators. In contrast to ML estimation, MAP estimation says: “choose the parameters so the model is most probable given the data we observed.” Now the objective function is P(θ|X) and the equation to solve is

$$\frac{\partial}{\partial\theta}P(\theta|X) = 0$$


As you probably already guessed, the MAP and ML estimation problems are related via Bayes’ theorem, so that this can be written as

$$P(\theta|X) = \frac{P(\theta)}{P(X)}P(X|\theta) = \frac{P(\theta)}{P(X)}L$$

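Once again, it is convenient to think about the optimization problem in log space, where the objective function breaks into three parts, only two of which actually depend on the parameters:

$$\frac{\partial}{\partial\theta}\log P(\theta|X) = \frac{\partial}{\partial\theta}\log P(\theta) + \frac{\partial}{\partial\theta}\log L - \frac{\partial}{\partial\theta}\log P(X) = \frac{\partial}{\partial\theta}\log P(\theta) + \frac{\partial}{\partial\theta}\log L = 0$$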

Interestingly, optimizing the posterior probability therefore amounts to optimizing the likelihood function *plus* another term that depends only on the parameters.
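To make the MAP equation concrete, consider estimating the Gaussian mean μ with σ treated as known, and a Gaussian prior on μ. The prior mean μ0 = 5 and prior standard deviation τ = 1 below are hypothetical values chosen only for illustration. Setting d/dμ [log P(μ) + log L] to zero gives a closed-form answer, and the sketch checks that the derivative vanishes there.

```python
data = [5.2, 9.1, 8.2, 7.3, 7.8]
n = len(data)
sigma = 1.5            # data standard deviation, treated as known (assumption)
mu0, tau = 5.0, 1.0    # hypothetical Gaussian prior on mu: N(mu | mu0, tau)

def d_log_posterior(mu):
    """d/dmu [log P(mu) + log L]; the log P(X) term does not depend on mu and drops out."""
    d_log_prior = -(mu - mu0) / tau ** 2
    d_log_lik = sum(x - mu for x in data) / sigma ** 2
    return d_log_prior + d_log_lik

# Solving d_log_posterior(mu) = 0 gives a precision-weighted average of mu0 and the data
mu_map = (mu0 / tau ** 2 + sum(data) / sigma ** 2) / (1 / tau ** 2 + n / sigma ** 2)
mu_mle = sum(data) / n

print(f"MLE = {mu_mle:.3f}, MAP = {mu_map:.3f}, derivative at MAP = {d_log_posterior(mu_map):.1e}")
```

The MAP estimate falls between the prior mean and the MLE; as more data are added, the n/σ² term dominates and the MAP estimate approaches the MLE.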

The posterior probability objective function turns out to be one of a class of so-called “penalized” likelihood functions, where the likelihood is combined with mathematical functions of the parameters to create a new objective function. These objective functions turn out to underlie several intuitive and powerful machine learning methods that we will see in later chapters.

No matter what objective function is chosen, estimation almost always involves solving a mathematical optimization problem, and in practice this is almost always done using a computer, either with a statistical software package such as R or Matlab, or using purpose-written code.
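As an example of purpose-written code, the sketch below maximizes the Gaussian log-likelihood for the table data by plain gradient ascent. It is a minimal illustration, not a recommended general-purpose method: the starting point, step size and iteration cap are assumptions, and it optimizes log σ rather than σ so that σ always stays positive.

```python
import math

data = [5.2, 9.1, 8.2, 7.3, 7.8]
n = len(data)

def gradient(mu, log_sigma):
    """Gradient of log L with respect to (mu, log sigma)."""
    sigma = math.exp(log_sigma)
    ss = sum((x - mu) ** 2 for x in data)
    d_mu = sum(x - mu for x in data) / sigma ** 2
    d_log_sigma = -n + ss / sigma ** 2   # chain rule: sigma * dlogL/dsigma
    return d_mu, d_log_sigma

mu, log_sigma = 5.0, math.log(3.0)   # assumed starting point
step = 0.05                          # fixed step size (assumption, not tuned)
for _ in range(5000):                # iteration cap
    g_mu, g_ls = gradient(mu, log_sigma)
    mu += step * g_mu                # move uphill on the log-likelihood
    log_sigma += step * g_ls
    if abs(g_mu) < 1e-10 and abs(g_ls) < 1e-10:
        break

print(f"gradient ascent: mu = {mu:.4f}, sigma = {math.exp(log_sigma):.4f}")
```

It should converge to the same values as the analytic formulas (μ ≈ 7.52, σ ≈ 1.30). In practice one would usually call a library optimizer instead, for example R’s `optim`.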

Box – bias, consistency and efficiency of estimators

In order to facilitate debates about which estimators are the best, statisticians developed several objective criteria that can be used to compare estimators. For example, it is commonly taught that the ML estimator of the standard deviation for the Gaussian is biased. This means that, for a sample of data, the value of the standard deviation obtained using the formula given above will tend to (in this case) underestimate the “true” standard deviation of the values in the pool. On the other hand, the estimator is consistent, meaning that as the sample size drawn from the pool approaches infinity, the estimator does converge to the “true” value. Finally, the efficiency of the estimator describes how quickly the estimate approaches the truth as a function of the sample size. In modern molecular biology we are generally dealing with large enough sample sizes that we don’t really need to worry about these issues. In addition, they will almost always be taken care of by the statistics package used to do the calculations. This is a great example of a topic that is traditionally covered at length in introductory statistics courses, and is of academic interest to statisticians, but is of no practical use in modern data-rich science.
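A quick simulation makes the bias concrete. It works with the variance σ², whose ML estimator has expected value exactly (n − 1)/n × σ², and compares a small sample (n = 5) with a large one (n = 1000). The true variance of 1, the seed and the repetition counts are assumptions.

```python
import random

def sigma2_mle(sample):
    """ML estimate of the variance: mean squared deviation from the sample mean (divides by n)."""
    n = len(sample)
    m = sum(sample) / n
    return sum((x - m) ** 2 for x in sample) / n

rng = random.Random(0)                           # fixed seed for reproducibility
results = {}
for n, reps in [(5, 20000), (1000, 200)]:        # sample sizes and repetitions (assumptions)
    estimates = [sigma2_mle([rng.gauss(0.0, 1.0) for _ in range(n)]) for _ in range(reps)]
    results[n] = sum(estimates) / reps           # average estimate; the true variance is 1
    print(f"n = {n:4d}: average sigma^2_MLE = {results[n]:.3f}   (n - 1)/n = {(n - 1) / n:.3f}")
```

With n = 5 the average estimate is noticeably below 1, while with n = 1000 the bias is negligible, which is the box’s point about large samples.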

So how do we choose an objective function? In practice, as biologists we usually choose the one that’s simplest to apply and that we can reliably optimize. We’re not usually interested in debating with statisticians about whether the likelihood of the model is more important than the likelihood of the data. Instead, we want to know something about the parameters that are being estimated: testing our hypothesis about whether this experiment yields more of something than another experiment, whether something is an example of A and not B, whether there are two groups or three, or whether A predicts B better than random. So as long as we use the same method of estimation on all of our data, it’s probably not that important which estimation method we use.

Box: Bayesian estimation and prior distributions

As we have seen, the MAP objective function, and more generally penalized likelihood methods, can be related to the ML objective function through Bayes’ theorem. For this reason these methods are sometimes given names with the word “Bayesian” in them. However, as long as a method results in a single estimate for the parameters, it is not really Bayesian in spirit. Truly Bayesian estimation means that you don’t try to pin down a single value for your parameters. Instead, you embrace the fundamental uncertainty that any particular estimate for your parameters is just one possible estimate drawn from a pool. True Bayesian statistics means that you consider the entire distribution of your parameters given your data and your prior beliefs about what the parameters should be. In practice, Bayesian estimation is rarely used in biology, because biologists want to know the values of their parameters. We don’t want to consider the whole distribution of expression levels of our gene that are compatible with the observed data: we want to know the level of the gene.

Although Bayesian estimation is rarely used, the closely associated concepts of prior and posterior distributions are very powerful and widely used. Because the Bayesian perspective is to think of the parameters as random variables that need to be estimated, models are used to describe the distributions of the parameters both before (prior) and after (posterior) considering the observations. Although it might not seem intuitive to think that the parameters have a distribution *before* we consider the data, in fact it makes a lot of sense: we might require the parameters to be between 0 and infinity if we are using a Poisson model for our data, or ensure that they add up to 1 if we are using a multinomial or binomial model. The idea of prior distributions is to generalize this by weighting the possible values of the parameters quantitatively, according to how likely we believe they are. Of course, if we don’t have any prior beliefs about the parameters, we can always use uniform distributions, so that all possible values of the parameters are equally likely (in Bayesian jargon these are called uninformative priors). However, as we shall see, it is often too convenient to resist putting prior knowledge into our models using priors.

6. Multivariate statistics

An important generalization of the statistical models that we’ve seen so far is to the case where multiple events are observed at the same time. In the models we’ve seen so far, observations were single events: yes or no, numbers or letters. In practice, a modern molecular biology experiment typically measures more than one thing, and a genomics experiment might yield measurements for thousands of things: all the genes in the genome.

A familiar example of an experiment of this kind might be a set of genome-wide expression level measurements. In the ImmGen data, for each gene, we have measurements of gene expression over ~200 different cell types. Although in the previous chapters we considered each of the cell types independently, a more comprehensive way to describe the data is that for each gene, the observation is actually a vector, X, of length ~200, where each element of the vector is the expression measurement for a specific cell type. Alternatively, it might be more convenient to think of each observation as a cell type, where the observation is now a vector of 24,000 gene expression measurements. This situation is known in statistics as “multivariate”, to describe the idea that multiple variables are being measured simultaneously. Conveniently, the familiar Gaussian distribution generalizes to the multivariate case, except that the single-number (scalar) mean and variance parameters are now replaced with a mean vector and a (co-)variance matrix:

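$$N(\vec{X}|\vec{\mu},\mathbf{\Sigma}) = \frac{1}{\sqrt{|\mathbf{\Sigma}|(2\pi)^d}}\, e^{-\frac{1}{2}(\vec{X}-\vec{\mu})^T\mathbf{\Sigma}^{-1}(\vec{X}-\vec{\mu})}$$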

Here I’ve used a small d to indicate the dimensionality of the data, so that d is the length of the vectors μ and X, and the covariance is a matrix of size d x d. In this formula, I’ve explicitly written small arrows above the vectors and bolded the matrices. In general, machine learning texts do not do this (and I will adopt their convention), so it will be left to the reader to keep track of which quantities are scalars, vectors and matrices. If you’re hazy on your vector and matrix multiplications and transposes, you’ll have to review them in order to follow the rest of this section (and most of this book).
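A direct Python/NumPy transcription of the multivariate Gaussian density, as a sketch (`mvn_pdf` is a hypothetical helper name, and the example point and covariance are made up). With a diagonal covariance, the density should equal the product of univariate Gaussian densities, which the last lines check.

```python
import numpy as np

def mvn_pdf(x, mu, cov):
    """Multivariate Gaussian density N(x | mu, Sigma) for a d-dimensional x."""
    x, mu, cov = np.asarray(x, float), np.asarray(mu, float), np.asarray(cov, float)
    d = x.shape[0]
    diff = x - mu
    # (x - mu)^T Sigma^{-1} (x - mu), solving Sigma z = diff instead of forming the inverse
    quad = diff @ np.linalg.solve(cov, diff)
    norm = np.sqrt(np.linalg.det(cov) * (2 * np.pi) ** d)
    return np.exp(-0.5 * quad) / norm

def univariate_pdf(x, mu, sigma):
    return np.exp(-((x - mu) ** 2) / (2 * sigma ** 2)) / (sigma * np.sqrt(2 * np.pi))

# Illustrative 2-D example with a diagonal covariance (variances 4 and 1)
x, mu = [1.0, -0.5], [0.0, 0.0]
cov = [[4.0, 0.0], [0.0, 1.0]]
p_joint = mvn_pdf(x, mu, cov)
p_product = univariate_pdf(1.0, 0.0, 2.0) * univariate_pdf(-0.5, 0.0, 1.0)
print(p_joint, p_product)
```

SciPy also provides this density as `scipy.stats.multivariate_normal`, which is what one would normally use in practice.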

Mathematical box: a quick review of vectors, matrices and linear algebra

As I’ve already mentioned, a convenient way to think about multiple observations at the same time is to think about them as lists of numbers, which are known as vectors in mathematical jargon. We will refer to a list of numbers X as a vector X = (x1, x2, x3, … xn), where n is the “length” or dimensionality of the vector (the number of numbers in the list). Once we’ve defined these lists of numbers, we can go ahead and define arithmetic and algebra on them. One interesting wrinkle in the mathematics of lists is that for any operation we define, we have to keep track of whether the result is actually a list or a number. For example, the difference

$$X - Y = (x_1, x_2, \ldots, x_n) - (y_1, y_2, \ldots, y_n) = (x_1 - y_1, x_2 - y_2, \ldots, x_n - y_n)$$

turns out to be a vector, whereas the dot product

$$X \cdot Y = x_1y_1 + x_2y_2 + \cdots + x_ny_n = \sum_{i=1}^{n} x_i y_i$$

is a number. The generalization of algebra means that we can write equations like

$$X - Y = 0$$

which means that

$$(x_1 - y_1, x_2 - y_2, \ldots, x_n - y_n) = (0, 0, \ldots, 0)$$

a shorthand way of writing n equations in one line.


Since mathematicians love generalizations, there’s no reason we can’t generalize the idea of lists to also include a list of lists, so that each element of the list is actually a vector. This type of object is what we call a matrix: A = (X1, X2, X3, …, Xm), where X1 = (x1, x2, x3, … xn). To refer to each element of A, we can write A11, A12, A13 … A21, A22, A23 … Amn. We can then go ahead and define some mathematical operations on matrices as well: if A and B are matrices, A − B = C means that for all i and j, Cij = Aij − Bij.

We can also do mixtures of matrices and vectors and numbers:

$$cx + Ay = \left(cx_1 + \sum_{j=1}^{m} A_{1j}y_j,\; cx_2 + \sum_{j=1}^{m} A_{2j}y_j,\; \ldots,\; cx_n + \sum_{j=1}^{m} A_{nj}y_j\right)$$

where c is a number, x and y are vectors and A is a matrix. This turns out to be a vector.

However, there’s one very inelegant issue with the generalization to matrices: what we mean when we refer to the value Aij depends on whether i refers to the index running from 1 through m or from 1 through n. In other words, we have to keep track of the structure of the matrix. To deal with this issue, linear algebra has developed a set of internally consistent notations, which are referred to as the “row” or “column” conventions. So any time I write the vector x, by default I mean the “column” vector

$$X = \begin{pmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{pmatrix}, \qquad X^T = \begin{pmatrix} x_1 & x_2 & \cdots & x_n \end{pmatrix}$$

To indicate the “row” vector, I have to write the “transpose” of X, or X^T. The transpose is defined as the operation of switching all the rows and columns. So in fact there are two kinds of products that can be defined:

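$$X \cdot Y = X^T Y = \begin{pmatrix} x_1 & x_2 & \cdots & x_n \end{pmatrix}\begin{pmatrix} y_1 \\ y_2 \\ \vdots \\ y_n \end{pmatrix} = \sum_{i=1}^{n} x_i y_i$$

which is the familiar dot product, also known as the “inner product”, and

$$X Y^T = \begin{pmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{pmatrix}\begin{pmatrix} y_1 & y_2 & \cdots & y_m \end{pmatrix} = \begin{pmatrix} x_1y_1 & x_1y_2 & \cdots & x_1y_m \\ x_2y_1 & x_2y_2 & \cdots & x_2y_m \\ \vdots & \vdots & \ddots & \vdots \\ x_ny_1 & x_ny_2 & \cdots & x_ny_m \end{pmatrix}$$

which is the so-called “outer” product that takes two vectors and produces a matrix.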

This means that X·X = X^T X, which is a number, whereas X X^T is actually a matrix (of size n x n). Although you don’t really have to worry about this stuff unless you are doing the calculations, I will try to use consistent notation, and you’ll have to get used to seeing these linear algebra notations as we go along.
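The difference between inner and outer products is easy to see in NumPy if column vectors are stored explicitly as n x 1 arrays (an illustrative choice; NumPy also has 1-D arrays, for which `np.dot` returns the inner product and `np.outer` the outer product). The example vectors are made up.

```python
import numpy as np

# Column vectors stored as explicit 3 x 1 arrays, mirroring the column convention in the text
X = np.array([[1.0], [2.0], [3.0]])
Y = np.array([[4.0], [5.0], [6.0]])

inner = X.T @ Y      # (1 x 3)(3 x 1) -> 1 x 1: the dot product
outer = X @ Y.T      # (3 x 1)(1 x 3) -> 3 x 3: entry (i, j) is x_i * y_j

print(inner, inner.shape)
print(outer, outer.shape)
```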


Finally, an interesting point here is to consider the generalization beyond lists of lists: it’s quite reasonable to define a matrix where each element of the matrix is actually a vector. This object is called a tensor. Unfortunately, as you can imagine, when we get to objects with three indices, there’s no simple convention like “rows” and “columns” that we can use to keep track of the structure of the objects. I will at various times in this book introduce objects with more than two indices, especially when dealing with sequence data. However, in those cases I won’t be able to use the linear algebra shorthand for operations such as addition, subtraction and multiplication, because we won’t be able to keep track of the indices. We’ll have to write out the sums explicitly when we get beyond two indices.

Matrices also have different types of multiplication: the matrix product produces a matrix, but there are also inner products and outer products that produce other objects. A related concept that we’ve already used is the “inverse” of a matrix. The inverse of a matrix A, written A^-1, is the matrix that, when multiplied by A, gives a matrix with 1s along the diagonal and 0s everywhere else (the so-called identity matrix, I):

$$AA^{-1} = I = \begin{pmatrix} 1 & 0 & \cdots & 0 \\ 0 & 1 & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & 1 \end{pmatrix}$$
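In NumPy, `np.linalg.inv` computes a matrix inverse. Applied to the Gaussian second-derivative matrix from the mathematical box earlier in this chapter (with hypothetical values n = 50 and σ = 2), it reproduces the diagonal inverse given there, and multiplying the two gives the identity matrix up to rounding.

```python
import numpy as np

# Gaussian second-derivative matrix at the maximum, for hypothetical n = 50 and sigma = 2
n, sigma = 50, 2.0
FI = np.array([[-n / sigma ** 2, 0.0],
               [0.0, -2 * n / sigma ** 2]])

FI_inv = np.linalg.inv(FI)
print(FI_inv)            # diagonal entries -sigma^2/n and -sigma^2/(2n)
print(FI @ FI_inv)       # numerically the 2 x 2 identity matrix
```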

Figure XA illustrates the idea of sampling lists of observations from a pool. Although this might all sound complicated, multivariate statistics is easy to understand because there’s a very straightforward, beautiful geometric interpretation of it. The idea is that we think of each component of the observation vector (say, each gene’s expression level in a specific cell type) as a “dimension.” So if we measure the expression levels of two genes in each cell type, we have two-dimensional data. If we measure three genes, then we have three-dimensional data. 24,000 genes, then we have … you get the idea. Of course, we won’t have an easy time making graphs of 24,000-dimensional space, so we’ll typically use 2- or 3-dimensional examples for illustrative purposes.


Figure – multivariate observations as vectors (panels: observations x1, x2, … plotted with gene expression levels, e.g. gene 1 and gene 3 levels, as axes; genotype (AA, Aa, aa) versus phenotype; DNA sequence positions, e.g. codon positions 1 and 3, with bases A, C, G, T)

In biology there are lots of other types of multivariate data, and a few of these are illustrated in Figure XB. For example, one might have observations of genotypes and phenotypes for a sample of individuals. Another ubiquitous example is DNA sequences: the letter at each position can be thought of as one of the dimensions. In this view, each of our genomes represents a 3-billion-dimensional vector sampled from the pool of the human population. In an even more useful representation, each position in a DNA (or protein) sequence can be represented as a 4- (or 20-) dimensional vector, and the human genome can be thought of as a 3 billion x 4 matrix of 1s and 0s. In these cases, the components of observations are not all numbers, but this should not stop us from using the geometrical interpretation that each observation is a vector in a high-dimensional space.
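One way to build the matrix of 1s and 0s described above, as a sketch: each position becomes a row with a single 1 in the column for its base. The column order A, C, G, T and the function name `one_hot` are assumptions, and the function does not handle ambiguous bases such as N.

```python
import numpy as np

BASES = "ACGT"   # assumed column order

def one_hot(seq):
    """Encode a DNA sequence as a len(seq) x 4 matrix of 0s and 1s (columns A, C, G, T)."""
    index = {b: i for i, b in enumerate(BASES)}
    matrix = np.zeros((len(seq), len(BASES)), dtype=int)
    for pos, base in enumerate(seq.upper()):
        matrix[pos, index[base]] = 1   # raises KeyError for letters other than A, C, G, T
    return matrix

M = one_hot("CAGT")   # a short made-up sequence
print(M)
```

Each row sums to 1, so a sequence of length L becomes an L x 4 matrix; a protein sequence would use 20 columns instead.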

A key generalization that becomes available in multivariate statistics is the idea of correlation. Although we will still assume that the observations are i.i.d., the dimensions are not necessarily independent. For example, in a multivariate Gaussian model for cell-type gene expression, the observation of a highly expressed gene X might make us more likely to observe a highly expressed gene Y. In the multivariate Gaussian model, the correlation between the dimensions is controlled by the off-diagonal elements of the covariance matrix, where each off-diagonal entry is the covariance between a pair of dimensions. Intuitively, an off-diagonal term of zero implies that there is no correlation between two dimensions. In a multivariate Gaussian model where all the dimensions are independent, the off-diagonal terms of the covariance matrix are all zero, so the covariance is said to be diagonal. A diagonal covariance gives a distribution whose axes line up with the coordinate axes; if, in addition, all the variances on the diagonal are equal, the distribution is symmetric, isotropic or, most confusingly, “spherical”.
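The simulated panels of the figure below were drawn in R with `mvrnorm` (from the MASS package) using four covariance matrices. A rough Python equivalent, assuming NumPy’s `Generator.multivariate_normal` as a stand-in for `mvrnorm`, draws 1000 points from each and compares the sample correlation with the correlation implied by the covariance matrix, Σ12/√(Σ11 Σ22).

```python
import numpy as np

rng = np.random.default_rng(0)   # fixed seed for reproducibility
mean = np.zeros(2)

# Covariance matrices of the simulated panels (all symmetric, so R's column-wise
# filling in matrix(c(...), 2, 2) gives the same matrices)
covariances = {
    "spherical": np.array([[1.0, 0.0], [0.0, 1.0]]),
    "diagonal": np.array([[4.0, 0.0], [0.0, 1.0]]),
    "positive": np.array([[1.0, 0.8], [0.8, 1.0]]),
    "negative": np.array([[0.2, -0.6], [-0.6, 3.0]]),
}

sample_corr = {}
for name, cov in covariances.items():
    samples = rng.multivariate_normal(mean, cov, size=1000)   # 1000 x 2 array of (X1, X2)
    sample_corr[name] = np.corrcoef(samples[:, 0], samples[:, 1])[0, 1]
    implied = cov[0, 1] / np.sqrt(cov[0, 0] * cov[1, 1])      # correlation implied by Sigma
    print(f"{name:9s} sample r = {sample_corr[name]:+.2f}   implied r = {implied:+.2f}")
```

Only the off-diagonal entries produce a correlation; the diagonal matrix with unequal variances stretches the cloud of points along X1 without tilting it.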

Figure – multivariate Gaussians and correlation. Left: CD8 antigen alpha chain versus CD8 antigen beta chain (expression levels, axes from 8 to 4096). Right: four panels of X1 versus X2 for samples generated with mvrnorm(n = 1000, rep(0, 2), Sigma), where Sigma is matrix(c(1, 0, 0, 1), 2, 2), matrix(c(4, 0, 0, 1), 2, 2), matrix(c(1, 0.8, 0.8, 1), 2, 2) or matrix(c(0.2, -0.6, -0.6, 3), 2, 2).

The panel on the left shows real gene expression data for the CD8 antigen (from ImmGen). The panel on the right shows four parameterizations of the multivariate Gaussian in two dimensions. In each case the mean is at (0,0). Notice that none of the simple Gaussian models fit the observed CD8 expression data very well.

7. MLEs for multivariate distributions

The ideas that we’ve already introduced about optimizing objective functions can be transferred directly from the univariate case to the multivariate case. The only minor technical complication will arise because multivariate distributions have more parameters, and therefore the set of equations to solve can be larger and more complicated.

To illustrate the kind of mathematical tricks that we’ll need to use, we’ll consider two examples of multivariate distributions that are very commonly used. The first is the multinomial distribution, which is the multivariate generalization of the binomial. This distribution describes the numbers of times we observe events from multiple categories. For example, the traditional use of this type of distribution would be to describe the number of times each face of a die (1 through 6) turned up. In bioinformatics it is often used to describe the numbers of each of the bases in DNA (A, C, G, T).

Let X be the vector of counts of the four bases, and let f be a vector of probabilities of observing each base, such that $\sum_i f_i = 1$, where i indexes the four bases. The multinomial probability for the counts of each base in DNA is then given by

$$MN(X|f) = \frac{(X_A + X_C + X_G + X_T)!}{X_A!\,X_C!\,X_G!\,X_T!}\, f_A^{X_A} f_C^{X_C} f_G^{X_G} f_T^{X_T} = \frac{\left(\sum_{i\in\{A,C,G,T\}} X_i\right)!}{\prod_{i\in\{A,C,G,T\}} X_i!}\prod_{i\in\{A,C,G,T\}} f_i^{X_i}$$


The term in front (with the factorials over the product) has to do with the number of “ways” that you could have observed, say, X = (535, 462, 433, 506) for A, C, G and T: it counts the distinct orderings of the bases that give those totals. For our purposes (to derive the MLEs) we don’t need to worry about this term because it doesn’t depend on the parameters, and when we take the log and then the derivative, it will disappear.
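Because the multinomial coefficient does not involve f, it cancels whenever two parameter values are compared on the same data. The following sketch (Python, standard library only) checks this for the counts X = (535, 462, 433, 506) used above, computing log(x!) as math.lgamma(x + 1) to avoid huge factorials. The two parameter vectors f1 and f2 are arbitrary illustrative choices, and the function names are hypothetical.

```python
import math

BASES = ("A", "C", "G", "T")


def log_multinomial_coefficient(counts):
    """log[(sum_i X_i)! / prod_i X_i!], using lgamma(x + 1) = log(x!)."""
    total = sum(counts[b] for b in BASES)
    return math.lgamma(total + 1) - sum(math.lgamma(counts[b] + 1) for b in BASES)


def log_multinomial(counts, f):
    """log MN(X | f): the log coefficient plus sum_i X_i log f_i."""
    return log_multinomial_coefficient(counts) + sum(
        counts[b] * math.log(f[b]) for b in BASES
    )


# Counts from the example in the text: X = (535, 462, 433, 506).
X = {"A": 535, "C": 462, "G": 433, "T": 506}

# Two arbitrary, hypothetical parameter vectors, each summing to 1.
f1 = {"A": 0.25, "C": 0.25, "G": 0.25, "T": 0.25}
f2 = {"A": 0.30, "C": 0.20, "G": 0.20, "T": 0.30}

# The coefficient is the same for f1 and f2, so it cancels in the difference.
full_difference = log_multinomial(X, f1) - log_multinomial(X, f2)
kernel_difference = sum(X[b] * (math.log(f1[b]) - math.log(f2[b])) for b in BASES)
print(full_difference, kernel_difference)
```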

$$\log L = \log \frac{\left(\sum_{i\in\{A,C,G,T\}} X_i\right)!}{\prod_{i\in\{A,C,G,T\}} X_i!} + \log \prod_{i\in\{A,C,G,T\}} f_i^{X_i}$$

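The parameters are the fs, and the first term does not involve them. Since the log of a product is the sum of the logs, the equation to solve for each parameter is

$$\frac{\partial \log L}{\partial f_A} = \frac{\partial}{\partial f_A}\sum_{i\in\{A,C,G,T\}} X_i \log f_i = 0$$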

Differentiating directly, we get

$$\frac{\partial \log L}{\partial f_A} = \frac{X_A}{f_A} = 0$$

Although this equation looks easy, there is one tricky issue: the sum of the parameters has to be 1. Since $X_A/f_A$ is positive for any positive $f_A$, the equation has no finite solution; the log-likelihood keeps increasing as each parameter grows, so an unconstrained optimization would set each parameter to infinity, in which case they would not sum to one. The optimization (taking derivatives and setting them to zero) doesn’t know that we’re working on a probabilistic model; it’s just a straight optimization. In order to enforce that the parameters sum to one, we need to add a constraint to the optimization. This is most easily done through the method of Lagrange multipliers, where we rewrite the constraint as an expression that equals zero, e.g., $1 - \sum_i f_i = 0$, multiply it by a constant, the so-called Lagrange multiplier $\lambda$, and add it to the function we are trying to optimize:

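$$\frac{\partial \log L}{\partial f_A} = \frac{\partial}{\partial f_A}\left[\sum_{i\in\{A,C,G,T\}} X_i \log f_i + \lambda\left(1 - \sum_{i\in\{A,C,G,T\}} f_i\right)\right] = 0$$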

Taking the derivative gives

$$\frac{\partial \log L}{\partial f_A} = \frac{X_A}{f_A} - \lambda = 0$$

which we can solve to give

$$(f_A)_{MLE} = \frac{X_A}{\lambda}$$

Here I have used MLE to indicate that we now have the maximum likelihood estimator for the parameter $f_A$. Of course, this is not very useful because it is expressed in terms of the Lagrange multiplier. To figure out what the actual MLEs are, we have to use the constraint $1 - \sum_i f_i = 0$. Since we need the derivatives with respect to all the parameters to be 0, we’ll get a similar equation for $f_C$, $f_G$ and $f_T$. Substituting all four into the constraint gives us:


$$\sum_{i\in\{A,C,G,T\}} (f_i)_{MLE} = \frac{\sum_{i\in\{A,C,G,T\}} X_i}{\lambda} = 1$$

or

$$\lambda = \sum_{i\in\{A,C,G,T\}} X_i$$

which says that $\lambda$ is just the total number of bases we observed. So the MLE for the parameter $f_A$ is just

$$(f_A)_{MLE} = \frac{X_A}{\sum_{i\in\{A,C,G,T\}} X_i}$$

This is the intuitive result that the estimate for the probability of observing A is just the fraction of bases that were actually A.
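A quick numerical check of this result, as a sketch under stated assumptions (Python, standard library only, the counts from the earlier example, hypothetical helper names): the estimates are the base fractions, each one satisfies the stationarity condition $X_i/f_i - \lambda = 0$ with $\lambda$ equal to the total count, and shifting probability between two bases while keeping the sum at one lowers the log-likelihood.

```python
import math

BASES = ("A", "C", "G", "T")


def multinomial_mle(counts):
    """MLE from the derivation: f_i = X_i / sum_j X_j."""
    total = sum(counts[b] for b in BASES)
    return {b: counts[b] / total for b in BASES}


def log_likelihood_kernel(counts, f):
    """sum_i X_i log f_i (the multinomial coefficient is omitted: it has no f)."""
    return sum(counts[b] * math.log(f[b]) for b in BASES)


X = {"A": 535, "C": 462, "G": 433, "T": 506}
f_hat = multinomial_mle(X)
lam = sum(X.values())  # lambda = total number of bases observed

# Stationarity of the Lagrangian: X_i / f_i - lambda should be 0 for every base.
for b in BASES:
    print(b, round(f_hat[b], 4), X[b] / f_hat[b] - lam)

# Shift a little probability from A to C: the sum stays 1, the likelihood drops.
eps = 0.01
f_shifted = dict(f_hat, A=f_hat["A"] - eps, C=f_hat["C"] + eps)
print(log_likelihood_kernel(X, f_hat) > log_likelihood_kernel(X, f_shifted))
```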

A more complicated example is to find the MLEs for the multivariate Gaussian. We’ll start by trying to find the MLEs for the mean. As before, we can write the log-likelihood

$$\log L = \log P(X|\theta) = \log \prod_{i=1}^{n} N(X_i|\mu,\Sigma) = \sum_{i=1}^{n}\left[-\frac{1}{2}\log\left[|\Sigma|(2\pi)^d\right] - \frac{1}{2}(\mu - X_i)^T\Sigma^{-1}(\mu - X_i)\right]$$

Since we are working in the multivariate case, we now need to take a derivative with respect to a vector $\mu$. One way to do this is to simply take the derivative with respect to each component of the vector. The first term in the sum, $-\frac{1}{2}\log[|\Sigma|(2\pi)^d]$, does not depend on $\mu$ and drops out, so for the first component of the vector we could write

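$$\frac{\partial \log L}{\partial \mu_1} = \sum_{i=1}^{n} \frac{\partial}{\partial \mu_1}\left[-\frac{1}{2}\sum_{j=1}^{d}(X_{ij} - \mu_j)\sum_{k=1}^{d}(\Sigma^{-1})_{jk}(X_{ik} - \mu_k)\right] = 0$$

$$\frac{\partial \log L}{\partial \mu_1} = \sum_{i=1}^{n} \frac{\partial}{\partial \mu_1}\left[-\frac{1}{2}\sum_{j=1}^{d}\sum_{k=1}^{d}(X_{ij} - \mu_j)(\Sigma^{-1})_{jk}(X_{ik} - \mu_k)\right] = 0$$

$$\frac{\partial \log L}{\partial \mu_1} = \sum_{i=1}^{n} \frac{\partial}{\partial \mu_1}\left[-\frac{1}{2}\sum_{j=1}^{d}\sum_{k=1}^{d}(\Sigma^{-1})_{jk}(X_{ij}X_{ik} - \mu_j X_{ik} - \mu_k X_{ij} + \mu_j\mu_k)\right] = 0$$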

Here I have tried to write out the matrix and vector multiplications explicitly. Since the derivative will be zero for all terms that don’t depend on the first component of the mean, we can drop the $X_{ij}X_{ik}$ terms and every term with both $j \geq 2$ and $k \geq 2$, which leaves


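$$\frac{\partial \log L}{\partial \mu_1} = -\frac{1}{2}\sum_{i=1}^{n}\frac{\partial}{\partial \mu_1}\Big[(\Sigma^{-1})_{11}(-\mu_1 X_{i1} - \mu_1 X_{i1} + \mu_1\mu_1) + \sum_{j=2}^{d}(\Sigma^{-1})_{j1}(-\mu_j X_{i1} - \mu_1 X_{ij} + \mu_j\mu_1) + \sum_{k=2}^{d}(\Sigma^{-1})_{1k}(-\mu_1 X_{ik} - \mu_k X_{i1} + \mu_1\mu_k)\Big] = 0$$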

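Because the covariance matrix, and therefore its inverse, is symmetric, $(\Sigma^{-1})_{1k} = (\Sigma^{-1})_{k1}$; renaming the index k to j shows that the last two sums are actually the same: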

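$$\frac{\partial \log L}{\partial \mu_1} = -\frac{1}{2}\sum_{i=1}^{n}\frac{\partial}{\partial \mu_1}\Big[(\Sigma^{-1})_{11}(-\mu_1 X_{i1} - \mu_1 X_{i1} + \mu_1\mu_1) + 2\sum_{j=2}^{d}(\Sigma^{-1})_{j1}(-\mu_j X_{i1} - \mu_1 X_{ij} + \mu_j\mu_1)\Big] = 0$$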

Differentiating the terms that do depend on the first component of the mean gives

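$$\frac{\partial \log L}{\partial \mu_1} = -\frac{1}{2}\sum_{i=1}^{n}\Big[(\Sigma^{-1})_{11}(-2X_{i1} + 2\mu_1) + 2\sum_{j=2}^{d}(\Sigma^{-1})_{j1}(-X_{ij} + \mu_j)\Big] = 0$$

$$\frac{\partial \log L}{\partial \mu_1} = -\sum_{i=1}^{n}\Big[(\Sigma^{-1})_{11}(-X_{i1} + \mu_1) + \sum_{j=2}^{d}(\Sigma^{-1})_{j1}(-X_{ij} + \mu_j)\Big] = 0$$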

Merging the first term back into the sum, we have

$$\frac{\partial \log L}{\partial \mu_1} = -\sum_{i=1}^{n}\left[\sum_{j=1}^{d}(-X_{ij} + \mu_j)(\Sigma^{-1})_{j1}\right] = -\sum_{i=1}^{n}(\mu - X_i)^T(\Sigma^{-1})_1 = 0$$

Here I have abused the notation somewhat to write the first column of the inverse covariance matrix as a vector, $(\Sigma^{-1})_1$.
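A finite-difference check is a cheap way to catch sign or index slips in a derivation like this one. The sketch below (Python, standard library only, d = 2) compares a central difference of the log-likelihood in $\mu_1$ with $-\sum_i (\mu - X_i)^T(\Sigma^{-1})_1$. The data, covariance and mean are hypothetical values chosen for illustration, and the helper names are made up for this example.

```python
import math


def inv2(m):
    """Inverse of a 2x2 matrix [[a, b], [c, d]]."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def log_likelihood(mu, cov, data):
    """Log-likelihood of a 2-D Gaussian N(mu, cov) for a list of points."""
    s = inv2(cov)
    det = cov[0][0] * cov[1][1] - cov[0][1] * cov[1][0]
    total = 0.0
    for x in data:
        v = [mu[0] - x[0], mu[1] - x[1]]  # (mu - X_i)
        quad = sum(v[j] * s[j][k] * v[k] for j in range(2) for k in range(2))
        total += -0.5 * math.log(det * (2 * math.pi) ** 2) - 0.5 * quad
    return total


def analytic_dmu1(mu, cov, data):
    """-sum_i (mu - X_i)^T (Sigma^{-1})_1, with (Sigma^{-1})_1 the first column."""
    s = inv2(cov)
    return -sum((mu[0] - x[0]) * s[0][0] + (mu[1] - x[1]) * s[1][0] for x in data)


# Hypothetical data and parameter values, for illustration only.
data = [(0.5, 1.2), (-0.3, 0.4), (1.1, 2.0), (0.2, -0.5)]
cov = [[1.0, 0.8], [0.8, 2.0]]
mu = [0.1, 0.3]

h = 1e-6
numeric = (log_likelihood([mu[0] + h, mu[1]], cov, data)
           - log_likelihood([mu[0] - h, mu[1]], cov, data)) / (2 * h)
print(numeric, analytic_dmu1(mu, cov, data))
```

Because this covariance has a non-zero off-diagonal entry, the derivative with respect to $\mu_1$ also depends on $\mu_2$, which is the coupling between the components of the mean that the derivation runs into.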

[Alternative derivation]

Since the derivative will be zero for all terms that don’t depend on the first component of the mean, we have

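$$\frac{\partial \log L}{\partial \mu_1} = -\frac{1}{2}\sum_{i=1}^{n}\left[-\sum_{k=1}^{d}(\Sigma^{-1})_{1k}X_{ik} - \sum_{j=1}^{d}(\Sigma^{-1})_{j1}X_{ij} + \sum_{k=1}^{d}(\Sigma^{-1})_{1k}\mu_k + \sum_{j=1}^{d}(\Sigma^{-1})_{j1}\mu_j\right] = 0$$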


Notice that all of these sums run over the same index range, so after renaming j to k we can factor them and write

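$$\frac{\partial \log L}{\partial \mu_1} = -\frac{1}{2}\sum_{i=1}^{n}\left[-\sum_{k=1}^{d}\left[(\Sigma^{-1})_{1k} + (\Sigma^{-1})_{k1}\right]X_{ik} + \sum_{k=1}^{d}\left[(\Sigma^{-1})_{1k} + (\Sigma^{-1})_{k1}\right]\mu_k\right] = 0$$

$$\frac{\partial \log L}{\partial \mu_1} = -\frac{1}{2}\sum_{i=1}^{n}\left[\sum_{k=1}^{d}(-X_{ik} + \mu_k)\left[(\Sigma^{-1})_{1k} + (\Sigma^{-1})_{k1}\right]\right] = 0$$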

Because the covariance matrix (and therefore its inverse) is symmetric, $(\Sigma^{-1})_{1k} = (\Sigma^{-1})_{k1}$, and we have

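$$\frac{\partial \log L}{\partial \mu_1} = -\frac{1}{2}\sum_{i=1}^{n}\left[\sum_{k=1}^{d} 2(-X_{ik} + \mu_k)(\Sigma^{-1})_{1k}\right] = -\sum_{i=1}^{n}(\mu - X_i)^T(\Sigma^{-1})_1 = 0 \quad\Longrightarrow\quad \sum_{i=1}^{n}(\mu - X_i)^T(\Sigma^{-1})_1 = 0$$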

Here I have abused the notation somewhat to write the first column of the inverse covariance matrix as a vector, $(\Sigma^{-1})_1$, and multiplied both sides by -1, so as not to bother with the negative sign. Notice the problem: although we’re trying to find the MLE for the first component of the mean only, we have an equation that includes all the components of the mean through the off-diagonal elements of the inverse covariance matrix. This means we have a single equation with d unknowns, which cannot be solved uniquely. However, when we try to find the maximum likelihood parameters, we have to set *all* the derivatives with respect to all the parameters to zero, and we will end up with an equation like this for each component of the mean. So we will actually have a set of d equations, one for each of the d components of the mean, each involving a different column of the inverse covariance matrix. We’ll get a set of equations like

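$$-\frac{\partial \log L}{\partial \mu_2} = \sum_{i=1}^{n}(\mu - X_i)^T(\Sigma^{-1})_2 = 0$$

$$\vdots$$

$$-\frac{\partial \log L}{\partial \mu_d} = \sum_{i=1}^{n}(\mu - X_i)^T(\Sigma^{-1})_d = 0$$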

We can write the set of equations as

$$-\frac{\partial \log L}{\partial \mu} = \sum_{i=1}^{n}(\mu - X_i)^T\Sigma^{-1} = 0$$

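Here the 0 is now the vector of zeros for all the components of the mean. To solve this equation, we note that the covariance matrix does not depend on i, so we can simply multiply each term of the sum, and the 0 vector, on the right by the covariance matrix. Since $\Sigma^{-1}\Sigma$ is the identity matrix and the zero vector times any matrix is still the zero vector, we get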


$$\sum_{i=1}^{n}(\mu - X_i)^T\Sigma^{-1}\Sigma = \sum_{i=1}^{n}(\mu - X_i)^T = 0$$

The equation can be solved to give

$$\sum_{i=1}^{n} X_i^T = n\mu^T$$

Or the familiar

$$\mu^T = \frac{1}{n}\sum_{i=1}^{n} X_i^T$$

This says simply that the MLE for each component of the mean is the average of the observations in that dimension.
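The conclusion can also be checked numerically. This sketch (Python, standard library only, d = 2, hypothetical data and covariance values, made-up helper names) evaluates the gradient $-\sum_i (\mu - X_i)^T\Sigma^{-1}$ at the per-dimension averages and finds it to be zero up to rounding for two different positive-definite covariances, since $\Sigma^{-1}$ drops out of the solution.

```python
def inv2(m):
    """Inverse of a 2x2 matrix [[a, b], [c, d]]."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def gradient_mu(mu, cov, data):
    """d log L / d mu = -sum_i (mu - X_i)^T Sigma^{-1}, as a list (row vector)."""
    s = inv2(cov)
    grad = [0.0, 0.0]
    for x in data:
        v = [mu[0] - x[0], mu[1] - x[1]]
        for k in range(2):
            grad[k] -= sum(v[j] * s[j][k] for j in range(2))
    return grad


def per_dimension_average(data):
    """The candidate MLE: the average of the observations in each dimension."""
    n = len(data)
    return [sum(x[j] for x in data) / n for j in range(2)]


# Hypothetical data and covariances, for illustration only.
data = [(0.5, 1.2), (-0.3, 0.4), (1.1, 2.0), (0.2, -0.5)]
mu_hat = per_dimension_average(data)

for cov in ([[1.0, 0.8], [0.8, 2.0]], [[2.0, -0.5], [-0.5, 1.0]]):
    print(mu_hat, gradient_mu(mu_hat, cov, data))  # gradient is ~0 both times
```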

It turns out that there is a much faster way of solving these types of problems using so-called vector (or matrix) calculus. Instead of working on derivatives of each component of the mean individually, we will use clever linear algebra notation to write all of the equations in one line by using the following identity:

$$\frac{\partial}{\partial x}\left[x^T A x\right] = x^T(A + A^T)$$

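Here A is any square matrix, and the derivative is now a derivative with respect to a whole vector, x (written as a row vector). Using this trick with $x = \mu - X_i$, whose derivative with respect to $\mu$ is the identity, we can proceed directly, remembering that for a symmetric matrix like the inverse covariance, $A = A^T$: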

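$$\frac{\partial \log L}{\partial \mu} = \frac{\partial}{\partial \mu}\sum_{i=1}^{n} -\frac{1}{2}(\mu - X_i)^T\Sigma^{-1}(\mu - X_i) = \sum_{i=1}^{n} -\frac{1}{2}(\mu - X_i)^T\, 2\Sigma^{-1} = -\sum_{i=1}^{n}(\mu - X_i)^T\Sigma^{-1} = 0$$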
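The identity is easy to check with finite differences. In the sketch below (Python, standard library only) A is a hypothetical non-symmetric 3×3 matrix, chosen so that $x^T(A + A^T)$ visibly differs from $2x^TA$; the helper names are made up for the example.

```python
def quad_form(x, A):
    """x^T A x for a vector x (list) and a square matrix A (list of rows)."""
    n = len(x)
    return sum(x[j] * A[j][k] * x[k] for j in range(n) for k in range(n))


def identity_gradient(x, A):
    """Right-hand side of the identity: the row vector x^T (A + A^T)."""
    n = len(x)
    return [sum(x[j] * (A[j][k] + A[k][j]) for j in range(n)) for k in range(n)]


def numeric_gradient(f, x, h=1e-6):
    """Central finite differences of a scalar function f at the point x."""
    grad = []
    for k in range(len(x)):
        up = list(x)
        down = list(x)
        up[k] += h
        down[k] -= h
        grad.append((f(up) - f(down)) / (2 * h))
    return grad


# Hypothetical non-symmetric matrix, so x^T (A + A^T) differs from 2 x^T A.
A = [[1.0, 2.0, 0.0], [-1.0, 3.0, 0.5], [4.0, 0.0, 2.0]]
x = [0.3, -1.2, 0.7]

print(identity_gradient(x, A))
print(numeric_gradient(lambda v: quad_form(v, A), x))
```

For a symmetric A, such as $\Sigma^{-1}$, the identity reduces to $2x^TA$, and that factor of 2 is what cancels the 1/2 in the Gaussian exponent.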

Similar matrix calculus tricks can be used to find the MLEs for (all the components of) the covariance matrix. If you know that $\frac{\partial}{\partial A}\log|A| = (A^{-1})^T$ and $\frac{\partial}{\partial A}\left[x^T A x\right] = x x^T$, where again A is a matrix and x is a vector, it’s not too hard to find the MLEs for the covariance matrix. In general, this matrix calculus is not something that biologists (or even bioinformaticians) will be familiar with, so if you ever have to differentiate your likelihood with respect to vectors or matrices, you’ll probably have to look up the necessary identities.
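Both identities quoted above can be checked numerically before relying on them. The sketch below (Python, standard library only, 2×2 case, hypothetical values for A and x, made-up helper names) compares central finite differences, taken entry by entry with respect to A, against $(A^{-1})^T$ and $xx^T$. It only verifies the identities; it does not carry out the covariance derivation, which is left as an exercise.

```python
import math


def det2(A):
    """Determinant of a 2x2 matrix."""
    return A[0][0] * A[1][1] - A[0][1] * A[1][0]


def inv2(A):
    """Inverse of a 2x2 matrix."""
    (a, b), (c, d) = A
    det = det2(A)
    return [[d / det, -b / det], [-c / det, a / det]]


def numeric_matrix_gradient(f, A, h=1e-6):
    """Entry (j, k) is the central-difference derivative of f w.r.t. A[j][k]."""
    grad = [[0.0, 0.0], [0.0, 0.0]]
    for j in range(2):
        for k in range(2):
            up = [row[:] for row in A]
            down = [row[:] for row in A]
            up[j][k] += h
            down[j][k] -= h
            grad[j][k] = (f(up) - f(down)) / (2 * h)
    return grad


def log_det(M):
    """log|M|, assuming det(M) > 0."""
    return math.log(det2(M))


# Hypothetical values: A is non-symmetric with a positive determinant.
A = [[2.0, 0.5], [-0.3, 1.5]]
x = [0.4, -1.1]


def quad(M):
    """x^T M x for the fixed vector x above."""
    return sum(x[j] * M[j][k] * x[k] for j in range(2) for k in range(2))


# Identity 1: d/dA log|A| = (A^{-1})^T
A_inv = inv2(A)
exact_logdet = [[A_inv[k][j] for k in range(2)] for j in range(2)]  # transpose
numeric_logdet = numeric_matrix_gradient(log_det, A)

# Identity 2: d/dA [x^T A x] = x x^T
exact_quad = [[x[j] * x[k] for k in range(2)] for j in range(2)]
numeric_quad = numeric_matrix_gradient(quad, A)

print(exact_logdet, numeric_logdet)
print(exact_quad, numeric_quad)
```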

8. Hypothesis testing revisited – the problems with high dimensions.

Since we’ve just agreed that what biologists are usually doing is testing hypotheses, we usually think much more about our hypothesis tests than about our objective functions. Indeed, as we’ve seen already, it’s even possible to do hypothesis testing without specifying parameters or objective functions (non-parametric tests).


Although I said that statistics has a straightforward generalization to high dimensions, in practice the most powerful idea from hypothesis testing, namely the P-value, does not generalize very well. This has to do with the key idea that the P-value is the probability of observing something as extreme *or more.* In high-dimensional space, it’s not clear which direction the “or more” is in. For example, if you observed average expression levels of (7.32, 4.67, 19.3) for 3 genes and you wanted to know whether these were the same as the genes’ average expression levels in another set of experiments (8.21, 5.49, 5.37), you could try to form a 3-dimensional test statistic, but it’s not clear how to sum up the values of the test statistic that are more extreme than the ones you observed: you have to decide in which direction(s) to do the sum. Even if you decide in which direction to sum each dimension, performing these multidimensional sums becomes practically difficult as the number of dimensions grows.

The simplest way to deal with hypothesis testing in multivariate statistics is just to do a univariate test on each dimension, and pretend they are independent. If any dimension is significant, then (after correcting for the number of tests) the multivariate test must also be significant. In fact, that’s what we were doing in the previous chapter when we used Bonferroni to correct for the number of tests in the gene set enrichment analysis. Even if the tests are not independent, this treatment is conservative, and in practice, we often want to know in which dimension the data differed. In the case of gene set enrichment analysis, we don’t really care whether ‘something’ is enriched; we want to know exactly which category is enriched.
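The per-dimension strategy with a Bonferroni correction fits in a few lines of Python. In this sketch the P-values are hypothetical, standing in for one univariate test per dimension; the overall null is rejected if any dimension passes the corrected threshold α/d, and the function (a made-up helper) also reports which dimensions did, which is usually what we want to know.

```python
def bonferroni_per_dimension(pvalues, alpha=0.05):
    """Univariate test per dimension, Bonferroni-corrected for d = len(pvalues).

    Returns (reject_overall, significant_dims): the overall null is rejected
    if any dimension has P < alpha / d, and significant_dims lists which ones.
    """
    d = len(pvalues)
    threshold = alpha / d
    significant = [j for j, p in enumerate(pvalues) if p < threshold]
    return len(significant) > 0, significant


# Hypothetical P-values, one per dimension (e.g., one per gene set category).
pvals = [0.20, 0.004, 0.03, 0.51, 0.012]
print(bonferroni_per_dimension(pvals))  # (True, [1]): only 0.004 < 0.05 / 5
```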

However, there are some cases where we might not want to simply treat all the dimensions independently. A good example of this might be a time-course of measurements or measurements that are related in some natural way, like the length and width of an iris petal. If you want to test whether one sample of iris petals is bigger than another, you probably don’t want to test whether the length is bigger and then whether the width is bigger. You want to combine both into one test. Another example might be if you’ve made pairs of observations and you want to test if their ratios are different, but the data include a lot of zeros, so you can’t actually form the ratios. One possibility is to create a new test statistic and generate some type of empirical null distribution (as described in the first chapter). However, another powerful approach is to formulate a truly multivariate hypothesis test: a likelihood ratio test.

Formally:

• The observations (or data) are $X_1, X_2, \ldots, X_N$, which we will write as a vector X.

• $H_0$ is the null hypothesis, and $H_1$ is another hypothesis. The two hypotheses make specific claims about the parameters in each model. For example, $H_0$ might state that $\theta = \varphi$, some particular values of the parameters, while $H_1$ might state that $\theta \neq \varphi$ (i.e., that the parameters are anything but $\varphi$).

• The likelihood ratio test statistic is $-2\log\left[\frac{p(X|\theta = \varphi)}{p(X|\theta \neq \varphi)}\right]$, where any parameters that are not specified by the hypotheses (i.e., free parameters) have been set to their maximum likelihood values. (This means that in order to perform a likelihood ratio test, it is necessary to be able to obtain maximum likelihood estimates, either numerically or analytically.)


• Under the null hypothesis, the likelihood ratio test statistic is (approximately, for large samples) chi-square distributed, with degrees of freedom equal to the difference in the number of free parameters between the two hypotheses.

Example of LRT for multinomial: GC content in genomes

The idea of the likelihood ratio test is that when two nested hypotheses (or models) describe the same data using different numbers of parameters, the one with more free parameters will always achieve a likelihood at least as high, because it can fit the data better. However, the amazing result is that the improvement in fit that is due simply to chance is predicted by the chi-square distribution (which takes only non-negative values). If the model with more free parameters improves the fit by more than expected by chance, then we should accept that model over the simpler one.
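As a sketch of how such a test might look for the GC-content example named in the heading, the code below (Python, standard library only) compares two multinomial models of base counts. The specific hypotheses are assumptions made for illustration: under H0 the genome is described by a single GC-content parameter g, with $f_C = f_G = g/2$ and $f_A = f_T = (1-g)/2$ (1 free parameter); under H1 all four base probabilities are free (3 free parameters, since they sum to one). The counts reuse X = (535, 462, 433, 506) from earlier in the chapter, and the function names are hypothetical. With 3 − 1 = 2 degrees of freedom the chi-square upper tail has the closed form $e^{-x/2}$, so no statistics library is needed; other degrees of freedom would require a general chi-square distribution function.

```python
import math

BASES = ("A", "C", "G", "T")


def log_lik(counts, f):
    """Multinomial log-likelihood without the coefficient, which cancels in the ratio."""
    return sum(counts[b] * math.log(f[b]) for b in BASES)


def gc_content_lrt(counts):
    """Likelihood ratio test of two hypothetical models for base counts.

    H0: f_C = f_G = g / 2 and f_A = f_T = (1 - g) / 2   (1 free parameter)
    H1: f_A, f_C, f_G, f_T free but summing to 1        (3 free parameters)
    Free parameters in each model are set to their maximum likelihood values.
    """
    n = sum(counts[b] for b in BASES)
    f1 = {b: counts[b] / n for b in BASES}        # MLE under H1
    g = (counts["C"] + counts["G"]) / n            # MLE of GC content under H0
    f0 = {"A": (1 - g) / 2, "C": g / 2, "G": g / 2, "T": (1 - g) / 2}
    statistic = -2 * (log_lik(counts, f0) - log_lik(counts, f1))
    df = 3 - 1
    # Upper tail of chi-square with 2 degrees of freedom: P(>x) = exp(-x / 2).
    p_value = math.exp(-statistic / 2)
    return statistic, df, p_value


X = {"A": 535, "C": 462, "G": 433, "T": 506}
statistic, df, p_value = gc_content_lrt(X)
print(f"LRT statistic = {statistic:.3f}, df = {df}, P = {p_value:.3f}")
```

A large statistic (small P-value) would favor giving each base its own probability; a small one means that a single GC-content parameter explains the counts about as well as the fuller model.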

The likelihood ratio test is an example of a class of techniques that are widely used in machine learning to decide if adding more parameters to make a more complex model is “worth it” or if it is “overfitting” the data with more parameters than are really needed. We will see other examples of techniques in this spirit later in this book.

Exercises:

1. What is the most probable value under a univariate Gaussian distribution? What is its probability?

2. Use the joint probability rule to argue that a multivariate Gaussian with diagonal covariance is nothing but the product of univariate Gaussians.

3. Show that the average is also the MLE for the parameter of the Poisson distribution. Explain why this is consistent with what I said about the average of the Gaussian distribution in Chapter 1.

4. Fill in the components of the vectors and matrices for the part of the multivariate Gaussian distribution:

$$\frac{1}{2}(\mu - X_i)^T\Sigma^{-1}(\mu - X_i) = \frac{1}{2}\begin{bmatrix} & \cdots & \end{bmatrix}\begin{bmatrix} & & \\ & \ddots & \\ & & \end{bmatrix}\begin{bmatrix} \\ \vdots \\ \ \end{bmatrix}$$

5. Derive the MLE for the covariance matrix of the multivariate Gaussian (use the matrix calculus tricks I mentioned in the text).

6. Why did we need Lagrange multipliers for the multinomial MLEs, but not for the Gaussian MLEs?