AMS 572 Class Notes - Department of Applied Mathematics


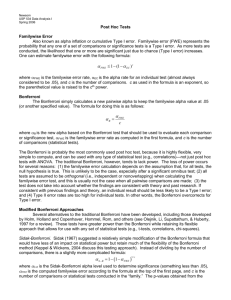

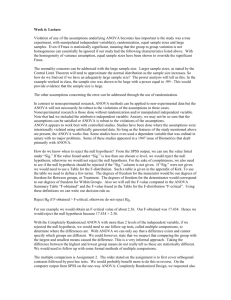

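AMS 572 Class Notes

Chapter 12 Analysis of Variance (ANOVA)

One-way ANOVA (fixed factors)

* Goal: compare the means from $a$ ($a \ge 2$) different populations.
* It is an extension of the pooled-variance t-test.
* Assumptions: (i) equal (unknown) population variances $\sigma_1^2 = \sigma_2^2 = \cdots = \sigma_a^2 = \sigma^2$; (ii) normal populations; (iii) independent samples.

$H_0: \mu_1 = \mu_2 = \cdots = \mu_a$ versus $H_a$: these $\mu_i$'s are not all equal.

Assumptions: $a$ populations, $N(\mu_i, \sigma^2)$, $i = 1, 2, \ldots, a$; $\sigma^2$ is unknown.

Samples: $a$ independent samples.

Data:

| Population | 1 | $\cdots$ | $i$ | $\cdots$ | $a$ |
|---|---|---|---|---|---|
| Sample | $Y_{11}, Y_{12}, \ldots, Y_{1n_1}$ | $\cdots$ | $Y_{i1}, Y_{i2}, \ldots, Y_{in_i}$ | $\cdots$ | $Y_{a1}, Y_{a2}, \ldots, Y_{an_a}$ |
| Sample size | $n_1$ | | $n_i$ | | $n_a$ |
| Sample mean | $\bar Y_1$ | | $\bar Y_i$ | | $\bar Y_a$ |

Balanced design: $n_i \equiv n$. Unbalanced design: otherwise.

Derivation of the test:
(1) Pivotal quantity (PQ) method: the test can be derived this way.
(2) Union-intersection method. This is the best method for this type of test, as for other tests related to regression analysis. Please see the AMS 570/571 textbook, and also the book by G.A.F. Seber, Linear Regression Analysis, published by John Wiley, for details.
(3) LRT (likelihood ratio test).

Test statistic:
$$F_0 = \frac{MSA}{MSE} = \frac{\sum_{i=1}^{a} n_i(\bar y_i - \bar y)^2/(a-1)}{\sum_{i=1}^{a}\sum_{j=1}^{n_i}(y_{ij} - \bar y_i)^2/(N-a)} \overset{H_0}{\sim} F_{a-1,\,N-a}$$
Total sample size $N = \sum_{i=1}^{a} n_i$. Sample means $\bar Y_1, \ldots, \bar Y_i, \ldots, \bar Y_a$; grand mean $\bar Y$.

$Y_{ij} \overset{iid}{\sim} N(\mu_i, \sigma^2)$, $i = 1, \ldots, a$, $j = 1, \ldots, n_i$.

Balanced design ($n_i = n$, so $N = an$): under $H_0$, $Y_{ij} \overset{iid}{\sim} N(\mu, \sigma^2)$, so $\bar Y_i \sim N(\mu, \sigma^2/n)$ and
$$\frac{\sum_{i=1}^{a}(\bar Y_i - \bar Y)^2}{\sigma^2/n} \overset{H_0}{\sim} \chi^2_{a-1}.$$
Also, for each $i$, $\sum_{j=1}^{n}(Y_{ij} - \bar Y_i)^2/\sigma^2 \sim \chi^2_{n-1}$, and summing over the $a$ independent samples,
$$\frac{\sum_{i=1}^{a}\sum_{j=1}^{n}(Y_{ij} - \bar Y_i)^2}{\sigma^2} \sim \chi^2_{a(n-1)} = \chi^2_{N-a}.$$
By part (3) of the theorem below, these two quantities are independent, so by the definition of the F-distribution their ratio, each divided by its degrees of freedom, is exactly $F_0 \sim F_{a-1,N-a}$ under $H_0$.

Theorem: Let $X_i \overset{iid}{\sim} N(\mu, \sigma^2)$, $i = 1, \ldots, n$. Then
(1) $\bar X \sim N(\mu, \sigma^2/n)$;
(2) $\dfrac{\sum_{i=1}^{n}(X_i - \bar X)^2}{\sigma^2} = \dfrac{(n-1)S^2}{\sigma^2} \sim \chi^2_{n-1}$;
(3) $\bar X$ and $S^2$ are independent.

Definition: $F = \dfrac{W/k_1}{V/k_2} \sim F_{k_1,k_2}$, where $W \sim \chi^2_{k_1}$, $V \sim \chi^2_{k_2}$, and $W$ and $V$ are independent.

$$E(MSA) = \sigma^2 + \frac{\sum_{i=1}^{a} n_i(\mu_i - \bar\mu)^2}{a-1}, \qquad E(MSE) = \sigma^2, \qquad \text{where } \bar\mu = \frac{1}{N}\sum_{i=1}^{a} n_i\mu_i.$$
When $H_0$ is true (all $\mu_i$ equal), both mean squares estimate $\sigma^2$ and $F_0 \approx 1$; when $H_a$ is true, $F_0$ tends to be larger than 1. Intuitively, we reject $H_0$ in favor of $H_a$ if $F_0 > C$, where $C$ is determined by the significance level as usual: $P(\text{reject } H_0 \mid H_0) = P(F_0 > C \mid H_0) = \alpha$, i.e., $C = F_{a-1,N-a,\alpha}$.

When $a = 2$: $H_0: \mu_1 = \mu_2$ versus $H_a: \mu_1 \ne \mu_2$,
$$T_0 = \frac{\bar y_1 - \bar y_2}{S_p\sqrt{\frac{1}{n_1} + \frac{1}{n_2}}} \overset{H_0}{\sim} t_{n_1+n_2-2}.$$
Note: If $T \sim t_k$, then $T^2 \sim F_{1,k}$.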
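For the $a = 2$ case, the link between the two tests can also be seen numerically. The sketch below (Python, standard library only) computes the one-way ANOVA $F_0$ and the pooled-variance $T_0$ from the same two samples and prints both. The helper names `one_way_anova` and `pooled_t` are hypothetical, and the two samples are made-up numbers used only for illustration.

```python
from statistics import mean


def one_way_anova(groups):
    """One-way ANOVA for a list of samples (balanced or unbalanced).

    Returns (F0, df1, df2, SSA, SSE) with df1 = a - 1 and df2 = N - a.
    """
    a = len(groups)
    N = sum(len(g) for g in groups)
    grand = sum(sum(g) for g in groups) / N        # grand mean y-bar
    means = [mean(g) for g in groups]               # group means y-bar_i
    ssa = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, means))
    sse = sum((y - m) ** 2 for g, m in zip(groups, means) for y in g)
    df1, df2 = a - 1, N - a
    return (ssa / df1) / (sse / df2), df1, df2, ssa, sse


def pooled_t(x, y):
    """Pooled-variance two-sample t statistic and its degrees of freedom."""
    n1, n2 = len(x), len(y)
    mx, my = mean(x), mean(y)
    sp2 = (sum((v - mx) ** 2 for v in x) + sum((v - my) ** 2 for v in y)) / (n1 + n2 - 2)
    return (mx - my) / (sp2 * (1 / n1 + 1 / n2)) ** 0.5, n1 + n2 - 2


# Made-up data, for illustration only.
x = [5.1, 4.8, 6.0, 5.5]
y = [6.2, 6.9, 5.8, 7.1, 6.5]

f0, df1, df2, ssa, sse = one_way_anova([x, y])
t0, df = pooled_t(x, y)
print(f"F0 = {f0:.4f} on ({df1}, {df2}) d.f.")
print(f"t0^2 = {t0 ** 2:.4f} on {df} d.f.")
```

The two printed values agree up to floating-point rounding, because $T_0^2 = F_0$ holds algebraically for any two samples (Problem 12.33 below proves it).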
The fact that $T^2 \sim F_{1,k}$ when $T \sim t_k$ can be proved easily using the definitions of the t- and F-distributions. In particular, for $a = 2$ the one-way ANOVA F-test is equivalent to the two-sided pooled-variance t-test.

If we reject the ANOVA hypothesis, then we should do the pairwise comparisons. There are $\binom{a}{2} = \frac{a(a-1)}{2}$ of them:
$$H_{01}: \mu_1 = \mu_2 \text{ vs. } H_{a1}: \mu_1 \ne \mu_2, \quad H_{02}: \mu_1 = \mu_3 \text{ vs. } H_{a2}: \mu_1 \ne \mu_3, \quad \ldots, \quad H_{0,a(a-1)/2}: \mu_{a-1} = \mu_a \text{ vs. } H_{a,a(a-1)/2}: \mu_{a-1} \ne \mu_a.$$

The multiple comparison problem

Familywise error (FWE): the familywise error rate is $\alpha_{FWE} = P(\text{reject at least one true null hypothesis})$. As more tests are conducted, the likelihood that one or more are significant just due to chance (Type I error) increases. One can estimate the familywise error rate with the following formula:
$$\alpha_{FWE} = 1 - (1 - \alpha_{EC})^c,$$
where $\alpha_{FWE}$ is the familywise error rate, $\alpha_{EC}$ is the alpha rate for an individual test (often set at .05), and $c$ is the number of comparisons.

Bonferroni correction: The Bonferroni correction method simply calculates a new pairwise alpha to keep the familywise alpha value at .05 (or another specified value). The formula for doing this is
$$\alpha_B = \frac{\alpha_{FWE}}{c},$$
where $\alpha_B$ is the new alpha that should be used to evaluate each comparison or significance test, $\alpha_{FWE}$ is the familywise error rate to be maintained, and $c$ is the number of comparisons (statistical tests). Until the advent of modern multiple comparison methods, the Bonferroni correction was perhaps the most commonly used post hoc test, because it is highly flexible, very simple to compute, and can be used with any type of statistical test (e.g., correlations), not just post hoc tests with ANOVA. The traditional Bonferroni, however, tends to lack power. The loss of power occurs for several reasons: (1) the familywise error calculation depends on the assumption that, for all tests, the null hypothesis is true.
This is unlikely to be the case, especially after a significant omnibus test; (2) all tests are assumed to be orthogonal (i.e., independent or nonoverlapping) when calculating the familywise error rate, and this is usually not the case when all pairwise comparisons are made; (3) the test does not take into account whether the findings are consistent with theory and past research (if consistent with previous findings and theory, an individual result should be less likely to be a Type I error); and (4) Type II error rates are too high for individual tests. In other words, the Bonferroni correction overcorrects for Type I error.

Tukey's Studentized Range test: (It is the preferred method to control the FWE when all pairwise comparisons are of interest; the resulting FWE is exact when the sample sizes are equal, and smaller (more conservative) when the sample sizes are unequal.)

$H_{0ij}: \mu_i = \mu_j$ versus $H_{aij}: \mu_i \ne \mu_j$. At FWE $= \alpha$, reject $H_{0ij}$ if
$$|t_{ij}| = \frac{|\bar y_i - \bar y_j|}{s\sqrt{\frac{1}{n_i} + \frac{1}{n_j}}} > \frac{q_{a,N-a,\alpha}}{\sqrt 2},$$
where $s^2 = MSE$.

Fisher's LSD (Least Significant Difference) test: (It does not control the familywise error rate!) One could just use multiple t-tests to make each comparison desired, but, as discussed earlier, one runs the risk of greatly inflating the familywise error rate when doing so. If you have equal sample sizes and homogeneity of variance, you can use
$$t_0 = \frac{\bar Y_i - \bar Y_j}{\sqrt{2\,MSE/n}},$$
which pools the error variance across all $a$ groups, giving you $N - a$ degrees of freedom. If you have homogeneity of variance but unequal $n$'s, use
$$t_0 = \frac{\bar Y_i - \bar Y_j}{\sqrt{MSE\left(\frac{1}{n_i} + \frac{1}{n_j}\right)}}.$$
Here $MSE$ is the error mean square from the ANOVA, and $|t_0|$ is compared with $t_{N-a,\alpha/2}$.

Finally, the name ANOVA came from the partitioning of the variation:
$$\underbrace{\sum_{i=1}^{a}\sum_{j=1}^{n_i}(y_{ij} - \bar y)^2}_{SST} = \underbrace{\sum_{i=1}^{a}\sum_{j=1}^{n_i}(y_{ij} - \bar y_i)^2}_{SSE} + \underbrace{\sum_{i=1}^{a}\sum_{j=1}^{n_i}(\bar y_i - \bar y)^2}_{SSA}$$
For more details, please refer to our textbook.

Ronald Fisher (1890-1962)

Sir Ronald Aylmer Fisher was a British statistician, evolutionary biologist, and geneticist.
He has been described as “a genius who almost single-handedly created the foundations for modern statistical science” and “the greatest of Darwin's successors”. Fisher was born in East Finchley in London, to George and Katie Fisher. Although Fisher had very poor eyesight, he was a precocious student, winning the Neeld Medal (a competitive essay in Mathematics) at Harrow School at the age of 16. Because of his poor eyesight, he was tutored in mathematics without the aid of paper and pen, which developed his ability to visualize problems in geometrical terms, as opposed to using algebraic manipulations. He was legendary in being able to produce mathematical results without setting down the intermediate steps. In 1909 he won a scholarship to Gonville and Caius College, Cambridge, and graduated with a degree in mathematics in 1913. During his work as a statistician at the Rothamsted Agricultural Experiment Station, UK, Fisher pioneered the principles of the design of experiments and elaborated his studies of "analysis of variance". In addition to "analysis of variance", Fisher invented the technique of maximum likelihood and originated the concepts of sufficiency, ancillarity, Fisher's linear discriminant and Fisher information. The contributions Fisher made also included the development of methods suitable for small samples, like those of Gosset, and the discovery of the precise distributions of many sample statistics. Fisher published a number of important texts including Statistical Methods for Research Workers (1925), The Design of Experiments (1935) and Statistical Tables (1947). Fisher's important contributions to both genetics and statistics are emphasized by the remark of L.J. Savage, "I occasionally meet geneticists who ask me whether it is true that the great geneticist R.A. Fisher was also an important statistician" (Annals of Statistics, 1976).
Source: http://en.wikipedia.org/wiki/Ronald_Fisher

John Tukey (1915-2000)

John Tukey, 85, Statistician; Coined the Word 'Software'

John Wilder Tukey, one of the most influential statisticians of the last 50 years and a wide-ranging thinker credited with inventing the word ''software,'' died on Wednesday in New Brunswick, N.J. He was 85. The cause was a heart attack after a short illness, said Phyllis Anscombe, his sister-in-law. Mr. Tukey developed important theories about how to analyze data and compute series of numbers quickly. He spent decades as both a professor at Princeton University and a researcher at AT&T's Bell Laboratories, and his ideas continue to be a part of both doctoral statistics courses and high school math classes. In 1973, President Richard M. Nixon awarded him the National Medal of Science. But Mr. Tukey frequently ventured outside of the academy as well, working as a consultant to the government and corporations and taking part in social debates. In the 1950's, he criticized Alfred C. Kinsey's research on sexual behavior. In the 1970's, he was chairman of a research committee that warned that aerosol spray cans damaged the ozone layer. More recently, he recommended that the 1990 Census be adjusted by using statistical formulas in order to count poor urban residents whom he believed it had missed. ''The best thing about being a statistician,'' Mr. Tukey once told a colleague, ''is that you get to play in everyone's backyard.'' An intense man who liked to argue and was fond of helping other researchers, Mr. Tukey was also an amateur linguist who made significant contributions to the language of modern times. In a 1958 article in American Mathematical Monthly, he became the first person to define the programs on which electronic calculators ran, said Fred R. Shapiro, a librarian at Yale Law School who is editing a dictionary of quotations with information on the origin of terms. Three decades before the founding of Microsoft, Mr.
Tukey saw that ''software,'' as he called it, was gaining prominence. ''Today,'' he wrote at the time, it is ''at least as important'' as the '' 'hardware' of tubes, transistors, wires, tapes and the like.'' Twelve years earlier, while working at Bell Laboratories, he had coined the term ''bit,'' an abbreviation of ''binary digit'' that described the 1's and 0's that are the basis of computer programs. Both words caught on, to the chagrin of some computer scientists who saw Mr. Tukey as an outsider. ''Not everyone was happy that he was naming things in their field,'' said Steven M. Schultz, a spokesman for Princeton. Mr. Tukey had no immediate survivors. His wife of 48 years, Elizabeth Rapp Tukey, an antiques appraiser and preservation activist, died in 1998. Mr. Tukey was born in 1915 in New Bedford, a fishing town on the southern coast of Massachusetts, and was the only child of Ralph H. Tukey and Adah Tasker Tukey. His mother was the valedictorian of the class of 1898 at Bates College in Lewiston, Me., and her closest competition was her eventual husband, who became the salutatorian. Classmates referred to them as the couple most likely to give birth to a genius, said Marc G. Glass, a Bates spokesman. The elder Mr. Tukey became a Latin teacher at New Bedford's high school, but, because of a rule barring spouses from teaching at the school, Mrs. Tukey was a private tutor, Mrs. Anscombe said. Mrs. Tukey's main pupil became her son, who attended regular classes only for special subjects like French. ''They were afraid that if he went to school, he'd get lazy,'' said Howard Wainer, a friend and former student of John Tukey's. In 1936, Mr. Tukey graduated from nearby Brown University with a bachelor's degree in chemistry, and in the next three years earned three graduate degrees, one in chemistry at Brown and two in mathematics at Princeton, where he would spend the rest of his career. 
At the age of 35, he became a full professor, and in 1965 he became the founding chairman of Princeton's statistics department. Mr. Tukey worked for the United States government during World War II. Friends said he did not discuss the details of his projects, but Mrs. Anscombe said he helped design the U-2 spy plane. In later years, much of his important work came in a field that statisticians call robust analysis, which allows researchers to devise credible conclusions even when the data with which they are working are flawed. In 1970, Mr. Tukey published ''Exploratory Data Analysis,'' which gave mathematicians new ways to analyze and present data clearly. One of those tools, the stem-and-leaf display, continues to be part of many high school curriculums. Using it, students arrange a series of data points in a series of simple rows and columns and can then make judgments about what techniques, like calculating the average or median, would allow them to analyze the information intelligently. That display was typical of Mr. Tukey's belief that mathematicians, professional or amateur, should often start with their data and then look for a theorem, rather than vice versa, said Mr. Wainer, who is now the principal research scientist at the Educational Testing Service. ''He legitimized that, because he wasn't doing it because he wasn't good at math,'' Mr. Wainer said. ''He was doing it because it was the right thing to do.'' Along with another scientist, James Cooley, Mr. Tukey also developed the Fast Fourier Transform, an algorithm with wide application to the physical sciences. It helps astronomers, for example, determine the spectrum of light coming from a star more quickly than previously possible. As his career progressed, he also became a hub for other scientists. He was part of a group of Princeton professors that gathered regularly and included Lyman Spitzer Jr., who inspired the Hubble Space Telescope. Mr.
Tukey also persuaded a group of the nation's top statisticians to spend a year at Princeton in the early 1970's working together on robust analysis problems, said David C. Hoaglin, a former student of Mr. Tukey. Mr. Tukey was a consultant to the Educational Testing Service, the Xerox Corporation and Merck & Company. From 1960 to 1980, he helped design the polls that the NBC television network used to predict and analyze elections. His first brush with publicity came in 1950, when the National Research Council appointed him to a committee to evaluate the Kinsey Report, which shocked many Americans by describing the country's sexual habits as far more diverse than had been thought. From their first meeting, when Mr. Kinsey told Mr. Tukey to stop singing a Gilbert and Sullivan tune aloud while working, the two men clashed, according to ''Alfred C. Kinsey,'' a biography by James H. Jones. In a series of meetings over two years, Mr. Kinsey vigorously defended his work, which Mr. Tukey believed was seriously flawed, relying on a sample of people who knew each other. Mr. Tukey said a random selection of three people would have been better than a group of 300 chosen by Mr. Kinsey.

By DAVID LEONHARDT, July 28, 2000. © The New York Times Company

Example 1. A deer (definitely not reindeer) hunter prefers to practice with several different rifles before deciding which one to use for hunting. The hunter has chosen five particular rifles to practice with this season. In one test to see which rifles could shoot the farthest and still have sufficient knock-down power, each rifle was fired six times and the distance the bullet traveled was recorded. A summary of the sample data is listed below, where the distances are recorded in yards.

| Rifle | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Mean | 341.7 | 412.5 | 365.8 | 505.0 | 430.0 |
| Std. Dev. | 40.8 | 23.6 | 62.2 | 28.3 | 38.1 |

(a) Are these rifles equally good? Test at $\alpha = 0.05$.
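Because the answer below uses only the summary table, the whole calculation can be mirrored in a few lines. The sketch below is a Python check under the balanced design stated in the example ($n = 6$ shots per rifle). The studentized-range value 4.17 is copied from the answer rather than computed, since the Python standard library has no studentized-range quantile function; the variable names are illustrative.

```python
from itertools import combinations
from math import sqrt

# Summary statistics from the rifle table (distances in yards).
means = [341.7, 412.5, 365.8, 505.0, 430.0]
sds = [40.8, 23.6, 62.2, 28.3, 38.1]
n = 6                      # shots per rifle (balanced design)
a = len(means)             # number of rifles
N = a * n                  # total sample size

grand = sum(means) / a     # with equal n, the grand mean is the mean of the group means
msa = n * sum((m - grand) ** 2 for m in means) / (a - 1)
mse = sum((n - 1) * s ** 2 for s in sds) / (N - a)   # pooled variance s^2
f0 = msa / mse

# Tukey: reject H0ij when |ybar_i - ybar_j| > q * s / sqrt(n).
q = 4.17                   # tabled studentized-range value used in the notes
msd = q * sqrt(mse) / sqrt(n)
significant = [
    (i + 1, j + 1)
    for i, j in combinations(range(a), 2)
    if abs(means[i] - means[j]) > msd
]

print(f"MSA = {msa:.2f}, MSE = {mse:.3f}, F0 = {f0:.2f}")
print(f"Tukey cutoff = {msd:.2f}; significant pairs: {significant}")
```

The F critical value still has to come from an F table; the script only reproduces the test statistic and the Tukey cutoff.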
Answer: This is one-way ANOVA with 5 "samples" ($a = 5$) and 6 observations per sample ($n_i \equiv n = 6$), so the total sample size is $N = 30$. The grand mean is
$$\bar y = \frac{341.7 + 412.5 + \cdots + 430.0}{5} = 411.$$
We are testing $H_0: \mu_1 = \mu_2 = \cdots = \mu_5$ versus $H_a$: at least one of these equalities is not true. The test statistic is $F_0 = MSA/MSE$, where
$$MSA = \frac{\sum_{i=1}^{a} n(\bar y_i - \bar y)^2}{a-1} = \frac{6[(341.7 - 411)^2 + \cdots + (430.0 - 411)^2]}{4} = 24067.17$$
and
$$MSE = s^2 = \frac{\sum_{i=1}^{a}(n_i - 1)s_i^2}{\sum_{i=1}^{a}(n_i - 1)} = \frac{1}{5}\left[40.8^2 + \cdots + 38.1^2\right] = 1668.588.$$
Therefore
$$F_0 = \frac{24067.17}{1668.588} = 14.42.$$
Since $F_0 = 14.42 > F_{4,25,0.05} = 2.76$, we reject the ANOVA hypothesis $H_0$ and claim that the five rifles are not equally good.

(b) Use Tukey's procedure with $\alpha = 0.05$ to make pairwise comparisons among the five population means.

Answer: Now we do the pairwise comparisons using Tukey's method. The Tukey method rejects a pairwise null hypothesis $H_{0ij}: \mu_i = \mu_j$ at FWE $= \alpha$ if
$$\frac{|\bar y_i - \bar y_j|}{s/\sqrt n} > q_{a,N-a,\alpha}.$$
In our case, $a = 5$, $n = 6$, $s = \sqrt{1668.588} = 40.85$, $N - a = 25$, $\alpha = 0.05$, and $q_{a,N-a,\alpha} = q_{5,25,0.05} \approx 4.17$ (the tabled value for 24 error d.f., the nearest smaller table entry). Therefore, we reject $H_{0ij}$ if
$$|\bar y_i - \bar y_j| > s \cdot q_{a,N-a,\alpha}/\sqrt n = 69.54.$$
The conclusion is that, at the familywise error rate of 0.05, the following rifle pairs are significantly different: 4/1, 4/2, 4/3, 4/5, 5/1, 2/1.

Example 2. Fifteen subjects were randomly assigned to three treatment groups X, Y and Z (with 5 subjects per treatment). Each of the three groups received a different method of speed-reading instruction. A reading test is given, and the number of words per minute is recorded for each subject. The following data are collected:

| Group | Words per minute |
|---|---|
| X | 700, 850, 820, 640, 920 |
| Y | 480, 460, 500, 570, 580 |
| Z | 500, 550, 480, 600, 610 |

Please write a SAS program to answer the following questions.
(a) Are these treatments equally effective? Test at α = 0.05.
(b) If these treatments are not equally good, please use Tukey's procedure with α = 0.05 to make pairwise comparisons.

Answer: This is one-way ANOVA with 3 samples and 5 observations per sample.
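As a cross-check on the SAS results for this example, the sums of squares, the F statistic, and Tukey's minimum significant difference can be computed directly from the raw data. This is a minimal Python sketch, not the SAS procedure itself; the studentized-range value 3.77278 is copied from the SAS output ("Critical Value of Studentized Range"), and `data` and `group_means` are illustrative names.

```python
from math import sqrt
from statistics import mean

# Words per minute for the three speed-reading methods.
data = {
    "X": [700, 850, 820, 640, 920],
    "Y": [480, 460, 500, 570, 580],
    "Z": [500, 550, 480, 600, 610],
}
groups = list(data.values())
a = len(groups)
N = sum(len(g) for g in groups)
n = N // a                                   # balanced: 5 subjects per method
grand = sum(sum(g) for g in groups) / N
group_means = {k: mean(v) for k, v in data.items()}

ssa = sum(len(v) * (group_means[k] - grand) ** 2 for k, v in data.items())
sse = sum((y - group_means[k]) ** 2 for k, v in data.items() for y in v)
msa, mse = ssa / (a - 1), sse / (N - a)
f0 = msa / mse

# Tukey minimum significant difference for equal n: q * sqrt(MSE / n).
q = 3.77278   # copied from the SAS output (a = 3, 12 error d.f., alpha = 0.05)
msd = q * sqrt(mse / n)

print(f"SSA = {ssa:.4f}, SSE = {sse:.4f}, F0 = {f0:.2f}")
print(f"group means = {group_means}, MSD = {msd:.2f}")
```

Any two group means farther apart than the printed MSD would be declared different by Tukey's procedure.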
The SAS code is as follows:

```sas
DATA READING;
  INPUT GROUP $ WORDS @@;
  DATALINES;
X 700 X 850 X 820 X 640 X 920
Y 480 Y 460 Y 500 Y 570 Y 580
Z 500 Z 550 Z 480 Z 600 Z 610
;
RUN;

PROC ANOVA DATA=READING;
  TITLE 'Analysis of Reading Data';  /* straight quotes are required by SAS */
  CLASS GROUP;
  MODEL WORDS = GROUP;
  MEANS GROUP / TUKEY;               /* Tukey pairwise comparisons */
RUN;
```

The SAS output is as follows:

```
Analysis of Reading Data

The ANOVA Procedure

Class Level Information
Class     Levels    Values
GROUP          3    X Y Z

Number of observations    15

The ANOVA Procedure
Dependent Variable: WORDS

Source             DF    Sum of Squares    Mean Square    F Value    Pr > F
Model               2       215613.3333    107806.6667      16.78    0.0003
Error              12        77080.0000      6423.3333
Corrected Total    14       292693.3333

R-Square    Coeff Var    Root MSE    WORDS Mean
0.736653     12.98256    80.14570      617.3333

Source    DF       Anova SS    Mean Square    F Value    Pr > F
GROUP      2    215613.3333    107806.6667      16.78    0.0003

The ANOVA Procedure
Tukey's Studentized Range (HSD) Test for WORDS

NOTE: This test controls the Type I experimentwise error rate, but it
generally has a higher Type II error rate than REGWQ.

Alpha                                    0.05
Error Degrees of Freedom                   12
Error Mean Square                    6423.333
Critical Value of Studentized Range   3.77278
Minimum Significant Difference         135.22

Means with the same letter are not significantly different.

Tukey Grouping      Mean    N    GROUP
     A            786.00    5    X

     B            548.00    5    Z
     B
     B            518.00    5    Y
```

Conclusion: (a) The p-value of the ANOVA F-test is 0.0003, less than the significance level α = 0.05. Thus we conclude that the three reading methods are not equally good. (b) Furthermore, Tukey's procedure with α = 0.05 shows that method X is superior to methods Y and Z, while methods Y and Z are not significantly different.

Example 3. The effect of caffeine levels on performing a simple finger-tapping task was investigated in a double-blind study. Thirty male college students were trained in finger tapping and randomly assigned to receive three different doses of caffeine (0, 100, or 200 mg), with 10 students per dose group.
Two hours following the caffeine treatment, students were asked to finger tap, and the numbers of taps per minute were counted. The data are tabulated below.

| Caffeine dose | Finger taps per minute |
|---|---|
| 0 mg | 242, 245, 244, 248, 247, 248, 242, 244, 246, 242 |
| 100 mg | 248, 246, 245, 247, 248, 250, 247, 246, 243, 244 |
| 200 mg | 246, 248, 250, 252, 248, 250, 246, 248, 245, 250 |

(a) Construct an ANOVA table and test if there are significant differences in finger tapping between the groups at α = .05.
(b) Compare the finger-tapping speed between the 0 mg and the 200 mg groups at α = .05. List the assumptions necessary, and please perform tests for the assumptions that you can test in an exam setting.
(c) Please write up the entire SAS program necessary to answer the question raised in (a), including the data step.
(d) Please write up the entire SAS program necessary to answer the question raised in (b), including the data step and the tests for all assumptions necessary.

Answer: (a) This is Problem 12.2(b) in our textbook, one-way ANOVA. We are testing whether the mean tapping speeds in the three groups are equal or not. That is,
$H_0: \mu_1 = \mu_2 = \mu_3$ versus $H_a$: the above is not true.

| Source | SS | d.f. | MS | F |
|---|---|---|---|---|
| Dose | 61.400 | 2 | 30.700 | 6.181 |
| Error | 134.100 | 27 | 4.967 | |
| Total | 195.500 | 29 | | |

Since $F_0 = 6.181 > F_{2,27,0.05} = 3.35$, there do appear to be significant differences in the number of finger taps for different doses of caffeine.

(b) This is inference on two population means, independent samples. The first assumption is that both populations are normal. The second is the equal-variance assumption, which we can test in the exam setting as follows.

Group 1 (dose 0 mg): $\bar X_1 = 244.8$, $s_1^2 = 5.73$, $n_1 = 10$
Group 2 (dose 200 mg): $\bar X_2 = 248.3$, $s_2^2 = 4.9$, $n_2 = 10$

Under the normality assumption, we first test if the two population variances are equal. That is, $H_0: \sigma_1^2 = \sigma_2^2$ versus $H_a: \sigma_1^2 \ne \sigma_2^2$. The test statistic is
$$F_0 = \frac{s_1^2}{s_2^2} = \frac{5.73}{4.9} = 1.17.$$
For this two-sided test at α = 0.05, the critical values are $F_{9,9,0.025} = 4.03$ and $1/F_{9,9,0.025} = 0.248$. Since $0.248 < F_0 < 4.03$, we cannot reject $H_0$. Therefore it is reasonable to assume that $\sigma_1^2 = \sigma_2^2$.
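The statistics used in part (a) and in both steps of part (b) (the variance ratio just computed and the pooled t statistic used next) can be reproduced from the data table. This is a minimal Python sketch, assuming the three rows of the table are the 0, 100 and 200 mg groups as listed; `statistics.variance` uses the $n - 1$ divisor, matching $s_i^2$ in the notes. The critical values ($F_{2,27,0.05}$, $F_{9,9,0.025}$, $t_{18,0.025}$) still come from tables.

```python
from math import sqrt
from statistics import mean, variance

# Finger taps per minute by caffeine dose (mg).
taps = {
    0: [242, 245, 244, 248, 247, 248, 242, 244, 246, 242],
    100: [248, 246, 245, 247, 248, 250, 247, 246, 243, 244],
    200: [246, 248, 250, 252, 248, 250, 246, 248, 245, 250],
}

# Part (a): one-way ANOVA table entries.
groups = list(taps.values())
a, N = len(groups), sum(len(g) for g in groups)
grand = sum(sum(g) for g in groups) / N
ssa = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
sse = sum((y - mean(g)) ** 2 for g in groups for y in g)
f0_anova = (ssa / (a - 1)) / (sse / (N - a))

# Part (b): 0 mg versus 200 mg.
x1, x2 = taps[0], taps[200]
n1, n2 = len(x1), len(x2)
s1_sq, s2_sq = variance(x1), variance(x2)    # sample variances (divisor n - 1)
f0_var = s1_sq / s2_sq                       # variance-ratio statistic
sp_sq = ((n1 - 1) * s1_sq + (n2 - 1) * s2_sq) / (n1 + n2 - 2)
t0 = (mean(x1) - mean(x2)) / sqrt(sp_sq * (1 / n1 + 1 / n2))

print(f"SSA = {ssa:.3f}, SSE = {sse:.3f}, F0 = {f0_anova:.3f}")
print(f"s1^2 / s2^2 = {f0_var:.3f}, pooled t0 = {t0:.3f}")
```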
Next we perform the pooled-variance t-test with hypotheses $H_0: \mu_1 - \mu_2 = 0$ versus $H_a: \mu_1 - \mu_2 \ne 0$. The pooled variance is
$$s_p^2 = \frac{(n_1 - 1)s_1^2 + (n_2 - 1)s_2^2}{n_1 + n_2 - 2} = \frac{5.73 + 4.9}{2} = 5.315,$$
and the test statistic is
$$t_0 = \frac{\bar X_1 - \bar X_2 - 0}{s_p\sqrt{\frac{1}{n_1} + \frac{1}{n_2}}} = \frac{244.8 - 248.3 - 0}{\sqrt{5.315}\sqrt{\frac{1}{10} + \frac{1}{10}}} = -3.39.$$
Since $t_0 = -3.39 < -t_{18,0.025} = -2.10092$ (equivalently, $|t_0| > 2.10092$), we reject $H_0$ and claim that the finger-tapping speeds are significantly different between the two groups at the significance level of 0.05.

(c)

```sas
/* group 0 = 0 mg, group 1 = 100 mg, group 2 = 200 mg */
data finger;
  input group taps @@;
  datalines;
0 242 0 245 0 244 0 248 0 247 0 248 0 242 0 244 0 246 0 242
1 248 1 246 1 245 1 247 1 248 1 250 1 247 1 246 1 243 1 244
2 246 2 248 2 250 2 252 2 248 2 250 2 246 2 248 2 245 2 250
;
run;

proc anova data = finger;
  class group;
  model taps = group;
  means group / tukey; /* the means statement is not necessary for the given problem */
run;
```

(d)

```sas
/* The data step for part (d) follows immediately after the one from part (c). */
/* Alternatively, one can save the data finger as a permanent SAS data set and use it later. */
data finger2;
  set finger;
  where group ne 1;   /* keep only the 0 mg and 200 mg groups */
run;

/* normality tests within each group */
proc univariate data = finger2 normal;
  class group;
  var taps;
run;

/* pooled t-test, plus the folded F test for equal variances */
proc ttest data = finger2;
  class group;
  var taps;
run;

/* nonparametric alternative (includes the Wilcoxon rank-sum test) */
proc npar1way data = finger2;
  class group;
  var taps;
run;
```

Example 4. A taste test was conducted by General Foods to determine the palatability resulting from using a high or low amount of liquid and making the texture fine or coarse. The palatability was recorded from -3 (terrible) to +3 (excellent). Sixteen consumer groups (50 people per group) were given one of the four liquid-texture combinations to score, four groups per combination. The response for each group is the total of their individual palatability scores, which is tabled below.
| Low liquid, coarse texture | Low liquid, fine texture | High liquid, coarse texture | High liquid, fine texture |
|---|---|---|---|
| 35 | 104 | 24 | 65 |
| 39 | 129 | 21 | 94 |
| 77 | 97 | 39 | 86 |
| 16 | 84 | 60 | 64 |

(a) Please perform suitable statistical tests at the significance level of α = .05 to examine whether the palatability results are significantly influenced by [1] a high or low amount of liquid, or [2] fine or coarse texture.
(b) Please discuss which factor appears to influence the palatability more: liquid amount or food texture?

Answers: This problem can be done with at least three approaches: (1) independent-samples t-tests; (2) one-way ANOVA with pairwise comparisons; (3) two-way ANOVA with interaction. The best solution is approach (3), two-way ANOVA; however, since it is not required, we expect you to get the problem done using either approach (1), t-tests on the combined groups, or approach (2), one-way ANOVA with pairwise comparisons. Since the table for Tukey's studentized range test is not provided, you can simply replace it with t-tests on individual groups. Below, I have provided the SAS programs (excluding tests on some assumptions, such as normality, for simplicity) and the corresponding output for your reference. However, in the exam situation, you are expected to write up the complete formulas and solutions as usual.
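For approach (3), the balanced two-way ANOVA sums of squares can be written out explicitly. The Python sketch below illustrates the standard balanced-design formulas (4 consumer groups per liquid-texture cell); it is not a reproduction of the SAS output, and the names `cells`, `ss_liquid`, etc. are illustrative. Each main effect and the interaction have 1 d.f. here, so each F ratio is SS/MSE with (1, 12) d.f., to be compared with $F_{1,12,0.05} \approx 4.75$ from an F table.

```python
from statistics import mean

# Palatability totals by (liquid, texture) cell; 4 consumer groups per cell.
cells = {
    ("Low", "Coarse"): [35, 39, 77, 16],
    ("Low", "Fine"): [104, 129, 97, 84],
    ("High", "Coarse"): [24, 21, 39, 60],
    ("High", "Fine"): [65, 94, 86, 64],
}
r = 4                                         # replicates per cell
liquids = ["Low", "High"]
textures = ["Coarse", "Fine"]

all_y = [y for ys in cells.values() for y in ys]
grand = mean(all_y)
cell_mean = {k: mean(v) for k, v in cells.items()}
liquid_mean = {
    lq: mean([y for (li, _), ys in cells.items() if li == lq for y in ys]) for lq in liquids
}
texture_mean = {
    tx: mean([y for (_, te), ys in cells.items() if te == tx for y in ys]) for tx in textures
}

# Balanced two-way ANOVA decomposition.
ss_liquid = len(textures) * r * sum((m - grand) ** 2 for m in liquid_mean.values())
ss_texture = len(liquids) * r * sum((m - grand) ** 2 for m in texture_mean.values())
ss_cells = r * sum((m - grand) ** 2 for m in cell_mean.values())
ss_inter = ss_cells - ss_liquid - ss_texture
sse = sum((y - cell_mean[k]) ** 2 for k, ys in cells.items() for y in ys)
df_error = len(all_y) - len(cells)            # 16 - 4 = 12
mse = sse / df_error

# Each effect has 1 d.f., so each F ratio is SS / MSE.
for name, ss in [("liquid", ss_liquid), ("texture", ss_texture), ("liquid*texture", ss_inter)]:
    print(f"{name:15s} SS = {ss:9.2f}  F = {ss / mse:6.2f}")
print(f"error           SS = {sse:9.2f}  df = {df_error}")
```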
```sas
DATA taste;
  INPUT liquid $ texture $ comb $ palat @@;
  DATALINES;
L C LC 35 L C LC 39 L C LC 77 L C LC 16
L F LF 104 L F LF 129 L F LF 97 L F LF 84
H C HC 24 H C HC 21 H C HC 39 H C HC 60
H F HF 65 H F HF 94 H F HF 86 H F HF 64
;
RUN;

/*t-tests*/
proc ttest data=taste;
  class liquid;
  var palat;
run;

proc ttest data=taste;
  class texture;
  var palat;
run;

/*one-way ANOVA*/
PROC ANOVA DATA=taste;
  CLASS comb;
  MODEL palat = comb;
  MEANS comb / TUKEY;
RUN;

/*two-way ANOVA*/
PROC ANOVA DATA=taste;
  CLASS liquid texture;
  MODEL palat = liquid|texture;
RUN;
```

Homework 6 & Solutions: Chapter 12: 1, 2, 5, 8, 10, 33

(*Dear students, please do the homework by yourself first before looking at the solutions!)

Solutions to the above homework problems:

12.1 (a) Sugar:
$$s^2 = \frac{(2.138)^2 + (1.985)^2 + (1.865)^2}{3} = 3.996,$$
so $s = \sqrt{3.996} = 1.999$ with $3 \times 19 = 57$ d.f. Using the critical value $t_{57,0.025} = 2.000$, the 95% CIs are:

Shelf 1: $4.80 \pm (2.000)\dfrac{1.999}{\sqrt{20}} = [3.906, 5.694]$
Shelf 2: $9.85 \pm (2.000)\dfrac{1.999}{\sqrt{20}} = [8.956, 10.744]$
Shelf 3: $6.10 \pm (2.000)\dfrac{1.999}{\sqrt{20}} = [5.206, 6.994]$

Fiber:
$$s^2 = \frac{(1.166)^2 + (1.162)^2 + (1.277)^2}{3} = 1.447,$$
so $s = \sqrt{1.447} = 1.203$ with $3 \times 19 = 57$ d.f. Using the critical value $t_{57,0.025} = 2.000$, the 95% CIs are:

Shelf 1: $1.68 \pm (2.000)\dfrac{1.203}{\sqrt{20}} = [1.142, 2.218]$
Shelf 2: $0.95 \pm (2.000)\dfrac{1.203}{\sqrt{20}} = [0.412, 1.488]$
Shelf 3: $2.17 \pm (2.000)\dfrac{1.203}{\sqrt{20}} = [1.632, 2.708]$

Shelf 2 cereals are higher in sugar content than those on shelves 1 and 3, since the CI for shelf 2 lies above those of shelves 1 and 3. Similarly, the shelf 2 fiber content CI lies below that of shelf 3. So, in general, shelf 2 cereals are higher in sugar and lower in fiber.

(b) Sugar: $SSE = 57 \times 3.9965 = 227.80$,
$$SSA = n\sum_i \bar y_i^2 - N\bar y^2 = 20[(4.80)^2 + (9.85)^2 + (6.10)^2] - 60\left(\frac{20.75}{3}\right)^2 = 275.03,$$
where $\bar y = 20.75/3 \approx 6.92$. Then the ANOVA table is below:

| Source | SS | d.f. | MS | F |
|---|---|---|---|---|
| Shelves | 275.03 | 2 | 137.5 | 34.41 |
| Error | 227.80 | 57 | 3.996 | |
| Total | 502.83 | 59 | | |

Since $F = 34.41 > f_{2,57,0.05} = 3.15$, there do appear to be significant differences among the shelves in terms of sugar content.
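The sugar computations in 12.1(a) and (b) reduce to a few formulas on the summary statistics. This Python sketch reproduces them, assuming (as the solution does) $n = 20$ cereals per shelf; the value $t_{57,0.025} = 2.000$ is taken from the solution rather than computed, and the variable names are illustrative.

```python
from math import sqrt

# Sugar content: shelf means and standard deviations, n = 20 cereals per shelf.
means = [4.80, 9.85, 6.10]
sds = [2.138, 1.985, 1.865]
n = 20
a = len(means)
N = a * n

s2 = sum(s ** 2 for s in sds) / a          # pooled variance (equal n), N - a = 57 d.f.
s = sqrt(s2)
t_crit = 2.000                             # t_{57, 0.025} as used in the solution
half_width = t_crit * s / sqrt(n)
cis = [(m - half_width, m + half_width) for m in means]

grand = sum(means) / a
ssa = n * sum(m ** 2 for m in means) - N * grand ** 2   # SSA = n*sum(ybar_i^2) - N*ybar^2
sse = (N - a) * s2
f0 = (ssa / (a - 1)) / s2

for shelf, (lo, hi) in enumerate(cis, start=1):
    print(f"Shelf {shelf}: [{lo:.3f}, {hi:.3f}]")
print(f"SSA = {ssa:.2f}, SSE = {sse:.2f}, F0 = {f0:.2f}")
```

Replacing `means` and `sds` with the fiber summaries gives the fiber intervals and ANOVA table in the same way.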
Fiber: $SSE = 57 \times 1.4468 = 82.47$,
$$SSA = n\sum_i \bar y_i^2 - N\bar y^2 = 20[(1.68)^2 + (0.95)^2 + (2.17)^2] - 60(1.60)^2 = 15.08.$$
Then the ANOVA table is below:

| Source | SS | d.f. | MS | F |
|---|---|---|---|---|
| Shelves | 15.08 | 2 | 7.54 | 5.21 |
| Error | 82.47 | 57 | 1.447 | |
| Total | 97.55 | 59 | | |

Since $F = 5.21 > f_{2,57,0.05} = 3.15$, there do appear to be significant differences among the shelves in terms of fiber content, as well as sugar content.

(c) The grocery store strategy is to place high-sugar/low-fiber cereals at the eye height of school children, where they can easily see them.

12.2 (a) The boxplot indicates that the number of finger taps increases with higher doses of caffeine.

(b)

| Source | SS | d.f. | MS | F |
|---|---|---|---|---|
| Dose | 61.400 | 2 | 30.700 | 6.181 |
| Error | 134.100 | 27 | 4.967 | |
| Total | 195.500 | 29 | | |

Since $F = 6.181 > f_{2,27,0.05} = 3.35$, there do appear to be significant differences in the numbers of finger taps for different doses of caffeine.

(c) From the plot of the residuals against the predicted values, the constant variance assumption appears satisfied. From the normal plot of the residuals, the residuals appear to follow the normal distribution.

12.5 (a) The boxplot indicates that HBSC has the highest average hemoglobin level, followed by HBS, and then HBSS.

(b)

| Source | SS | d.f. | MS | F |
|---|---|---|---|---|
| Disease type | 99.889 | 2 | 49.945 | 49.999 |
| Error | 37.959 | 38 | 0.999 | |
| Total | 137.848 | 40 | | |

Since $F_0 = 49.999 > F_{2,38,0.05} = 3.23$, there do appear to be significant differences in the hemoglobin levels between patients with different types of sickle cell disease.

(c) From the plot of the residuals against the predicted values, the constant variance assumption appears satisfied. From the normal plot of the residuals, the residuals appear to follow the normal distribution.

12.8 Sugar: The number of comparisons is $\binom{a}{2} = \binom{3}{2} = 3$. Then $t_{57,\,0.05/(2 \cdot 3)} = t_{57,\,0.0083} = 2.468$, and the Bonferroni critical value is
$$t_{57,0.0083}\, s\sqrt{\frac{2}{n}} = 2.468 \times 1.999 \times \sqrt{\frac{2}{20}} = 1.56.$$
For the Tukey method, $q_{3,57,0.05} = 3.40$, and the Tukey critical value is
$$q_{3,57,0.05}\,\frac{s}{\sqrt n} = 3.40 \times 1.999 \times \sqrt{\frac{1}{20}} = 1.52.$$

| Comparison | $\lvert\bar y_i - \bar y_j\rvert$ | Significant? (Bonferroni) | Significant? (Tukey) |
|---|---|---|---|
| 1 vs. 2 | 5.05 | Yes | Yes |
| 1 vs. 3 | 1.30 | No | No |
| 2 vs. 3 | 3.75 | Yes | Yes |

Fiber: The Bonferroni critical value is $2.468 \times 1.203 \times \sqrt{2/20} = 0.939$, and the Tukey critical value is $3.40 \times 1.203 \times \sqrt{1/20} = 0.915$.

| Comparison | $\lvert\bar y_i - \bar y_j\rvert$ | Significant? (Bonferroni) | Significant? (Tukey) |
|---|---|---|---|
| 1 vs. 2 | 0.73 | No | No |
| 1 vs. 3 | 0.49 | No | No |
| 2 vs. 3 | 1.22 | Yes | Yes |

12.10 The number of comparisons is $\binom{a}{2} = \binom{3}{2} = 3$. Then $t_{38,\,0.01/(2 \cdot 3)} = t_{38,\,0.0017} = 3.136$, and, since $s = \sqrt{0.999} \approx 1$, the Bonferroni confidence interval has the form
$$\bar y_i - \bar y_j \pm (3.136)(1)\sqrt{\frac{1}{n_i} + \frac{1}{n_j}}.$$
For the Tukey method, $q_{3,38,0.01}/\sqrt 2 = 4.39/\sqrt 2 = 3.104$, and the Tukey confidence interval has the form
$$\bar y_i - \bar y_j \pm (3.104)(1)\sqrt{\frac{1}{n_i} + \frac{1}{n_j}}.$$
For the LSD method, $t_{38,\,0.01/2} = 2.712$, and the LSD confidence interval has the form
$$\bar y_i - \bar y_j \pm (2.712)(1)\sqrt{\frac{1}{n_i} + \frac{1}{n_j}}.$$
The 99% confidence intervals are summarized in the table below:

| Comparison | $\bar y_i - \bar y_j$ | Bonferroni lower | Bonferroni upper | Tukey lower | Tukey upper | LSD lower | LSD upper |
|---|---|---|---|---|---|---|---|
| HBSC (3) vs. HBS (2) | 1.670 | 0.392 | 2.948 | 0.406 | 2.934 | 0.564 | 2.776 |
| HBS (2) vs. HBSS (1) | 1.918 | 0.656 | 3.179 | 0.669 | 3.166 | 0.825 | 3.010 |
| HBSC (3) vs. HBSS (1) | 3.588 | 2.462 | 4.713 | 2.475 | 4.700 | 2.614 | 4.561 |

Since all of these intervals lie entirely above 0, all of the types of disease have significantly different hemoglobin levels from one another. Note that the Bonferroni method has the widest intervals, followed by Tukey's. The LSD intervals are the narrowest because there is no adjustment for multiplicity.

12.33 (a) Note that
$$\bar y = \frac{n_1\bar y_1 + n_2\bar y_2}{n_1 + n_2},$$
so $\bar y_1 - \bar y = \dfrac{n_2(\bar y_1 - \bar y_2)}{n_1 + n_2}$ and $\bar y_2 - \bar y = \dfrac{n_1(\bar y_2 - \bar y_1)}{n_1 + n_2}$. Therefore, since $a - 1 = 1$,
$$SSA = MSA = n_1(\bar y_1 - \bar y)^2 + n_2(\bar y_2 - \bar y)^2 = \frac{n_1 n_2^2(\bar y_1 - \bar y_2)^2 + n_2 n_1^2(\bar y_1 - \bar y_2)^2}{(n_1 + n_2)^2} = \frac{n_1 n_2(n_1 + n_2)(\bar y_1 - \bar y_2)^2}{(n_1 + n_2)^2} = \frac{n_1 n_2(\bar y_1 - \bar y_2)^2}{n_1 + n_2} = \frac{(\bar y_1 - \bar y_2)^2}{1/n_1 + 1/n_2}.$$

(b)
$$F_0 = \frac{MSA}{MSE} = \frac{(\bar y_1 - \bar y_2)^2}{s^2(1/n_1 + 1/n_2)} = t_0^2.$$

(c) Since $F_0 = t_0^2$, and $T^2 \sim F_{1,\nu}$ when $T \sim t_\nu$, the critical values satisfy $f_{1,\nu,\alpha} = t_{\nu,\alpha/2}^2$, so the F-test and the two-sided t-test reject in exactly the same cases.
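The cutoffs in 12.8 and the identity in 12.33(a) can both be checked numerically. In this Python sketch the constants `T_BONF` and `Q_TUKEY` are the table values quoted in 12.8 (not computed), the pooled standard deviations 1.999 and 1.203 come from 12.1(a), and the sample sizes and means passed to the hypothetical helper `ssa_two_groups` are made-up values used only to exercise the identity $SSA = (\bar y_1 - \bar y_2)^2/(1/n_1 + 1/n_2)$.

```python
from itertools import combinations
from math import sqrt

n = 20
T_BONF = 2.468   # t_{57, 0.05/6} quoted in 12.8
Q_TUKEY = 3.40   # q_{3,57,0.05} quoted in 12.8


def compare(means, s):
    """Bonferroni and Tukey cutoffs plus significance flags for each pair."""
    bonf = T_BONF * s * sqrt(2 / n)
    tukey = Q_TUKEY * s / sqrt(n)
    rows = []
    for i, j in combinations(range(len(means)), 2):
        d = abs(means[i] - means[j])
        rows.append((f"{i + 1} vs. {j + 1}", round(d, 2), d > bonf, d > tukey))
    return bonf, tukey, rows


sugar = compare([4.80, 9.85, 6.10], 1.999)
fiber = compare([1.68, 0.95, 2.17], 1.203)
for label, (bonf, tukey, rows) in [("Sugar", sugar), ("Fiber", fiber)]:
    print(f"{label}: Bonferroni cutoff {bonf:.3f}, Tukey cutoff {tukey:.3f}")
    for row in rows:
        print("   ", row)


def ssa_two_groups(n1, m1, n2, m2):
    """SSA for a = 2 groups, computed from its definition (Problem 12.33)."""
    grand = (n1 * m1 + n2 * m2) / (n1 + n2)
    return n1 * (m1 - grand) ** 2 + n2 * (m2 - grand) ** 2


# Hypothetical sizes and means, chosen only to check the identity numerically.
n1, m1, n2, m2 = 7, 3.2, 12, 5.0
closed_form = (m1 - m2) ** 2 / (1 / n1 + 1 / n2)
print(ssa_two_groups(n1, m1, n2, m2), closed_form)
```

The Bonferroni cutoff exceeds the Tukey cutoff for both responses, which is the same ordering of interval widths noted in 12.10.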