

Computational Methods for Data Analysis – 2014/15

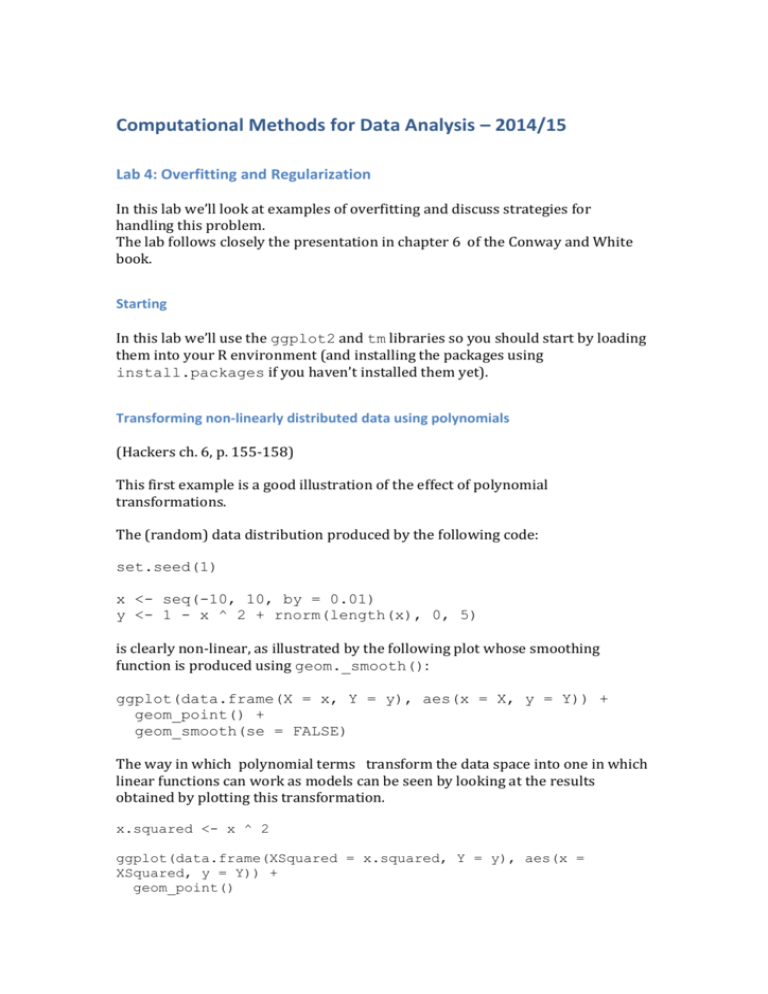

Lab 4: Overfitting and Regularization

In this lab we’ll look at examples of overfitting and discuss strategies for handling this problem.

The lab closely follows the presentation in chapter 6 of the Conway and White book, Machine Learning for Hackers (cited below as Hackers).

Starting

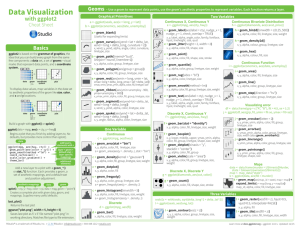

In this lab we’ll use the ggplot2 and tm libraries, so you should start by loading them into your R environment (installing the packages with install.packages first if you haven’t installed them yet). The regularization section also uses the glmnet package.

Transforming non-linearly distributed data using polynomials

(Hackers ch. 6, p. 155-158)

This first example is a good illustration of the effect of polynomial transformations.

The (random) data distribution produced by the following code:

set.seed(1)

x <- seq(-10, 10, by = 0.01)

y <- 1 - x ^ 2 + rnorm(length(x), 0, 5)

is clearly non-linear, as illustrated by the following plot, whose smoothing curve is produced using geom_smooth():

ggplot(data.frame(X = x, Y = y), aes(x = X, y = Y)) +

geom_point() +

geom_smooth(se = FALSE)

Polynomial terms transform the data space into one in which a linear function can work as a model. To see this, plot Y against the squared input:

x.squared <- x ^ 2

ggplot(data.frame(XSquared = x.squared, Y = y), aes(x = XSquared, y = Y)) +

geom_point()

Now a linear regression provides a good model:

ggplot(data.frame(XSquared = x.squared, Y = y), aes(x = XSquared, y = Y)) +

geom_point() +

geom_smooth(method = 'lm', se = FALSE)

This transformation maps the original non-linear problem into a problem in which the relationship between input and output does satisfy the linearity assumptions. This intuition can be backed up by looking at the respective values of R²:

summary(lm(y ~ x))$r.squared

#[1] 2.973e-06

summary(lm(y ~ x.squared))$r.squared

#[1] 0.9707
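The same comparison can be made without building x.squared by hand. The sketch below regenerates the data from this section and wraps the squared term in I() inside the model formula. The names r2.linear and r2.squared are introduced here only for illustration.

```r
# Regenerate the data from the example above
set.seed(1)
x <- seq(-10, 10, by = 0.01)
y <- 1 - x ^ 2 + rnorm(length(x), 0, 5)

# R-squared for the plain linear model
r2.linear <- summary(lm(y ~ x))$r.squared

# I() makes ^ mean arithmetic squaring inside the formula,
# so this is the same model as lm(y ~ x.squared)
r2.squared <- summary(lm(y ~ I(x ^ 2)))$r.squared

print(c(linear = r2.linear, squared = r2.squared))
```

Writing the transformation inside the formula keeps the data frame unchanged, which can be convenient when several transformations are being compared.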

The poly function; overfitting

(Hackers ch. 6, p. 158-ff)

Data with a sinusoidal shape clearly cannot be described using a linear function. Such data can be created as follows:

x <- seq(0, 1, by = 0.01)

y <- sin(2 * pi * x) + rnorm(length(x), 0, 0.1)

df <- data.frame(X = x, Y = y)

ggplot(df, aes(x = X, y = Y)) +

geom_point()

In fact, a linear regression doesn’t produce as bad a model of these data as one would expect:

summary(lm(Y ~ X, data = df))

Check the output: the linear model accounts for about 60% of the variance. But we should be able to do better by using higher-degree terms. The following code adds columns encoding the squared and cubed terms to df:

df <- transform(df, X2 = X ^ 2)

df <- transform(df, X3 = X ^ 3)

summary(lm(Y ~ X + X2 + X3, data = df))

Adding the new terms increases the variance accounted for from 60% to 97%. So we might expect that adding more powers of X would give an even better fit:

df <- transform(df, X4 = X ^ 4)

df <- transform(df, X5 = X ^ 5)

df <- transform(df, X6 = X ^ 6)

df <- transform(df, X7 = X ^ 7)

df <- transform(df, X8 = X ^ 8)

df <- transform(df, X9 = X ^ 9)

df <- transform(df, X10 = X ^ 10)

df <- transform(df, X11 = X ^ 11)

df <- transform(df, X12 = X ^ 12)

df <- transform(df, X13 = X ^ 13)

df <- transform(df, X14 = X ^ 14)

df <- transform(df, X15 = X ^ 15)

But in fact this is not what happens:

summary(lm(Y ~ X + X2 + X3 + X4 + X5 + X6 + X7 + X8 + X9

+ X10 + X11 + X12 + X13 + X14, data = df))

Part of the problem is that these extra dimensions are all strongly correlated with one another. A solution to this particular problem is to use the function poly, which creates orthogonal polynomials that aren’t correlated with each other. This does allow us to get a better fit:

summary(lm(Y ~ poly(X, degree = 14), data = df))
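The difference between raw powers and the columns produced by poly can be checked directly. The following is a small sketch using only base R on the same grid of X values. The names raw.cor, P and ortho.check are introduced here for illustration.

```r
x <- seq(0, 1, by = 0.01)

# Raw powers of x are strongly correlated with one another
raw.cor <- cor(cbind(X = x, X2 = x ^ 2, X3 = x ^ 3))
print(round(raw.cor, 3))

# poly() returns orthogonal columns: their cross-product is
# (numerically) the identity matrix, so no column overlaps another
P <- poly(x, degree = 3)
ortho.check <- crossprod(P)
print(round(ortho.check, 3))
```

The off-diagonal entries of the first matrix are all close to 1, while those of the second are zero up to rounding error.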

But plotting the curves produced this way for increasing degrees suggests that the higher-degree models overfit the data:

poly.fit <- lm(Y ~ poly(X, degree = 1), data = df)

df <- transform(df, PredictedY = predict(poly.fit))

ggplot(df, aes(x = X, y = PredictedY)) +

geom_point() +

geom_line()

poly.fit <- lm(Y ~ poly(X, degree = 3), data = df)

df <- transform(df, PredictedY = predict(poly.fit))

ggplot(df, aes(x = X, y = PredictedY)) +

geom_point() +

geom_line()

poly.fit <- lm(Y ~ poly(X, degree = 5), data = df)

df <- transform(df, PredictedY = predict(poly.fit))

ggplot(df, aes(x = X, y = PredictedY)) +

geom_point() +

geom_line()

poly.fit <- lm(Y ~ poly(X, degree = 25), data = df)

df <- transform(df, PredictedY = predict(poly.fit))

ggplot(df, aes(x = X, y = PredictedY)) +

geom_point() +

geom_line()
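One way to read the four plots together is to put a number on each fit. The sketch below is an illustration rather than part of the original lab. It regenerates the sine data with an assumed seed and records R² on the fitting data for each degree; the names degrees and r2.by.degree are hypothetical.

```r
set.seed(1)  # assumed seed, only to make the sketch reproducible
x <- seq(0, 1, by = 0.01)
y <- sin(2 * pi * x) + rnorm(length(x), 0, 0.1)
df <- data.frame(X = x, Y = y)

degrees <- c(1, 3, 5, 25)

# R-squared on the same data used for fitting, one value per degree
r2.by.degree <- sapply(degrees, function(d) {
  summary(lm(Y ~ poly(X, degree = d), data = df))$r.squared
})

print(data.frame(Degree = degrees, R2 = r2.by.degree))
```

Because each higher-degree model contains the lower-degree ones, R² on the fitting data cannot go down as the degree grows. A good fit on the training data alone therefore says nothing about overfitting, which motivates evaluating on held-out data.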

Methods for dealing with overfitting: regularization

The function glmnet() in the glmnet package fits linear models using regularization. glmnet() fits the model for a whole sequence of regularization strengths (values of lambda) and reports each of them:

set.seed(1)

x <- seq(0, 1, by = 0.01)

y <- sin(2 * pi * x) + rnorm(length(x), 0, 0.1)

x <- as.matrix(cbind(x,rev(x)))

library('glmnet')

glmnet(x, y)

#Call: glmnet(x = x, y = y)

#

# Df %Dev Lambda

# [1,] 0 0.00000 0.542800

# [2,] 1 0.09991 0.494600

# [3,] 1 0.18290 0.450700

# [4,] 1 0.25170 0.410600

# [5,] 1 0.30890 0.374200

#...

#[51,] 1 0.58840 0.005182

#[52,] 1 0.58840 0.004721

#[53,] 1 0.58850 0.004302

#[54,] 1 0.58850 0.003920

#[55,] 1 0.58850 0.003571

To fit the model, we use a combination of regularization and cross-validation. We divide the data into a training set and a test set, and then loop over the candidate values of lambda to see which one gives the lowest error on the test set, using RMSE (Root Mean Square Error) as the cost function.

First we create the training and test sets:

set.seed(1)

x <- seq(0, 1, by = 0.01)

y <- sin(2 * pi * x) + rnorm(length(x), 0, 0.1)

n <- length(x)

indices <- sort(sample(1:n, round(0.5 * n)))

training.x <- x[indices]

training.y <- y[indices]

test.x <- x[-indices]

test.y <- y[-indices]

df <- data.frame(X = x, Y = y)

training.df <- data.frame(X = training.x, Y = training.y)

test.df <- data.frame(X = test.x, Y = test.y)

Then we define RMSE:

rmse <- function(y, h)

{

return(sqrt(mean((y - h) ^ 2)))

}
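As a quick check of what rmse computes, here is a worked example on made-up values. The vectors observed and predicted are hypothetical and chosen so the arithmetic can be followed by hand.

```r
rmse <- function(y, h) {
  sqrt(mean((y - h) ^ 2))
}

# Hypothetical toy values: two exact predictions and one that is off by 2
observed  <- c(1, 2, 3)
predicted <- c(1, 2, 5)

# Squared errors are 0, 0 and 4; their mean is 4/3; RMSE is sqrt(4/3)
toy.rmse <- rmse(observed, predicted)
print(toy.rmse)
```

Because the errors are squared before averaging, a single large miss weighs more heavily than several small ones.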

Now we fit a model on the training set:

library('glmnet')

glmnet.fit <- with(training.df, glmnet(poly(X, degree = 10), Y))

lambdas <- glmnet.fit$lambda

At this point we can measure the performance of each lambda on the test set and plot the result:

performance <- data.frame()

for (lambda in lambdas)

{

performance <- rbind(performance,

data.frame(Lambda = lambda,

RMSE = rmse(test.y, with(test.df,

predict(glmnet.fit,

poly(X, degree = 10),

s = lambda)))))

}

ggplot(performance, aes(x = Lambda, y = RMSE)) +

geom_point() +

geom_line()
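A natural last step, not shown in the lab text above, is to read the best lambda off the performance table. This is a minimal sketch, assuming a data frame shaped like performance. The numbers below are made up purely to keep the example self-contained, and best.lambda is a name introduced here.

```r
# Hypothetical stand-in for the performance data frame built in the loop:
# one row per lambda, with the RMSE measured on the test set
performance <- data.frame(Lambda = c(0.50, 0.10, 0.05, 0.01),
                          RMSE   = c(0.70, 0.35, 0.30, 0.33))

# The lambda whose test-set RMSE is smallest
best.lambda <- with(performance, Lambda[which.min(RMSE)])
print(best.lambda)
```

With the real performance data frame, the same line picks the lambda at the lowest point of the plotted curve.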