The Convergence Prediction method for Genetic and PBIL


The Convergence Prediction method for Genetic and PBIL-like algorithms with binary representation

Eugene A. Sopov, Sergey A. Sopov

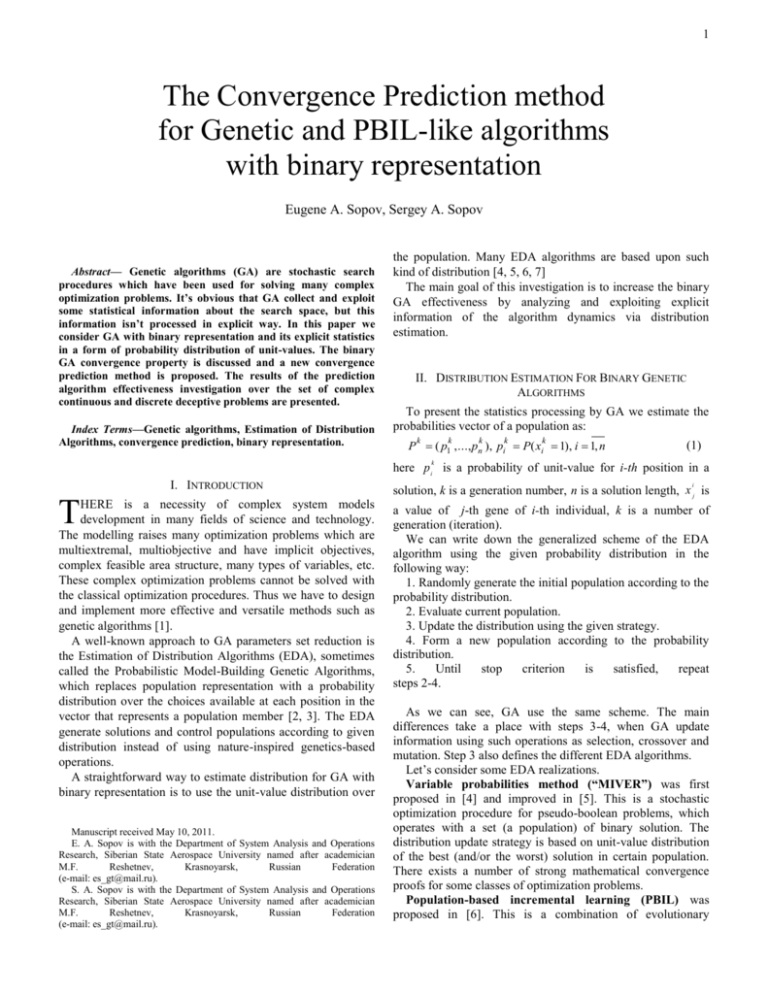

Abstract— Genetic algorithms (GA) are stochastic search

procedures which have been used for solving many complex

optimization problems. GA evidently collect and exploit some statistical information about the search space, but this information is not processed explicitly. In this paper we consider GA with binary representation and their explicit statistics in the form of a probability distribution of unit values. The convergence property of binary GA is discussed and a new convergence prediction method is proposed. We present the results of an investigation of the prediction algorithm's effectiveness on a set of complex continuous and discrete deceptive problems.

Index Terms—Genetic algorithms, Estimation of Distribution

Algorithms, convergence prediction, binary representation.

I. INTRODUCTION

There is a need to develop models of complex systems in many fields of science and technology. Modelling raises many optimization problems that are multiextremal and multiobjective and that have implicit objectives, a complex feasible-region structure, many types of variables, etc. Such complex optimization problems cannot be solved with classical optimization procedures. Thus we have to design and implement more effective and versatile methods such as genetic algorithms [1].

A well-known approach to reducing the set of GA parameters is the Estimation of Distribution Algorithms (EDA), sometimes called Probabilistic Model-Building Genetic Algorithms, which replace the population representation with a probability distribution over the choices available at each position in the vector that represents a population member [2, 3]. EDA generate solutions and control populations according to a given distribution instead of using nature-inspired genetics-based operations.

A straightforward way to estimate the distribution for GA with binary representation is to use the unit-value distribution over the population. Many EDA are based upon this kind of distribution [4, 5, 6, 7].

The main goal of this investigation is to increase the effectiveness of binary GA by analyzing and exploiting explicit information about the algorithm dynamics via distribution estimation.

II. DISTRIBUTION ESTIMATION FOR BINARY GENETIC ALGORITHMS

To represent the statistics processed by GA, we estimate the probability vector of a population as:

$$P^k = (p_1^k, \ldots, p_n^k), \quad p_i^k = P(x_i^k = 1), \quad i = \overline{1, n} \tag{1}$$

where $p_i^k$ is the probability of a unit value at the i-th position of a solution, $k$ is the generation (iteration) number, $n$ is the solution length, and $x_j^i$ is the value of the j-th gene of the i-th individual.

We can write down the generalized scheme of an EDA that uses the given probability distribution in the following way:

1. Randomly generate the initial population according to the probability distribution.

2. Evaluate the current population.

3. Update the distribution using the given strategy.

4. Form a new population according to the probability distribution.

5. Repeat steps 2-4 until the stop criterion is satisfied.
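The scheme above can be sketched in Python as follows. This is a minimal illustration rather than the authors' implementation: the function names, the uniform initial vector, the fixed generation budget used as the stop criterion, and the truncation-style update (unit-value frequencies in the better half of the population) are all assumptions made for the example.

```python
import random

def sample_population(p, size, rng):
    """Steps 1 and 4: draw `size` binary solutions; bit j is 1 with probability p[j]."""
    return [[1 if rng.random() < pj else 0 for pj in p] for _ in range(size)]

def truncation_update(p, population, scores):
    """Hypothetical step-3 strategy: unit-value frequencies in the better half."""
    ranked = sorted(zip(scores, population), key=lambda t: t[0], reverse=True)
    top = [x for _, x in ranked[: max(1, len(ranked) // 2)]]
    return [sum(x[j] for x in top) / len(top) for j in range(len(p))]

def run_eda(fitness, n, update, pop_size=20, generations=50, seed=0):
    """Generic EDA loop; `update(p, population, scores)` returns the new vector."""
    rng = random.Random(seed)
    p = [0.5] * n                                          # uniform start (assumption)
    population = sample_population(p, pop_size, rng)       # step 1
    best = max(population, key=fitness)
    for _ in range(generations):                           # step 5: fixed budget
        scores = [fitness(x) for x in population]          # step 2
        p = update(p, population, scores)                  # step 3
        population = sample_population(p, pop_size, rng)   # step 4
        best = max(population + [best], key=fitness)
    return best, p
```

For example, `run_eda(sum, 10, truncation_update)` runs the loop on a 10-bit problem whose objective simply counts ones; swapping `update` for another strategy yields a different EDA while the loop stays the same.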

Manuscript received May 10, 2011.

E. A. Sopov is with the Department of System Analysis and Operations Research, Siberian State Aerospace University named after academician M.F. Reshetnev, Krasnoyarsk, Russian Federation (e-mail: es_gt@mail.ru).

S. A. Sopov is with the Department of System Analysis and Operations Research, Siberian State Aerospace University named after academician M.F. Reshetnev, Krasnoyarsk, Russian Federation (e-mail: es_gt@mail.ru).

As we can see, GA follow the same scheme. The main differences arise at steps 3-4, where GA update information using such operations as selection, crossover and mutation. Step 3 also distinguishes the different EDA from one another.

Let us consider some EDA realizations.

The variable probabilities method (“MIVER”) was first proposed in [4] and improved in [5]. It is a stochastic optimization procedure for pseudo-Boolean problems that operates on a set (a population) of binary solutions. The distribution update strategy is based on the unit-value distribution of the best (and/or the worst) solution in a given population. A number of rigorous mathematical convergence proofs exist for some classes of optimization problems.

Population-based incremental learning (PBIL) was proposed in [6]. It combines evolutionary optimization with an artificial neural network learning technique. PBIL updates the distribution in the same way as “MIVER” does, according to the unit-value distribution of the best and the worst solutions.
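A PBIL-style update can be sketched as below. The text does not give the exact rule, so the form used here (a linear shift toward the best solution plus an extra shift away from the worst one where the two differ) follows the commonly cited description of PBIL and should be read as an assumption; the name `pbil_update` and the learning-rate values are illustrative only.

```python
def pbil_update(p, best, worst, lr=0.1, neg_lr=0.075):
    """Shift p toward `best`; where `best` and `worst` differ, shift a bit further."""
    new_p = []
    for pj, b, w in zip(p, best, worst):
        pj = (1.0 - lr) * pj + lr * b              # move toward the best solution
        if b != w:                                 # extra move away from the worst one
            pj = (1.0 - neg_lr) * pj + neg_lr * b
        new_p.append(pj)
    return new_p
```

Because only one or two solutions drive each update, the vector can commit to a value quickly, which is the greedy behavior discussed in the text.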

Both PBIL and “MIVER” use a greedy linear update strategy (taking into account only the best/worst solution), so their convergence is much more local than that of standard GA. Thus standard GA perform better on average on many complex optimization problems.

The probabilities-based GA was proposed in [7]. It is a further development of the ideas of the variable probabilities method and PBIL. In this algorithm the genetic operators are not replaced by distribution estimation; instead, the estimated distribution is used to model the genetic operators. The probabilities-based GA uses information from the whole population to update the distribution, so its search is as global as that of standard GA. Moreover, it has fewer parameters to tune and processes additional information about the search space (the probability distribution), so the probabilities-based GA outperforms standard GA on average.

We have investigated the performance of the algorithms using a visual representation of the distribution. Each component of the probability vector, which represents the distribution, varies during the run, so we can plot it on a diagram (Fig. 1-4).

As we can see, the probability value oscillates about $p = 0.5$ (initial steps, uniform distribution over the search space) and then converges to one (or to zero in the other case) as the algorithm converges to an optimal (or suboptimal) solution.

We can estimate and visualize the distribution of unit values for any binary GA, even if the GA does not use such a distribution during the run:

$$P = (p_1, \ldots, p_n), \quad p_j = P(x_j = 1) = \frac{1}{N} \sum_{i=1}^{N} x_j^i, \quad j = \overline{1, n} \tag{2}$$

where $N$ is the population size.
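The estimate in (2) is simply the per-position frequency of ones in the population. A minimal sketch, assuming the population is stored as a list of equal-length bit lists (the function name is illustrative):

```python
def estimate_probabilities(population):
    """Equation (2): p_j is the share of individuals whose j-th gene equals 1."""
    size = len(population)
    n = len(population[0])
    return [sum(ind[j] for ind in population) / size for j in range(n)]

population = [
    [1, 0, 1, 1],
    [1, 0, 0, 1],
    [1, 1, 0, 1],
    [0, 0, 0, 1],
]
print(estimate_probabilities(population))  # [0.75, 0.25, 0.25, 1.0]
```

Calling this once per generation and storing the results gives the per-component trajectories that the diagrams show.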

Since different algorithms use different distribution update strategies, we obtain different behavior of the probability dynamics during the run (Fig. 1-4).

Analyzing these diagrams for different optimization problems, we find that the components of the probability vector frequently converge to one if the optimal solution contains a unit value in the corresponding position (or to zero if the optimal solution contains a zero value). This means that if a probability converges to one (or zero), the value of the corresponding gene of the optimal solution is most probably equal to one (or zero). The higher the algorithm's performance, the more often the probabilities converge to the correct value.

Fig. 2: Evolution of the probability vector component for a typical run of PBIL.

Fig. 3: Evolution of the probability vector component for a typical run of the Probabilities-based GA.

Fig. 4: Evolution of the probability vector component for a typical run of Standard GA.

III. CONVERGENCE PREDICTION METHOD

We describe the convergence feature of stochastic binary algorithms as:

$$p_i \to \begin{cases} 1, & \text{if } x_i^{opt} = 1, \\ 0, & \text{if } x_i^{opt} = 0, \end{cases} \tag{3}$$

as the algorithm's iteration number tends to infinity. Here $x_i^{opt}$ is the value at the i-th position of the optimal solution of the problem, $i = \overline{1, n}$.

So we can use this feature to predict the optimal solution of the given problem. The convergence prediction method is:

1. Choose a binary stochastic algorithm (e.g. GA, PBIL or other), and set the iteration number $i = 1, \ldots, I$ and the number of independent algorithm runs $s = 1, \ldots, S$.

2. Collect the statistics $(p_j)_i^s$, $j = 1, \ldots, n$. Average $(p_j)_i^s$ over $s$ to obtain $(p_j)_i$. Determine the tendency of change of $p_j$.

3. Set $x_j^{opt} = \begin{cases} 1, & \text{if } p_j \to 1, \\ 0, & \text{if } p_j \to 0. \end{cases}$

Fig. 1: Evolution of the probability vector component for a typical run of the Variable probabilities method (“MIVER”).

A simple way to determine the convergence tendency is to use the following integral criterion at step 3:

$$x_j^{opt} = \begin{cases} 1, & \text{if } \sum_{i=1}^{I} \left( (p_j)_i - 0.5 \right) > 0, \\ 0, & \text{otherwise.} \end{cases} \tag{4}$$

The main idea is that the more often the probability value is greater than 0.5, the higher the probability that the corresponding value of the optimal solution is equal to one.
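The integral criterion (4) can be illustrated with a short sketch. It assumes that the averaged statistics are stored as a list of probability vectors, one per iteration, and that the comparison with zero is strict; the function name and the sample data are hypothetical.

```python
def predict_integral(history):
    """history[i][j] is (p_j)_i, the averaged probability of gene j at iteration i."""
    n = len(history[0])
    prediction = []
    for j in range(n):
        # Accumulated deviation of p_j from the neutral value 0.5.
        integral = sum(step[j] - 0.5 for step in history)
        prediction.append(1 if integral > 0 else 0)
    return prediction

history = [
    [0.5, 0.5, 0.5],
    [0.6, 0.4, 0.7],
    [0.8, 0.3, 0.4],
    [0.9, 0.1, 0.3],
]
print(predict_integral(history))  # [1, 0, 0]
```

In this toy data the third component starts above 0.5 and then falls below it; the criterion follows the accumulated deviation, which here is negative.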


Unfortunately, in many real-world problems a binary stochastic algorithm does not collect enough information at the early steps, and the gene value at a certain position is equal to one (or zero) for almost every individual. At the final stage, however, the algorithm can find a much better solution with inverted gene values, which means that the probability vector values change their convergence direction.

We propose the following modification of the convergence prediction algorithm to solve the above-mentioned problem:

1. Set the prediction step $K$.

2. Every $K$ iterations use the collected statistics $P_i$, $i = \overline{1, N_K}$, $N_K = t \cdot K$, $t = 1, 2, \ldots$, to evaluate the probability vector change: $\Delta P_i = P_i - P_{i-1}$.

3. Set the weight of every iteration according to its number: $w_i = \frac{2i}{N_K (N_K + 1)}$, $i = 1, \ldots, N_K$.

4. Evaluate the weighted change of the probability vector as: $\Delta\tilde{P} = (\Delta\tilde{p}_j) = \sum_{i=1}^{N_K} w_i \cdot \Delta P_i$.

5. Set the optimal solution: $x_j^{opt} = \begin{cases} 1, & \text{if } \Delta\tilde{p}_j > 0, \\ 0, & \text{otherwise.} \end{cases}$

6. Add the predicted optimal solution to the current population. Stop the run and accept the predicted solution as optimal, or continue the run to find a better solution.

The main idea of this modification is that the probability values at later iterations have greater weights, as the algorithm collects more information about the search space. The weights should satisfy $w_{i+1} > w_i$ and $\sum_{i=1}^{N_K} w_i = 1$.
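Steps 2-5 of the modified method can be sketched as follows. The sketch assumes linearly growing weights of the form 2i / (N_K (N_K + 1)), which increase with the iteration number and sum to one, and it assumes that the stored history starts with the initial vector P_0 so that the first change is defined. The function name and the sample data are hypothetical.

```python
def predict_weighted(history):
    """history[0] is the initial vector P_0; history[i] is P_i for i = 1..N_K."""
    n_k = len(history) - 1
    n = len(history[0])
    norm = n_k * (n_k + 1)
    weighted = [0.0] * n
    for i in range(1, n_k + 1):
        weight = 2.0 * i / norm                         # step 3: later iterations weigh more
        for j in range(n):
            delta = history[i][j] - history[i - 1][j]   # step 2: change of p_j
            weighted[j] += weight * delta               # step 4: weighted sum
    return [1 if value > 0 else 0 for value in weighted]  # step 5

history = [
    [0.5, 0.5],
    [0.8, 0.4],
    [0.9, 0.5],
    [0.6, 0.7],
    [0.3, 0.9],
]
print(predict_weighted(history))  # [0, 1]
```

The first component rises early and then falls; because the late changes carry more weight, the prediction follows the final direction of convergence rather than the early one.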

IV. CONVERGENCE AND DECEPTIVE PROBLEMS

Practice has shown that GA are very effective on many real-world problems, so GA researchers need to construct special test functions that pose a real challenge for a given algorithm. In the field of GA, such test functions are deceptive functions [9, 10].

The design of deceptive functions is based upon the building blocks hypothesis [1]: such problems are GA-hard (GA-difficult) or deceptive [11].

It should be noted that deceptive trap functions are a class of specially constructed functions that take into account how genetic search works, so standard GA in principle have no opportunity to escape such traps. In this work we supply GA with an additional operation, which can be called a strong inversion.

Inversion takes place in natural systems. Holland [12] describes this operation as an inversion of the gene order in a selected segment of a chromosome. The strong inversion for the binary representation is a bit inversion of all positions in a chromosome.

Obviously, in a real-world problem we cannot identify whether a local optimum is a trap, so the strong inversion can destroy all useful genes in a chromosome. To avoid this harmful effect we should use the strong inversion with a very low probability (e.g. $p_{invert} \in \{0.001; 0.01\}$), in the manner of mutation. This means that if there are no traps in the search space, the inverted solution will probably have low fitness and its genes will be eliminated from the population. If the GA falls into a trap, the inverted solution provides a total escape (for simple problems) or new genes with better fitness.
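The strong inversion operator is simple enough to show directly. The sketch below assumes that inversion is applied to each individual independently with probability `p_invert`, in the same way as a mutation operator; the function names are illustrative.

```python
import random

def strong_inversion(chromosome):
    """Flip every bit of the chromosome."""
    return [1 - gene for gene in chromosome]

def apply_strong_inversion(population, p_invert=0.01, rng=random):
    """Invert each individual independently with a small probability p_invert."""
    return [
        strong_inversion(ind) if rng.random() < p_invert else list(ind)
        for ind in population
    ]

print(strong_inversion([1, 0, 0, 1, 1]))  # [0, 1, 1, 0, 0]
```

With `p_invert` between 0.001 and 0.01, most generations leave the population untouched, so the operator rarely disturbs useful genes when no trap is present.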

V. TEST PROBLEMS AND NUMERICAL EXPERIMENTS

We have tested the proposed convergence prediction algorithms with the discussed EDA and standard GA on a representative set of complex optimization problems.

Continuous optimization problems. We have included 12 internationally known test problems, 6 of which are from the test function set approved at the Special Session on Real-Parameter Optimization, CEC’05 [8]. The test problem set contains the following functions: Rastrigin and Shifted Rotated Rastrigin, Rosenbrock, Griewank, De Jong 2, “Sombrero” (also known as “Mexican Hat”), Shekel, Katkovnik, Additive Potential function, Multiplicative Potential function and 2 one-dimensional multiextremal functions.

Deceptive trap functions. A variety of deceptive problems are based on the unitation function, which is defined as the number of ones in a chromosome. We have included the following functions in the test set: Trap on Unitation (with Ackley, Whitley and Goldberg parameters), Trap-4, Trap-5, DEC2TRAP, Liepins and Vose.
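To make the notion of a trap on unitation concrete, the sketch below uses a commonly cited trap-k form in which the all-ones block is optimal and every other block scores higher the fewer ones it contains. The exact parameterizations of the test functions listed above are not given in the text, so this form and the function names are assumptions for illustration only.

```python
def unitation(chromosome):
    """Number of ones in the chromosome."""
    return sum(chromosome)

def trap_k(block):
    """Deceptive trap on one block: all ones is optimal, otherwise fewer ones score higher."""
    k = len(block)
    u = unitation(block)
    return k if u == k else k - 1 - u

def concatenated_trap(chromosome, k=5):
    """Sum of trap_k over consecutive, non-overlapping blocks of k bits."""
    return sum(trap_k(chromosome[i:i + k]) for i in range(0, len(chromosome), k))

print(trap_k([1, 1, 1, 1, 1]))  # 5
print(trap_k([0, 0, 0, 0, 0]))  # 4
print(trap_k([1, 1, 1, 1, 0]))  # 0
```

The all-zeros block is the deceptive attractor: it is the bit-wise complement of the optimum, which is why a full bit inversion can move a trapped solution directly onto it.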

To estimate the efficiency of the algorithms we have carried out a series of independent runs of each algorithm on each test problem. We used reliability as the efficiency criterion. Reliability is the percentage of successful runs (i.e. runs in which the exact solution of the problem was found) out of the total number of independent runs.
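The reliability measure can be computed as in the sketch below. It assumes that each run reports the best objective value it found and that the known optimum is available for comparison; the function name and the tolerance parameter are illustrative.

```python
def reliability(best_values, optimum, tol=0.0):
    """Percentage of runs whose best found value matches the known optimum within tol."""
    successes = sum(1 for value in best_values if abs(value - optimum) <= tol)
    return 100.0 * successes / len(best_values)

print(reliability([5, 5, 4, 5], optimum=5))  # 75.0
```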

We have set the following parameter values for the algorithms:

Accuracy (binary solution length) – 100,

Population size – 200,

Generation number – 500,

Independent run number – 100.

The special parameters of each algorithm (e.g. selection in GA, learning rate in PBIL and others) are chosen to be efficient on average over the function set.

Table 1 presents the efficiency estimation results for the modified convergence prediction algorithm.

As the probabilities-based GA outperforms standard GA, PBIL and MIVER, below we present results only for the probabilities-based GA.

The investigation shows that the probabilities-based GA cannot find the global optimum on the given set of test functions when the chromosome length is greater than 20 bits. The reliability is equal to zero even with fine tuning of the algorithm parameters (the same holds for the other algorithms). The probabilities-based GA rapidly falls into the local trap optimum and cannot overcome its attraction.


Next we investigated the probabilities-based GA with the strong inversion and the prediction algorithm. The prediction is performed every 10 generations, and the predicted solution replaces the worst individual. At the final generation we perform the complete prediction over the whole run.
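The injection step described above can be sketched as follows. The sketch assumes a maximization problem and a generation counter that starts at 1; the function names are hypothetical and the prediction itself is taken as given.

```python
def inject_prediction(population, fitness, predicted):
    """Return a copy of the population with its worst individual replaced by `predicted`."""
    worst = min(range(len(population)), key=lambda i: fitness(population[i]))
    updated = [list(ind) for ind in population]
    updated[worst] = list(predicted)
    return updated

def should_predict(generation, step=10):
    """True at generations 10, 20, 30, ... when counting from 1."""
    return generation > 0 and generation % step == 0

population = [[1, 1, 0], [0, 0, 0], [1, 0, 0]]
print(inject_prediction(population, sum, [1, 1, 1]))  # [[1, 1, 0], [1, 1, 1], [1, 0, 0]]
```

Replacing only the worst individual keeps the rest of the population intact, so an incorrect prediction costs a single slot.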

TABLE I

THE RELIABILITY ESTIMATION FOR MODIFIED CONVERGENCE PREDICTION ALGORITHM

Problem

Rastrigin

Shifted Rotated Rastrigin

Rosenbrock

Griewank

De Jong 2

Sombrero

Shekel

Katkovnik

Additive potential

Multiplicative potential

One-dimensional N1

One-dimensional N2

88

Prob.based GA

98

Standard

GA

94

28

40

72

50

24

10

8

22

28

36

41

51

28

55

62

100

80

100

70

60

30

100

38

58

22

56

70

100

76

100

98

100

28

100

86

98

70

98

75

100

MIVER

PBIL

61

86

100

80

100

TABLE II

THE RELIABILITY AND AVERAGE EVALUATION NUMBER ESTIMATION OF THE PROBABILITIES-BASED GA WITH INVERSION AND PREDICTION FOR DECEPTIVE AND TRAP FUNCTIONS

| Problem | Prob.-based GA with inversion: reliability | Prob.-based GA with inversion: aver. evaluation number | Prob.-based GA with inversion and prediction: reliability | Prob.-based GA with inversion and prediction: aver. evaluation number |
|---|---|---|---|---|
| Ackley Trap | 42 | 37862 | 46 | 28732 |
| Whitley Trap | 41 | 42891 | 40 | 38701 |
| Goldberg Trap | 53 | 27771 | 55 | 25981 |
| Trap-4 | 61 | 31463 | 62 | 23712 |
| Trap-5 | 38 | 38332 | 45 | 32778 |
| DEC2TRAP | 78 | 15712 | 98 | 15899 |
| Liepins and Vose | 51 | 39871 | 50 | 33520 |

The results are shown in Table 2. As we can see, the modification with the strong inversion is able to overcome the attraction of the trap, so the algorithm can find the global optimum. In the cases where the probabilities-based GA with prediction is inferior, its reliability is still high and has comparable values. When the predicted solution is added to the population every 10 generations, the probabilities-based GA solves the given problem with fewer evaluations of the objective function.

VI. CONCLUSIONS

Stochastic search algorithms are not a random walk through the search space; they exhibit purposeful behavior. We have demonstrated in this paper that stochastic search procedures with binary representation (GA, PBIL and others) can be analyzed through visualization of the dynamics of their estimated distribution. We have proposed an efficient method to predict the point of convergence (the optimal solution) using the results of the distribution analysis.

Two approaches were investigated: a simple one using the integral criterion and a more complex strategy with contribution weights. As the numerical experiments show, the modified convergence prediction provides better performance, even for algorithms with a greedy search strategy and local behavior.

Clearly, the proposed prediction strategy is straightforward, and there are situations that lead to incorrect prediction. We have demonstrated that a slight modification of the base algorithms can overcome such a GA-hard problem as deceptiveness.

In further work we intend to develop the prediction method and its implementations with other EDA and evolutionary algorithms and to investigate this approach on a variety of test problems that are challenging for search algorithms.

REFERENCES

[1] Goldberg, D.E., 1989. Genetic Algorithms in Search, Optimization and Machine Learning, Addison-Wesley.

[2] Muehlenbein, H., Paas, G., 1996. From Recombination of Genes to the Estimation of Distribution. Part 1, Binary Parameter, Lecture Notes in Computer Science 1141, Parallel Problem Solving from Nature, pp. 178-187.

[3] Larraenaga, P., Lozano, J.A., 2001. Estimation of Distribution Algorithms: A New Tool for Evolutionary Computation, Kluwer Academic Publishers.

[4] Antamoshkin, A.N., 1986. Brainware for search pseudoboolean optimization, Pr. of 10th Prague Conf. on Inf. Theory, Stat. Dec. Functions, Random Processes - Prague: Academia.

[5] Antamoshkin, A.N., Saraev, V.N., 1987. The method of variable probabilities, in Problemy sluchaynogo poiska (Problems of random search), Riga: Zinatne (in Russian).

[6] Baluja, S., 1994. Population-Based Incremental Learning: A Method for Integrating Genetic Search Based Function Optimization and Competitive Learning, Technical Report CMU-CS-94-163, Carnegie Mellon University.

[7] Semenkin, E.S., Sopov, E.A., 2005. Probabilities-based evolutionary algorithms of complex systems optimization, In Intelligent Systems (AIS’05) and Intelligent CAD (CAD-2005), Moscow, FIZMATLIT (in Russian, abstract in English).

[8] Suganthan, P.N., Hansen, N. et al., 2005. Problem Definitions and Evaluation Criteria for the CEC 2005 Special Session on Real-Parameter Optimization, Technical Report, Nanyang Technological University, Singapore and KanGAL Report Number 2005005, Kanpur Genetic Algorithms Laboratory, IIT Kanpur.

[9] Deb, K., Goldberg, D.E., 1992. Analysing deception in trap functions. In Foundations of Genetic Algorithms 2, D. Whitley (Ed.), San Mateo: Morgan Kaufmann.

[10] Whitley, L. D., 1991. Fundamental principles of deception in genetic search. In Foundations of Genetic Algorithms, G. J. E. Rawlins (Ed.), San Mateo, CA: Morgan Kaufmann.

[11] Wilson, S.W., 1991. GA-easy does not imply steepest-ascent optimizable. Proceedings of the Fourth International Conference of Genetic Algorithms (pp. 85-89). San Mateo, CA: Morgan Kaufmann.

[12] Holland, J.H., 1975. Adaptation in natural and artificial systems, Ann Arbor, MI: University of Michigan Press.