Towards Simulation of Parallel File System Scheduling Algorithms with PFSsim

Yonggang Liu, Renato Figueiredo
Department of Electrical and Computer Engineering, University of Florida, Gainesville, FL
{yonggang,renato}@acis.ufl.edu

Dulcardo Clavijo, Yiqi Xu, Ming Zhao
School of Computing and Information Sciences, Florida International University, Miami, FL
{darte003,yxu006,ming}@cis.fiu.edu

Abstract

Many high-end computing (HEC) centers and commercial data centers adopt parallel file systems (PFSs) as their storage solutions. As the concurrent applications on PFSs grow in both number and variety, scheduling algorithms for data access are expected to play an increasingly important role in PFS service quality. However, thoroughly studying different scheduling mechanisms on peta- or exascale systems is costly and disruptive, and the complexity of implementing scheduling policies and gathering experimental data makes such tests even harder. While a few parallel file system simulation frameworks have been proposed (e.g., [15,16]), they do not target the evaluation of scheduling algorithms. In this paper, we propose PFSsim, a simulator designed for evaluating I/O scheduling algorithms in PFSs. PFSsim is a trace-driven simulator based on the network simulation framework OMNeT++ and the disk device simulator DiskSim. A proxy-based scheduler module is implemented for scheduling algorithm deployment, and the system parameters are highly configurable. We have simulated PVFS2 on PFSsim, and the experimental results show that PFSsim is capable of simulating the system characteristics and capturing the effects of the scheduling algorithms.

1. Introduction

In recent years, parallel file systems (PFSs) such as Lustre[1], PVFS2[2], Ceph[3], and PanFS[4] have become increasingly popular in high-end computing (HEC) centers and commercial data centers. For instance, as of April 2009, half of the world’s top 30 supercomputers used Lustre[5] as their storage solution. PFSs outperform traditional distributed file systems such as NFS[6] in many application domains. An important reason is that they adopt the object-based storage model[7] and stripe large data accesses into smaller storage objects distributed across the storage system for high-throughput parallel access and load balancing.

In HEC and data center systems, large numbers of applications often access data from a distributed storage system with a variety of Quality-of-Service requirements[8]. As such systems are predicted to continue to grow in terms of both resources and concurrent applications, I/O scheduling strategies that allow performance isolation are expected to become increasingly important. Unfortunately, most PFSs are not able to manage I/O flows on a per-data-flow basis. The scheduling modules that come with the PFSs are typically configured to fulfill an overall performance goal rather than the quality of service for each application.

There is a considerable amount of existing work[9,10,11,12,13,24] on the problem of achieving differentiated service at a centralized management point. Nevertheless, the applicability of these algorithms in the context of parallel file systems has not been thoroughly studied. Challenges in HEC environments include the facts that applications issue data flows from a potentially large number of clients and that parallel checkpointing of the applications becomes increasingly important to achieve the desired levels of reliability. In such environments, centralized scheduling algorithms can be limiting from the scalability and availability standpoints. To the best of our knowledge, few decentralized I/O scheduling algorithms exist for distributed storage systems, and those that have been proposed (e.g., [14]) may still need verification of their suitability for PFSs, which are a subset of distributed storage systems.

While PFSs are widely adopted in the HEC field, research on the corresponding scheduling algorithms is not easy. Two key factors prevent testing on real systems: 1) scheduler testing on a peta- or exascale file system requires complex deployment and experimental data gathering, which makes it costly; 2) experiments with the storage resources used in HEC systems can be very disruptive, as deployed production systems are typically expected to have high utilization. In this context, a simulator that allows developers to test and evaluate different scheduler designs for HEC systems is very valuable. It frees the developers from complicated deployment on real systems and cuts their algorithm development cost. Even though simulation results are bound to have discrepancies compared to real performance, they can offer very useful insights into performance trends and allow the design space to be pruned before implementation and evaluation on a real testbed or a deployed system.

In this paper, we propose a Parallel File System simulator, PFSsim. Our design objectives for this simulator are: 1) Ease of use: scheduling algorithms, PFS characteristics and network topologies can be easily configured at compile time; 2) Fidelity: it can accurately model the effects of HEC workloads and scheduling algorithms; 3) Ubiquity: the simulator is flexible enough to simulate a large variety of storage and network characteristics; 4) Scalability: it should be able to simulate up to thousands of machines in a medium-scale scheduling algorithm study.

The rest of the paper is organized as follows. In

section 2, we introduce the related work on PFS

simulation. In section 3, we talk about the PFS and

scheduler abstractions. In section 4, we illustrate the

implementation details of PFSsim. In section 5, we

show the validation results. In the last section, we

conclude our work and discuss the future work.

2. Related Work

To the best of our knowledge, two parallel file system simulators have been presented in the literature: the IMPIOUS simulator proposed by E. Molina-Estolano et al.[15], and the simulator developed by P. Carns et al.[16].

The IMPIOUS simulator was developed for fast evaluation of PFS designs. It simulates the parallel file system abstraction with user-provided file system specifications, which include data placement strategies, replication strategies, locking disciplines and cache strategies. In this simulator, the client modules read the I/O traces and the PFS specifications, and then directly issue the requests to the Object Storage Device (OSD) modules according to the configurations. The OSD modules can be simulated with the DiskSim simulator[17] or the “simple disk model”; the former provides higher accuracy and the latter provides higher efficiency. For the goal of fast and efficient simulation, IMPIOUS simplifies the PFS model by omitting the metadata server modules and the corresponding communications, and since its focus is not on scheduling strategies, it does not support explicit deployment of scheduling policies.

The other PFS simulator is described in the paper by P. H. Carns et al. on PVFS2 server-to-server communication mechanisms[16]. This simulator is used for testing the overhead of metadata communications, specifically in PVFS2. Thus, a detailed TCP/IP-based network model is implemented. The authors employed the INET extension[18] of the OMNeT++ discrete event simulation framework[19] to simulate the network. The simulator also uses a “bottom-up” technique to simulate the underlying systems (PVFS2 and Linux), which achieves high fidelity but compromises on flexibility.

We take inspiration from these related systems and develop an expandable, modularized design where the emphasis is on the scheduler. Based on this goal, we use DiskSim to simulate physical disks in detail, and we use the extensible OMNeT++ framework for the network simulation and the handling of simulated events. While we currently use a simple networking model, as pointed out above, OMNeT++ supports INET extensions that can be incorporated to enable precise network simulations in our simulator, at the expense of longer simulation times.

3. System Modeling

3.1. Abstraction of Parallel File Systems

In this subsection, we first describe the similarities among Parallel File Systems (PFSs), and then discuss the differences among them.

Considering all the commonly used PFSs, we find that the majority of them share the same basic architecture:

1. There are one or more data servers, which are built on local file systems (e.g., Lustre, PVFS2) or block devices (e.g., Ceph). The application data are stored in the form of fixed-size PFS objects, whose IDs are unique in a global name space. A file locking feature is enabled in some PFSs (e.g., Lustre, Ceph);

2. There are one or more metadata servers, which typically manage the mappings from the PFS file name space to the PFS storage object name space, PFS object placement, as well as the metadata operations;

3. The PFS clients run on the system users’ machines; they provide the interface (e.g., POSIX) for users and user applications to access the PFS.

For a general PFS, a file access request (read/write operation) goes through the following steps:

1. Receiving the file I/O request: By calling an API, the system user sends its request {operation, offset, file_path, size} to the PFS client running on the user’s machine.

2. Object mapping: The client maps the tuple {offset, file_path, size} to a series of objects that contain the file data. This information is either available locally or requires the client to query the metadata server.

3. Locating the objects: The client determines which data servers hold the objects. Typically each data server stores a static set of object IDs, and this mapping information is often available on the client.

4. Data transmission: The client sends data I/O requests to the designated data servers with the information {operation, object_ID}. The data servers reply to the requests, and the data I/O starts.

Note that we have omitted the access permission check (often conducted on the metadata server) and the locking schemes (conducted on either the metadata server or the data server).
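The object mapping and locating steps above can be illustrated with a minimal sketch. It assumes fixed-size stripe units placed round-robin over the data servers, which is only one possible layout; the names `StripePiece` and `mapRequest` are hypothetical and do not come from any PFS or from PFSsim.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// One piece of a file request that falls inside a single stripe unit.
struct StripePiece {
    int      server;        // index of the data server holding the piece
    uint64_t unitIndex;     // index of the stripe unit within the file
    uint64_t offsetInUnit;  // byte offset inside that stripe unit
    uint64_t length;        // number of bytes in this piece
};

// Split {offset, size} into per-unit pieces. Assumption (illustrative only):
// stripe units of stripeUnit bytes are placed round-robin over numServers.
std::vector<StripePiece> mapRequest(uint64_t offset, uint64_t size,
                                    uint64_t stripeUnit, int numServers) {
    assert(stripeUnit > 0 && numServers > 0);
    std::vector<StripePiece> pieces;
    uint64_t pos = offset;
    const uint64_t end = offset + size;
    while (pos < end) {
        const uint64_t unit   = pos / stripeUnit;
        const uint64_t inUnit = pos % stripeUnit;
        const uint64_t len    = std::min(stripeUnit - inUnit, end - pos);
        const int server = static_cast<int>(unit % static_cast<uint64_t>(numServers));
        pieces.push_back(StripePiece{server, unit, inUnit, len});
        pos += len;
    }
    return pieces;
}
```

For example, a 1 MB request at offset 0 with a 64 KB stripe unit and 4 servers would yield 16 pieces, 4 per server, under this assumed layout.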

Although different PFSs follow the same architecture, they differ from each other in many ways, such as data distribution methodology, metadata storage pattern, user API, etc. Among these, we consider four aspects to have significant effects on the I/O performance: metadata management, data placement strategy, data replication model and data caching policy. Thus, to construct a simulator for testing scheduling policies on various PFSs, these aspects should be faithfully simulated.

It has been shown that, at least in some cases, metadata operations make up a large proportion of file system workloads[27]. Because they also lie on the critical path, metadata management can be very important to the overall I/O performance. Different PFSs use different techniques to manage metadata in order to achieve different levels of metadata access speed, consistency and reliability. For example, Ceph adopts the dynamic subtree partitioning technique[25] to distribute the metadata onto multiple metadata servers for high access locality and cache efficiency. Lustre deploys two metadata servers: one “active” server and one “standby” server for failover. In PVFS2, metadata are distributed onto the data servers to prevent a single point of failure and a performance bottleneck. By tuning the metadata server module and the network topology in our simulator, users are able to set up the metadata storage, caching and access patterns.

Data placement strategies are designed with the basic goal of achieving high I/O parallelism and server utilization/load balancing. However, different PFSs still vary significantly from each other because of their different usage contexts. Ceph targets large-scale storage systems that potentially have large metadata communication overhead. Thus, Ceph uses a local hashing function and the CRUSH (Controlled Replication Under Scalable Hashing) technique[26] to map the object IDs to the corresponding OSDs in a distributed manner, which avoids metadata communication during data location lookup and reduces the update frequency of the system map. In contrast, aiming to serve users with higher trust and skills, PVFS2 provides flexible data placement options, and it even gives users the ability to store data on user-specified data servers. In our simulation, data placement strategies are set up on the client modules, because this approach allows the clients to simulate both distributed-style and centralized-style data location lookup.
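To make the two lookup styles concrete, here is a small sketch of how a client-side placement strategy could be expressed as a pluggable function. Everything here is hypothetical: the plain modulo is only a stand-in for a real placement function such as CRUSH, and the table stands in for mapping information that would come from a metadata server.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <unordered_map>
#include <utility>

using ObjectId    = uint64_t;
using PlacementFn = std::function<int(ObjectId)>;  // returns a data server index

// Distributed style: the client computes the server locally.
// A modulo is used purely as a placeholder for a real hash-based scheme.
PlacementFn makeComputedPlacement(int numServers) {
    assert(numServers > 0);
    return [numServers](ObjectId id) {
        return static_cast<int>(id % static_cast<uint64_t>(numServers));
    };
}

// Centralized style: the client consults a table that, in a real system,
// would be obtained from the metadata server. Returns -1 if unknown.
PlacementFn makeTablePlacement(std::unordered_map<ObjectId, int> table) {
    return [table = std::move(table)](ObjectId id) {
        auto it = table.find(id);
        return it == table.end() ? -1 : it->second;
    };
}
```

Because both strategies share one signature, a client module could switch between them through configuration without changing the code that issues requests.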

Data replication and failover models also affect the I/O performance, because in systems with data replication, data are written to multiple locations, which may prolong the writing process. For example, with data replication enabled in Ceph, every write operation is committed to both the primary OSD and the replica OSDs inside a placement group. Though Ceph maintains parallelism when forwarding the data to the replica OSDs, the costs of data forwarding and synchronization are still non-negligible. Lustre and PVFS2 do not implement explicit data replication models, assuming that replication is done by the underlying hardware.

Data caching on the server side and the client side may improve the PFS I/O performance, but the coherency of client-side caches must then also be managed. PanFS data servers implement write-data caching that aggregates multiple writes for efficient transmission and data layout at the OSDs, which may increase the disk I/O rate. Ceph implements the O_LAZY flag for the open operation at the client side, which allows applications to explicitly relax the usual coherency requirements for a shared-write file; this facilitates HPC applications that often have concurrent accesses to different parts of a file. Some PFSs, such as PVFS, do not implement client caching by default. We have not implemented the data caching components in PFSsim, and we plan to implement them in the near future.

3.2. Abstraction of PFS Scheduler

trace

Stripping

strategy

trace

...

output

client

client

Simulated network

Scheduling

Algorithm

Metadata

server

Metadata

server

...

Schedulers

local file

system

local file

system

local file

system

Disk

Disk

Disk

...

OMNeT++

DiskSim

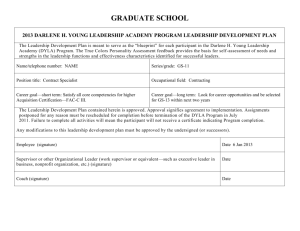

Figure 1. The architecture of PFSsim. The two big dash-line boxes mean the entities inside are

simulated by OMNeT++ platform and DiskSim platform.

Among the many centralized and decentralized scheduling algorithms proposed for distributed storage systems, a large variety of network fabrics and deployment locations are chosen. For instance, in [14], the schedulers are deployed on the Coordinators, which reside between the system clients and the storage Bricks. In [24], the scheduler is implemented on a centralized broker, which captures all the system I/O and dispatches it to the disks. In [30], the scheduling policies are deployed on the network gateways, which serve as the storage system portals to the clients. In [29], the scheduling policies are deployed on per-server proxies, which intercept I/O and virtualize the data servers to the system clients.

In our simulator, the system network is simulated with high flexibility: users are able to deploy their own network fabric with the basic or user-defined devices. The schedulers can also be created and positioned at any part of the network. For more advanced designs, inter-scheduler communication can also be enabled. The scheduling algorithms are to be defined by the PFSsim users, and abstract APIs are exposed to enable the schedulers to keep track of the data server status.

4. Simulator Implementation

4.1. Parallel File System Scheduling Simulator

Based on the abstractions described above, we have developed the Parallel File System scheduling simulator (PFSsim) on top of the discrete event simulation framework OMNeT++ 4.0 and the disk model simulator DiskSim 4.0.

In our simulator, the client modules, metadata server modules, scheduler modules and local file system modules are simulated by OMNeT++. OMNeT++ also simulates the network for client-to-metadata-server, client-to-data-server, and scheduler-to-scheduler communications. DiskSim is employed for the detailed simulation of disk models; one DiskSim process is deployed for each independent disk module being simulated. For details, please refer to Figure 1.

The simulation input is provided in the form of trace files that contain the I/O requests of the system users. Upon reading an I/O request from the input file, the client creates a QUERY object and sends it to the metadata server through the simulated network. On the metadata server, the corresponding data server IDs and the striping information are added to the QUERY object, which is sent back to the client. The client stripes the I/O request according to the striping information, and for each stripe the client issues a JOB object to the designated data server through the simulated network.

The JOB object is received by the scheduler module on the data server (the detailed design of the scheduler is covered in the following subsection). When a JOB object is dispatched by the scheduler, the local file system module maps the logical address of the job to the physical block number. Finally, the job information is sent to the DiskSim simulator via inter-process communication over a network connection (currently, TCP).

When a job is finished on DiskSim, its ID and finish time are sent back to the data server module on OMNeT++. The corresponding JOB object is then found in the local record. After the “finish time” is written into the JOB object, the object is sent to the client. Finally, the client writes the job information into the output file, and the JOB object is destroyed.
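The bookkeeping in the last step (look up the job by ID in a local record, then stamp its finish time) can be sketched as follows. `JobRecord` and `JobTable` are hypothetical stand-ins; the actual JOB object in PFSsim is not specified in the text and likely carries more fields.

```cpp
#include <cassert>
#include <cstddef>
#include <unordered_map>

// Hypothetical, simplified view of a job as tracked on a data server.
struct JobRecord {
    int    id;
    double issueTime;   // virtual time at which the job was issued
    double finishTime;  // filled in when the disk simulator reports completion
};

// Local record of outstanding jobs, keyed by job ID.
class JobTable {
public:
    void record(int id, double issueTime) {
        jobs_[id] = JobRecord{id, issueTime, -1.0};
    }

    // Called when the disk simulator reports {id, finishTime}.
    // Stamps the finish time, removes the job from the record and returns it.
    JobRecord finish(int id, double finishTime) {
        auto it = jobs_.find(id);
        assert(it != jobs_.end());  // the job must have been recorded earlier
        JobRecord done = it->second;
        done.finishTime = finishTime;
        jobs_.erase(it);
        return done;
    }

    std::size_t pending() const { return jobs_.size(); }

private:
    std::unordered_map<int, JobRecord> jobs_;
};
```

The returned record is what would be sent back to the client, which can then log the job information to the output file.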

4.2. Scheduler Implementation

To provide an interface for users to implement their own scheduling algorithms, we provide a base class for all algorithms, so that a scheduling algorithm can be implemented by inheriting from this class. The base class mainly contains the following functions:

void jobArrival(JOB * job);

void jobFinish(int ID);

void getSchInfo(Message * msg);

JOB refers to the JOB object described in the previous subsection. Message is defined by the schedulers for exchanging scheduling information. The jobArrival function is called by the data server when a new job arrives at the scheduler. The jobFinish function is called when a job has just finished its service phase. The getSchInfo function is called when the data server receives a scheduler-to-scheduler message. Simulator users can override these functions to specify the behaviors of their algorithms.

The data server module also exports interfaces to

the schedulers. Two important functions exported by

the DataServer class are:

bool dispatchJob(JOB * job);

bool sendInfo(int ID, Message * msg);

The dispatchJob function is called for dispatching

jobs to the resources. The sendInfo function is called

for sending scheduling information to other schedulers.
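As an illustration of how these interfaces might fit together, the sketch below derives a simple FIFO scheduler with a bounded number of outstanding jobs. Only the five function signatures are taken from the text; the class name `Scheduler`, the minimal `JOB`, `Message` and `DataServer` definitions, and the FIFO policy itself are assumptions made so the example is self-contained.

```cpp
#include <cassert>
#include <deque>
#include <vector>

// Hypothetical stand-ins; the real PFSsim types are richer.
struct JOB { int id; };
struct Message {};

class DataServer {
public:
    bool dispatchJob(JOB* job) { dispatched.push_back(job->id); return true; }
    bool sendInfo(int /*ID*/, Message* /*msg*/) { return true; }
    std::vector<int> dispatched;  // dispatch order, kept for inspection
};

// Base class with the three callbacks named in the text.
class Scheduler {
public:
    virtual ~Scheduler() = default;
    virtual void jobArrival(JOB* job) = 0;
    virtual void jobFinish(int ID) = 0;
    virtual void getSchInfo(Message* msg) = 0;
};

// FIFO policy that keeps at most `depth` jobs outstanding at the server.
class FifoScheduler : public Scheduler {
public:
    FifoScheduler(DataServer& server, int depth) : server_(server), depth_(depth) {
        assert(depth > 0);
    }
    void jobArrival(JOB* job) override {
        waiting_.push_back(job);
        dispatchWhilePossible();
    }
    void jobFinish(int /*ID*/) override {
        if (outstanding_ > 0) --outstanding_;
        dispatchWhilePossible();
    }
    void getSchInfo(Message* /*msg*/) override {}  // no inter-scheduler state

private:
    void dispatchWhilePossible() {
        while (outstanding_ < depth_ && !waiting_.empty()) {
            if (!server_.dispatchJob(waiting_.front())) break;
            waiting_.pop_front();
            ++outstanding_;
        }
    }
    DataServer& server_;
    int depth_;
    int outstanding_ = 0;
    std::deque<JOB*> waiting_;
};
```

A decentralized algorithm would additionally call `sendInfo` from these callbacks and update its state in `getSchInfo`.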

4.3. TCP Connection between OMNeT++ and DiskSim

One challenge of building a system with multiple integrated simulators is virtual time synchronization. Each simulator instance runs its own virtual time, and each one has a large number of events emerging every second. The synchronization, if performed inefficiently, can become a bottleneck for the simulation speed.

Since DiskSim has the functionality of getting the

time stamp for the next event, OMNeT++ can always

proactively synchronize with every DiskSim instance

at the provided time stamp.
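One way to picture this proactive synchronization is a coordinator that asks every disk instance for its next event time and advances to the earliest one. The sketch below is a simplified model under that assumption; `DiskInstance` and `nextSyncPoint` are hypothetical and do not reflect the DiskSim or OMNeT++ APIs.

```cpp
#include <algorithm>
#include <cassert>
#include <functional>
#include <limits>
#include <queue>
#include <vector>

// Stand-in for one disk simulator process; only event timestamps are modeled.
class DiskInstance {
public:
    void schedule(double t) {
        assert(t >= 0.0);
        events_.push(t);
    }
    // Timestamp of the next pending event, or +infinity if there is none.
    double nextEventTime() const {
        return events_.empty() ? std::numeric_limits<double>::infinity()
                               : events_.top();
    }
    // Handle every event with timestamp <= t; returns how many were handled.
    int advanceTo(double t) {
        int handled = 0;
        while (!events_.empty() && events_.top() <= t) {
            events_.pop();
            ++handled;
        }
        return handled;
    }

private:
    // Min-heap ordered by timestamp.
    std::priority_queue<double, std::vector<double>, std::greater<double>> events_;
};

// Earliest virtual time at which the coordinator must synchronize: the
// minimum of its own next event and the next event of every disk instance.
double nextSyncPoint(const std::vector<DiskInstance>& disks, double nextLocalEvent) {
    double t = nextLocalEvent;
    for (const DiskInstance& d : disks) t = std::min(t, d.nextEventTime());
    return t;
}
```

Synchronizing only at these time stamps avoids polling each instance at every step of virtual time.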

Currently we have implemented TCP connections between the OMNeT++ simulator and the DiskSim instances. Even though we have optimized the synchronization efficiency, we found that the TCP connection cost is still a bottleneck for the simulation speed. In future work, we plan to introduce more efficient synchronization mechanisms, such as shared memory.

4.4. Local File System Simulation

In our simulator, the local file system is a simple file system that provides a static mapping between files and disk blocks. This model is inaccurate, but we did not implement a fully simulated local file system because block allocation in a local file system (e.g., EXT4 [22]) heavily depends on the context of storage usage, which is out of our control. This simple file system model guarantees that data that are adjacent in the logical space are also adjacent on the physical storage. In the future, we plan to implement a general file system with cache functionality enabled.
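A static mapping with this adjacency property can be as simple as a fixed base block per file plus a linear offset. The following sketch assumes exactly that; the function name, the per-file base block and the block size parameter are illustrative assumptions, not PFSsim's actual implementation.

```cpp
#include <cassert>
#include <cstdint>

// Contiguous range of physical blocks touched by one request.
struct BlockRange {
    uint64_t firstBlock;
    uint64_t blockCount;
};

// Map a logical byte range of a file to physical blocks, assuming the file
// occupies consecutive blocks starting at fileBaseBlock (illustrative only).
BlockRange mapToBlocks(uint64_t fileBaseBlock, uint64_t offset,
                       uint64_t size, uint64_t blockSize) {
    assert(size > 0 && blockSize > 0);
    const uint64_t first = fileBaseBlock + offset / blockSize;
    const uint64_t last  = fileBaseBlock + (offset + size - 1) / blockSize;
    return BlockRange{first, last - first + 1};
}
```

Because the mapping is linear, two logically adjacent byte ranges always map to adjacent or identical physical blocks.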

5. Validation and Evaluation

In this section, we validate the PFSsim simulation results against real system performance. For the real system, we deployed a set of virtual machines; each virtual machine is configured with a 2.4 GHz AMD CPU and 1 GB of memory. EXT3 is used as the local file system. We deployed the PVFS2 parallel file system, containing 1 metadata server and 4 data servers, on both the real system and the simulator. On each data server node, we also deployed a proxy-based scheduler that intercepts all the I/O requests going through the local machine.

In the experiments, we use the Interleaved or Random (IOR) benchmark [23] developed by the Scalable I/O Project (SIOP) at Lawrence Livermore National Laboratory. This benchmark allows us to specify the trace file patterns.

5.1. Simulator Validation

To validate the simulator fidelity at different scales, we performed five independent experiments with 4, 8, 16, 32 and 64 clients. In this subsection, we do not deploy scheduling algorithms on the proxies. We conduct two sets of experiments and measure the performance of the system. In Set 1, each client continuously issues 400 requests to the data servers. Each of these requests is a 1 MB sequential read from one file, and the file content is striped and evenly distributed onto 4 data servers. Set 2 has the same configuration as Set 1, except that the clients issue sequential write requests instead of sequential read requests.

We measure the system performance by collecting the average response time of all I/O requests. The “response time” is the elapsed time between when an I/O request issues its first I/O packet and when it receives the response for its last packet. As shown in Figure 2, the average response time of the real system increases super-linearly as the number of clients increases. The same behavior is also observed on the PFSsim simulator. For read requests from smaller numbers of clients, the simulated results do not match the real results very well. This is probably because the real local file system, EXT3, provides read prefetching, which accelerates reads when recently accessed data are adjacent. This explanation is supported by the fact that, as the number of clients accessing the file system increases, the difference between the real results and the simulated results gets smaller, which suggests that read prefetching becomes less effective. For both read and write requests, the simulated results follow the same trend as the real system results as the number of clients grows, which confirms that, while the absolute simulated values may not accurately predict those of the real system, relative values can still be used to infer performance trade-offs.

Figure 2. Average Response Time with Different Number of Clients

5.2. Scheduler Validation

In this subsection, we validate the ability of PFSsim to implement request scheduling algorithms. We deploy 32 clients in both the real system and the simulator. The clients are separated into two groups, each with 16 clients, for the purpose of algorithm validation. Each client continuously issues 400 I/O requests. Each of these requests is a 1 MB sequential write to a single file, and the file content is striped and evenly distributed onto 4 data servers. The Start-time Fair Queuing algorithm with depth D = 4 (SFQ(4)) [10] is deployed on each data server for proportional sharing of resources.
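For readers unfamiliar with SFQ(D), the sketch below shows the core idea in a simplified form: each request receives a start tag and a finish tag based on its flow's weight, requests are dispatched in start-tag order, and at most D requests are outstanding at once. This is an illustrative reading of the general technique, not the implementation used in the experiments; the class and member names are hypothetical, and virtual time is approximated here by the start tag of the most recently dispatched request.

```cpp
#include <algorithm>
#include <cassert>
#include <map>
#include <queue>
#include <vector>

struct TaggedRequest {
    int    flow;
    double startTag;
    double finishTag;
};

class SfqD {
public:
    explicit SfqD(int depth) : depth_(depth) { assert(depth > 0); }

    void setWeight(int flow, double weight) {
        assert(weight > 0.0);
        weight_[flow] = weight;
    }

    // Tag a new request: start = max(virtual time, previous finish tag of
    // the flow); finish = start + cost / weight.
    void arrive(int flow, double cost) {
        const double start  = std::max(virtualTime_, lastFinish_[flow]);
        const double finish = start + cost / weight_.at(flow);
        lastFinish_[flow] = finish;
        pending_.push(TaggedRequest{flow, start, finish});
    }

    // Dispatch in increasing start-tag order while fewer than D requests
    // are outstanding. Returns the flows of the dispatched requests.
    std::vector<int> dispatch() {
        std::vector<int> flows;
        while (outstanding_ < depth_ && !pending_.empty()) {
            const TaggedRequest r = pending_.top();
            pending_.pop();
            virtualTime_ = r.startTag;
            ++outstanding_;
            flows.push_back(r.flow);
        }
        return flows;
    }

    // Called when the underlying server completes one outstanding request.
    void complete() {
        if (outstanding_ > 0) --outstanding_;
    }

private:
    struct LaterStart {
        bool operator()(const TaggedRequest& a, const TaggedRequest& b) const {
            return a.startTag > b.startTag;  // min-heap on start tag
        }
    };
    int    depth_;
    int    outstanding_ = 0;
    double virtualTime_ = 0.0;
    std::map<int, double> weight_;
    std::map<int, double> lastFinish_;
    std::priority_queue<TaggedRequest, std::vector<TaggedRequest>, LaterStart> pending_;
};
```

With two continuously backlogged flows of equal request cost, the flow with twice the weight accumulates tags half as fast and is therefore dispatched about twice as often.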

We conducted three sets of tests, and in each set, we assign a static weight ratio to the two groups to enforce proportional sharing. Every set is run on both the real system and the simulator. Sets a, b and c have weight ratios (Group1:Group2) of 1:1, 1:2 and 1:4, respectively. We measure and analyze the share of system throughput each group achieves during the first 100 seconds of system runtime.
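As a quick reference for interpreting the results, the ideal throughput share of Group 2 follows directly from the weights, assuming both groups are continuously backlogged (an idealization, not a measured result):

```latex
\[
  s_2 = \frac{w_2}{w_1 + w_2}, \qquad
  s_2^{(a)} = \frac{1}{1+1} = 50\%, \quad
  s_2^{(b)} = \frac{2}{1+2} \approx 66.7\%, \quad
  s_2^{(c)} = \frac{4}{1+4} = 80\%.
\]
```

The average Group 2 shares reported in the caption of Figure 3 can be read against these ideal values.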

As shown in Figure 3, the average throughput ratios of the simulated system follow the same trend as those of the real system, which reflects the characteristics of the proportional-sharing algorithm. This validation shows that the PFSsim simulator is able to simulate the performance effect of proportional-sharing algorithms under various configurations of the real system.

From the real system results, we can also see that the throughput ratio oscillates more as the difference between the two groups’ sharing ratios grows. This behavior is also slightly visible in the results from the simulator, but the simulator results oscillate less. This is because the real system environment is more complex, and many factors, such as TCP timeouts, can contribute to variations in the results, whereas our simulator does not model these factors. In order to capture dynamic variations with higher accuracy, future work will investigate which system modeling aspects need to be accounted for.

6. Conclusion and Future Work

The design objective of PFSsim is to simulate the important effects of workloads and scheduling algorithms on parallel file systems. We implement a proxy-based scheduler model for flexible scheduler deployment. The validations of system performance and scheduling effectiveness show that the system is capable of simulating system performance trends for given workloads and scheduling algorithms. Regarding scalability, as far as we have tested, the system scales to simulations of up to 512 clients and 32 data servers.

In the future, we will work on optimizing the simulator to improve simulation speed and fidelity. To this end, we plan to implement an efficient synchronization scheme between OMNeT++ and DiskSim, such as shared memory. We will also refine the system by modeling important factors that contribute to performance, such as TCP timeouts and characteristics of the local file system.

(a1) weight ratio = 1:1, real system; (b1) weight ratio = 1:2, real system; (c1) weight ratio = 1:4, real system; (a2) weight ratio = 1:1, simulated; (b2) weight ratio = 1:2, simulated; (c2) weight ratio = 1:4, simulated

Figure 3. The throughput percentage each group takes in the first 100 seconds of runtime in both the real system and the simulated system. Under the pictures we indicate the weight ratio of Group1:Group2 in the SFQ(4) algorithm. The average throughput ratios for Group 2 are: (a1) 50.00%; (a2) 50.23%; (b1) 63.41%; (b2) 66.19%; (c1) 68.58%; (c2) 77.67%.

7. References

[1] Sun Microsystems, Inc., “Lustre File System: High-Performance Storage Architecture and Scalable Cluster File System”, White Paper, October 2008.

[2] P. Carns, W. Ligon, R. Ross and R. Thakur, “PVFS: A Parallel File System For Linux Clusters”, Proceedings of the 4th Annual Linux Showcase and Conference, Atlanta, GA, October 2000, pp. 317-327.

[3] S. Weil, S. Brandt, E. Miller, D. Long and C. Maltzahn, “Ceph: A Scalable, High-Performance Distributed File System”, Proceedings of the 7th Conference on Operating Systems Design and Implementation (OSDI’06), November 2006.

[4] D. Nagle, D. Serenyi and A. Matthews, “The Panasas ActiveScale storage cluster-delivering scalable high bandwidth storage”, ACM Symposium on Theory of Computing (Pittsburgh, PA, 06–12 November 2004), 2004.

[5] F. Wang, S. Oral, G. Shipman, O. Drokin, T. Wang, and I. Huang, “Understanding lustre filesystem internals”, Technical Report ORNL/TM-2009/117, Oak Ridge National Lab., National Center for Computational Sciences, 2009.

[6] R. Sandberg, “The Sun Network Filesystem: Design, Implementation, and Experience”, in Proceedings of the Summer 1986 USENIX Technical Conference and Exhibition, 1986.

[7] M. Mesnier, G. Ganger, and E. Riedel, “Object-based storage”, IEEE Communications Magazine, 41(8):84-90, August 2003.

[8] Z. Dimitrijevic and R. Rangaswami, “Quality of service support for real-time storage systems”, In Proceedings of the International IPSI-2003 Conference, October 2003.

[9] C. Lumb, A. Merchant, and G. Alvarez, “Façade: Virtual Storage Devices with Performance Guarantees”, In Proceedings of the 2nd USENIX Conference on File and Storage Technologies, 2003.

[10] W. Jin, J. Chase, and J. Kaur, “Interposed proportional sharing for a storage service utility”, Proceedings of the International Conference on Measurement and Modeling of Computer Systems (SIGMETRICS), Jun 2004.

[11] P. Goyal, H. M. Vin, and H. Cheng, “Start-Time Fair Queueing: A Scheduling Algorithm For Integrated Services Packet Switching Networks”, IEEE/ACM Trans. Networking, vol. 5, no. 5, pp. 690–704, 1997.

[12] J. Zhang, A. Sivasubramaniam, A. Riska, Q. Wang, and E. Riedel, “An interposed 2-level I/O scheduling framework for performance virtualization”, In Proceedings of the International Conference on Measurement and Modeling of Computer Systems (SIGMETRICS), June 2005.

[13] W. Jin, J. S. Chase, and J. Kaur, “Interposed Proportional Sharing For A Storage Service Utility”, In SIGMETRICS, E. G. C. Jr., Z. Liu, and A. Merchant, Eds. ACM, 2004, pp. 37–48.

[14] Y. Wang, A. Merchant, “Proportional-share scheduling for distributed storage systems”, In Proceedings of the 5th USENIX Conference on File and Storage Technologies, San Jose, CA, 47–60.

[15] E. Molina-Estolano, C. Maltzahn, J. Bent and S. Brandt, “Building a parallel file system simulator”, 2009, Journal of Physics: Conference Series 180 012050.

[16] P. Carns, B. Settlemyer and W. Ligon, “Using Server-to-Server Communication in Parallel File Systems to Simplify Consistency and Improve Performance”, Proceedings of the 4th Annual Linux Showcase and Conference (Atlanta, GA) pp 317-327.

[17] J. Bucy, J. Schindler, S. Schlosser, G. Ganger and contributors, “The disksim simulation environment version 4.0 reference manual”, Technical Report, CMU-PDL-08-101 Parallel Data Laboratory, Carnegie Mellon University Pittsburgh, PA.

[18] INET framework, URL: http://inet.omnetpp.org/

[19] A. Varga, “The OMNeT++ discrete event simulation system”, In European Simulation Multiconference (ESM'2001), Prague, Czech Republic, June 2001.

[20] J. C. Wu and S. A. Brandt, “The design and implementation of AQuA: an adaptive quality of service aware object-based storage device”, In Proceedings of the 23rd IEEE / 14th NASA Goddard Conference on Mass Storage Systems and Technologies, pages 209–218, College Park, MD, May 2006.

[21] Y. Saito, S. Frølund, A. Veitch, A. Merchant, and S. Spence, “FAB: Building distributed enterprise disk arrays from commodity components”, In Proceedings of ASPLOS. ACM, October 2004.

[22] A. Mathur, M. Cao, and S. Bhattacharya, “The new ext4 filesystem: current status and future plans”, In Proceedings of the 2007 Ottawa Linux Symposium, pages 21–34, June 2007.

[23] IOR: I/O Performance benchmark, URL: https://asc.llnl.gov/sequoia/benchmarks/IOR_summary_v1.0.pdf

[24] A. Gulati and P. Varman, “Lexicographic QoS Scheduling for Parallel I/O”, In Proceedings of the 17th annual ACM symposium on Parallelism in algorithms and architectures (SPAA’05), Las Vegas, NV, June, 2005.

[25] S.A. Weil, K.T. Pollack, S.A. Brandt, and E.L. Miller, “Dynamic metadata management for petabyte-scale file systems”, In Proceedings of the 2004 ACM/IEEE Conference on Supercomputing (SC’04). ACM, Nov. 2004.

[26] S.A. Weil, S. A. Brandt, E. L. Miller, and C. Maltzahn, “CRUSH: Controlled, scalable, decentralized placement of replicated data”, In Proceedings of the 2006 ACM/IEEE Conference on Supercomputing (SC’06), Tampa, FL, Nov. 2006. ACM.

[27] D. Roselli, J. Lorch, and T. Anderson, “A comparison of file system workloads”, In Proceedings of the 2000 USENIX Annual Technical Conference, pages 41-54, San Diego, CA, June 2000. USENIX Association.

[28] R. B. Ross and W. B. Ligon III, “Server-side scheduling in cluster parallel I/O systems”, in Calculateurs Parallèles Journal, 2001.

[29] Y. Xu, L. Wang, D. Arteaga, M. Zhao, Y. Liu and R. Figueiredo, “Virtualization-based Bandwidth Management for Parallel Storage Systems”, In 5th Petascale Data Storage Workshop (PDSW’10), pages 1-5, New Orleans, LA, Nov. 2010.

[30] D. Chambliss, G. Alvarez, P. Pandey and D. Jadav, “Performance virtualization for large-scale storage systems”, in Symposium on Reliable Distributed Systems, pages 109–118. IEEE, 2003.