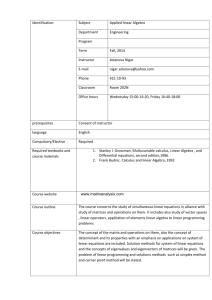

MATRICES

Matrix.

A matrix is a rectangular array of numbers. A set of $mn$ numbers (real or complex) arranged in a rectangular array having $m$ rows and $n$ columns is called an $m \times n$ matrix (read as "m by n matrix"). An $m \times n$ matrix is usually written as

$$A = \begin{bmatrix} a_{11} & \cdots & a_{1n} \\ \vdots & \ddots & \vdots \\ a_{m1} & \cdots & a_{mn} \end{bmatrix}$$

In compact form the above matrix is represented as $A = [a_{ij}]$ or $A = [a_{ij}]_{m \times n}$, where $i = 1, 2, 3, \ldots, m$ and $j = 1, 2, 3, \ldots, n$.

The numbers $a_{11}, a_{12}, \ldots$ of the rectangular array are called the elements or entries of the matrix. The element $a_{ij}$ belongs to the $i$th row and $j$th column of the matrix. $m \times n$ is called the order of the matrix. A matrix of order $m \times n$ contains $mn$ elements in total. The elements of a matrix are always enclosed by ( ) or [ ] brackets. A matrix is essentially an arrangement of numbers and has no value.

Following are some examples of matrices.

$$\begin{bmatrix} 5 & 7 \end{bmatrix},\quad \begin{bmatrix} 6 \\ 9 \end{bmatrix},\quad \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix},\quad \begin{bmatrix} 2 & 56 \\ 7 & 53 \\ 8 & 34 \end{bmatrix},\quad \begin{bmatrix} 2 & 3 & 4 \\ 5 & 6 & 7 \\ 8 & 9 & 0 \end{bmatrix}$$

Types of matrix.

Row matrix: Any $1 \times n$ matrix, which has only one row and $n$ columns, is called a row matrix or row vector, e.g. $\begin{bmatrix} 5 & 7 \end{bmatrix}$, $\begin{bmatrix} 3 & 4 & 5 \end{bmatrix}$.

Column matrix: Any $m \times 1$ matrix, which has $m$ rows and only one column, is called a column matrix or column vector, e.g. $\begin{bmatrix} 3 \\ 7 \end{bmatrix}$, $\begin{bmatrix} 5 \\ 4 \\ 5 \end{bmatrix}$.

Square matrix: A matrix in which the number of rows is equal to the number of columns is called a square matrix, e.g.

$$\begin{bmatrix} 2 & 3 \\ 4 & 5 \end{bmatrix},\quad \begin{bmatrix} 2 & 3 & 4 \\ 5 & 6 & 7 \\ 8 & 9 & 0 \end{bmatrix}$$

The elements $a_{ij}$ with $i = j$ of a square matrix $A = [a_{ij}]$ are called the diagonal elements, and the line along which they lie is called the principal diagonal, or simply the diagonal, of the matrix. In the square matrices above, the diagonal elements are 2, 5 and 2, 6, 0.

Null matrix (Zero matrix): The $m \times n$ matrix whose elements are all zero is called the null matrix or zero matrix. It is represented by $O$ or, more clearly, $O_{m \times n}$. E.g.

$$\begin{bmatrix} 0 & 0 \end{bmatrix},\quad \begin{bmatrix} 0 & 0 \\ 0 & 0 \end{bmatrix},\quad \begin{bmatrix} 0 & 0 & 0 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \end{bmatrix}$$

are null matrices of order $1 \times 2$, $2 \times 2$ and $3 \times 3$ respectively.
Identity matrix (Unit matrix): A square matrix each of whose diagonal elements is 1 and each of whose non-diagonal elements is zero is called an identity matrix or a unit matrix. It is denoted by $I$; $I_n$ denotes the unit matrix of order $n$. E.g.

$$I_2 = \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix},\quad I_3 = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}$$

Thus a square matrix $A = [a_{ij}]$ is a unit matrix if $a_{ij} = 1$ when $i = j$ and $a_{ij} = 0$ when $i \neq j$.

Diagonal matrix: A square matrix in which all non-diagonal elements are zero is called a diagonal matrix. E.g.

$$\begin{bmatrix} 1 & 0 \\ 0 & 3 \end{bmatrix},\quad \begin{bmatrix} 2 & 0 & 0 \\ 0 & 3 & 0 \\ 0 & 0 & -1 \end{bmatrix},\quad \begin{bmatrix} 2 & 0 & 0 \\ 0 & 0 & 0 \\ 0 & 0 & -1 \end{bmatrix}$$

are diagonal matrices. Thus a square matrix $A = [a_{ij}]$ is a diagonal matrix if $a_{ij} = 0$ when $i \neq j$.

Scalar matrix: A square matrix in which all the diagonal elements are equal and all other elements are zero is called a scalar matrix. E.g.

$$\begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix},\quad \begin{bmatrix} 2 & 0 & 0 \\ 0 & 2 & 0 \\ 0 & 0 & 2 \end{bmatrix},\quad \begin{bmatrix} 4 & 0 & 0 \\ 0 & 4 & 0 \\ 0 & 0 & 4 \end{bmatrix}$$

are scalar matrices. Thus a square matrix $A = [a_{ij}]$ is a scalar matrix if $a_{ij} = k$ when $i = j$ and $a_{ij} = 0$ when $i \neq j$.

Symmetric matrix: A square matrix $A = [a_{ij}]$ is said to be symmetric if its $(i,j)$th element is the same as its $(j,i)$th element, i.e. if $a_{ij} = a_{ji}$. E.g.

$$\begin{bmatrix} 2 & 4 \\ 4 & 3 \end{bmatrix},\quad \begin{bmatrix} 1 & 2 & 3 \\ 2 & 4 & 5 \\ 3 & 5 & 6 \end{bmatrix}$$

Skew symmetric matrix: A square matrix $A = [a_{ij}]$ is said to be skew symmetric if its $(i,j)$th element is the negative of its $(j,i)$th element, i.e. if $a_{ij} = -a_{ji}$. E.g.

$$\begin{bmatrix} 0 & 4 \\ -4 & 0 \end{bmatrix},\quad \begin{bmatrix} 0 & 2 & 3 \\ -2 & 0 & 5 \\ -3 & -5 & 0 \end{bmatrix}$$

For a skew symmetric matrix, $a_{ij} = -a_{ji}$. For the diagonal elements $i = j$, hence $a_{ii} = -a_{ii}$, or $2a_{ii} = 0$, or $a_{ii} = 0$. Thus the diagonal elements of a skew symmetric matrix are all zero.

Singular and non-singular matrix: A square matrix is called a singular matrix if its determinant is zero; otherwise it is called a non-singular (regular) matrix.

Equality of matrices.

Two matrices $A = [a_{ij}]$ and $B = [b_{ij}]$ are said to be equal if
(a) they are of the same order, and
(b) the elements in the corresponding places of the two matrices are the same, i.e. $a_{ij} = b_{ij}$.
This is represented as $A = B$. E.g.

$$A = \begin{bmatrix} 1 & 5 & 8 \\ 2 & 4 & 9 \end{bmatrix} \text{ and } B = \begin{bmatrix} 1 & 5 & 8 \\ 2 & 4 & 9 \end{bmatrix}; \text{ here } A = B.$$

If two matrices are of different order, they cannot be equal at all. If both are of the same order but $a_{ij} \neq b_{ij}$ for some $i, j$, then also they cannot be equal. This is represented as $A \neq B$. E.g.

$$A = \begin{bmatrix} 1 & 5 & 8 \\ 2 & 4 & 9 \end{bmatrix} \text{ and } B = \begin{bmatrix} 1 & 5 & 8 \\ 2 & 4 & 5 \end{bmatrix}; \text{ as } a_{23} \neq b_{23}, \text{ here } A \neq B.$$

From the above definition, it can be concluded that
(a) If $A$ is any matrix, then $A = A$ (reflexive).
(b) If $A = B$, then $B = A$ (symmetry).
(c) If $A = B$ and $B = C$, then $A = C$ (transitive).

Addition (subtraction) of matrices.

Let $A$ and $B$ be two matrices of the same order $m \times n$. Then their sum (difference) is the matrix of the same order obtained by adding (subtracting) the corresponding elements of $A$ and $B$.

If $A = [a_{ij}]_{m \times n}$ and $B = [b_{ij}]_{m \times n}$, then $A + B = [a_{ij} + b_{ij}]_{m \times n}$ and $A - B = [a_{ij} - b_{ij}]_{m \times n}$.

E.g. let $A = \begin{bmatrix} 12 & 5 & 78 \\ 56 & 2 & 9 \end{bmatrix}$ and $B = \begin{bmatrix} 1 & 5 & 8 \\ 2 & 4 & 4 \end{bmatrix}$. Then

$$A + B = \begin{bmatrix} 12+1 & 5+5 & 78+8 \\ 56+2 & 2+4 & 9+4 \end{bmatrix} = \begin{bmatrix} 13 & 10 & 86 \\ 58 & 6 & 13 \end{bmatrix}$$

$$A - B = \begin{bmatrix} 12-1 & 5-5 & 78-8 \\ 56-2 & 2-4 & 9-4 \end{bmatrix} = \begin{bmatrix} 11 & 0 & 70 \\ 54 & -2 & 5 \end{bmatrix}$$

Two matrices can be added or subtracted only if they are of the same order; they are then said to be conformable for addition or subtraction. Otherwise they are called non-conformable.

Properties of matrix addition.

Let $A = [a_{ij}]_{m \times n}$, $B = [b_{ij}]_{m \times n}$, $C = [c_{ij}]_{m \times n}$.

(a) $A + B = [a_{ij}]_{m \times n} + [b_{ij}]_{m \times n}$
$= [a_{ij} + b_{ij}]_{m \times n}$ (by definition of addition of two matrices)
$= [b_{ij} + a_{ij}]_{m \times n}$ (as addition of two numbers is commutative)
$= [b_{ij}]_{m \times n} + [a_{ij}]_{m \times n}$ (by definition of addition of two matrices)
$= B + A$
Hence matrix addition is commutative, i.e. $A + B = B + A$.

(b) $(A + B) + C = ([a_{ij}]_{m \times n} + [b_{ij}]_{m \times n}) + [c_{ij}]_{m \times n}$
$= [a_{ij} + b_{ij}]_{m \times n} + [c_{ij}]_{m \times n}$ (by definition of addition of two matrices)
$= [(a_{ij} + b_{ij}) + c_{ij}]_{m \times n}$ (by definition of addition of two matrices)
$= [a_{ij} + (b_{ij} + c_{ij})]_{m \times n}$ (as addition of numbers is associative)
$= [a_{ij}]_{m \times n} + [b_{ij} + c_{ij}]_{m \times n}$ (by definition of addition of two matrices)
$= A + (B + C)$
Hence matrix addition is associative, i.e. $(A + B) + C = A + (B + C)$.

(c) If $A + B = A + C$, then $B = C$, provided all matrices are of the same order.
This is called the cancellation law, and it holds for matrix addition.

(d) $A + O = [a_{ij} + 0]_{m \times n} = [a_{ij}]_{m \times n} = A$
The additive identity for $A_{m \times n}$ is the zero matrix $O_{m \times n}$. Hence a zero matrix is an additive identity, i.e. $A + O = A$.

(e) $A + (-A) = [a_{ij} - a_{ij}]_{m \times n} = [0]_{m \times n} = O$
The additive inverse of $A_{m \times n}$ is its negative matrix $-A_{m \times n}$. Hence the negative matrix is an additive inverse, i.e. $A + (-A) = O$.

Scalar Multiplication.

If $A$ is a matrix and $k$ is a scalar (number), then the matrix obtained by multiplying every element of $A$ by $k$ is called the scalar multiple of $A$ by $k$ and is denoted by $kA$ or $Ak$. Symbolically, if $A = [a_{ij}]_{m \times n}$ then $kA = Ak = [k a_{ij}]_{m \times n}$. E.g.

$$5 \begin{bmatrix} 9 & 2 & 3 \\ -2 & 8 & 5 \\ -3 & -5 & 7 \end{bmatrix} = \begin{bmatrix} 45 & 10 & 15 \\ -10 & 40 & 25 \\ -15 & -25 & 35 \end{bmatrix}$$

Remember that, in the case of scalar multiplication of a determinant, only a single row or column is multiplied by the scalar.

Properties of Scalar Multiplication.

Let $A = [a_{ij}]_{m \times n}$, $B = [b_{ij}]_{m \times n}$, and let $k$ and $l$ be scalars.

(a) $k(A + B) = k([a_{ij}]_{m \times n} + [b_{ij}]_{m \times n})$
$= k[a_{ij} + b_{ij}]_{m \times n}$ (by definition of addition of two matrices)
$= [k(a_{ij} + b_{ij})]_{m \times n}$ (by definition of scalar multiplication)
$= [k a_{ij} + k b_{ij}]_{m \times n}$ (by the distributive law of numbers)
$= [k a_{ij}]_{m \times n} + [k b_{ij}]_{m \times n}$
$= k[a_{ij}]_{m \times n} + k[b_{ij}]_{m \times n}$
$= kA + kB$
Hence $k(A + B) = kA + kB$.

(b) $(k + l)A = (k + l)[a_{ij}]_{m \times n} = [(k + l) a_{ij}]_{m \times n} = [k a_{ij}]_{m \times n} + [l a_{ij}]_{m \times n} = k[a_{ij}]_{m \times n} + l[a_{ij}]_{m \times n} = kA + lA$
Hence $(k + l)A = kA + lA$.

(c) $k(lA) = k(l[a_{ij}]_{m \times n}) = kl[a_{ij}]_{m \times n} = (kl)A$
Hence $k(lA) = (kl)A$.

(d) Further corollary properties:
$(-k)A = -(kA) = k(-A)$
$1 \cdot A = A$
$(-1) \cdot A = -A$

Multiplication of Two Matrices.

Let $A = [a_{ij}]_{m \times n}$ and $B = [b_{ij}]_{n \times p}$ be two matrices such that the number of columns of $A$ is equal to the number of rows of B. Only then are the two matrices conformable for multiplication; otherwise their product $AB$ is not defined. The order of the product matrix is $m \times p$.
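The conformability condition above can be sketched as a small check on orders alone. This is only an illustration; the helper name `product_order` and the `Order` type alias are hypothetical and not part of the original notes.

```python
from typing import Optional, Tuple

Order = Tuple[int, int]  # (rows, columns); hypothetical alias for illustration


def product_order(a: Order, b: Order) -> Optional[Order]:
    """Return the order of AB, or None when A and B are not conformable."""
    m, n = a
    rows_b, p = b
    # AB is defined only when the columns of A match the rows of B.
    return (m, p) if n == rows_b else None


if __name__ == "__main__":
    print(product_order((5, 4), (4, 3)))  # a 5x4 matrix times a 4x3 matrix
    print(product_order((4, 3), (5, 4)))  # columns of A differ from rows of B
```

Checking orders before multiplying mirrors the rule that the product is undefined for non-conformable matrices.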
To find an element of the resultant matrix, multiply the first element of a given row of $A$ by the first element of a given column of $B$, add to that the product of the second element of the same row of $A$ and the second element of the same column of $B$, then add the product of the third elements, and so on, until the last element of that row of $A$ has been multiplied by the last element of that column of $B$ and added to the sum of the other products.

The $(i,j)$ entry of the product matrix is the sum of the products of the elements in the $i$th row of the first matrix and the $j$th column of the second matrix. Suppose the $i$th row equals $[a_{i1}, a_{i2}, \ldots, a_{in}]$ and the $j$th column equals $[b_{1j}, b_{2j}, \ldots, b_{nj}]$. Then the $(i,j)$ entry of the product matrix is $a_{i1}b_{1j} + a_{i2}b_{2j} + \cdots + a_{in}b_{nj}$.

E.g. let $A = \begin{bmatrix} a & b \\ c & d \end{bmatrix}$ and $B = \begin{bmatrix} w & x \\ y & z \end{bmatrix}$. Then

$$AB = \begin{bmatrix} a & b \\ c & d \end{bmatrix} \begin{bmatrix} w & x \\ y & z \end{bmatrix} = \begin{bmatrix} aw + by & ax + bz \\ cw + dy & cx + dz \end{bmatrix}$$

The figure illustrates the product of two matrices $A$ and $B$, showing how each intersection in the product matrix corresponds to a row of $A$ and a column of $B$. The size of the output matrix is always the largest possible, i.e. for each row of $A$ and each column of $B$ there is a corresponding intersection in the product matrix. The product matrix $AB$ consists of all combinations of dot products of rows of $A$ and columns of $B$.

Properties of Matrix Multiplication.

(a) If the product $AB$ exists, the product $BA$ need not exist. E.g. if $A$ is a $5 \times 4$ matrix and $B$ is a $4 \times 3$ matrix, then $AB$ exists but $BA$ does not.

If $AB$ and $BA$ both exist, they need not be matrices of the same order. E.g. if $A$ is a $4 \times 3$ matrix and $B$ is a $3 \times 4$ matrix, then both $AB$ and $BA$ exist and are of order $4 \times 4$ and $3 \times 3$ respectively. Hence $AB \neq BA$.

If $AB$ and BA both exist and are of the same order, $AB$ may or may not be equal to $BA$. This will be clear from the following examples.

E.g. let $A = \begin{bmatrix} 1 & 2 & 1 \\ 3 & 4 & 2 \\ 1 & 3 & 2 \end{bmatrix}$ and $B = \begin{bmatrix} 10 & -4 & -1 \\ -11 & 5 & 0 \\ 9 & -5 & 1 \end{bmatrix}$. Then

$$AB = \begin{bmatrix} -3 & 1 & 0 \\ 4 & -2 & -1 \\ -5 & 1 & 1 \end{bmatrix} \text{ and } BA = \begin{bmatrix} -3 & 1 & 0 \\ 4 & -2 & -1 \\ -5 & 1 & 1 \end{bmatrix}$$

Here $AB = BA$; the commutative rule holds.

Take another example. Let $A = \begin{bmatrix} 1 & 0 \\ 0 & -1 \end{bmatrix}$ and $B = \begin{bmatrix} 0 & 1 \\ 1 & 0 \end{bmatrix}$. Then

$$AB = \begin{bmatrix} 0 & 1 \\ -1 & 0 \end{bmatrix} \text{ and } BA = \begin{bmatrix} 0 & -1 \\ 1 & 0 \end{bmatrix}$$

Here $AB \neq BA$; the commutative rule does not hold.

Hence matrix multiplication is not always commutative: depending on the matrices, $AB = BA$ or $AB \neq BA$.

(b) Let $A = [a_{ij}]_{m \times n}$, $B = [b_{jk}]_{n \times p}$ and $C = [c_{kl}]_{p \times q}$. Then $BC$ is an $n \times q$ matrix and $A(BC)$ is an $m \times q$ matrix. Similarly $AB$ is an $m \times p$ matrix and $(AB)C$ is an $m \times q$ matrix. The $(i,l)$ entry of $A(BC)$ is $\sum_{j} a_{ij} \left( \sum_{k} b_{jk} c_{kl} \right) = \sum_{k} \left( \sum_{j} a_{ij} b_{jk} \right) c_{kl}$, which is the $(i,l)$ entry of $(AB)C$.
Hence matrix multiplication is associative if conformability is assured, i.e. $A(BC) = (AB)C$. For the product of a chain of matrices the associative property may be used provided adjacent matrices of the chain are conformable.

(c) If a square matrix $A$ is multiplied by itself $n$ times, the resultant matrix is defined as $A^n$, i.e. $A^n = A \cdot A \cdot A \cdots$ ($n$ times).

(d) Let $A = [a_{ij}]_{m \times n}$, $B = [b_{jk}]_{n \times p}$ and $C = [c_{jk}]_{n \times p}$. The $(i,k)$ entry of $A(B + C)$ is $\sum_{j} a_{ij}(b_{jk} + c_{jk}) = \sum_{j} a_{ij} b_{jk} + \sum_{j} a_{ij} c_{jk}$, which is the $(i,k)$ entry of $AB + AC$.
Hence matrix multiplication is distributive with respect to addition of matrices, i.e. $A(B + C) = AB + AC$.

(e) $CA = CB$ does not imply that $A = B$, even when the products are defined. Hence the cancellation law does not hold for matrix multiplication.

(f) $AB = O$ does not necessarily imply that at least one of the matrices $A$ and $B$ is $O$, e.g.

$$\begin{bmatrix} 1 & 1 \\ 1 & 1 \end{bmatrix} \begin{bmatrix} 1 & 0 \\ -1 & 0 \end{bmatrix} = \begin{bmatrix} 0 & 0 \\ 0 & 0 \end{bmatrix}$$

(g) The multiplicative identity for a square matrix $A$ of order $n$ is the unit matrix $I_n$. Hence a unit matrix is a multiplicative identity, i.e. $AI = IA = A$.

(h) If $A$ and $B$ are square matrices of order $n$ such that $AB = BA = I$, where $I$ is the identity matrix of order $n$, then $B$ is called the multiplicative inverse of $A$ and is written as $A^{-1}$ (which is not equal to $\frac{1}{A}$). Similarly $A$ is called the multiplicative inverse of $B$ and is written as $B^{-1}$. Hence an inverse matrix is a multiplicative inverse, i.e. $AA^{-1} = A^{-1}A = I$.

Positive integral power of a square matrix.

If $A$ is an $n$-rowed square matrix, then $A^1 = A$ and $A^{m+1} = A^m \cdot A$ for each positive integer $m$.
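The row-by-column rule and the recursive definition of powers can be sketched in plain Python and checked against the two commutativity examples above. This is a minimal sketch, not a definitive implementation; the function names `matmul` and `matpow` and the `Matrix` alias are assumptions introduced for illustration.

```python
from typing import List

Matrix = List[List[int]]  # hypothetical alias: a list of rows


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Product AB: entry (i, j) is the sum over k of a[i][k] * b[k][j]."""
    if len(a[0]) != len(b):
        raise ValueError("matrices are not conformable for multiplication")
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def matpow(a: Matrix, m: int) -> Matrix:
    """Positive integral power: A^1 = A and A^(m+1) = A^m . A."""
    if m < 1:
        raise ValueError("only positive integral powers are handled here")
    result = a
    for _ in range(m - 1):
        result = matmul(result, a)
    return result


if __name__ == "__main__":
    # First example from the text: AB and BA coincide.
    A = [[1, 2, 1], [3, 4, 2], [1, 3, 2]]
    B = [[10, -4, -1], [-11, 5, 0], [9, -5, 1]]
    print(matmul(A, B) == matmul(B, A))

    # Second example from the text: AB and BA differ.
    C = [[1, 0], [0, -1]]
    D = [[0, 1], [1, 0]]
    print(matmul(C, D), matmul(D, C))
```

Integer entries are used so that the equality checks are exact.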
It can also be shown that $A^{p+q} = A^p \cdot A^q$ and $(A^p)^q = A^{pq}$.

Sub-matrix of a matrix.

Any matrix obtained by omitting some rows and columns from a given $m \times n$ matrix $A$ is called a sub-matrix of $A$. The matrix $A$ itself is a sub-matrix of $A$, as it can be obtained from $A$ by omitting no rows and columns.

Consider $A = \begin{bmatrix} 1 & 9 & 3 \\ 2 & 8 & 4 \\ 6 & 2 & 7 \end{bmatrix}$ written in sub-matrix form $A = \begin{bmatrix} P & Q \\ R & S \end{bmatrix}$, where the sub-matrices are

$$P = \begin{bmatrix} 1 & 9 \\ 2 & 8 \end{bmatrix},\quad Q = \begin{bmatrix} 3 \\ 4 \end{bmatrix},\quad R = \begin{bmatrix} 6 & 2 \end{bmatrix},\quad S = \begin{bmatrix} 7 \end{bmatrix}$$

Similarly consider $B = \begin{bmatrix} 2 & 9 & 4 \\ 3 & 6 & 8 \\ 1 & 5 & 7 \end{bmatrix}$ written in sub-matrix form $B = \begin{bmatrix} P_1 & Q_1 \\ R_1 & S_1 \end{bmatrix}$, where the sub-matrices are

$$P_1 = \begin{bmatrix} 2 & 9 \\ 3 & 6 \end{bmatrix},\quad Q_1 = \begin{bmatrix} 4 \\ 8 \end{bmatrix},\quad R_1 = \begin{bmatrix} 1 & 5 \end{bmatrix},\quad S_1 = \begin{bmatrix} 7 \end{bmatrix}$$

Then it may be verified that

$$A + B = \begin{bmatrix} P & Q \\ R & S \end{bmatrix} + \begin{bmatrix} P_1 & Q_1 \\ R_1 & S_1 \end{bmatrix} = \begin{bmatrix} P + P_1 & Q + Q_1 \\ R + R_1 & S + S_1 \end{bmatrix}$$

and

$$AB = \begin{bmatrix} P & Q \\ R & S \end{bmatrix} \begin{bmatrix} P_1 & Q_1 \\ R_1 & S_1 \end{bmatrix} = \begin{bmatrix} PP_1 + QR_1 & PQ_1 + QS_1 \\ RP_1 + SR_1 & RQ_1 + SS_1 \end{bmatrix}$$

Transpose of a Matrix.

The matrix obtained from a given matrix $A$ by changing its rows into columns, or its columns into rows, is called the transpose of $A$. It is represented by $A^T$ or $A'$. E.g. if $A = \begin{bmatrix} 1 & 3 & 5 \\ 2 & 4 & 6 \end{bmatrix}$, then $A^T = \begin{bmatrix} 1 & 2 \\ 3 & 4 \\ 5 & 6 \end{bmatrix}$.

Properties of Transpose of a Matrix.

(a) The transpose of an $m \times n$ matrix is an $n \times m$ matrix.
(b) The transpose of the sum of two matrices is the sum of their transposes, i.e. $(A + B)^T = A^T + B^T$.
(c) The transpose of the transpose of a matrix is the matrix itself, i.e. $(A^T)^T = A$.
(d) If $A$ is any $m \times n$ matrix, then $(kA)^T = kA^T$, where $k$ is any number.
(e) The transpose of the product of two matrices is the product of their transposes in reverse order, i.e. $(AB)^T = B^T A^T$. This property is called the reversal law of transposes.
(f) If $A = (A^T)^{-1}$, then the matrix is an orthogonal matrix.
(g) If $A = A^{-1}$, then the matrix is an involutory matrix.
(h) If $A^T = A$, then the matrix is a symmetric matrix.
(i) If $A^T = -A$, then the matrix is a skew symmetric matrix.
(j) If $A$ is a square matrix then $A + A^T$ is a symmetric matrix, because $(A + A^T)^T = A^T + (A^T)^T = A^T + A = A + A^T$.
(k) If $A$ is a square matrix then $A - A^T$ is a skew symmetric matrix.
This is because $(A - A^T)^T = A^T - (A^T)^T = A^T - A = -(A - A^T)$; hence $A - A^T$ is a skew symmetric matrix.
(l) Every square matrix is uniquely expressible as the sum of a symmetric matrix and a skew symmetric matrix.

Transposed Conjugate of a matrix.

If all the elements of a matrix $A$ of order $m \times n$ are replaced by their complex conjugates, the resulting matrix is called the conjugate of $A$ and is denoted by $\bar{A}$. If the elements of $A$ are all real, then $A = \bar{A}$.

The $n \times m$ matrix obtained by transposing the conjugate of $A$ (i.e. $\bar{A}$) is called the transposed conjugate of $A$ and is denoted by $A^\theta$. It may be noted that the conjugate of the transpose of $A$ is the same as $A^\theta$. Symbolically, $A^\theta = (\bar{A})^T = \overline{(A^T)}$.

E.g. if $A = \begin{bmatrix} 1+i & -4+i \\ 3i & 1 \\ 2 & 2-6i \end{bmatrix}$, then $A^\theta = \begin{bmatrix} 1-i & -3i & 2 \\ -4-i & 1 & 2+6i \end{bmatrix}$.

Properties of Transposed Conjugate of a matrix.

(a) $(A^\theta)^\theta = A$
(b) $(A + B)^\theta = A^\theta + B^\theta$, $A$ and $B$ being of the same order.
(c) $(kA)^\theta = \bar{k} A^\theta$, $k$ being any complex number.
(d) $(AB)^\theta = B^\theta A^\theta$, $A$ and $B$ being conformable for multiplication.
(e) If $A^\theta = A$, then the matrix is called a Hermitian matrix, e.g. $\begin{bmatrix} 2 & 4+i \\ 4-i & 3 \end{bmatrix}$. All diagonal elements of a Hermitian matrix must be real. A Hermitian matrix with real elements is a real symmetric matrix.
(f) If $A^\theta = -A$, then the matrix is called a skew-Hermitian matrix, e.g. $\begin{bmatrix} 3i & 2+5i \\ -2+5i & 0 \end{bmatrix}$, $\begin{bmatrix} 4i & 1+i \\ -1+i & 0 \end{bmatrix}$. All diagonal elements of a skew-Hermitian matrix must be purely imaginary or zero. A skew-Hermitian matrix with real elements is a real skew-symmetric matrix.

Adjoint of a matrix.

The adjoint of a square matrix $A$ is the transpose of the matrix obtained by replacing each element $a_{ij}$ of $A$ by its cofactor $C_{ij}$. It is written as Adj $A$.
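Let

$$A = [a_{ij}]_{n \times n} = \begin{bmatrix} a_{11} & \cdots & a_{1n} \\ \vdots & \ddots & \vdots \\ a_{n1} & \cdots & a_{nn} \end{bmatrix}$$

(cofactors are defined only for a square matrix, so $m = n$ here). Then

$$\text{Cofactor matrix of } A = \begin{bmatrix} C_{11} & \cdots & C_{1n} \\ \vdots & \ddots & \vdots \\ C_{n1} & \cdots & C_{nn} \end{bmatrix}$$

$$\text{Adj } A = \text{transpose of the cofactor matrix of } A = \begin{bmatrix} C_{11} & \cdots & C_{n1} \\ \vdots & \ddots & \vdots \\ C_{1n} & \cdots & C_{nn} \end{bmatrix}$$

Theorem. If $A$ is a square matrix of order $n$, then $(\text{Adj } A)A = A(\text{Adj } A) = |A| I_n$.

Proof: With $A$ and Adj $A$ as above,

$$A(\text{Adj } A) = \begin{bmatrix} a_{11} & \cdots & a_{1n} \\ \vdots & \ddots & \vdots \\ a_{n1} & \cdots & a_{nn} \end{bmatrix} \begin{bmatrix} C_{11} & \cdots & C_{n1} \\ \vdots & \ddots & \vdots \\ C_{1n} & \cdots & C_{nn} \end{bmatrix}$$

$$= \begin{bmatrix} a_{11}C_{11} + a_{12}C_{12} + \cdots + a_{1n}C_{1n} & \cdots & a_{11}C_{n1} + a_{12}C_{n2} + \cdots + a_{1n}C_{nn} \\ \vdots & \ddots & \vdots \\ a_{n1}C_{11} + a_{n2}C_{12} + \cdots + a_{nn}C_{1n} & \cdots & a_{n1}C_{n1} + a_{n2}C_{n2} + \cdots + a_{nn}C_{nn} \end{bmatrix}$$

From the properties of determinants, we know that the sum of the products of the elements of any row (or column) with their corresponding cofactors is the value of the determinant, and the sum of the products of the elements of a row (or column) with the corresponding cofactors of any other parallel row (or column) is zero. Hence all the diagonal elements above are $|A|$ and all the remaining elements are zero:

$$A(\text{Adj } A) = \begin{bmatrix} |A| & \cdots & 0 \\ \vdots & \ddots & \vdots \\ 0 & \cdots & |A| \end{bmatrix} = |A| \begin{bmatrix} 1 & \cdots & 0 \\ \vdots & \ddots & \vdots \\ 0 & \cdots & 1 \end{bmatrix} = |A| I_n$$

Similarly it can be proved that $(\text{Adj } A)A = |A| I_n$. Hence the matrices $A$ and Adj $A$ commute, and their product is a scalar matrix every diagonal element of which is $|A|$.

Inverse (reciprocal) of a matrix.

If, for a given square matrix $A$, there is a matrix $B$ such that $AB = I = BA$, then $B$ is known as the inverse or reciprocal matrix of $A$ and is denoted by $A^{-1}$ (read as "A inverse"). Thus $A^{-1}$ satisfies the relation $AA^{-1} = A^{-1}A = I$.

Let $B$ and $C$ be two possible inverse matrices of $A$. Then $AB = BA = I$ and $AC = CA = I$. Now $B = BI = B(AC) = (BA)C = IC = C$. In other words, $B$ and $C$ are the same matrix. Hence the inverse of a square matrix, if it exists, is unique.

Now $(\text{Adj } A)A = |A| I$. Dividing both sides by the scalar $|A|$ gives $\dfrac{\text{Adj } A}{|A|} A = I$. Comparing this with the equation $A^{-1}A = I$, we can write

$$A^{-1} = \frac{\text{Adj } A}{|A|}, \text{ provided } |A| \neq 0.$$

Therefore the inverse exists only for a non-singular matrix.

The negative powers of $A$ are defined by raising the inverse matrix of $A$ to positive powers, i.e.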
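$A^{-n} = (A^{-1})^n = A^{-1} \cdot A^{-1} \cdot A^{-1} \cdots$ ($n$ times).

If we pre-multiply $B^{-1}A^{-1}$ by $AB$, we get $(AB)(B^{-1}A^{-1}) = A(BB^{-1})A^{-1} = AIA^{-1} = AA^{-1} = I$. As the product of the two matrices $AB$ and $B^{-1}A^{-1}$ is $I$, one is the inverse of the other, i.e. $(AB)^{-1} = B^{-1}A^{-1}$. Generalizing, $(ABCDEF\ldots)^{-1} = \ldots F^{-1}E^{-1}D^{-1}C^{-1}B^{-1}A^{-1}$.

Taking the transpose of both sides of $A^{-1}A = I$ and using the reversal law gives $(A^{-1}A)^T = I^T = I$, or $A^T (A^{-1})^T = I$. As the product of the two matrices is $I$, one is the inverse of the other. Hence $(A^T)^{-1} = (A^{-1})^T$.

A zero matrix has no multiplicative inverse. The unit matrix is its own multiplicative inverse. The inverse of a singular matrix does not exist. $AB = BA$ does not imply that $A$ and $B$ are multiplicative inverses of each other.

Linear Equations in Matrix Notation.

Consider the following $m$ equations in $n$ unknowns:

$$\begin{aligned} a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n &= b_1 \\ a_{21}x_1 + a_{22}x_2 + \cdots + a_{2n}x_n &= b_2 \\ &\;\;\vdots \\ a_{m1}x_1 + a_{m2}x_2 + \cdots + a_{mn}x_n &= b_m \end{aligned}$$

These equations are called a system of linear equations.

Consider the system of two equations in two unknowns: $a_{11}x + a_{12}y = b_1$ and $a_{21}x + a_{22}y = b_2$. In matrix form it can be written as

$$\begin{bmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{bmatrix} \begin{bmatrix} x \\ y \end{bmatrix} = \begin{bmatrix} b_1 \\ b_2 \end{bmatrix}, \text{ or } AX = B.$$

Here $A$, $X$ and $B$ are the coefficient matrix $\begin{bmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{bmatrix}$, the column matrix of unknowns $\begin{bmatrix} x \\ y \end{bmatrix}$ and the column matrix of constants $\begin{bmatrix} b_1 \\ b_2 \end{bmatrix}$ respectively.

Now consider the system of three equations in three unknowns: $a_{11}x + a_{12}y + a_{13}z = b_1$, $a_{21}x + a_{22}y + a_{23}z = b_2$ and $a_{31}x + a_{32}y + a_{33}z = b_3$. In matrix form it can be written as

$$\begin{bmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{bmatrix} \begin{bmatrix} x \\ y \\ z \end{bmatrix} = \begin{bmatrix} b_1 \\ b_2 \\ b_3 \end{bmatrix}, \text{ or } AX = B.$$

Again, the system of $m$ equations in $n$ unknowns can be written as

$$\begin{bmatrix} a_{11} & \cdots & a_{1n} \\ \vdots & \ddots & \vdots \\ a_{m1} & \cdots & a_{mn} \end{bmatrix} \begin{bmatrix} x_1 \\ \vdots \\ x_n \end{bmatrix} = \begin{bmatrix} b_1 \\ \vdots \\ b_m \end{bmatrix}, \text{ or } AX = B.$$

Solutions of Linear Equations.

A system of equations is called homogeneous if the constants $b_1, b_2, \ldots, b_m$ are all zero; otherwise it is called non-homogeneous. E.g. $2x + 3y = 0$ and $3x - y = 0$ is a system of homogeneous equations, but $2x + 3y = 1$ and $3x - y = 5$ is a system of non-homogeneous equations.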
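As an illustration of the adjoint formula $A^{-1} = \text{Adj } A / |A|$, the non-homogeneous example $2x + 3y = 1$, $3x - y = 5$ can be put in the form $AX = B$ and solved by multiplying $B$ by $A^{-1}$. The sketch below assumes small integer matrices and uses cofactor expansion, which is simple but slow for large orders; the function names (`minor`, `det`, `adjoint`, `inverse`) are hypothetical, and exact fractions are used to avoid rounding.

```python
from fractions import Fraction
from typing import List

Matrix = List[List[Fraction]]  # hypothetical alias: a list of rows


def minor(a, i: int, j: int):
    """Sub-matrix of a with row i and column j omitted."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(a) if r != i]


def det(a):
    """Determinant by cofactor expansion along the first row."""
    if len(a) == 1:
        return a[0][0]
    return sum((-1) ** j * a[0][j] * det(minor(a, 0, j)) for j in range(len(a)))


def adjoint(a):
    """Transpose of the cofactor matrix of a square matrix."""
    n = len(a)
    if n == 1:
        return [[1]]
    cof = [[(-1) ** (i + j) * det(minor(a, i, j)) for j in range(n)] for i in range(n)]
    return [[cof[j][i] for j in range(n)] for i in range(n)]


def inverse(a) -> Matrix:
    """A^-1 = Adj A / |A|, defined only when |A| is non-zero."""
    d = det(a)
    if d == 0:
        raise ValueError("a singular matrix has no inverse")
    return [[Fraction(entry, d) for entry in row] for row in adjoint(a)]


if __name__ == "__main__":
    A = [[2, 3], [3, -1]]   # coefficients of 2x + 3y = 1 and 3x - y = 5
    B = [1, 5]              # column of constants
    A_inv = inverse(A)
    X = [sum(row[k] * B[k] for k in range(len(B))) for row in A_inv]
    print(X)  # the values of x and y as exact fractions
```

Substituting the resulting $x$ and $y$ back into both equations is a quick way to confirm the result by hand.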
When a system of equations has one or more solutions, the equations are said to be consistent (intersecting or coincident straight lines); otherwise they are said to be inconsistent (parallel straight lines). For example, the system $2x + 3y = 5$ and $4x + 6y = 10$ is consistent, because $x = 1, y = 1$ and $x = 2, y = 1/3$ are solutions of these equations. But the system $2x + 3y = 5$ and $4x + 6y = 9$ is inconsistent, because there are no values of $x$ and $y$ which satisfy both equations.

Solution of non-homogeneous Linear Equations.

Consider the system of non-homogeneous equations $a_{11}x + a_{12}y + a_{13}z = b_1$, $a_{21}x + a_{22}y + a_{23}z = b_2$ and $a_{31}x + a_{32}y + a_{33}z = b_3$. In matrix form,

$$\begin{bmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{bmatrix} \begin{bmatrix} x \\ y \\ z \end{bmatrix} = \begin{bmatrix} b_1 \\ b_2 \\ b_3 \end{bmatrix}, \text{ or } AX = B.$$

Case-1: If $A$ is non-singular, i.e. $|A| \neq 0$, then $A^{-1}$ exists and is unique. Pre-multiplying both sides by $A^{-1}$, we get
$A^{-1}(AX) = A^{-1}B$
Or, $(A^{-1}A)X = A^{-1}B$
Or, $IX = A^{-1}B$
Or, $X = A^{-1}B$
The system of equations is consistent and has a unique solution.

Case-2: If $A$ is singular, i.e. $|A| = 0$, pre-multiplying both sides by Adj $A$, we get
$(\text{Adj } A)AX = (\text{Adj } A)B$
Or, $[(\text{Adj } A)A]X = (\text{Adj } A)B$
Or, $[|A| \cdot I]X = (\text{Adj } A)B$
Or, $|A| X = (\text{Adj } A)B$

If $|A| = 0$ and $(\text{Adj } A)B = O$, then $|A|X = (\text{Adj } A)B$ holds for every value of $X$, so this condition excludes nothing. The system then has either infinitely many solutions (consistent) or no solution, and this has to be checked from the equations themselves.

If $|A| = 0$ and $(\text{Adj } A)B \neq O$, then $|A|X = (\text{Adj } A)B$ cannot hold, because $|A|X$ is a null matrix and $(\text{Adj } A)B$ is a non-null matrix. In other words, the system has no solution and is therefore inconsistent.

Both cases will be clear from the following examples.

Consider the equations $2x + y = 3$ and $4x + 2y = 6$. Both are equations of the same straight line. Observe that here $|A| = \begin{vmatrix} 2 & 1 \\ 4 & 2 \end{vmatrix} = 0$, so $A$ is a singular matrix. As both equations geometrically represent coincident lines in the x-y plane, they have infinitely many solutions. As they have at least one solution, they are consistent.

Now consider the equations $2x + y = 3$ and $4x + 2y = 5$. These equations represent two non-coincident parallel straight lines.
Observe that here also $A$ is a singular matrix. As the two equations geometrically represent parallel, non-coincident lines in the x-y plane, they have no solution and hence are inconsistent.

Solution of Homogeneous Linear Equations.

Consider the system of homogeneous equations $a_{11}x + a_{12}y + a_{13}z = 0$, $a_{21}x + a_{22}y + a_{23}z = 0$ and $a_{31}x + a_{32}y + a_{33}z = 0$. In matrix form it can be written as

$$\begin{bmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{bmatrix} \begin{bmatrix} x \\ y \\ z \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix}, \text{ or } AX = O.$$

Case-1: If $A$ is non-singular, i.e. $|A| \neq 0$, then $A^{-1}$ exists and is unique. Pre-multiplying both sides by $A^{-1}$, we get
$A^{-1}(AX) = A^{-1}O$
Or, $(A^{-1}A)X = O$
Or, $IX = O$
Or, $X = O$
$X = O$ means $x = y = z = 0$ and is called the trivial solution. Hence, if $|A| \neq 0$, the coefficient matrix $A$ is non-singular and the system has only the trivial solution.

Case-2: If $|A| = 0$, the coefficient matrix $A$ is singular and the system has non-trivial solutions, in fact infinitely many solutions.

Rank of a matrix.

The rank of a matrix $A$, denoted by $\rho(A)$, is the order of any highest-order non-vanishing minor of the matrix. Hence the rank of a matrix of order $m \times n$ can at most be equal to the smaller of the numbers $m$ and $n$, but it may be less.

A non-zero matrix $A$ is said to be of rank $r$ if
(a) $A$ has at least one non-zero minor of order $r$, and
(b) every minor of order $r + 1$ of $A$, if any, is zero.

In other words, the rank of a matrix is $\leq r$ if all $(r+1)$-rowed minors of the matrix vanish. The rank of a matrix is $\geq r$ if there is at least one $r$-rowed minor of the matrix which is not equal to zero.

Consider a null matrix $A = O = \begin{bmatrix} 0 & 0 & 0 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \end{bmatrix}$. Here $|A| = 0$, so $A$ is singular. Since the rank of every non-zero matrix is $\geq 1$, we agree to assign the rank zero to every null matrix, i.e. $\rho(A) = 0$. Hence the rank of a null matrix is always 0.

Consider the unit matrix of third order $A = I_3 = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}$. Here $|A| = 1$, so $A$ is non-singular. Hence $\rho(A) = 3$. Hence the rank of the unit matrix of order $n$ is always $n$.

Consider a third order matrix $A = \begin{bmatrix} 1 & 2 & 3 \\ 2 & 3 & 4 \\ 0 & 2 & 2 \end{bmatrix}$. Here $|A| = 2$, so $A$ is non-singular. As this is the highest-order non-vanishing minor, $\rho(A) = 3$.
Thus the rank of every non-singular matrix of order $n$ is $n$.

The rank of a square matrix $A$ of order $n$ is less than $n$ if and only if $A$ is singular, i.e. $|A| = 0$. E.g. consider $A = \begin{bmatrix} 1 & 2 & 3 \\ 3 & 4 & 5 \\ 4 & 5 & 6 \end{bmatrix}$. Here $|A| = 0$, so $A$ is singular. As this is a vanishing minor, $\rho(A) < 3$. Now consider the lower-order minor $\begin{vmatrix} 1 & 2 \\ 3 & 4 \end{vmatrix} = -2$, which is non-vanishing. Hence $\rho(A) = 2$.

Consider another matrix $A = \begin{bmatrix} 3 & 1 & 2 \\ 6 & 2 & 4 \\ 3 & 1 & 2 \end{bmatrix}$. Here $|A| = 0$, so $A$ is singular. As this is a vanishing minor, $\rho(A) < 3$. Considering all the second-order minors, we can see that they also vanish, hence $\rho(A) < 2$. But $A$ is not a null matrix, hence $\rho(A) = 1$.

Thus the rank of every singular matrix of order $n$ is less than $n$.

To find the rank of a higher-order matrix, the following three types of elementary row transformations are carried out:
(a) Interchange of any two distinct rows of the matrix.
(b) Multiplication of any row by a non-zero scalar.
(c) Addition, to the elements of any row, of a scalar multiple of the corresponding elements of another row.

The rank of a matrix is not altered by any of these elementary row transformations, because the order of the largest non-singular square sub-matrix is not affected by them. As the matrix obtained after row transformation (say $B$) has the same rank as the original matrix (say $A$), the two matrices are called equivalent matrices, denoted by $A \sim B$.

Augmented matrix.

Consider the system of equations $a_{11}x + a_{12}y = b_1$ and $a_{21}x + a_{22}y = b_2$. In matrix form it can be written as $AX = B$. Here the coefficient matrix is $A = \begin{bmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{bmatrix}$, the column matrix of unknowns is $X = \begin{bmatrix} x \\ y \end{bmatrix}$ and the column matrix of constants is $B = \begin{bmatrix} b_1 \\ b_2 \end{bmatrix}$.

When the column matrix of constants $B$ on the right-hand side of the equations is attached to the coefficient matrix $A$, the matrix thus formed is called the augmented matrix. It is given by $\begin{bmatrix} a_{11} & a_{12} & b_1 \\ a_{21} & a_{22} & b_2 \end{bmatrix}$ and is denoted by $[A:B]$.

Solution of Non-Homogeneous Linear Equations.
A system of non-homogeneous equations $AX = B$ of $m$ linear equations in $n$ unknowns has no solution, a unique solution or infinitely many solutions.

Case-1: If $\rho(A) \neq \rho([A:B])$, then the system has no solution.

Case-2: If $\rho(A) = \rho([A:B]) = n$, then the system has a unique solution (for a square system this means $A$ is non-singular). As the system has at least one solution, it is said to be consistent.

Case-3: If $\rho(A) = \rho([A:B]) = r < n$, then the system has infinitely many solutions. In this case the solution set involves $n - r$ parameters.

Solution of Homogeneous Linear Equations.

Let $AX = O$ be a system of $m$ homogeneous equations in $n$ unknowns. Since in this case the column matrix of constants is a zero matrix, the ranks of the coefficient matrix $A$ and the augmented matrix $[A:O]$ are the same. Therefore the system is always consistent. Indeed $x_1 = x_2 = \cdots = x_n = 0$ is always a solution, called the trivial solution.

If $m < n$, the system of equations has non-trivial solutions. For a square system ($m = n$) to have non-trivial solutions, the coefficient matrix $A$ must be a singular matrix.

Case-1: $\rho(A) = r = n$; the system has $X = O$ as its only solution.

Case-2: $\rho(A) = r < n$; here $n - r$ variables can be selected and assigned arbitrary values, and hence there are infinitely many solutions.

If $\rho(A) = r$ and $r \leq m < n$, then $n - r$ variables can be selected and assigned arbitrary values. When the number of equations ($m$) is less than the number of unknowns ($n$), the equations always have infinitely many non-trivial solutions.
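The rank test for consistency can be sketched by reducing a matrix with elementary row transformations and counting the non-zero rows that remain. This is a minimal sketch using exact fractions; the function names `rank` and `classify` and the returned strings are assumptions made for illustration, not notation from the notes.

```python
from fractions import Fraction
from typing import List, Sequence


def rank(rows: Sequence[Sequence[int]]) -> int:
    """Rank via row reduction using row interchange and row addition."""
    m: List[List[Fraction]] = [[Fraction(x) for x in row] for row in rows]
    r = 0  # number of pivot rows found so far
    n_cols = len(m[0]) if m else 0
    for c in range(n_cols):
        pivot = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pivot is None:
            continue  # no non-zero entry available in this column
        m[r], m[pivot] = m[pivot], m[r]  # interchange two rows
        for i in range(r + 1, len(m)):
            factor = m[i][c] / m[r][c]
            # subtract a scalar multiple of the pivot row from row i
            m[i] = [x - factor * y for x, y in zip(m[i], m[r])]
        r += 1
        if r == len(m):
            break
    return r


def classify(a: Sequence[Sequence[int]], b: Sequence[int]) -> str:
    """Compare rank(A) with rank([A:B]) for the system AX = B."""
    n = len(a[0])  # number of unknowns
    augmented = [list(row) + [bi] for row, bi in zip(a, b)]
    rank_a, rank_ab = rank(a), rank(augmented)
    if rank_a != rank_ab:
        return "no solution"
    return "unique solution" if rank_a == n else "infinitely many solutions"


if __name__ == "__main__":
    print(classify([[2, 1], [4, 2]], [3, 6]))   # 2x + y = 3, 4x + 2y = 6
    print(classify([[2, 1], [4, 2]], [3, 5]))   # 2x + y = 3, 4x + 2y = 5
    print(classify([[2, 3], [3, -1]], [1, 5]))  # 2x + 3y = 1, 3x - y = 5
```

The three calls reuse systems that appear earlier in these notes, so the printed classification can be compared with the geometric discussion of coincident, parallel and intersecting lines.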